
Hive count query on a Hudi table fails with java.lang.ClassNotFoundException: org.apache.hudi.hadoop.HoodieParquetInputFormat

Author: weixin_40725706 | 2024-05-20 10:57:13


Problem description:

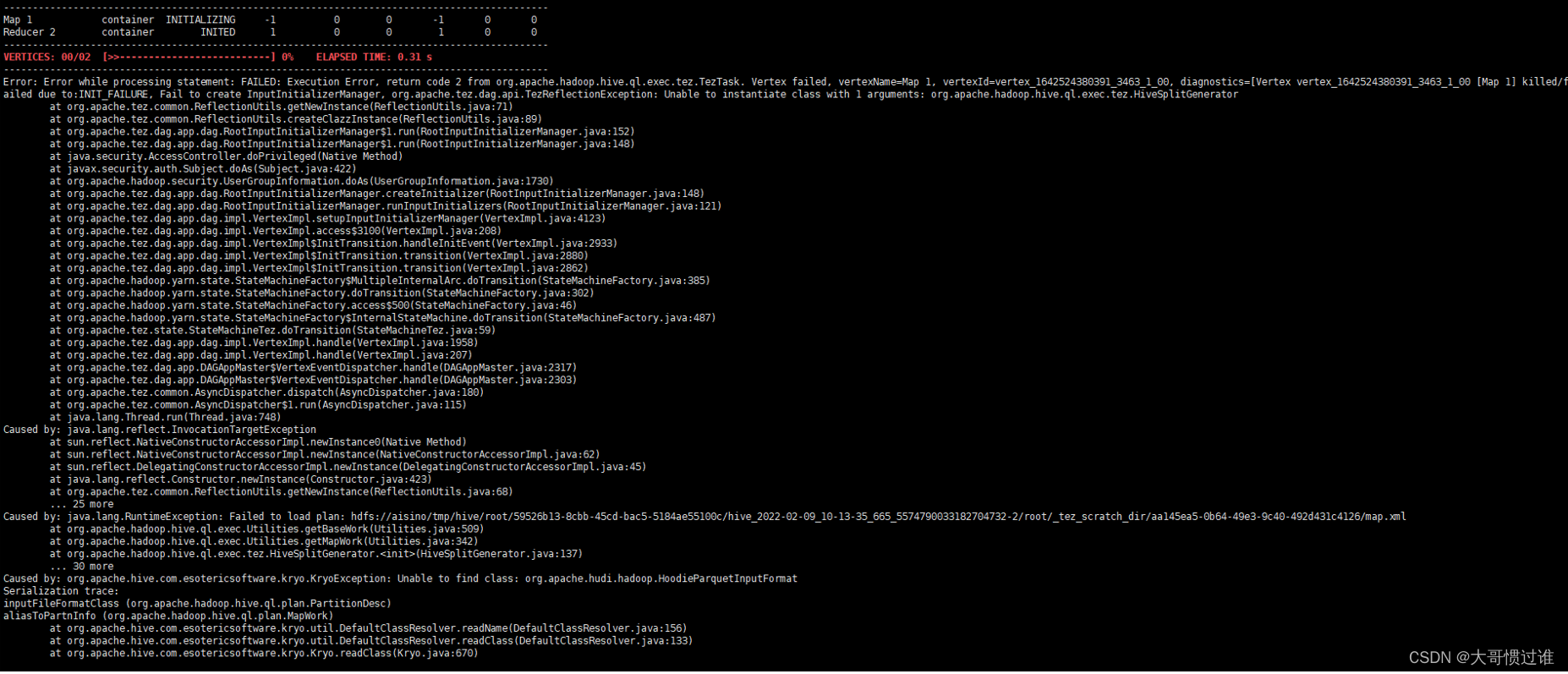

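Querying a Hudi table from Hive fails, even though the Hudi bundle hudi-hadoop-mr-bundle-0.9.0.jar has already been placed in Hive's lib directory and Hive has been restarted. The failing query and its error:

select count(1) from table;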

ERROR : Vertex failed, vertexName=Map 1, vertexId=vertex_1642524380391_3302_1_00, diagnostics=[Vertex vertex_1642524380391_3302_1_00 [Map 1] killed/failed due to:INIT_FAILURE, Fail to create InputInitializerManager, org.apache.tez.dag.api.TezReflectionException: Unable to instantiate class with 1 arguments: org.apache.hadoop.hive.ql.exec.tez.HiveSplitGenerator
    at org.apache.tez.common.ReflectionUtils.getNewInstance(ReflectionUtils.java:71)
    at org.apache.tez.common.ReflectionUtils.createClazzInstance(ReflectionUtils.java:89)
    at org.apache.tez.dag.app.dag.RootInputInitializerManager$1.run(RootInputInitializerManager.java:152)
    at org.apache.tez.dag.app.dag.RootInputInitializerManager$1.run(RootInputInitializerManager.java:148)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730)
    at org.apache.tez.dag.app.dag.RootInputInitializerManager.createInitializer(RootInputInitializerManager.java:148)
    at org.apache.tez.dag.app.dag.RootInputInitializerManager.runInputInitializers(RootInputInitializerManager.java:121)
    at org.apache.tez.dag.app.dag.impl.VertexImpl.setupInputInitializerManager(VertexImpl.java:4123)
    at org.apache.tez.dag.app.dag.impl.VertexImpl.access$3100(VertexImpl.java:208)
    at org.apache.tez.dag.app.dag.impl.VertexImpl$InitTransition.handleInitEvent(VertexImpl.java:2933)
    at org.apache.tez.dag.app.dag.impl.VertexImpl$InitTransition.transition(VertexImpl.java:2880)
    at org.apache.tez.dag.app.dag.impl.VertexImpl$InitTransition.transition(VertexImpl.java:2862)
    at org.apache.hadoop.yarn.state.StateMachineFactory$MultipleInternalArc.doTransition(StateMachineFactory.java:385)
    at org.apache.hadoop.yarn.state.StateMachineFactory.doTransition(StateMachineFactory.java:302)
    at org.apache.hadoop.yarn.state.StateMachineFactory.access$500(StateMachineFactory.java:46)
    at org.apache.hadoop.yarn.state.StateMachineFactory$InternalStateMachine.doTransition(StateMachineFactory.java:487)
    at org.apache.tez.state.StateMachineTez.doTransition(StateMachineTez.java:59)
    at org.apache.tez.dag.app.dag.impl.VertexImpl.handle(VertexImpl.java:1958)
    at org.apache.tez.dag.app.dag.impl.VertexImpl.handle(VertexImpl.java:207)
    at org.apache.tez.dag.app.DAGAppMaster$VertexEventDispatcher.handle(DAGAppMaster.java:2317)
    at org.apache.tez.dag.app.DAGAppMaster$VertexEventDispatcher.handle(DAGAppMaster.java:2303)
    at org.apache.tez.common.AsyncDispatcher.dispatch(AsyncDispatcher.java:180)
    at org.apache.tez.common.AsyncDispatcher$1.run(AsyncDispatcher.java:115)
    at java.lang.Thread.run(Thread.java:748)
Caused by: java.lang.reflect.InvocationTargetException
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
    at org.apache.tez.common.ReflectionUtils.getNewInstance(ReflectionUtils.java:68)
    ... 25 more
Caused by: java.lang.RuntimeException: Failed to load plan: hdfs://xxx/tmp/hive/root/8344ec71-67c8-4733-8dd1-41123f1e1729/hive_2022-02-08_16-54-15_006_1554035332667755211-922/root/_tez_scratch_dir/fcdb3e29-0d57-40cc-8bfe-21cfa9490cd6/map.xml
    at org.apache.hadoop.hive.ql.exec.Utilities.getBaseWork(Utilities.java:509)
    at org.apache.hadoop.hive.ql.exec.Utilities.getMapWork(Utilities.java:342)
    at org.apache.hadoop.hive.ql.exec.tez.HiveSplitGenerator.<init>(HiveSplitGenerator.java:137)
    ... 30 more
Caused by: org.apache.hive.com.esotericsoftware.kryo.KryoException: Unable to find class: org.apache.hudi.hadoop.HoodieParquetInputFormat
Serialization trace:
inputFileFormatClass (org.apache.hadoop.hive.ql.plan.PartitionDesc)
aliasToPartnInfo (org.apache.hadoop.hive.ql.plan.MapWork)
    at org.apache.hive.com.esotericsoftware.kryo.util.DefaultClassResolver.readName(DefaultClassResolver.java:156)
    at org.apache.hive.com.esotericsoftware.kryo.util.DefaultClassResolver.readClass(DefaultClassResolver.java:133)
    at org.apache.hive.com.esotericsoftware.kryo.Kryo.readClass(Kryo.java:670)
    at org.apache.hadoop.hive.ql.exec.SerializationUtilities$KryoWithHooks.readClass(SerializationUtilities.java:185)
    at org.apache.hive.com.esotericsoftware.kryo.serializers.DefaultSerializers$ClassSerializer.read(DefaultSerializers.java:326)
    at org.apache.hive.com.esotericsoftware.kryo.serializers.DefaultSerializers$ClassSerializer.read(DefaultSerializers.java:314)
    at org.apache.hive.com.esotericsoftware.kryo.Kryo.readObjectOrNull(Kryo.java:759)
    at org.apache.hadoop.hive.ql.exec.SerializationUtilities$KryoWithHooks.readObjectOrNull(SerializationUtilities.java:203)
    at org.apache.hive.com.esotericsoftware.kryo.serializers.ObjectField.read(ObjectField.java:132)
    at org.apache.hive.com.esotericsoftware.kryo.serializers.FieldSerializer.read(FieldSerializer.java:551)
    at org.apache.hive.com.esotericsoftware.kryo.Kryo.readClassAndObject(Kryo.java:790)
    at org.apache.hadoop.hive.ql.exec.SerializationUtilities$KryoWithHooks.readClassAndObject(SerializationUtilities.java:180)
    at org.apache.hive.com.esotericsoftware.kryo.serializers.MapSerializer.read(MapSerializer.java:161)
    at org.apache.hive.com.esotericsoftware.kryo.serializers.MapSerializer.read(MapSerializer.java:39)
    at org.apache.hive.com.esotericsoftware.kryo.Kryo.readObject(Kryo.java:708)
    at org.apache.hadoop.hive.ql.exec.SerializationUtilities$KryoWithHooks.readObject(SerializationUtilities.java:218)
    at org.apache.hive.com.esotericsoftware.kryo.serializers.ObjectField.read(ObjectField.java:125)
    at org.apache.hive.com.esotericsoftware.kryo.serializers.FieldSerializer.read(FieldSerializer.java:551)
    at org.apache.hive.com.esotericsoftware.kryo.Kryo.readObject(Kryo.java:686)
    at org.apache.hadoop.hive.ql.exec.SerializationUtilities$KryoWithHooks.readObject(SerializationUtilities.java:210)
    at org.apache.hadoop.hive.ql.exec.SerializationUtilities.deserializeObjectByKryo(SerializationUtilities.java:707)
    at org.apache.hadoop.hive.ql.exec.SerializationUtilities.deserializePlan(SerializationUtilities.java:613)
    at org.apache.hadoop.hive.ql.exec.SerializationUtilities.deserializePlan(SerializationUtilities.java:590)
    at org.apache.hadoop.hive.ql.exec.Utilities.getBaseWork(Utilities.java:470)
    ... 32 more
Caused by: java.lang.ClassNotFoundException: org.apache.hudi.hadoop.HoodieParquetInputFormat
    at java.net.URLClassLoader.findClass(URLClassLoader.java:382)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:418)
    at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:355)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:351)
    at java.lang.Class.forName0(Native Method)
    at java.lang.Class.forName(Class.java:348)
    at org.apache.hive.com.esotericsoftware.kryo.util.DefaultClassResolver.readName(DefaultClassResolver.java:154)
    ... 55 more ]
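The innermost `Caused by` names the class that could not be loaded, and the surrounding frames (`DAGAppMaster`, `HiveSplitGenerator`) show the lookup failing on the Tez side while the query plan is being deserialized. Before moving jars around, it can help to confirm that the bundle really contains that class. The following is a minimal Python sketch of such a check; the helper name `jar_contains_class` is hypothetical and not part of Hive or Hudi, and it relies only on the fact that a jar is a zip archive with one `.class` entry per class.

```python
import zipfile


def jar_contains_class(jar_path, class_name):
    """Return True if the jar has a .class entry for the fully qualified class name.

    Hypothetical helper for illustration: a jar is a zip archive, and a class
    such as a.b.C is stored under the entry name a/b/C.class.
    """
    entry = class_name.replace(".", "/") + ".class"
    with zipfile.ZipFile(jar_path) as jar:
        return entry in jar.namelist()


# Example call (the path is an assumption; adjust it to the local install):
# jar_contains_class(
#     "/opt/hive/lib/hudi-hadoop-mr-bundle-0.9.0.jar",
#     "org.apache.hudi.hadoop.HoodieParquetInputFormat",
# )
```

If the check returns True for the jar already sitting in lib, the jar itself is fine and the problem is more likely where the jar is loaded from, which is consistent with the fix described in this post.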


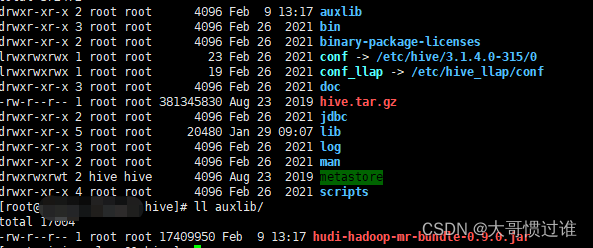

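Solution:

Create an auxlib directory alongside Hive's lib directory (as its sibling), place the jar in it, and then restart Hive.

The auxlib directory is required; placing the jar in lib alone was not enough.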
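The step above can be sketched in Python as follows. This is only an illustration under stated assumptions: the function name `stage_aux_jar` and the example paths are hypothetical, the Hive home directory is assumed to be the parent of lib, and restarting Hive is left as a manual step because it depends on how the cluster is managed.

```python
import shutil
from pathlib import Path


def stage_aux_jar(hive_home, jar_path):
    """Copy a jar into <hive_home>/auxlib, creating the directory if needed.

    Hypothetical helper: <hive_home>/auxlib is the sibling of <hive_home>/lib
    described in the text. Hive must still be restarted afterwards.
    """
    auxlib = Path(hive_home) / "auxlib"
    auxlib.mkdir(parents=True, exist_ok=True)
    target = auxlib / Path(jar_path).name
    shutil.copy2(jar_path, target)  # copy2 also preserves file timestamps
    return target


# Example call (both paths are assumptions; adjust them to the local install):
# stage_aux_jar("/opt/hive", "/opt/hive/lib/hudi-hadoop-mr-bundle-0.9.0.jar")
```

On a multi-node deployment the same copy would presumably be needed on every host that runs a Hive service, but the original post does not say which hosts were changed.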

select count(1) from document_ro;
INFO : Compiling command(queryId=hive_20220209132712_713df3f8-91ab-46a2-8e1a-17bda938a644): select count(1) from document_ro
INFO : Semantic Analysis Completed (retrial = false)
INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:_c0, type:bigint, comment:null)], properties:null)
INFO : Completed compiling command(queryId=hive_20220209132712_713df3f8-91ab-46a2-8e1a-17bda938a644); Time taken: 1.619 seconds
INFO : Executing command(queryId=hive_20220209132712_713df3f8-91ab-46a2-8e1a-17bda938a644): select count(1) from document_ro
INFO : Query ID = hive_20220209132712_713df3f8-91ab-46a2-8e1a-17bda938a644
INFO : Total jobs = 1
INFO : Launching Job 1 out of 1
INFO : Starting task [Stage-1:MAPRED] in serial mode
INFO : Subscribed to counters: [] for queryId: hive_20220209132712_713df3f8-91ab-46a2-8e1a-17bda938a644
INFO : Tez session hasn't been created yet. Opening session
INFO : Dag name: select count(1) from document_ro (Stage-1)
INFO : Status: Running (Executing on YARN cluster with App id application_1644382793459_0002)
----------------------------------------------------------------------------------------------
VERTICES          MODE        STATUS     TOTAL  COMPLETED  RUNNING  PENDING  FAILED  KILLED
----------------------------------------------------------------------------------------------
Map 1 ..........  container   SUCCEEDED      3          3        0        0       0       0
Reducer 2 ......  container   SUCCEEDED      1          1        0        0       0       0
----------------------------------------------------------------------------------------------
VERTICES: 02/02 [==========================>>] 100% ELAPSED TIME: 3.55 s
----------------------------------------------------------------------------------------------
INFO : Status: DAG finished successfully in 3.45 seconds
INFO :
INFO : Query Execution Summary
INFO : ----------------------------------------------------------------------------------------------
INFO : OPERATION                      DURATION
INFO : ----------------------------------------------------------------------------------------------
INFO : Compile Query                  1.62s
INFO : Prepare Plan                   4.15s
INFO : Get Query Coordinator (AM)     0.01s
INFO : Submit Plan                    0.23s
INFO : Start DAG                      0.89s
INFO : Run DAG                        3.45s
INFO : ----------------------------------------------------------------------------------------------
INFO :
INFO : Task Execution Summary
INFO : ----------------------------------------------------------------------------------------------
INFO : VERTICES    DURATION(ms)  CPU_TIME(ms)  GC_TIME(ms)  INPUT_RECORDS  OUTPUT_RECORDS
INFO : ----------------------------------------------------------------------------------------------
INFO : Map 1            1925.00        17,270          126         31,623               3
INFO : Reducer 2           1.00           600            0              3               0
INFO : ----------------------------------------------------------------------------------------------
INFO :
INFO : org.apache.tez.common.counters.DAGCounter:
INFO :    NUM_SUCCEEDED_TASKS: 4
INFO :    TOTAL_LAUNCHED_TASKS: 4
INFO :    DATA_LOCAL_TASKS: 1
INFO :    RACK_LOCAL_TASKS: 2
INFO :    AM_CPU_MILLISECONDS: 3110
INFO :    AM_GC_TIME_MILLIS: 0
INFO : File System Counters:
INFO :    FILE_BYTES_READ: 57
INFO :    FILE_BYTES_WRITTEN: 174
INFO :    HDFS_BYTES_READ: 48409293
INFO :    HDFS_BYTES_WRITTEN: 105
INFO :    HDFS_READ_OPS: 303
INFO :    HDFS_WRITE_OPS: 2
INFO :    HDFS_OP_CREATE: 1
INFO :    HDFS_OP_GET_FILE_STATUS: 153
INFO :    HDFS_OP_OPEN: 150
INFO :    HDFS_OP_RENAME: 1
INFO : org.apache.tez.common.counters.TaskCounter:
INFO :    SPILLED_RECORDS: 0
INFO :    NUM_SHUFFLED_INPUTS: 3
INFO :    NUM_FAILED_SHUFFLE_INPUTS: 0
INFO :    GC_TIME_MILLIS: 126
INFO :    TASK_DURATION_MILLIS: 5607
INFO :    CPU_MILLISECONDS: 17870
INFO :    PHYSICAL_MEMORY_BYTES: 4219469824
INFO :    VIRTUAL_MEMORY_BYTES: 36327419904
INFO :    COMMITTED_HEAP_BYTES: 4219469824
INFO :    INPUT_RECORDS_PROCESSED: 82
INFO :    INPUT_SPLIT_LENGTH_BYTES: 26237450
INFO :    OUTPUT_RECORDS: 3
INFO :    OUTPUT_LARGE_RECORDS: 0
INFO :    OUTPUT_BYTES: 12
INFO :    OUTPUT_BYTES_WITH_OVERHEAD: 36
INFO :    OUTPUT_BYTES_PHYSICAL: 150
INFO :    ADDITIONAL_SPILLS_BYTES_WRITTEN: 0
INFO :    ADDITIONAL_SPILLS_BYTES_READ: 0
INFO :    ADDITIONAL_SPILL_COUNT: 0
INFO :    SHUFFLE_BYTES: 78
INFO :    SHUFFLE_BYTES_DECOMPRESSED: 36
INFO :    SHUFFLE_BYTES_TO_MEM: 53
INFO :    SHUFFLE_BYTES_TO_DISK: 0
INFO :    SHUFFLE_BYTES_DISK_DIRECT: 25
INFO :    SHUFFLE_PHASE_TIME: 193
INFO :    FIRST_EVENT_RECEIVED: 91
INFO :    LAST_EVENT_RECEIVED: 190
INFO : HIVE:
INFO :    CREATED_FILES: 1
INFO :    DESERIALIZE_ERRORS: 0
INFO :    RECORDS_IN_Map_1: 31623
INFO :    RECORDS_OUT_0: 1
INFO :    RECORDS_OUT_INTERMEDIATE_Map_1: 3
INFO :    RECORDS_OUT_INTERMEDIATE_Reducer_2: 0
INFO :    RECORDS_OUT_OPERATOR_FS_11: 1
INFO :    RECORDS_OUT_OPERATOR_GBY_10: 1
INFO :    RECORDS_OUT_OPERATOR_GBY_8: 3
INFO :    RECORDS_OUT_OPERATOR_MAP_0: 0
INFO :    RECORDS_OUT_OPERATOR_RS_9: 3
INFO :    RECORDS_OUT_OPERATOR_SEL_7: 31623
INFO :    RECORDS_OUT_OPERATOR_TS_0: 31623
INFO : TaskCounter_Map_1_INPUT_document_ro:
INFO :    INPUT_RECORDS_PROCESSED: 79
INFO :    INPUT_SPLIT_LENGTH_BYTES: 26237450
INFO : TaskCounter_Map_1_OUTPUT_Reducer_2:
INFO :    ADDITIONAL_SPILLS_BYTES_READ: 0
INFO :    ADDITIONAL_SPILLS_BYTES_WRITTEN: 0
INFO :    ADDITIONAL_SPILL_COUNT: 0
INFO :    OUTPUT_BYTES: 12
INFO :    OUTPUT_BYTES_PHYSICAL: 150
INFO :    OUTPUT_BYTES_WITH_OVERHEAD: 36
INFO :    OUTPUT_LARGE_RECORDS: 0
INFO :    OUTPUT_RECORDS: 3
INFO :    SPILLED_RECORDS: 0
INFO : TaskCounter_Reducer_2_INPUT_Map_1:
INFO :    FIRST_EVENT_RECEIVED: 91
INFO :    INPUT_RECORDS_PROCESSED: 3
INFO :    LAST_EVENT_RECEIVED: 190
INFO :    NUM_FAILED_SHUFFLE_INPUTS: 0
INFO :    NUM_SHUFFLED_INPUTS: 3
INFO :    SHUFFLE_BYTES: 78
INFO :    SHUFFLE_BYTES_DECOMPRESSED: 36
INFO :    SHUFFLE_BYTES_DISK_DIRECT: 25
INFO :    SHUFFLE_BYTES_TO_DISK: 0
INFO :    SHUFFLE_BYTES_TO_MEM: 53
INFO :    SHUFFLE_PHASE_TIME: 193
INFO : TaskCounter_Reducer_2_OUTPUT_out_Reducer_2:
INFO :    OUTPUT_RECORDS: 0
INFO : org.apache.hadoop.hive.ql.exec.tez.HiveInputCounters:
INFO :    GROUPED_INPUT_SPLITS_Map_1: 3
INFO :    INPUT_DIRECTORIES_Map_1: 11
INFO :    INPUT_FILES_Map_1: 50
INFO :    RAW_INPUT_SPLITS_Map_1: 50
INFO : Completed executing command(queryId=hive_20220209132712_713df3f8-91ab-46a2-8e1a-17bda938a644); Time taken: 8.802 seconds
INFO : OK
+--------+
|  _c0   |
+--------+
| 31623  |
+--------+
1 row selected (10.553 seconds)


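Notice: This article was contributed by a community user and does not represent the position of 【wpsshop博客】. Copyright belongs to the original author, and this site assumes no legal responsibility for it. If you find infringing content, please contact us. When reposting, please cite the source: https://www.wpsshop.cn/w/weixin_40725706/article/detail/597395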
