The PyTorch deep learning framework: this one article is all you need


Parts of this article draw on the PyTorch Chinese tutorial.

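1. Installation: managing environments for different PyTorch versions

Different projects often need different versions; for example, one project may need PyTorch 0.4 and another 1.0. The conda tool bundled with Anaconda solves this. Think of it as creating two rooms, one holding version 0.4 and the other holding version 1.0: you enter whichever room has the version you need and work there.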

- Commands to check the GPU driver version on your machine

- Press Win+R and run cmd to open a command prompt

- cd C:\Program Files\NVIDIA Corporation\NVSMI

- nvidia-smi

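1. Create a virtual environment

How to resolve errors when creating a virtual environment:

The GPU build of PyTorch was installed but the CPU build is reported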

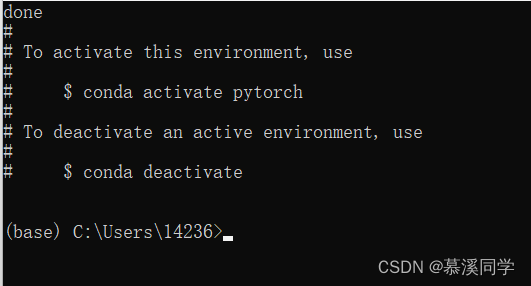

conda create -n pytorch python=3.6 # create the virtual environment

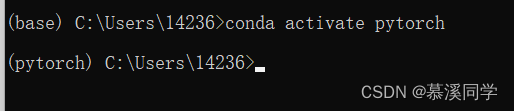

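Once it is created, run conda activate pytorch to activate the pytorch environment.

The prompt shows the environment switching from base to pytorch.

Use pip list to see the packages installed in the pytorch environment.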

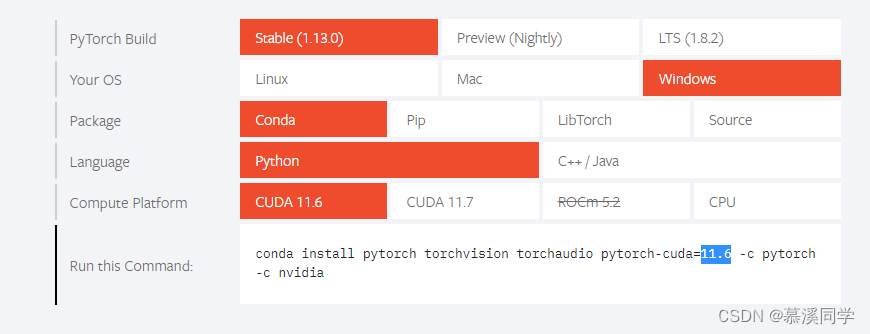

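2. Choose a PyTorch build

To run code on your own machine with an NVIDIA GPU, choose a CUDA build. If you will not run on your own machine (for example, you run on a server) or you have no discrete GPU, choose the CPU build. My CUDA version is 11.6, so I choose a build whose CUDA version is no higher than that.

Install with the auto-generated command: conda install pytorch torchvision torchaudio pytorch-cuda=11.6 -c pytorch -c nvidia

Command for the CUDA 9.2 build: conda install pytorch torchvision cudatoolkit=9.2 -c defaults -c numba/label/dev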

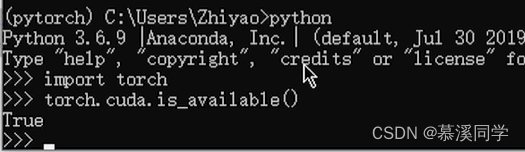

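After installation, start Python and run the commands below to test whether torch installed successfully:

To uninstall PyTorch, use: conda uninstall pytorch

Code to check the PyTorch version and test the installation: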

- import torch

- print(torch.__version__)

- print(torch.cuda.is_available())

2. Install Jupyter in the pytorch environment

Inside the pytorch virtual environment, run: conda install nb_conda

3. Two functions essential for learning PyTorch

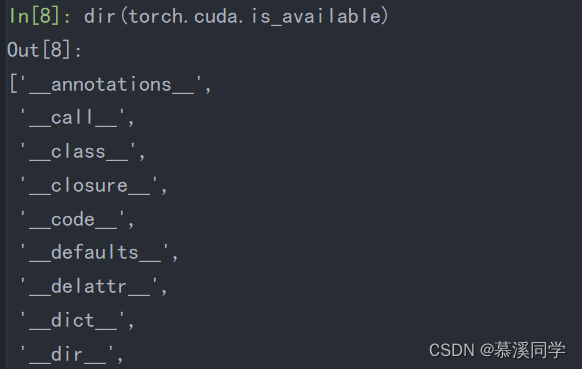

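dir(): open and look inside. Use dir() to see which tools a toolbox (package) contains.

dir(torch.cuda.is_available): lists what is inside the is_available tool, which lives in the cuda toolbox of the torch toolbox.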

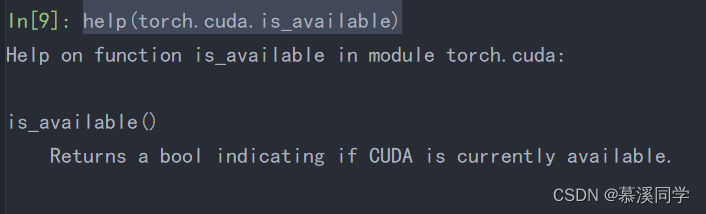

help(): the manual. It shows how a tool is used.

help(torch.cuda.is_available) shows how to use is_available from the cuda toolbox inside torch.

The output shows that is_available returns a bool.
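The same two helpers work on any Python module. As a minimal sketch that runs without PyTorch installed, the example below applies them to the standard-library `math` module (chosen here only for illustration):

```python
import math

# dir() lists the names defined inside a module ("what is in the toolbox").
public_names = [name for name in dir(math) if not name.startswith("_")]
print(public_names[:5])

# help(math.sqrt) prints the manual page interactively; the same text
# is stored on the function's __doc__ attribute.
doc = math.sqrt.__doc__
print(doc)
```

Replacing `math` with `torch` and `math.sqrt` with `torch.cuda.is_available` gives the calls described above.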

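4. How PyTorch loads data

Two classes need to be understood: Dataset and DataLoader

Dataset: provides a way to fetch each sample together with its label, and reports how many samples there are.

DataLoader: packs samples from a Dataset into the form the network needs later on.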

- from torch.utils.data import Dataset

- from PIL import Image

- import os

-

-

- # PIL provides the image-handling functions used below

- class MyData(Dataset):

- def __init__(self, root_dir, label_dir):

- self.root_dir = root_dir # store root_dir on the instance so the other methods can use it

- self.label_dir = label_dir

- self.path = os.path.join(self.root_dir, self.label_dir)

- self.img_path = os.listdir(self.path) # list of all image file names under the path

-

- def __getitem__(self, item): # fetch one image and its label by index

- img_name = self.img_path[item]

- img_item_path = os.path.join(self.root_dir, self.label_dir, img_name)

- img = Image.open(img_item_path)

- label = self.label_dir

- return img, label

-

- def __len__(self): # number of images

- return len(self.img_path)

-

-

- root_dir = 'dataset/train'

- ants_label_dir = 'ants'

- bees_label_dir = 'bees'

- ants_dataset = MyData(root_dir, ants_label_dir)

- bees_dataset = MyData(root_dir, bees_label_dir)

- train_dataset = ants_dataset + bees_dataset

- print(len(train_dataset))

- img, label = train_dataset[121]

- img.show()

-

- import os

- # Goal: write each image's label into a txt file with the same base name

- root_dir = 'dataset/train'

- target_dir = 'ants_img'

- img_path = os.listdir(os.path.join(root_dir, target_dir))

- label = target_dir.split('_')[0]

- out_dir = 'ants_label'

- for i in img_path:

- file_name = i.split('.jpg')[0]

- with open(os.path.join(root_dir, out_dir, '{}.txt'.format(file_name)), 'w') as f:

- f.write(label)

-

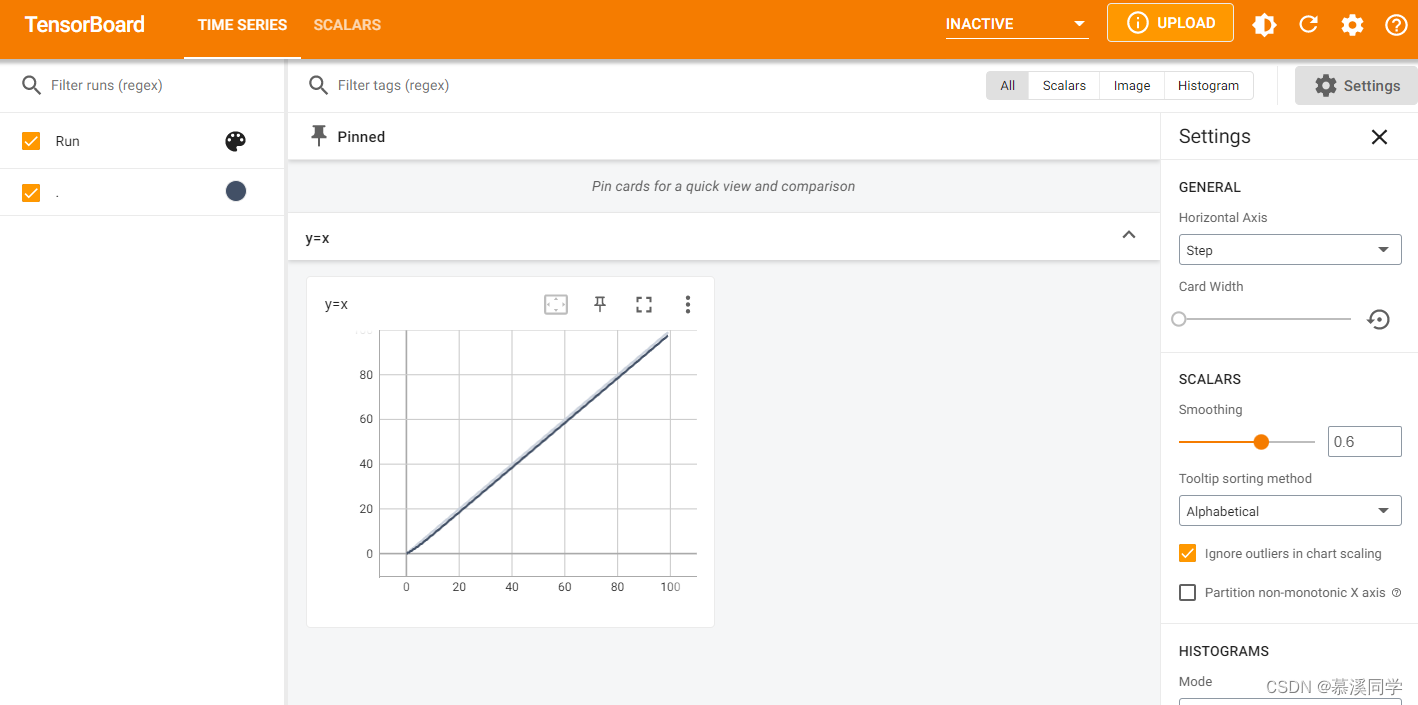
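To make the Dataset contract concrete without needing image files, here is a minimal pure-Python sketch. `ListDataset` and `concat` are hypothetical stand-ins written for this illustration, not part of torch; they only mimic `__getitem__`, `__len__`, and the `ants_dataset + bees_dataset` concatenation used above.

```python
class ListDataset:
    """Toy stand-in for a Dataset: holds samples that share one label."""

    def __init__(self, samples, label):
        self.samples = list(samples)
        self.label = label

    def __getitem__(self, index):
        # Return one sample together with its label.
        return self.samples[index], self.label

    def __len__(self):
        return len(self.samples)


def concat(first, second):
    """Index across two datasets in order, like `ants_dataset + bees_dataset`."""
    items = [first[i] for i in range(len(first))]
    items += [second[i] for i in range(len(second))]
    return items


ants = ListDataset(["ant_0.jpg", "ant_1.jpg"], "ants")
bees = ListDataset(["bee_0.jpg"], "bees")
train = concat(ants, bees)
print(len(train))   # 3
print(train[2])     # ('bee_0.jpg', 'bees')
```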

5. Using TensorBoard

TensorBoard's image logging needs tensor (or NumPy array) data in order to display it.

Using add_scalar()

Create a SummaryWriter from torch.utils.tensorboard and call its add_scalar method:

- def add_scalar(self, tag, scalar_value, global_step=None, walltime=None):

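- tag: label for the data, similar to the title of the chart

- scalar_value: the corresponding y-axis value

- global_step: the training step (x-axis value)

If TensorBoard raises ImportError: TensorBoard logging requires TensorBoard with Python summary writer installed. This should be available in 1.14 or above., then TensorBoard needs to be installed (for example with pip install tensorboard).

Code: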

- from torch.utils.tensorboard import SummaryWriter

-

- writer = SummaryWriter('logs') # save the data in the given folder

- # writer.add_image()

- # y=x

- for i in range(100):

- writer.add_scalar('y=x', i, i)

- writer.close()

-

When the code finishes, a logs folder is created automatically and contains an event file. To open it, run tensorboard --logdir=logs in a terminal; this serves a page that displays the data logged by the code above.

Using add_image():

- def add_image(self, tag, img_tensor, global_step=None, walltime=None, dataformats='CHW'):

- Args:

- tag (string): Data identifier

- img_tensor (torch.Tensor, numpy.array, or string/blobname): Image data (must be one of these types)

- global_step (int): Global step value to record

- Shape:

- img_tensor: Default is :math:`(3, H, W)`. You can use ``torchvision.utils.make_grid()`` to

- convert a batch of tensor into 3xHxW format or call ``add_images`` and let us do the job.

- Tensor with :math:`(1, H, W)`, :math:`(H, W)`, :math:`(H, W, 3)` is also suitable as long as

- corresponding ``dataformats`` argument is passed, e.g. ``CHW``, ``HWC``, ``HW``.

-

-

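Opening an image with PIL yields the type shown below. A PIL image is not one of the types add_image accepts; one option is to read the image with OpenCV, which returns NumPy image data.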

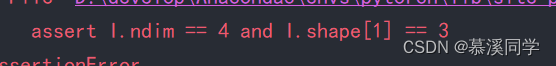

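Even after conversion, add_image() still raises an error: by default it expects the layout (3, H, W), but the NumPy array (print(img_array.shape)) has the layout (512, 768, 3). To use it, pass the dataformats argument to declare the actual layout.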

- from torch.utils.tensorboard import SummaryWriter

- import numpy as np

- from PIL import Image

-

- writer = SummaryWriter('logs') # save the data in the given folder

- image_path = 'test_dataset/train/ants_image/0013035.jpg'

- img_PIL = Image.open(image_path)

- img_array = np.array(img_PIL)

- print(img_array.shape)

- writer.add_image('test_img', img_array, 1, dataformats='HWC')

- # y=2x

- for i in range(100):

- writer.add_scalar('y=2x', 2 * i, i)

- writer.close()

-

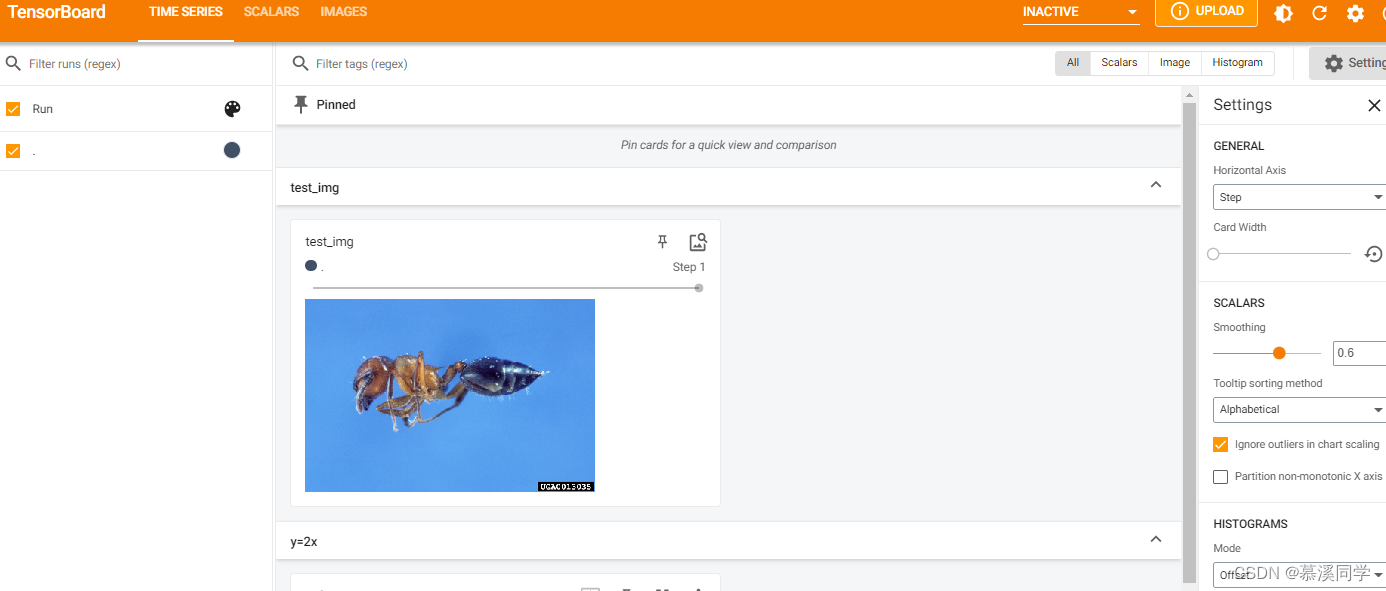

Result: an image appears under the test data in TensorBoard.

Read a new image and set the step in add_image to 2; the result is as follows:

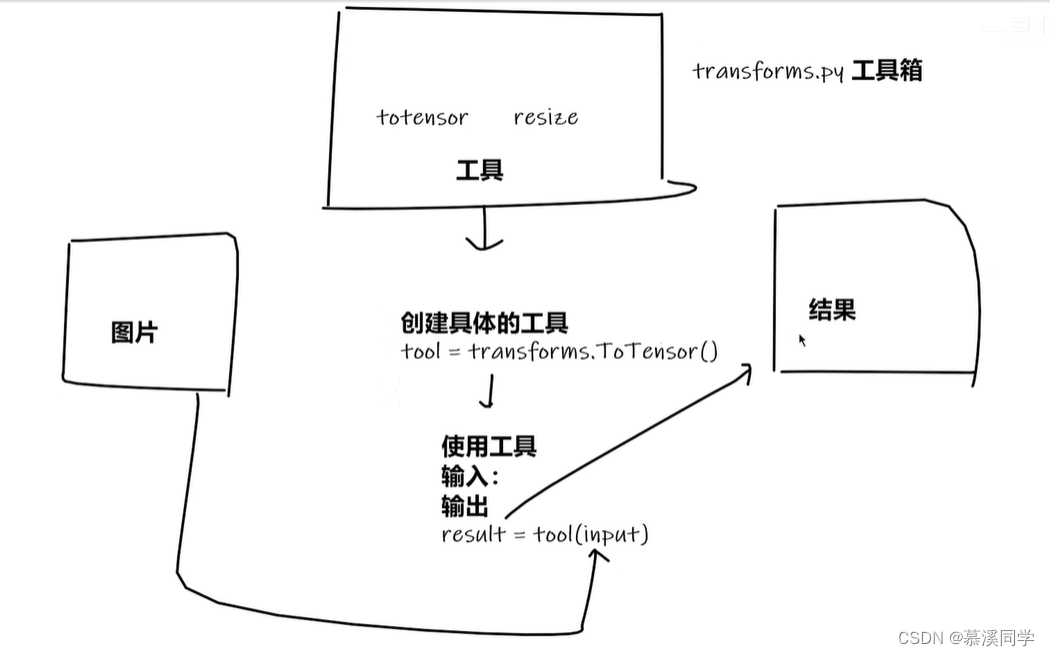

6. Using transforms

ToTensor

transforms is a Python file in torchvision. Think of it as a toolbox holding many tools; for example, the ToTensor tool converts an image to the tensor type.

- from PIL import Image

- from torchvision import transforms

-

- # Usage in Python -> the tensor data type

- # transforms.ToTensor answers two questions:

- # 1. How are transforms used?

- # 2. Why is the tensor data type needed?

-

- img_path = 'test_dataset/train/ants_image/9715481_b3cb4114ff.jpg'

- img = Image.open(img_path)

- tensor_train = transforms.ToTensor() # create an instance; ToTensor is a class, so img cannot be passed to it directly

- tensor_img = tensor_train(img)

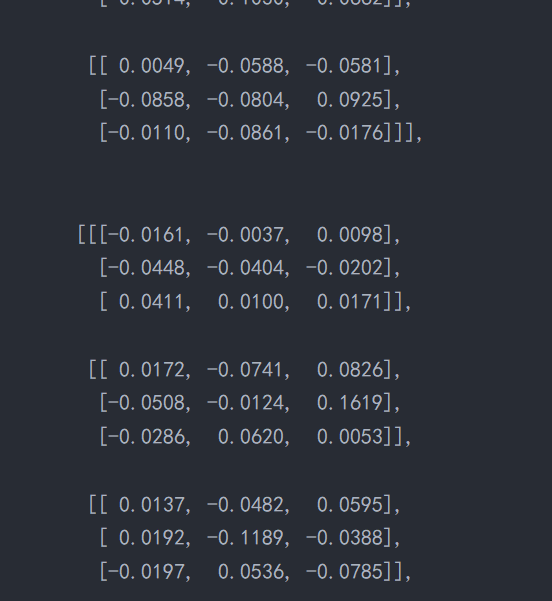

- print(tensor_img)

-

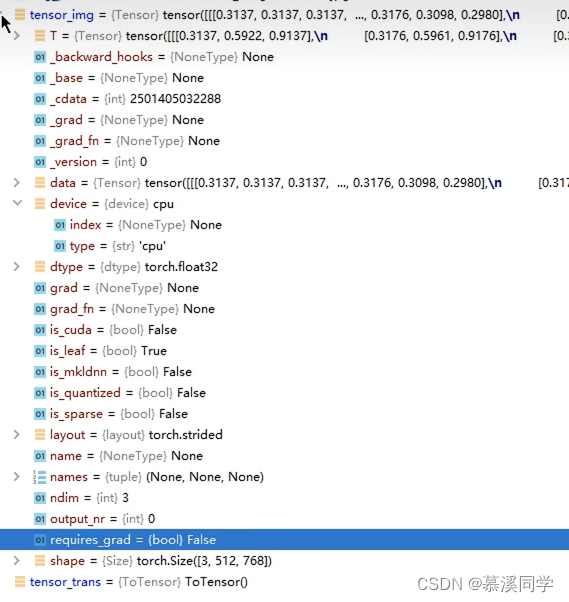

Result:

Once converted to a tensor, the data can be passed to add_image directly

- from PIL import Image

- from torchvision import transforms

- import cv2

- from torch.utils.tensorboard import SummaryWriter

-

- # Usage in Python -> the tensor data type

- # transforms.ToTensor answers two questions:

- # 1. How are transforms used?

- # 2. Why is the tensor data type needed?

-

- img_path = 'test_dataset/train/ants_image/9715481_b3cb4114ff.jpg'

- img = Image.open(img_path)

- tensor_train = transforms.ToTensor() # create an instance; ToTensor is a class, so img cannot be passed to it directly

- tensor_img = tensor_train(img)

- cv_img = cv2.imread('test_dataset/train/ants_image/69639610_95e0de17aa.jpg') # OpenCV returns NumPy data

- writer = SummaryWriter('logs')

- writer.add_image('tensor_img', tensor_img)

- writer.close()

Why use the Tensor type? Because a Tensor carries the attributes that neural-network training relies on, such as backward hooks, the gradient (grad), and the data itself (data).

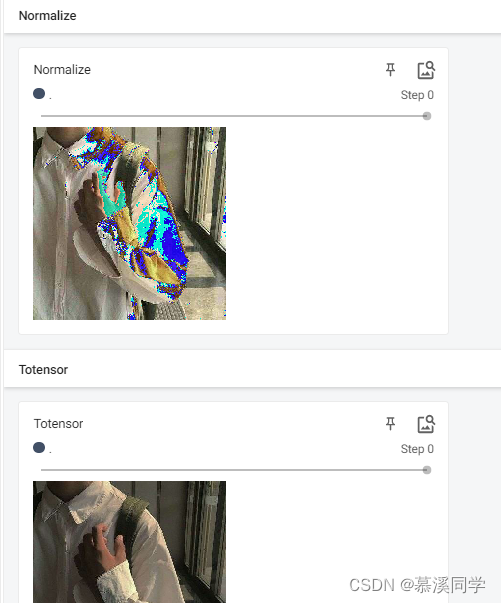

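Normalize

Comparison of the original image and the normalized image

Normalization formula: output[channel] = (input[channel] - mean[channel]) / std[channel], that is, (input value - mean) / standard deviation

Code: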

- from PIL import Image

- from torchvision import transforms

- from torch.utils.tensorboard import SummaryWriter

-

- writer = SummaryWriter('logs')

- img = Image.open("image/头像二.jpg")

- print(img)

- # 1. Using ToTensor

- train_totensor = transforms.ToTensor()

- img_tensor = train_totensor(img)

- writer.add_image('Totensor', img_tensor)

-

- # Normalize

- print(img_tensor[0][0][0])

- trans_norm = transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5]) # the two lists hold the per-channel mean and standard deviation; the image has 3 channels, so each list has three values

- img_norm = trans_norm(img_tensor)

- print(img_norm[0][0][0])

- writer.add_image('Normalize',img_norm)

- writer.close()

-

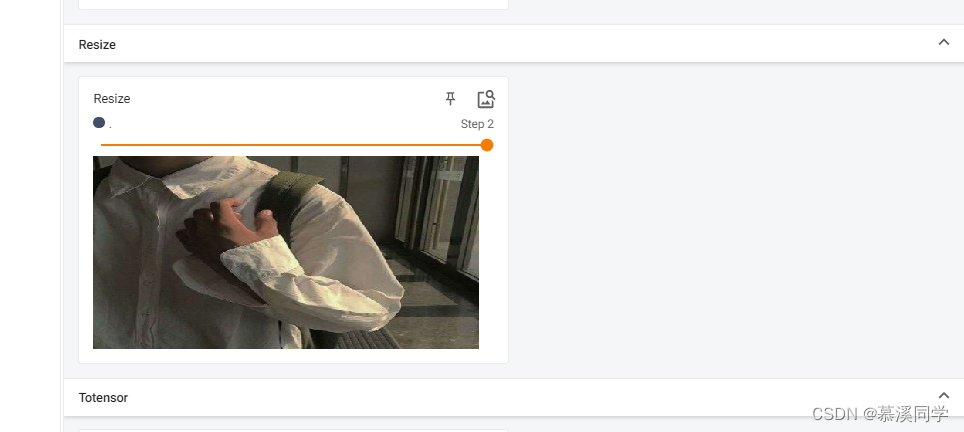
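The normalization formula can be checked by hand. The sketch below is plain Python rather than torchvision code; `normalize` is a hypothetical helper that applies the formula to a single value. It shows why a mean of 0.5 and a standard deviation of 0.5 map ToTensor's [0, 1] range onto [-1, 1].

```python
def normalize(value, mean, std):
    """Apply output = (input - mean) / std to a single channel value."""
    return (value - mean) / std


# With mean=0.5 and std=0.5, the range [0, 1] maps onto [-1, 1].
for pixel in (0.0, 0.5, 1.0):
    print(pixel, "->", normalize(pixel, 0.5, 0.5))
```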

Resize

Changes the size of an image; the argument is the target size

Code:

- # Resize changes the image size

- print(img.size)

- trains_resize = transforms.Resize((500, 1000))

- # img PIL->resize->img_resize PIL

- img_resize1 = trains_resize(img)

- print(img_resize1)

- # img_resize PIL ->ToTensor->img_resize tensor

- img_resize2 = train_totensor(img_resize1)

- writer.add_image('Resize', img_resize2, 2)

- print(img_resize2)

- writer.close()

Using Compose

Compose() takes a list, and every element of that list must be a transform object, which gives Compose([transform1, transform2])

Code:

- # Compose-resize -2

- trans_resize_2 = transforms.Resize(512)

- trans_compose = transforms.Compose([trans_resize_2, train_totensor]) # takes a Resize object and a ToTensor object; note that their order must not be swapped

- # Compose applies several transforms to the same image

- img_resize_2 = trans_compose(img)

- writer.add_image('Resize', img_resize_2, 1)

- writer.close()

What __call__ does in the Compose class, by example:

- class Person:

- def __call__(self, name):

- print('__call__' + 'hello' + name)

-

- def hello(self, name):

- print('hello' + name)

-

-

- person = Person()

- person("li")

- person.hello('you')

-

- # __call__ lets an instance be invoked like a function, without naming a method after a dot
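Putting the two ideas together, the following is a minimal sketch of how a Compose-like class can chain transforms through `__call__`. It is an illustration written for this article, not torchvision's source; `add_one` and `double` are hypothetical stand-ins for real transforms.

```python
class Compose:
    """Minimal sketch: apply a list of callables in order."""

    def __init__(self, transforms):
        self.transforms = transforms

    def __call__(self, value):
        # Feed the output of each transform into the next one.
        for transform in self.transforms:
            value = transform(value)
        return value


def add_one(x):
    return x + 1


def double(x):
    return x * 2


first = Compose([add_one, double])(3)    # (3 + 1) * 2
second = Compose([double, add_one])(3)   # 3 * 2 + 1
print(first, second)
```

The two results differ, which is the same reason the order of Resize and ToTensor matters in the example above.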

RandomCrop

Crops an image at a random position

Code:

- # RandomCrop: random cropping

- trans_random = transforms.RandomCrop(128)

- trans_compose_2 = transforms.Compose([trans_random, train_totensor])

- for i in range(10):

- img_crop = trans_compose_2(img)

- writer.add_image('RandomCrop', img_crop, i)

If you get the error raise ValueError("empty range for randrange() (%d,%d, %d)" % (istart, istop, width)), the crop size is larger than the image and needs to be reduced.

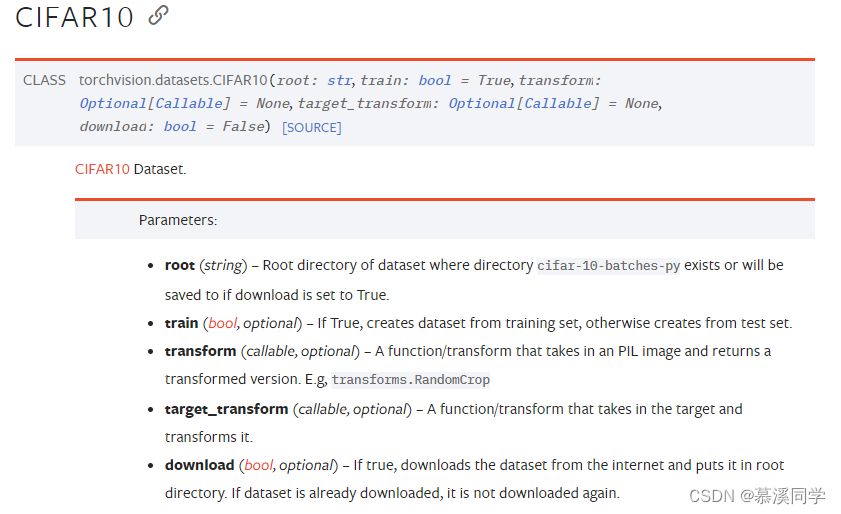

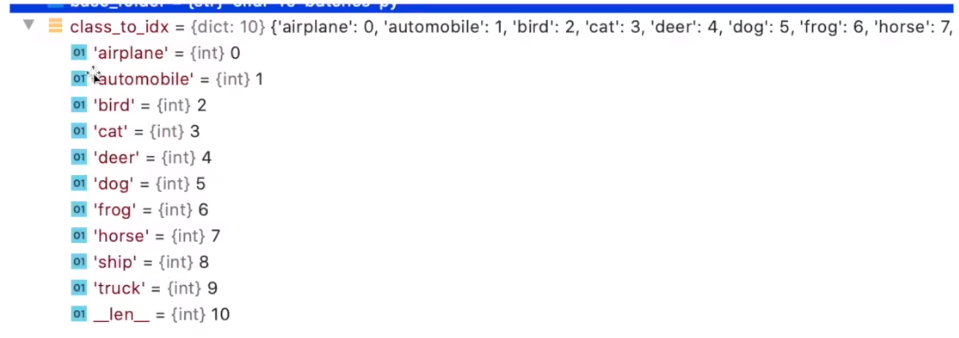
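The reason for that error becomes clear once the crop position is computed by hand. Below is a rough sketch with a hypothetical helper `random_crop_box` (not the torchvision implementation): the top-left corner is drawn from a range that becomes empty when the crop is larger than the image.

```python
import random


def random_crop_box(width, height, size, rng=random):
    """Pick a size x size crop box (left, top, right, bottom) inside the image."""
    if size > width or size > height:
        raise ValueError(f"crop size {size} exceeds image size {width}x{height}")
    left = rng.randint(0, width - size)   # randint is inclusive on both ends
    top = rng.randint(0, height - size)
    return left, top, left + size, top + size


box = random_crop_box(768, 512, 128)
print(box)
```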

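7. Datasets

torchvision ships many datasets. CIFAR10 is used here as an example to look at the relevant parameters:

- root: the directory where the dataset is stored; this parameter is required

- train: if True, creates the training set; if False, creates the test set

- transform: the operations to apply to each image go here

- download: when True, the dataset is downloaded from the internet automatically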

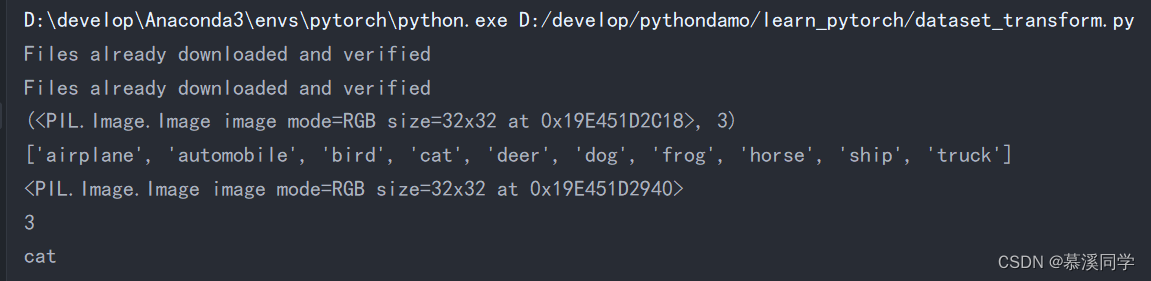

Code:

- import torchvision

-

- train_set = torchvision.datasets.CIFAR10(root='./CIFAR_dataset', train=True, download=True)

- test_set = torchvision.datasets.CIFAR10(root='./CIFAR_dataset', train=False, download=True)

-

- print(test_set[0]) # look at the first sample

- print(test_set.classes) # list the class names

- img, target = test_set[0] # target=3

- print(img)

- print(target)

- print(test_set.classes[target]) # name of the class with index 3

- img.show()

-

Result:

Converting every image with ToTensor:

- import torchvision

- from torch.utils.tensorboard import SummaryWriter

-

- dataset_transform = torchvision.transforms.Compose([

- torchvision.transforms.ToTensor()

- ]) # Compose wraps the transforms applied to every image, here just ToTensor

-

- train_set = torchvision.datasets.CIFAR10(root='./CIFAR_dataset', train=True, transform=dataset_transform, download=True)

- test_set = torchvision.datasets.CIFAR10(root='./CIFAR_dataset', train=False, transform=dataset_transform, download=True)

-

- writer = SummaryWriter('CIFAR')

- for i in range(100):

- img, target = test_set[i]

- writer.add_image('test_set', img, i)

- writer.close()

-

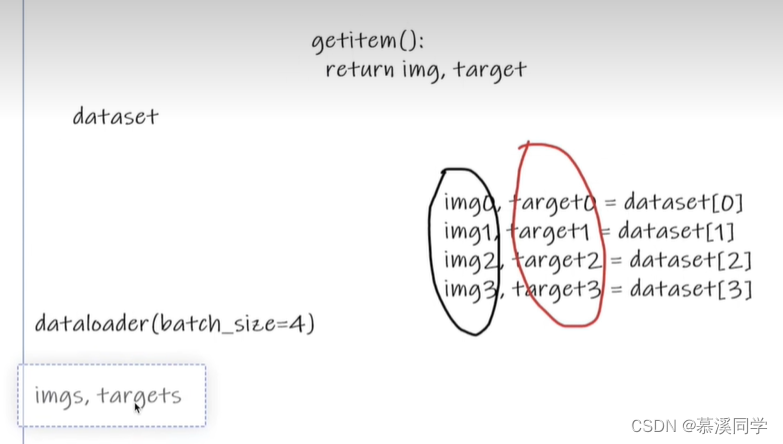

8.DataLoader

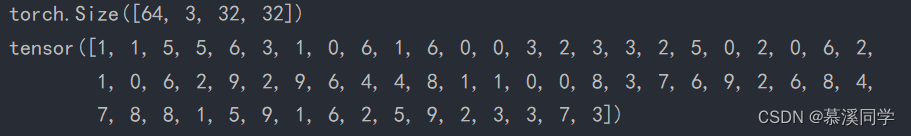

Runtime error: size of input tensor and input format are different. tensor shape: (64, 3, 32, 32), input_format: CHW. This means the wrong TensorBoard method was used: autocomplete suggested add_image, but a batch of images needs add_images.

Code:

- import torchvision

- from torch.utils.data import DataLoader

-

- # Prepare the test dataset

- from torch.utils.tensorboard import SummaryWriter

-

- test_data = torchvision.datasets.CIFAR10(root="./CIFAR_dataset", train=False,

- transform=torchvision.transforms.ToTensor())

-

- test_loader = DataLoader(dataset=test_data, batch_size=64, shuffle=True,

- num_workers=0, drop_last=True)

-

- # First image in the test dataset

- img, target = test_data[0]

- print(img.shape)

- print(target)

- writer = SummaryWriter('dataloader')

- step = 0

- for data in test_loader:

- images, targets = data

- writer.add_images("test_data_drop_last", images, step)

- step += 1

- writer.close()

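- DataLoader parameters:

- dataset: the dataset to load

- batch_size: how many samples each batch loads

- shuffle: when True, the data is reshuffled at every epoch

- num_workers: number of subprocesses used for data loading; 0 means the data is loaded in the main process

- drop_last=True: discards the final batch if it has fewer than batch_size samples; False keeps every sample

Setting batch_size to 4 means the DataLoader takes 4 samples from the dataset, packs img0 to img3 together, and packs target0 to target3 together as well.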
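The effect of drop_last is easy to verify with arithmetic. This short sketch uses a hypothetical helper `num_batches` and assumes the CIFAR10 test split of 10000 images used above.

```python
import math


def num_batches(dataset_size, batch_size, drop_last):
    """Number of batches one pass over the dataset yields."""
    if drop_last:
        return dataset_size // batch_size
    return math.ceil(dataset_size / batch_size)


full = num_batches(10000, 64, drop_last=True)
kept = num_batches(10000, 64, drop_last=False)
leftover = 10000 % 64
print(full, kept, leftover)
```

With batch_size=64 there are 156 full batches and one final batch of 16 images, which drop_last=True discards.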

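9. Basic skeleton of a neural network

In PyTorch, the neural-network building blocks live in torch.nn, inside the torch package.

Module: the base class for all neural network modules; your own models should also subclass it.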

- import torch.nn as nn

- import torch.nn.functional as F

-

- class Model(nn.Module):

- def __init__(self):

- super().__init__()

- self.conv1 = nn.Conv2d(1, 20, 5)

- self.conv2 = nn.Conv2d(20, 20, 5)

-

- def forward(self, x):

- x = F.relu(self.conv1(x))

- return F.relu(self.conv2(x))

- # forward runs four steps: convolution -> nonlinearity -> convolution -> nonlinearity

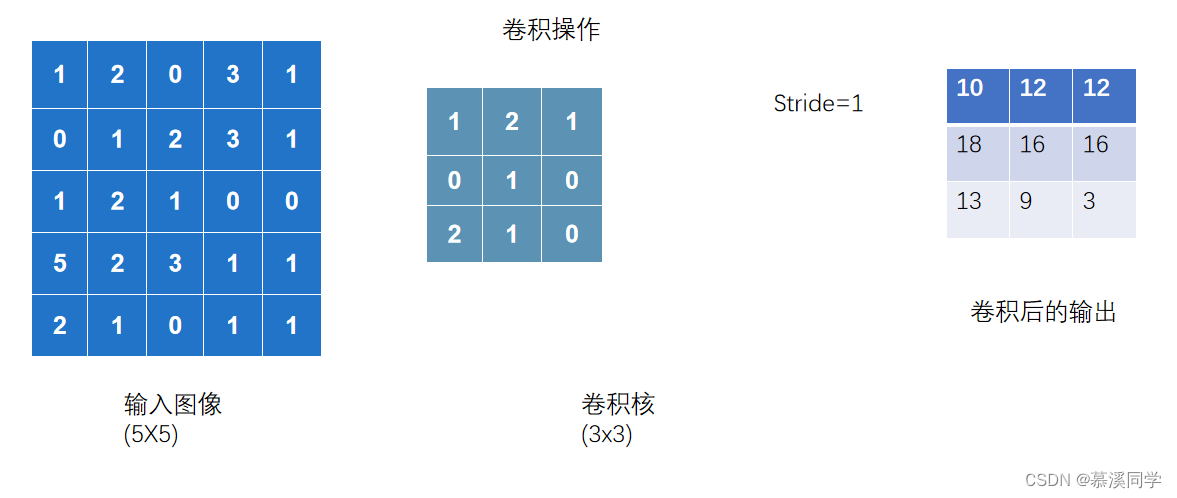

10. Convolution

Using torch.nn.functional

Code:

- import torch

- import torch.nn.functional as F

-

- x = torch.tensor([[1, 2, 0, 3, 1],

- [0, 1, 2, 3, 1],

- [1, 2, 1, 0, 0],

- [5, 2, 3, 1, 1],

- [2, 1, 0, 1, 1]])

-

- kernel = torch.tensor([[1, 2, 1],

- [0, 1, 0],

- [2, 1, 0]])

-

- x = torch.reshape(x, (1, 1, 5, 5))

- kernel = torch.reshape(kernel, (1, 1, 3, 3))

- print(x.shape)

- print(kernel.shape)

-

- output = F.conv2d(x, kernel, stride=1)

- print(output)

-

- output2 = F.conv2d(x, kernel, stride=2) # move two positions per step

- print(output2)

-

- output3 = F.conv2d(x, kernel, stride=1, padding=1)

- print(output3)

-

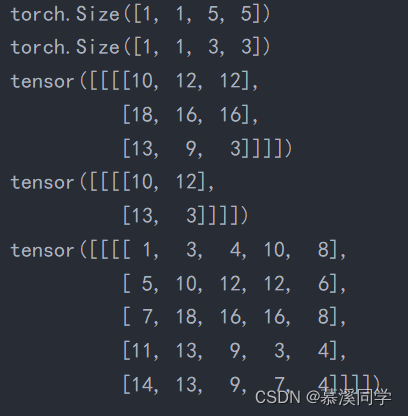

Result:

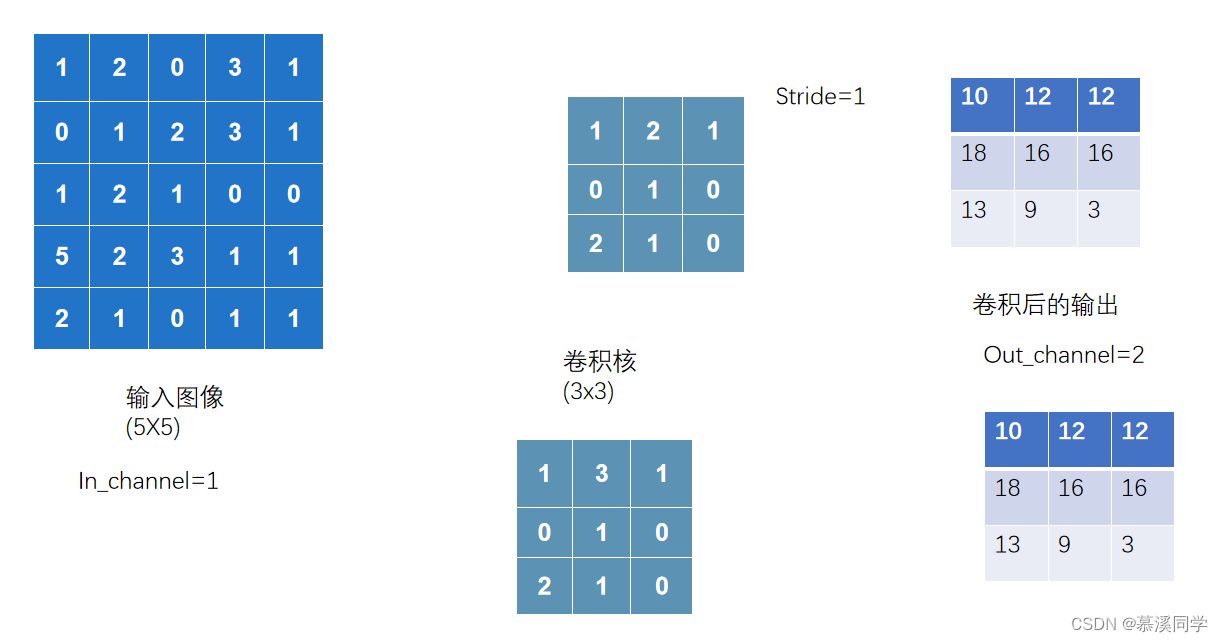
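To see where the numbers in the output come from, the same sliding-window computation can be written in plain Python. This is a sketch for square inputs without padding; `conv2d` here is a hypothetical helper, not `F.conv2d` itself.

```python
def conv2d(image, kernel, stride=1):
    """Sliding-window multiply-and-sum on square nested lists, no padding."""
    k = len(kernel)
    out_size = (len(image) - k) // stride + 1
    output = []
    for row in range(out_size):
        out_row = []
        for col in range(out_size):
            total = 0
            for i in range(k):
                for j in range(k):
                    total += image[row * stride + i][col * stride + j] * kernel[i][j]
            out_row.append(total)
        output.append(out_row)
    return output


x = [[1, 2, 0, 3, 1],
     [0, 1, 2, 3, 1],
     [1, 2, 1, 0, 0],
     [5, 2, 3, 1, 1],
     [2, 1, 0, 1, 1]]
kernel = [[1, 2, 1],
          [0, 1, 0],
          [2, 1, 0]]

out_stride1 = conv2d(x, kernel, stride=1)
out_stride2 = conv2d(x, kernel, stride=2)
print(out_stride1)
print(out_stride2)
```

The top-left value, for instance, is 1*1 + 2*2 + 0*1 + 0*0 + 1*1 + 2*0 + 1*2 + 2*1 + 1*0 = 10.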

11. Convolution layer

conv2d

- CLASS torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0,

- dilation=1, groups=1, bias=True, padding_mode='zeros', device=None, dtype=None)

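Parameters:

- in_channels (int): number of channels in the input image

- out_channels (int): number of channels produced by the convolution

When in_channels=1 and out_channels=1, a single convolution kernel is used, as in the figure below:

When in_channels=1 and out_channels=2, two convolution kernels are created and each produces one output channel, as shown below:

- kernel_size (int or tuple): size of the convolution kernel

- stride (int or tuple, optional): stride of the convolution. Default: 1

- padding (int, tuple or str, optional): padding added to all four sides of the input. Default: 0

- padding_mode (str, optional): 'zeros', 'reflect', 'replicate' or 'circular'. Default: 'zeros'

- dilation (int or tuple, optional): spacing between kernel elements. Default: 1

- groups (int, optional): number of blocked connections from input channels to output channels. Default: 1

- bias (bool, optional): if True, adds a learnable bias to the output. Default: True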

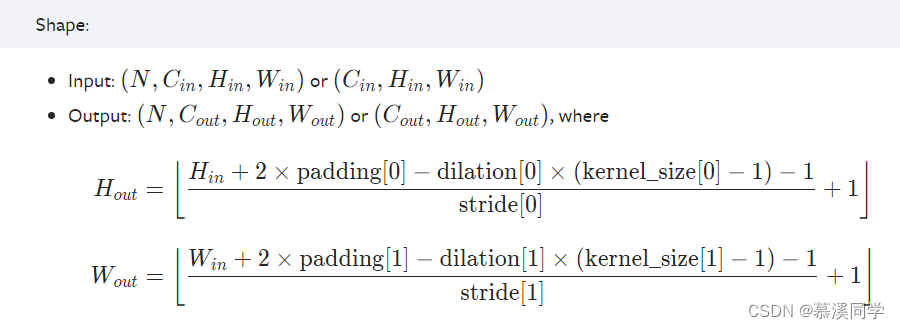

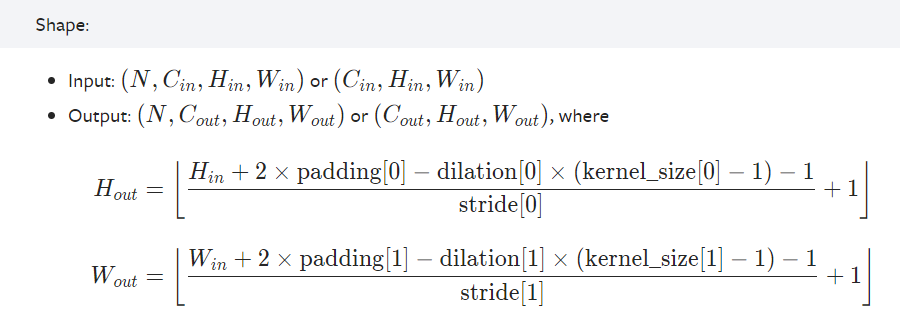

Output size formula:
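For reference, the output height of Conv2d as given in the PyTorch documentation is reproduced here. The width follows the same rule with index 1, and the symbol names mirror the constructor arguments.

```latex
H_{out} = \left\lfloor \frac{H_{in} + 2 \cdot \text{padding}[0] - \text{dilation}[0] \cdot (\text{kernel\_size}[0] - 1) - 1}{\text{stride}[0]} + 1 \right\rfloor
```

With an input height of 32, kernel_size 3, stride 1, padding 0 and dilation 1, this gives (32 + 0 - 2 - 1) / 1 + 1 = 30.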

Implementing a convolution layer:

- import torch

- import torchvision

- from torch import nn

- from torch.utils.data import DataLoader

- from torch.utils.tensorboard import SummaryWriter

-

- dataset = torchvision.datasets.CIFAR10(root="./data", train=False, transform=torchvision.transforms.ToTensor(),

- download=True) # download the test set

- dataloader = DataLoader(dataset, batch_size=64)

-

-

- class Model(torch.nn.Module):

- def __init__(self):

- super(Model, self).__init__()

- self.conv1 = nn.Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1,

- padding=0)

- # colour images have 3 channels

-

- def forward(self, x):

- x = self.conv1(x)

- return x

-

-

- model = Model()

-

- writer = SummaryWriter('logs')

- step = 0

- for data in dataloader:

- images, targets = data

- output = model(images)

- # print(images.shape)

- # torch.Size([64, 3, 32, 32])

- # print('----')

- # print(output.shape)

- # torch.Size([64, 6, 30, 30])

- # print('****')

- writer.add_images('input', images, step)

- output = torch.reshape(output, (-1, 3, 30, 30)) # fold the 6 channels into 3 so the images can be displayed;

- # the batch dimension grows as a result, and -1 lets PyTorch compute it automatically.

- writer.add_images('output', output, step)

- step += 1

- writer.close()

- # The shapes show that the input has 3 channels (in_channels) and the output has 6 (out_channels),

- # and the image size shrinks from 32 to 30.

-

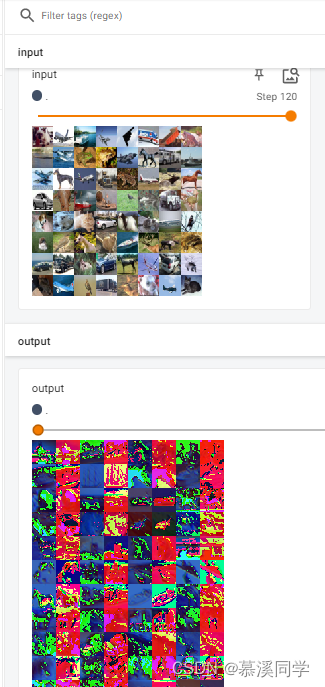

Note: a colour image needs 3 channels to be displayed, but after the convolution layer this image has 6 channels, so reshape is used to convert it.

Result:

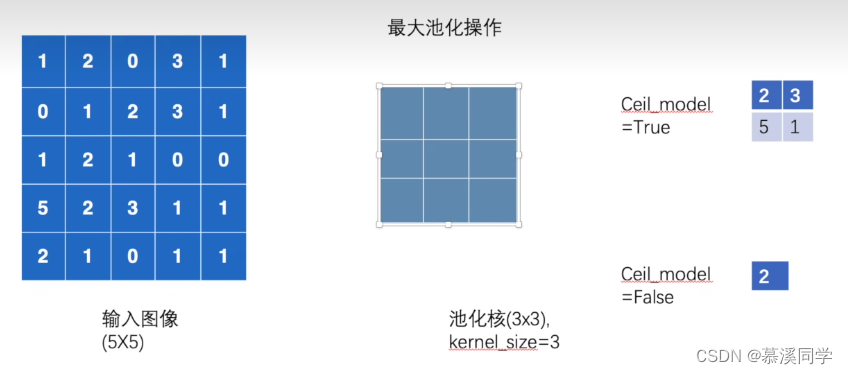

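12. Max pooling

Max pooling keeps the salient features of the data while shrinking it, so later layers have fewer parameters to train and training runs faster. A pooling layer usually follows a convolution layer.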

- torch.nn.MaxPool2d(kernel_size, stride=None, padding=0, dilation=1, return_indices=False,

- ceil_mode=False)

Parameters:

- kernel_size: size of the window

- stride: stride of the window (note: the default is kernel_size)

- padding: amount of padding

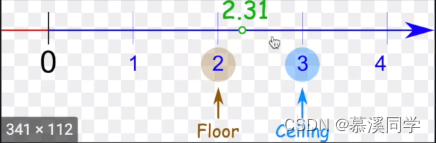

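- ceil_mode: when True, uses ceil instead of floor to compute the output shape (for 2.31, floor rounds down to 2 and ceil rounds up to 3)

dilation: controls the spacing between elements inside the window; as in the figure below, each element of the kernel is separated from the next by a gap of 1.

How max pooling works:

With a 3x3 window and a stride of 3 on the 5x5 input, ceil_mode=True gives 4 output values, while ceil_mode=False gives only one. With ceil_mode=True, windows that only partly cover the input are kept and still output their maximum.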

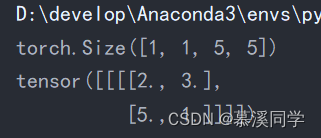

Code:

- import torch

- import torchvision

- from torch import nn

-

- x = torch.tensor([[1, 2, 0, 3, 1],

- [0, 1, 2, 3, 1],

- [1, 2, 1, 0, 0],

- [5, 2, 3, 1, 1],

- [2, 1, 0, 1, 1]], dtype=torch.float32) # the data must be floating point

- x = torch.reshape(x, (-1, 1, 5, 5))

- print(x.shape)

-

-

- class Model(nn.Module):

- def __init__(self):

- super(Model, self).__init__()

- self.maxpool1 = nn.MaxPool2d(kernel_size=3, ceil_mode=True)

-

- def forward(self, x):

- output = self.maxpool1(x)

- return output

-

-

- model = Model()

- output = model(x)

- print(output)

-

Result:

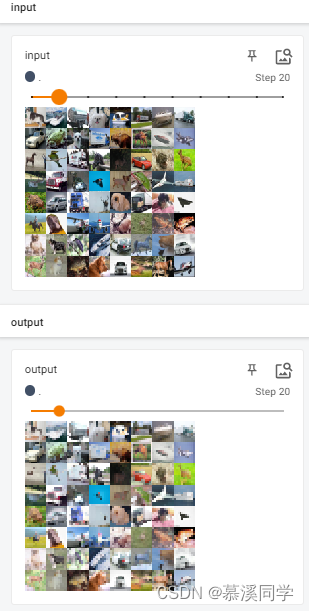
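The difference between the two rounding modes can be reproduced without PyTorch. The sketch below is a hypothetical `max_pool2d` helper that assumes a square input at least as large as the window, stride equal to kernel_size, and no padding, which matches the example above.

```python
import math


def max_pool2d(image, kernel_size, ceil_mode=False):
    """Max pooling on a square nested list; stride == kernel_size, no padding."""
    n = len(image)
    rounding = math.ceil if ceil_mode else math.floor
    out_size = rounding((n - kernel_size) / kernel_size) + 1
    output = []
    for row in range(out_size):
        out_row = []
        for col in range(out_size):
            # Windows at the border are clipped to the input when ceil_mode is on.
            window = [
                image[i][j]
                for i in range(row * kernel_size, min((row + 1) * kernel_size, n))
                for j in range(col * kernel_size, min((col + 1) * kernel_size, n))
            ]
            out_row.append(max(window))
        output.append(out_row)
    return output


x = [[1, 2, 0, 3, 1],
     [0, 1, 2, 3, 1],
     [1, 2, 1, 0, 0],
     [5, 2, 3, 1, 1],
     [2, 1, 0, 1, 1]]

pooled_ceil = max_pool2d(x, 3, ceil_mode=True)
pooled_floor = max_pool2d(x, 3, ceil_mode=False)
print(pooled_ceil)
print(pooled_floor)
```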

Max pooling on an image dataset:

- import torch

- import torchvision

- from torch import nn

-

- from torch.utils.data import DataLoader

- from torch.utils.tensorboard import SummaryWriter

-

- dataset = torchvision.datasets.CIFAR10(root="./data", train=False, download=True,

- transform=torchvision.transforms.ToTensor())

- dataloader = DataLoader(dataset, batch_size=64)

-

-

- # x = torch.tensor([[1, 2, 0, 3, 1],

- # [0, 1, 2, 3, 1],

- # [1, 2, 1, 0, 0],

- # [5, 2, 3, 1, 1],

- # [2, 1, 0, 1, 1]], dtype=torch.float32) # the data must be floating point

- # x = torch.reshape(x, (-1, 1, 5, 5))

- # print(x.shape)

-

-

- class Model(nn.Module):

- def __init__(self):

- super(Model, self).__init__()

- self.maxpool1 = nn.MaxPool2d(kernel_size=3, ceil_mode=True)

-

- def forward(self, x):

- output = self.maxpool1(x)

- return output

-

-

- model = Model()

- writer = SummaryWriter('log_maxpool')

- step = 0

- for data in dataloader:

- images, targets = data

- writer.add_images('input', images, step)

- output = model(images)

- writer.add_images('output', output, step)

- step += 1

-

- writer.close()

Images after pooling:

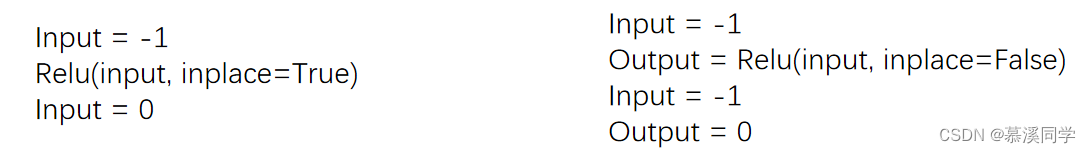

13. Nonlinear activation

There are many nonlinear activation functions, such as ReLU and sigmoid.

Taking ReLU as the example:

- torch.nn.ReLU(inplace=False)

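- # inplace (bool): optionally do the operation in place

inplace:

When inplace is True, the operation overwrites the original variable; when it is False, a new variable receives the return value. False is the usual choice because it preserves the original data.

Code: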

- import torch

- from torch import nn

-

- x = torch.tensor([[1, -0.5],

- [-1, 3]])

-

- x = torch.reshape(x, (-1, 1, 2, 2)) # give the input a batch dimension

- print(x.shape)

-

-

- class Model(nn.Module):

- def __init__(self):

- super(Model, self).__init__()

- self.relu = nn.ReLU()

-

- def forward(self, x):

- y = self.relu(x)

- return y

-

-

- model = Model()

- y = model(x)

- print(y)

-

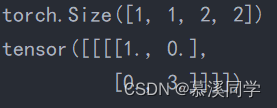

Result:

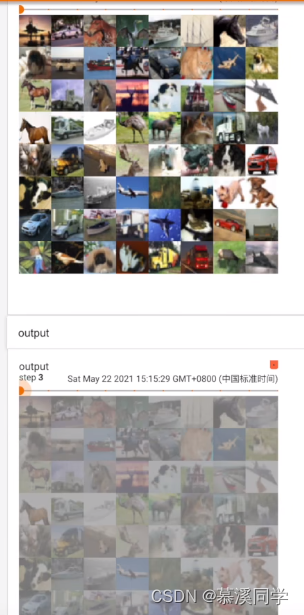
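As a rough illustration of what inplace means, the sketch below implements ReLU on nested lists in both styles. `relu` and `relu_` are hypothetical helpers for this article; the trailing underscore only borrows PyTorch's naming habit for in-place operations.

```python
def relu(values):
    """Return a new nested list with negatives replaced by 0 (like inplace=False)."""
    return [[max(0.0, v) for v in row] for row in values]


def relu_(values):
    """Overwrite the nested list itself (like inplace=True)."""
    for row in values:
        for i, v in enumerate(row):
            row[i] = max(0.0, v)
    return values


x = [[1.0, -0.5], [-1.0, 3.0]]
y = relu(x)
x_before = [row[:] for row in x]   # copy to show x is untouched so far
relu_(x)
print(y)
print(x_before)
print(x)
```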

Applying the activation to images:

- import torch

- from torch import nn

- import torchvision

- from torch.utils.data import DataLoader

- from torch.utils.tensorboard import SummaryWriter

-

- # x = torch.tensor([[1, -0.5],

- # [-1, 3]])

- #

- # x = torch.reshape(x, (-1, 1, 2, 2)) # give the input a batch dimension

- # print(x.shape)

-

- dataset = torchvision.datasets.CIFAR10('./CIFAR_dataset', train=False, download=True,

- transform=torchvision.transforms.ToTensor())

-

- dataloader = DataLoader(dataset, batch_size=64)

-

-

- class Model(nn.Module):

- def __init__(self):

- super(Model, self).__init__()

- self.relu = nn.ReLU()

- self.sigmoid = nn.Sigmoid()

-

- def forward(self, x):

- y = self.sigmoid(x)

- return y

-

-

- model = Model()

-

- writer = SummaryWriter('log_nn_relu')

- step = 0

- for data in dataloader:

- images, targets = data

- writer.add_images('input', images, step)

- output = model(images)

- writer.add_images('output', output, step)

- step += 1

-

- writer.close()

-

Result:

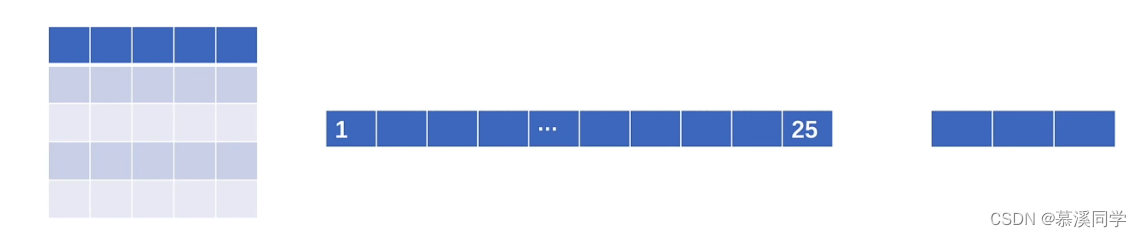

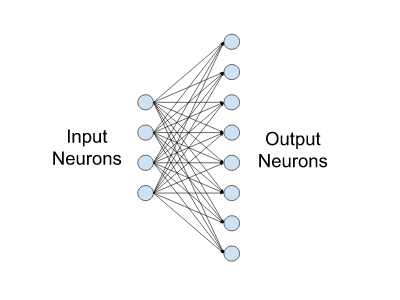

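14. Linear layers

As in the figure above, a linear layer maps the 25 flattened values to 3 values, or performs a similar mapping.

Linear layer:

- torch.nn.Linear(in_features, out_features, bias=True, device=None, dtype=None)

- output = input * w^T + b (b is the bias)

The flatten function: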

- torch.flatten(input, start_dim=0, end_dim=- 1)

- #input (Tensor) – the input tensor.

- #start_dim (int) – the first dim to flatten

- #end_dim (int) – the last dim to flatten

-

- Example:

- >>> t = torch.tensor([[[1, 2],

- ... [3, 4]],

- ... [[5, 6],

- ... [7, 8]]])

- >>> torch.flatten(t)

- tensor([1, 2, 3, 4, 5, 6, 7, 8])

- >>> torch.flatten(t, start_dim=1)

- tensor([[1, 2, 3, 4],

- [5, 6, 7, 8]])

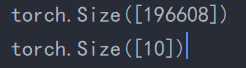

Linear layer in code:

- import torchvision

- import torch

- from torch import nn

- from torch.utils.data import DataLoader

-

- dataset = torchvision.datasets.CIFAR10(root="./CIFAR_dataset", train=False, transform=torchvision.transforms.ToTensor(),

- download=True)

- dataloader = DataLoader(dataset, batch_size=64, drop_last=True) # drop the final 16-image batch, which would not match Linear(196608, 10)

-

-

- class Model(nn.Module):

- def __init__(self):

- super(Model, self).__init__()

- self.linear1 = nn.Linear(196608, 10)

-

- def forward(self, x):

- y = self.linear1(x)

- return y

-

-

- model = Model()

- for data in dataloader:

- images, targets = data

- # print(images.shape) torch.Size([64, 3, 32, 32])

- # output = torch.reshape(images, (1, 1, 1, -1)) torch.Size([1, 1, 1, 196608])

- output = torch.flatten(images) # flatten the whole batch into one vector; replaces the reshape on the previous line

- print(output.shape)

- output = model(output)

- print(output.shape)

-
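The number 196608 and the linear mapping itself can both be checked by hand. The sketch below is plain Python with a hypothetical `linear` helper and made-up toy weights; it is only meant to show the arithmetic behind output = input * w^T + b.

```python
def linear(x, weight, bias):
    """y[j] = sum_i x[i] * weight[j][i] + bias[j], i.e. y = x W^T + b."""
    return [sum(xi * wi for xi, wi in zip(x, row)) + b for row, b in zip(weight, bias)]


# Flattening one batch of 64 CIFAR10 images gives 64 * 3 * 32 * 32 values.
flattened_size = 64 * 3 * 32 * 32
print(flattened_size)

# Tiny stand-in: 4 input features mapped to 2 output features.
x = [1.0, 2.0, 3.0, 4.0]
weight = [[1.0, 0.0, 0.0, 0.0],
          [0.5, 0.5, 0.5, 0.5]]
bias = [0.0, 1.0]
y = linear(x, weight, bias)
print(y)
```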

Result:

15. Building a network with Sequential

sequential:

- torch.nn.Sequential(*args: Module)

-

- Example:

- # Using Sequential to create a small model. When `model` is run,

- # input will first be passed to `Conv2d(1,20,5)`. The output of

- # `Conv2d(1,20,5)` will be used as the input to the first

- # `ReLU`; the output of the first `ReLU` will become the input

- # for `Conv2d(20,64,5)`. Finally, the output of

- # `Conv2d(20,64,5)` will be used as input to the second `ReLU`

- model = nn.Sequential(

- nn.Conv2d(1,20,5),

- nn.ReLU(),

- nn.Conv2d(20,64,5),

- nn.ReLU()

- )

-

- # Using Sequential with OrderedDict. This is functionally the

- # same as the above code

- model = nn.Sequential(OrderedDict([

- ('conv1', nn.Conv2d(1,20,5)),

- ('relu1', nn.ReLU()),

- ('conv2', nn.Conv2d(20,64,5)),

- ('relu2', nn.ReLU())

- ]))

-

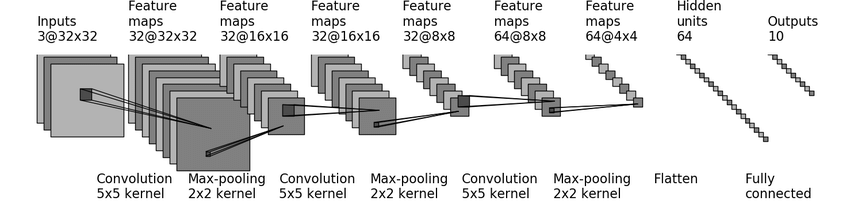

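Model structure for CIFAR10:

To implement the CIFAR10 model structure above, the relevant parameters are derived with the formula below:

dilation normally stays at its default of 1, and stride defaults to 1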
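The derivation can be sketched in a few lines of plain Python. `conv_out` follows the Conv2d output-size rule from the PyTorch documentation, and `pool_out` assumes MaxPool2d with its default stride (equal to the kernel size) and no padding; both are hypothetical helpers written for this check.

```python
def conv_out(size, kernel, stride=1, padding=0, dilation=1):
    """Output size of a convolution along one spatial dimension."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def pool_out(size, kernel):
    """Output size of MaxPool2d(kernel) with default stride and no padding."""
    return (size - kernel) // kernel + 1


# Smallest padding that keeps a 32x32 input at 32x32 for a 5x5 kernel, stride 1.
padding = next(p for p in range(10) if conv_out(32, 5, padding=p) == 32)
print(padding)

# Trace the spatial size through three conv + pool stages.
size = 32
sizes = []
for out_channels in (32, 32, 64):
    size = conv_out(size, 5, padding=padding)
    size = pool_out(size, 2)
    sizes.append(size)
flat_features = 64 * size * size
print(sizes, flat_features)
```

The final value is the in_features needed by the first Linear layer after Flatten.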

Code implementing the CIFAR10 model structure above:

- import torch

- from torch import nn

-

-

- class Model(nn.Module):

- def __init__(self):

- super(Model, self).__init__()

- # in_channels starts at 3, out_channels is 32 and kernel_size is 5; the Conv2d output-size formula then gives padding=2

- self.conv1 = nn.Conv2d(3, 32, 5, padding=2)

- self.maxpool1 = nn.MaxPool2d(2)

- self.conv2 = nn.Conv2d(32, 32, 5, padding=2)

- self.maxpool2 = nn.MaxPool2d(2)

- self.conv3 = nn.Conv2d(32, 64, 5, padding=2)

- self.maxpool3 = nn.MaxPool2d(2)

- self.flatten = nn.Flatten()

- self.linear1 = nn.Linear(1024, 64)

- self.linear2 = nn.Linear(64, 10)

-

- def forward(self, x):

- x = self.conv1(x)

- x = self.maxpool1(x)

- x = self.conv2(x)

- x = self.maxpool2(x)

- x = self.conv3(x)

- x = self.maxpool3(x)

- x = self.flatten(x)

- x = self.linear1(x)

- x = self.linear2(x)

- return x

-

- model = Model()

- print(model)

- input = torch.ones((64, 3, 32, 32))

- output = model(input)

- print(output.shape)

-

Result:

The same code simplified with Sequential:

- import torch

- from torch import nn

- from torch.nn import Conv2d, MaxPool2d, Linear, Sequential, Flatten

- from torch.utils.tensorboard import SummaryWriter

-

-

- class Model(nn.Module):

- def __init__(self):

- super(Model, self).__init__()

- self.model1 = Sequential(

- # in_channels starts at 3, out_channels is 32 and kernel_size is 5; the Conv2d output-size formula then gives padding=2

- Conv2d(3, 32, 5, padding=2),

- MaxPool2d(2),

- Conv2d(32, 32, 5, padding=2),

- MaxPool2d(2),

- Conv2d(32, 64, 5, padding=2),

- MaxPool2d(2),

- Flatten(),

- Linear(1024, 64),

- Linear(64, 10)

- )

-

- def forward(self, x):

- x = self.model1(x)

- return x

-

-

- model = Model()

- print(model)

- x = torch.ones((64, 3, 32, 32))

- y = model(x)

- # y=torch.unsqueeze(y, dim=0)

- print(y.shape)

-

- writer = SummaryWriter('log_seq')

- writer.add_graph(model, x)

- writer.close()

-

-

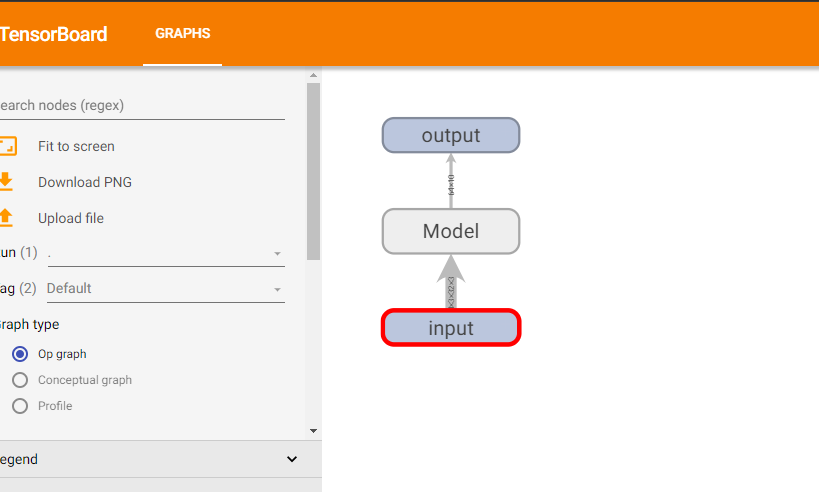

Opening TensorBoard shows a visualization of the model graph written by add_graph.

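16. Loss functions and backpropagation

Loss functions

What a loss function does:

1. Measures the gap between the actual output and the target output

2. Gives us a basis for updating the parameters so the output improves (backpropagation), namely the gradient (grad)

Mean absolute error: L1Loss

Subtract corresponding elements, take the absolute values, then average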

- torch.nn.L1Loss(size_average=None, reduce=None, reduction='mean')

- # reduction defaults to 'mean'; it can be changed to 'sum'

Code:

- import torch

- from torch.nn import L1Loss

-

- inputs = torch.tensor([1, 2, 3], dtype=torch.float32)

- targets = torch.tensor([1, 2, 5], dtype=torch.float32)

-

- inputs = torch.reshape(inputs, (1, 1, 1, 3,))

- targets = torch.reshape(targets, (1, 1, 1, 3))

-

- loss = L1Loss()

- result = loss(inputs, targets)

- # L1Loss = (|1-1| + |2-2| + |3-5|) / 3

- print(result)

-

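Result:

Mean squared error: MSELoss

Creates a criterion that measures the mean squared error (squared L2 norm) between each element of the input x and the target y.

torch.nn.MSELoss(size_average=None, reduce=None, reduction='mean')

Code: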

- from torch.nn import MSELoss

- loss_mse = MSELoss()

- result2 = loss_mse(inputs, targets)

- # MSELoss = (0 + 0 + 2^2) / 3

- print(result2)

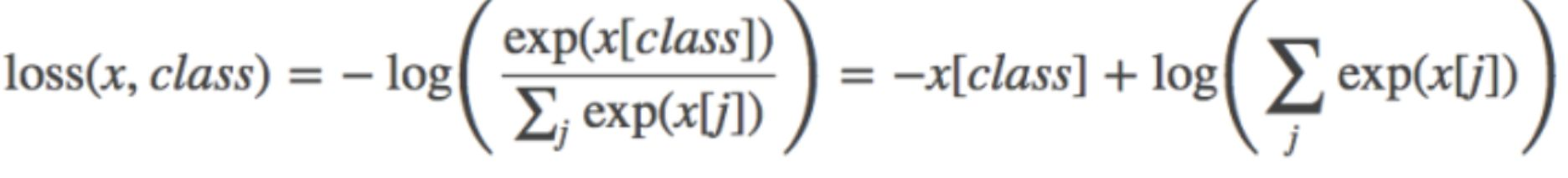

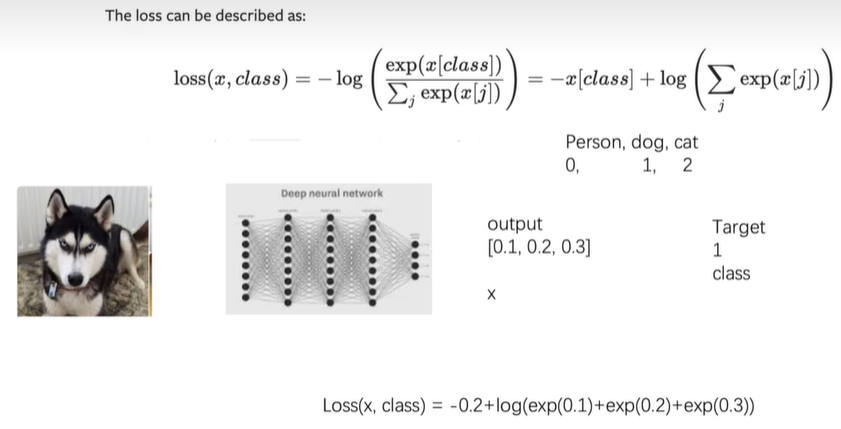
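Both losses can be verified by hand for the inputs [1, 2, 3] and targets [1, 2, 5] used above. This is a plain-Python check of the arithmetic with the default 'mean' reduction, not a call into torch.

```python
inputs = [1.0, 2.0, 3.0]
targets = [1.0, 2.0, 5.0]

# L1Loss with reduction='mean': average of absolute differences.
l1 = sum(abs(x - y) for x, y in zip(inputs, targets)) / len(inputs)

# MSELoss with reduction='mean': average of squared differences.
mse = sum((x - y) ** 2 for x, y in zip(inputs, targets)) / len(inputs)

print(round(l1, 4), round(mse, 4))
```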

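Cross entropy: CrossEntropyLoss

(In essence it combines the softmax formula with the cross-entropy formula)

It is useful when training a classification problem with C classes. If provided, the optional weight argument should be a 1D tensor assigning a weight to each class. This is particularly useful when the training set is unbalanced.

Formula:

The formula is explained in the figure below:

Suppose we want to predict how likely an image is to be a dog, and the output layer gives a score of 0.1 for person, 0.2 for dog and 0.3 for cat. The target is set to 1, the index of dog, so x=[0.1, 0.2, 0.3] and class=1; substitute these into the formula.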

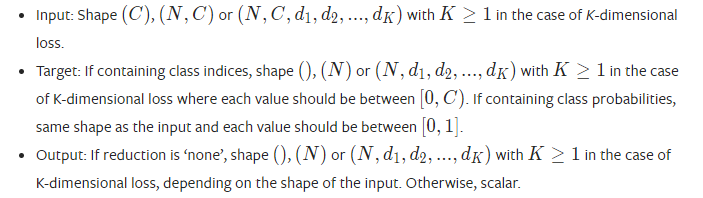
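For a single sample without class weights, the cross-entropy loss can be written as loss(x, class) = -x[class] + log(sum over j of exp(x[j])). The sketch below evaluates that expression in plain Python for the example values; `cross_entropy` is a hypothetical helper, not the torch implementation.

```python
import math


def cross_entropy(scores, target):
    """loss = -scores[target] + log(sum_j exp(scores[j])) for one sample."""
    return -scores[target] + math.log(sum(math.exp(s) for s in scores))


loss = cross_entropy([0.1, 0.2, 0.3], 1)
print(round(loss, 4))
```

The three scores are close together, so the loss stays near log(3), the value for a uniform prediction over three classes.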

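Shape requirements for cross entropy:

N is the batch size and C is the number of classes; CIFAR10 above has 10 classes, so C is 10 here

Backpropagation: backward

After computing a loss, we can call backward() on it to run backpropagation. Backpropagation computes a gradient for every parameter that can be adjusted; an optimizer then uses these gradients to update the parameters, which lowers the error.

Optimizer: optim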

- import torch

- from torch import nn

- import torchvision

- from torch.nn import Conv2d, MaxPool2d, Linear, Sequential, Flatten, CrossEntropyLoss

- from torch.utils.data import DataLoader

- from torch.utils.tensorboard import SummaryWriter

-


-

- dataset = torchvision.datasets.CIFAR10('./data', train=False, transform=torchvision.transforms.ToTensor(),

- download=True)

- dataloader = DataLoader(dataset, batch_size=1)

-

-

- class Model(nn.Module):

- def __init__(self):

- super(Model, self).__init__()

- self.model1 = Sequential(

- # in_channels starts at 3, out_channels is 32 and kernel_size is 5; the Conv2d output-size formula then gives padding=2

- Conv2d(3, 32, 5, padding=2),

- MaxPool2d(2),

- Conv2d(32, 32, 5, padding=2),

- MaxPool2d(2),

- Conv2d(32, 64, 5, padding=2),

- MaxPool2d(2),

- Flatten(),

- Linear(1024, 64),

- Linear(64, 10)

- )

-

- def forward(self, x):

- x = self.model1(x)

- return x

-

-

- loss = CrossEntropyLoss()

-

- model = Model()

-

- optim = torch.optim.SGD(model.parameters(), lr=0.01) # optimizer; lr is the learning rate

- for epoch in range(20): # train for several epochs

- running_loss = 0

- for data in dataloader:

- images, targets = data

- outputs = model(images)

- result_loss = loss(outputs, targets)

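- optim.zero_grad() # reset the gradient of every trainable parameter to zero

- result_loss.backward() # compute the gradient of the loss for every parameter

- optim.step() # update every parameter using its gradient

- running_loss = running_loss + result_loss.item() # .item() keeps a plain float instead of a tensor

- print(running_loss) # total loss for this epoch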

-

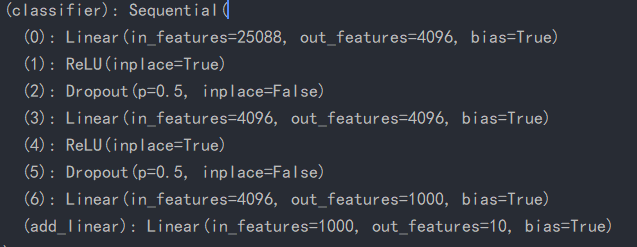

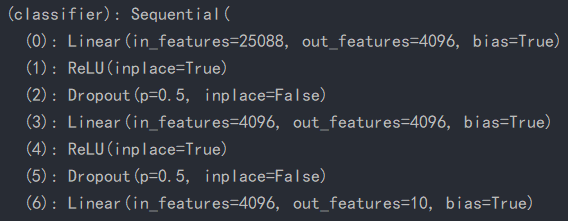
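The loss, gradient, update cycle can be shown on a one-parameter toy problem. The following sketch is plain Python with a hand-derived gradient, standing in for what backward() and a plain SGD step() do; the sample (x=2, y=6) and the learning rate 0.1 are arbitrary choices for illustration.

```python
# Fit w in y = w * x to one sample with plain gradient descent.
x, y = 2.0, 6.0
w = 0.0
lr = 0.1
for step in range(50):
    pred = w * x
    loss = (pred - y) ** 2
    grad = 2 * (pred - y) * x    # d(loss)/dw, the value backward() would compute
    w = w - lr * grad            # the update a plain SGD step() applies
print(round(w, 4), loss)
```

Each pass moves w closer to 3, the value for which w * x equals y, and the loss shrinks accordingly.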

17. Modifying existing networks

The VGG model

The VGG model is trained on ImageNet, which has 1000 classes, and it can serve as a feature extractor.

Network structure:

- VGG(

- (features): Sequential(

- (0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

- (1): ReLU(inplace=True)

- (2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

- (3): ReLU(inplace=True)

- (4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

- (5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

- (6): ReLU(inplace=True)

- (7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

- (8): ReLU(inplace=True)

- (9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

- (10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

- (11): ReLU(inplace=True)

- (12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

- (13): ReLU(inplace=True)

- (14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

- (15): ReLU(inplace=True)

- (16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

- (17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

- (18): ReLU(inplace=True)

- (19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

- (20): ReLU(inplace=True)

- (21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

- (22): ReLU(inplace=True)

- (23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

- (24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

- (25): ReLU(inplace=True)

- (26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

- (27): ReLU(inplace=True)

- (28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

- (29): ReLU(inplace=True)

- (30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

- )

- (avgpool): AdaptiveAvgPool2d(output_size=(7, 7))

- (classifier): Sequential(

- (0): Linear(in_features=25088, out_features=4096, bias=True)

- (1): ReLU(inplace=True)

- (2): Dropout(p=0.5, inplace=False)

- (3): Linear(in_features=4096, out_features=4096, bias=True)

- (4): ReLU(inplace=True)

- (5): Dropout(p=0.5, inplace=False)

- (6): Linear(in_features=4096, out_features=1000, bias=True)

- )

- )

-

Modifying parts of the VGG network (saving and loading models, changing and adding layers)

- import torchvision

- from torch import nn

-

- vgg16_false = torchvision.models.vgg16(pretrained=False)

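- # pretrained=False builds the network with randomly initialised weights; pretrained=True also downloads weights trained on ImageNet (newer torchvision releases replace this flag with the weights argument)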

- vgg16_true = torchvision.models.vgg16(pretrained=True)

-

- vgg16_true.classifier.add_module('add_linear', nn.Linear(1000, 10))

- # append a linear layer to the classifier of the existing VGG network, with 1000 inputs and 10 outputs

-

- print(vgg16_true)

- print('---------------')

- print(vgg16_false)

- vgg16_false.classifier[6] = nn.Linear(4096, 10) # replace the last linear layer in classifier to change its output size

- print(vgg16_false)

-

Effect of vgg16_true.classifier.add_module('add_linear', nn.Linear(1000, 10)):

Effect of vgg16_false.classifier[6] = nn.Linear(4096, 10):

18. Saving and loading models

The complete model validation routine

Saving a model

- import torch

- import torchvision

- from torch import nn

-

- vgg16 = torchvision.models.vgg16(pretrained=False)

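- # Saving the model

- # Method 1: save the whole model (structure and parameters)

- torch.save(vgg16, 'vgg16_method1.pth')

-

- # Method 2: save only the parameters as a dictionary (the officially recommended format)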

- torch.save(vgg16.state_dict(), 'vgg16_method2.pth')

-

-

- # A custom network model

- class Model(nn.Module):

- def __init__(self):

- super(Model, self).__init__()

- self.conv1 = nn.Conv2d(3, 64, kernel_size=3)

-

- def forward(self, x):

- x = self.conv1(x)

- return x

-

-

- model = Model()

- torch.save(model, 'model.pth')

-

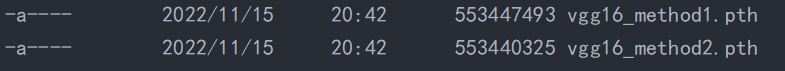

The file saved by method 2 is smaller than the one saved by method 1

Method 2 saves the parameters in dictionary form

Loading a model

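- # Method 1: load a model saved with method 1 (on recent PyTorch versions this may need torch.load(..., weights_only=False))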

- import torch

- import torchvision

- from model_save import *

-

- model = torch.load('vgg16_method1.pth')

- # print(model)

- print('------------')

- # Method 2: load the saved dictionary

- model2 = torch.load('vgg16_method2.pth')

- # print(model2)

- print('************')

- # Rebuild the network first, then load the saved dictionary of parameters into it

- vgg16 = torchvision.models.vgg16(pretrained=False)

- vgg16.load_state_dict(torch.load('vgg16_method2.pth'))

- # print(vgg16)

-

- # To load a custom network saved with method 1, the class definition must be imported first

- model = torch.load('model.pth')

- print(model)

-

19. A complete training run

- import torch

- import torchvision

- from torch.utils.data import DataLoader

- from torch.nn import CrossEntropyLoss

- from torch.utils.tensorboard import SummaryWriter

-

- from model import *

-

- # 1. Get the training and test datasets

- train_data = torchvision.datasets.CIFAR10(root="./data", train=True, transform=torchvision.transforms.ToTensor(),

- download=True)

-

- test_data = torchvision.datasets.CIFAR10(root="./data", train=False, transform=torchvision.transforms.ToTensor(),

- download=True)

-

- # Size of each dataset

- train_data_size = len(train_data)

- test_data_size = len(test_data)

-

- # Python string formatting: format() replaces each {} with its argument

- print('Size of the training set: {}'.format(train_data_size))

- print('Size of the test set: {}'.format(test_data_size))

-

- # 2. Load the datasets with DataLoader

- train_dataloader = DataLoader(train_data, batch_size=64)

- test_dataloader = DataLoader(test_data, batch_size=64)

-

- # 4. Create the network model

- network = Network()

-

- # 5. Create the loss function

- loss_fn = CrossEntropyLoss()

-

- # 6. Create the optimizer

- # learning_rate = 0.01

- learning_rate = 1e-2

- optimizer = torch.optim.SGD(network.parameters(), lr=learning_rate)

-

- # 7. Set up bookkeeping for the training process

- # number of training steps so far

- total_train_steps = 0

- # number of test rounds so far

- total_test_steps = 0

- # number of training epochs

- epochs = 10

-

- # add TensorBoard logging

- writer = SummaryWriter('log_train')

-

- for i in range(epochs):

- print('----- Epoch {} training starts -----'.format(i + 1))

- # 8. Training step

- network.train() # switches layers such as Dropout and BatchNorm to training behaviour

- for data in train_dataloader:

- images, targets = data

- outputs = network(images)

- loss = loss_fn(outputs, targets) # 计算误差

-

- # 放入优化器模型当中进行优化

- optimizer.zero_grad() # 使用优化器清零

- loss.backward() # 使用反向传播

- optimizer.step()

- total_train_steps += 1

-

- # 展示输出结果

- if total_train_steps % 100 == 0:

- print("训练次数:{},损失值为:{}".format(total_train_steps, loss.item()))

- writer.add_scalar("train_loss", loss.item(), total_train_steps)

-

- # 9、进行检测

- network.eval() # 这行代码是为了应对神经网络中的一些特殊的层

- total_test_loss = 0 # 统计整体测试损失

- total_accuracy = 0 # 统计整体精确度

- with torch.no_grad(): # 消除网络模型当中的梯度

- for data in test_dataloader:

- images, targets = data

- outputs = network(images)

- loss = loss_fn(outputs, targets)

- total_test_loss += loss.item()

- accuracy = (outputs.argmax(1) == targets).sum()

- total_accuracy += accuracy

- print('整体测试集上面的loss:{}'.format(total_test_loss))

- print('整体测试集上面的准确率:{}'.format(total_accuracy / test_data_size))

- writer.add_scalar("test_loss", total_test_loss, total_test_steps)

- writer.add_scalar("test_accuracy", total_accuracy / test_data_size, total_test_steps)

- total_test_steps += 1

-

- # 10.保存模型

- torch.save(network, 'network_{}.pth'.format(i))

- # 官方推荐保存模式 torch.save(network.state_dict(),'network_{}.pth'.format(i))

- print('模型已经保存')

-

- writer.close()

-

The network used in step 4 (model.py)

- from torch.nn import Module, Sequential, Conv2d, MaxPool2d, Flatten, Linear

- import torch

-

-

- # 3. Build the neural network

- class Network(Module):

-     def __init__(self):

-         super(Network, self).__init__()

-         self.model = Sequential(

-             Conv2d(3, 32, 5, 1, 2),

-             MaxPool2d(2),

-             Conv2d(32, 32, 5, 1, 2),

-             MaxPool2d(2),

-             Conv2d(32, 64, 5, 1, 2),

-             MaxPool2d(2),

-             Flatten(),

-             Linear(64 * 4 * 4, 64),

-             Linear(64, 10)

-         )

-

-     def forward(self, x):

-         x = self.model(x)

-         return x

-

-

- if __name__ == '__main__':

-     network = Network()

-     inputs = torch.ones((64, 3, 32, 32))

-     print(inputs.shape)

-     outputs = network(inputs)

-     print(outputs.shape)

-

Computing accuracy

- import torch

-

- print(torch.__version__)

- print(torch.cuda.is_available())

-

- outputs = torch.tensor([[0.1, 0.2],

- [0.3, 0.4]])

-

- print(outputs.argmax(1))

- preds = outputs.argmax(1)

- targets = torch.tensor([0, 1])

- print((preds == targets).sum())

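- """

- How argmax works

- With dim=1 each row is compared: 0.1 < 0.2 gives index 1 (the position of 0.2); likewise 0.3 < 0.4 gives index 1

- With dim=0 each column is compared: 0.1 < 0.3 gives index 1 (the position of 0.3); likewise 0.2 < 0.4 gives index 1

- """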

20. Training on the GPU

First approach

Call .cuda() on the following three things to train on the GPU:

- The network model

- The data (inputs and labels)

- The loss function
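A minimal sketch of the first approach follows. The single Linear layer and the random batch are stand-ins chosen for illustration, not the tutorial's Network or CIFAR10 data, and the torch.cuda.is_available() guard is an addition so the script still runs on a machine without a GPU.

```python
import torch
from torch import nn

# Hypothetical stand-ins for the tutorial's network and one batch of data.
network = nn.Linear(8, 3)
loss_fn = nn.CrossEntropyLoss()
images = torch.randn(4, 8)
targets = torch.tensor([0, 1, 2, 1])

use_cuda = torch.cuda.is_available()
if use_cuda:
    network = network.cuda()   # 1. the network model
    loss_fn = loss_fn.cuda()   # 3. the loss function
    images = images.cuda()     # 2. the data; tensors are copied, so reassign
    targets = targets.cuda()

outputs = network(images)
loss = loss_fn(outputs, targets)
print(outputs.device, loss.item())
```

In the full training script the two data lines go inside both the training loop and the test loop, right after images and targets are unpacked from each batch.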

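Second approach

- Define device = torch.device('cpu') or torch.device('cuda'); torch.device('cuda:?') selects a specific GPU by index, for example 'cuda:0'

- Move the model, the data, and the loss function to it with .to(device)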
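The second approach can be sketched as one training step. As before, the Linear layer and the random batch are illustrative stand-ins rather than the tutorial's own model and data; picking the device with a conditional expression is a common convention, not something the text above requires.

```python
import torch
from torch import nn

# Choose the device once; everything below is written the same way for CPU and GPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Hypothetical stand-ins for the tutorial's network and one batch of data.
network = nn.Linear(8, 3).to(device)
loss_fn = nn.CrossEntropyLoss().to(device)
optimizer = torch.optim.SGD(network.parameters(), lr=1e-2)

images = torch.randn(4, 8).to(device)            # tensors must be reassigned
targets = torch.tensor([0, 1, 2, 1]).to(device)

outputs = network(images)
loss = loss_fn(outputs, targets)
optimizer.zero_grad()
loss.backward()
optimizer.step()
print(device, loss.item())
```

Compared with sprinkling .cuda() calls through the script, this keeps the hardware choice in a single line, which makes it easy to switch between a laptop and a GPU server.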

21. Complete testing (inference) workflow

- from PIL import Image

- import torchvision

- import torch

- from torch.nn import Module, Sequential, Conv2d, MaxPool2d, Flatten, Linear

-

- image_path = "image/img_2.png"

- image = Image.open(image_path)

- print(image)

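- '''

- Why convert is needed: a PNG image can have 4 channels, the three RGB channels plus a transparency (alpha) channel

- Calling image.convert('RGB') keeps only the RGB channels, so the same code handles both jpg and png files

- '''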

- image = image.convert('RGB')

- transform = torchvision.transforms.Compose([torchvision.transforms.Resize((32, 32)), torchvision.transforms.ToTensor()])

- image = transform(image)

- print(image.shape)

-

-

- class Network(Module):

-     def __init__(self):

-         super(Network, self).__init__()

-         self.model = Sequential(

-             Conv2d(3, 32, 5, 1, 2),

-             MaxPool2d(2),

-             Conv2d(32, 32, 5, 1, 2),

-             MaxPool2d(2),

-             Conv2d(32, 64, 5, 1, 2),

-             MaxPool2d(2),

-             Flatten(),

-             Linear(64 * 4 * 4, 64),

-             Linear(64, 10)

-         )

-

-     def forward(self, x):

-         x = self.model(x)

-         return x

-

-

- # Loading a GPU-trained model on a CPU-only machine requires map_location=torch.device('cpu')

- network = torch.load('network_9.pth', map_location=torch.device('cpu'))

- image = torch.reshape(image, (1, 3, 32, 32))  # add the batch dimension

- network.eval()

- with torch.no_grad():

-     output = network(image)

- print(output)

- print(output.argmax(1))  # index of the predicted class

-

Appendix: common YOLOv5 pitfalls and errors

Note 1: do not add any Chinese comments to the .yaml file; doing so triggers a 'gbk' encoding error.