- 1【079期】面试官:设计一个安全的登录都要考虑哪些?我一脸懵逼。。_设计用户登录表的时候考虑哪些因素

- 2云安全架构的设计_纯云业务如何设计安全架构

- 3FileProvider 安装 apk_fileprovider apk miui

- 4XtraBackup

- 57-36 社交网络图中结点的“重要性”计算 (30 分)(思路加详解)兄弟们PTA乙级题目冲起来

- 6解决 linux 系统中Error: Unable to access jarfile XXX.jar问题_linux unable to access jarfile

- 7DevOps 内容分享(九):利用AI掌握DevOps,构建新的CI/CD流水线_ai devops

- 8数据结构绪论——什么是数据结构?_数据结构由数据的1 point(s)、1 point(s)和1 point(s)三部分组成。

- 9基础环境:wsl2安装Ubuntu22.04 + miniconda_wsl 2 上安装 ubuntu 22.04

- 105年测试被裁,恶补3个月上岸字节28K,面试差点被问哭了_程序员刷题上岸

LLama3最新医疗大模型安装与应用指南_openbiollm

赞

踩

为什么要介绍医疗模型,因为平时我们工作繁忙,可能身体不舒服会拖着到不得已的时候才到医院,特别是老年人怕麻烦,拖延更严重。如果有了这些模型,我们可以向这些模型提问,给一个初步的了解,同时也可以获取一些养生保健知识。因此这些模型是比较良心,造福人类的。

不过如果对于个人医疗需求,请务必咨询合格的医疗保健提供者。

1.医疗大模型介绍

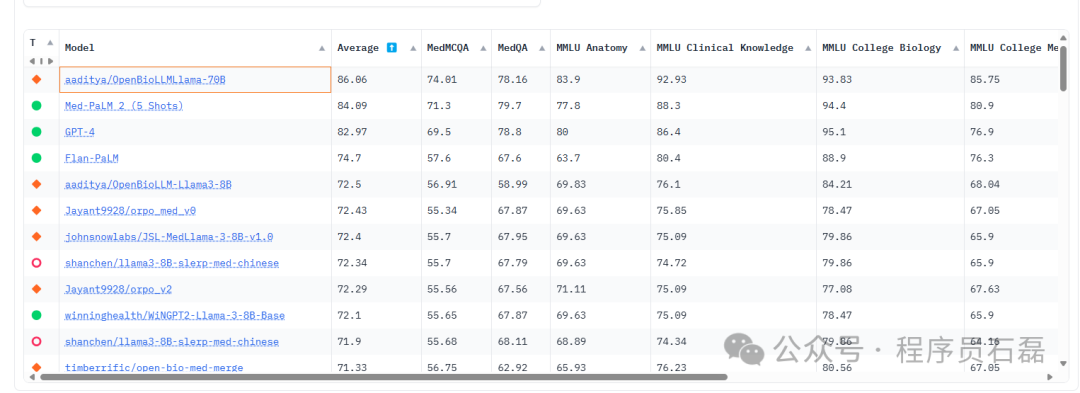

医疗领域的开源 LLM:OpenBioLLM-Llama3,在生物医学领域优于GPT-4、Gemini、Meditron-70B、Med-PaLM-1、Med-PaLM-2

OpenBioLLM-Llama3有两个版本,分别是70B 和 8B

OpenBioLLM-70B提供了SOTA性能,为同等规模模型设立了新的最先进水平

OpenBioLLM-8B模型甚至超越了GPT-3.5、Gemini和Meditron-70B。

- 医疗-LLM排行榜:https://huggingface.co/spaces/openlifescienceai/open_medical_llm_leaderboard

- 70B:https://huggingface.co/aaditya/Llama3-OpenBioLLM-70B

- 8B:https://huggingface.co/aaditya/Llama3-OpenBioLLM-8B

2.安装指南

2.1 下载llama依赖

pip install llama-cpp-python

- 1

安装过程

Collecting llama-cpp-python Downloading llama_cpp_python-0.2.65.tar.gz (38.0 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 38.0/38.0 MB 42.3 MB/s eta 0:00:00 Installing build dependencies ... done Getting requirements to build wheel ... done Installing backend dependencies ... done Preparing metadata (pyproject.toml) ... done Requirement already satisfied: typing-extensions>=4.5.0 in /usr/local/lib/python3.10/dist-packages (from llama-cpp-python) (4.11.0) Requirement already satisfied: numpy>=1.20.0 in /usr/local/lib/python3.10/dist-packages (from llama-cpp-python) (1.25.2) Collecting diskcache>=5.6.1 (from llama-cpp-python) Downloading diskcache-5.6.3-py3-none-any.whl (45 kB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 45.5/45.5 kB 6.7 MB/s eta 0:00:00 Requirement already satisfied: jinja2>=2.11.3 in /usr/local/lib/python3.10/dist-packages (from llama-cpp-python) (3.1.3) Requirement already satisfied: MarkupSafe>=2.0 in /usr/local/lib/python3.10/dist-packages (from jinja2>=2.11.3->llama-cpp-python) (2.1.5) Building wheels for collected packages: llama-cpp-python Building wheel for llama-cpp-python (pyproject.toml) ... done Created wheel for llama-cpp-python: filename=llama_cpp_python-0.2.65-cp310-cp310-linux_x86_64.whl size=39397391 sha256=6f91e47e67bea9fd5cae38ebcc05ea19b6c344a1a609a9d497e4e92e026b611a Stored in directory: /root/.cache/pip/wheels/46/37/bf/f7c65dbafa5b3845795c23b6634863c1fdf0a9f40678de225e Successfully built llama-cpp-python Installing collected packages: diskcache, llama-cpp-python Successfully installed diskcache-5.6.3 llama-cpp-python-0.2.65

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

2.2 下载模型

from huggingface_hub import hf_hub_download

from llama_cpp import Llama

model_name = "aaditya/OpenBioLLM-Llama3-8B-GGUF"

model_file = "openbiollm-llama3-8b.Q5_K_M.gguf"

model_path = hf_hub_download(model_name,

filename=model_file,

local_dir='/content')

print("My model path: ", model_path)

llm = Llama(model_path=model_path,

n_gpu_layers=-1)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

安装过程

openbiollm-llama3-8b.Q5_K_M.gguf: 100% 5.73G/5.73G [00:15<00:00, 347MB/s] llama_model_loader: loaded meta data with 22 key-value pairs and 291 tensors from /content/openbiollm-llama3-8b.Q5_K_M.gguf (version GGUF V3 (latest)) llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output. llama_model_loader: - kv 0: general.architecture str = llama llama_model_loader: - kv 1: general.name str = . llama_model_loader: - kv 2: llama.vocab_size u32 = 128256 llama_model_loader: - kv 3: llama.context_length u32 = 8192 llama_model_loader: - kv 4: llama.embedding_length u32 = 4096 llama_model_loader: - kv 5: llama.block_count u32 = 32 llama_model_loader: - kv 6: llama.feed_forward_length u32 = 14336 llama_model_loader: - kv 7: llama.rope.dimension_count u32 = 128 llama_model_loader: - kv 8: llama.attention.head_count u32 = 32 llama_model_loader: - kv 9: llama.attention.head_count_kv u32 = 8 llama_model_loader: - kv 10: llama.attention.layer_norm_rms_epsilon f32 = 0.000010 llama_model_loader: - kv 11: llama.rope.freq_base f32 = 500000.000000 llama_model_loader: - kv 12: general.file_type u32 = 17 llama_model_loader: - kv 13: tokenizer.ggml.model str = gpt2 llama_model_loader: - kv 14: tokenizer.ggml.tokens arr[str,128256] = ["!", "\"", "#", "$", "%", "&", "'", ... llama_model_loader: - kv 15: tokenizer.ggml.scores arr[f32,128256] = [0.000000, 0.000000, 0.000000, 0.0000... llama_model_loader: - kv 16: tokenizer.ggml.token_type arr[i32,128256] = [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, ... llama_model_loader: - kv 17: tokenizer.ggml.merges arr[str,280147] = ["Ġ Ġ", "Ġ ĠĠĠ", "ĠĠ ĠĠ", "... llama_model_loader: - kv 18: tokenizer.ggml.bos_token_id u32 = 128000 llama_model_loader: - kv 19: tokenizer.ggml.eos_token_id u32 = 128001 llama_model_loader: - kv 20: tokenizer.ggml.padding_token_id u32 = 128001 llama_model_loader: - kv 21: general.quantization_version u32 = 2 llama_model_loader: - type f32: 65 tensors My model path: /content/openbiollm-llama3-8b.Q5_K_M.gguf llama_model_loader: - type q5_K: 193 tensors llama_model_loader: - type q6_K: 33 tensors llm_load_vocab: special tokens definition check successful ( 256/128256 ). llm_load_print_meta: format = GGUF V3 (latest) llm_load_print_meta: arch = llama llm_load_print_meta: vocab type = BPE llm_load_print_meta: n_vocab = 128256 llm_load_print_meta: n_merges = 280147 llm_load_print_meta: n_ctx_train = 8192 llm_load_print_meta: n_embd = 4096 llm_load_print_meta: n_head = 32 llm_load_print_meta: n_head_kv = 8 llm_load_print_meta: n_layer = 32 llm_load_print_meta: n_rot = 128 llm_load_print_meta: n_embd_head_k = 128 llm_load_print_meta: n_embd_head_v = 128 llm_load_print_meta: n_gqa = 4 llm_load_print_meta: n_embd_k_gqa = 1024 llm_load_print_meta: n_embd_v_gqa = 1024 llm_load_print_meta: f_norm_eps = 0.0e+00 llm_load_print_meta: f_norm_rms_eps = 1.0e-05 llm_load_print_meta: f_clamp_kqv = 0.0e+00 llm_load_print_meta: f_max_alibi_bias = 0.0e+00 llm_load_print_meta: f_logit_scale = 0.0e+00 llm_load_print_meta: n_ff = 14336 llm_load_print_meta: n_expert = 0 llm_load_print_meta: n_expert_used = 0 llm_load_print_meta: causal attn = 1 llm_load_print_meta: pooling type = 0 llm_load_print_meta: rope type = 0 llm_load_print_meta: rope scaling = linear llm_load_print_meta: freq_base_train = 500000.0 llm_load_print_meta: freq_scale_train = 1 llm_load_print_meta: n_yarn_orig_ctx = 8192 llm_load_print_meta: rope_finetuned = unknown llm_load_print_meta: ssm_d_conv = 0 llm_load_print_meta: ssm_d_inner = 0 llm_load_print_meta: ssm_d_state = 0 llm_load_print_meta: ssm_dt_rank = 0 llm_load_print_meta: model type = 8B llm_load_print_meta: model ftype = Q5_K - Medium llm_load_print_meta: model params = 8.03 B llm_load_print_meta: model size = 5.33 GiB (5.70 BPW) llm_load_print_meta: general.name = . llm_load_print_meta: BOS token = 128000 '<|begin_of_text|>' llm_load_print_meta: EOS token = 128001 '<|end_of_text|>' llm_load_print_meta: PAD token = 128001 '<|end_of_text|>' llm_load_print_meta: LF token = 128 'Ä' llm_load_print_meta: EOT token = 128009 '<|eot_id|>' llm_load_tensors: ggml ctx size = 0.30 MiB llm_load_tensors: offloading 32 repeating layers to GPU llm_load_tensors: offloading non-repeating layers to GPU llm_load_tensors: offloaded 33/33 layers to GPU llm_load_tensors: CPU buffer size = 344.44 MiB llm_load_tensors: CUDA0 buffer size = 5115.49 MiB ......................................................................................... llama_new_context_with_model: n_ctx = 512 llama_new_context_with_model: n_batch = 512 llama_new_context_with_model: n_ubatch = 512 llama_new_context_with_model: freq_base = 500000.0 llama_new_context_with_model: freq_scale = 1 llama_kv_cache_init: CUDA0 KV buffer size = 64.00 MiB llama_new_context_with_model: KV self size = 64.00 MiB, K (f16): 32.00 MiB, V (f16): 32.00 MiB llama_new_context_with_model: CUDA_Host output buffer size = 0.49 MiB llama_new_context_with_model: CUDA0 compute buffer size = 258.50 MiB llama_new_context_with_model: CUDA_Host compute buffer size = 9.01 MiB llama_new_context_with_model: graph nodes = 1030 llama_new_context_with_model: graph splits = 2 AVX = 1 | AVX_VNNI = 0 | AVX2 = 1 | AVX512 = 1 | AVX512_VBMI = 0 | AVX512_VNNI = 1 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 1 | SSE3 = 1 | SSSE3 = 1 | VSX = 0 | MATMUL_INT8 = 0 | LAMMAFILE = 1 | Model metadata: {'tokenizer.ggml.padding_token_id': '128001', 'tokenizer.ggml.eos_token_id': '128001', 'general.quantization_version': '2', 'tokenizer.ggml.model': 'gpt2', 'general.architecture': 'llama', 'llama.rope.freq_base': '500000.000000', 'llama.context_length': '8192', 'general.name': '.', 'llama.vocab_size': '128256', 'general.file_type': '17', 'llama.embedding_length': '4096', 'llama.feed_forward_length': '14336', 'llama.attention.layer_norm_rms_epsilon': '0.000010', 'llama.rope.dimension_count': '128', 'tokenizer.ggml.bos_token_id': '128000', 'llama.attention.head_count': '32', 'llama.block_count': '32', 'llama.attention.head_count_kv': '8'} Using fallback chat format: None

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

2.3 提问问题

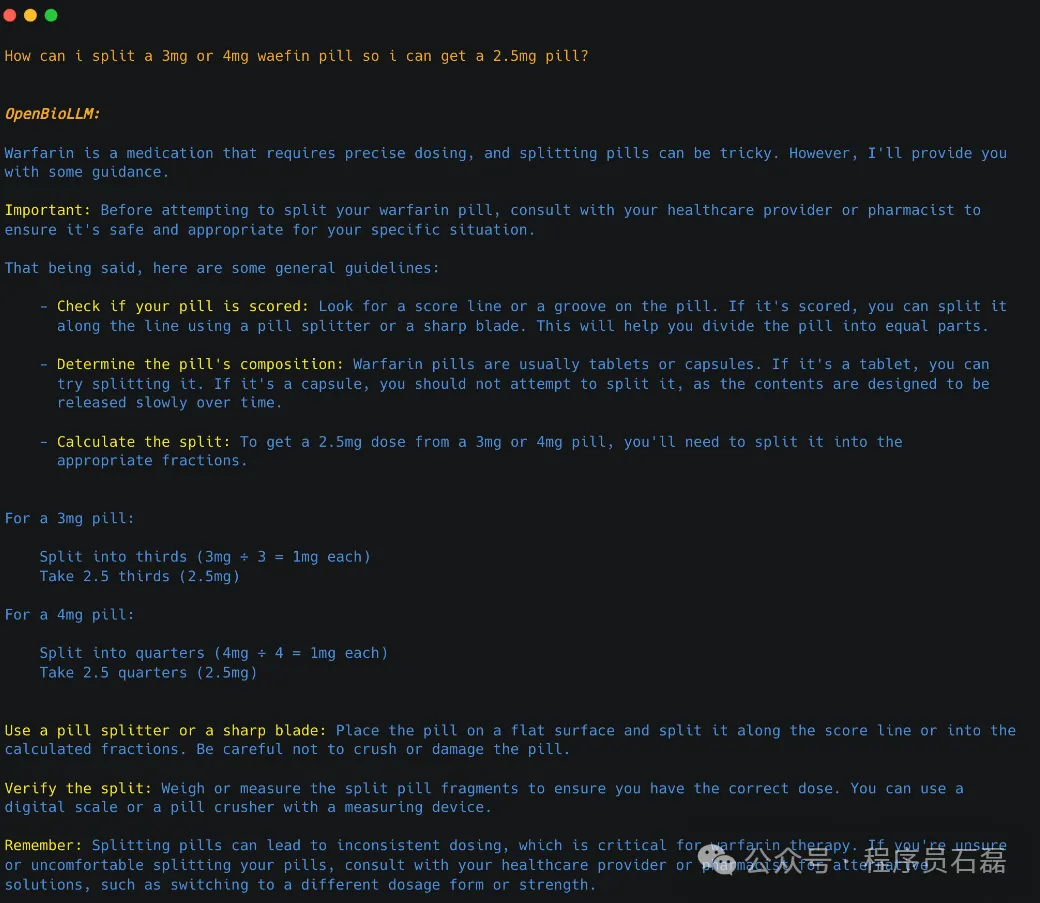

Question = "How can i split a 3mg or 4mg waefin pill so i can get a 2.5mg pill?"

prompt = f"You are an expert and experienced from the healthcare and biomedical domain with extensive medical knowledge and practical experience. Your name is OpenBioLLM, and you were developed by Saama AI Labs with Open Life Science AI. who's willing to help answer the user's query with explanation. In your explanation, leverage your deep medical expertise such as relevant anatomical structures, physiological processes, diagnostic criteria, treatment guidelines, or other pertinent medical concepts. Use precise medical terminology while still aiming to make the explanation clear and accessible to a general audience. Medical Question: {Question} Medical Answer:"

response = llm(prompt, max_tokens=4000)['choices'][0]['text']

print("\n\n\n", response)

- 1

- 2

- 3

- 4

- 5

- 6

结果展示

Llama.generate: prefix-match hit

llama_print_timings: load time = 10599.68 ms

llama_print_timings: sample time = 412.74 ms / 200 runs ( 2.06 ms per token, 484.57 tokens per second)

llama_print_timings: prompt eval time = 0.00 ms / 1 tokens ( 0.00 ms per token, inf tokens per second)

llama_print_timings: eval time = 2192.19 ms / 200 runs ( 10.96 ms per token, 91.23 tokens per second)

llama_print_timings: total time = 4622.41 ms / 201 tokens

To split a 3mg or 4mg Waefin pill into a 2.5mg dose, follow these steps: 1. Use a pill splitter or a sharp knife to divide the pill in half. 2. If using a pill splitter, place the pill in the device and apply even pressure to cut it evenly. 3. If using a knife, carefully place the pill on a non-stick surface and use a sharp blade to slice it into two equal portions. 4. To ensure accuracy, weigh each half-pill on a scale until you find one that weighs approximately 1250mg (which will be close to 2.5mg). 5. Once you have identified the correct half-pill for your desired dosage, consume it as directed by your healthcare provider. It is important to note that pill splitting should only be performed with certain medications under the guidance of a healthcare professional. Always consult with your doctor or pharmacist before attempting to split any medication.

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

3.用例和示例

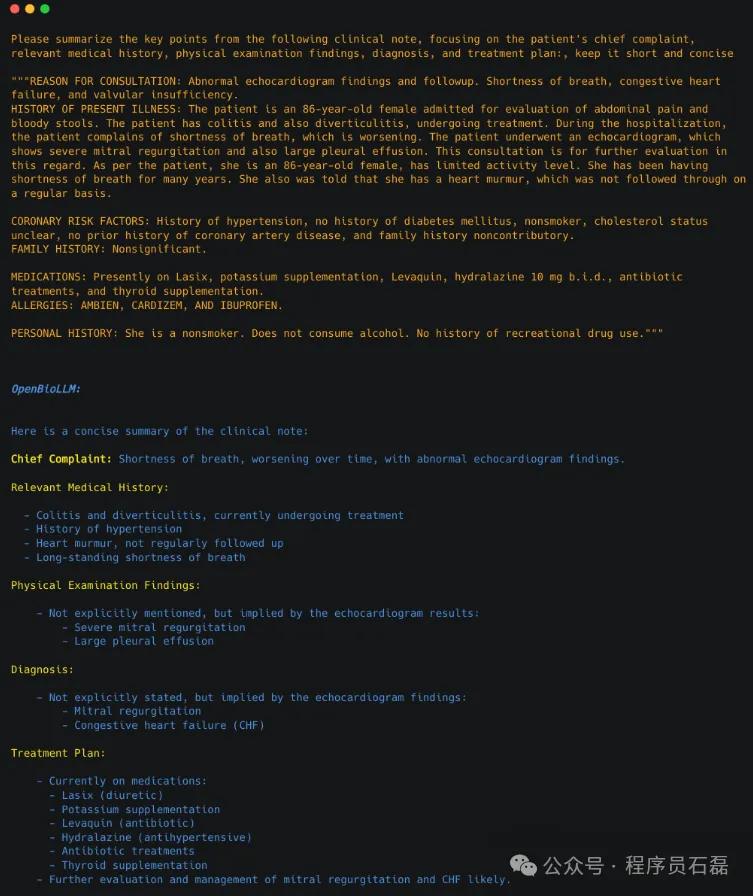

3.1.总结临床笔记

OpenBioLLM-70B 可以有效地分析和总结复杂的临床笔记、EHR 数据和出院摘要,提取关键信息并生成简洁、结构化的摘要

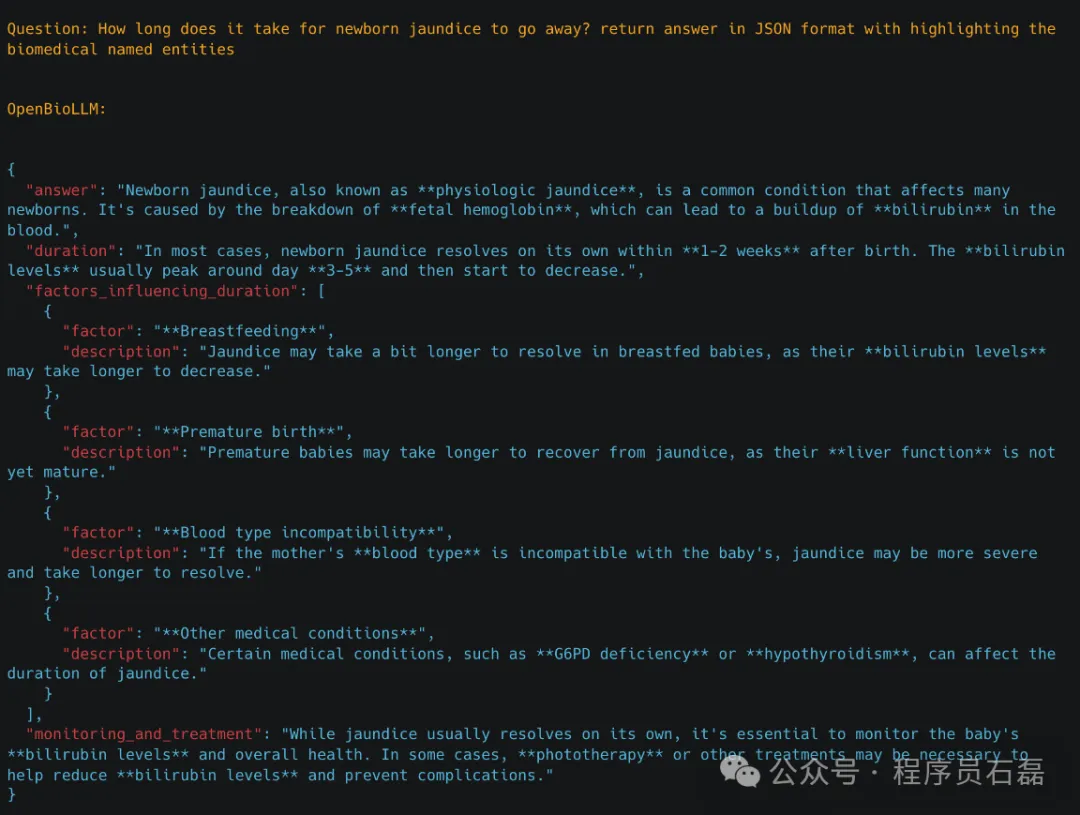

3.2 回答医疗问题

OpenBioLLM-70B 可以有效地分析和总结复杂的临床笔记、EHR 数据和出院摘要,提取关键信息并生成简洁、结构化的摘要

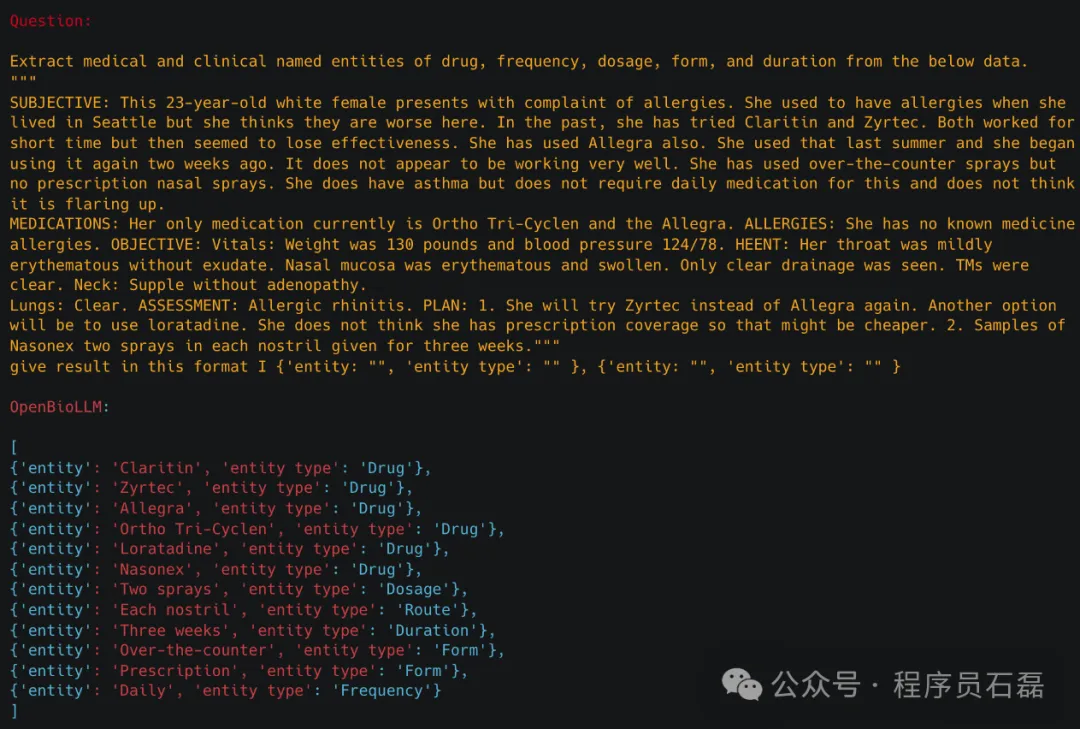

临床实体识别OpenBioLLM-70B可以通过从非结构化临床文本中识别和提取关键的医学概念,如疾病、症状、药物、程序和解剖结构,进行先进的临床实体识别。通过利用其对医学术语和上下文的深刻理解,该模型可以准确地对临床实体进行注释和分类,从而从电子健康记录、研究文章和其他生物医学文本源中实现更高效的信息检索、数据分析和知识发现。此功能可以支持各种下游应用,例如临床决策支持、药物警戒和医学研究。

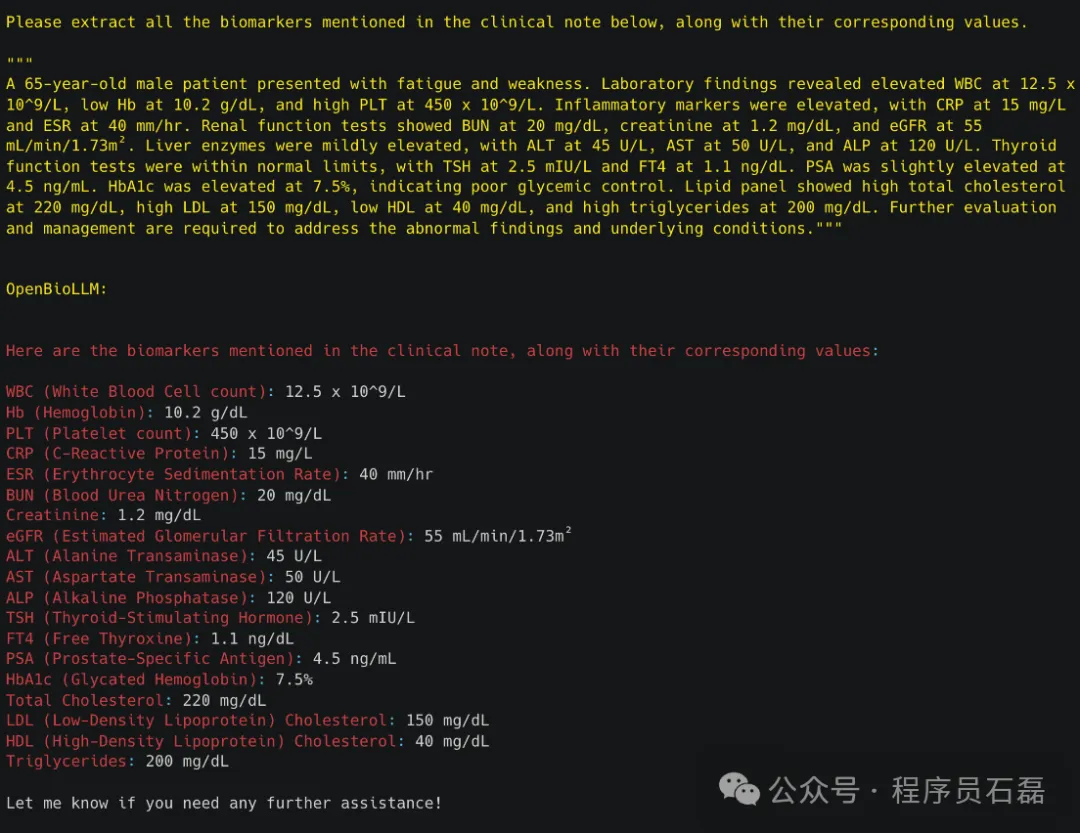

3.3 生物标志物提取

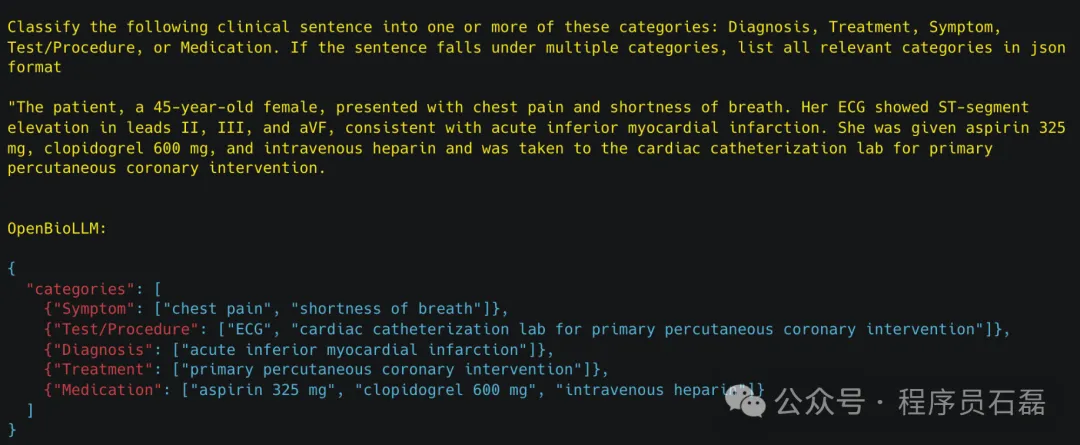

3.4 分类

OpenBioLLM-70B可以执行各种生物医学分类任务,如疾病预测、情感分析、医疗文档分类等

4.注意事项

虽然OpenBioLLM-70B和8B利用了高质量的数据源,但其输出仍可能包含不准确,偏差或错位,如果依赖这些不准确,偏差或错位,如果不进行进一步的测试和改进,可能会带来风险。该模型的性能尚未在随机对照试验或真实世界的医疗保健环境中进行严格评估。因此,我们强烈建议目前不要将OpenBioLLM-70B和8B用于任何直接的患者护理,临床决策支持或其他专业医疗目的。它的使用应仅限于了解其局限性的合格人员的研究、开发和探索性应用。OpenBioLLM-70B和8B仅作为协助医疗保健专业人员的研究工具,绝不应被视为合格医生的专业判断和专业知识的替代品。针对特定的医疗用例适当调整和验证OpenBioLLM-70B和8B将需要大量的额外工作,可能包括:

- 在相关临床场景中进行全面测试和评估

- 与循证指南和最佳实践保持一致

- 减轻潜在的偏差和故障模式

- 与人工监督和解释相结合

如何学习大模型 AI ?

由于新岗位的生产效率,要优于被取代岗位的生产效率,所以实际上整个社会的生产效率是提升的。

但是具体到个人,只能说是:

“最先掌握AI的人,将会比较晚掌握AI的人有竞争优势”。

这句话,放在计算机、互联网、移动互联网的开局时期,都是一样的道理。

我在一线互联网企业工作十余年里,指导过不少同行后辈。帮助很多人得到了学习和成长。

我意识到有很多经验和知识值得分享给大家,也可以通过我们的能力和经验解答大家在人工智能学习中的很多困惑,所以在工作繁忙的情况下还是坚持各种整理和分享。但苦于知识传播途径有限,很多互联网行业朋友无法获得正确的资料得到学习提升,故此将并将重要的AI大模型资料包括AI大模型入门学习思维导图、精品AI大模型学习书籍手册、视频教程、实战学习等录播视频免费分享出来。

第一阶段(10天):初阶应用

该阶段让大家对大模型 AI有一个最前沿的认识,对大模型 AI 的理解超过 95% 的人,可以在相关讨论时发表高级、不跟风、又接地气的见解,别人只会和 AI 聊天,而你能调教 AI,并能用代码将大模型和业务衔接。

- 大模型 AI 能干什么?

- 大模型是怎样获得「智能」的?

- 用好 AI 的核心心法

- 大模型应用业务架构

- 大模型应用技术架构

- 代码示例:向 GPT-3.5 灌入新知识

- 提示工程的意义和核心思想

- Prompt 典型构成

- 指令调优方法论

- 思维链和思维树

- Prompt 攻击和防范

- …

第二阶段(30天):高阶应用

该阶段我们正式进入大模型 AI 进阶实战学习,学会构造私有知识库,扩展 AI 的能力。快速开发一个完整的基于 agent 对话机器人。掌握功能最强的大模型开发框架,抓住最新的技术进展,适合 Python 和 JavaScript 程序员。

- 为什么要做 RAG

- 搭建一个简单的 ChatPDF

- 检索的基础概念

- 什么是向量表示(Embeddings)

- 向量数据库与向量检索

- 基于向量检索的 RAG

- 搭建 RAG 系统的扩展知识

- 混合检索与 RAG-Fusion 简介

- 向量模型本地部署

- …

第三阶段(30天):模型训练

恭喜你,如果学到这里,你基本可以找到一份大模型 AI相关的工作,自己也能训练 GPT 了!通过微调,训练自己的垂直大模型,能独立训练开源多模态大模型,掌握更多技术方案。

到此为止,大概2个月的时间。你已经成为了一名“AI小子”。那么你还想往下探索吗?

- 为什么要做 RAG

- 什么是模型

- 什么是模型训练

- 求解器 & 损失函数简介

- 小实验2:手写一个简单的神经网络并训练它

- 什么是训练/预训练/微调/轻量化微调

- Transformer结构简介

- 轻量化微调

- 实验数据集的构建

- …

第四阶段(20天):商业闭环

对全球大模型从性能、吞吐量、成本等方面有一定的认知,可以在云端和本地等多种环境下部署大模型,找到适合自己的项目/创业方向,做一名被 AI 武装的产品经理。

- 硬件选型

- 带你了解全球大模型

- 使用国产大模型服务

- 搭建 OpenAI 代理

- 热身:基于阿里云 PAI 部署 Stable Diffusion

- 在本地计算机运行大模型

- 大模型的私有化部署

- 基于 vLLM 部署大模型

- 案例:如何优雅地在阿里云私有部署开源大模型

- 部署一套开源 LLM 项目

- 内容安全

- 互联网信息服务算法备案

- …

学习是一个过程,只要学习就会有挑战。天道酬勤,你越努力,就会成为越优秀的自己。

如果你能在15天内完成所有的任务,那你堪称天才。然而,如果你能完成 60-70% 的内容,你就已经开始具备成为一名大模型 AI 的正确特征了。

这份完整版的大模型 AI 学习资料已经上传CSDN,朋友们如果需要可以微信扫描下方CSDN官方认证二维码免费领取【保证100%免费】