
Java Server Development Environment Setup


Updated continuously as I keep learning.

Configuring the virtual machine server

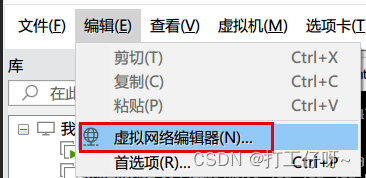

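Configuring the VM network

Configure the VM network as shown in the screenshots. If you are unfamiliar with the VM network modes, look them up separately.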

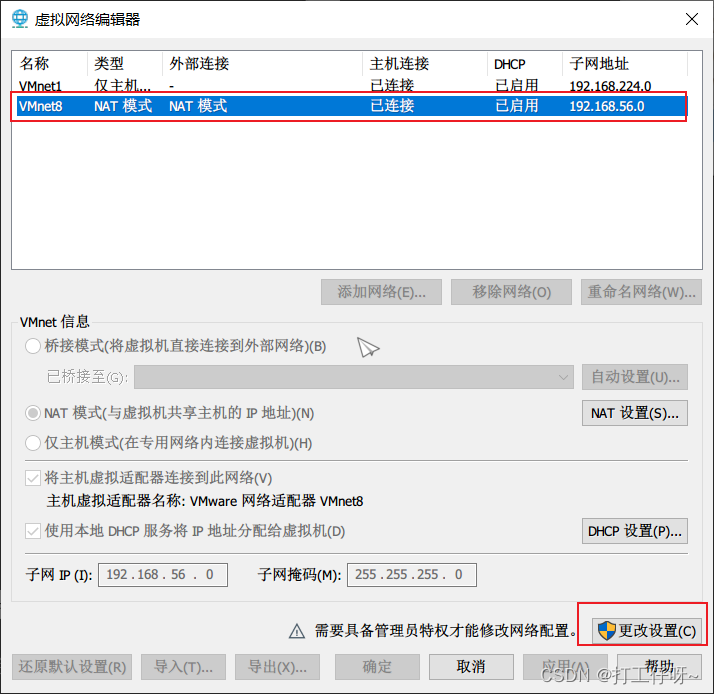

Configure the VM network mode, along with its subnet IP, subnet mask and DHCP settings.

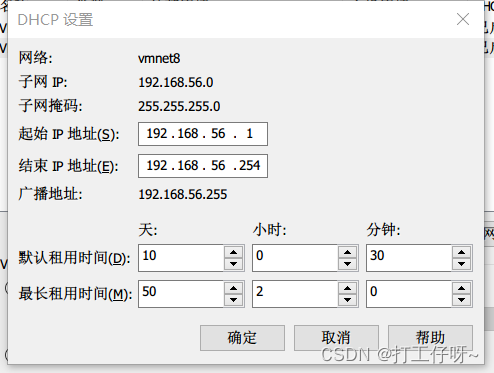

Configure NAT: set the gateway IP, or set up IP/port forwarding once the OS is installed.

CentOS network configuration inside the VM

- Enter the directory:

```bash
cd /etc/sysconfig/network-scripts/
```

Listing the directory with ls -l shows something like the following. Files whose names start with ifcfg-ens are usually the network configuration files. Which file it is, and how many there are, depends on your network adapters.

```text
-rw-r--r--. 1 root root   381 Feb  7 21:30 ifcfg-ens33
-rw-r--r--. 1 root root   254 May 22  2020 ifcfg-lo
lrwxrwxrwx. 1 root root    24 Feb  7 19:28 ifdown -> ../../../usr/sbin/ifdown
-rwxr-xr-x. 1 root root   654 May 22  2020 ifdown-bnep
-rwxr-xr-x. 1 root root  6532 May 22  2020 ifdown-eth
-rwxr-xr-x. 1 root root   781 May 22  2020 ifdown-ippp
-rwxr-xr-x. 1 root root  4540 May 22  2020 ifdown-ipv6
lrwxrwxrwx. 1 root root    11 Feb  7 19:28 ifdown-isdn -> ifdown-ippp
-rwxr-xr-x. 1 root root  2130 May 22  2020 ifdown-post
-rwxr-xr-x. 1 root root  1068 May 22  2020 ifdown-ppp
-rwxr-xr-x. 1 root root   870 May 22  2020 ifdown-routes
-rwxr-xr-x. 1 root root  1456 May 22  2020 ifdown-sit
-rwxr-xr-x. 1 root root  1621 Dec  9  2018 ifdown-Team
-rwxr-xr-x. 1 root root  1556 Dec  9  2018 ifdown-TeamPort
-rwxr-xr-x. 1 root root  1462 May 22  2020 ifdown-tunnel
lrwxrwxrwx. 1 root root    22 Feb  7 19:28 ifup -> ../../../usr/sbin/ifup
-rwxr-xr-x. 1 root root 12415 May 22  2020 ifup-aliases
-rwxr-xr-x. 1 root root   910 May 22  2020 ifup-bnep
-rwxr-xr-x. 1 root root 13758 May 22  2020 ifup-eth
-rwxr-xr-x. 1 root root 12075 May 22  2020 ifup-ippp
-rwxr-xr-x. 1 root root 11893 May 22  2020 ifup-ipv6
lrwxrwxrwx. 1 root root     9 Feb  7 19:28 ifup-isdn -> ifup-ippp
-rwxr-xr-x. 1 root root   650 May 22  2020 ifup-plip
-rwxr-xr-x. 1 root root  1064 May 22  2020 ifup-plusb
-rwxr-xr-x. 1 root root  4997 May 22  2020 ifup-post
-rwxr-xr-x. 1 root root  4154 May 22  2020 ifup-ppp
-rwxr-xr-x. 1 root root  2001 May 22  2020 ifup-routes
-rwxr-xr-x. 1 root root  3303 May 22  2020 ifup-sit
-rwxr-xr-x. 1 root root  1755 Dec  9  2018 ifup-Team
-rwxr-xr-x. 1 root root  1876 Dec  9  2018 ifup-TeamPort
-rwxr-xr-x. 1 root root  2780 May 22  2020 ifup-tunnel
-rwxr-xr-x. 1 root root  1836 May 22  2020 ifup-wireless
-rwxr-xr-x. 1 root root  5419 May 22  2020 init.ipv6-global
-rw-r--r--. 1 root root 20678 May 22  2020 network-functions
-rw-r--r--. 1 root root 30988 May 22  2020 network-functions-ipv6
```

- Open the network configuration file for editing:

```bash
vi ifcfg-ens33
```

Fill in values that match the VM network configured above:

```ini
TYPE=Ethernet
PROXY_METHOD=none
BROWSER_ONLY=no
# changed from dhcp to static
BOOTPROTO=static
DEFROUTE=yes
IPV4_FAILURE_FATAL=no
IPV6INIT=yes
IPV6_AUTOCONF=yes
IPV6_DEFROUTE=yes
IPV6_FAILURE_FATAL=no
IPV6_ADDR_GEN_MODE=stable-privacy
NAME=ens33
UUID=d163b238-24cb-4c77-a59e-6ef020159add
DEVICE=ens33
# bring this interface up at boot
ONBOOT=yes
# static IP
IPADDR=192.168.56.10
# subnet mask
NETMASK=255.255.255.0
# default gateway
GATEWAY=192.168.56.100
# DNS servers
DNS1=192.168.56.100
DNS2=8.8.8.8
```
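Before restarting the network, it can be worth checking that IPADDR and GATEWAY fall in the same subnet under NETMASK, because a mismatch there easily cuts the VM off. Below is a minimal bash sketch of that check. It assumes the ifcfg file uses plain KEY=VALUE lines like the one above. ip_to_int, ifcfg_get, same_subnet and check_ifcfg are hypothetical helper names, not CentOS tools.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for sanity-checking a static ifcfg-* file.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo "$(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))"
}

# Print the last value assigned to KEY, dropping trailing comments and quotes.
ifcfg_get() {
  local key=$1 file=$2
  sed -n "s/^[[:space:]]*${key}=//p" "$file" |
    sed -e 's/[[:space:]]*#.*$//' -e 's/^"//' -e 's/"$//' |
    tail -n 1
}

# Succeed if IP and GW are in the same network under MASK.
same_subnet() {
  local ip gw mask
  ip=$(ip_to_int "$1"); gw=$(ip_to_int "$2"); mask=$(ip_to_int "$3")
  (( (ip & mask) == (gw & mask) ))
}

# Print "ok", or the first problem found in FILE and return non-zero.
check_ifcfg() {
  local f=$1 ip gw mask
  [[ $(ifcfg_get BOOTPROTO "$f") == static ]] || { echo "BOOTPROTO is not static"; return 1; }
  ip=$(ifcfg_get IPADDR "$f"); gw=$(ifcfg_get GATEWAY "$f"); mask=$(ifcfg_get NETMASK "$f")
  [[ -n $ip && -n $gw && -n $mask ]] || { echo "IPADDR, GATEWAY or NETMASK missing"; return 1; }
  same_subnet "$ip" "$gw" "$mask" || { echo "GATEWAY $gw is outside $ip/$mask"; return 1; }
  echo ok
}
# Example: check_ifcfg /etc/sysconfig/network-scripts/ifcfg-ens33
```

The subnet test is a bitwise AND of each address with the mask. With 255.255.255.0, 192.168.56.10 and 192.168.56.100 both reduce to 192.168.56.0.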

- Apply the configuration:

```bash
systemctl restart network
# or
service network restart
```

- Test:

```bash
ping www.baidu.com
```

```text
[root@localhost network-scripts]# ping www.baidu.com
PING www.a.shifen.com (110.242.68.3) 56(84) bytes of data.
64 bytes from 110.242.68.3 (110.242.68.3): icmp_seq=1 ttl=128 time=32.8 ms
64 bytes from 110.242.68.3 (110.242.68.3): icmp_seq=2 ttl=128 time=30.8 ms
64 bytes from 110.242.68.3 (110.242.68.3): icmp_seq=3 ttl=128 time=31.3 ms
64 bytes from 110.242.68.3 (110.242.68.3): icmp_seq=4 ttl=128 time=29.1 ms
64 bytes from 110.242.68.3 (110.242.68.3): icmp_seq=5 ttl=128 time=31.2 ms
64 bytes from 110.242.68.3 (110.242.68.3): icmp_seq=6 ttl=128 time=27.4 ms
64 bytes from 110.242.68.3 (110.242.68.3): icmp_seq=7 ttl=128 time=33.0 ms
64 bytes from 110.242.68.3 (110.242.68.3): icmp_seq=8 ttl=128 time=35.7 ms
64 bytes from 110.242.68.3 (110.242.68.3): icmp_seq=9 ttl=128 time=29.1 ms
64 bytes from 110.242.68.3 (110.242.68.3): icmp_seq=10 ttl=128 time=28.9 ms
^C
--- www.a.shifen.com ping statistics ---
10 packets transmitted, 10 received, 0% packet loss, time 9016ms
rtt min/avg/max/mdev = 27.492/30.978/35.795/2.329 ms
```
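Stopping ping with Ctrl+C is fine interactively. In a script, ping -c N sends a fixed number of packets and exits, and the summary line can then be checked. This is a small sketch that assumes the iputils summary format shown above. ping_loss and net_ok are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Print the packet-loss percentage from ping's summary line (read on stdin).
ping_loss() {
  grep -o '[0-9.]*% packet loss' | head -n 1 | cut -d% -f1
}

# Succeed only if the summary on stdin reports 0% packet loss.
net_ok() {
  [[ $(ping_loss) == 0 ]]
}
# Example: ping -c 4 www.baidu.com | net_ok && echo "network OK"
```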

CentOS development environment inside the VM

Installing and configuring SSH

- Check whether SSH is installed:

```bash
yum list installed | grep openssh-server
# or
rpm -qa | grep ssh
```

- Install SSH (if it is not installed yet):

```bash
yum install openssh-server
```

- Edit the SSH configuration file:

```bash
vi /etc/ssh/sshd_config
```

```text
# Port that SSH listens on
Port 22
# Addresses that SSH listens on
ListenAddress 0.0.0.0
ListenAddress ::
# Allow public-key login
PubkeyAuthentication yes
# File that stores the authorized public keys
AuthorizedKeysFile .ssh/authorized_keys
# Allow root login
PermitRootLogin yes
# Allow password login
PasswordAuthentication yes
```
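After editing, sshd -t checks the file for errors before you restart the service, and sshd -T prints the effective configuration. Per sshd_config(5), most keywords take the first value obtained, so a duplicate line further down silently has no effect. The sketch below prints the value sshd would pick for a keyword. It assumes a plain file without Match blocks or Include directives, and sshd_value is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Print the value sshd would use for KEYWORD: the first non-comment occurrence,
# matched case-insensitively. Sketch only: Match blocks and Include are ignored.
sshd_value() {
  local key=${1,,} file=${2:-/etc/ssh/sshd_config}
  awk -v k="$key" '
    /^[ \t]*#/ { next }                                      # skip comment lines
    tolower($1) == k { $1 = ""; sub(/^[ \t]+/, ""); print; exit }
  ' "$file"
}
# Examples: sshd_value PermitRootLogin; sshd_value Port
```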

- Check whether SSH is running:

```bash
ps -e | grep sshd
# or check the port status
netstat -an | grep ':22 '
# or check the service status
systemctl status sshd.service
```

- Start the SSH service:

```bash
service sshd restart   # restart sshd
service sshd start     # start sshd
```

- Start SSH at boot:

```bash
systemctl enable sshd                  # start sshd at boot
systemctl list-unit-files | grep ssh   # check whether it starts at boot
systemctl stop sshd                    # stop sshd
systemctl disable sshd                 # do not start sshd at boot
```

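- Open port 22 in the firewall:

```bash
firewall-cmd --zone=public --add-port=22/tcp --permanent  # add the port to the firewall
#   --zone               zone the rule applies to
#   --add-port=22/tcp    port to add, in the form <port>/<protocol>
#   --permanent          make the rule permanent
firewall-cmd --reload                                     # apply the firewall rules
firewall-cmd --zone=public --query-port=22/tcp            # check that the port was added
firewall-cmd --list-ports                                 # list the ports open in the firewall
```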
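You can check from another machine, such as the host, that port 22 now gets through the firewall. Bash's /dev/tcp redirection is enough when nc or telnet is not installed. This sketch assumes bash was built with network redirections (the usual default) and that the coreutils timeout command is available. port_open is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Succeed if a TCP connection to HOST:PORT opens within SECS seconds (default 3).
# Host and port are passed as arguments to the inner bash, not spliced into its code.
port_open() {
  local host=$1 port=$2 secs=${3:-3}
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}
# Example (run on the host machine): port_open 192.168.56.10 22 && echo "port 22 reachable"
```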

Switching the yum repositories to the Aliyun mirror

- Install wget:

```bash
yum install wget -y
```

- Back up the original repo file:

```bash
mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.bak
```

- Download the Aliyun repo files:

```bash
wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo
```

- Clear the cache and build a new one:

```bash
yum clean all && yum makecache
```

```text
Loaded plugins: fastestmirror
Cleaning repos: base epel extras updates
Cleaning up list of fastest mirrors
Loaded plugins: fastestmirror
Determining fastest mirrors
 * base: mirrors.aliyun.com
 * extras: mirrors.aliyun.com
 * updates: mirrors.aliyun.com
base                                           | 3.6 kB  00:00:00
epel                                           | 4.7 kB  00:00:00
extras                                         | 2.9 kB  00:00:00
updates                                        | 2.9 kB  00:00:00
base/7/x86_64/primary_db       FAILED
http://mirrors.cloud.aliyuncs.com/centos/7/os/x86_64/repodata/6d0c3a488c282fe537794b5946b01e28c7f44db79097bb06826e1c0c88bad5ef-primary.sqlite.bz2: [Errno 14] curl#6 - "Could not resolve host: mirrors.cloud.aliyuncs.com; Unknown error"
Trying other mirror.
(1/16): epel/x86_64/group_gz                   |  96 kB  00:00:00
(2/16): base/7/x86_64/group_gz                 | 153 kB  00:00:00
(3/16): epel/x86_64/updateinfo                 | 1.1 MB  00:00:02
(4/16): epel/x86_64/prestodelta                |  862 B  00:00:00
(5/16): base/7/x86_64/other_db                 | 2.6 MB  00:00:06
(6/16): epel/x86_64/primary_db                 | 7.0 MB  00:00:14
base/7/x86_64/filelists_db     FAILED
http://mirrors.aliyuncs.com/centos/7/os/x86_64/repodata/d6d94c7d406fe7ad4902a97104b39a0d8299451832a97f31d71653ba982c955b-filelists.sqlite.bz2: [Errno 14] curl#7 - "Failed connect to mirrors.aliyuncs.com:80; Connection refused"
Trying other mirror.
(7/16): extras/7/x86_64/other_db               | 148 kB  00:00:00
(8/16): epel/x86_64/other_db                   | 3.4 MB  00:00:07
(9/16): epel/x86_64/filelists_db               |  12 MB  00:00:28
extras/7/x86_64/primary_db     FAILED
http://mirrors.aliyuncs.com/centos/7/extras/x86_64/repodata/68cf05df72aa885646387a4bd332a8ad72d4c97ea16d988a83418c04e2382060-primary.sqlite.bz2: [Errno 14] curl#7 - "Failed connect to mirrors.aliyuncs.com:80; Connection refused"
Trying other mirror.
extras/7/x86_64/filelists_db   FAILED
http://mirrors.aliyuncs.com/centos/7/extras/x86_64/repodata/ceff3d07ce71906c0f0372ad5b4e82ba2220030949b032d7e63b7afd39d6258e-filelists.sqlite.bz2: [Errno 14] curl#7 - "Failed connect to mirrors.aliyuncs.com:80; Connection refused"
Trying other mirror.
(10/16): updates/7/x86_64/filelists_db         | 9.1 MB  00:00:19
(11/16): extras/7/x86_64/primary_db            | 247 kB  00:00:00
(12/16): extras/7/x86_64/filelists_db          | 277 kB  00:00:00
(13/16): updates/7/x86_64/other_db             | 1.1 MB  00:00:02
(14/16): base/7/x86_64/primary_db              | 6.1 MB  00:00:13
(15/16): base/7/x86_64/filelists_db            | 7.2 MB  00:00:15
(16/16): updates/7/x86_64/primary_db           |  16 MB  00:00:35
Metadata Cache Created
```
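The FAILED lines above are expected outside Alibaba Cloud. The Aliyun repo file also lists mirrors.aliyuncs.com and mirrors.cloud.aliyuncs.com, which are intranet mirrors for Alibaba Cloud ECS instances. Yum fails on them, falls back to another mirror, and still builds the cache. Aliyun's mirror help suggests deleting those lines with sed. The sketch below wraps that in a hypothetical helper, strip_internal_mirrors, which keeps a .orig backup.

```bash
#!/usr/bin/env bash
# Delete lines that reference Alibaba Cloud intranet mirrors from a .repo file.
# GNU sed keeps a copy of the original file with a .orig suffix.
strip_internal_mirrors() {
  local repo=${1:-/etc/yum.repos.d/CentOS-Base.repo}
  sed -i.orig \
    -e '/mirrors\.cloud\.aliyuncs\.com/d' \
    -e '/mirrors\.aliyuncs\.com/d' \
    "$repo"
}
# Example: strip_internal_mirrors && yum clean all && yum makecache
```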

Installing Docker and deploying containers

1. Installing Docker

- Install with the convenience script:

```bash
curl -fsSL https://get.docker.com -o get-docker.sh && sudo sh get-docker.sh
```

- Start Docker:

```bash
sudo systemctl enable docker.service && sudo systemctl enable containerd.service
sudo systemctl start docker
```

- Check that Docker is running:

```bash
docker ps
```

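2. Installing Docker Compose

- Download docker-compose. Compose v2 release tags start with v, and the Linux binaries are named docker-compose-linux-<arch>:

```bash
sudo curl -L "https://github.com/docker/compose/releases/download/v2.6.1/docker-compose-linux-$(uname -m)" -o /usr/local/bin/docker-compose
```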

- Make docker-compose executable:

```bash
sudo chmod +x /usr/local/bin/docker-compose
```

3. Installing Docker applications

Common part of docker-compose.yml:

```yaml
version: '2.4'
networks:
  app_start:
    driver: bridge
    ipam:
      config:
        - subnet: 172.18.0.0/16     # subnet in CIDR notation (one network segment)
          ip_range: 172.18.0.0/24   # range from which container IPs are allocated
          gateway: 172.18.0.1       # IPv4 or IPv6 gateway for the master subnet
services:
  # application container definitions go here
```
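Every service below pins its own ipv4_address on the app_start network. If two services reuse an address, the second container typically fails to start. A rough check can catch this before running docker-compose up. It is a line-based heuristic rather than a YAML parser, and dup_ips is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Print each ipv4_address that appears more than once in a compose file.
# Heuristic: skips commented-out lines and does not parse YAML.
dup_ips() {
  local file=${1:-docker-compose.yml}
  grep -v '^[[:space:]]*#' "$file" |
    sed -n 's/^[[:space:]]*ipv4_address:[[:space:]]*\([0-9.]*\).*/\1/p' |
    sort | uniq -d
}
# Example: d=$(dup_ips); [ -z "$d" ] || echo "duplicate addresses: $d"
```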

Where to run

Run all docker-compose commands from /home/app_start.

3.1 Installing Portainer

- Create the directories:

```bash
mkdir -p ./build/portainer/data && mkdir -p ./build/portainer/public
```

- Add the docker-compose.yml configuration (under services):

```yaml
  portainer:
    restart: always
    container_name: portainer
    image: portainer/portainer
    ports:
      - "8000:8000"
      - "9000:9000"
    volumes:
      - "/var/run/docker.sock:/var/run/docker.sock"
      - "/home/app_start/build/portainer/data:/data"
      #- "/home/app_start/build/portainer/public:/public"
    networks:
      app_start:
        ipv4_address: 172.18.0.2
```

- Pull and start Portainer:

```bash
docker-compose up -d portainer
```

- Open Portainer and set a password:

On the first visit, the page asks you to set a password. After that, configure Portainer as needed.

```text
http://<host IP>:9000
```

- Switch the Portainer UI to Chinese

- Download the localization package:

```bash
curl -o /home/app_start/build/portainer/public.tar -L "https://data.smallblog.cn/blog-images/back/public.tar"
sudo tar -vxf /home/app_start/build/portainer/public.tar -C /home/app_start/build/portainer/
```

- Edit docker-compose.yml and uncomment this line:

```yaml
      - "/home/app_start/build/portainer/public:/public"
```

- Redeploy:

```bash
docker-compose up -d portainer
```

- Reload the page in the browser and log in.

3.2 Installing Nginx

- Create the directories:

```bash
mkdir -p ./build/nginx && mkdir -p ./build/nginx/log && mkdir -p ./build/nginx/www
```

- Add the docker-compose.yml configuration (under services):

```yaml
  nginx:
    image: nginx
    restart: always
    container_name: nginx
    environment:
      - TZ=Asia/Shanghai
    ports:
      - "80:80"
      - "443:443"
    volumes:
      - /home/app_start/build/nginx/conf.d:/etc/nginx/conf.d
      - /home/app_start/build/nginx/log:/var/log/nginx
      - /home/app_start/build/nginx/www:/etc/nginx/html
      - /etc/letsencrypt:/etc/letsencrypt
    networks:
      app_start:
        ipv4_address: 172.18.0.6
```

- Start a temporary Nginx container:

```bash
docker run -p 80:80 --name nginx_test -d nginx
```

- Copy the configuration from the temporary container into the directory created above:

```bash
docker container cp nginx_test:/etc/nginx /home/app_start/build
```

Check what was copied into the directory:

```bash
ls /home/app_start/build/nginx
#------------------------------------
conf.d  fastcgi_params  log  mime.types  modules  nginx.conf  scgi_params  uwsgi_params  www
```

- Stop the temporary container and start the real one:

```bash
docker stop nginx_test
docker-compose up -d nginx
```

- Open it in a browser to check that it started:

```text
http://<host IP>:80
```

3.3 Installing Redis

- Create the directories:

```bash
mkdir -p ./build/redis/data && mkdir -p ./build/redis/conf && mkdir -p ./build/redis/logs
```

- Download the Redis configuration file:

```bash
curl -o /home/app_start/build/redis/conf/redis.conf -L "https://gitee.com/grocerie/centos-deployment/raw/master/redis.conf"
```

- Add the docker-compose.yml configuration (under services):

```yaml
  redis:
    restart: always
    image: redis
    container_name: redis
    volumes:
      - "/home/app_start/build/redis/data:/data"
      - "/home/app_start/build/redis/conf:/usr/local/etc/redis"
      - "/home/app_start/build/redis/logs:/logs"
    command:
      # --requirepass sets the password (123456); --appendonly yes enables AOF persistence.
      # This command does not load the redis.conf mounted above.
      redis-server --requirepass 123456 --appendonly yes
    ports:
      - 6379:6379
    environment:
      - TZ=Asia/Shanghai
    networks:
      app_start:
        ipv4_address: 172.18.0.5
```

- Build and start the container:

```bash
docker-compose up -d redis
```

- After it starts, test the connection to Redis.

3.4 Installing MySQL

- Create the directories:

```bash
mkdir -p ./build/mysql/data ./build/mysql/log ./build/mysql/config
```

- Add the docker-compose.yml configuration (under services):

```yaml
  mysql:
    # consider pinning a tag (e.g. mysql:5.7 or mysql:8.0):
    # --default-authentication-plugin was removed in MySQL 8.4
    image: mysql
    restart: always
    container_name: "mysql"
    ports:
      - 3306:3306
    volumes:
      - "/home/app_start/build/mysql/data:/var/lib/mysql"
      - "/home/app_start/build/mysql/log:/var/log/mysql"
      - "/home/app_start/build/mysql/config:/etc/mysql"
      - "/etc/localtime:/etc/localtime:ro"
    command:
      --default-authentication-plugin=mysql_native_password
      --character-set-server=utf8mb4
      --collation-server=utf8mb4_general_ci
      --explicit_defaults_for_timestamp=true
      --lower_case_table_names=1
      --default-time-zone=+8:00
    environment:
      MYSQL_ROOT_PASSWORD: "12345678"
    networks:
      app_start:
        ipv4_address: 172.18.0.4
```

- After it starts, test the connection to MySQL.

3.5 Installing Elasticsearch

- Create the directories:

```bash
mkdir -p ./build/elasticsearch/config && mkdir -p ./build/elasticsearch/data && mkdir -p ./build/elasticsearch/plugins
```

- Write the configuration file:

```bash
# allow Elasticsearch to be called from any remote machine (>> appends to the file)
echo "http.host: 0.0.0.0" >> /home/app_start/build/elasticsearch/config/elasticsearch.yml
```

- Open up the directory permissions:

```bash
chmod -R 777 /home/app_start/build/elasticsearch
```

- Add the docker-compose.yml configuration (under services):

```yaml
  elasticsearch:
    container_name: elasticsearch
    image: docker.io/elasticsearch:7.4.2
    environment:
      - discovery.type=single-node
      - ES_JAVA_OPTS=-Xms64m -Xmx512m
    volumes:
      - /home/app_start/build/elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - /home/app_start/build/elasticsearch/data:/usr/share/elasticsearch/data
      - /home/app_start/build/elasticsearch/plugins:/usr/share/elasticsearch/plugins
    ports:
      - "9200:9200"
      - "9300:9300"
    networks:
      app_start:
        ipv4_address: 172.18.0.20
    restart: always
```

- After it starts, open it in a browser:

```text
http://<host IP>:9200/
```

- Installing the IK analyzer in Elasticsearch

- Enter the Elasticsearch mount directory:

```bash
cd /home/app_start/build/elasticsearch
```

- Download the IK analyzer:

```bash
# download IK 7.4.2
wget https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v7.4.2/elasticsearch-analysis-ik-7.4.2.zip
# install unzip
yum install unzip -y
# extract IK with unzip
unzip elasticsearch-analysis-ik-7.4.2.zip -d ik
# move it into the plugins directory
mv ik plugins/
# grant permissions
chmod -R 777 plugins/ik
```
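Elasticsearch only loads plugins built for its exact version, so the IK release has to match the server (7.4.2 here). The sketch below reads the server version from the JSON returned by http://<host IP>:9200/ (the version.number field in Elasticsearch 7.x) and compares it with the IK archive name. es_version and ik_matches are hypothetical helper names. The grep-based extraction assumes the usual pretty-printed response, where "number" appears once.

```bash
#!/usr/bin/env bash
# Extract version.number from the JSON returned by GET http://<host>:9200/ (stdin).
es_version() {
  grep -o '"number"[[:space:]]*:[[:space:]]*"[^"]*"' | head -n 1 |
    sed 's/.*"\([^"]*\)"$/\1/'
}

# Succeed if an IK zip (name or path) carries exactly the given version.
ik_matches() {
  local zip=$1 version=$2
  [[ -n $version && ${zip##*/} == "elasticsearch-analysis-ik-${version}.zip" ]]
}
# Example:
#   v=$(curl -s http://192.168.56.10:9200/ | es_version)
#   ik_matches elasticsearch-analysis-ik-7.4.2.zip "$v" || echo "IK version mismatch"
```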

- Confirm that the analyzer is installed:

Enter the container (for example with docker exec -it elasticsearch bash) and go to /usr/share/elasticsearch/bin.

Run:

```bash
elasticsearch-plugin
```

```text
A tool for managing installed elasticsearch plugins

Non-option arguments:
command

Option         Description
------         -----------
-h, --help     show help
-s, --silent   show minimal output
-v, --verbose  show verbose output
```

List all installed plugins with elasticsearch-plugin list:

```text
[root@36a2ac9a6bd7 bin]# elasticsearch-plugin list
# installed successfully
ik
```

- Restart Elasticsearch:

```bash
docker restart elasticsearch
```

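- Test the IK analyzer

ik_smart produces the coarsest-grained split. For the finest-grained split, with the maximum set of word combinations, use ik_max_word instead. The request uses Kibana Dev Tools console syntax, and the sample text means "I love my motherland!":

```text
POST _analyze
{
  "analyzer": "ik_smart",
  "text": "我爱我的祖国!"
}
```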

Response:

```json
{
  "tokens" : [
    {
      "token" : "我",
      "start_offset" : 0,
      "end_offset" : 1,
      "type" : "CN_CHAR",
      "position" : 0
    },
    {
      "token" : "爱我",
      "start_offset" : 1,
      "end_offset" : 3,
      "type" : "CN_WORD",
      "position" : 1
    },
    {
      "token" : "的",
      "start_offset" : 3,
      "end_offset" : 4,
      "type" : "CN_CHAR",
      "position" : 2
    },
    {
      "token" : "祖国",
      "start_offset" : 4,
      "end_offset" : 6,
      "type" : "CN_WORD",
      "position" : 3
    }
  ]
}
```
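You can send the same request without Kibana by calling the _analyze endpoint with curl. That makes it easy to compare ik_smart and ik_max_word side by side. The sketch assumes Elasticsearch answers at http://192.168.56.10:9200, the static IP configured earlier; ES_URL overrides it. es_tokens and analyze are hypothetical helper names. es_tokens uses grep rather than a JSON parser, and analyze does not escape its text, so neither copes with double quotes inside the text or tokens.

```bash
#!/usr/bin/env bash
# Print the "token" values from an _analyze response (stdin), one per line.
es_tokens() {
  grep -o '"token"[[:space:]]*:[[:space:]]*"[^"]*"' | sed 's/.*"\([^"]*\)"$/\1/'
}

# POST TEXT to _analyze with ANALYZER (e.g. ik_smart or ik_max_word) and list tokens.
analyze() {
  local analyzer=$1 text=$2 url=${ES_URL:-http://192.168.56.10:9200}
  curl -s -H 'Content-Type: application/json' -X POST "$url/_analyze" \
       -d "{\"analyzer\": \"$analyzer\", \"text\": \"$text\"}" | es_tokens
}
# Example: diff <(analyze ik_smart "我爱我的祖国!") <(analyze ik_max_word "我爱我的祖国!")
```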

3.6 Installing Kibana

- Add the docker-compose.yml configuration (under services):

```yaml
  kibana:
    container_name: kibana
    image: kibana:7.4.2
    environment:
      - ELASTICSEARCH_HOSTS=http://172.18.0.20:9200
      - I18N_LOCALE=zh-CN
    ports:
      - "5601:5601"
    depends_on:
      - elasticsearch
    restart: on-failure
    networks:
      app_start:
        ipv4_address: 172.18.0.21
```

- After it starts, open it in a browser:

```text
http://<host IP>:5601/
```

If the browser shows "Kibana server is not ready yet", the Kibana server has most likely not finished starting.

Check the Kibana container log (docker logs kibana). A message like the following means Kibana is ready:

```text
{
  "type": "log",
  "@timestamp": "2022-07-18T09:03:16Z",
  "tags": [
    "status",
    "plugin:spaces@7.4.2",
    "info"
  ],
  "pid": 7,
  "state": "green",  // state changed from yellow to green: ready
  "message": "Status changed from yellow to green - Ready",
  "prevState": "yellow",
  "prevMsg": "Waiting for Elasticsearch"
}
```
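Instead of refreshing the browser, you can poll the log until that message appears. The sketch assumes the JSON log lines of Kibana 7.4 shown above and the container name kibana. kibana_ready and wait_kibana are hypothetical helper names. It looks specifically for a yellow-to-green transition, as in the log above, because some plugins can report green before Elasticsearch is reachable.

```bash
#!/usr/bin/env bash
# Succeed if the Kibana log text on stdin contains a yellow-to-green "Ready" transition.
kibana_ready() {
  grep -q 'Status changed from yellow to green - Ready'
}

# Poll `docker logs kibana` every 5 seconds, giving up after TRIES attempts (default 60).
wait_kibana() {
  local tries=${1:-60} i
  for (( i = 0; i < tries; i++ )); do
    docker logs kibana 2>&1 | kibana_ready && return 0
    sleep 5
  done
  return 1
}
# Example: wait_kibana && echo "Kibana is ready"
```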

3.7 Installing Logstash

- Start a temporary container:

```bash
docker run -d --name=logstash logstash:7.4.2
```

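- Copy the files out of the temporary container, then remove it. Its name would otherwise clash with container_name in docker-compose.yml.

```bash
mkdir -p /home/app_start/build/logstash
docker cp logstash:/usr/share/logstash/config /home/app_start/build/logstash/
docker cp logstash:/usr/share/logstash/data /home/app_start/build/logstash/
docker cp logstash:/usr/share/logstash/pipeline /home/app_start/build/logstash/
docker rm -f logstash
```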

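- Set the permissions:

This step is required. Without it, Logstash later fails to start with obscure errors.

```bash
# grant permissions on the directory
chmod -R 777 /home/app_start/build/logstash/
```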

- Edit logstash.yml under logstash/config:

```yaml
http.host: 0.0.0.0
#path.config: /usr/share/logstash/config/conf.d/*.conf
path.logs: /usr/share/logstash/logs
pipeline.batch.size: 10
# the main change: the Elasticsearch address
xpack.monitoring.elasticsearch.hosts:
  - http://172.18.0.20:9200
```

- Edit logstash.conf under logstash/pipeline:

```text
input {
  tcp {
    mode => "server"
    host => "0.0.0.0"      # accept logs from any host
    port => 5045
    codec => json_lines    # data format
  }
}
output {
  elasticsearch {
    hosts => ["http://172.18.0.20:9200"]   # Elasticsearch address and port
    index => "elk"                          # index name
    codec => "json"
  }
  stdout {
    codec => rubydebug
  }
}
```
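With the tcp input and json_lines codec above, anything that writes one JSON object per line to port 5045 should end up in the elk index. Here is a quick way to try the pipeline from a shell. It assumes bash's /dev/tcp support and the VM IP used in this article. log_json is a hypothetical helper that builds one JSON line and escapes only backslashes and double quotes, not control characters such as newlines.

```bash
#!/usr/bin/env bash
# Build one JSON line {"level": ..., "message": ...} for the json_lines codec.
log_json() {
  local level=$1 msg
  # Escape backslashes first, then double quotes, so the value stays valid JSON.
  msg=$(printf '%s' "$2" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')
  printf '{"level":"%s","message":"%s"}\n' "$level" "$msg"
}
# Example (sends a single event to Logstash):
#   log_json INFO "hello from bash" > /dev/tcp/192.168.56.10/5045
```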

- Add the docker-compose.yml configuration (under services):

```yaml
  logstash:
    image: logstash:7.4.2
    container_name: logstash
    restart: always
    ports:
      - "5044:5044"   # default port
      - "9600:9600"
      - "5045:5045"   # custom port for the log input in logstash.conf
    volumes:
      - ./build/logstash/config:/usr/share/logstash/config
      - ./build/logstash/data:/usr/share/logstash/data
      - ./build/logstash/pipeline:/usr/share/logstash/pipeline
    networks:
      app_start:
        ipv4_address: 172.18.0.23
```

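- Start Logstash and watch its log to confirm that it starts normally and to catch problems early:

```bash
docker-compose up -d logstash
docker logs -f logstash
```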

- Common errors

```text
[2022-07-18T13:51:50,582][INFO ][org.logstash.beats.Server] Starting server on port: 5044
[2022-07-18T13:51:54,827][WARN ][logstash.runner          ] SIGTERM received. Shutting down.
[2022-07-18T13:51:55,897][INFO ][logstash.javapipeline    ] Pipeline terminated {"pipeline.id"=>".monitoring-logstash"}
[2022-07-18T13:51:56,823][ERROR][logstash.javapipeline    ] A plugin had an unrecoverable error. Will restart this plugin.
  Pipeline_id:main
  Plugin: <LogStash::Inputs::Beats port=>5044, id=>"1db05a8093f0a1ba531f41f086b7b6aae3f181480601fd93614e3bf59e5a6608", enable_metric=>true, codec=><LogStash::Codecs::Plain id=>"plain_aadd2f75-f190-4183-a533-51389850e1b1", enable_metric=>true, charset=>"UTF-8">, host=>"0.0.0.0", ssl=>false, add_hostname=>false, ssl_verify_mode=>"none", ssl_peer_metadata=>false, include_codec_tag=>true, ssl_handshake_timeout=>10000, tls_min_version=>1, tls_max_version=>1.2, cipher_suites=>["TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384", "TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384", "TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256", "TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256", "TLS_ECDHE_ECDSA_WITH_AES_256_CBC_SHA384", "TLS_ECDHE_RSA_WITH_AES_256_CBC_SHA384", "TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA256", "TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA256"], client_inactivity_timeout=>60, executor_threads=>4>
  Error: Address already in use
```

Note:

Address already in use means port 5044 is already taken. Check the configuration files.

```text
[2022-07-18T13:51:55,924][INFO ][logstash.runner          ] Starting Logstash {"logstash.version"=>"7.4.2", "jruby.version"=>"jruby 9.2.13.0 (2.5.7) 2020-08-03 9a89c94bcc OpenJDK 64-Bit Server VM 25.161-b14 on 1.8.0_161-b14 +indy +jit [linux-x86_64]"}
[2022-07-18T13:51:55,262][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified
[2022-07-18T13:51:55,286][INFO ][logstash.agent           ] No persistent UUID file found. Generating new UUID {:uuid=>"8fa4bf61-3190-4e9d-ac9a-eccf68220b9f", :path=>"/usr/share/logstash/data/uuid"}
[2022-07-18T13:51:55,618][INFO ][logstash.config.source.local.configpathloader] No config files found in path {:path=>"/usr/share/logstash/config/conf.d/*.conf"}
[2022-07-18T13:51:55,674][ERROR][logstash.config.sourceloader] No configuration found in the configured sources.
[2022-07-18T13:51:55,820][INFO ][logstash.agent           ] Successfully started Logstash API endpoint {:port=>9600}
[2022-07-18T13:51:55,896][INFO ][logstash.runner          ] Logstash shut down.
```

Note:

No config files found in path {:path=>"/usr/share/logstash/config/conf.d/*.conf"} means Logstash cannot find any configuration files at that path. Commenting out path.config in logstash.yml fixes it.

3.8 Installing elasticsearch-head

- Start a temporary container:

```bash
docker pull mobz/elasticsearch-head:5
docker run -d --name es_admin -p 9100:9100 mobz/elasticsearch-head:5
```

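- Copy the files out of the temporary container, then remove it. It holds port 9100, which the compose service also publishes.

```bash
mkdir -p /home/app_start/build/elasticsearch-head
docker cp es_admin:/usr/src/app/ /home/app_start/build/elasticsearch-head/
docker rm -f es_admin
```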

- Edit Gruntfile.js under elasticsearch-head/app:

```javascript
connect: {
  server: {
    options: {
      // add this line
      hostname: '0.0.0.0',
      port: 9100,
      base: '.',
      keepalive: true
    }
  }
}
```

- Add the docker-compose.yml configuration (under services):

```yaml
  elasticsearch-head:
    image: mobz/elasticsearch-head:5
    container_name: elasticsearch-head
    restart: always
    ports:
      - "9100:9100"
    volumes:
      - ./build/elasticsearch-head/app/:/usr/src/app/
    networks:
      app_start:
        ipv4_address: 172.18.0.24
```

- After it starts, open it in a browser:

```text
http://<host IP>:9100/
```

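- If you run into a cross-origin (CORS) error

Edit elasticsearch.yml in the Elasticsearch mount directory, then restart Elasticsearch (docker restart elasticsearch):

```yaml
http.host: 0.0.0.0
# add the following two lines
http.cors.enabled: true
http.cors.allow-origin: "*"
```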

3.9 Installing RabbitMQ

- Add the docker-compose.yml configuration (under services):

```yaml
  rabbitmq:
    restart: always
    image: rabbitmq:management
    container_name: rabbitmq
    ports:
      - 5671:5671
      - 5672:5672
      - 4369:4369
      - 25672:25672
      - 15671:15671
      - 15672:15672
    networks:
      app_start:
        ipv4_address: 172.18.0.22
```

Port reference:

```text
4369, 25672    (Erlang discovery & clustering ports)
5672, 5671     (AMQP ports)
15672          (web management UI port)
61613, 61614   (STOMP ports)
1883, 8883     (MQTT ports)
```

- After it starts, open it in a browser:

```text
http://<host IP>:15672/
```

Default username/password:

guest/guest

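3.10 MySQL master/replica replication

- Create the user that the replica uses to replicate from the master:

```sql
GRANT SUPER, REPLICATION SLAVE ON *.* TO 'xiaozhengrep'@'%' IDENTIFIED BY 'rep123456';
-- MySQL 5.7 syntax. MySQL 8.0+ rejects IDENTIFIED BY in GRANT: run
-- CREATE USER 'xiaozhengrep'@'%' IDENTIFIED BY 'rep123456'; first and drop that clause here.
flush privileges; -- reload the privileges
```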

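- Master database configuration (my.cnf):

```ini
[mysqld]
skip-host-cache
skip-name-resolve
datadir=/var/lib/mysql
socket=/var/run/mysqld/mysqld.sock
secure-file-priv=/var/lib/mysql-files
user=mysql
sql_mode=STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_ENGINE_SUBSTITUTION
# master settings
# Server ID. It must appear only once in the file (if repeated, MySQL uses the first one)
# and must be unique within one master/replica group.
server-id=1
# Enable the binary log and set its directory and file-name prefix.
#log-bin=/home/mysql/logs/binlog/bin-log
log-bin=bin-log
# Maximum size of each binlog file; at 500M a new file is started. A single record is
# never split across two files, so a file can occasionally exceed this size.
max_binlog_size = 500M
# Binlog cache size
binlog_cache_size = 128K
# Database to replicate; repeat the option for several databases.
binlog-do-db = xz_demo
# Database not to replicate; repeat the option for several databases.
binlog-ignore-db = mysql
# Also write updates received from a master into this server's own binlog. With log-bin
# alone (no log-slave-updates), the server only logs changes made directly on it.
log-slave-updates
# Days to keep binlog files (not supported before MySQL 5.0).
expire_logs_days=2
# Binlog format MIXED, which helps prevent duplicate primary keys.
binlog_format="MIXED"
# end
pid-file=/var/run/mysqld/mysqld.pid
[client]
socket=/var/run/mysqld/mysqld.sock
!includedir /etc/mysql/conf.d/
```

- Put the master configuration into my.cnf in the config directory from section 3.4. That directory is mounted at /etc/mysql, so the file becomes /etc/mysql/my.cnf inside the container, one of the default option-file locations. MySQL on Linux reads my.cnf files and does not look for my.ini.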

- Connect to the master and view its status:

```sql
mysql -u root -h 192.168.56.10 -P3306 -p12345678
SHOW MASTER STATUS;
```

```text
+----------------+----------+--------------+------------------+-------------------+
| File           | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |
+----------------+----------+--------------+------------------+-------------------+
| bin-log.000001 |      817 | xz_demo      | mysql            |                   |
+----------------+----------+--------------+------------------+-------------------+
1 row in set (0.00 sec)
# note down the File and Position values
```
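Copying File and Position into CHANGE MASTER TO by hand is error-prone. The sketch below reads them from the batch output of SHOW MASTER STATUS and prints the statement to run on the replica; mysql -N -B prints tab-separated values without a header row. change_master_sql is a hypothetical helper. The example uses the master address from section 3.4 (172.18.0.4) and the replication user created above.

```bash
#!/usr/bin/env bash
# Read "File<TAB>Position<TAB>..." on stdin and print a CHANGE MASTER TO statement.
# Arguments: master host, replication user, password, master port (default 3306).
change_master_sql() {
  local host=$1 user=$2 pass=$3 port=${4:-3306} file pos
  IFS=$'\t' read -r file pos _ || return 1
  [[ -n $file && $pos =~ ^[0-9]+$ ]] || return 1
  printf "CHANGE MASTER TO MASTER_HOST='%s', MASTER_USER='%s', MASTER_PASSWORD='%s', MASTER_PORT=%s, MASTER_CONNECT_RETRY=30, MASTER_LOG_FILE='%s', MASTER_LOG_POS=%s;\n" \
    "$host" "$user" "$pass" "$port" "$file" "$pos"
}
# Example:
#   mysql -h 192.168.56.10 -P3306 -uroot -p -N -B -e 'SHOW MASTER STATUS' |
#     change_master_sql 172.18.0.4 xiaozhengrep rep123456 3306
```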

- Create the replica's directories:

```bash
mkdir -p ./build/mysql-slave/data ./build/mysql-slave/config
```

- Add the replica to docker-compose.yml (under services):

```yaml
  mysql-slave:
    image: mysql
    restart: always
    container_name: "mysql-slave"
    ports:
      - 3307:3306
    volumes:
      - "/home/app_start/build/mysql-slave/data:/var/lib/mysql"
      - "/home/app_start/build/mysql-slave/config/my.cnf:/etc/my.cnf"
    command:
      --default-authentication-plugin=mysql_native_password
      --character-set-server=utf8mb4
      --collation-server=utf8mb4_general_ci
      --explicit_defaults_for_timestamp=true
      --lower_case_table_names=1
      --default-time-zone=+8:00
    environment:
      MYSQL_ROOT_PASSWORD: "12345678"
    networks:
      app_start:
        # any unused address; 172.18.0.5 is already taken by Redis (section 3.3)
        ipv4_address: 172.18.0.15
```

- Replica database configuration (my.cnf, mounted at /etc/my.cnf by the compose file above):

```ini
[mysqld]
skip-host-cache
skip-name-resolve
datadir=/var/lib/mysql
socket=/var/run/mysqld/mysqld.sock
secure-file-priv=/var/lib/mysql-files
user=mysql
sql_mode=STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_ENGINE_SUBSTITUTION
server-id=2
# Settings for MySQL before 5.5; later versions configure this with CHANGE MASTER TO instead
#master_host=172.18.0.14
#master_user=xiaozhengrep
#master_password=rep123456
#master_port=3307
#master_connect_retry=30
#master_info_file = /var/lib/mysql/logs/
# end
slave-skip-errors=1062
replicate-do-db = xz_demo
replicate-ignore-db = mysql
# If the master database name [testdemo] differs from the replica's [testdemo01], map them:
#replicate-rewrite-db = testdemo -> testdemo01
# To replicate only some tables (by default everything is replicated), list them:
#replicate-wild-do-table=testdemo.user
#replicate-wild-do-table=testdemo.demotable
# With read_only=1 (SET GLOBAL read_only=1;) ordinary users cannot write data
#read_only=1
# To stop users with SUPER from writing as well, set super_read_only=1. To block even
# SUPER users' writes entirely, use "flush tables with read lock;"
#super_read_only=1
slave-skip-errors=1007,1008,1053,1062,1213,1158,1159
relay-log = slavel-relay-bin
pid-file=/var/run/mysqld/mysqld.pid
[client]
socket=/var/run/mysqld/mysqld.sock
!includedir /etc/mysql/conf.d/
```

Two points to note about these settings:

1. The read-only mode set by read_only=1 does not affect replication. After setting read_only=1 on the replica, SHOW SLAVE STATUS\G shows that the replica still reads the master's log and applies it locally, so master and replica stay consistent.

2. read_only=1 restricts data changes by ordinary users but not by users with the SUPER privilege. With read_only=1, ordinary application users who run INSERT, UPDATE, DELETE or other data-changing DML get an error saying the database is read-only. Users with SUPER, for example root logged in locally or remotely, can still run such DML. To restrict SUPER users as well, set super_read_only=1. To block writes even from SUPER users entirely, use "flush tables with read lock;", but note that this also stops replication!

- Put my.cnf into the replica's config directory:

```bash
vim ./build/mysql-slave/config/my.cnf
```

- Deploy the replica:

```bash
docker-compose -f docker-compose.yml up -d mysql-slave
```

-

重启

主数据库,然后连接从数据库

mysql -u root -h 192.168.56.10 -P3307 -p12345678 STOP SLAVE; -- 终止从库线程 change master to master_host='172.18.0.14', -- docker 内部网络IP MASTER_USER='xiaozhengrep', MASTER_PASSWORD='rep123456', MASTER_PORT=3306, -- docker 容器内部端口 MASTER_CONNECT_RETRY=30, -- 重试时间 MASTER_LOG_FILE='bin-log.000001', -- file 主库记录的FILE 值 MASTER_LOG_POS=817; -- pos 主库记录的Position 值 START SLAVE; -- 启动从库线程 SHOW SLAVE STATUS; -- 查看从库状态 -- Slave_IO_Running | Slave_SQL_Running 均为YES 则基本成功 +----------------------------------+-------------+--------------+-------------+---------------+-----------------+---------------------+-------------------------------+---------------+-----------------------+------------------+-------------------+-----------------+---------------------+--------------------+------------------------+-------------------------+-----------------------------+------------+------------+--------------+---------------------+-----------------+-----------------+----------------+---------------+--------------------+--------------------+--------------------+-----------------+-------------------+----------------+-----------------------+-------------------------------+---------------+---------------+----------------+----------------+-----------------------------+------------------+--------------------------------------+----------------------------+-----------+---------------------+--------------------------------------------------------+--------------------+-------------+-------------------------+--------------------------+----------------+--------------------+--------------------+-------------------+---------------+----------------------+--------------+--------------------+ | Slave_IO_State | Master_Host | Master_User | Master_Port | Connect_Retry | Master_Log_File | Read_Master_Log_Pos | Relay_Log_File | Relay_Log_Pos | Relay_Master_Log_File | Slave_IO_Running | Slave_SQL_Running | Replicate_Do_DB | Replicate_Ignore_DB | Replicate_Do_Table | Replicate_Ignore_Table | Replicate_Wild_Do_Table | 
Replicate_Wild_Ignore_Table | Last_Errno | Last_Error | Skip_Counter | Exec_Master_Log_Pos | Relay_Log_Space | Until_Condition | Until_Log_File | Until_Log_Pos | Master_SSL_Allowed | Master_SSL_CA_File | Master_SSL_CA_Path | Master_SSL_Cert | Master_SSL_Cipher | Master_SSL_Key | Seconds_Behind_Master | Master_SSL_Verify_Server_Cert | Last_IO_Errno | Last_IO_Error | Last_SQL_Errno | Last_SQL_Error | Replicate_Ignore_Server_Ids | Master_Server_Id | Master_UUID | Master_Info_File | SQL_Delay | SQL_Remaining_Delay | Slave_SQL_Running_State | Master_Retry_Count | Master_Bind | Last_IO_Error_Timestamp | Last_SQL_Error_Timestamp | Master_SSL_Crl | Master_SSL_Crlpath | Retrieved_Gtid_Set | Executed_Gtid_Set | Auto_Position | Replicate_Rewrite_DB | Channel_Name | Master_TLS_Version | +----------------------------------+-------------+--------------+-------------+---------------+-----------------+---------------------+-------------------------------+---------------+-----------------------+------------------+-------------------+-----------------+---------------------+--------------------+------------------------+-------------------------+-----------------------------+------------+------------+--------------+---------------------+-----------------+-----------------+----------------+---------------+--------------------+--------------------+--------------------+-----------------+-------------------+----------------+-----------------------+-------------------------------+---------------+---------------+----------------+----------------+-----------------------------+------------------+--------------------------------------+----------------------------+-----------+---------------------+--------------------------------------------------------+--------------------+-------------+-------------------------+--------------------------+----------------+--------------------+--------------------+-------------------+---------------+----------------------+--------------+--------------------+ | 
Waiting for master to send event | 172.18.0.14 | xiaozhengrep | 3306 | 30 | bin-log.000001 | 817 | 5ebafbe94a17-relay-bin.000002 | 981 | bin-log.000001 | Yes | Yes | xz_demo | mysql | | | | | 0 | | 0 | 817 | 1195 | None | | 0 | No | | | | | | 0 | No | 0 | | 0 | | | 1 | 8506633c-6182-11ed-92f9-0242ac12000e | /var/lib/mysql/master.info | 0 | NULL | Slave has read all relay log; waiting for more updates | 86400 | | | | | | | | 0 | | | | +----------------------------------+-------------+--------------+-------------+---------------+-----------------+---------------------+-------------------------------+---------------+-----------------------+------------------+-------------------+-----------------+---------------------+--------------------+------------------------+-------------------------+-----------------------------+------------+------------+--------------+---------------------+-----------------+-----------------+----------------+---------------+--------------------+--------------------+--------------------+-----------------+-------------------+----------------+-----------------------+-------------------------------+---------------+---------------+----------------+----------------+-----------------------------+------------------+--------------------------------------+----------------------------+-----------+---------------------+--------------------------------------------------------+--------------------+-------------+-------------------------+--------------------------+----------------+--------------------+--------------------+-------------------+---------------+----------------------+--------------+--------------------+ 1 row in set (0.00 sec)


- Insert a row on the master, then query the slave to check that it arrived.
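Spot-checking rows shows that data arrived. The slave's own status shows whether both replication threads are still alive. This is a minimal sketch that assumes the MySQL 5.7 column names seen in the status output above. The hypothetical helper `slave_healthy` reads `SHOW SLAVE STATUS\G` output on stdin and succeeds only when `Slave_IO_Running` and `Slave_SQL_Running` both read `Yes`.

```shell
#!/bin/sh
# slave_healthy (hypothetical helper): read `SHOW SLAVE STATUS\G` output on
# stdin and succeed only if both replication threads report "Yes".
slave_healthy() {
  awk -F': ' '
    # \G output right-aligns the column names, so allow leading spaces
    /^ *Slave_IO_Running:/  { io = $2 }
    /^ *Slave_SQL_Running:/ { sql = $2 }
    END {
      if (io == "Yes" && sql == "Yes") { print "replication OK"; exit 0 }
      print "Slave_IO_Running=" io ", Slave_SQL_Running=" sql > "/dev/stderr"
      exit 1
    }'
}

# Usage on the slave (credentials are yours to fill in):
#   mysql -uroot -p -e 'SHOW SLAVE STATUS\G' | slave_healthy
```

The regexes end in a colon, so `Slave_SQL_Running_State` is not mistaken for `Slave_SQL_Running`.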

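- Notes: replication filters

The following six options are configured on the slave:

- `Replicate_Do_DB`: databases to replicate. `SHOW SLAVE STATUS` lists several databases separated by commas. In `my.cnf`, however, repeat `replicate-do-db` once per database, because a comma-separated value is read as a single database name.
- `Replicate_Ignore_DB`: databases to skip.
- `Replicate_Do_Table`: tables to replicate.
- `Replicate_Ignore_Table`: tables to skip.
- `Replicate_Wild_Do_Table`: same as `Replicate_Do_Table`, but accepts wildcard patterns (`%` and `_`, as in `LIKE`).
- `Replicate_Wild_Ignore_Table`: same as `Replicate_Ignore_Table`, but accepts wildcard patterns.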
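In a config file, these filters are written as lowercase, hyphenated options under `[mysqld]` on the slave. This is a minimal sketch. The hypothetical helper `repl_filter_conf` prints such a fragment, reusing `xz_demo` and `mysql` from the `Replicate_Do_DB` / `Replicate_Ignore_DB` columns of the status output above. The `tmp%` table pattern is an invented example of a wildcard filter.

```shell
#!/bin/sh
# repl_filter_conf (hypothetical helper): print a [mysqld] fragment with
# replication filters for the slave.
repl_filter_conf() {
  cat <<'EOF'
[mysqld]
# one line per database; a comma-separated list would be read as ONE name
replicate-do-db=xz_demo
replicate-ignore-db=mysql
# % matches any run of characters, as in LIKE (invented example pattern)
replicate-wild-ignore-table=xz_demo.tmp%
EOF
}

# Usage (target file is an assumption; restart the slave's mysqld afterwards
# so the options are read):
#   repl_filter_conf >> /etc/my.cnf
```

Option files are read at startup, so the filters only apply after the slave's `mysqld` restarts.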

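- Replication interrupted

If replication stops, run `SHOW MASTER STATUS;` on the master to get its current `File` and `Position` values. Configure the slave again with them (`CHANGE MASTER TO`), then run `START SLAVE;`. If `START SLAVE;` fails with `Slave failed to initialize relay log info structure from the repository`, run `RESET SLAVE;` first and then run `START SLAVE;` again.

- First-time setup

Before the first `START SLAVE;`, the slave must already have the same table structures as the master. Replication only replays changes made after the binlog position you configure, so tables created before that point are not copied.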
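The recovery steps for an interrupted sync can be generated from the master's `File` and `Position`. This is a minimal sketch. The hypothetical `resync_sql` helper takes both values and prints `STOP SLAVE`, `RESET SLAVE`, `CHANGE MASTER TO` and `START SLAVE`. The host, port and user come from the status output above. The password is a placeholder read from a hypothetical `REPL_PASSWORD` variable.

```shell
#!/bin/sh
# resync_sql (hypothetical helper): print the statements that re-point the
# slave at the master's current binlog coordinates.
resync_sql() {
  log_file="$1"   # File column of SHOW MASTER STATUS on the master
  log_pos="$2"    # Position column of SHOW MASTER STATUS on the master
  case "$log_pos" in
    ''|*[!0-9]*) echo "usage: resync_sql <File> <Position>" >&2; return 1 ;;
  esac
  # REPL_PASSWORD is an assumed variable; "change-me" is only a placeholder
  cat <<EOF
STOP SLAVE;
RESET SLAVE;
CHANGE MASTER TO
  MASTER_HOST='172.18.0.14',
  MASTER_PORT=3306,
  MASTER_USER='xiaozhengrep',
  MASTER_PASSWORD='${REPL_PASSWORD:-change-me}',
  MASTER_LOG_FILE='$log_file',
  MASTER_LOG_POS=$log_pos;
START SLAVE;
EOF
}

# Usage on the slave, with the values just read from the master:
#   REPL_PASSWORD=... resync_sql bin-log.000001 817 | mysql -uroot -p
```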

3.11 Redis master-slave replication

- Add the Redis slave service to docker-compose

```yaml
redis-slave:
  restart: always
  image: redis
  container_name: redis-slave
  volumes:
    - "/home/app_start/build/redis-slave/data:/data"
    - "/home/app_start/build/redis-slave/conf:/usr/local/etc/redis/"
    - "/home/app_start/build/redis-slave/logs:/logs"
  command:
    # start with the specified config file
    redis-server /usr/local/etc/redis/redis.conf
  ports:
    - 6380:6379
  environment:
    - TZ=Asia/Shanghai
  networks:
    app_start:
      ipv4_address: 172.18.0.16
```

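- Edit the slave's `redis.conf`. On the host this is `/home/app_start/build/redis-slave/conf/redis.conf`, which the compose file mounts at `/usr/local/etc/redis/`.

```
# appendonly no
# Redis AOF persistence, default is no
appendonly yes

# master IP and port
# replicaof <masterip> <masterport>
replicaof 172.18.0.5 6379

# master password
# masterauth <master-password>
masterauth 123456
```

`replicaof` is the Redis 5+ name of this directive (older versions use `slaveof`). `masterauth` must match the master's `requirepass`.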
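The same edits can be scripted. This is a minimal sketch that assumes the slave's config is the host file mounted by the compose file. The hypothetical `configure_replica` helper drops any active `appendonly`, `replicaof`, `slaveof` or `masterauth` line, keeps the commented examples, and appends the values used in this setup.

```shell
#!/bin/sh
# configure_replica (hypothetical helper): point a redis.conf at a master.
# Args: <conf file> <master ip> <master port> <master password>
configure_replica() {
  conf="$1"; master_ip="$2"; master_port="$3"; master_pass="$4"
  tmp="$conf.tmp.$$"
  # remove active directives only; "# replicaof <masterip> ..." comments stay
  grep -v -E '^(appendonly|replicaof|slaveof|masterauth)[[:space:]]' "$conf" > "$tmp"
  {
    echo "appendonly yes"
    echo "replicaof $master_ip $master_port"
    echo "masterauth $master_pass"
  } >> "$tmp"
  mv "$tmp" "$conf"
}

# Usage (then restart the container so Redis rereads the file):
#   configure_replica /home/app_start/build/redis-slave/conf/redis.conf 172.18.0.5 6379 123456
#   docker restart redis-slave
```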

- Start redis-slave, then connect to the slave and check that data has been replicated.
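`INFO replication` is a quick way to check the slave's side of the link. On a replica it reports `role:slave`, and `master_link_status:up` once it is connected to the master. This is a minimal sketch. The hypothetical helper `replica_link_up` parses that output from stdin and strips the `\r` line endings Redis uses.

```shell
#!/bin/sh
# replica_link_up (hypothetical helper): read `INFO replication` output on
# stdin; succeed when this instance is a replica with its master link up.
replica_link_up() {
  tr -d '\r' | awk -F: '
    $1 == "role"               { role = $2 }
    $1 == "master_link_status" { link = $2 }
    END {
      if (role == "slave" && link == "up") { print "replica linked to master"; exit 0 }
      print "role=" role ", master_link_status=" link > "/dev/stderr"
      exit 1
    }'
}

# Usage from the host (6380 is the port mapped in the compose file; add
# -a <password> if the slave itself sets requirepass):
#   redis-cli -p 6380 info replication | replica_link_up
```

To confirm that data actually flows, `SET` a test key on the master and `GET` it through port 6380.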

Install the JDK on the host

- Install JDK 1.8. `/usr/local/src` is the recommended location, so create the directory first:

```shell
mkdir -p /usr/local/src/jdk
```

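- Check whether a JDK is already installed, and uninstall it if so:

```shell
rpm -qa | grep -i jdk              # list installed JDK packages
rpm -e --nodeps <package-name>     # uninstall a package listed above
```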

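- Download the JDK archive into that directory:

```shell
cd /usr/local/src/jdk
wget --no-check-certificate --no-cookies --header "Cookie: oraclelicense=accept-securebackup-cookie" http://download.oracle.com/otn-pub/java/jdk/8u131-b11/d54c1d3a095b4ff2b6607d096fa80163/jdk-8u131-linux-x64.tar.gz
```

Oracle now requires an Oracle account to download older JDK 8 builds, so this direct link may no longer work. If it fails, download `jdk-8u131-linux-x64.tar.gz` in a browser and copy it into `/usr/local/src/jdk`.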

- Extract the archive. This creates the `jdk1.8.0_131` directory:

```shell
tar -zxvf jdk-8u131-linux-x64.tar.gz
```

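- Configure the environment variables:

```shell
vi /etc/profile
```

Append the following to the end of the file:

```shell
export JAVA_HOME=/usr/local/src/jdk/jdk1.8.0_131
export JRE_HOME=$JAVA_HOME/jre
export CLASSPATH=$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH
export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH
```

Putting `$JAVA_HOME/bin` first means this JDK takes precedence over any other `java` already on the `PATH`.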

- Reload the profile so the variables take effect in the current shell:

```shell
source /etc/profile
```

- Verify the installation with `java -version`. The output should look like this:

```
[root@localhost jdk]# java -version
java version "1.8.0_131"
Java(TM) SE Runtime Environment (build 1.8.0_131-b11)
Java HotSpot(TM) 64-Bit Server VM (build 25.131-b11, mixed mode)
```
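`java -version` only shows that *some* Java runs. The check below also confirms it is the one under `JAVA_HOME`, which matters when another JDK's directory comes earlier on the `PATH`. This is a minimal sketch. `check_java_home` is a hypothetical helper that takes the JDK directory as `$1` or falls back to `$JAVA_HOME`.

```shell
#!/bin/sh
# check_java_home (hypothetical helper): verify that JAVA_HOME (or $1) holds
# an executable java and that it is the first `java` found on PATH.
check_java_home() {
  home="${1:-$JAVA_HOME}"
  if [ -z "$home" ]; then
    echo "JAVA_HOME is not set" >&2; return 1
  fi
  if [ ! -x "$home/bin/java" ]; then
    echo "not executable: $home/bin/java" >&2; return 1
  fi
  # command -v prints the path the shell would actually run for `java`
  found=$(command -v java) || { echo "java is not on PATH" >&2; return 1; }
  if [ "$found" != "$home/bin/java" ]; then
    echo "PATH resolves java to $found, not $home/bin/java" >&2; return 2
  fi
  echo "ok: $found"
}

# Usage after editing /etc/profile:
#   source /etc/profile && check_java_home
```

With `$JAVA_HOME/bin` at the front of the `PATH`, this should print `ok: /usr/local/src/jdk/jdk1.8.0_131/bin/java`.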

systemctl and firewalld (firewall) commands

```
1. Enabling, disabling, starting and stopping the firewall
(1) Enable the firewall at boot: systemctl enable firewalld.service
(2) Disable the firewall at boot: systemctl disable firewalld.service
(3) Start the firewall: systemctl start firewalld
(4) Stop the firewall: systemctl stop firewalld
(5) Check the firewall status: systemctl status firewalld
2. Configuring ports with firewall-cmd
(1) Show the firewall state: firewall-cmd --state
(2) Reload the configuration: firewall-cmd --reload
(3) List open ports: firewall-cmd --list-ports
(4) Open a port: firewall-cmd --zone=public --add-port=9200/tcp --permanent
    Meaning:
    --zone                   # zone the rule applies to
    --add-port=9200/tcp      # port to add, in the form port/protocol
    --permanent              # persist the rule; without it the rule is lost after a restart
    Note: a --permanent change only takes effect after firewall-cmd --reload
(5) Close a port: firewall-cmd --zone=public --remove-port=9200/tcp --permanent
```
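Opening several ports follows the pattern in step (4): one `--permanent` add per port, then a single `--reload`. This is a minimal sketch. The hypothetical `open_ports` helper does exactly that. `FIREWALL_CMD` is an override hook added here, not a firewalld feature, so the commands can be dry-run with `echo`.

```shell
#!/bin/sh
# open_ports (hypothetical helper): permanently open TCP ports in the public
# zone, then reload once so the permanent rules become active.
open_ports() {
  # FIREWALL_CMD is an assumed override; left unquoted on purpose so values
  # such as "sudo firewall-cmd" split into command + argument
  fw="${FIREWALL_CMD:-firewall-cmd}"
  for port in "$@"; do
    $fw --zone=public --add-port="$port/tcp" --permanent || return 1
  done
  $fw --reload && $fw --list-ports
}

# Usage: expose MySQL and the Redis slave's host port from this setup
#   open_ports 3306 6380
# Dry run:
#   FIREWALL_CMD=echo open_ports 3306 6380
```

Reloading once at the end, rather than after every port, applies all the permanent rules in one step.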
