
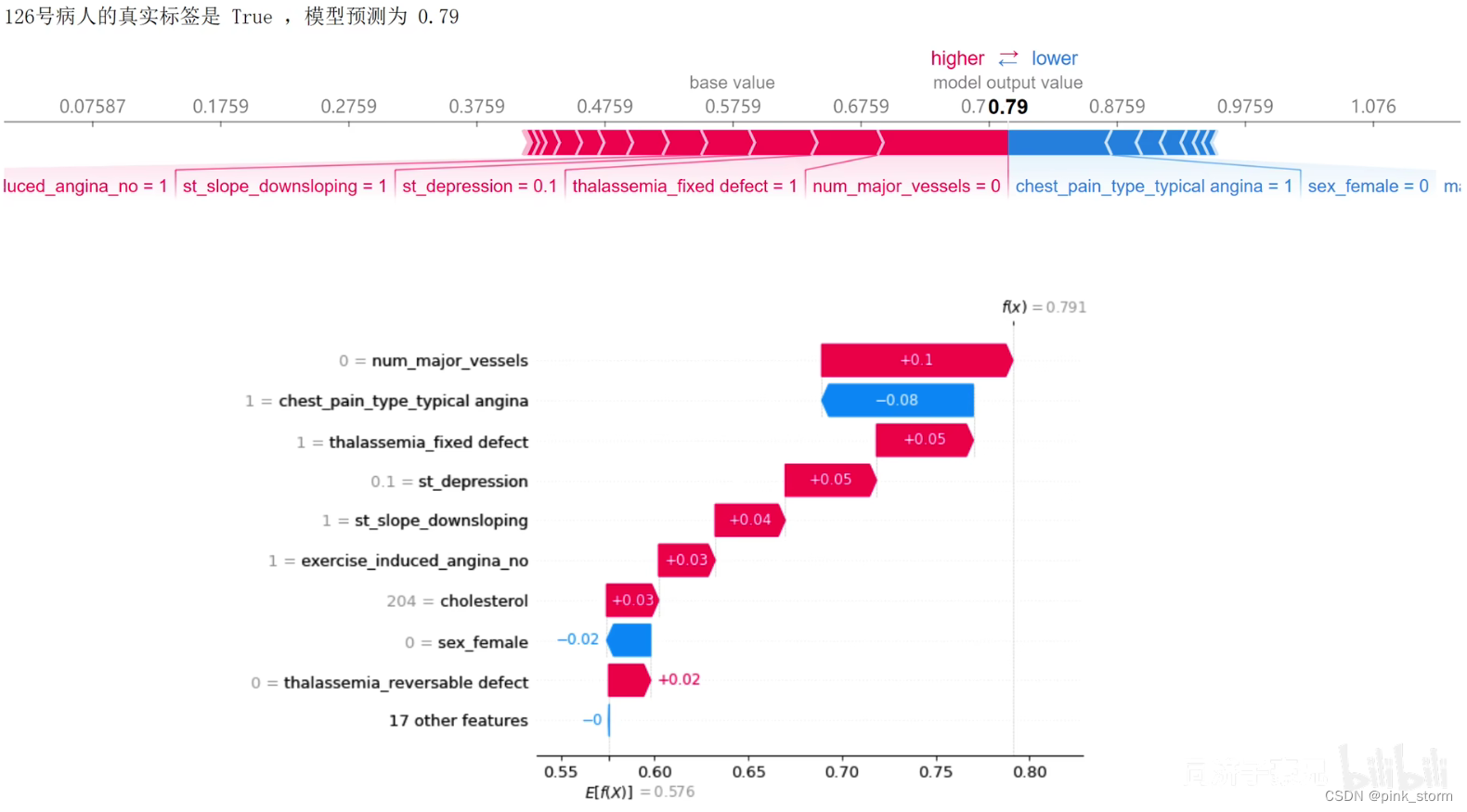

[Interpretable Machine Learning] Task07: LIME and SHAP in Practice


GPU platform: Kaggle

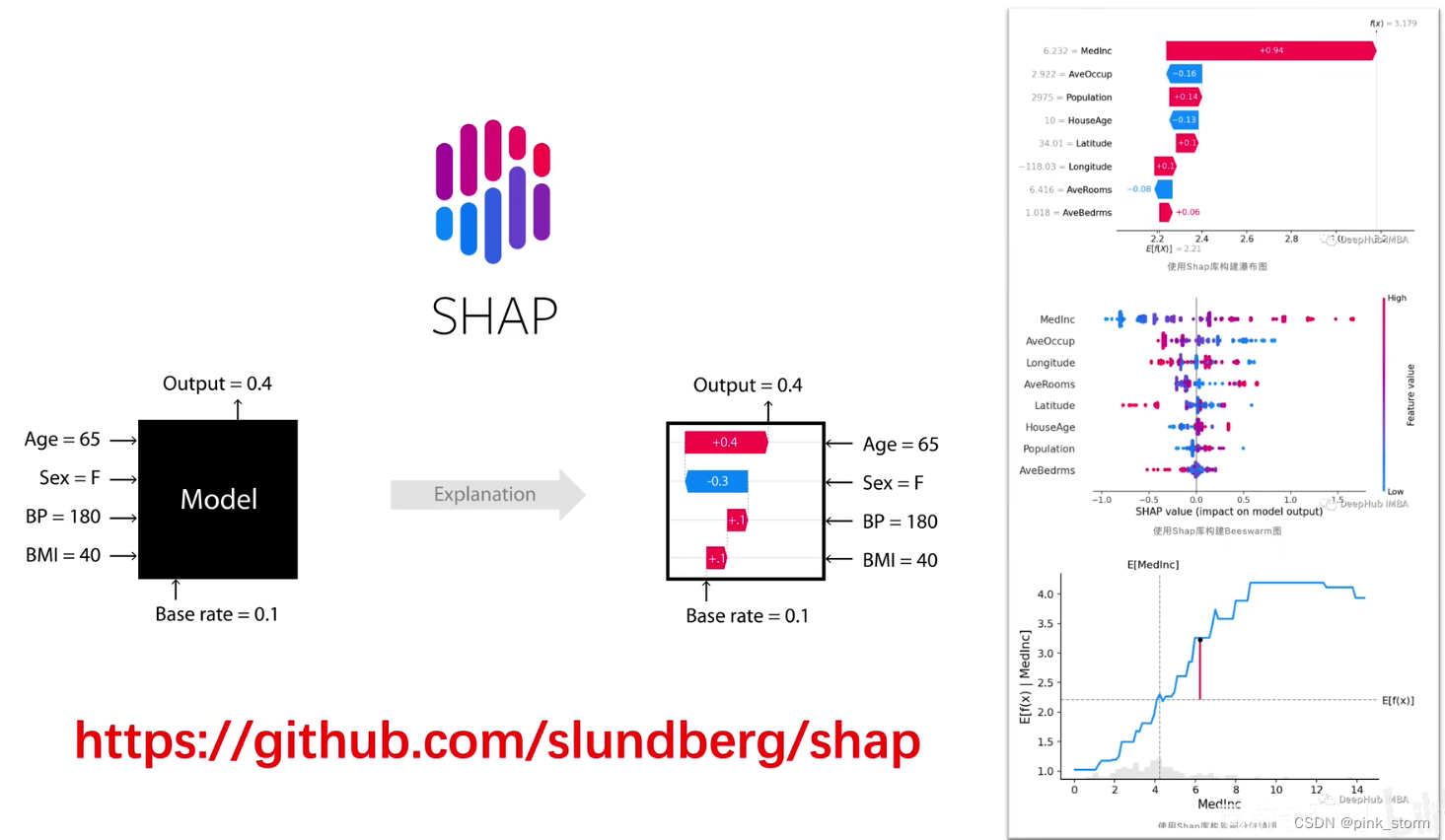

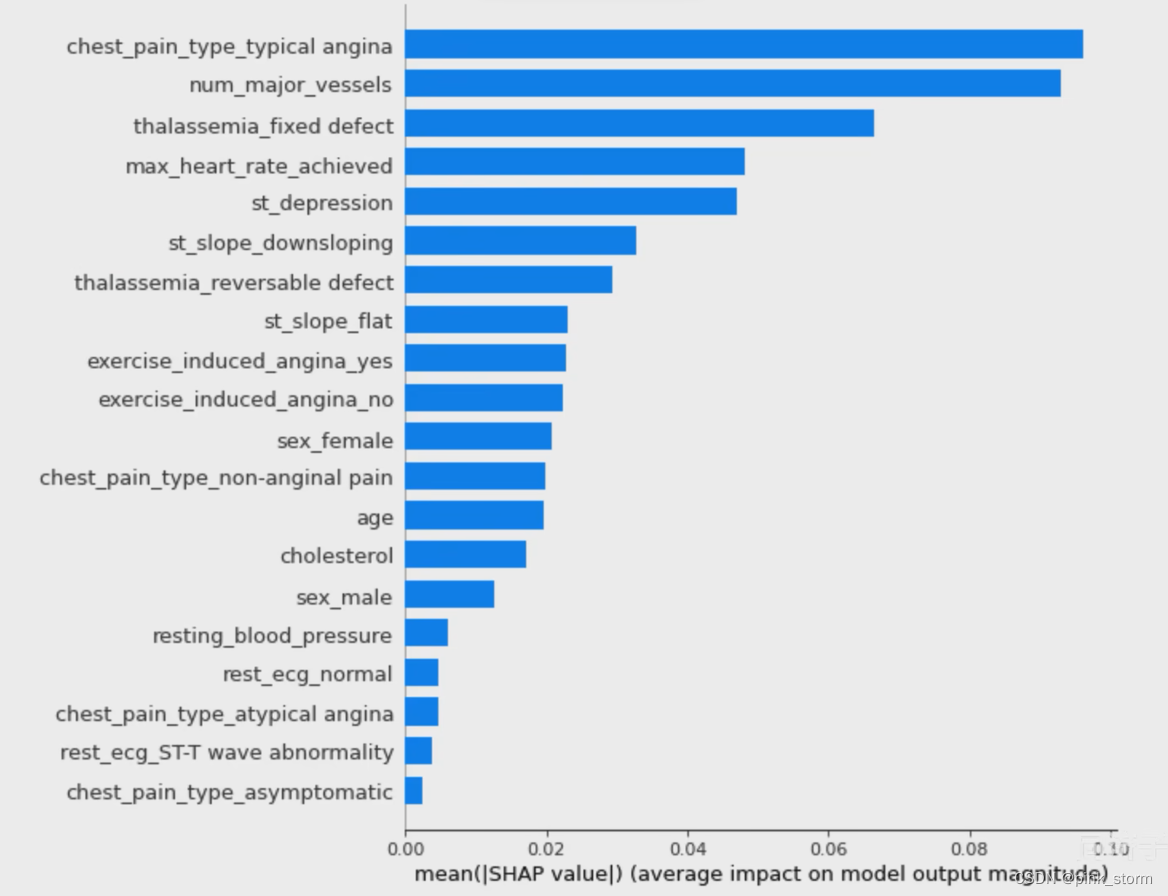

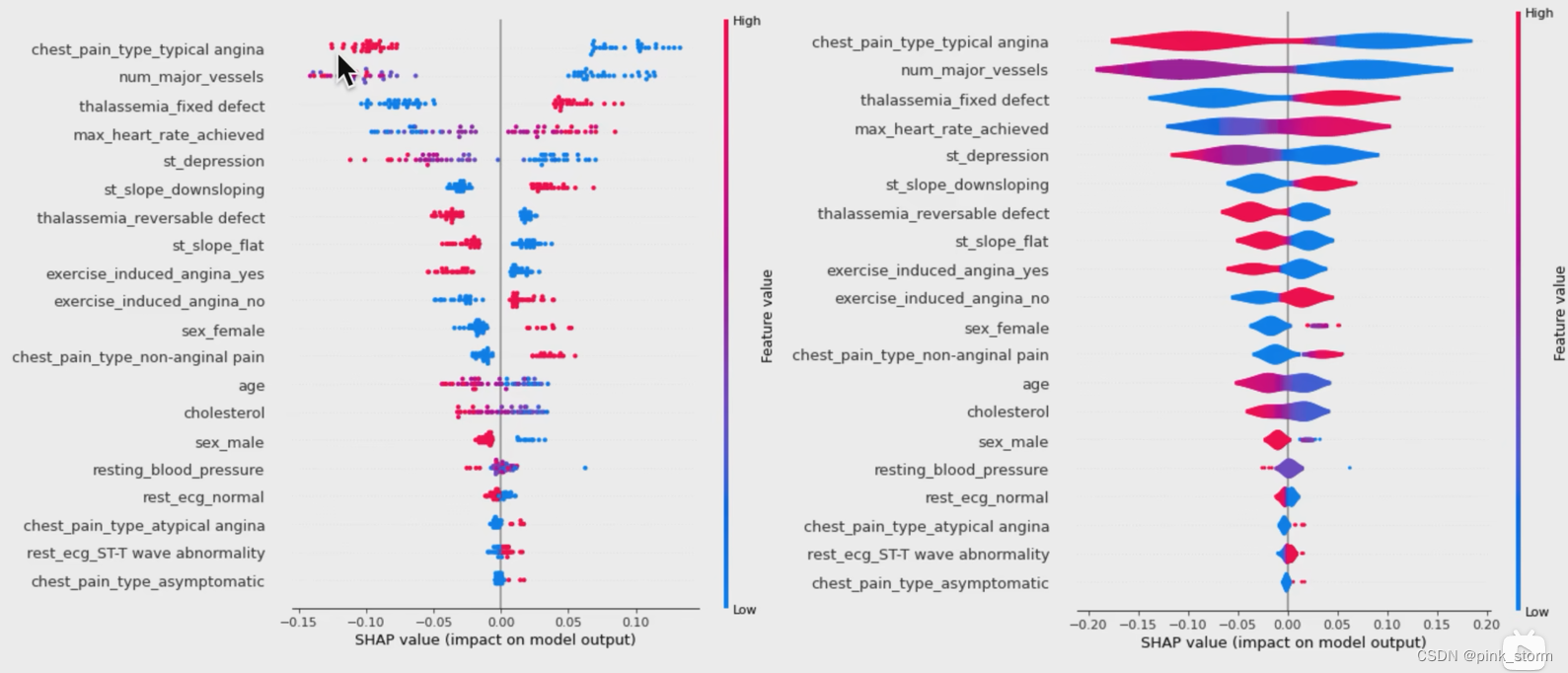

1. The shap Toolkit

第一部分:基于shapley值的机器学习可解释分析

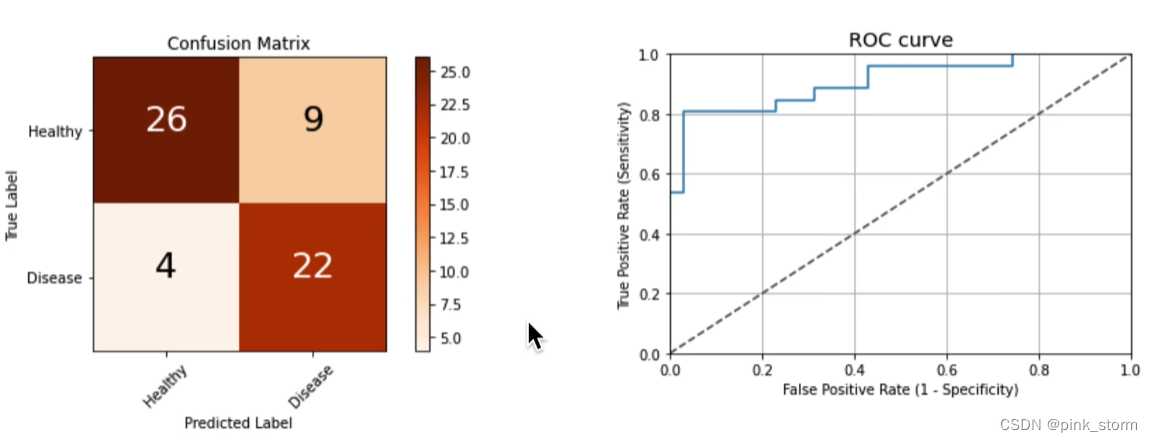

第二部分:代码实战-UCl心脏病二分类随机森林可解释性分析

第三部分:使用SHAP工具包对pytorch的图像分类模型进行可解释分析

Part 1: Interpretable machine learning based on Shapley values

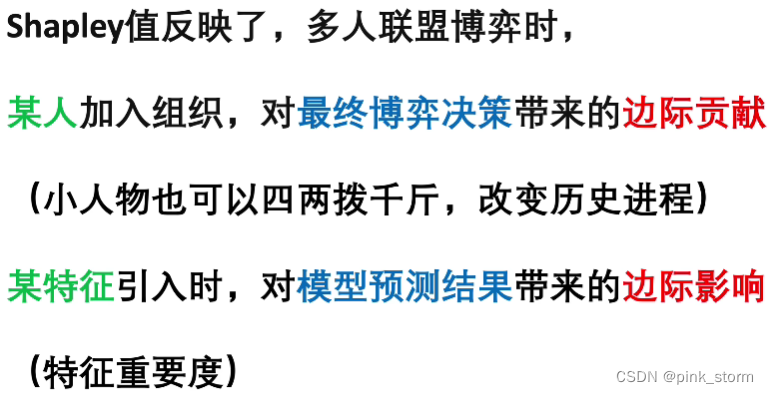

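- What is a Shapley value? The Shapley value from game theory

In machine learning, the Shapley value measures how much each feature contributes to the model's prediction for one specific sample.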
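To make the definition concrete, here is a minimal brute-force sketch that computes exact Shapley values for one sample by enumerating every coalition of features. It assumes a common convention that is not stated in the original text: a feature "absent" from a coalition is replaced by a baseline value. The names `shapley_values`, `model`, `x`, and `baseline` are illustrative. The cost grows as 2^n in the number of features, which is why practical tools such as shap rely on approximations.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values of model(x), enumerating all feature coalitions.

    Features outside a coalition take their baseline value
    (an assumed convention for 'missing' features).
    """
    n = len(x)

    def value(coalition):
        # keep the coalition's features from x, use the baseline for the rest
        z = [x[k] if k in coalition else baseline[k] for k in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                # Shapley weight |S|! (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# toy model with an interaction term between features 0 and 1
def model(z):
    return 3 * z[0] + 2 * z[1] + z[0] * z[1] - z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi)  # approximately [4.0, 5.0, -3.0]; the x0*x1 term (worth 2) is split equally
```

The values add up to model(x) - model(baseline) = 6, the "efficiency" property that lets a Shapley explanation be read as a breakdown of one prediction.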

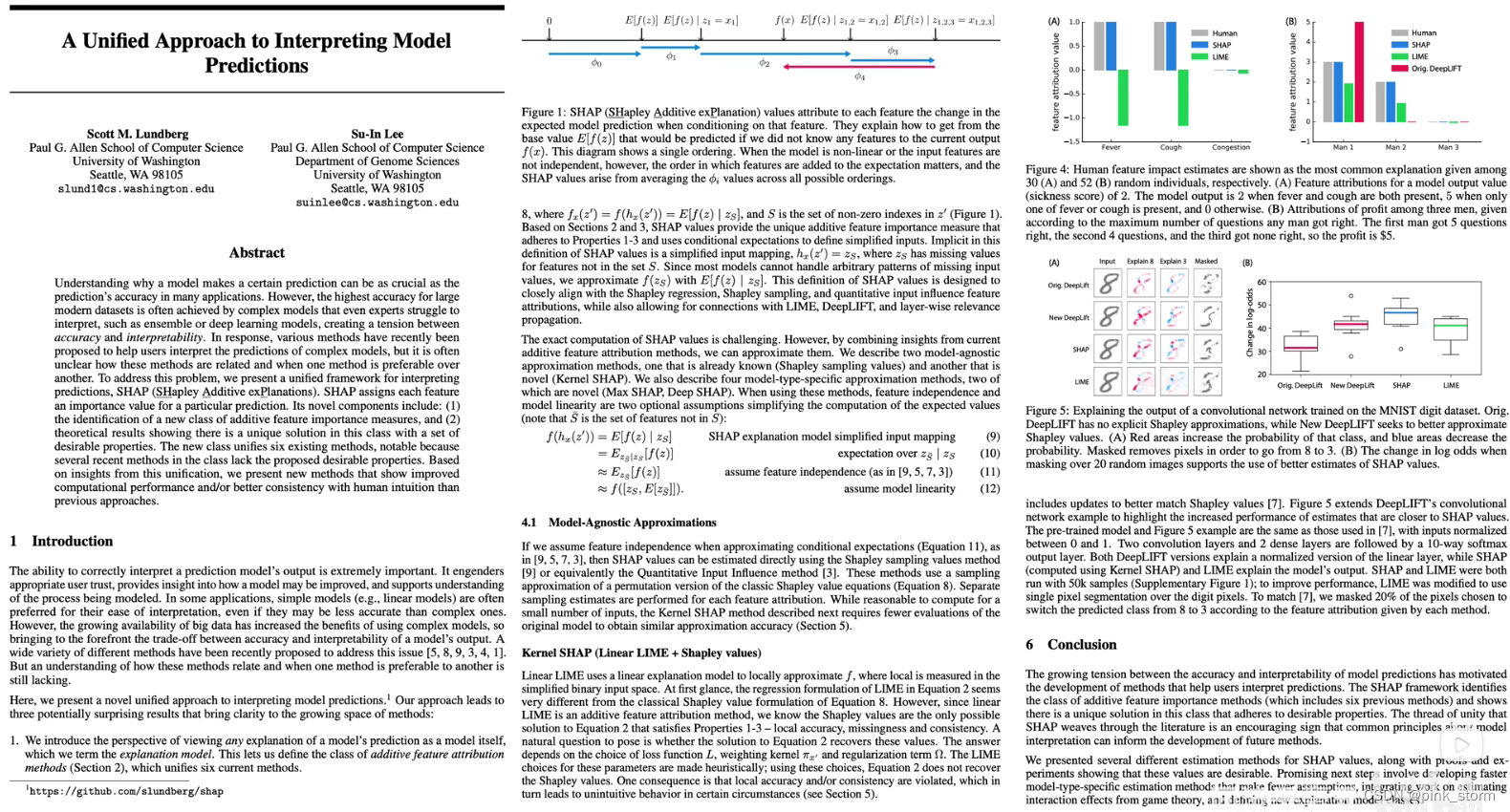

Related papers:

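- Introducing the shap toolkit

This is only a brief overview; see the full lecture for details.

Suppose a model performs well on the test set.

But has it actually learned the key features, and does its reasoning agree with human common sense? Answering that requires interpretability analysis.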

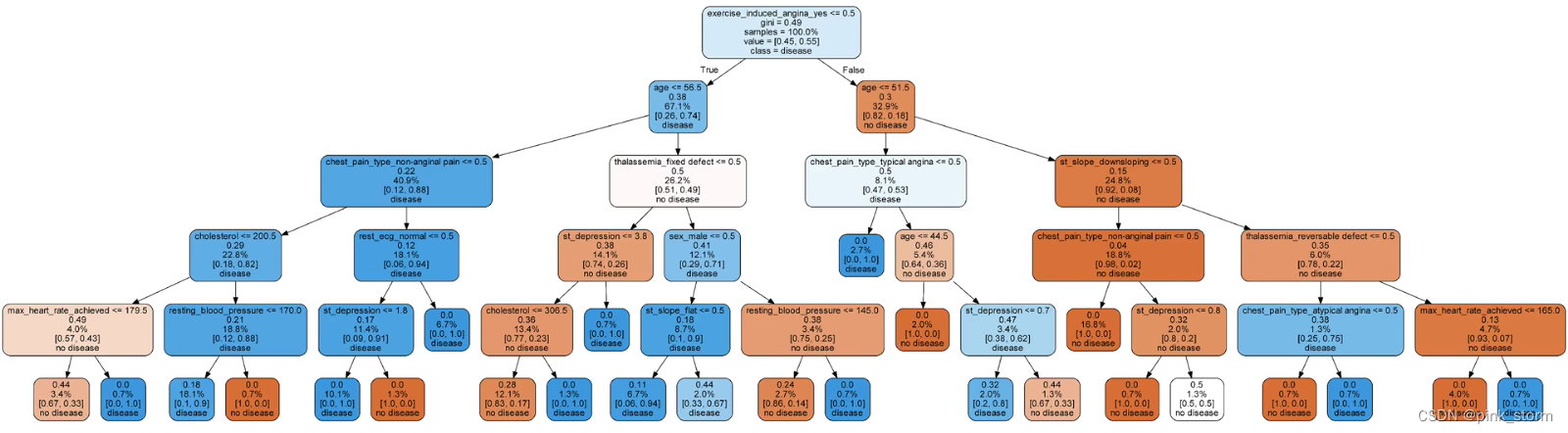

One option is to choose a model that is inherently interpretable, such as a decision tree.

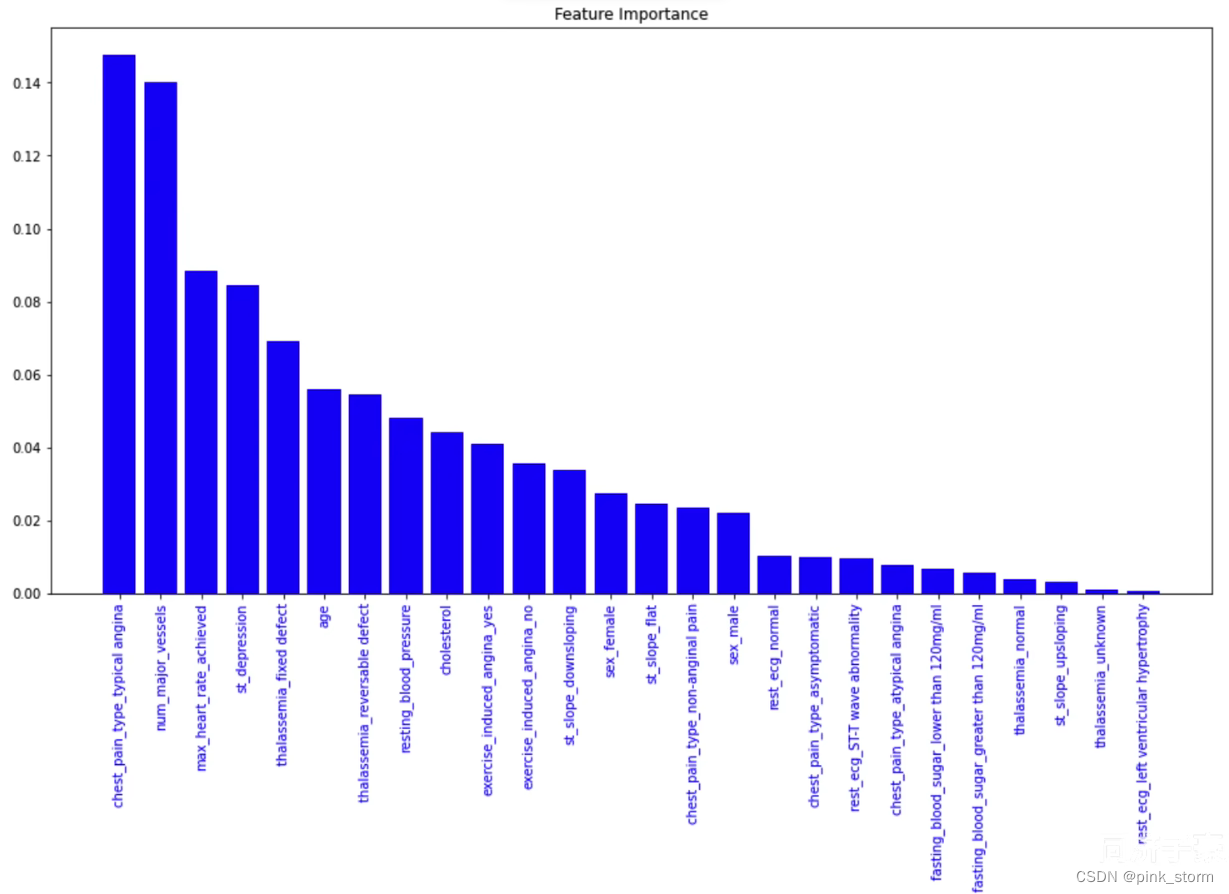

Feature importance in a random forest

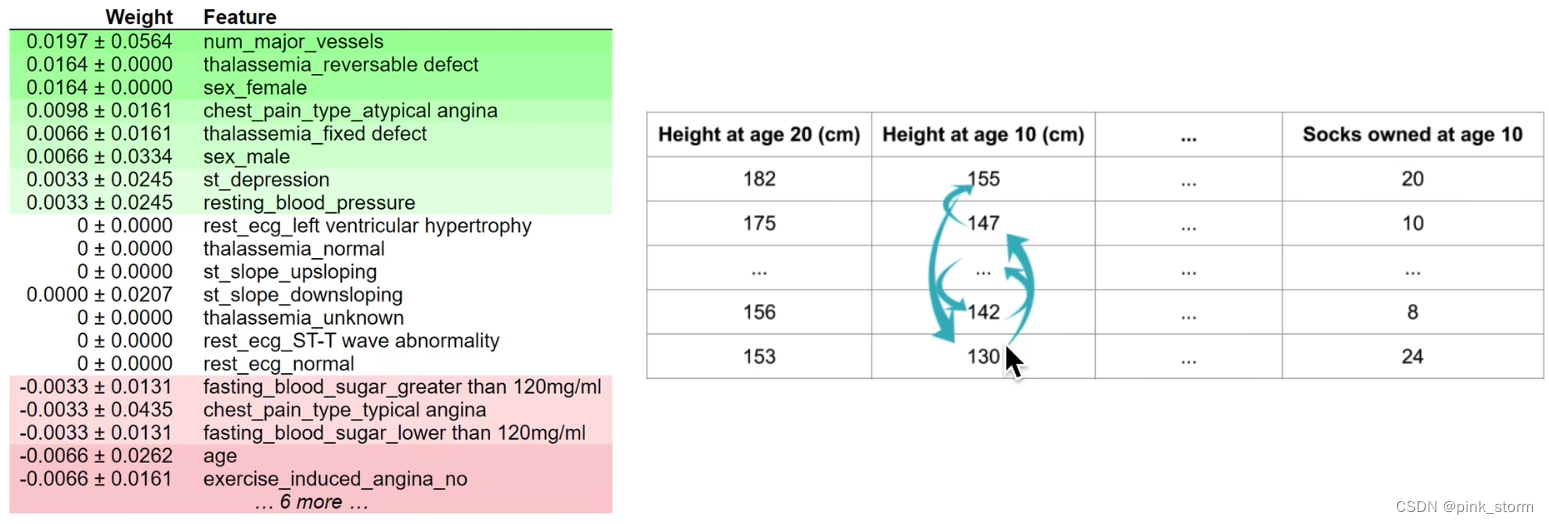

Permutation feature importance
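Permutation feature importance can be sketched in a few lines: shuffle one feature column, re-score the model, and record how much the score drops. The sketch below assumes a fitted model exposed as a plain `predict` function and uses accuracy as the score; the toy data and the name `permutation_importance_sketch` are illustrative (scikit-learn provides a full implementation as `sklearn.inspection.permutation_importance`).

```python
import numpy as np


def permutation_importance_sketch(predict, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled."""
    rng = np.random.default_rng(seed)
    base_score = np.mean(predict(X) == y)
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # shuffling column j breaks its link to y but keeps its distribution
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            drops.append(base_score - np.mean(predict(X_perm) == y))
        importances[j] = np.mean(drops)
    return importances


# toy data: the label depends only on feature 0; feature 1 is pure noise
rng = np.random.default_rng(42)
X = rng.normal(size=(500, 2))
y = (X[:, 0] > 0).astype(int)


def predict(X):
    # a "model" that has learned the true rule
    return (X[:, 0] > 0).astype(int)


imp = permutation_importance_sketch(predict, X, y)
print(imp)  # feature 0 shows a large drop (about 0.5); feature 1 shows none
```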

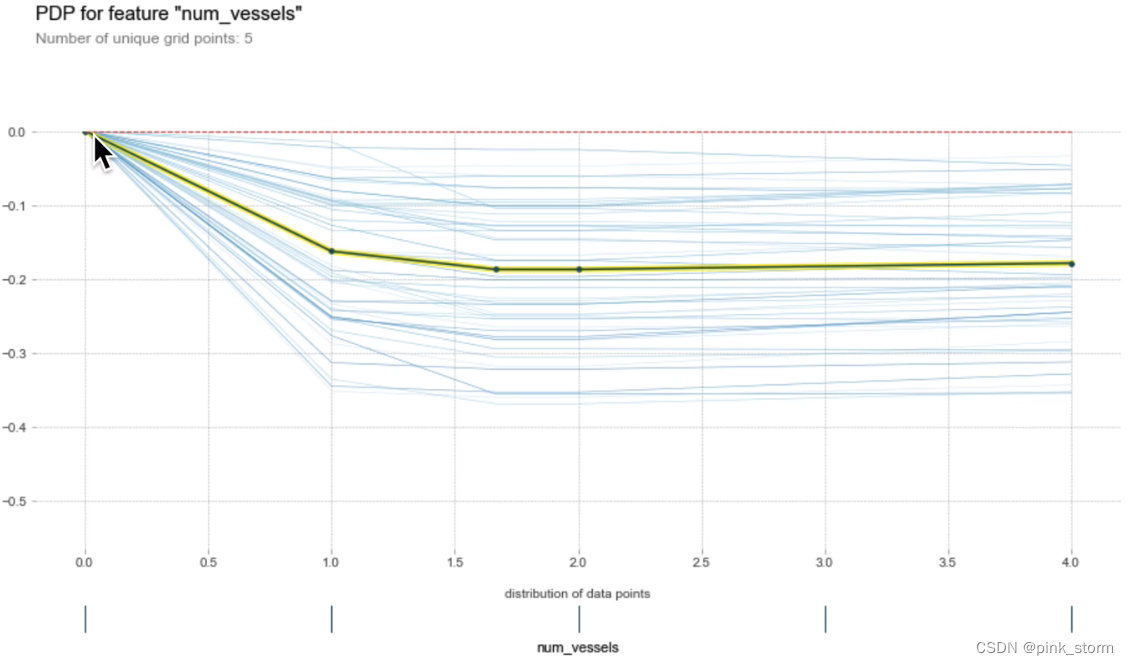

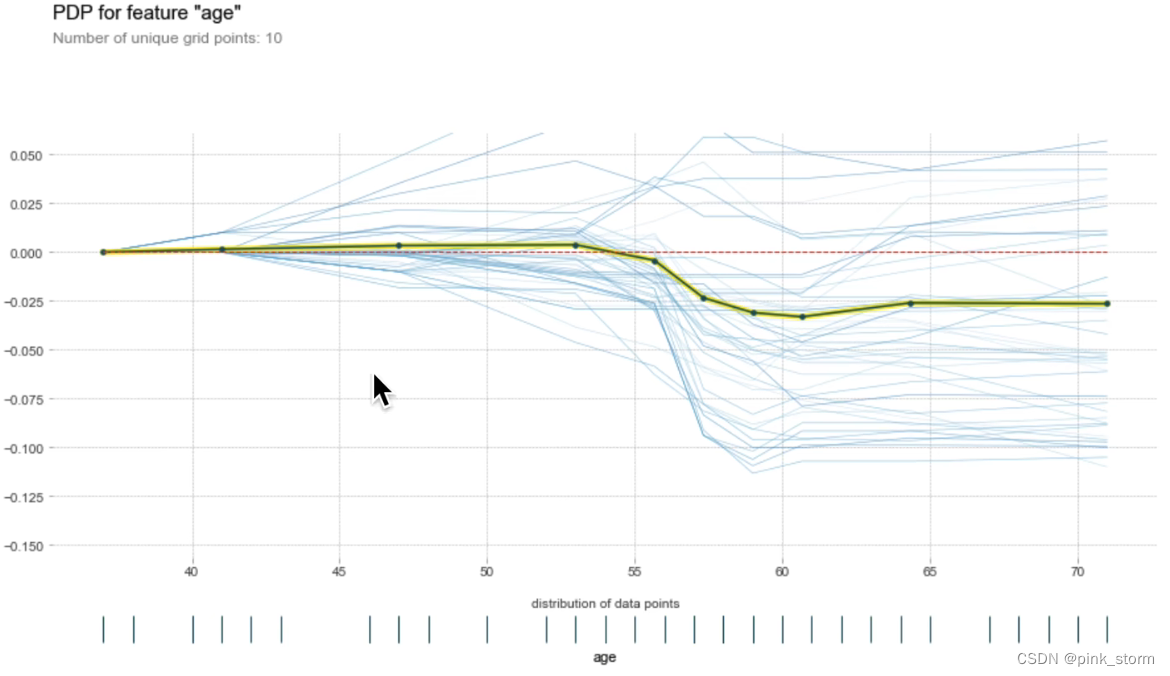

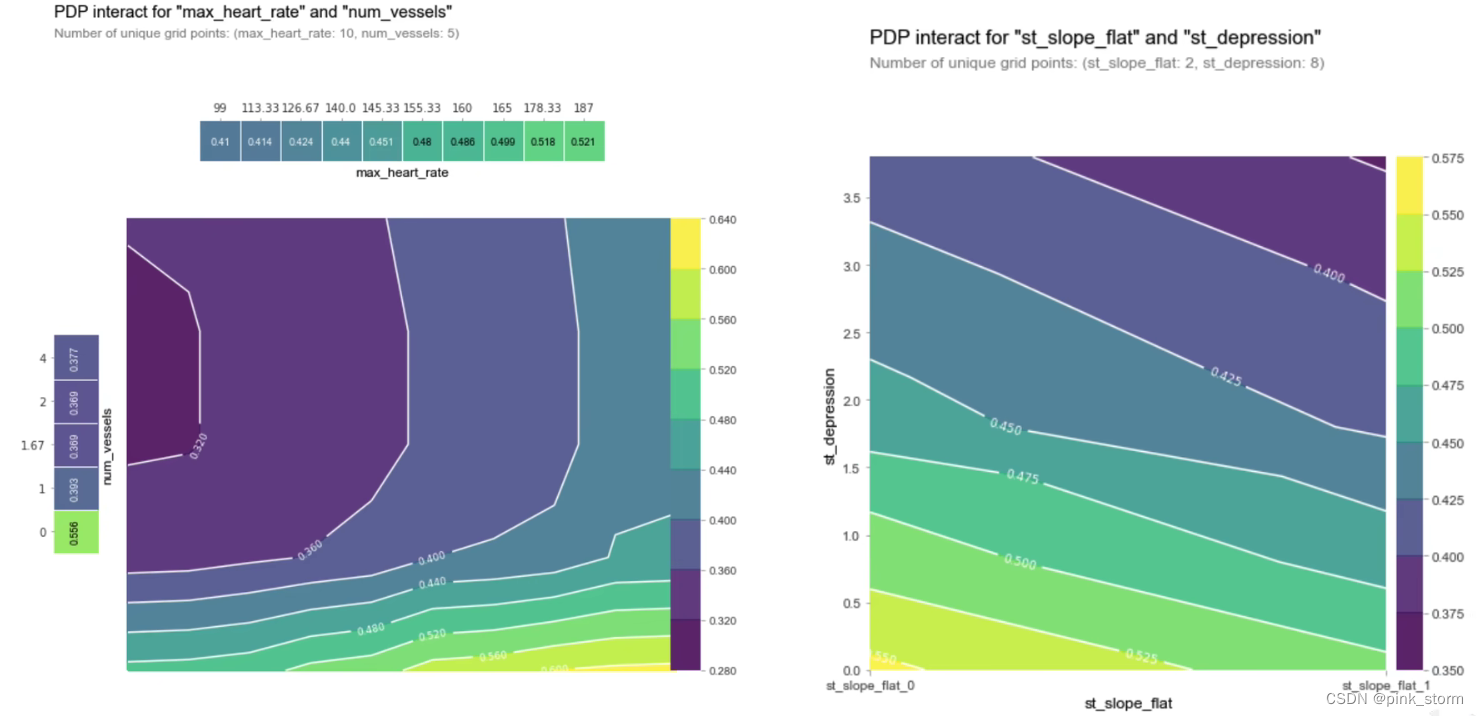

Partial dependence plots (PDP) and individual conditional expectation (ICE) plots
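The computation behind both plots is simple: an ICE curve sets one feature to each grid value for a single sample while leaving its other features unchanged, and the PDP is the average of all ICE curves. The NumPy sketch below uses a made-up model in which feature 0 pushes the output up or down depending on the sign of feature 1. It shows how the PDP can average opposite effects into a flat line that only the ICE curves reveal. The function and toy model names are illustrative.

```python
import numpy as np


def ice_and_pdp(predict, X, feature, grid):
    """ICE curves (one row per sample) and their average, the PDP, for one feature."""
    ice = np.empty((X.shape[0], len(grid)))
    for k, value in enumerate(grid):
        X_mod = X.copy()
        X_mod[:, feature] = value  # force the feature to this grid value for every sample
        ice[:, k] = predict(X_mod)
    pdp = ice.mean(axis=0)  # PDP = average of the ICE curves
    return ice, pdp


# toy model: the effect of feature 0 flips with the sign of feature 1
def predict(X):
    return X[:, 0] * np.sign(X[:, 1])


X = np.array([[0.0, 1.0], [0.0, -1.0], [0.0, 2.0], [0.0, -2.0]])
grid = np.linspace(-1, 1, 5)
ice, pdp = ice_and_pdp(predict, X, feature=0, grid=grid)
print(ice)  # two rising and two falling lines
print(pdp)  # flat at 0: the opposite effects cancel in the average
```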

Part 3: Using the SHAP toolkit to interpret PyTorch image classification models

A - Installing and configuring the environment

```python
## Install the shap toolkit and other dependencies
!pip install numpy pandas matplotlib requests tqdm opencv-python pillow shap tensorflow keras -i https://pypi.tuna.tsinghua.edu.cn/simple

## Verify that shap is installed
import shap

## Install PyTorch
!pip3 install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu113

## Create directories (exist_ok=True lets the cell be rerun safely)
import os

# test images
os.makedirs('test_img', exist_ok=True)

# result files
os.makedirs('output', exist_ok=True)

# annotation / metadata files
os.makedirs('data', exist_ok=True)

## Download a Chinese font file
!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/dataset/SimHei.ttf -P data

## Download the ImageNet-1000 class information
!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/dataset/meta_data/imagenet_class_index.csv -P data

## Download the trained fruit image classification model and its class names
# sample model checkpoint
!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/checkpoints/fruit30_pytorch_20220814.pth -P checkpoint

# mapping dictionaries between class names and ID indices
!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/dataset/fruit30/labels_to_idx.npy -P data
!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/dataset/fruit30/idx_to_labels.npy -P data

!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220919-explain/imagenet_class_index.json -P data

!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/dataset/fruit30/idx_to_labels_en.npy -P data

## Download test images into the test_img folder
# Border collie, source: https://www.woopets.fr/assets/races/000/066/big-portrait/border-collie.jpg
!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/test/border-collie.jpg -P test_img

!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/test/cat_dog.jpg -P test_img

!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/test/0818/room_video.mp4 -P test_img

!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/test/swan-3299528_1280.jpg -P test_img

# Strawberry image, source: https://www.pexels.com/zh-cn/photo/4828489/
!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/test/0818/test_草莓.jpg -P test_img

!wget https://zihao-openmmlab.obs.myhuaweicloud.com/20220716-mmclassification/test/0818/test_fruits.jpg -P test_img

!wget https://zihao-openmmlab.obs.myhuaweicloud.com/20220716-mmclassification/test/0818/test_orange_2.jpg -P test_img

!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/test/banana-kiwi.png -P test_img
```

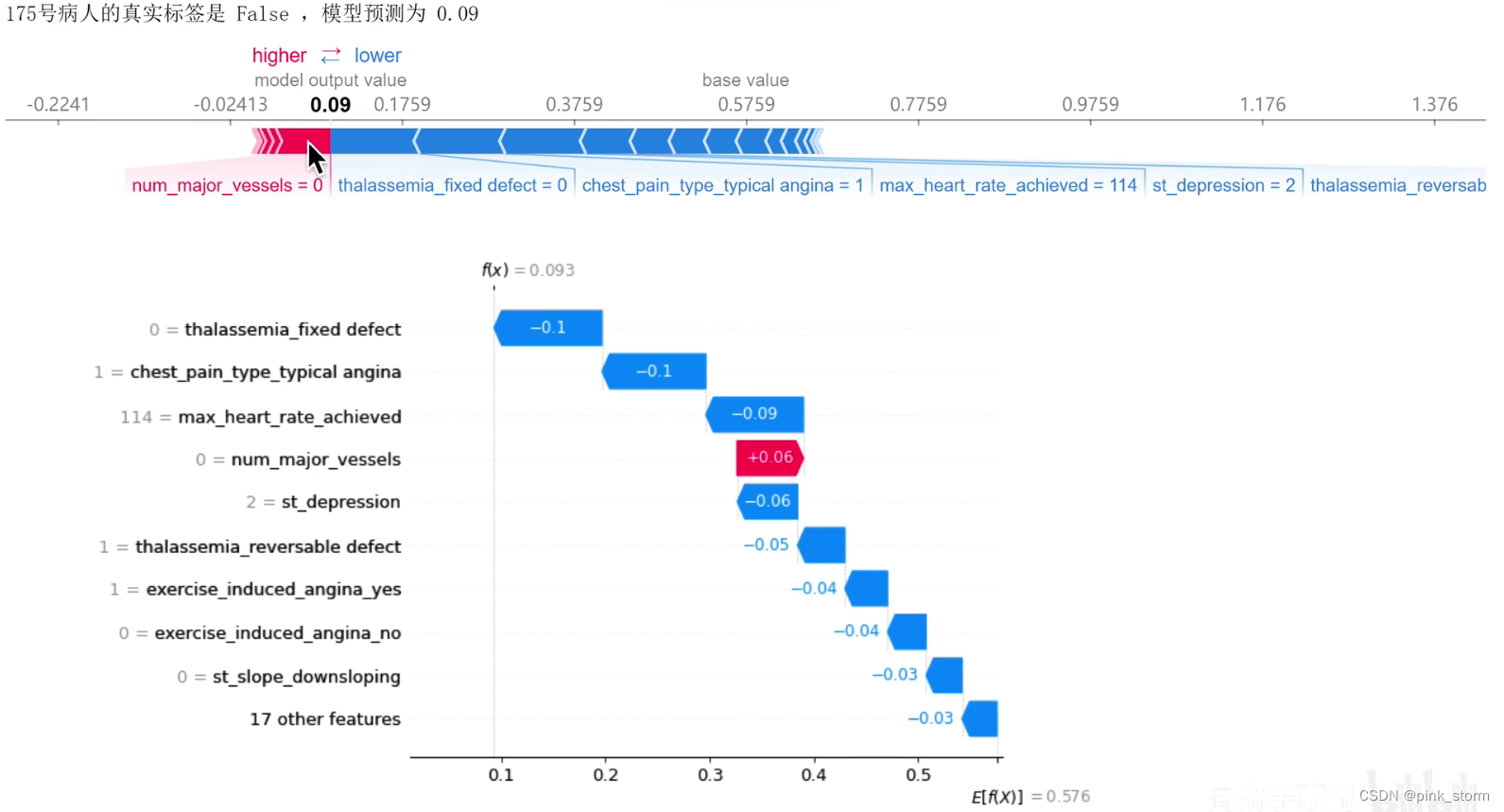

```python
## Configure a Chinese font for matplotlib
import matplotlib.pyplot as plt
%matplotlib inline

# # Windows
# plt.rcParams['font.sans-serif']=['SimHei'] # display Chinese labels correctly
# plt.rcParams['axes.unicode_minus']=False # display minus signs correctly

# macOS, see https://www.ngui.cc/51cto/show-727683.html
# download the simhei.ttf font file
# !wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/dataset/SimHei.ttf

# Linux, e.g. the cloud GPU platform https://featurize.cn/?s=d7ce99f842414bfcaea5662a97581bd1
# If you get "Unable to establish SSL connection.", simply rerun this cell.
# The target path below is specific to that platform's Python 3.7 environment;
# on other machines (e.g. Kaggle), locate mpl-data with matplotlib.get_data_path().
!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/dataset/SimHei.ttf -O /environment/miniconda3/lib/python3.7/site-packages/matplotlib/mpl-data/fonts/ttf/SimHei.ttf --no-check-certificate
!rm -rf /home/featurize/.cache/matplotlib

import matplotlib
import matplotlib.pyplot as plt
%matplotlib inline
matplotlib.rc("font",family='SimHei') # Chinese font
plt.rcParams['axes.unicode_minus']=False # display minus signs correctly

# the labels below are intentionally Chinese, to test the font
plt.plot([1,2,3], [100,500,300])
plt.title('matplotlib中文字体测试', fontsize=25)
plt.xlabel('X轴', fontsize=15)
plt.ylabel('Y轴', fontsize=15)
plt.show()
```

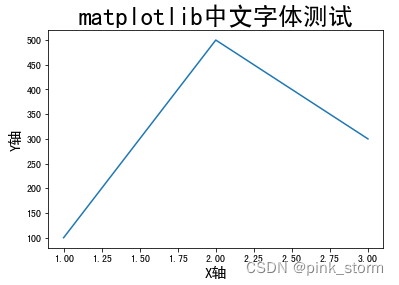

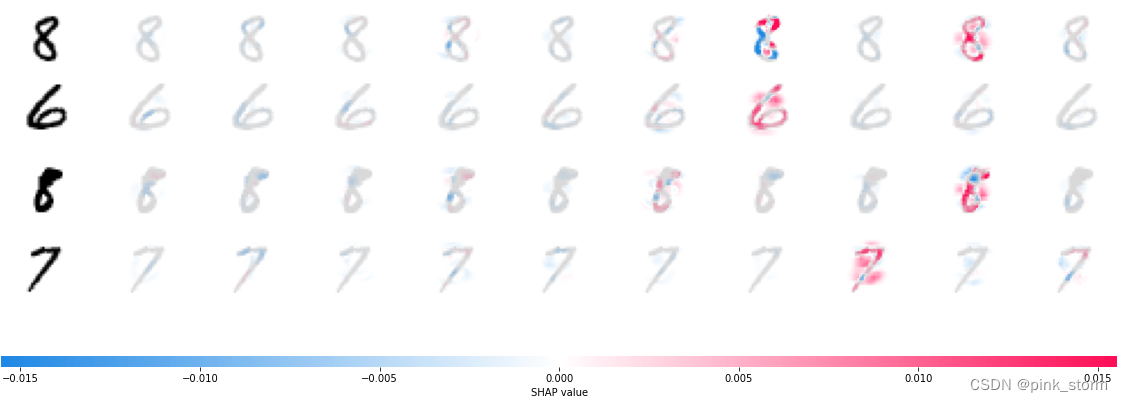

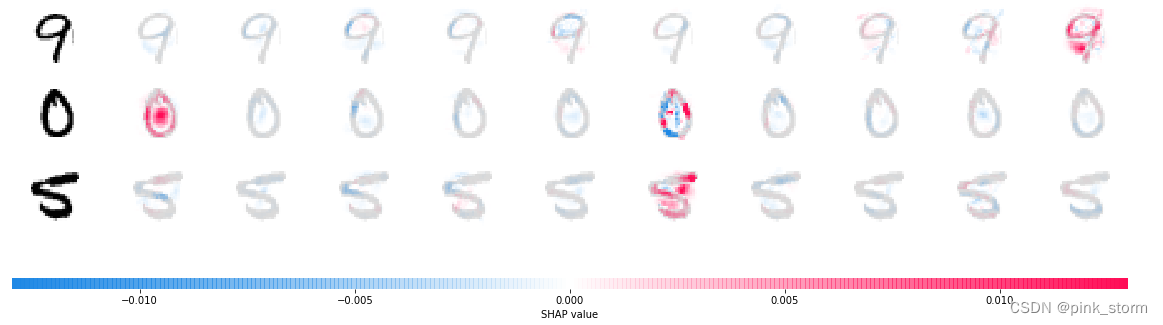

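B - PyTorch MNIST classification: interpretability analysis

Build a simple convolutional neural network in PyTorch, train it on the MNIST handwritten-digit dataset, and use shap's DeepExplainer to interpret it.

The goal is to visualize how each pixel of each image influences the model's prediction for each class.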

```python
## Import packages
import torch, torchvision
from torchvision import datasets, transforms
from torch import nn, optim
from torch.nn import functional as F

import numpy as np
import shap

## Build a convolutional neural network in PyTorch
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()

        self.conv_layers = nn.Sequential(
            nn.Conv2d(1, 10, kernel_size=5),
            nn.MaxPool2d(2),
            nn.ReLU(),
            nn.Conv2d(10, 20, kernel_size=5),
            nn.Dropout(),
            nn.MaxPool2d(2),
            nn.ReLU(),
        )
        self.fc_layers = nn.Sequential(
            nn.Linear(320, 50),
            nn.ReLU(),
            nn.Dropout(),
            nn.Linear(50, 10),
            nn.Softmax(dim=1)
        )

    def forward(self, x):
        x = self.conv_layers(x)
        x = x.view(-1, 320)  # 20 channels x 4 x 4 = 320 features
        x = self.fc_layers(x)
        return x

## Initialize the model
model = Net()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

## Load the MNIST dataset
train_dataset = datasets.MNIST('mnist_data',
                               train=True,
                               download=True,
                               transform=transforms.Compose([transforms.ToTensor()]))

test_dataset = datasets.MNIST('mnist_data',
                              train=False,
                              download=True,
                              transform=transforms.Compose([transforms.ToTensor()]))

batch_size = 256
train_loader = torch.utils.data.DataLoader(
    train_dataset,
    batch_size=batch_size,
    shuffle=True)

test_loader = torch.utils.data.DataLoader(
    test_dataset,
    batch_size=batch_size,
    shuffle=True)

## Train the model
num_epochs = 5
device = torch.device('cpu')

def train(model, device, train_loader, optimizer, epoch):
    # train for one epoch
    model.train()
    for batch_idx, (data, target) in enumerate(train_loader):
        data, target = data.to(device), target.to(device)
        optimizer.zero_grad()
        output = model(data)
        # the network outputs probabilities, so take the log before nll_loss
        loss = F.nll_loss(output.log(), target)
        loss.backward()
        optimizer.step()
        if batch_idx % 100 == 0:
            print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                epoch, batch_idx * len(data), len(train_loader.dataset),
                100. * batch_idx / len(train_loader), loss.item()))

def test(model, device, test_loader):
    # evaluate on the whole test set
    model.eval()
    test_loss = 0
    correct = 0
    with torch.no_grad():
        for data, target in test_loader:
            data, target = data.to(device), target.to(device)
            output = model(data)
            test_loss += F.nll_loss(output.log(), target, reduction='sum').item()  # sum up batch loss
            pred = output.max(1, keepdim=True)[1]  # index of the highest probability
            correct += pred.eq(target.view_as(pred)).sum().item()

    test_loss /= len(test_loader.dataset)
    print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
        test_loss, correct, len(test_loader.dataset),
        100. * correct / len(test_loader.dataset)))

for epoch in range(1, num_epochs + 1):
    train(model, device, train_loader, optimizer, epoch)
    test(model, device, test_loader)

## Get background samples and test samples
images, labels = next(iter(test_loader))

images.shape  # torch.Size([256, 1, 28, 28])

# background image samples
background = images[:250]

# test image samples
test_images = images[250:254]

## Initialize DeepExplainer
e = shap.DeepExplainer(model, background)

## Compute the SHAP value of every pixel of every test image for every class
shap_values = e.shap_values(test_images)

# SHAP values for class 1: all test images, every pixel
print(shap_values[1].shape)
```

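Output: (4, 1, 28, 28). (Newer shap releases may instead return a single array with the class as the last axis, in which case the indexing above and below needs adjusting.)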

```python
## Rearrange tensor dimensions from (N, C, H, W) to (N, H, W, C) for plotting
# SHAP values
shap_numpy = [np.swapaxes(np.swapaxes(s, 1, -1), 1, 2) for s in shap_values]

# test images
test_numpy = np.swapaxes(np.swapaxes(test_images.numpy(), 1, -1), 1, 2)

## Visualize
shap.image_plot(shap_numpy, -test_numpy)
```
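The double `swapaxes` above moves the channel axis from position 1 to the end, turning (N, C, H, W) into (N, H, W, C), the channels-last layout passed to `shap.image_plot`. A quick NumPy check on a random array with the same shape as the MNIST SHAP values, (4, 1, 28, 28), confirms it is equivalent to a single `transpose`:

```python
import numpy as np

s = np.random.default_rng(0).normal(size=(4, 1, 28, 28))  # (N, C, H, W)

# the two-step swap used above: (N, C, H, W) -> (N, W, H, C) -> (N, H, W, C)
swapped = np.swapaxes(np.swapaxes(s, 1, -1), 1, 2)

# the same result with one explicit transpose
transposed = np.transpose(s, (0, 2, 3, 1))

print(swapped.shape)                        # (4, 28, 28, 1)
print(np.array_equal(swapped, transposed))  # True
```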

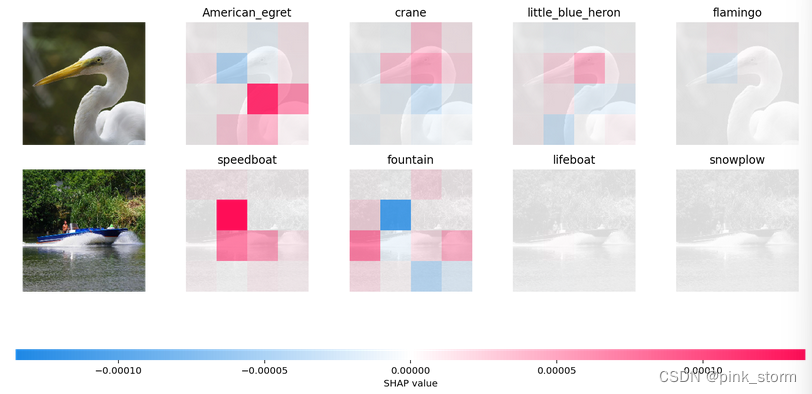

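The plot shows, for each test image, the SHAP value of every pixel with respect to each of the 10 classes.

Red indicates a positive SHAP value: the pixel pushes the model toward predicting that class.

Blue indicates a negative SHAP value: the pixel pushes the model away from predicting that class.

In this way the model shows us what it considers to distinguish a 7 from a 9, a 2 from a 3, and so on.

A pixel can strongly affect a class whether its value is high or low: the absence of ink in a region can be as informative as its presence.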

"Neither money nor you matters to me. Not having you, that matters to me."

(A line from the film "Let the Bullets Fly")

Further reading:

This part is covered in a separate article.

https://github.com/slundberg/shap/tree/master/notebooks/image_examples/image_classification

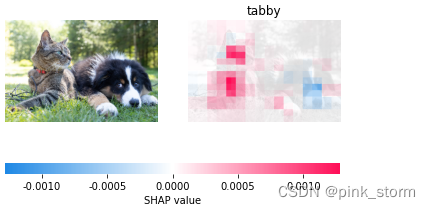

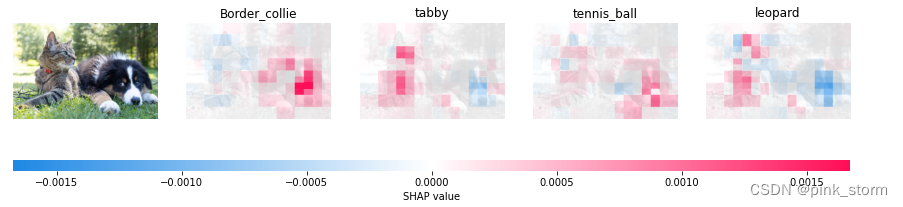

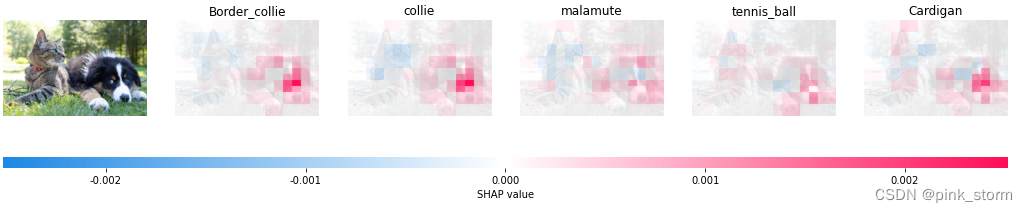

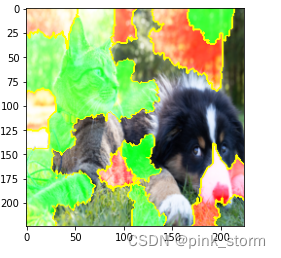

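C1 - PyTorch: interpretability analysis of a pretrained ImageNet image classifier

Interpret a PyTorch image classification model pretrained on ImageNet and visualize SHAP-value heatmaps for specified predicted classes.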

```python
## Import packages
import json
import numpy as np
from PIL import Image
import torch
import torch.nn as nn
import torchvision
from torchvision import transforms
import shap

# Use the GPU if available, otherwise the CPU
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
print('device', device)

## Load an ImageNet-pretrained image classifier
# (newer torchvision versions deprecate pretrained=True in favor of the weights= argument)
model = torchvision.models.mobilenet_v2(pretrained=True, progress=False).eval().to(device)

## Load the ImageNet-1000 class names
with open('data/imagenet_class_index.json') as file:
    class_names = [v[1] for v in json.load(file).values()]

## Load a test image as an (N, H, W, C) tensor
img_path = 'test_img/cat_dog.jpg'

img_pil = Image.open(img_path)
X = torch.Tensor(np.array(img_pil)).unsqueeze(0)

## Preprocessing
mean = [0.485, 0.456, 0.406]
std = [0.229, 0.224, 0.225]

def nhwc_to_nchw(x: torch.Tensor) -> torch.Tensor:
    # move channels to dim 1 (4-D) or dim 0 (3-D) unless they are already there
    if x.dim() == 4:
        x = x if x.shape[1] == 3 else x.permute(0, 3, 1, 2)
    elif x.dim() == 3:
        x = x if x.shape[0] == 3 else x.permute(2, 0, 1)
    return x

def nchw_to_nhwc(x: torch.Tensor) -> torch.Tensor:
    # move channels to the last dim unless they are already there
    if x.dim() == 4:
        x = x if x.shape[3] == 3 else x.permute(0, 2, 3, 1)
    elif x.dim() == 3:
        x = x if x.shape[2] == 3 else x.permute(1, 2, 0)
    return x

transform = [
    transforms.Lambda(nhwc_to_nchw),
    transforms.Resize(224),
    transforms.Lambda(lambda x: x * (1 / 255)),
    transforms.Normalize(mean=mean, std=std),
    transforms.Lambda(nchw_to_nhwc),
]

# inverse of the normalization: recovers the image (in the 0-1 range) for display
inv_transform = [
    transforms.Lambda(nhwc_to_nchw),
    transforms.Normalize(
        mean=(-1 * np.array(mean) / np.array(std)).tolist(),
        std=(1 / np.array(std)).tolist()
    ),
    transforms.Lambda(nchw_to_nhwc),
]

transform = torchvision.transforms.Compose(transform)
inv_transform = torchvision.transforms.Compose(inv_transform)
```
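`inv_transform` works because `Normalize` computes (x - mean) / std per channel, so normalizing a second time with mean' = -mean/std and std' = 1/std gives (y + mean/std) * std = y * std + mean, which is the original x. A NumPy check of that algebra, with random values standing in for a channels-last image:

```python
import numpy as np

mean = np.array([0.485, 0.456, 0.406])
std = np.array([0.229, 0.224, 0.225])


def normalize(x, m, s):
    # same formula as transforms.Normalize, here broadcast over the last (channel) axis
    return (x - m) / s


x = np.random.default_rng(0).uniform(size=(224, 224, 3))  # H, W, C in [0, 1]
y = normalize(x, mean, std)                               # forward transform
x_back = normalize(y, -mean / std, 1 / std)               # inverse, as in inv_transform

print(np.allclose(x, x_back))  # True
```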

```python
## Build the model prediction function (takes an (N, H, W, C) array)
def predict(img: np.ndarray) -> torch.Tensor:
    img = nhwc_to_nchw(torch.Tensor(img)).to(device)
    output = model(img)
    return output

Xtr = transform(X)
out = predict(Xtr[0:1])

classes = torch.argmax(out, axis=1).detach().cpu().numpy()
print(f'Classes: {classes}: {np.array(class_names)[classes]}')
```

Output: Classes: [232]: ['Border_collie']

```python
## Set up the shap explanation algorithm
# input image
input_img = Xtr[0].unsqueeze(0)

batch_size = 50
n_evals = 5000  # more evaluations give finer-grained saliency maps but take longer

# masker that covers local regions of the input image (here by blurring them)
masker_blur = shap.maskers.Image("blur(64, 64)", Xtr[0].shape)

# create the explainer
explainer = shap.Explainer(predict, masker_blur, output_names=class_names)

## Explain a single predicted class
# 281: tabby cat
shap_values = explainer(input_img, max_evals=n_evals, batch_size=batch_size, outputs=[281])

# rearrange tensor dimensions
shap_values.data = inv_transform(shap_values.data).cpu().numpy()[0]  # original image
shap_values.values = [val for val in np.moveaxis(shap_values.values[0], -1, 0)]  # SHAP heatmap

# visualize
shap.image_plot(shap_values=shap_values.values,
                pixel_values=shap_values.data,
                labels=shap_values.output_names)
```

```python
## Explain several specified classes
# 232: border collie
# 281: tabby cat
# 852: tennis ball
# 288: leopard
shap_values = explainer(input_img, max_evals=n_evals, batch_size=batch_size, outputs=[232, 281, 852, 288])

# shape of the SHAP values: one value per pixel per requested class
shap_values.shape

# rearrange tensor dimensions
shap_values.data = inv_transform(shap_values.data).cpu().numpy()[0]  # original image
shap_values.values = [val for val in np.moveaxis(shap_values.values[0], -1, 0)]  # one SHAP heatmap per class

# visualize
shap.image_plot(shap_values=shap_values.values,
                pixel_values=shap_values.data,
                labels=shap_values.output_names)
```

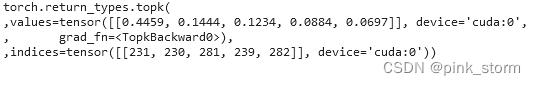

```python
## Explain the top-k predicted classes
topk = 5
shap_values = explainer(input_img, max_evals=n_evals, batch_size=batch_size, outputs=shap.Explanation.argsort.flip[:topk])

# shape of the SHAP values: one value per pixel per class
shap_values.shape

# rearrange tensor dimensions
shap_values.data = inv_transform(shap_values.data).cpu().numpy()[0]  # original image
shap_values.values = [val for val in np.moveaxis(shap_values.values[0], -1, 0)]  # one SHAP heatmap per class

# number of per-class SHAP heatmaps
len(shap_values.values)

# SHAP heatmap of the first class
shap_values.values[0].shape

# visualize
shap.image_plot(shap_values=shap_values.values,
                pixel_values=shap_values.data,
                labels=shap_values.output_names)
```
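`outputs=shap.Explanation.argsort.flip[:topk]` asks shap to explain the `topk` classes with the highest model output. For intuition, the equivalent selection on a single output vector in plain NumPy looks like this (the scores are made-up numbers, not real model output):

```python
import numpy as np

logits = np.array([0.1, 2.5, -1.0, 3.7, 0.9, 2.4])  # hypothetical scores for 6 classes
topk = 3

# argsort is ascending, so reverse it ("flip") and keep the first k indices
top_classes = np.argsort(logits)[::-1][:topk]
print(top_classes)  # [3 1 5]
```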

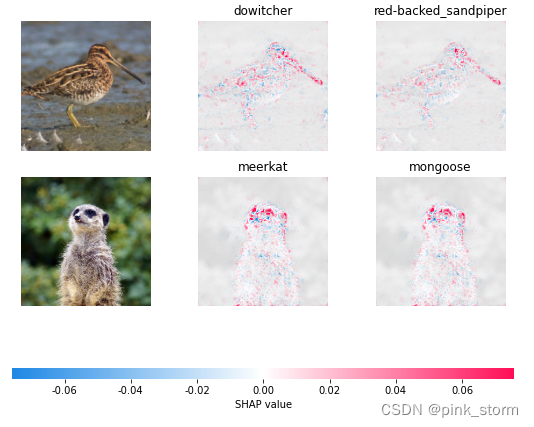

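D1 - PyTorch: interpretability analysis of an intermediate layer of a pretrained VGG

Use shap's GradientExplainer to compute SHAP values for the output of an intermediate layer of a pretrained VGG16 model.

This example takes a particularly long time to run.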

```python
## Import packages
import torch, torchvision
from torch import nn
from torchvision import transforms, models, datasets
import shap
import json
import numpy as np

## Load the model
model = models.vgg16(pretrained=True).eval()

## Load the data and define preprocessing
mean = [0.485, 0.456, 0.406]
std = [0.229, 0.224, 0.225]

def normalize(image):
    if image.max() > 1:
        image /= 255
    image = (image - mean) / std
    # in addition, roll the axes from (N, H, W, C) to (N, C, H, W) to suit PyTorch
    return torch.tensor(image.swapaxes(-1, 1).swapaxes(2, 3)).float()

## Select the test images
X, y = shap.datasets.imagenet50()

X /= 255

to_explain = X[[39, 41]]

## Load the class names and indices
url = "https://s3.amazonaws.com/deep-learning-models/image-models/imagenet_class_index.json"
fname = shap.datasets.cache(url)
with open(fname) as f:
    class_names = json.load(f)

## Compute SHAP values of the intermediate layer with respect to the input images
# intermediate layer to explain
layer_index = 7

# number of samples; 200 takes about 5 minutes
samples = 200

e = shap.GradientExplainer((model, model.features[layer_index]), normalize(X))
shap_values, indexes = e.shap_values(normalize(to_explain), ranked_outputs=2, nsamples=samples)

## Names of the predicted classes
index_names = np.vectorize(lambda x: class_names[str(x)][1])(indexes)
print(index_names)

## Visualize: (N, C, H, W) -> (N, H, W, C)
shap_values = [np.swapaxes(np.swapaxes(s, 2, 3), 1, -1) for s in shap_values]

shap.image_plot(shap_values, to_explain, index_names)
```

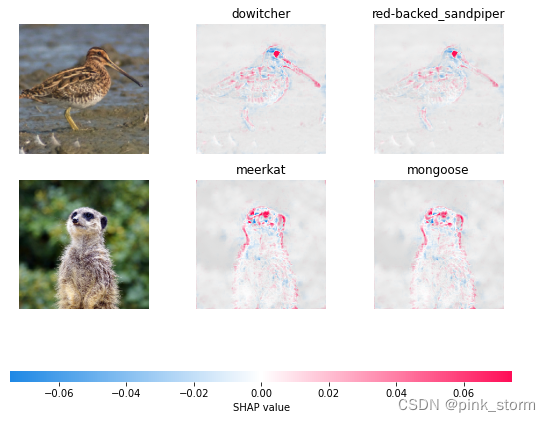

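Adding local smoothing on the image

The expected gradients computed by GradientExplainer combine ideas from Integrated Gradients, SHAP, and SmoothGrad. To enable smoothing, set the `local_smoothing` parameter to a nonzero value: Gaussian noise is then added to the input image while the expectation is computed, which yields a smoother saliency map.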
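The idea can be illustrated without a neural network. The NumPy sketch below is a simplified Monte Carlo estimate of expected gradients for one input, not shap's implementation. Each sample picks a random background input as the baseline and a random point on the straight path from that baseline to the input. When `local_smoothing` is nonzero it adds Gaussian noise with that standard deviation (the SmoothGrad part). It then multiplies the gradient at that point by (input - baseline). The function name and the toy models with hand-written gradients are illustrative assumptions.

```python
import numpy as np


def expected_gradients(grad_fn, x, background, n_samples=2000, local_smoothing=0.0, seed=0):
    """Monte Carlo estimate of expected-gradients attributions for one input x.

    grad_fn: returns the model's gradient at a point (same shape as x)
    background: reference inputs, shape (m, d); each sample picks one as the baseline
    local_smoothing: std of Gaussian noise added to each path point (0 disables it)
    """
    rng = np.random.default_rng(seed)
    total = np.zeros(x.shape, dtype=float)
    for _ in range(n_samples):
        ref = background[rng.integers(len(background))]  # random baseline x'
        alpha = rng.uniform()                            # random position on the path
        z = ref + alpha * (x - ref)
        if local_smoothing > 0:
            z = z + rng.normal(scale=local_smoothing, size=z.shape)
        total += (x - ref) * grad_fn(z)
    return total / n_samples


# toy model f(z) = sum(z**2), whose gradient is 2z
def f(z):
    return np.sum(z ** 2)


def grad_f(z):
    return 2 * z


x = np.array([1.0, -2.0, 0.5])
background = np.zeros((1, 3))  # a single all-zero baseline

phi = expected_gradients(grad_f, x, background, n_samples=20000, local_smoothing=0.1)
print(phi)        # close to x**2 = [1.0, 4.0, 0.25]
print(phi.sum())  # close to f(x) - f(baseline) = 5.25
```

Because the noise has zero mean, it smooths the gradients without biasing the attributions in this toy case; they still add up, in expectation, to the difference between the output at the input and at the baseline.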

```python
# Compute SHAP values of the intermediate layer with local smoothing
explainer = shap.GradientExplainer((model, model.features[layer_index]), normalize(X), local_smoothing=0.5)
shap_values, indexes = explainer.shap_values(normalize(to_explain), ranked_outputs=2, nsamples=samples)

# names of the predicted classes
index_names = np.vectorize(lambda x: class_names[str(x)][1])(indexes)

# visualize
shap_values = [np.swapaxes(np.swapaxes(s, 2, 3), 1, -1) for s in shap_values]

shap.image_plot(shap_values, to_explain, index_names)
```

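Saliency maps computed on shallow layers are fine-grained and high-resolution, but they are not class-discriminative.

Saliency maps computed on deep layers have lower resolution, but they are class-discriminative.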

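D2 - TensorFlow: interpretability analysis of a pretrained ResNet50

Interpret the predictions of a ResNet50 image classifier by masking (occluding) local regions of the input image.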
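Masking-based explanations rest on a simple intuition: if hiding a region lowers the score of a class, that region supported the prediction. The NumPy sketch below implements plain occlusion sensitivity: slide a patch, replace it with a fill value, and record the score drop. This is a simpler technique than the SHAP explainer used here, which combines many masked evaluations into Shapley-value estimates. The toy `score` function, the patch size, and the fill value are illustrative assumptions.

```python
import numpy as np


def occlusion_map(score, image, patch=4, fill=0.0):
    """Score drop when each non-overlapping patch x patch block is replaced by `fill`."""
    h, w = image.shape[:2]
    base = score(image)
    heat = np.zeros((h // patch, w // patch))
    for i in range(0, h - patch + 1, patch):
        for j in range(0, w - patch + 1, patch):
            occluded = image.copy()
            occluded[i:i + patch, j:j + patch] = fill  # hide one region
            heat[i // patch, j // patch] = base - score(occluded)
    return heat


# toy "classifier": its score is the mean brightness of the top-left 4x4 corner
def score(img):
    return img[:4, :4].mean()


image = np.ones((8, 8))
heat = occlusion_map(score, image, patch=4)
print(heat)  # only the top-left block matters: [[1, 0], [0, 0]]
```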

```python
## Import packages
import json
import numpy as np
import tensorflow as tf
from tensorflow.keras.applications.resnet50 import ResNet50, preprocess_input
import shap

## Load the pretrained model
model = ResNet50(weights='imagenet')

## Load the dataset
X, y = shap.datasets.imagenet50()

## Build the model prediction function
def f(x):
    # preprocess a copy so the masked images passed in by shap stay unchanged,
    # and use the array returned by preprocess_input
    tmp = preprocess_input(x.copy())
    return model(tmp)

## Build the masker that occludes local regions (filled in by Telea inpainting)
masker = shap.maskers.Image("inpaint_telea", X[0].shape)

## Class names
url = "https://s3.amazonaws.com/deep-learning-models/image-models/imagenet_class_index.json"
with open(shap.datasets.cache(url)) as file:
    class_names = [v[1] for v in json.load(file).values()]

## Create the explainer
explainer = shap.Explainer(f, masker, output_names=class_names)

## Compute SHAP values for the top 4 predicted classes of images 1 and 2
shap_values = explainer(X[1:3], max_evals=100, batch_size=50, outputs=shap.Explanation.argsort.flip[:4])

## Visualize
shap.image_plot(shap_values)
```

If the original image is displayed incorrectly, don't worry: this does not affect the SHAP visualizations in the other panels.

```python
## Finer-grained SHAP computation and visualization
masker_blur = shap.maskers.Image("blur(128,128)", X[0].shape)

explainer_blur = shap.Explainer(f, masker_blur, output_names=class_names)

# more evaluations (5000 instead of 100) give a finer-grained explanation at a higher cost
shap_values_fine = explainer_blur(X[1:3], max_evals=5000, batch_size=50, outputs=shap.Explanation.argsort.flip[:4])

shap.image_plot(shap_values_fine)
```

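Z - Further reading

Prerequisites:

The full image classification workflow: building a dataset, training a model, predicting on new images, evaluating on the test set, interpretability analysis, and edge deployment

Video tutorial: 构建自己的图像分类数据集【两天搞定AI毕设】 (Build your own image classification dataset), bilibili

Code tutorial: https://github.com/TommyZihao/Train_Custom_Dataset

UCI heart disease binary classification with interpretability analysis: 【子豪兄Kaggle】玩转UCI心脏病二分类数据集 (Mastering the UCI heart disease binary classification dataset), bilibili

shap toolkit resources

shap paper: https://proceedings.neurips.cc/paper/2017/file/8a20a8621978632d76c43dfd28b67767-Paper.pdf

DataWhale WeChat article: 6个机器学习可解释性框架! (6 machine learning interpretability frameworks!)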

2. The LIME Toolkit

A - Installing and configuring the environment

```python
## Install packages
!pip install lime scikit-learn numpy pandas matplotlib pillow

## Create directories (exist_ok=True lets the cell be rerun safely)
import os

# test images
os.makedirs('test_img', exist_ok=True)

# model weight files
os.makedirs('checkpoint', exist_ok=True)

## A 30-class fruit image classifier trained by the author
# download the sample model checkpoint
!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/checkpoints/fruit30_pytorch_20220814.pth -P checkpoint

# mapping dictionaries between class names and ID indices
!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/dataset/fruit30/labels_to_idx.npy
!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/dataset/fruit30/idx_to_labels.npy

!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/test/cat_dog.jpg -P test_img

!wget https://zihao-openmmlab.obs.myhuaweicloud.com/20220716-mmclassification/test/0818/test_fruits.jpg -P test_img

!wget https://zihao-openmmlab.obs.myhuaweicloud.com/20220716-mmclassification/test/0818/test_orange_2.jpg -P test_img

!wget https://zihao-openmmlab.obs.myhuaweicloud.com/20220716-mmclassification/test/0818/test_bananan.jpg -P test_img

!wget https://zihao-openmmlab.obs.myhuaweicloud.com/20220716-mmclassification/test/0818/test_kiwi.jpg -P test_img

# Strawberry image, source: https://www.pexels.com/zh-cn/photo/4828489/
!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/test/0818/test_草莓.jpg -P test_img

!wget https://zihao-openmmlab.obs.myhuaweicloud.com/20220716-mmclassification/test/0818/test_石榴.jpg -P test_img

!wget https://zihao-openmmlab.obs.myhuaweicloud.com/20220716-mmclassification/test/0818/test_orange.jpg -P test_img

!wget https://zihao-openmmlab.obs.myhuaweicloud.com/20220716-mmclassification/test/0818/test_lemon.jpg -P test_img

!wget https://zihao-openmmlab.obs.myhuaweicloud.com/20220716-mmclassification/test/0818/test_火龙果.jpg -P test_img

!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/test/watermelon1.jpg -P test_img

!wget https://zihao-openmmlab.obs.cn-east-3.myhuaweicloud.com/20220716-mmclassification/test/banana1.jpg -P test_img

import lime
import sklearn
```

B - Wine quality binary classification: LIME interpretability analysis

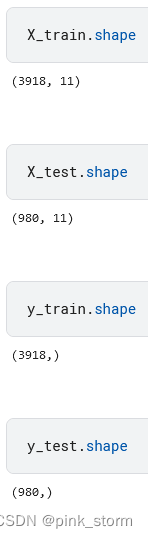

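Train a random forest classifier on a binary wine-quality dataset, predict on test-set samples, and use LIME to interpret the predictions.

LIME quantifies how much a given feature of a given sample contributes to the model predicting a given class.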
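The core of LIME for tabular data can be sketched as follows: sample perturbed points around the instance, weight them by proximity with an exponential kernel, and fit a weighted linear model whose coefficients serve as local feature contributions. The sketch below is a simplification of that idea, not the `lime` package's implementation, which among other things discretizes continuous features by default. The `black_box` function, perturbation scale, kernel width, and sample count are illustrative assumptions.

```python
import numpy as np


def lime_tabular_sketch(predict_proba, x, scale, n_samples=5000, kernel_width=0.75, seed=0):
    """Local weighted linear surrogate around x; returns one coefficient per feature."""
    rng = np.random.default_rng(seed)
    d = x.shape[0]
    # 1) perturb: Gaussian samples around x, scaled per feature
    Z = x + rng.normal(size=(n_samples, d)) * scale
    # 2) query the black box for the probability of the class being explained
    target = predict_proba(Z)
    # 3) weight samples by proximity to x (exponential kernel on scaled distance)
    dist = np.sqrt((((Z - x) / scale) ** 2).sum(axis=1))
    weights = np.exp(-dist ** 2 / kernel_width ** 2)
    # 4) weighted least squares with an intercept column
    A = np.hstack([np.ones((n_samples, 1)), Z - x])
    sw = np.sqrt(weights)[:, None]
    coef, *_ = np.linalg.lstsq(A * sw, target * sw[:, 0], rcond=None)
    return coef[1:]  # drop the intercept


# toy black box: P("good") rises with feature 0 and falls with feature 1
def black_box(Z):
    return 1 / (1 + np.exp(-(2.0 * Z[:, 0] - 1.0 * Z[:, 1])))


x = np.array([0.0, 0.0])
coef = lime_tabular_sketch(black_box, x, scale=np.array([0.1, 0.1]))
print(coef)  # roughly [0.5, -0.25], the local slopes of the sigmoid at x
```

The sign and size of each coefficient play the same role as the bars in `exp.show_in_notebook()`: positive values push toward the explained class, negative values push away from it.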

```python
## Import packages
import numpy as np
import pandas as pd

import lime
from lime import lime_tabular

## Load the dataset
df = pd.read_csv('wine.csv')

## Split into training and test sets
from sklearn.model_selection import train_test_split

X = df.drop('quality', axis=1)
y = df['quality']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

## Train the model
from sklearn.ensemble import RandomForestClassifier
model = RandomForestClassifier(random_state=42)
model.fit(X_train, y_train)
```


```python
## Evaluate the model (accuracy on the test set)
score = model.score(X_test, y_test)
print(score)
```


```python
## Initialize the LIME explainer
explainer = lime_tabular.LimeTabularExplainer(
    training_data=np.array(X_train),  # training features; must be a NumPy array
    feature_names=X_train.columns,    # feature column names
    class_names=['bad', 'good'],      # names of the predicted classes
    mode='classification'             # classification mode
)

## Pick a test-set sample, run it through the trained model, and check the prediction
# idx = 1

idx = 3

data_test = np.array(X_test.iloc[idx]).reshape(1, -1)
prediction = model.predict(data_test)[0]
y_true = np.array(y_test)[idx]
print('Test sample {}: predicted {}, true class {}'.format(idx, prediction, y_true))
```


```python
## Explain the prediction
exp = explainer.explain_instance(
    data_row=X_test.iloc[idx],
    predict_fn=model.predict_proba
)

exp.show_in_notebook(show_table=True)
```

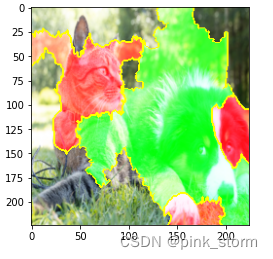

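C1 - LIME interpretability analysis: ImageNet image classification

Run LIME on a PyTorch image classification model pretrained on ImageNet.

Visualize how much each region (superpixel) of an input image contributes to the model predicting a given class.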
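For images, LIME works on an interpretable binary representation: each superpixel is either kept or replaced by `hide_color`. The classifier is queried on many such perturbed images, and a weighted linear model over the on/off indicators scores each region. The sketch below replaces superpixel segmentation with a fixed grid of square patches. It uses a toy scoring function and a simple distance (the fraction of patches hidden) with an arbitrary kernel width. These are assumptions made to keep it self-contained; it is not the `lime_image` implementation.

```python
import numpy as np


def lime_image_sketch(classify, image, patch=4, n_samples=500, hide_color=0.0, seed=0):
    """Weight of each grid patch in a weighted linear surrogate of `classify`."""
    rng = np.random.default_rng(seed)
    h, w = image.shape[:2]
    rows, cols = h // patch, w // patch
    n_feat = rows * cols
    masks = rng.integers(0, 2, size=(n_samples, n_feat))  # 1 = patch kept, 0 = hidden
    masks[0] = 1                                           # include the unperturbed image
    scores = np.empty(n_samples)
    for k, m in enumerate(masks):
        img = image.copy()
        for f in np.flatnonzero(m == 0):
            r, c = divmod(f, cols)
            img[r * patch:(r + 1) * patch, c * patch:(c + 1) * patch] = hide_color
        scores[k] = classify(img)
    # proximity: the fewer patches hidden, the larger the sample weight
    distance = 1 - masks.sum(axis=1) / n_feat
    weights = np.exp(-distance ** 2 / 0.25 ** 2)
    A = np.hstack([np.ones((n_samples, 1)), masks])
    sw = np.sqrt(weights)[:, None]
    coef, *_ = np.linalg.lstsq(A * sw, scores * sw[:, 0], rcond=None)
    return coef[1:].reshape(rows, cols)


# toy score: the top-left patch counts fully, the bottom-right patch counts half
def classify(img):
    return img[:4, :4].mean() + 0.5 * img[4:, 4:].mean()


image = np.ones((8, 8))
weights_map = lime_image_sketch(classify, image)
print(weights_map)  # approximately [[1, 0], [0, 0.5]]
```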

```python
## Import packages
import matplotlib.pyplot as plt
from PIL import Image
import torch.nn as nn
import numpy as np
import os, json

import torch
from torchvision import models, transforms
import torch.nn.functional as F

# Use the GPU if available, otherwise the CPU
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
print('device', device)

## Load the test image
img_path = 'test_img/cat_dog.jpg'

img_pil = Image.open(img_path)

print(img_pil)

## Load the model
model = models.inception_v3(pretrained=True).eval().to(device)

## Load the ImageNet-1000 classes
# assumes imagenet_class_index.json is in the current working directory
idx2label, cls2label, cls2idx = [], {}, {}
with open(os.path.abspath('imagenet_class_index.json'), 'r') as read_file:
    class_idx = json.load(read_file)
    idx2label = [class_idx[str(k)][1] for k in range(len(class_idx))]
    cls2label = {class_idx[str(k)][0]: class_idx[str(k)][1] for k in range(len(class_idx))}
    cls2idx = {class_idx[str(k)][0]: k for k in range(len(class_idx))}

## Preprocessing
trans_norm = transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                  std=[0.229, 0.224, 0.225])

# A: resize, center crop, and normalize (direct prediction on a PIL image)
trans_A = transforms.Compose([
    transforms.Resize((256, 256)),
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    trans_norm
])

# B: normalize only (for images that are already cropped)
trans_B = transforms.Compose([
    transforms.ToTensor(),
    trans_norm
])

# C: resize and center crop only (produces the image LIME perturbs)
trans_C = transforms.Compose([
    transforms.Resize((256, 256)),
    transforms.CenterCrop(224)
])

## Image classification prediction
input_tensor = trans_A(img_pil).unsqueeze(0).to(device)
pred_logits = model(input_tensor)
pred_softmax = F.softmax(pred_logits, dim=1)
top_n = pred_softmax.topk(5)
print(top_n)

## Define the classification function used by LIME
def batch_predict(images):
    # images: a list of (H, W, C) arrays or PIL images of the cropped size
    batch = torch.stack(tuple(trans_B(i) for i in images), dim=0)
    batch = batch.to(device)

    logits = model(batch)
    probs = F.softmax(logits, dim=1)
    return probs.detach().cpu().numpy()

test_pred = batch_predict([trans_C(img_pil)])
test_pred.squeeze().argmax()
```

Output: 231

```python
## LIME interpretability analysis
from lime import lime_image

explainer = lime_image.LimeImageExplainer()
explanation = explainer.explain_instance(np.array(trans_C(img_pil)),
                                         batch_predict,    # classification function
                                         top_labels=5,
                                         hide_color=0,
                                         num_samples=8000) # number of neighborhood images LIME generates

## Visualize
from skimage.segmentation import mark_boundaries

temp, mask = explanation.get_image_and_mask(explanation.top_labels[0], positive_only=False, num_features=20, hide_rest=False)
img_boundary = mark_boundaries(temp / 255.0, mask)
plt.imshow(img_boundary)
plt.show()

## Change the visualization parameters: explain class 281 (tabby cat)
# only works if 281 is among the top_labels explained above
temp, mask = explanation.get_image_and_mask(281, positive_only=False, num_features=20, hide_rest=False)
img_boundary = mark_boundaries(temp / 255.0, mask)
plt.imshow(img_boundary)
plt.show()
```

Z - Links

lime toolkit: GitHub - marcotcr/lime: Lime: Explaining the predictions of any machine learning classifier

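Summary:

This article demonstrated how to use two toolkits, shap and LIME.

SHAP is a game-theoretic approach to explaining the output of any machine learning model. It connects optimal credit allocation with local explanations using the classic Shapley values from game theory and their related extensions.

LIME helps explain what a machine learning model is learning and why it makes a particular prediction. It currently supports explanations for tabular data, text classifiers, and image classifiers.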
