热门标签

热门文章

- 1Python自动化测试【软件测试最全教程(附笔记、学习路线)】,看完即就业_自动化测试课程

- 2基于jsp(java)高校学生考勤管理系统设计与实现_基于jsp的高校靠勤管理系统的设计与实现

- 3【Node.js】json-server

- 45分钟了解啥是数仓_大数据数仓的作用

- 5【AIGC调研系列】MiniMax 稀宇科技的abab 6.5 系列模型与国外先进模型相比的优缺点_稀宇科技 abab大模型的特点

- 6Idea双击打不开(macbook)_macbook idea 双击图标没反应

- 7微信小程序post和get请求_微信小程序 get请求

- 8《汇编语言(第四版)》王爽 第十章 CALL和RET指令_汇编语言王爽第四版 电子书

- 9使用IntelliJ IDEA进行Android应用开发_idea开发android教程

- 10看了下雷军的两份个人简历,的确厉害。。。_雷军履历对比法

当前位置: article > 正文

快来看,我让chatGPT帮我写代码了!!!_chatgpt 写代码

作者:笔触狂放9 | 2024-04-26 05:22:36

赞

踩

chatgpt 写代码

现在AIGC很火,chatGPT的功能也是非常的强大,作为一个学计算机的,我想chatGPT必须要玩起来,哈哈哈哈~~~

突发奇想,虽然学了几年的计算机,但是编程能力还是不行,既然chatGPT那么滴niubility,那么我来试一下让他帮我学代码吧!

- 1

- 2

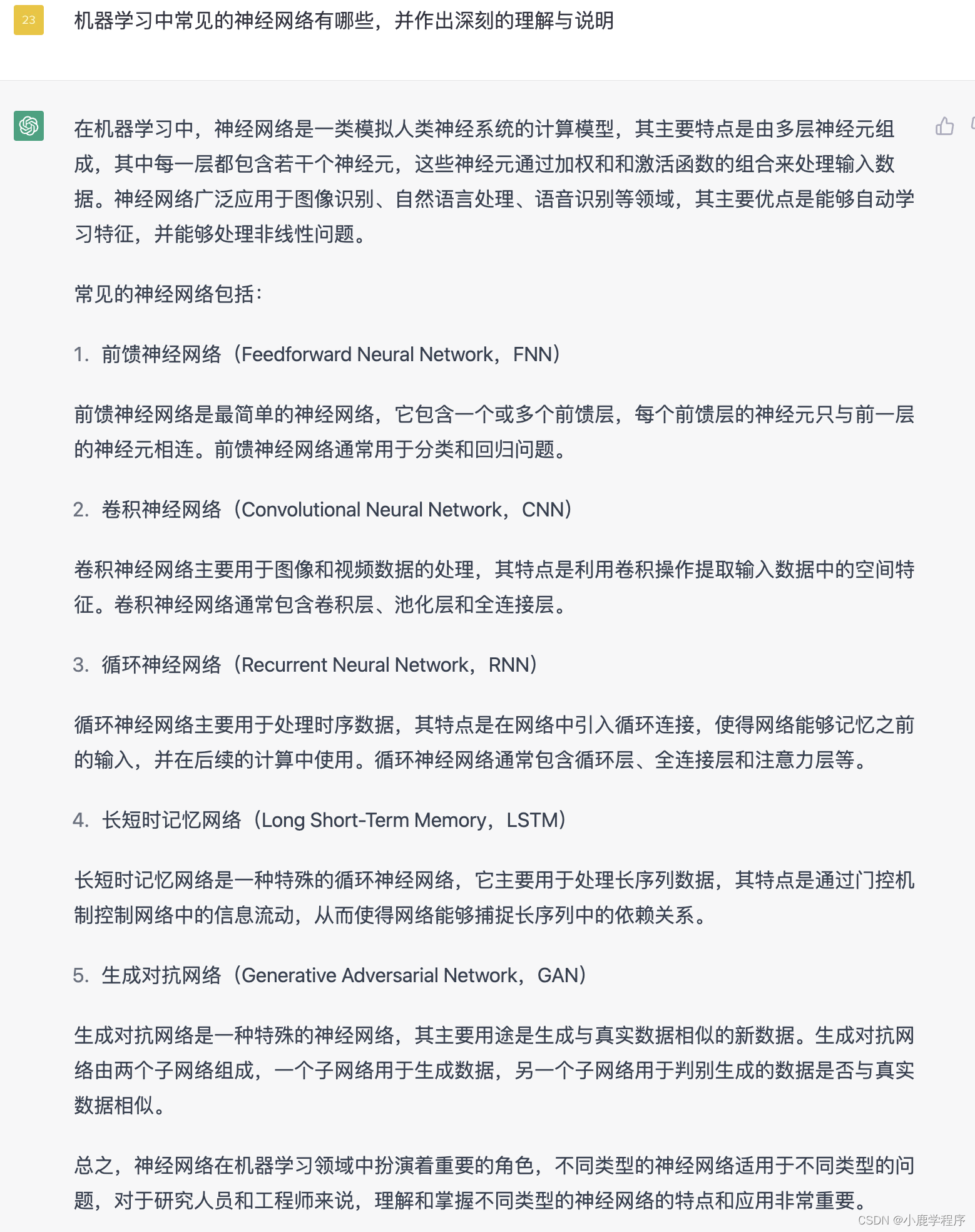

我:机器学习中常见的神经网络有哪些,并作出深刻的理解与说明

chatGPT答:

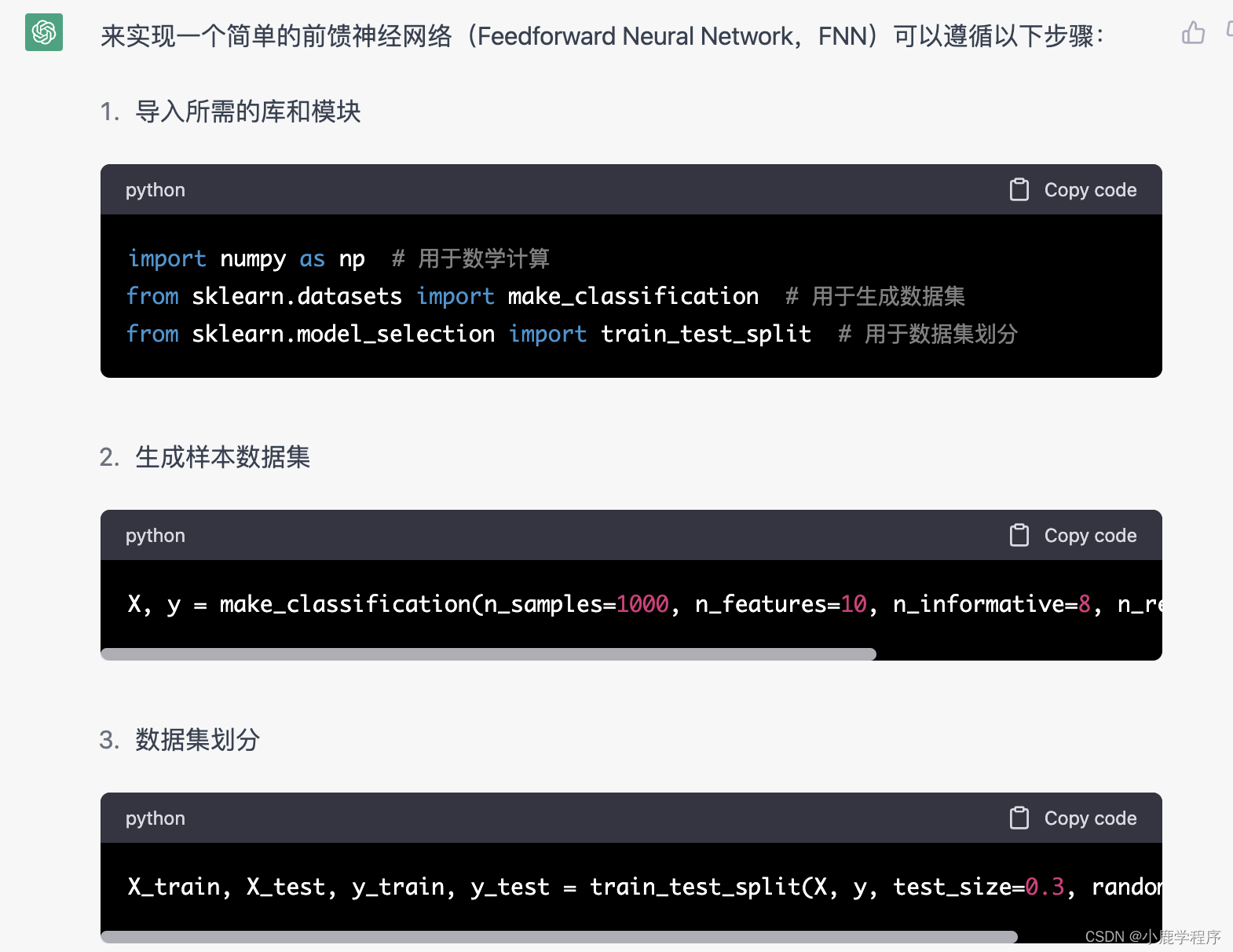

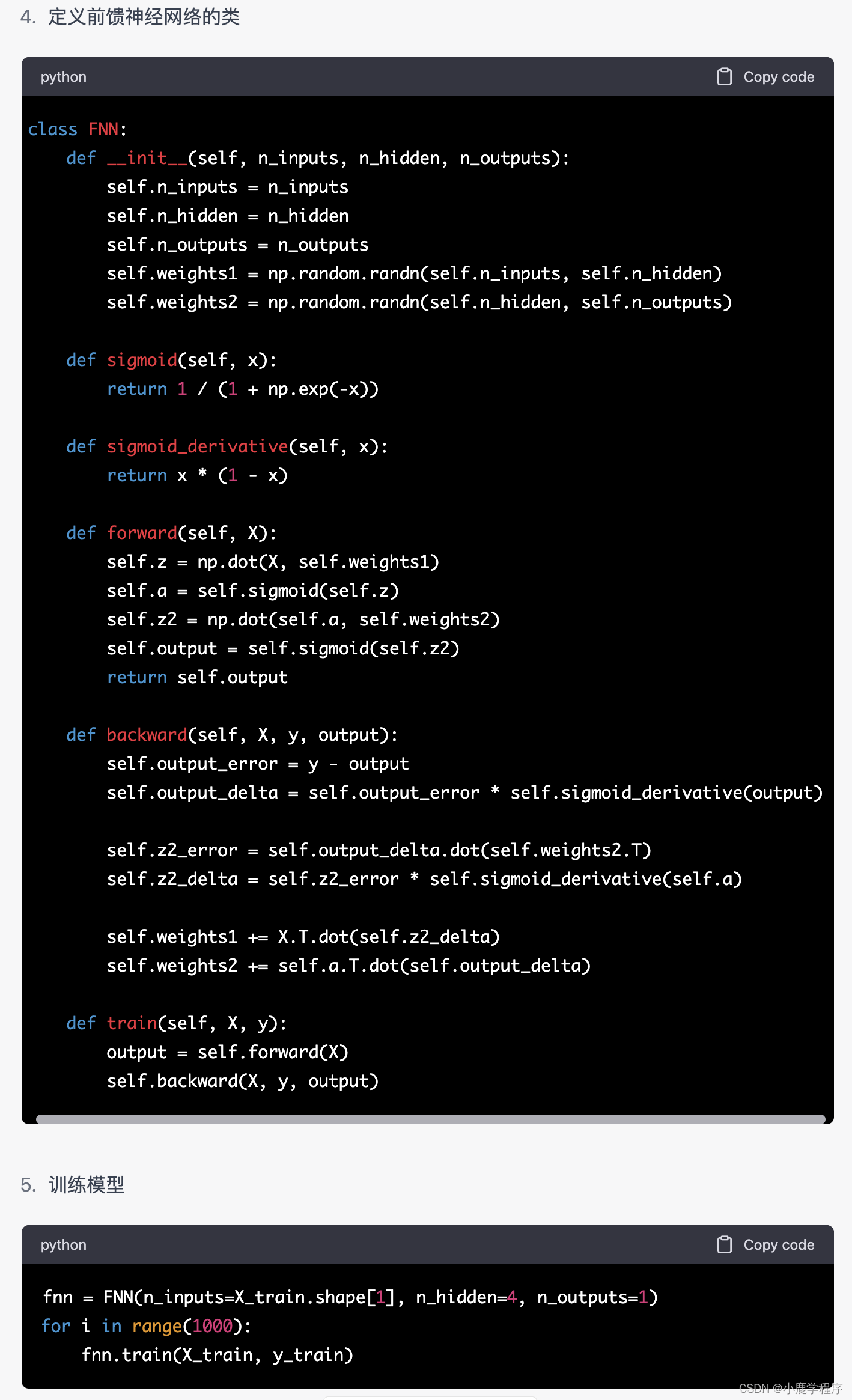

我:用python来实现一个简单的前馈神经网络(Feedforward Neural Network,FNN)

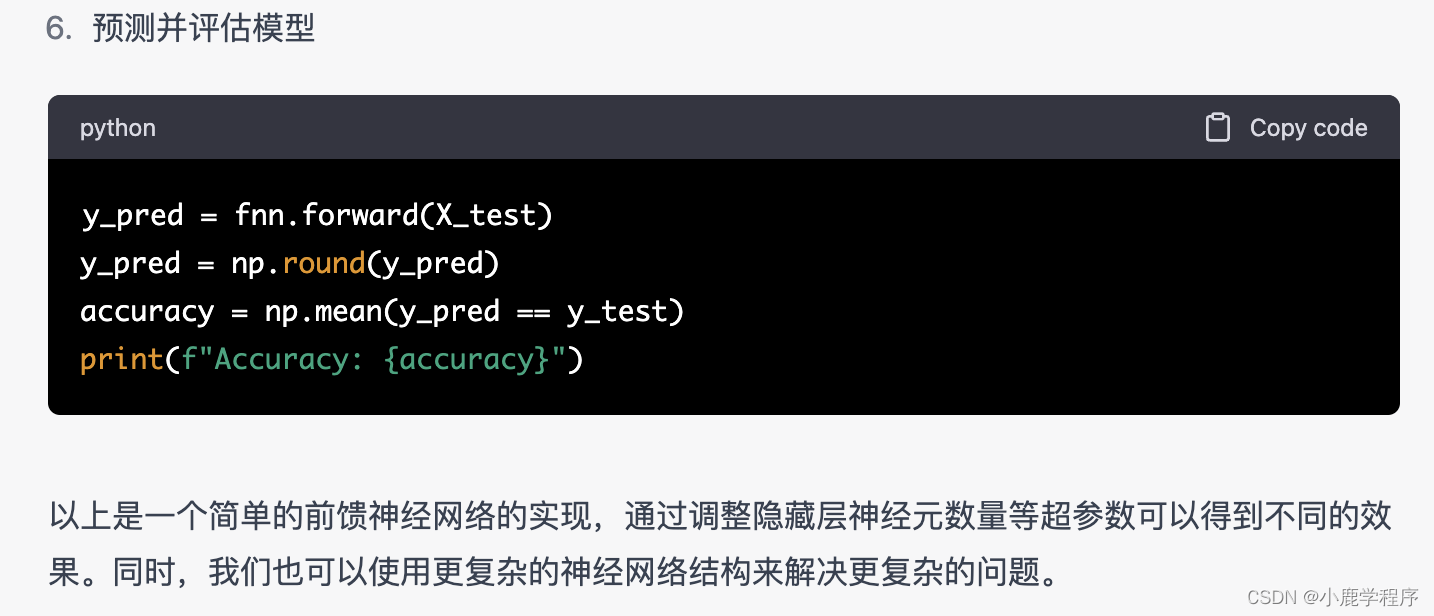

chatGPT答: 实现一个简单的前馈神经网络(Feedforward Neural Network,FNN)可以遵循以下步骤

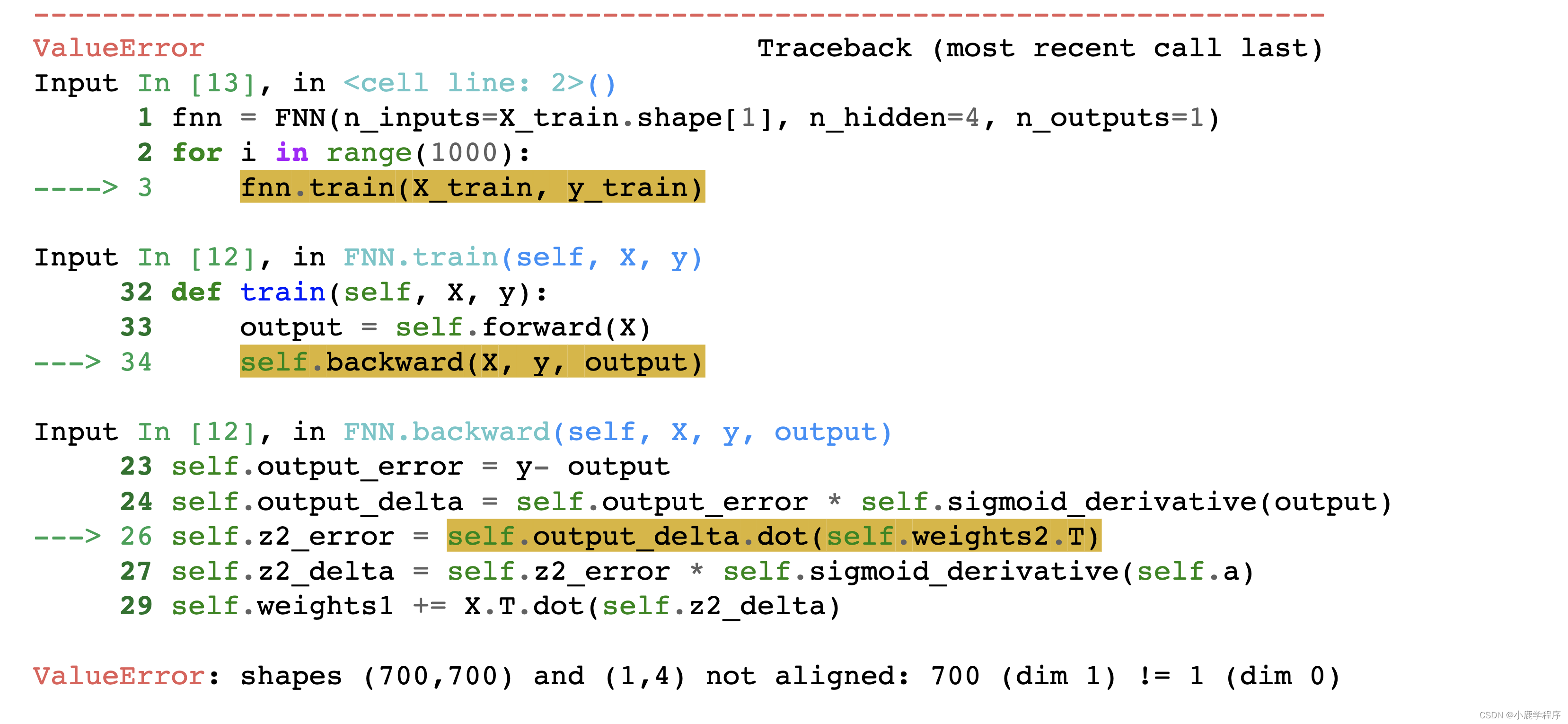

总结: 生成的代码直接复制粘贴到jupyter中,发现一个错误:

然后我又把它的错误返回给chatGPT,他就解决了:

== self.output_error = y.reshape(-1, 1) - output ==

== self.output_error = y.reshape(-1, 1) - output ==

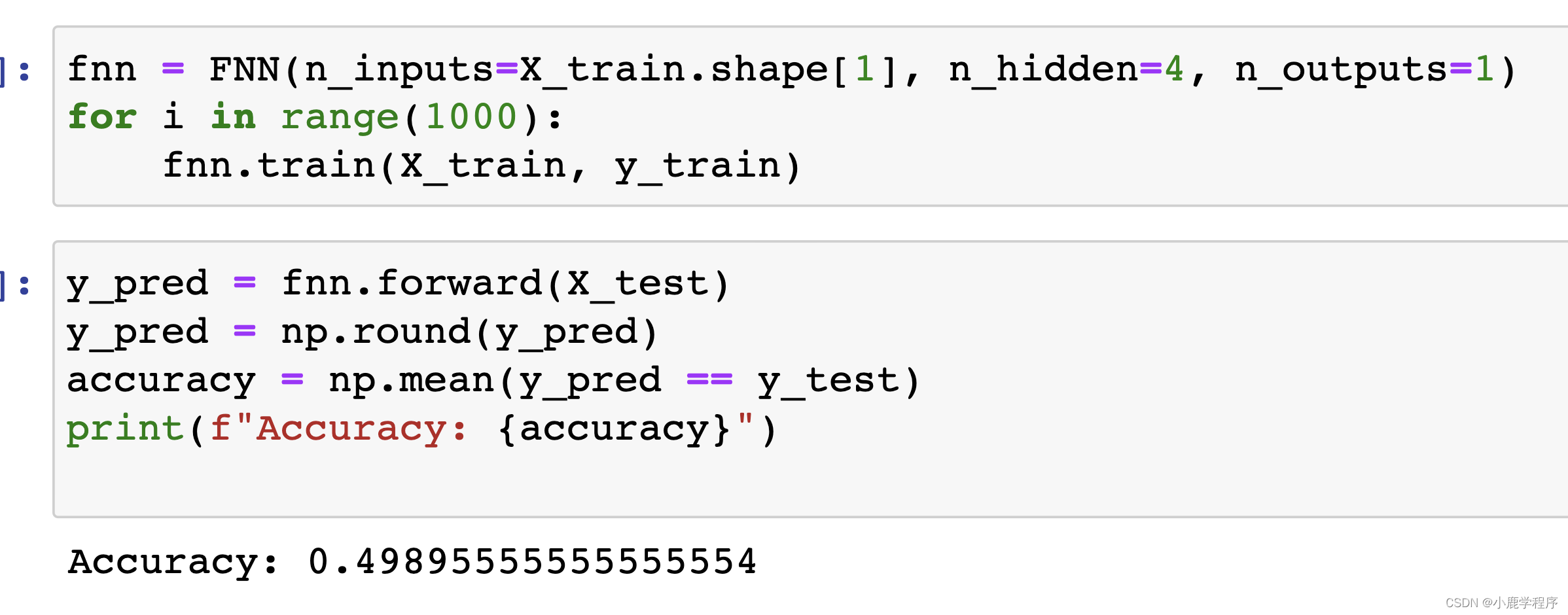

把这一句改变之后,程序代码确实正确了,但是准确率很低,才50%左右。

问:用pytorch实现前馈神经网络(Feedforward Neural Network,FNN)

chatGPT答:

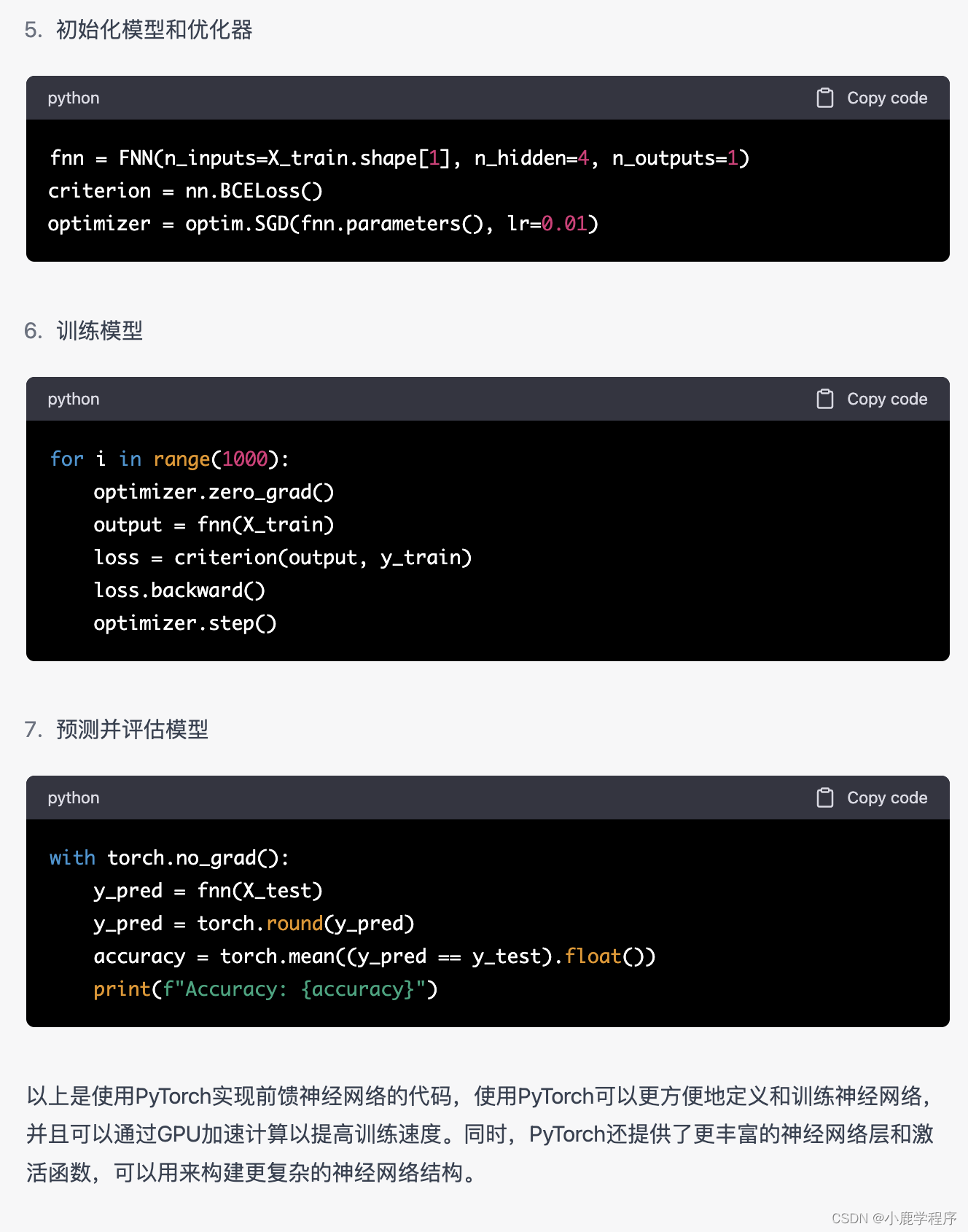

用pytorch实现的代码也是有错误的,用同样的方法来解决

完整的代码如下:

import torch

import torch.nn as nn

import torch.optim as optim

from sklearn.datasets import make_classification

from sklearn.model_selection import train_test_split

- 1

- 2

- 3

- 4

- 5

X, y = make_classification(n_samples=1000, n_features=10, n_informative=8, n_redundant=2, random_state=42)

X = torch.tensor(X).float()

y = torch.tensor(y).float()

- 1

- 2

- 3

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

- 1

class FNN(nn.Module):

def __init__(self, n_inputs, n_hidden, n_outputs):

super(FNN, self).__init__()

self.fc1 = nn.Linear(n_inputs, n_hidden)

self.fc2 = nn.Linear(n_hidden, n_outputs)

self.sigmoid = nn.Sigmoid()

def forward(self, x):

x = self.fc1(x)

x = self.sigmoid(x)

x = self.fc2(x)

x = self.sigmoid(x)

return x

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

fnn = FNN(n_inputs=X_train.shape[1], n_hidden=4, n_outputs=1)

criterion = nn.BCELoss()

optimizer = optim.SGD(fnn.parameters(), lr=0.01)

- 1

- 2

- 3

for i in range(1000):

optimizer.zero_grad()

output = fnn(X_train)

# loss = criterion(output, y_train)

loss = criterion(output, y_train.unsqueeze(1))

loss.backward()

optimizer.step()

- 1

- 2

- 3

- 4

- 5

- 6

- 7

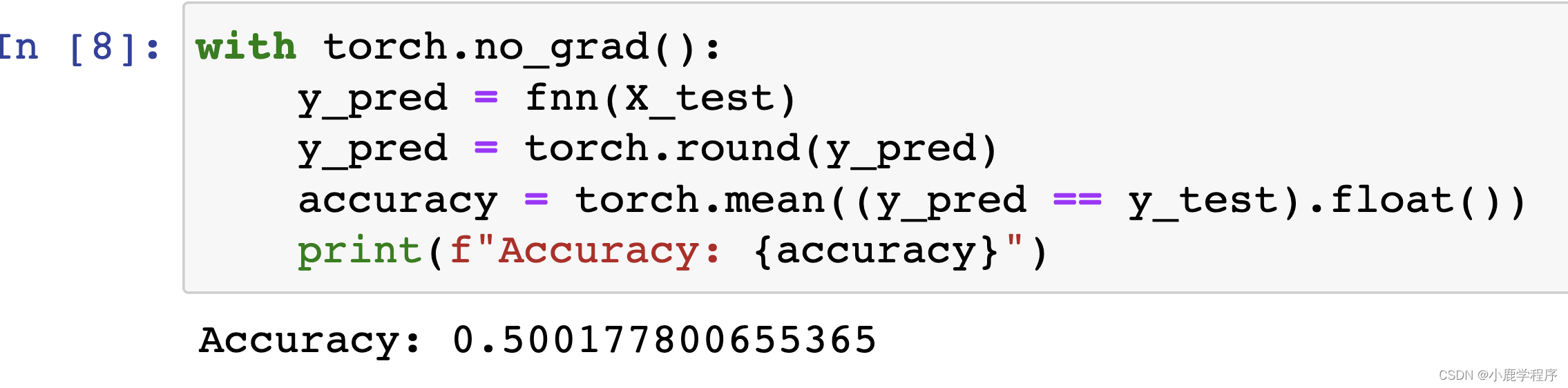

with torch.no_grad():

y_pred = fnn(X_test)

y_pred = torch.round(y_pred)

accuracy = torch.mean((y_pred == y_test).float())

print(f"Accuracy: {accuracy}")

- 1

- 2

- 3

- 4

- 5

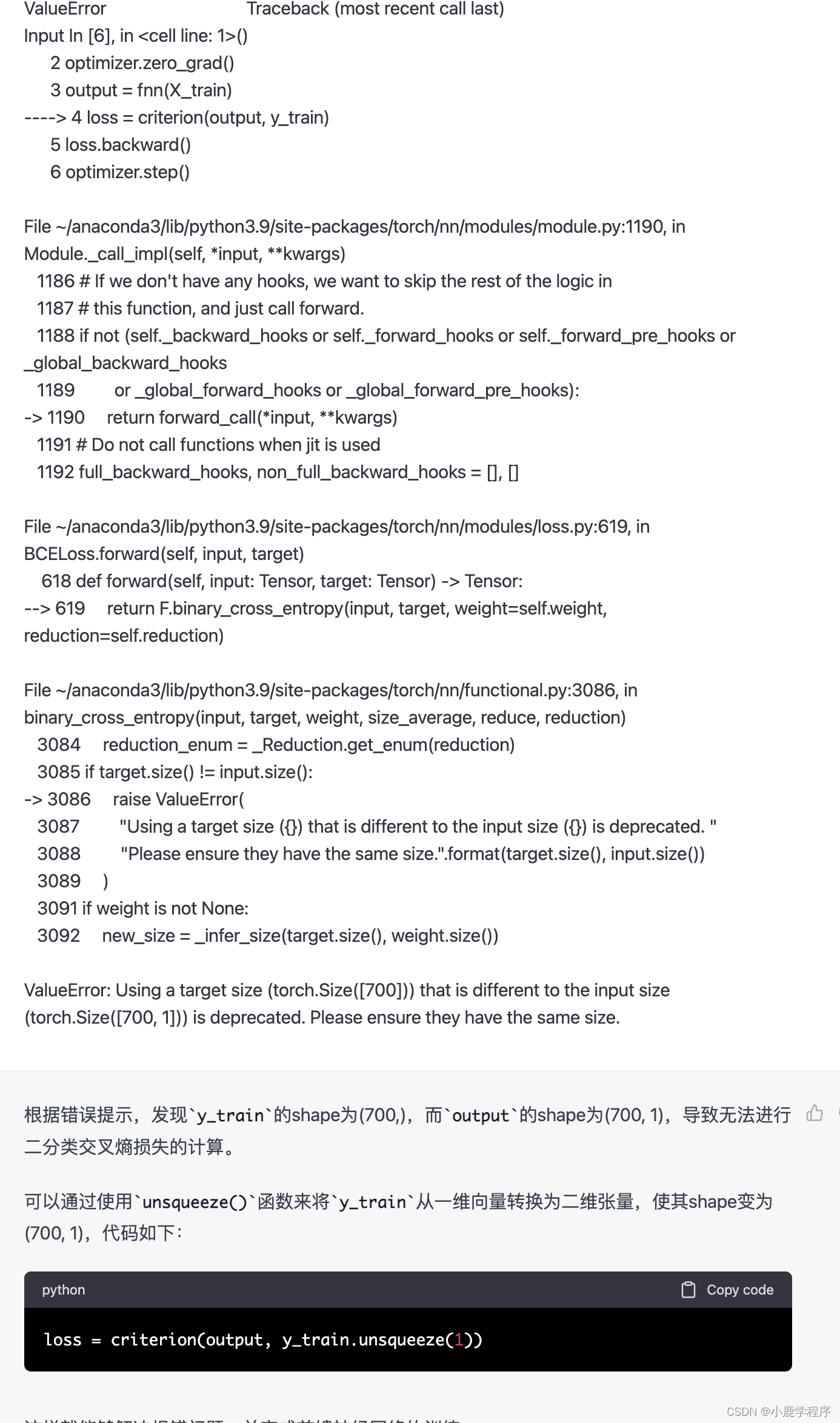

实验结果:依旧效果很差

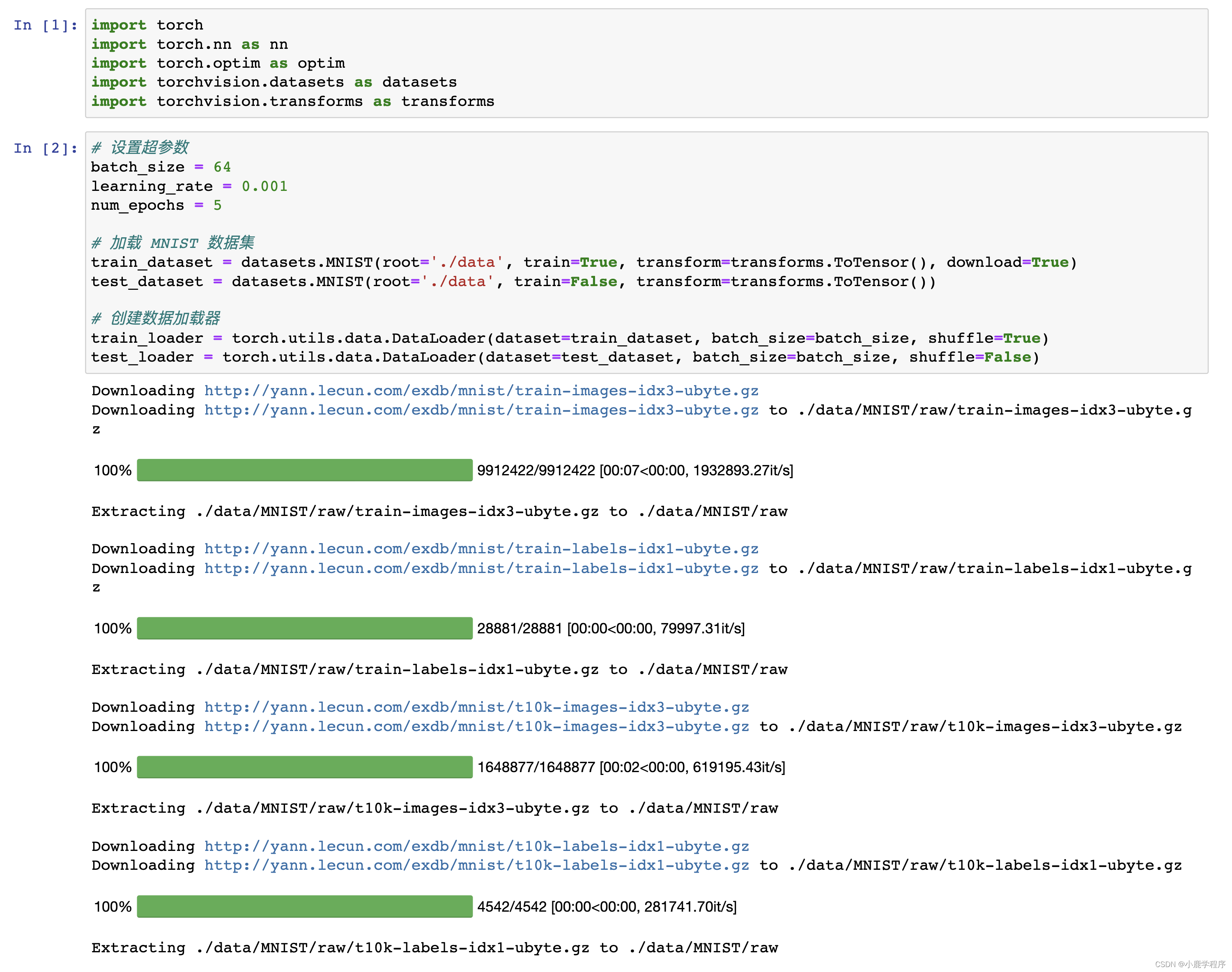

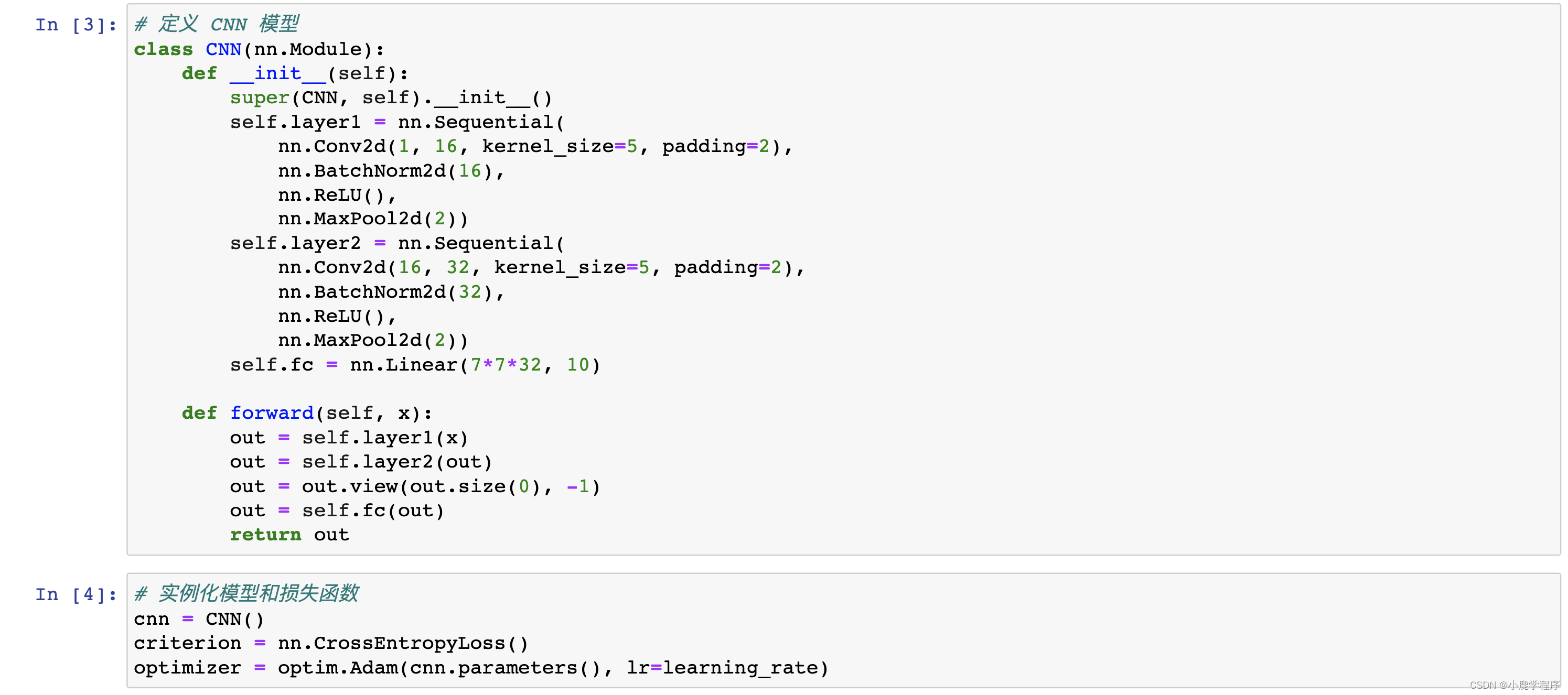

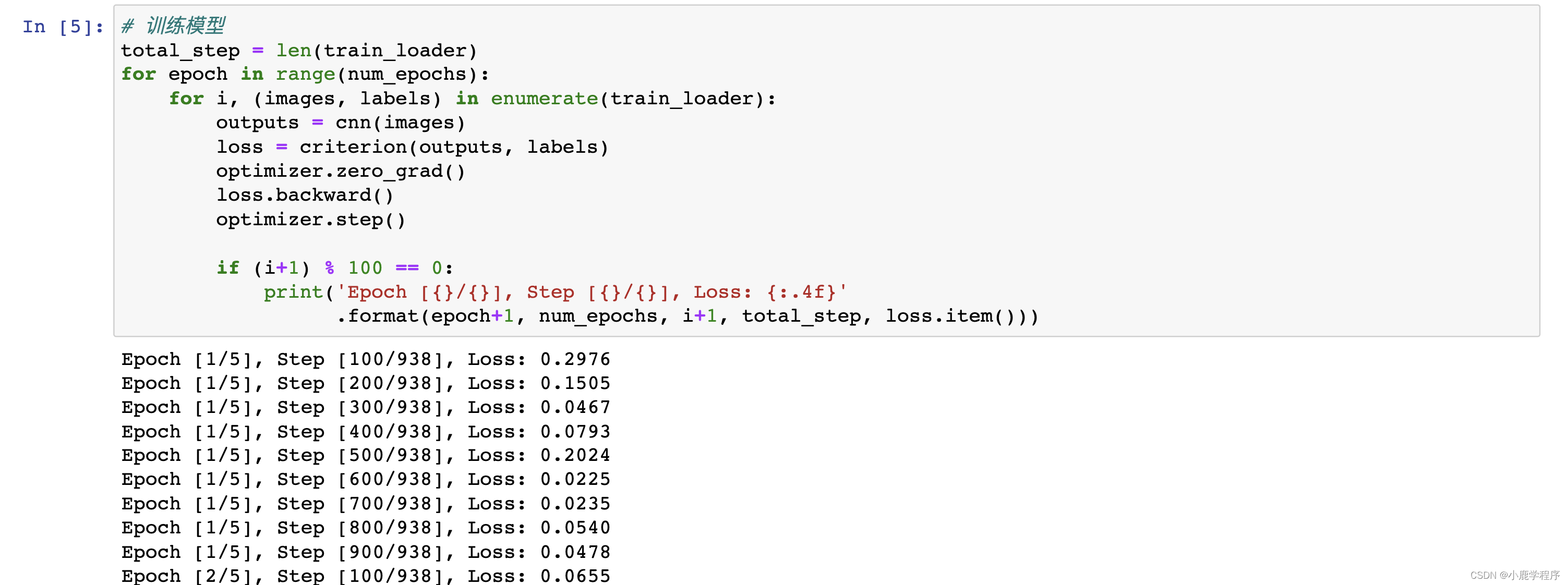

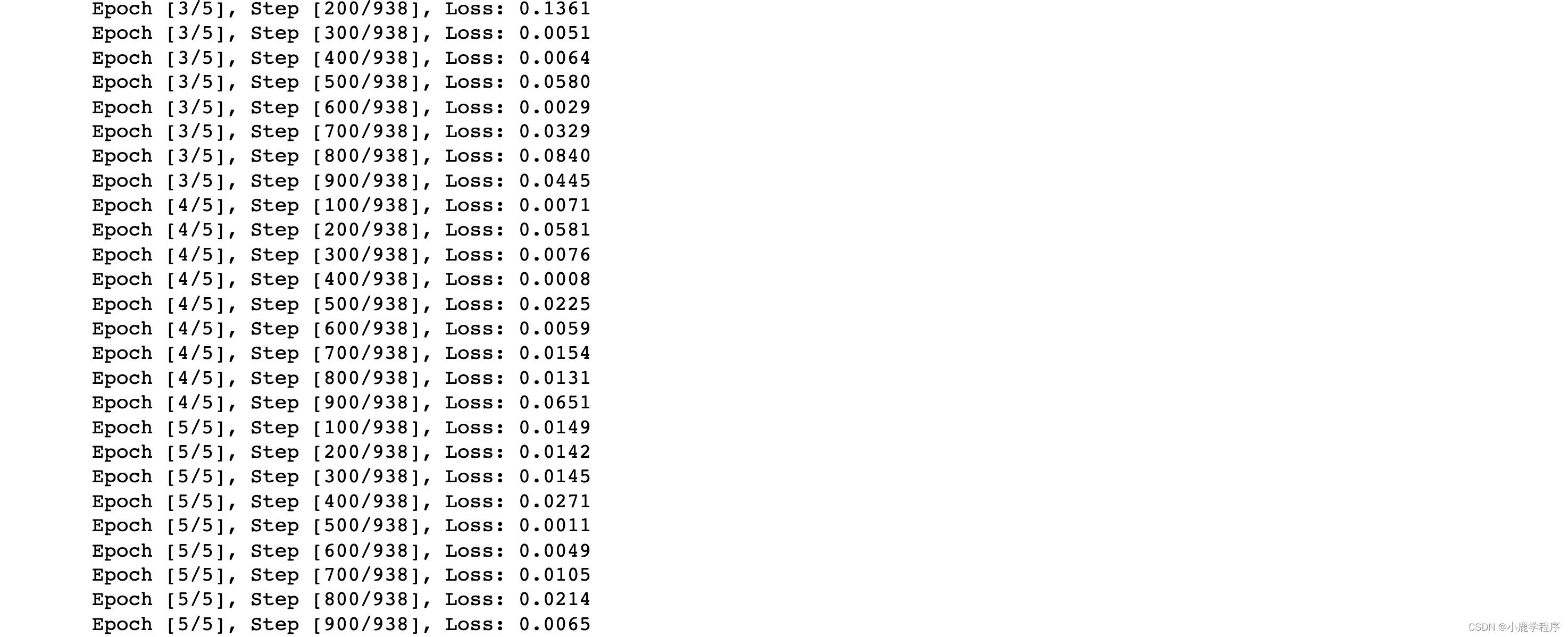

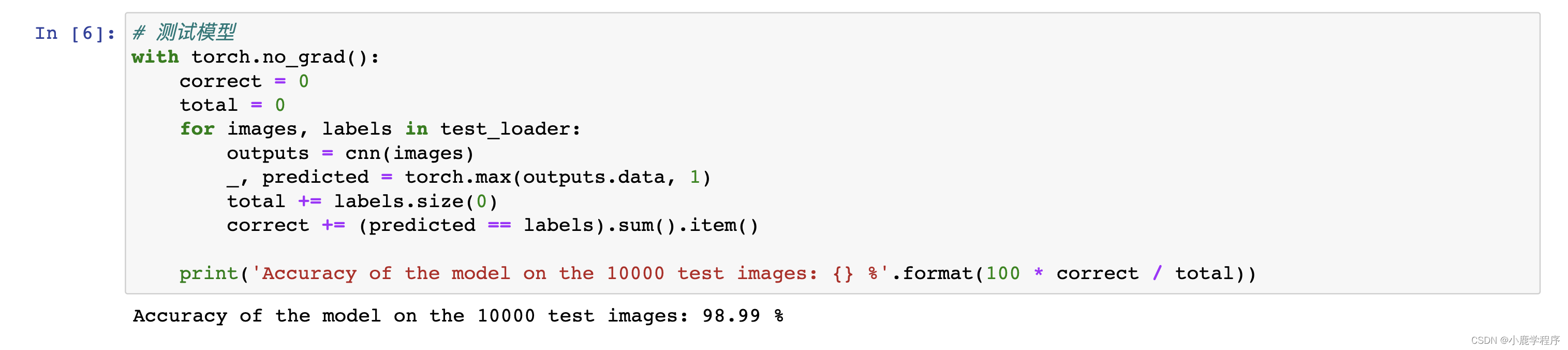

问用python和pytorch实现卷积神经网络CNN

chatGPT答:

import torch import torch.nn as nn import torch.optim as optim import torchvision.datasets as datasets import torchvision.transforms as transforms # 设置超参数 batch_size = 64 learning_rate = 0.001 num_epochs = 5 # 加载 MNIST 数据集 train_dataset = datasets.MNIST(root='./data', train=True, transform=transforms.ToTensor(), download=True) test_dataset = datasets.MNIST(root='./data', train=False, transform=transforms.ToTensor()) # 创建数据加载器 train_loader = torch.utils.data.DataLoader(dataset=train_dataset, batch_size=batch_size, shuffle=True) test_loader = torch.utils.data.DataLoader(dataset=test_dataset, batch_size=batch_size, shuffle=False) # 定义 CNN 模型 class CNN(nn.Module): def __init__(self): super(CNN, self).__init__() self.layer1 = nn.Sequential( nn.Conv2d(1, 16, kernel_size=5, padding=2), nn.BatchNorm2d(16), nn.ReLU(), nn.MaxPool2d(2)) self.layer2 = nn.Sequential( nn.Conv2d(16, 32, kernel_size=5, padding=2), nn.BatchNorm2d(32), nn.ReLU(), nn.MaxPool2d(2)) self.fc = nn.Linear(7*7*32, 10) def forward(self, x): out = self.layer1(x) out = self.layer2(out) out = out.view(out.size(0), -1) out = self.fc(out) return out # 实例化模型和损失函数 cnn = CNN() criterion = nn.CrossEntropyLoss() optimizer = optim.Adam(cnn.parameters(), lr=learning_rate) # 训练模型 total_step = len(train_loader) for epoch in range(num_epochs): for i, (images, labels) in enumerate(train_loader): outputs = cnn(images) loss = criterion(outputs, labels) optimizer.zero_grad() loss.backward() optimizer.step() if (i+1) % 100 == 0: print('Epoch [{}/{}], Step [{}/{}], Loss: {:.4f}' .format(epoch+1, num_epochs, i+1, total_step, loss.item())) # 测试模型 with torch.no_grad(): correct = 0 total = 0 for images, labels in test_loader: outputs = cnn(images) _, predicted = torch.max(outputs.data, 1) total += labels.size(0) correct += (predicted == labels).sum().item() print('Accuracy of the model on the 10000 test images: {} %'.format(100 * correct / total))

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

这次没有错误,结果也还行:

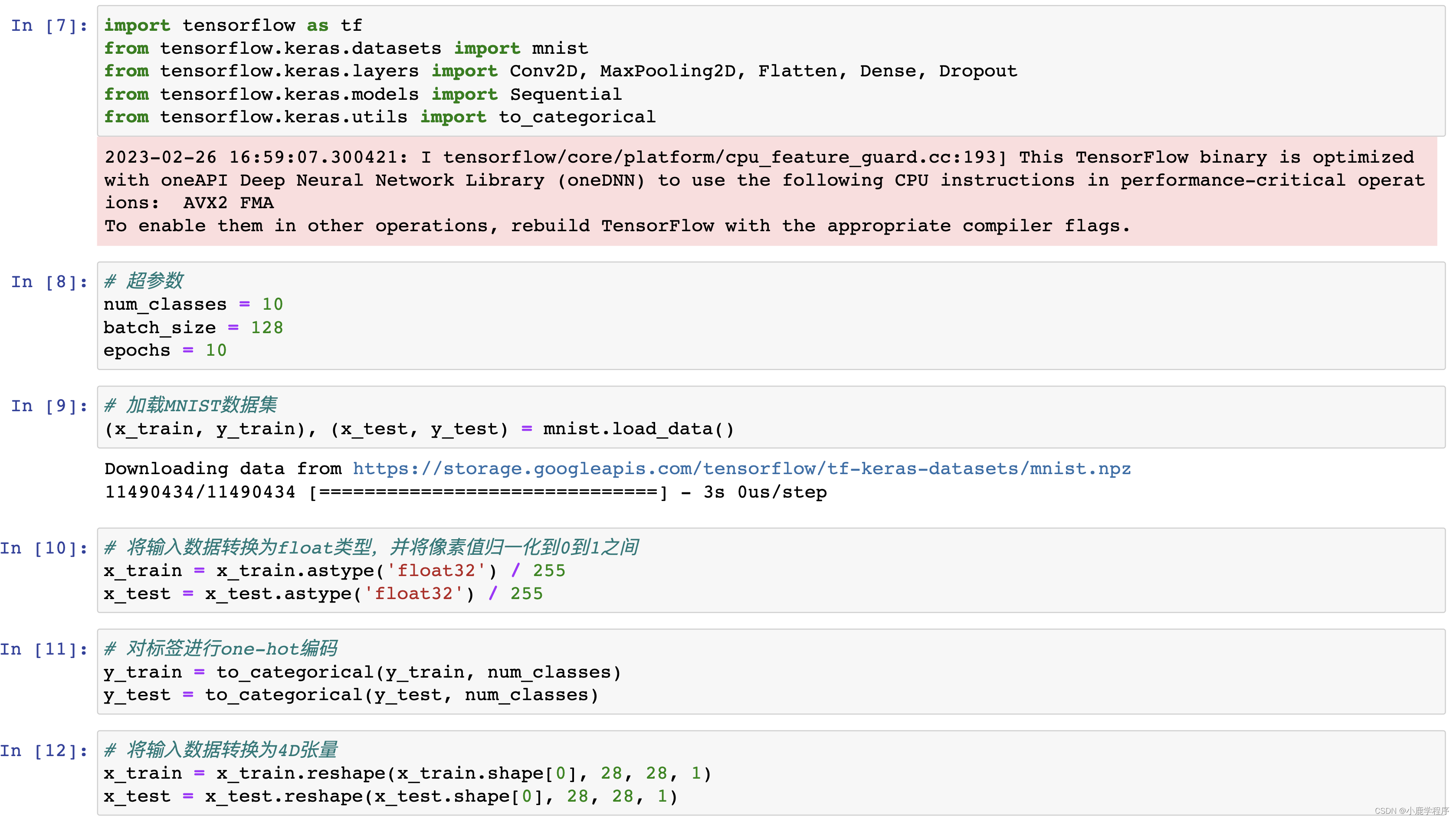

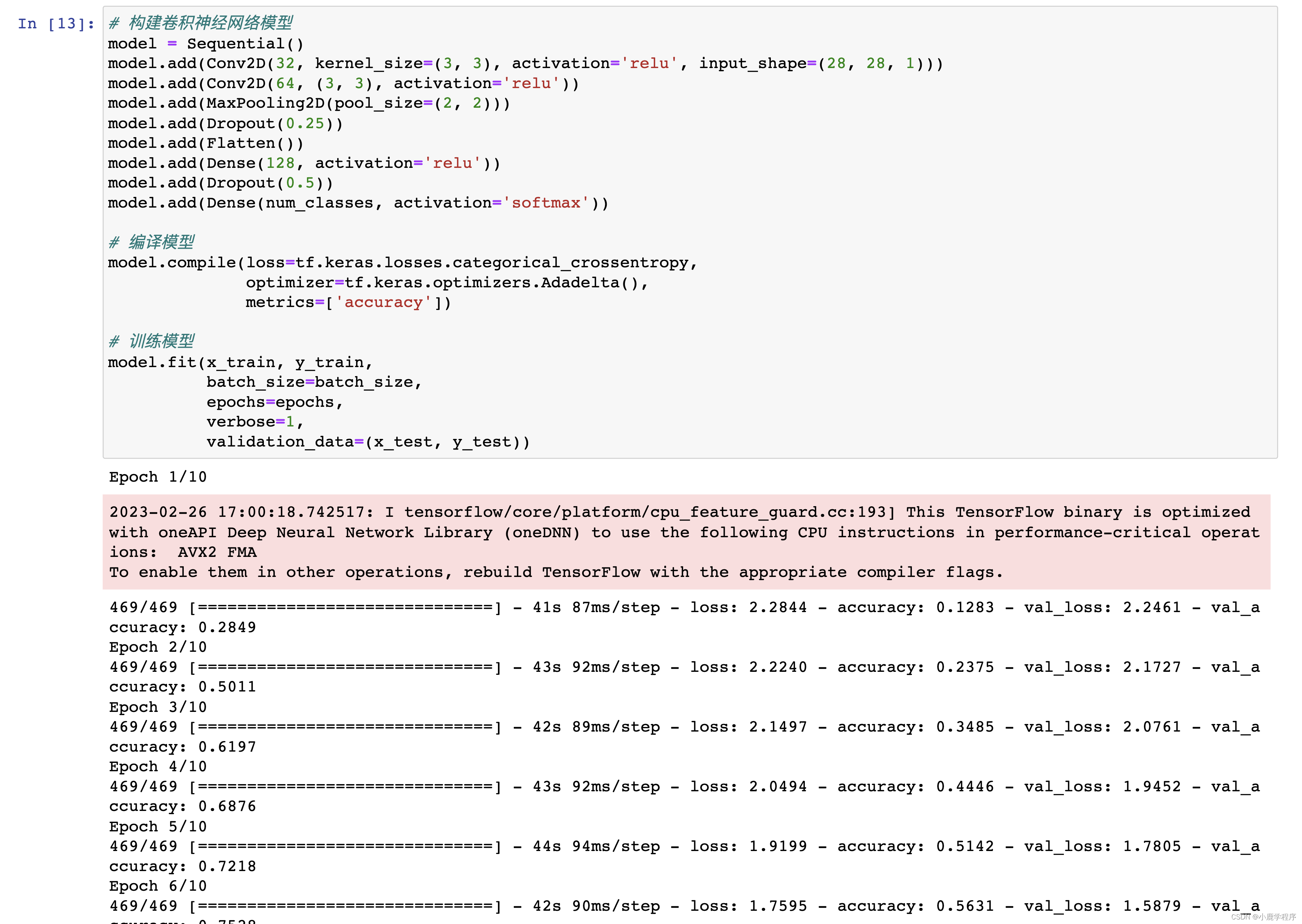

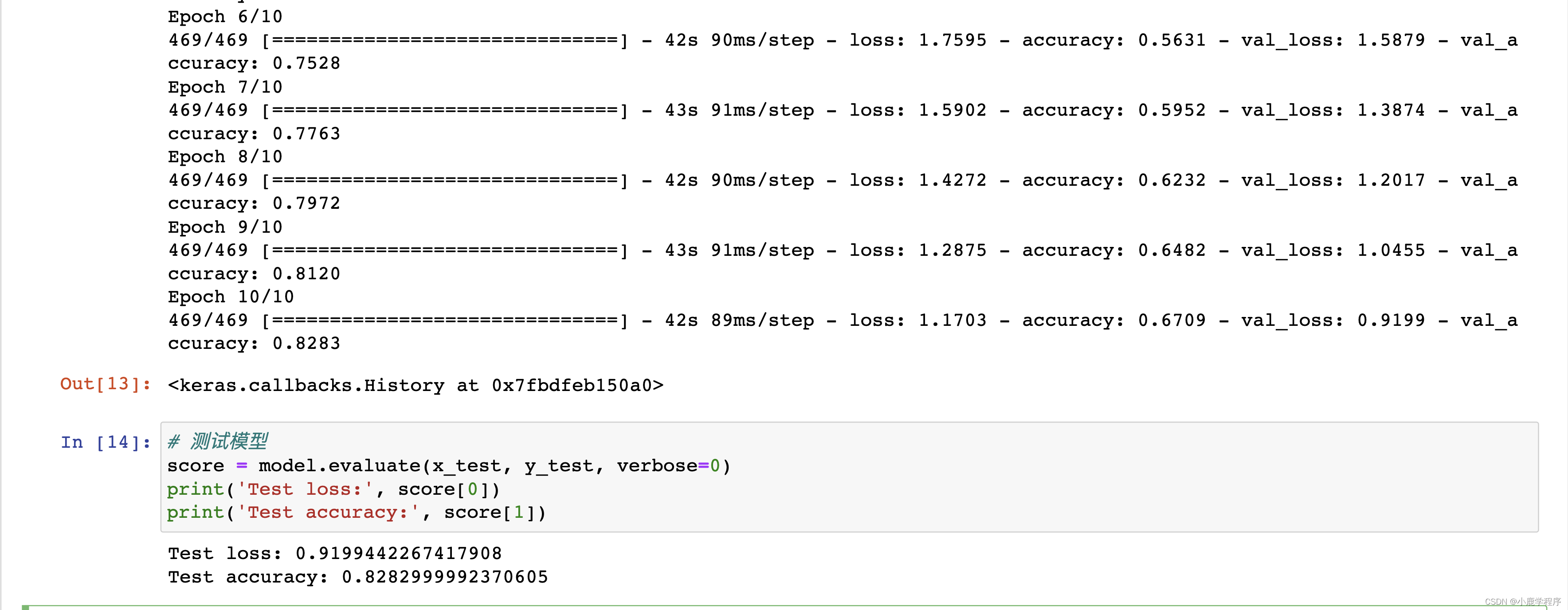

问用tensorflow实现卷积神经网络CNN

chatGPT答

import tensorflow as tf from tensorflow.keras.datasets import mnist from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense, Dropout from tensorflow.keras.models import Sequential from tensorflow.keras.utils import to_categorical # 超参数 num_classes = 10 batch_size = 128 epochs = 10 # 加载MNIST数据集 (x_train, y_train), (x_test, y_test) = mnist.load_data() # 将输入数据转换为float类型,并将像素值归一化到0到1之间 x_train = x_train.astype('float32') / 255 x_test = x_test.astype('float32') / 255 # 对标签进行one-hot编码 y_train = to_categorical(y_train, num_classes) y_test = to_categorical(y_test, num_classes) # 将输入数据转换为4D张量 x_train = x_train.reshape(x_train.shape[0], 28, 28, 1) x_test = x_test.reshape(x_test.shape[0], 28, 28, 1) # 构建卷积神经网络模型 model = Sequential() model.add(Conv2D(32, kernel_size=(3, 3), activation='relu', input_shape=(28, 28, 1))) model.add(Conv2D(64, (3, 3), activation='relu')) model.add(MaxPooling2D(pool_size=(2, 2))) model.add(Dropout(0.25)) model.add(Flatten()) model.add(Dense(128, activation='relu')) model.add(Dropout(0.5)) model.add(Dense(num_classes, activation='softmax')) # 编译模型 model.compile(loss=tf.keras.losses.categorical_crossentropy, optimizer=tf.keras.optimizers.Adadelta(), metrics=['accuracy']) # 训练模型 model.fit(x_train, y_train, batch_size=batch_size, epochs=epochs, verbose=1, validation_data=(x_test, y_test)) # 测试模型 score = model.evaluate(x_test, y_test, verbose=0) print('Test loss:', score[0]) print('Test accuracy:', score[1])

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

运行结果如下:

总结:本人学疏才浅,也不一定全能判断出它生成的到底对不对,如果有什么不对的地方还请大家留言or私聊指出,谢谢大家!!让我们一起进步吧!

总的来说写的还行,我不会写的他都会,我要失业了,呜呜~

准确率比较低,等有时间改进一下。

声明:本文内容由网友自发贡献,不代表【wpsshop博客】立场,版权归原作者所有,本站不承担相应法律责任。如您发现有侵权的内容,请联系我们。转载请注明出处:https://www.wpsshop.cn/w/笔触狂放9/article/detail/489227

推荐阅读

相关标签