
Getting Started with ELK (4): Logstash

Author: AllinToyou | 2024-02-23 09:47:32


Logstash

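Logstash is an open-source, server-side data processing pipeline. It ingests data from multiple sources at the same time, transforms it, and then sends it to the data store of your choice.

Logstash ingests, transforms, and ships data dynamically, regardless of its format or complexity. It can use Grok to derive structure from unstructured data, decode geographic coordinates from IP addresses, anonymize or drop sensitive fields, and generally simplify processing.

Data is often scattered or siloed across many systems in many different formats. Logstash supports a wide range of inputs that capture events from many common sources at once, so it can continuously stream data in from your logs, metrics, web applications, data stores, and various AWS services.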
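The three stages described above (inputs collect events, filters transform them, outputs ship them to a store) can be illustrated with a toy Java model. This is only an analogy: `ToyPipeline`, `filter` and `output` are hypothetical names, and real Logstash pipelines are written in the Logstash configuration language (as in `logstash.conf` below), not through a Java API like this one.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;
import java.util.function.UnaryOperator;

// Toy model of the input -> filter -> output flow. Hypothetical, not Logstash's API.
public class ToyPipeline {

    // An "event" here is just a map of field name -> value.
    private final List<UnaryOperator<Map<String, Object>>> filters = new ArrayList<>();
    private final List<Consumer<Map<String, Object>>> outputs = new ArrayList<>();

    public ToyPipeline filter(UnaryOperator<Map<String, Object>> f) {
        filters.add(f);
        return this;
    }

    public ToyPipeline output(Consumer<Map<String, Object>> o) {
        outputs.add(o);
        return this;
    }

    // "Input" side: takes one event, runs every filter in order, then hands it to every output.
    public void accept(Map<String, Object> event) {
        Map<String, Object> current = new HashMap<>(event); // copy so the caller's map is not modified
        for (UnaryOperator<Map<String, Object>> f : filters) {
            current = f.apply(current);
        }
        for (Consumer<Map<String, Object>> o : outputs) {
            o.accept(current);
        }
    }

    public static void main(String[] args) {
        List<Map<String, Object>> store = new ArrayList<>();
        ToyPipeline pipeline = new ToyPipeline()
                // Filter: drop a sensitive field before it reaches any store
                .filter(e -> { e.remove("password"); return e; })
                // Filter: enrich the event with an extra field
                .filter(e -> { e.put("source", "demo"); return e; })
                .output(store::add)            // stand-in for a store such as Elasticsearch
                .output(System.out::println);  // stand-in for a stdout output
        Map<String, Object> event = new HashMap<>();
        event.put("message", "user logged in");
        event.put("password", "secret");
        pipeline.accept(event);
    }
}
```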

Download the Logstash image

Search for the image:

```shell
[root@localhost elk]# docker search logstash
NAME                                                          DESCRIPTION                                     STARS   OFFICIAL   AUTOMATED
logstash                                                      Logstash is a tool for managing events and l…   2141    [OK]
opensearchproject/logstash-oss-with-opensearch-output-plugin  The Official Docker Image of Logstash with O…   17
grafana/logstash-output-loki                                  Logstash plugin to send logs to Loki            3
```

Pull the image:

```shell
[root@localhost elk]# docker pull logstash:7.17.7
7.17.7: Pulling from library/logstash
fb0b3276a519: Already exists
4a9a59914a22: Pull complete
5b31ddf2ac4e: Pull complete
162661d00d08: Pull complete
706a1bf2d5e3: Pull complete
741874f127b9: Pull complete
d03492354dd2: Pull complete
a5245bb90f80: Pull complete
05103a3b7940: Pull complete
815ba6161ff7: Pull complete
7777f80b5df4: Pull complete
Digest: sha256:93030161613312c65d84fb2ace25654badbb935604a545df91d2e93e28511bca
Status: Downloaded newer image for logstash:7.17.7
docker.io/library/logstash:7.17.7
```

Create the Logstash container

This first container only exists so that its default configuration files can be copied out to the host.

```shell
docker run --name logstash -d logstash:7.17.7

# Check that the container is up
docker ps
CONTAINER ID   IMAGE             COMMAND                  CREATED         STATUS         PORTS                NAMES
511e983027e7   logstash:7.17.7   "/usr/local/bin/dock…"   4 seconds ago   Up 4 seconds   5044/tcp, 9600/tcp   logstash
```

Configuration files

Mounted directories

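The host directory /usr/local/software/elk/logstash holds the files that are later mounted into the container. Run the following commands from that directory.

Configuration files

Copy the config, data and pipeline directories out of the container to the Linux host: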

```shell
[root@localhost logstash]# docker cp logstash:/usr/share/logstash/config/ ./
Successfully copied 11.8kB to /usr/local/software/elk/logstash/./
[root@localhost logstash]# docker cp logstash:/usr/share/logstash/data/ ./
Successfully copied 4.1kB to /usr/local/software/elk/logstash/./
[root@localhost logstash]# docker cp logstash:/usr/share/logstash/pipeline/ ./
Successfully copied 2.56kB to /usr/local/software/elk/logstash/./
```

Create a directory for Logstash's own log files:

```shell
mkdir logs
```

logstash.yml

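```yaml
path.logs: /usr/share/logstash/logs      # directory for Logstash's own log files
config.test_and_exit: false               # true = only validate the pipeline config, then exit
config.reload.automatic: false            # true = reload the pipeline config automatically when it changes
http.host: "0.0.0.0"                      # bind the monitoring API (port 9600) to all interfaces
xpack.monitoring.elasticsearch.hosts: [ "http://192.168.198.128:9200" ]   # Elasticsearch that receives monitoring data
```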
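Because `http.host` is `0.0.0.0`, the Logstash monitoring API on port 9600 accepts connections from outside the container, which is what makes the published `-p 9600:9600` port useful. A GET on its root path returns basic node information as JSON, so it is a quick way to confirm Logstash is up. The sketch below is a minimal, Java 8 compatible client using `HttpURLConnection`; the class name `LogstashApiProbe` is hypothetical and the address `192.168.198.128` is taken from this article's setup.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: GETs a URL (e.g. the Logstash monitoring API) and returns the body.
public class LogstashApiProbe {

    // Throws IOException on connection failure or on a non-2xx status.
    public static String get(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
        conn.setConnectTimeout(3000); // fail fast if port 9600 is not reachable
        conn.setReadTimeout(3000);
        try {
            int status = conn.getResponseCode();
            if (status < 200 || status >= 300) {
                throw new IOException("HTTP " + status + " from " + url);
            }
            try (InputStream in = conn.getInputStream()) {
                ByteArrayOutputStream buf = new ByteArrayOutputStream();
                byte[] chunk = new byte[4096];
                int n;
                while ((n = in.read(chunk)) != -1) {
                    buf.write(chunk, 0, n);
                }
                return new String(buf.toByteArray(), StandardCharsets.UTF_8);
            }
        } finally {
            conn.disconnect();
        }
    }

    public static void main(String[] args) throws IOException {
        // Host from this article's setup; pass another base URL as the first argument if needed
        String base = args.length > 0 ? args[0] : "http://192.168.198.128:9600";
        System.out.println(get(base + "/?pretty"));
    }
}
```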

pipelines.yml

```yaml
# This file is where you define your pipelines. You can define multiple.
# For more information on multiple pipelines, see the documentation:
# https://www.elastic.co/guide/en/logstash/current/multiple-pipelines.html

- pipeline.id: main
  path.config: "/usr/share/logstash/pipeline/logstash.conf"
```

logstash.conf

```
input {
  tcp {
    mode => "server"
    host => "0.0.0.0"
    port => 5044
    codec => json_lines      # one JSON object per line
  }
}

filter {
}

output {
  elasticsearch {
    hosts => ["192.168.198.128:9200"]   # Elasticsearch address
    index => "elk"                       # index name
  }
  # Also print every event to the container's stdout (visible with docker logs logstash)
  stdout {
    codec => rubydebug
  }
}
```
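Because the tcp input uses `codec => json_lines`, Logstash expects each event as a single JSON object terminated by a newline. Before wiring up Spring Boot, you can exercise this input with a few lines of Java that open a socket to port 5044 and write one such line. This is a minimal sketch under simplifying assumptions: the JSON is built by hand (no JSON library) and only string values are supported; the class name `JsonLinesSender` and the event fields are hypothetical.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical test client for a Logstash tcp input with the json_lines codec.
public class JsonLinesSender {

    // Escapes the characters that JSON does not allow raw inside a string value.
    static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c)); // other control characters
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    // Encodes one event as a single JSON object followed by '\n' (the json_lines framing).
    public static String toJsonLine(Map<String, String> fields) {
        StringBuilder sb = new StringBuilder("{");
        boolean first = true;
        for (Map.Entry<String, String> e : fields.entrySet()) {
            if (!first) {
                sb.append(',');
            }
            first = false;
            sb.append('"').append(escape(e.getKey())).append("\":\"")
              .append(escape(e.getValue())).append('"');
        }
        return sb.append("}\n").toString();
    }

    // Opens a TCP connection and writes one json_lines event.
    public static void send(String host, int port, Map<String, String> fields) throws IOException {
        try (Socket socket = new Socket(host, port)) {
            OutputStream out = socket.getOutputStream();
            out.write(toJsonLine(fields).getBytes(StandardCharsets.UTF_8));
            out.flush();
        }
    }

    public static void main(String[] args) throws IOException {
        Map<String, String> event = new LinkedHashMap<>();
        event.put("message", "hello from JsonLinesSender");
        event.put("appname", "App");
        // Host and port from this article's setup; adjust for your environment
        send("192.168.198.128", 5044, event);
    }
}
```

If the input works, the event should appear in the `rubydebug` output of `docker logs logstash`.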

Create and run the container

```shell
# Remove the temporary container created above first; otherwise the name "logstash" is already taken
docker rm -f logstash

docker run -it \
  --name logstash \
  --privileged=true \
  -p 5044:5044 \
  -p 9600:9600 \
  --network wn_docker_net \
  --ip 172.18.12.54 \
  -v /etc/localtime:/etc/localtime \
  -v /usr/local/software/elk/logstash/config:/usr/share/logstash/config \
  -v /usr/local/software/elk/logstash/data:/usr/share/logstash/data \
  -v /usr/local/software/elk/logstash/logs:/usr/share/logstash/logs \
  -v /usr/local/software/elk/logstash/pipeline:/usr/share/logstash/pipeline \
  -d logstash:7.17.7
```

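Because the bind mounts sometimes fail, an alternative is to create the container without the config and pipeline mounts and then copy the edited files into the container's config and pipeline directories: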

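```shell
docker run -it \
  --name logstash \
  --privileged=true \
  -p 5044:5044 \
  -p 9600:9600 \
  --network wn_docker_net \
  --ip 172.18.12.54 \
  -v /etc/localtime:/etc/localtime \
  -d logstash:7.17.7

# Copy the edited files in (run from /usr/local/software/elk/logstash), then restart so Logstash reads them
docker cp ./config/. logstash:/usr/share/logstash/config/
docker cp ./pipeline/. logstash:/usr/share/logstash/pipeline/
docker restart logstash
```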

- 9

Integrating Spring Boot with Logstash

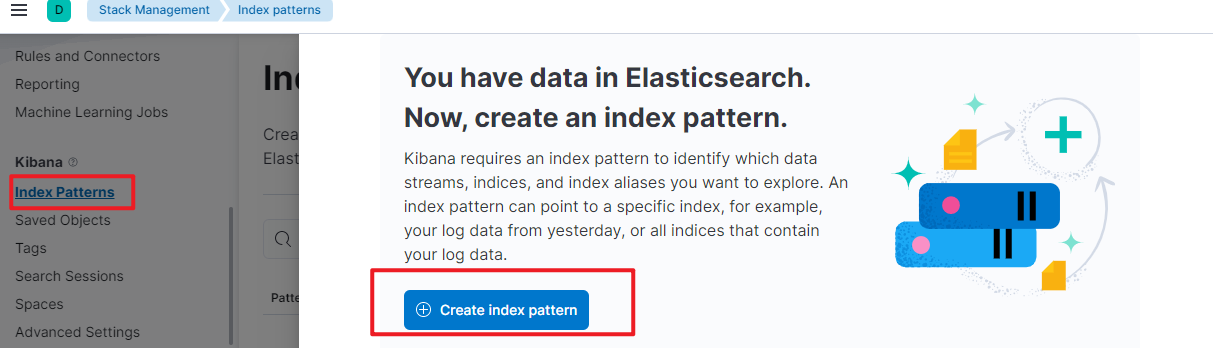

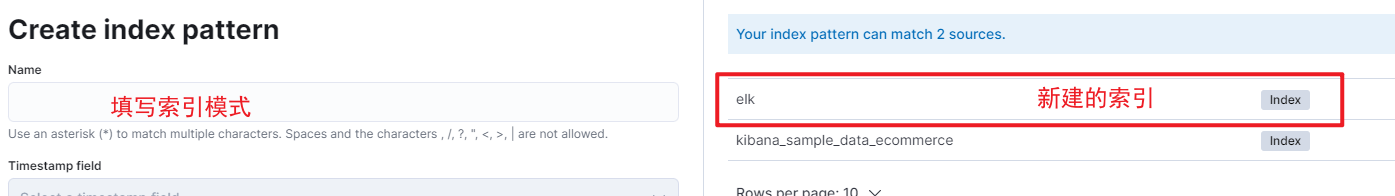

Creating the index in Kibana

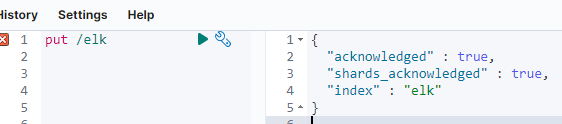

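Creating the index

The index name used in Kibana must match the `index` value in logstash.conf. Elasticsearch creates the index automatically when Logstash writes the first event to it, unless automatic index creation has been disabled.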

```
# The relevant part of logstash.conf
elasticsearch {
  hosts => ["192.168.198.128:9200"]   # Elasticsearch address
  index => "elk"                       # index name
}
```
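Before creating anything in Kibana, you can confirm that events actually reached the `elk` index by asking Elasticsearch for its document count with `GET /elk/_count`, which returns JSON such as `{"count":42,"_shards":{...}}`. The sketch below does this with `HttpURLConnection` and pulls the number out with a regular expression instead of a JSON library, for brevity; the class name `ElkIndexCount` is hypothetical, while the address and index name come from logstash.conf above.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: asks Elasticsearch how many documents an index holds via GET /<index>/_count.
public class ElkIndexCount {

    // Matches the top-level "count" field of a _count response
    private static final Pattern COUNT = Pattern.compile("\"count\"\\s*:\\s*(\\d+)");

    static long parseCount(String json) {
        Matcher m = COUNT.matcher(json);
        if (!m.find()) {
            throw new IllegalArgumentException("no count field in: " + json);
        }
        return Long.parseLong(m.group(1));
    }

    public static long count(String esBaseUrl, String index) throws IOException {
        URL url = new URL(esBaseUrl + "/" + index + "/_count");
        HttpURLConnection conn = (HttpURLConnection) url.openConnection();
        conn.setConnectTimeout(3000);
        conn.setReadTimeout(3000);
        try {
            int status = conn.getResponseCode();
            if (status != 200) {
                // A 404 usually means nothing has been written to this index yet
                throw new IOException("HTTP " + status + " from " + url);
            }
            try (InputStream in = conn.getInputStream()) {
                ByteArrayOutputStream buf = new ByteArrayOutputStream();
                byte[] chunk = new byte[4096];
                int n;
                while ((n = in.read(chunk)) != -1) {
                    buf.write(chunk, 0, n);
                }
                return parseCount(new String(buf.toByteArray(), StandardCharsets.UTF_8));
            }
        } finally {
            conn.disconnect();
        }
    }

    public static void main(String[] args) throws IOException {
        // Elasticsearch address and index name from this article's logstash.conf
        System.out.println("documents in elk: " + count("http://192.168.198.128:9200", "elk"));
    }
}
```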

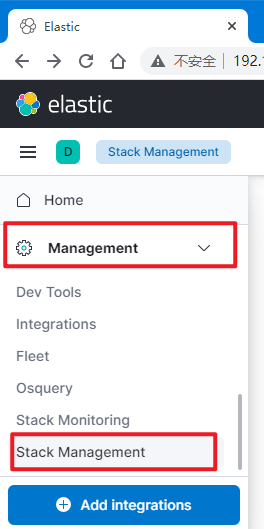

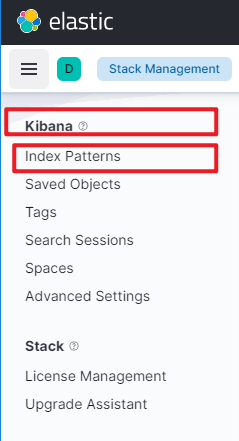

Creating an index pattern

From the menu, select Management → Stack Management and click Add integrations, then go to Kibana → Index Patterns.

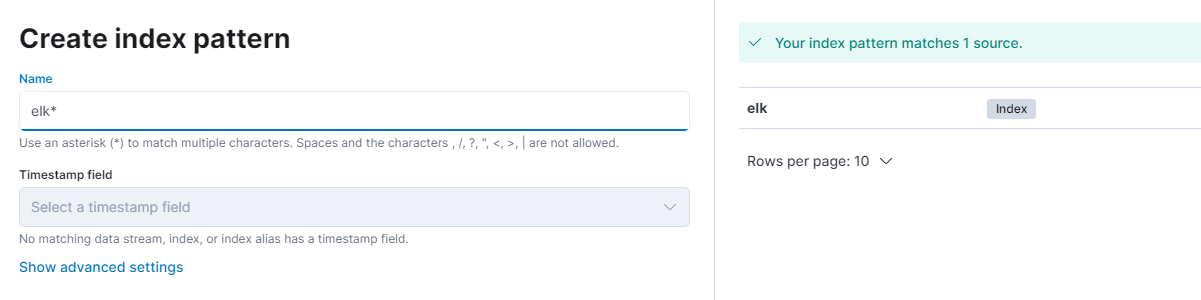

Spring Boot

Add the dependencies to pom.xml:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.wnhz.elk</groupId>
    <artifactId>springboot-elk</artifactId>
    <version>1.0-SNAPSHOT</version>

    <properties>
        <maven.compiler.source>8</maven.compiler.source>
        <maven.compiler.target>8</maven.compiler.target>
    </properties>

    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.5.14</version>
    </parent>

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
        </dependency>
        <dependency>
            <groupId>net.logstash.logback</groupId>
            <artifactId>logstash-logback-encoder</artifactId>
            <version>7.3</version>
        </dependency>
    </dependencies>
</project>
```

Edit the logback-spring.xml file (in src/main/resources):

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!-- Log levels from low to high: TRACE < DEBUG < INFO < WARN < ERROR. With level WARN, anything below WARN is not output. -->
<!-- scan: when true, the configuration file is reloaded whenever it changes. Default: false. -->
<!-- scanPeriod: how often to check the configuration file for changes; without a time unit the value is in milliseconds.
     Only effective when scan is true. Default: 1 minute. -->
<!-- debug: when true, logback prints its internal status messages so you can watch what it is doing. Default: false. -->
<configuration scan="true" scanPeriod="10 seconds">

    <!-- 1. Console output -->
    <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">
        <!-- Meant for development: only a minimum level is set; events at or above it are printed -->
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>DEBUG</level>
        </filter>
        <encoder>
            <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} -%5level ---[%15.15thread] %-40.40logger{39} : %msg%n</pattern>
            <!-- character set -->
            <charset>UTF-8</charset>
        </encoder>
    </appender>

    <!-- 2. File output -->
    <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <!-- RollingFileAppender needs a rolling policy; the file names below are example values -->
        <file>logs/springboot-elk.log</file>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>logs/springboot-elk.%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>7</maxHistory>
        </rollingPolicy>
        <append>true</append>
        <!-- log file output format -->
        <encoder>
            <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} -%5level ---[%15.15thread] %-40.40logger{39} : %msg%n</pattern>
            <charset>UTF-8</charset>
        </encoder>
    </appender>

    <!-- 3. Logstash output: JSON events over TCP to the Logstash tcp input -->
    <appender name="LOGSTASH" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <destination>192.168.198.128:5044</destination>
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder">
            <!-- custom timestamp format (the default is ISO-8601) -->
            <timestampPattern>yyyy-MM-dd HH:mm:ss</timestampPattern>
            <customFields>{"appname":"App"}</customFields>
        </encoder>
    </appender>

    <root level="DEBUG">
        <appender-ref ref="CONSOLE"/>
        <appender-ref ref="FILE"/>
        <appender-ref ref="LOGSTASH"/>
    </root>
</configuration>
```
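`LogstashTcpSocketAppender` with `LogstashEncoder` sends each log event as a JSON document terminated by a line separator, which is why the `json_lines` codec on the Logstash side can split the stream. If nothing shows up in Logstash, it can help to temporarily point `<destination>` at a throwaway listener and look at the raw lines. A minimal sketch of such a listener follows; the class name `LineListener` and the spare port 5045 are hypothetical.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical debugging aid: accepts one TCP client and collects the newline-delimited lines it sends.
public class LineListener {

    // Returns after maxLines lines or when the client closes the connection, whichever comes first.
    public static List<String> readLines(ServerSocket server, int maxLines) throws IOException {
        List<String> lines = new ArrayList<>();
        try (Socket client = server.accept();
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(client.getInputStream(), StandardCharsets.UTF_8))) {
            String line;
            while (lines.size() < maxLines && (line = in.readLine()) != null) {
                lines.add(line);
            }
        }
        return lines;
    }

    public static void main(String[] args) throws IOException {
        int port = args.length > 0 ? Integer.parseInt(args[0]) : 5045; // hypothetical spare port
        try (ServerSocket server = new ServerSocket(port)) {
            System.out.println("Listening on port " + port + "; point <destination> here");
            for (String line : readLines(server, 10)) {
                System.out.println(line); // each line should be one complete JSON event
            }
        }
    }
}
```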

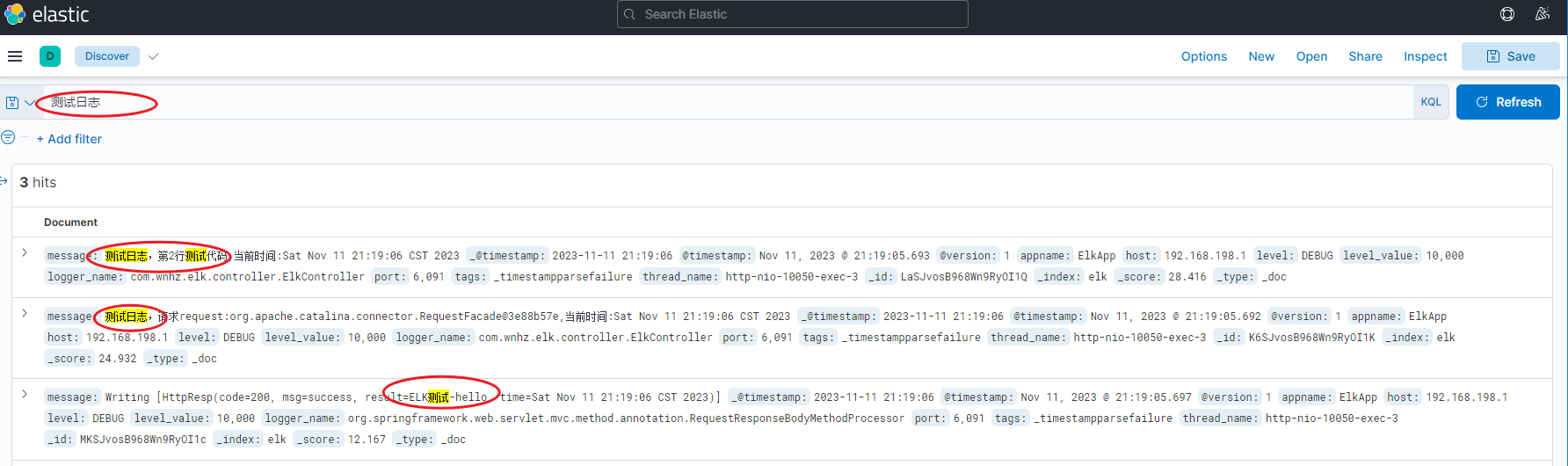

Testing with code

```java
package com.wnhz.elk.controller;

import lombok.extern.slf4j.Slf4j;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

import javax.servlet.http.HttpServletRequest;
import java.util.Date;

// Compiles with only the dependencies in the pom above. Swagger annotations such as
// @Api/@ApiOperation need an extra Swagger dependency; a custom response wrapper can replace String.
@RestController
@RequestMapping("/api/elk")
@Slf4j
public class ElkController {

    // GET /api/elk/elkHello: writes two DEBUG events, which the LOGSTASH appender ships to Logstash
    @GetMapping("/elkHello")
    public String elkHello(HttpServletRequest request) {
        log.debug("Test log, request: {}, current time: {}", request, new Date());
        log.debug("Test log, test line {}, current time: {}", 2, new Date());
        return "ELK test - hello";
    }
}
```
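To produce a few test events, call the endpoint several times; each successful call should emit two DEBUG log events. A minimal sketch with `HttpURLConnection`, assuming the application listens on Spring Boot's default port 8080 (no `server.port` is shown in this article); the class name `ElkHelloCaller` is hypothetical.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URL;

// Hypothetical helper: calls the test endpoint repeatedly to generate log events.
public class ElkHelloCaller {

    // Calls GET {baseUrl}/api/elk/elkHello `times` times and returns how many answered HTTP 200.
    public static int callRepeatedly(String baseUrl, int times) throws IOException {
        int ok = 0;
        for (int i = 0; i < times; i++) {
            HttpURLConnection conn =
                    (HttpURLConnection) new URL(baseUrl + "/api/elk/elkHello").openConnection();
            conn.setConnectTimeout(3000);
            conn.setReadTimeout(3000);
            try {
                if (conn.getResponseCode() == 200) {
                    ok++;
                }
            } finally {
                conn.disconnect();
            }
        }
        return ok;
    }

    public static void main(String[] args) throws IOException {
        // Assumes the Spring Boot app runs locally on the default port 8080
        int ok = callRepeatedly("http://localhost:8080", 5);
        System.out.println(ok + " of 5 calls succeeded");
    }
}
```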

Viewing the index in Kibana

