
Configuring a Mamba environment on Windows


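I have been studying AI recently and ran into trouble when trying to install Mamba, the model that has been all the rage lately. Following write-ups from various experts online, and after a good deal of effort, I finally compiled and installed the mamba package in Anaconda on Windows 11.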

I used Visual Studio 2019.

1. Create the Mamba environment on Windows:

conda create -n mamba python=3.10

conda activate mamba

Note: Mamba needs triton, but triton has no official Windows release. Someone has built a Windows version of triton 2.0.0, but only for Python 3.10, so the environment must use Python 3.10.

2. Install torch:

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121

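The torch package is very large and downloads slowly, so I downloaded it with the Xunlei (Thunder) download manager and then installed it.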

3. Install cmake

pip install cmake

4. Install triton

Download the Windows binary of triton 2.0.0 from https://hf-mirror.com/r4ziel/xformers_pre_built/blob/main/triton-2.0.0-cp310-cp310-win_amd64.whl and install it:

pip install D:\Downloads\triton-2.0.0-cp310-cp310-win_amd64.whl
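The Python 3.10 requirement is encoded in the wheel's filename. Under the wheel naming convention (PEP 427), the name is {distribution}-{version}(-{build})?-{python tag}-{abi tag}-{platform tag}.whl. Here `cp310-cp310-win_amd64` therefore means CPython 3.10, the CPython 3.10 ABI, and 64-bit Windows, and pip rejects a wheel whose tags do not match the running interpreter. The C++ sketch below only illustrates the convention and is not pip's own logic. `WheelTags`, `parse_wheel_name` and `targets_cpython` are hypothetical names. The parser expects a bare filename (no directory) and ignores compressed tag sets such as `py2.py3`.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Tags parsed from {distribution}-{version}(-{build})?-{python}-{abi}-{platform}.whl
struct WheelTags {
    std::string distribution;
    std::string version;
    std::string python_tag;    // e.g. "cp310" = CPython 3.10
    std::string abi_tag;       // e.g. "cp310" = CPython 3.10 ABI
    std::string platform_tag;  // e.g. "win_amd64" = 64-bit Windows
};

// Strips ".whl" and splits on '-'. Returns false unless there are 5 fields
// (no build tag) or 6 fields (with build tag).
bool parse_wheel_name(const std::string& filename, WheelTags& out) {
    const std::string suffix = ".whl";
    if (filename.size() <= suffix.size() ||
        filename.compare(filename.size() - suffix.size(), suffix.size(), suffix) != 0) {
        return false;
    }
    std::stringstream ss(filename.substr(0, filename.size() - suffix.size()));
    std::vector<std::string> parts;
    std::string field;
    while (std::getline(ss, field, '-')) parts.push_back(field);
    if (parts.size() != 5 && parts.size() != 6) return false;

    const std::size_t n = parts.size();
    out.distribution = parts[0];
    out.version = parts[1];
    out.python_tag = parts[n - 3];  // the last three fields are always the tags
    out.abi_tag = parts[n - 2];
    out.platform_tag = parts[n - 1];
    return true;
}

// True if both the interpreter tag and the ABI tag name the given CPython version.
bool targets_cpython(const WheelTags& tags, const std::string& cp_tag) {
    return tags.python_tag == cp_tag && tags.abi_tag == cp_tag;
}
```

Parsing `triton-2.0.0-cp310-cp310-win_amd64.whl` yields `cp310` for both tags and `win_amd64` as the platform. That is why this particular wheel only installs into a 64-bit Windows Python 3.10 environment.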

5. Download the mamba source code and install the dependencies

git clone https://github.com/state-spaces/mamba

Change into the mamba directory:

cd mamba

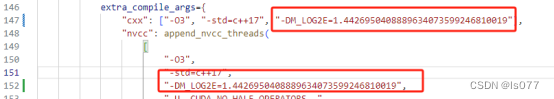

Edit setup.py and add the compile flag -DM_LOG2E=1.44269504.

Note: selective_scan_fwd_kernel.cuh and selective_scan_bwd_kernel.cuh use this predefined constant, but neither file defines it.
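M_LOG2E (log2 e ≈ 1.4427) is not part of standard C++. POSIX defines it in math.h, so Linux toolchains usually provide it. MSVC's <cmath>, however, only defines the M_* constants when _USE_MATH_DEFINES is defined before the header is first included. That is presumably why the Windows build needs the extra flag. The sketch below shows a portable guard that falls back to the same value the flag supplies. It also checks the identity exp(x) = 2^(x · log2 e), which is what makes a log2(e) constant useful in exp-heavy code. `exp_via_exp2` is a hypothetical helper for illustration, not a function from the mamba sources.

```cpp
// MSVC only defines M_LOG2E and the other M_* constants in <cmath>
// when _USE_MATH_DEFINES is defined before the first include.
#define _USE_MATH_DEFINES
#include <cassert>
#include <cmath>

// Fallback with the same value the setup.py flag passes (-DM_LOG2E=1.44269504).
#ifndef M_LOG2E
#define M_LOG2E 1.44269504
#endif

// exp(x) == 2^(x * log2(e)), and M_LOG2E is log2(e).
double exp_via_exp2(double x) {
    return std::exp2(x * M_LOG2E);
}
```

The truncated value 1.44269504 differs from log2 e by less than 1e-9. The resulting relative error in exp is far below single-precision epsilon (about 1.2e-7).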

In csrc\selective_scan\selective_scan_fwd_kernel.cuh, move the body of the innermost lambda in selective_scan_fwd_launch into a standalone function. Do the same for selective_scan_bwd_launch in csrc\selective_scan\selective_scan_bwd_kernel.cuh.

selective_scan_fwd_kernel.cuh:

```cpp
// Former body of the innermost lambda in selective_scan_fwd_launch, now a standalone
// function template; kNRows is passed in as a template parameter.
template<int kNThreads, int kNItems, int kNRows, bool kIsEvenLen, bool kIsVariableB, bool kIsVariableC, bool kHasZ, typename input_t, typename weight_t>
void selective_scan_fwd_call(SSMParamsBase &params, cudaStream_t stream) {
    using Ktraits = Selective_Scan_fwd_kernel_traits<kNThreads, kNItems, kNRows, kIsEvenLen, kIsVariableB, kIsVariableC, kHasZ, input_t, weight_t>;
    // constexpr int kSmemSize = Ktraits::kSmemSize;
    constexpr int kSmemSize = Ktraits::kSmemSize + kNRows * MAX_DSTATE * sizeof(typename Ktraits::scan_t);
    // printf("smem_size = %d\n", kSmemSize);
    dim3 grid(params.batch, params.dim / kNRows);
    auto kernel = &selective_scan_fwd_kernel<Ktraits>;
    // Dynamic shared memory above 48 KB requires an explicit opt-in.
    if (kSmemSize >= 48 * 1024) {
        C10_CUDA_CHECK(cudaFuncSetAttribute(
            kernel, cudaFuncAttributeMaxDynamicSharedMemorySize, kSmemSize));
    }
    kernel<<<grid, Ktraits::kNThreads, kSmemSize, stream>>>(params);
    C10_CUDA_KERNEL_LAUNCH_CHECK();
}

template<int kNThreads, int kNItems, typename input_t, typename weight_t>
void selective_scan_fwd_launch(SSMParamsBase &params, cudaStream_t stream) {
    // Only kNRows == 1 is tested for now, which ofc doesn't differ from previously when we had each block
    // processing 1 row.
    BOOL_SWITCH(params.seqlen % (kNThreads * kNItems) == 0, kIsEvenLen, [&] {
        BOOL_SWITCH(params.is_variable_B, kIsVariableB, [&] {
            BOOL_SWITCH(params.is_variable_C, kIsVariableC, [&] {
                BOOL_SWITCH(params.z_ptr != nullptr , kHasZ, [&] {
                    // The innermost lambda now only forwards to the extracted function (kNRows = 1).
                    selective_scan_fwd_call<kNThreads, kNItems, 1, kIsEvenLen, kIsVariableB, kIsVariableC, kHasZ, input_t, weight_t>(params, stream);
                });
            });
        });
    });
}
```

selective_scan_bwd_kernel.cuh:

```cpp
// Former body of the innermost lambda in selective_scan_bwd_launch, now a standalone function template.
template<int kNThreads, int kNItems, bool kIsEvenLen, bool kIsVariableB, bool kIsVariableC, bool kDeltaSoftplus, bool kHasZ, typename input_t, typename weight_t>
void selective_scan_bwd_call(SSMParamsBwd &params, cudaStream_t stream) {
    using Ktraits = Selective_Scan_bwd_kernel_traits<kNThreads, kNItems, kIsEvenLen, kIsVariableB, kIsVariableC, kDeltaSoftplus, kHasZ, input_t, weight_t>;
    // using Ktraits = Selective_Scan_bwd_kernel_traits<kNThreads, kNItems, true, kIsVariableB, kIsVariableC, kDeltaSoftplus, kHasZ, input_t, weight_t>;
    // TODO: check this
    constexpr int kSmemSize = Ktraits::kSmemSize + MAX_DSTATE * sizeof(typename Ktraits::scan_t) + (kNThreads + 4 * MAX_DSTATE) * sizeof(typename Ktraits::weight_t);
    // printf("smem_size = %d\n", kSmemSize);
    dim3 grid(params.batch, params.dim);
    auto kernel = &selective_scan_bwd_kernel<Ktraits>;
    // Dynamic shared memory above 48 KB requires an explicit opt-in.
    if (kSmemSize >= 48 * 1024) {
        C10_CUDA_CHECK(cudaFuncSetAttribute(
            kernel, cudaFuncAttributeMaxDynamicSharedMemorySize, kSmemSize));
    }
    kernel<<<grid, Ktraits::kNThreads, kSmemSize, stream>>>(params);
    C10_CUDA_KERNEL_LAUNCH_CHECK();
}

template<int kNThreads, int kNItems, typename input_t, typename weight_t>
void selective_scan_bwd_launch(SSMParamsBwd &params, cudaStream_t stream) {
    BOOL_SWITCH(params.seqlen % (kNThreads * kNItems) == 0, kIsEvenLen, [&] {
        BOOL_SWITCH(params.is_variable_B, kIsVariableB, [&] {
            BOOL_SWITCH(params.is_variable_C, kIsVariableC, [&] {
                BOOL_SWITCH(params.delta_softplus, kDeltaSoftplus, [&] {
                    BOOL_SWITCH(params.z_ptr != nullptr , kHasZ, [&] {
                        // The innermost lambda now only forwards to the extracted function.
                        selective_scan_bwd_call<kNThreads, kNItems, kIsEvenLen, kIsVariableB, kIsVariableC, kDeltaSoftplus, kHasZ, input_t, weight_t>(params, stream);
                    });
                });
            });
        });
    });
}
```

Note: my guess is that the macros are nested too deeply, so the expanded code exceeds what the compiler can handle.
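Below is a minimal, CUDA-free sketch of the refactor described above. The `BOOL_SWITCH` macro here is a simplified stand-in written for illustration, modeled on how the kernels use it. It turns a runtime bool into a `constexpr bool` that can be used as a template argument, then invokes the lambda passed through `__VA_ARGS__`. `scan_call` and `scan_launch` are hypothetical stand-ins for the `*_call`/`*_launch` pair. They show that after extraction each lambda body shrinks to a single call, while the dispatch itself is unchanged.

```cpp
#include <cassert>

// Simplified BOOL_SWITCH: the lambda is taken as __VA_ARGS__ so that commas
// inside it (e.g. in template argument lists) do not split the macro arguments.
#define BOOL_SWITCH(COND, CONST_NAME, ...)        \
    [&] {                                         \
        if (COND) {                               \
            constexpr bool CONST_NAME = true;     \
            return __VA_ARGS__();                 \
        } else {                                  \
            constexpr bool CONST_NAME = false;    \
            return __VA_ARGS__();                 \
        }                                         \
    }()

// Stand-in for the extracted function: the former innermost lambda body.
// It encodes which instantiation ran as bits so the caller can observe it.
template <bool kIsEvenLen, bool kIsVariableB, bool kHasZ>
int scan_call() {
    return (kIsEvenLen ? 4 : 0) | (kIsVariableB ? 2 : 0) | (kHasZ ? 1 : 0);
}

// Stand-in for the launcher: three runtime flags select one of 2^3 = 8 instantiations.
int scan_launch(bool even_len, bool variable_b, bool has_z) {
    return BOOL_SWITCH(even_len, kIsEvenLen, [&] {
        return BOOL_SWITCH(variable_b, kIsVariableB, [&] {
            return BOOL_SWITCH(has_z, kHasZ, [&] {
                return scan_call<kIsEvenLen, kIsVariableB, kHasZ>();
            });
        });
    });
}
```

Each additional switch doubles the number of instantiations. With five switches, the backward launcher generates 2^5 = 32 variants per combination of kNThreads, kNItems and the input/weight types. This makes it plausible that the fully inlined lambda version hits a compiler limit.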

Set the environment variable:

set MAMBA_FORCE_BUILD=TRUE

pip install .

The build and installation succeeded. A 422 MB file, selective_scan_cuda.cp310-win_amd64.pyd, appeared in the virtual environment's Lib\site-packages directory.

6. Install causal-conv1d (optional)

git clone https://github.com/Dao-AILab/causal-conv1d.git

cd causal-conv1d

set CAUSAL_CONV1D_FORCE_BUILD=TRUE

Open csrc\causal_conv1d.cpp:

On lines 159, 277 and 278, change "and" to "&&" in the conditions.
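Standard C++ accepts `and` as an alternative token for `&&`. MSVC, however, only recognizes it under /permissive- or /Za, or after including <ciso646>. That is presumably why these lines fail with the default flags used for this build. The sketch below shows the portable spelling. `both_unit_stride` is a hypothetical condition for illustration, not the actual code on those lines.

```cpp
#include <cassert>

// Hypothetical condition in the style of the edited checks.
// `&&` compiles on every compiler; `and` needs /permissive- (or <ciso646>) on MSVC.
bool both_unit_stride(long stride_a, long stride_b) {
    return stride_a == 1 && stride_b == 1;  // was: stride_a == 1 and stride_b == 1
}
```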

pip install .

The build and installation succeeded. A 157 MB file, causal_conv1d_cuda.cp310-win_amd64.pyd, appeared in the virtual environment's Lib\site-packages directory.

Notes:

If the build fails with the following error:

subprocess.CalledProcessError: Command '['ninja', '-v']' returned non-zero exit status 1.

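this actually means an error occurred while ninja was compiling.

After a failed build the build directory is deleted, so every retry starts from scratch and takes a long time.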

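To keep the build directory, I commented out the call_subprocess call on line 285 of D:\anaconda3\envs\mamba\Lib\site-packages\pip\_internal\wheel_builder.py.

Then I ran cd build\temp.win-amd64-cpython-310\Release to get to the directory containing build.ninja.

Running ninja there directly reproduces the error much faster.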