热门标签

热门文章

- 1uniapp 简易通用的ajax 请求 封装 以及携带token去请求 (代码优化(新增请求日志和响应日志))_uniapp使用ajax

- 2AIGC盛行,带你轻松调用开发_aigc 有开放的api调用吗

- 3ChatGLM2本地部署的实战方案

- 4adb环境变量创建-----为什么“adb”不是内部或外部命令,也不是可运行的程序或批处理文件_win11adb不是内部或外部命令,也不是可运行的程序

- 5Delphi 抓图小插件_抓图插件

- 6Android — 深色主题适配_android深色模式适配

- 7Common 7B Language Models Already Possess Strong Math Capabilities

- 8[C++] 第三方开源csv解析库介绍和使用_c++解析csv第三方库

- 9JS实现UTF8编解码及Base64编解码_js base64 utf8

- 10双N卡完美运行ChatGLM3_chatglm3 多卡

当前位置: article > 正文

基于django的协同过滤食谱推荐系统、python_基于协同过滤算法的食谱推荐

作者:Cpp五条 | 2024-03-16 18:48:42

赞

踩

基于协同过滤算法的食谱推荐

1.内容简介

所用技术:scrapy、django、协同过滤

2.过程

2.1爬虫

使用scrapy爬取https://www.douguo.com网站的食谱数据

主要代码:

- # 在 parse 方法中获取下一页链接并发送请求

- def parse(self, response):

- # 获取当前页面的菜谱详情链接并发送请求

- for url in response.xpath('//ul[@class="cook-list"]//a[@class="cook-img"]/@href').getall():

- pipei_url = re.sub("/0.*", "", response.url)

- tag_id = self.url_tag[unquote(pipei_url)][0]

- tag_name = self.url_tag[unquote(pipei_url)][1]

- m = {"tag_id": tag_id, "tag_name": tag_name}

- yield scrapy.Request(url=self.url_root + url, callback=self.parse_detail, meta=m)

-

- # 解析出下一页链接并发送请求

- next_page = response.xpath('//a[@class="anext"]/@href')

- if next_page:

- yield scrapy.Request(url=next_page[0].get().replace("http", "https"), callback=self.parse)

-

- def md5_encrypt(self, s):

- md5 = hashlib.md5()

- md5.update(s.encode("utf-8"))

- return md5.hexdigest()

-

- def parse_detail(self, response, **kwargs):

- tag_id = response.meta["tag_id"]

- tag_name = response.meta["tag_name"]

- divs = response.xpath('//div[@class="step"]/div')

- title = response.xpath("//h1/text()").get()

-

- # 步骤

- step_list = []

- for div in divs:

- step_img = div.xpath("a/img//@src").get()

- step_index = div.xpath('div[@class="stepinfo"]/p/text()').get()

- step_text = "\n".join(div.xpath('div[@class="stepinfo"]/text()').getall()).strip()

- step_list.append([step_img, step_index, step_text])

-

- # 配料

- mix_list = []

- for td in response.xpath("//table/tr/td"):

- mix_name = td.xpath('span[@class="scname"]//text()').get()

- mix_cot = td.xpath('span[@class="right scnum"]//text()').get()

- mix_list.append([mix_name, mix_cot])

-

- info_item = FoodInfoItem(

- tag_id=tag_id,

- tag_name=tag_name,

- food_id=self.md5_encrypt(response.url),

- food_url=response.url,

- title=title,

- step_list=str(step_list),

- img=response.xpath('//*[@id="banner"]/a/img/@src').get(),

- desc1="\n".join(response.xpath('//p[@class="intro"]/text()').getall()).strip(),

- mix_list=str(mix_list),

- all_key=tag_name + title + str(mix_list),

- )

- yield info_item

2.2django展示

2.2.1主页展示(协同过滤推荐)

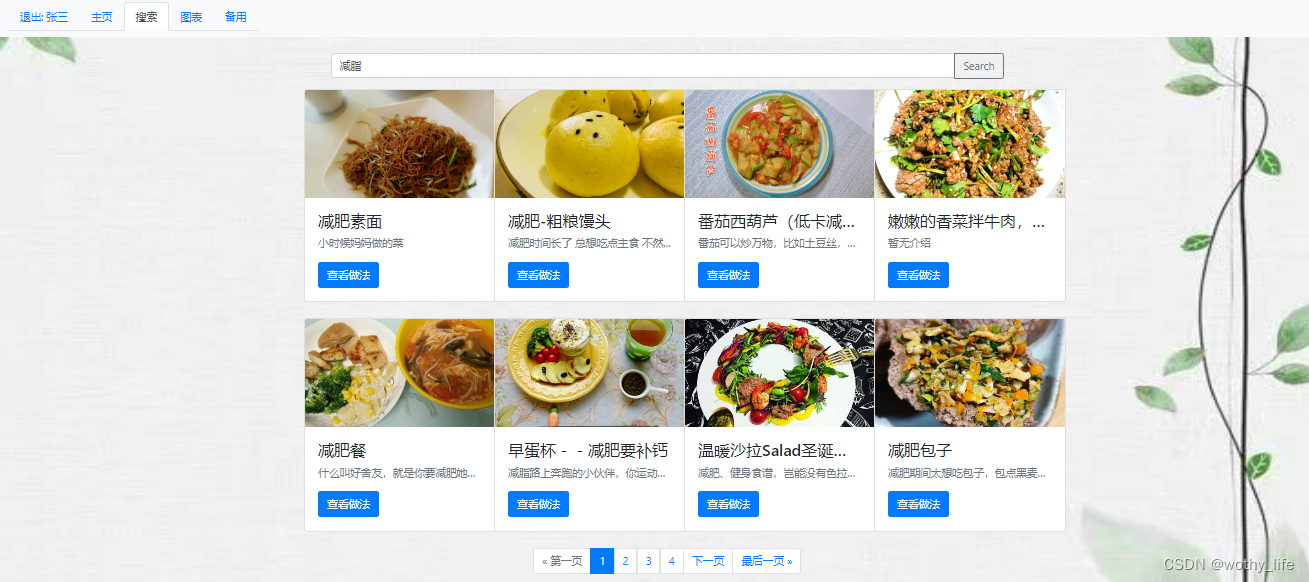

2.2.2搜索功能

2.2.3 饮食营养构成

2.3协同过滤推荐

基于用户的协同过滤推荐算法

- def recommend_by_user_cf(user_id, similarity_matrix, N=10):

- """

- 基于用户的协同过滤推荐算法

- Args:

- user_id: 目标用户ID

- similarity_matrix: 用户之间的相似度矩阵

- records: 所有用户的点菜记录,格式为 [(user_id, food_id, eat_date), ...]

- N: 推荐菜品数量

- Returns:

- recommended_foods: 推荐的菜品列表,格式为 [(food_id_1, score_1), (food_id_2, score_2), ...]

- """

- # 找到和指定用户吃过相同菜品的其他用户

- user_eating_records = EatingRecord.objects.filter(user_id=user_id).values("food_id", "eat_date")

- user_food_ids = [record["food_id"] for record in user_eating_records]

- similar_users = []

- for i in range(similarity_matrix.shape[0]):

- if i != user_id - 1:

- similarity = similarity_matrix[user_id - 1, i]

- if similarity > 0:

- # 找到该相似用户在最近M天内吃过的所有菜品

- similar_user_eating_records = (

- EatingRecord.objects.filter(user_id=i + 1).exclude(food_id__in=user_food_ids).values("food_id", "eat_date").order_by("-eat_date")[:10]

- )

- similar_user_food_ids = [record["food_id"] for record in similar_user_eating_records]

-

- # 计算该相似用户与指定用户之间的相似度,并加入相似用户列表中

- similar_users.append((i + 1, similarity, similar_user_food_ids))

-

- # 统计所有相似用户对每个菜品的兴趣度得分

- scores = {}

- for similar_user_id, similarity, similar_user_food_ids in similar_users:

- for food_id in similar_user_food_ids:

- if food_id not in user_food_ids:

- scores[food_id] = scores.get(food_id, 0) + similarity

-

- # 按照得分从高到低排序,选取前N个菜品作为推荐结果

- sorted_scores = sorted(scores.items(), key=lambda x: x[1], reverse=True)

- recommended_foods = sorted_scores[:N]

-

- return recommended_foods

python 毕设帮助,指导,源码分享,调试部署:worthy_life_

声明:本文内容由网友自发贡献,不代表【wpsshop博客】立场,版权归原作者所有,本站不承担相应法律责任。如您发现有侵权的内容,请联系我们。转载请注明出处:https://www.wpsshop.cn/w/Cpp五条/article/detail/251417

推荐阅读

相关标签