- 1Git操作_gerrit 撤销提交

- 2决策树ID3、C4.5、CART、随机森林、bagging、boosting、Adaboost、GBDT、xgboost_bagging与id3 c4.5 cart的关系

- 3MYSQL多表查询_多个表连接查询

- 4过期域名

- 5自然语言处理(NLP)—— 信息提取与文档分类_nlp文本信息提取

- 6tomcat跨域问题CORS踩坑点_tomcat设置跨域访问

- 7史上最全VPS+云服务器运维面板汇总(收藏)_vps管理面板

- 8基于Yolov5的车流量检测系统_yolov5 车流量检测

- 9yolov8改进-添加Wise-IoU,yolov8损失改进_wise-iou损失函数结合yolov8

- 10为什么2024年Java 就业一地鸡毛,找工作这么难找?

Hive基础知识(十五):Hive中SQL排序方式全解_hive sql 排序

赞

踩

1. 全局排序(Order By)

Order By:全局排序,只有一个 Reducer

1)使用 ORDER BY 子句排序

ASC(ascend): 升序(默认)

DESC(descend): 降序

2)ORDER BY 子句在 SELECT 语句的结尾

3)案例实操

(1)查询员工信息按工资升序排列

- hive (hive3)> select e.ename,e.sal from emp e order by e.sal;

- e.ename e.sal

- KING NULL

- SMITH 800.0

- JAMES 950.0

- ADAMS 1100.0

- WARD 1250.0

- MARTIN 1250.0

- MILLER 1300.0

- TURNER 1500.0

- ALLEN 1600.0

- CLARK 2450.0

- BLAKE 2850.0

- JONES 2975.0

- SCOTT 3000.0

- FORD 3000.0

- Time taken: 4.87 seconds, Fetched: 14 row(s)

(2)查询员工信息按工资降序排列

- hive (hive3)> select e.ename,e.sal from emp e order by e.sal desc;

- e.ename e.sal

- FORD 3000.0

- SCOTT 3000.0

- JONES 2975.0

- BLAKE 2850.0

- CLARK 2450.0

- ALLEN 1600.0

- TURNER 1500.0

- MILLER 1300.0

- MARTIN 1250.0

- WARD 1250.0

- ADAMS 1100.0

- JAMES 950.0

- SMITH 800.0

- KING NULL

- Time taken: 2.08 seconds, Fetched: 14 row(s)

2. 按照别名排序

按照员工薪水的2 倍排序

hive (hive3)> select ename, sal*2 twosal from emp order by twosal;3. 多个列排序

按照部门和工资升序排序

hive (hive3)> select e.deptno,e.sal,e.ename from emp e order by deptno, sal;4. 每个 Reduce 内部排序(Sort By)重点

Sort By:对于大规模的数据集 order by 的效率非常低。在很多情况下,并不需要全局排序,此时可以使用 sort by。

Sort by 为每个 reducer 产生一个排序文件。每个 Reducer 内部进行排序,对全局结果集来说不是排序。

1)设置 reduce 个数

hive (default)> set mapreduce.job.reduces=3;2)查看设置 reduce 个数

hive (default)> set mapreduce.job.reduces;3)根据部门编号降序查看员工信息

- hive (hive3)> select e.ename,e.deptno from emp e sort by deptno desc;

- e.ename e.deptno

- TURNER 30

- BLAKE 30

- MARTIN 30

- SCOTT 20

- CLARK 10

- KING NULL

- WARD 30

- ALLEN 30

- JAMES 30

- ADAMS 20

- JONES 20

- MILLER 10

- FORD 20

- SMITH 20

- Time taken: 34.805 seconds, Fetched: 14 row(s)

上面的数据整体上看上去不是按照排序来排序的,是因为他们是在3个MR中进行的内部排序,当全部合拢在一起时又不是排序的了。

4)将查询结果导入到文件中(按照部门编号降序排序)

- hive (hive3)> insert overwrite local directory '/home/zzdq/sortby-result' select e.ename,e.deptno from emp e sort by deptno desc;

- Query ID = zzdq_20211219114405_5c926e59-440c-4eea-b11d-51df3b88c7ba

- Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 3

- 2021-12-19 11:44:16,622 Stage-1 map = 0%, reduce = 0%

- 2021-12-19 11:44:24,137 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.03 sec

- 2021-12-19 11:44:33,876 Stage-1 map = 100%, reduce = 33%, Cumulative CPU 4.64 sec

- 2021-12-19 11:44:34,975 Stage-1 map = 100%, reduce = 67%, Cumulative CPU 6.98 sec

- 2021-12-19 11:44:38,148 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 8.97 sec

- MapReduce Total cumulative CPU time: 8 seconds 970 msec

- Ended Job = job_1639880318289_0004

- Moving data to local directory /home/atguigu/sortby-result

- MapReduce Jobs Launched:

- Stage-Stage-1: Map: 1 Reduce: 3 Cumulative CPU: 8.97 sec HDFS Read: 21354 HDFS Write: 126 SUCCESS

- Total MapReduce CPU Time Spent: 8 seconds 970 msec

- OK

- e.ename e.deptno

- Time taken: 34.73 seconds

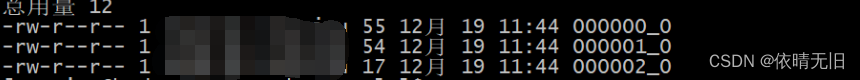

查看生成的文件:

查看文件:

- [zzdq@hadoop100 sortby-result]$ cat 000000_0 -n

- 1 TURNER•30

- 2 BLAKE•30

- 3 MARTIN•30

- 4 SCOTT•20

- 5 CLARK•10

- 6 KING•\N

- [zzdq@hadoop100 sortby-result]$ cat 000001_0 -n

- 1 WARD•30

- 2 ALLEN•30

- 3 JAMES•30

- 4 ADAMS•20

- 5 JONES•20

- 6 MILLER•10

- [zzdq@hadoop100 sortby-result]$ cat 000002_0 -n

- 1 FORD•20

- 2 SMITH•20

- [zzdq@hadoop100 sortby-result]$

可以看到,在文件内部的数据是有序的,也就是进行过排序了。排序过程中,数据是随机拿出来排序的,这是为了防止数据倾斜。

5. 分区(Distribute By)

Distribute By:在有些情况下,我们需要控制某个特定行应该到哪个 reducer,通常是为了进行后续的聚集操作。distribute by 子句可以做这件事。distribute by 类似 MR 中 partition (自定义分区),进行分区,结合 sort by 使用。

对于 distribute by 进行测试,一定要分配多 reduce 进行处理,否则无法看到 distribute by 的效果。

1)案例实操:

(1)先按照部门编号分区,再按照员工编号降序排序。

- hive (hive3)> select deptno,ename,sal from emp distribute by deptno sort by sal desc;

- 2021-12-19 11:53:46,343 Stage-1 map = 0%, reduce = 0%

- 2021-12-19 11:53:56,877 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 3.35 sec

- 2021-12-19 11:54:07,429 Stage-1 map = 100%, reduce = 33%, Cumulative CPU 5.83 sec

- 2021-12-19 11:54:08,484 Stage-1 map = 100%, reduce = 67%, Cumulative CPU 8.31 sec

- 2021-12-19 11:54:09,519 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 11.05 sec

- MapReduce Total cumulative CPU time: 11 seconds 50 msec

- Ended Job = job_1639880318289_0005

- MapReduce Jobs Launched:

- Stage-Stage-1: Map: 1 Reduce: 3 Cumulative CPU: 11.05 sec HDFS Read: 23443 HDFS Write: 647 SUCCESS

- Total MapReduce CPU Time Spent: 11 seconds 50 msec

- OK

- deptno ename sal

- 30 BLAKE 2850.0

- 30 ALLEN 1600.0

- 30 TURNER 1500.0

- 30 WARD 1250.0

- 30 MARTIN 1250.0

- 30 JAMES 950.0

- NULL KING NULL

- 10 CLARK 2450.0

- 10 MILLER 1300.0

- 20 SCOTT 3000.0

- 20 FORD 3000.0

- 20 JONES 2975.0

- 20 ADAMS 1100.0

- 20 SMITH 800.0

- Time taken: 36.518 seconds, Fetched: 14 row(s)

同样可以输出到本地来进行查看

- hive (hive3)> set mapreduce.job.reduces=3;

- hive (hive3)> insert overwrite local directory '/home/zzdq/distribute-result' select deptno,ename,sal from emp distribute by deptno sort by sal desc;

使用多个reduce时,hive会退出本地模式

Cannot run job locally: Number of reducers (= 3) is more than 1注意:

➢ distribute by 的分区规则是根据分区字段的 hash 码与 reduce 的个数进行模除后,余数相同的分到一个区。

➢ Hive 要求 DISTRIBUTE BY 语句要写在 SORT BY 语句之前。

6. Cluster By(上面两个结合)

当 distribute by 和 sorts by 字段相同时,可以使用 cluster by 方式。

cluster by 除了具有 distribute by 的功能外还兼具 sort by 的功能。缺点:但是排序只能是升序排序,不能指定排序规则为 ASC 或者 DESC。

(1)以下两种写法等价

- hive (default)> select * from emp cluster by deptno;

- hive (default)> select * from emp distribute by deptno sort by deptno;

注意:按照部门编号分区,不一定就是固定死的数值,可以是20 号和30 号部门分到一个分区里面去。