Coggle数据科学 | Streamlit + LangChain: WYSIWYG Question Answering


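This article comes from the WeChat official account "Coggle数据科学" and is shared for academic purposes only; it will be removed on request if it infringes any rights.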

Original article: Streamlit + LangChain: WYSIWYG Question Answering

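LangChain is a framework for developing applications powered by language models. Beyond calling a language model through an API, it provides data awareness, agentic behavior, and a set of supporting modules, so developers can build more capable and flexible applications.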

https://github.com/langchain-ai/streamlit-agent

This article walks through several LangChain agent reference implementations from that repository, each presented as a Streamlit app.

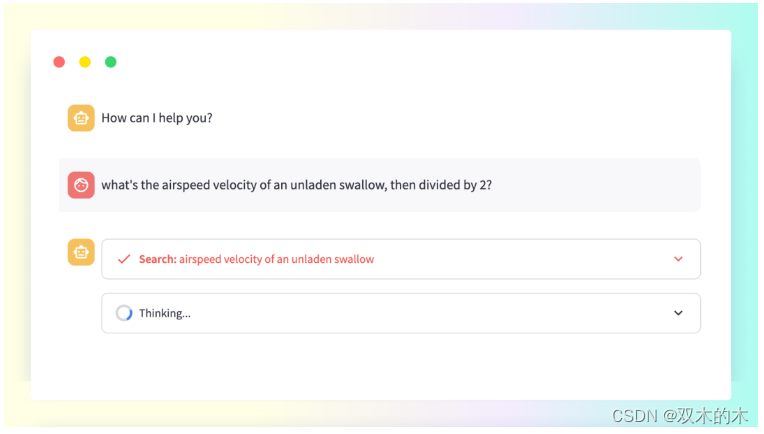

1 Basic example

https://github.com/langchain-ai/streamlit-agent/blob/main/streamlit_agent/minimal_agent.py

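- Import the relevant LangChain modules and the Streamlit library.

- Create an OpenAI language model (LLM) object with the temperature set to 0 and streaming enabled, then load the `ddg-search` tool and build a zero-shot ReAct agent from them.

- In the Streamlit app, read the user's text with st.chat_input() once they submit it, run the agent on it, and write the response into the assistant chat message.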

```python
from langchain.llms import OpenAI
from langchain.agents import AgentType, initialize_agent, load_tools
from langchain.callbacks import StreamlitCallbackHandler
import streamlit as st

# temperature=0 keeps answers as deterministic as possible; streaming=True emits tokens as they arrive
llm = OpenAI(temperature=0, streaming=True)
tools = load_tools(["ddg-search"])
agent = initialize_agent(
    tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True
)

# st.chat_input() returns None until the user submits a message
if prompt := st.chat_input():
    st.chat_message("user").write(prompt)
    with st.chat_message("assistant"):
        # The callback handler renders the agent's intermediate steps inside the container
        st_callback = StreamlitCallbackHandler(st.container())
        response = agent.run(prompt, callbacks=[st_callback])
        st.write(response)
```

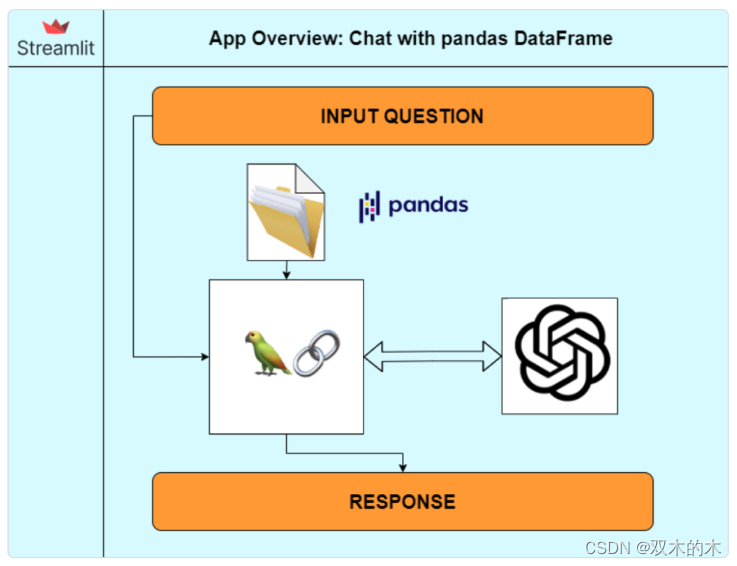

2 Interacting with a DataFrame

https://github.com/langchain-ai/streamlit-agent/blob/main/streamlit_agent/chat_pandas_df.py

- Upload a data file and read its contents into a DataFrame.

- Create an OpenAI LLM.

- Create an agent that interacts with the DataFrame.

```python
# Excerpt: the imports and the helpers file_formats, clear_submit, and load_data
# are defined in the linked chat_pandas_df.py.
uploaded_file = st.file_uploader(
    "Upload a Data file",
    type=list(file_formats.keys()),
    help="Various file formats are supported",
    on_change=clear_submit,
)

if uploaded_file:
    df = load_data(uploaded_file)

openai_api_key = st.sidebar.text_input("OpenAI API Key", type="password")
# Seed the chat history on first run, or reset it when the sidebar button is clicked
if "messages" not in st.session_state or st.sidebar.button("Clear conversation history"):
    st.session_state["messages"] = [{"role": "assistant", "content": "How can I help you?"}]

# Replay the stored conversation on every rerun
for msg in st.session_state.messages:
    st.chat_message(msg["role"]).write(msg["content"])

if prompt := st.chat_input(placeholder="What is this data about?"):
    st.session_state.messages.append({"role": "user", "content": prompt})
    st.chat_message("user").write(prompt)

    if not openai_api_key:
        st.info("Please add your OpenAI API key to continue.")
        st.stop()

    llm = ChatOpenAI(
        temperature=0, model="gpt-3.5-turbo-0613", openai_api_key=openai_api_key, streaming=True
    )

    pandas_df_agent = create_pandas_dataframe_agent(
        llm,
        df,
        verbose=True,
        agent_type=AgentType.OPENAI_FUNCTIONS,
        handle_parsing_errors=True,
    )

    with st.chat_message("assistant"):
        st_cb = StreamlitCallbackHandler(st.container(), expand_new_thoughts=False)
        response = pandas_df_agent.run(st.session_state.messages, callbacks=[st_cb])
        st.session_state.messages.append({"role": "assistant", "content": response})
        st.write(response)
```
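The chat-history handling in the excerpt above can be looked at in isolation. The following is a minimal sketch, not the repository's code: a plain dict stands in for `st.session_state`, and the helper names `ensure_history` and `record_turn` are made up for illustration. It only shows how the history is seeded, kept across reruns, and reset.

```python
# Stand-in for st.session_state (assumption): Streamlit keeps this mapping alive across reruns.
session_state: dict = {}

GREETING = {"role": "assistant", "content": "How can I help you?"}


def ensure_history(state: dict, clear_clicked: bool = False) -> list:
    """Seed the history on first run, or reset it when the clear button is clicked."""
    if "messages" not in state or clear_clicked:
        state["messages"] = [dict(GREETING)]
    return state["messages"]


def record_turn(state: dict, user_text: str, reply: str) -> None:
    """Append one user/assistant exchange, mirroring the two append calls in the excerpt."""
    state["messages"].append({"role": "user", "content": user_text})
    state["messages"].append({"role": "assistant", "content": reply})


# First "rerun": the history is seeded with the greeting, then one exchange is stored.
ensure_history(session_state)
record_turn(session_state, "What is this data about?", "(agent reply goes here)")

# Second "rerun": the key already exists, so the history survives.
history = ensure_history(session_state)
print(len(history))  # 3

# Clicking the clear button resets the history to the greeting only.
history = ensure_history(session_state, clear_clicked=True)
print(len(history))  # 1
```

Because Streamlit re-executes the script from top to bottom on every interaction, anything not stored in the session state is lost between turns, which is why the excerpt replays the stored messages before handling new input.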

3 Interacting with documents

https://github.com/langchain-ai/streamlit-agent/blob/main/streamlit_agent/chat_with_documents.py

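- Upload PDF documents and build a retriever over their contents.

- Create an OpenAI LLM.

- Create the conversational retrieval chain that answers questions about the documents.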

```python
# Excerpt: the imports, openai_api_key, configure_retriever, PrintRetrievalHandler,
# and StreamHandler are defined in the linked chat_with_documents.py.
uploaded_files = st.sidebar.file_uploader(
    label="Upload PDF files", type=["pdf"], accept_multiple_files=True
)

retriever = configure_retriever(uploaded_files)

# Setup memory for contextual conversation
msgs = StreamlitChatMessageHistory()
memory = ConversationBufferMemory(memory_key="chat_history", chat_memory=msgs, return_messages=True)

# Setup LLM and QA chain
llm = ChatOpenAI(
    model_name="gpt-3.5-turbo", openai_api_key=openai_api_key, temperature=0, streaming=True
)
qa_chain = ConversationalRetrievalChain.from_llm(
    llm, retriever=retriever, memory=memory, verbose=True
)

if len(msgs.messages) == 0 or st.sidebar.button("Clear message history"):
    msgs.clear()
    msgs.add_ai_message("How can I help you?")

# Map LangChain message types to Streamlit chat roles
avatars = {"human": "user", "ai": "assistant"}
for msg in msgs.messages:
    st.chat_message(avatars[msg.type]).write(msg.content)

if user_query := st.chat_input(placeholder="Ask me anything!"):
    st.chat_message("user").write(user_query)

    with st.chat_message("assistant"):
        retrieval_handler = PrintRetrievalHandler(st.container())
        stream_handler = StreamHandler(st.empty())
        response = qa_chain.run(user_query, callbacks=[retrieval_handler, stream_handler])
```
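The excerpt passes a `StreamHandler` to the chain but does not show its definition. As a rough sketch of what such a handler might do, assuming it follows LangChain's callback convention of receiving each token through `on_llm_new_token`, the class below accumulates tokens and re-renders the full text each time. `FakeContainer` and `StreamHandlerSketch` are hypothetical names; the real handler would subclass LangChain's `BaseCallbackHandler` and write to the placeholder returned by `st.empty()`.

```python
class FakeContainer:
    """Hypothetical stand-in for the placeholder returned by st.empty()."""

    def __init__(self) -> None:
        self.rendered = ""

    def markdown(self, text: str) -> None:
        # A real placeholder would redraw itself in the browser; here the text is just stored.
        self.rendered = text


class StreamHandlerSketch:
    """Accumulates streamed tokens and re-renders the full text on every token.

    Assumption: this only duck-types the on_llm_new_token hook and does not
    inherit from LangChain's BaseCallbackHandler as a real handler would.
    """

    def __init__(self, container, initial_text: str = "") -> None:
        self.container = container
        self.text = initial_text

    def on_llm_new_token(self, token: str, **kwargs) -> None:
        self.text += token
        self.container.markdown(self.text)


placeholder = FakeContainer()
handler = StreamHandlerSketch(placeholder)
for token in ["Lang", "Chain ", "streams ", "tokens."]:
    handler.on_llm_new_token(token)

print(placeholder.rendered)  # LangChain streams tokens.
```

Overwriting a single placeholder with the accumulated text, rather than appending a new element per token, is what makes the answer appear to type itself out in place.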

4 Interacting with a database

https://github.com/langchain-ai/streamlit-agent/blob/main/streamlit_agent/chat_with_sql_db.py

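- Create an OpenAI LLM.

- Connect to the database: the bundled Chinook.db sample is opened read-only, and any other database is opened from its URI.

- Create an agent that interacts with the database.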

```python
# Excerpt: the imports, openai_api_key, db_uri, and LOCALDB are defined
# in the linked chat_with_sql_db.py.

# Setup agent
llm = OpenAI(openai_api_key=openai_api_key, temperature=0, streaming=True)


@st.cache_resource(ttl="2h")
def configure_db(db_uri):
    if db_uri == LOCALDB:
        # Make the DB connection read-only to reduce risk of injection attacks
        # See: https://python.langchain.com/docs/security
        db_filepath = (Path(__file__).parent / "Chinook.db").absolute()
        creator = lambda: sqlite3.connect(f"file:{db_filepath}?mode=ro", uri=True)
        return SQLDatabase(create_engine("sqlite:///", creator=creator))
    return SQLDatabase.from_uri(database_uri=db_uri)


db = configure_db(db_uri)
toolkit = SQLDatabaseToolkit(db=db, llm=llm)

agent = create_sql_agent(
    llm=llm,
    toolkit=toolkit,
    verbose=True,
    agent_type=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
)

if "messages" not in st.session_state or st.sidebar.button("Clear message history"):
    st.session_state["messages"] = [{"role": "assistant", "content": "How can I help you?"}]

for msg in st.session_state.messages:
    st.chat_message(msg["role"]).write(msg["content"])

user_query = st.chat_input(placeholder="Ask me anything!")

if user_query:
    st.session_state.messages.append({"role": "user", "content": user_query})
    st.chat_message("user").write(user_query)

    with st.chat_message("assistant"):
        st_cb = StreamlitCallbackHandler(st.container())
        response = agent.run(user_query, callbacks=[st_cb])
        st.session_state.messages.append({"role": "assistant", "content": response})
        st.write(response)
```
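The read-only connection used in `configure_db` can be tried out with the standard library alone. This is a small sketch under stated assumptions: a throwaway database with a made-up `artist` table stands in for Chinook.db, and only the `file:...?mode=ro` URI with `uri=True` is taken from the excerpt.

```python
import os
import sqlite3
import tempfile

# Build a throwaway database (a stand-in for Chinook.db; the table is made up).
db_path = os.path.join(tempfile.mkdtemp(), "demo.db")
with sqlite3.connect(db_path) as rw:
    rw.execute("CREATE TABLE artist (name TEXT)")
    rw.execute("INSERT INTO artist VALUES ('AC/DC')")
rw.close()

# Reopen it the way configure_db does: a file: URI with mode=ro.
ro = sqlite3.connect(f"file:{db_path}?mode=ro", uri=True)

# Reads work as usual.
rows = ro.execute("SELECT name FROM artist").fetchall()
print(rows)  # [('AC/DC',)]

# Writes are rejected by SQLite itself, whatever SQL the agent generates.
try:
    ro.execute("DELETE FROM artist")
    write_blocked = False
except sqlite3.OperationalError as exc:
    write_blocked = True
    print("write rejected:", exc)

ro.close()
```

Enforcing read-only access at the connection level means the protection does not depend on the LLM producing well-behaved SQL, which fits the intent of the comment in the excerpt.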
