
[ChatGLM2-6B fine-tuning] bash train.sh fails with "train.sh: line 4: $'\r': command not found" and "master_addr is only used f…"

Author: Guff_9hys | 2024-07-10 00:09:34


1. The error

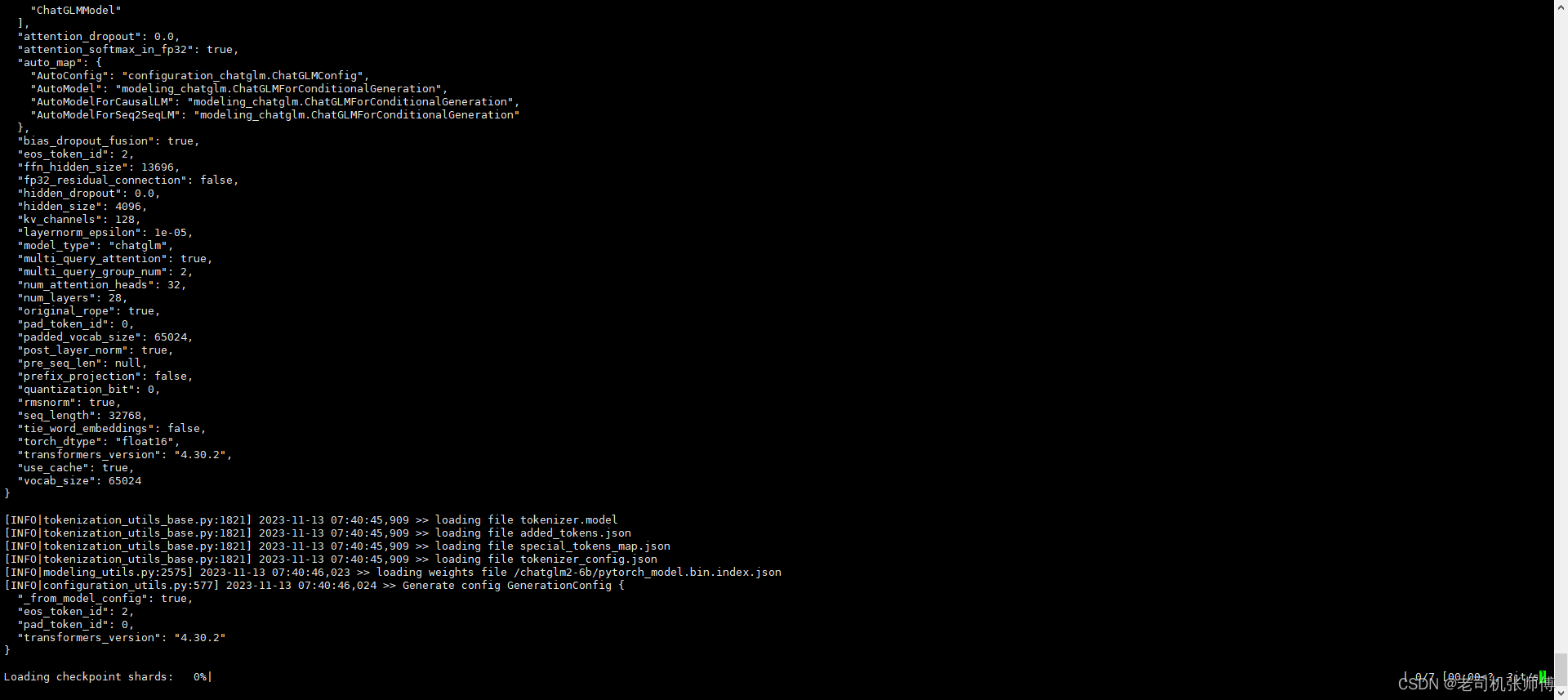

Running the shell script on Linux fails with $'\r': command not found. The full output:

    train.sh: line 4: $'\r': command not found
    master_addr is only used for static rdzv_backend and when rdzv_endpoint is not specified.
    usage: main.py [-h] --model_name_or_path MODEL_NAME_OR_PATH [--ptuning_checkpoint PTUNING_CHECKPOINT]
                   [--config_name CONFIG_NAME] [--tokenizer_name TOKENIZER_NAME] [--cache_dir CACHE_DIR]
                   [--use_fast_tokenizer [USE_FAST_TOKENIZER]] [--no_use_fast_tokenizer]
                   [--model_revision MODEL_REVISION] [--use_auth_token [USE_AUTH_TOKEN]]
                   [--resize_position_embeddings RESIZE_POSITION_EMBEDDINGS] [--quantization_bit QUANTIZATION_BIT]
                   [--pre_seq_len PRE_SEQ_LEN] [--prefix_projection [PREFIX_PROJECTION]] [--lang LANG]
                   [--dataset_name DATASET_NAME] [--dataset_config_name DATASET_CONFIG_NAME]
                   [--prompt_column PROMPT_COLUMN] [--response_column RESPONSE_COLUMN]
                   [--history_column HISTORY_COLUMN] [--train_file TRAIN_FILE]
                   [--validation_file VALIDATION_FILE] [--test_file TEST_FILE]
                   [--overwrite_cache [OVERWRITE_CACHE]] [--preprocessing_num_workers PREPROCESSING_NUM_WORKERS]
                   [--max_source_length MAX_SOURCE_LENGTH] [--max_target_length MAX_TARGET_LENGTH]
                   [--val_max_target_length VAL_MAX_TARGET_LENGTH] [--pad_to_max_length [PAD_TO_MAX_LENGTH]]
                   [--max_train_samples MAX_TRAIN_SAMPLES] [--max_eval_samples MAX_EVAL_SAMPLES]
                   [--max_predict_samples MAX_PREDICT_SAMPLES] [--num_beams NUM_BEAMS]
                   [--ignore_pad_token_for_loss [IGNORE_PAD_TOKEN_FOR_LOSS]] [--no_ignore_pad_token_for_loss]
                   [--source_prefix SOURCE_PREFIX] [--forced_bos_token FORCED_BOS_TOKEN] --output_dir OUTPUT_DIR
                   [--overwrite_output_dir [OVERWRITE_OUTPUT_DIR]] [--do_train [DO_TRAIN]] [--do_eval [DO_EVAL]]
                   [--do_predict [DO_PREDICT]] [--evaluation_strategy {no,steps,epoch}]
                   [--prediction_loss_only [PREDICTION_LOSS_ONLY]]
                   [--per_device_train_batch_size PER_DEVICE_TRAIN_BATCH_SIZE]
                   [--per_device_eval_batch_size PER_DEVICE_EVAL_BATCH_SIZE]
                   [--per_gpu_train_batch_size PER_GPU_TRAIN_BATCH_SIZE]
                   [--per_gpu_eval_batch_size PER_GPU_EVAL_BATCH_SIZE]
                   [--gradient_accumulation_steps GRADIENT_ACCUMULATION_STEPS]
                   [--eval_accumulation_steps EVAL_ACCUMULATION_STEPS] [--eval_delay EVAL_DELAY]
                   [--learning_rate LEARNING_RATE] [--weight_decay WEIGHT_DECAY] [--adam_beta1 ADAM_BETA1]
                   [--adam_beta2 ADAM_BETA2] [--adam_epsilon ADAM_EPSILON] [--max_grad_norm MAX_GRAD_NORM]
                   [--num_train_epochs NUM_TRAIN_EPOCHS] [--max_steps MAX_STEPS]
                   [--lr_scheduler_type {linear,cosine,cosine_with_restarts,polynomial,constant,constant_with_warmup,inverse_sqrt,reduce_lr_on_plateau}]
                   [--warmup_ratio WARMUP_RATIO] [--warmup_steps WARMUP_STEPS]
                   [--log_level {debug,info,warning,error,critical,passive}]
                   [--log_level_replica {debug,info,warning,error,critical,passive}]
                   [--log_on_each_node [LOG_ON_EACH_NODE]] [--no_log_on_each_node] [--logging_dir LOGGING_DIR]
                   [--logging_strategy {no,steps,epoch}] [--logging_first_step [LOGGING_FIRST_STEP]]
                   [--logging_steps LOGGING_STEPS] [--logging_nan_inf_filter [LOGGING_NAN_INF_FILTER]]
                   [--no_logging_nan_inf_filter] [--save_strategy {no,steps,epoch}] [--save_steps SAVE_STEPS]
                   [--save_total_limit SAVE_TOTAL_LIMIT] [--save_safetensors [SAVE_SAFETENSORS]]
                   [--save_on_each_node [SAVE_ON_EACH_NODE]] [--no_cuda [NO_CUDA]]
                   [--use_mps_device [USE_MPS_DEVICE]] [--seed SEED] [--data_seed DATA_SEED]
                   [--jit_mode_eval [JIT_MODE_EVAL]] [--use_ipex [USE_IPEX]] [--bf16 [BF16]] [--fp16 [FP16]]
                   [--fp16_opt_level FP16_OPT_LEVEL] [--half_precision_backend {auto,cuda_amp,apex,cpu_amp}]
                   [--bf16_full_eval [BF16_FULL_EVAL]] [--fp16_full_eval [FP16_FULL_EVAL]] [--tf32 TF32]
                   [--local_rank LOCAL_RANK] [--ddp_backend {nccl,gloo,mpi,ccl}] [--tpu_num_cores TPU_NUM_CORES]
                   [--tpu_metrics_debug [TPU_METRICS_DEBUG]] [--debug DEBUG]
                   [--dataloader_drop_last [DATALOADER_DROP_LAST]] [--eval_steps EVAL_STEPS]
                   [--dataloader_num_workers DATALOADER_NUM_WORKERS] [--past_index PAST_INDEX]
                   [--run_name RUN_NAME] [--disable_tqdm DISABLE_TQDM]
                   [--remove_unused_columns [REMOVE_UNUSED_COLUMNS]] [--no_remove_unused_columns]
                   [--label_names LABEL_NAMES [LABEL_NAMES ...]]
                   [--load_best_model_at_end [LOAD_BEST_MODEL_AT_END]]
                   [--metric_for_best_model METRIC_FOR_BEST_MODEL] [--greater_is_better GREATER_IS_BETTER]
                   [--ignore_data_skip [IGNORE_DATA_SKIP]] [--sharded_ddp SHARDED_DDP] [--fsdp FSDP]
                   [--fsdp_min_num_params FSDP_MIN_NUM_PARAMS] [--fsdp_config FSDP_CONFIG]
                   [--fsdp_transformer_layer_cls_to_wrap FSDP_TRANSFORMER_LAYER_CLS_TO_WRAP]
                   [--deepspeed DEEPSPEED] [--label_smoothing_factor LABEL_SMOOTHING_FACTOR]
                   [--optim {adamw_hf,adamw_torch,adamw_torch_fused,adamw_torch_xla,adamw_apex_fused,adafactor,adamw_anyprecision,sgd,adagrad,adamw_bnb_8bit,adamw_8bit,lion_8bit,lion_32bit,paged_adamw_32bit,paged_adamw_8bit,paged_lion_32bit,paged_lion_8bit}]
                   [--optim_args OPTIM_ARGS] [--adafactor [ADAFACTOR]] [--group_by_length [GROUP_BY_LENGTH]]
                   [--length_column_name LENGTH_COLUMN_NAME] [--report_to REPORT_TO [REPORT_TO ...]]
                   [--ddp_find_unused_parameters DDP_FIND_UNUSED_PARAMETERS]
                   [--ddp_bucket_cap_mb DDP_BUCKET_CAP_MB] [--dataloader_pin_memory [DATALOADER_PIN_MEMORY]]
                   [--no_dataloader_pin_memory] [--skip_memory_metrics [SKIP_MEMORY_METRICS]]
                   [--no_skip_memory_metrics] [--use_legacy_prediction_loop [USE_LEGACY_PREDICTION_LOOP]]
                   [--push_to_hub [PUSH_TO_HUB]] [--resume_from_checkpoint RESUME_FROM_CHECKPOINT]
                   [--hub_model_id HUB_MODEL_ID] [--hub_strategy {end,every_save,checkpoint,all_checkpoints}]
                   [--hub_token HUB_TOKEN] [--hub_private_repo [HUB_PRIVATE_REPO]]
                   [--gradient_checkpointing [GRADIENT_CHECKPOINTING]]
                   [--include_inputs_for_metrics [INCLUDE_INPUTS_FOR_METRICS]]
                   [--fp16_backend {auto,cuda_amp,apex,cpu_amp}] [--push_to_hub_model_id PUSH_TO_HUB_MODEL_ID]
                   [--push_to_hub_organization PUSH_TO_HUB_ORGANIZATION]
                   [--push_to_hub_token PUSH_TO_HUB_TOKEN] [--mp_parameters MP_PARAMETERS]
                   [--auto_find_batch_size [AUTO_FIND_BATCH_SIZE]] [--full_determinism [FULL_DETERMINISM]]
                   [--torchdynamo TORCHDYNAMO] [--ray_scope RAY_SCOPE] [--ddp_timeout DDP_TIMEOUT]
                   [--torch_compile [TORCH_COMPILE]] [--torch_compile_backend TORCH_COMPILE_BACKEND]
                   [--torch_compile_mode TORCH_COMPILE_MODE] [--xpu_backend {mpi,ccl,gloo}]
                   [--sortish_sampler [SORTISH_SAMPLER]] [--predict_with_generate [PREDICT_WITH_GENERATE]]
                   [--generation_max_length GENERATION_MAX_LENGTH] [--generation_num_beams GENERATION_NUM_BEAMS]
                   [--generation_config GENERATION_CONFIG]
    main.py: error: the following arguments are required: --model_name_or_path, --output_dir
    ERROR:torch.distributed.elastic.multiprocessing.api:failed (exitcode: 2) local_rank: 0 (pid: 2142) of binary: /usr/bin/python3
    Traceback (most recent call last):
      File "/usr/local/bin/torchrun", line 8, in <module>
        sys.exit(main())
                 ^^^^^^
      File "/usr/local/lib/python3.11/dist-packages/torch/distributed/elastic/multiprocessing/errors/__init__.py", line 346, in wrapper
        return f(*args, **kwargs)
               ^^^^^^^^^^^^^^^^^^
      File "/usr/local/lib/python3.11/dist-packages/torch/distributed/run.py", line 794, in main
        run(args)
      File "/usr/local/lib/python3.11/dist-packages/torch/distributed/run.py", line 785, in run
        elastic_launch(
      File "/usr/local/lib/python3.11/dist-packages/torch/distributed/launcher/api.py", line 134, in __call__
        return launch_agent(self._config, self._entrypoint, list(args))
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/local/lib/python3.11/dist-packages/torch/distributed/launcher/api.py", line 250, in launch_agent
        raise ChildFailedError(
    torch.distributed.elastic.multiprocessing.errors.ChildFailedError:
    ============================================================
    main.py FAILED
    ------------------------------------------------------------
    Failures:
      <NO_OTHER_FAILURES>
    ------------------------------------------------------------
    Root Cause (first observed failure):
    [0]:
      time      : 2023-11-13_06:25:22
      host      : 9ef635c410cc
      rank      : 0 (local_rank: 0)
      exitcode  : 2 (pid: 2142)
      error_file: <N/A>
      traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html
    ============================================================
    train.sh: line 6: --do_train: command not found
    train.sh: line 7: --train_file: command not found
    train.sh: line 8: --validation_file: command not found
    train.sh: line 9: --preprocessing_num_workers: command not found
    train.sh: line 10: --prompt_column: command not found
    train.sh: line 11: --response_column: command not found
    train.sh: line 12: --overwrite_cache: command not found
    train.sh: line 13: --model_name_or_path: command not found
    train.sh: line 14: --output_dir: command not found
    train.sh: line 15: --overwrite_output_dir: command not found
    train.sh: line 16: --max_source_length: command not found
    train.sh: line 17: --max_target_length: command not found
    train.sh: line 18: --per_device_train_batch_size: command not found
    train.sh: line 19: --per_device_eval_batch_size: command not found
    train.sh: line 20: --gradient_accumulation_steps: command not found
    train.sh: line 21: --predict_with_generate: command not found
    train.sh: line 22: --max_steps: command not found
    train.sh: line 23: --logging_steps: command not found
    train.sh: line 24: --save_steps: command not found
    train.sh: line 25: --learning_rate: command not found
    train.sh: line 26: --pre_seq_len: command not found
    train.sh: line 27: --quantization_bit: command not found
    train.sh: line 28: $'\r': command not found


2. Solution

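The script was most likely written or edited on Windows, where every line ends with \r\n (CRLF).

On Linux, every line ends with \n (LF) only.

When the script runs on Linux, bash treats the leftover \r as an ordinary character instead of part of the line ending, and the script breaks. A line that contains nothing but \r is run as a command named \r, and a trailing backslash followed by \r no longer continues the line. Each --option line is therefore executed as a separate command, and main.py starts without its required arguments.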
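To confirm that carriage returns really are the cause before changing anything, one option is to count the lines that end in \r. The following is a minimal sketch, assuming bash and a standard grep; the function name `count_crlf_lines` and the temporary demo files are made up for illustration and are not part of ChatGLM2-6B.

```shell
#!/usr/bin/env bash
# Count the lines of a file that end with a carriage return (CR, \r).
# A non-zero count suggests the file has Windows (CRLF) line endings.
# count_crlf_lines is a hypothetical helper name.
count_crlf_lines() {
    # $'\r$' uses bash ANSI-C quoting: a literal CR followed by the
    # end-of-line anchor. grep -c prints the number of matching lines;
    # it exits non-zero when the count is 0, hence the "|| true".
    grep -c $'\r$' "$1" || true
}

# Demo on two throwaway files.
tmpdir=$(mktemp -d)
printf 'echo hello\r\n\r\necho bye\r\n' > "$tmpdir/crlf.sh"
printf 'echo hello\n\necho bye\n'       > "$tmpdir/lf.sh"

count_crlf_lines "$tmpdir/crlf.sh"   # prints 3
count_crlf_lines "$tmpdir/lf.sh"     # prints 0

rm -rf "$tmpdir"
```

Running `count_crlf_lines train.sh` on the failing script should print a number greater than 0 if the diagnosis above applies.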

Converting the file with dos2unix fixes the problem:

    dos2unix <filename>

    # dos2unix: converting file one-more.sh to Unix format ...

If you see:

    -bash: dos2unix: command not found

then dos2unix is not installed yet. Install it (prefix the command with sudo if you are not root):

    apt install dos2unix
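If dos2unix cannot be installed (for example, on a machine without root access), stripping the trailing \r from each line with sed should have the same effect for this particular problem. This is a sketch rather than a replacement for dos2unix, assuming bash and a sed that accepts `-i` with an attached backup suffix; the function name `strip_cr` is hypothetical.

```shell
#!/usr/bin/env bash
# Remove a trailing carriage return from every line of a file, in place.
# The original is kept as <file>.bak so the change can be undone.
# strip_cr is a hypothetical helper name.
strip_cr() {
    # $'s/\r$//' passes a literal CR to sed via bash ANSI-C quoting,
    # so sed itself does not need to understand the \r escape.
    sed -i.bak $'s/\r$//' "$1"
}

# Demo: a two-line command joined by a backslash, saved with CRLF endings.
demo=$(mktemp)
printf 'echo one \\\r\n  two\r\n' > "$demo"

strip_cr "$demo"
bash "$demo"    # the line continuation works again and prints: one two

rm -f "$demo" "$demo.bak"
```

For the script in this article the call would be `strip_cr train.sh`, followed by `bash train.sh` as before.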

After the conversion, bash train.sh runs without these errors.

Notice: this article was contributed by a user and does not represent the position of wpsshop博客; copyright belongs to the original author. Source: https://www.wpsshop.cn/w/Guff_9hys/article/detail/804187
