- 1《Python编程:从入门到实践》项目一“外星人入侵”实现保存最高分至本地文件_外星人项目 将最高分写入文件

- 2Linux Zabbix——zabbix可视化、监控模板配置、自定义监控参数、自动发现监控下设备、数据库监控、企业proxy分布式监控搭建配置..._zabbix自定义倍数

- 3深度学习模型的Android部署方法_深度学习模型移动端部署平台

- 4【异常总结】集合(Set)对象遍历遇到的空指针异常_set集合空指针

- 5主题模型-LDA浅析_lda主题模型快速入门

- 6用好VS2010扩展管理器

- 7python多分类与数据预测分析_python数据预测模型

- 8大前端系列之ES6_jdk1.6 前端let

- 9echarts 实现tooltip自动轮播显示,鼠标悬浮时暂停_echarts 折线图悬浮窗自动切换

- 10Android问题集锦之四十六:改包名后出现Error type 3_android更改包名后不能打包

B站教学 手把手教你使用YOLOV5之口罩检测项目 最全记录详解 ( 深度学习 / 目标检测 / pytorch )_results saved to runs\detect\train是在那个位置

赞

踩

目录

感谢作者肆十二!!!

作者博客资源:

(88条消息) 手把手教你使用YOLOV5训练自己的目标检测模型-口罩检测-视频教程_肆十二的博客-CSDN博客

一、环境搭建

pytorch的下载

由于我的GPU版本太低,所以使用CPU下载pytorch,具体如何下载在视频中有。

测试(cmd窗口中)

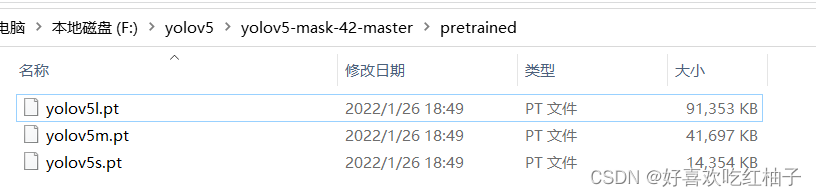

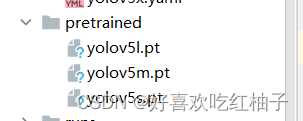

- 在代码目录下的pretrained文件夹下有3个预训练好的模型,这3个预训练模型具有检测物体的能力,因为已经在CoCo数据集上做过训练。后续我们需要应用在口罩的检测上,再把口罩的数据集做一个微调即可。我们使用yolov5s这个较小的模型去做测试。

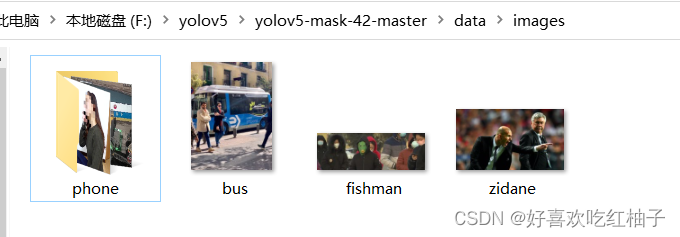

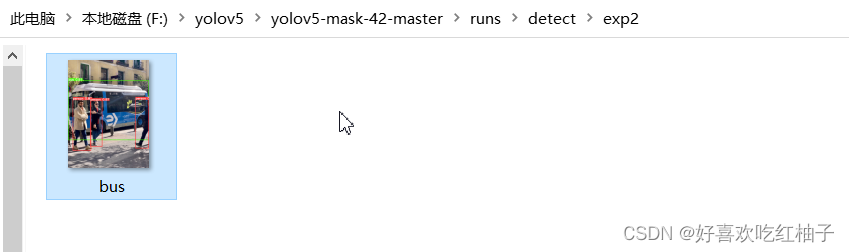

- 使用bus图片进行测试 (在data中的image包下)

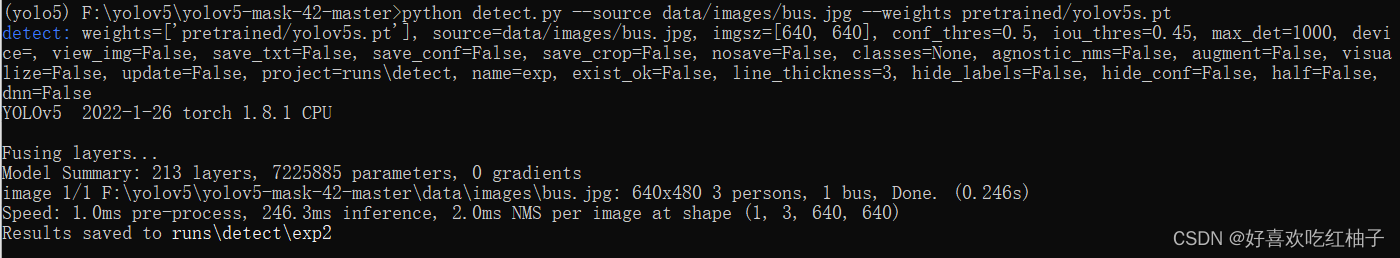

- 输入训练命令之后的运行截图:

注意,要在之前创建好的虚拟环境下运行(我的虚拟环境叫yolo5,在代码所在的文件夹下)

输入命令:

python detect.py --source data/images/bus.jpg --weights pretrained/yolov5s.pt把bus这张图片使用权重为yolov5s的预训练模型进行测试

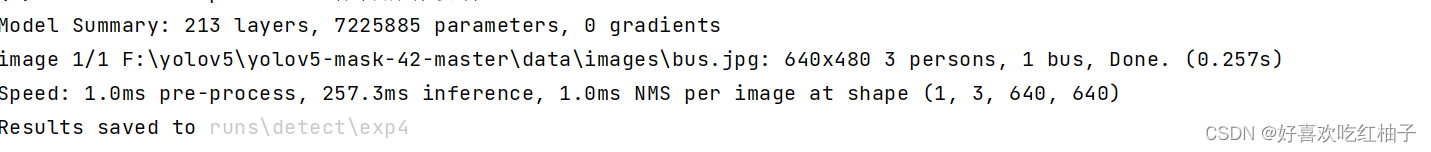

命令行会输出一下相关信息:

- 权重文件:weights=['pretrained/yolov5s.pt']

- 要检测的图片:source=data/images/bus.jpg

- 图片输入的大小640*640

- 置信度大小:0.5(当有50%的信心认为该图片里面有检测的目标时,就输出)

- 交并比:0.45

- 设备信息:torch版本1.8.1

- 检测结果放置的位置:Results saved to runs\detect\exp2

pycharm下测试(要配置pycharm中的虚拟环境)

1. 使用pycharm打开代码文件夹,在interpreter setting中配置python的虚拟环境,视频中有,如下图所示配置成功。

2. 在terminal中输入刚刚的命令,注意检查pycharm命令行前面有没有大写的PS,如果有的话说明pycharm的命令行不是自己的虚拟环境,需要在setting中的terminal里,把shell path设置成自己的命令行cmd.exe。

(88条消息) pycharm中的terminal运行前面的PS如何修改成自己环境_呜哇哈哈嗝~的博客-CSDN博客_pycharm的terminal更改环境

3. 然后再运行就可以了

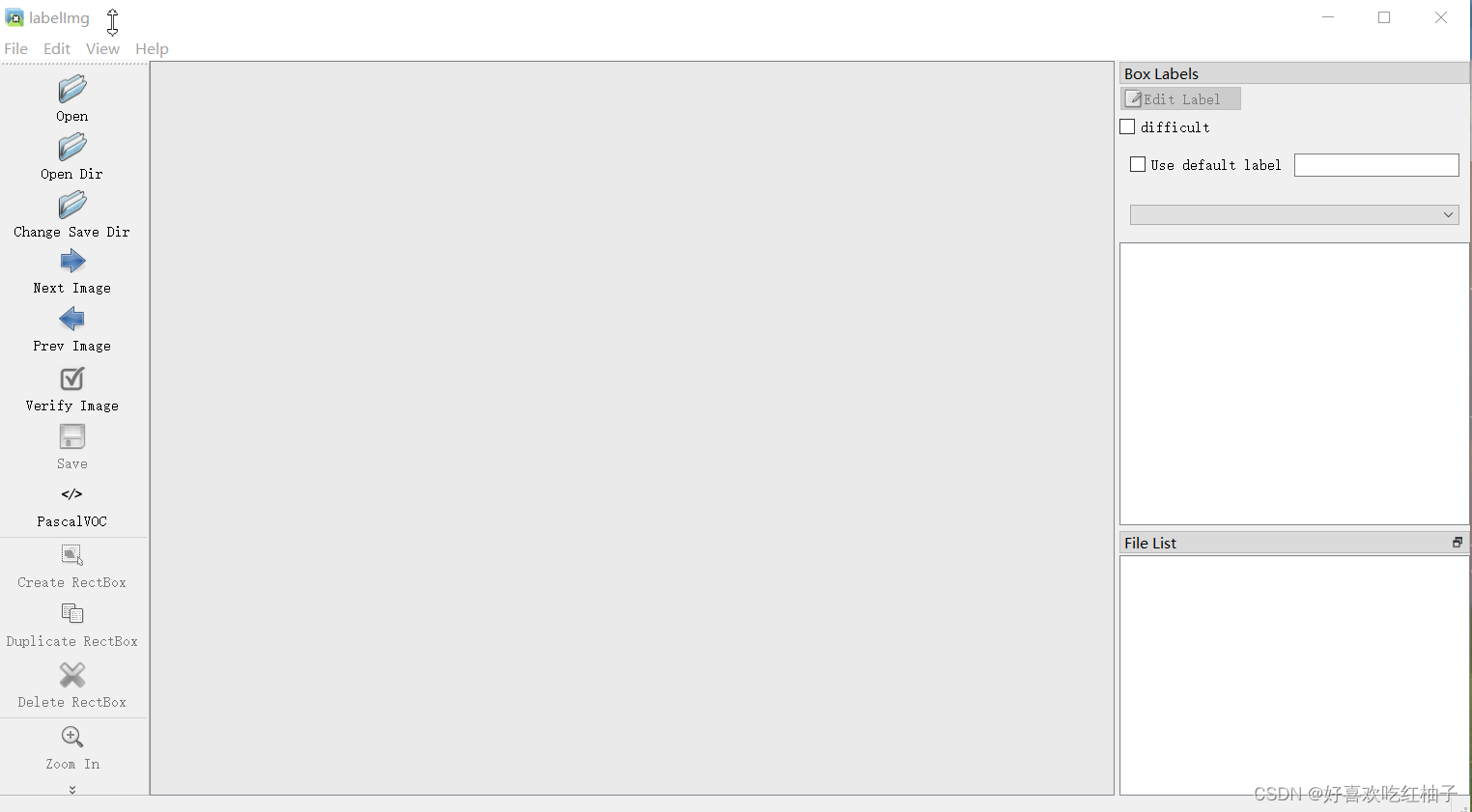

二、数据标注

因为我们的应用场景是在检测口罩的场景下使用的,所以我们需要先标注一个口罩的数据集,然后给模型,让模型进行训练。

如何对口罩进行标注?

使用labor image软件

cmd中激活虚拟环境,用pip安装

(yolo5) F:\yolov5\yolov5-mask-42-master>pip install labelimg安装成功后直接输入labelimg,即可直接打开该软件

(yolo5) F:\yolov5\yolov5-mask-42-master>labelimg

把方式切换为YOLO

使用labelimg进行图片标注

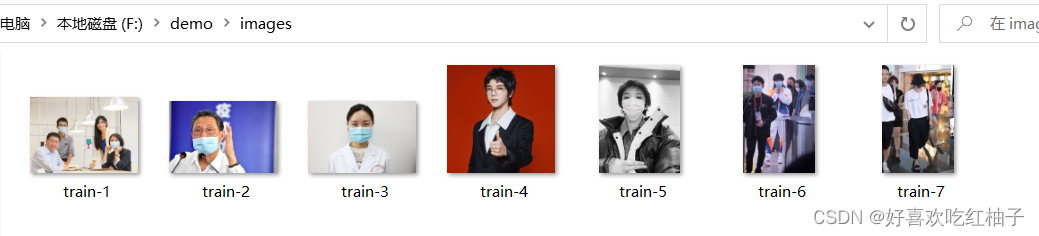

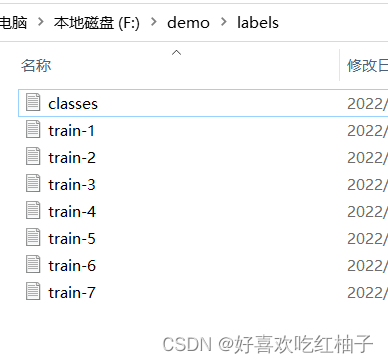

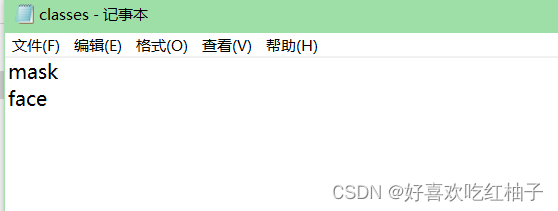

我选了7张图片,会对应生成7个标注的txt文件,classes文件里会显示标注的类别

- images

- labels

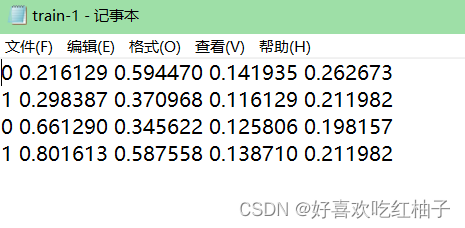

0和1代表分别两个类,后面的数字代表定位框的位置,前两个代表中心点的坐标,后两个数字代表w和h(宽和高)

划分训练集、测试集和验证集

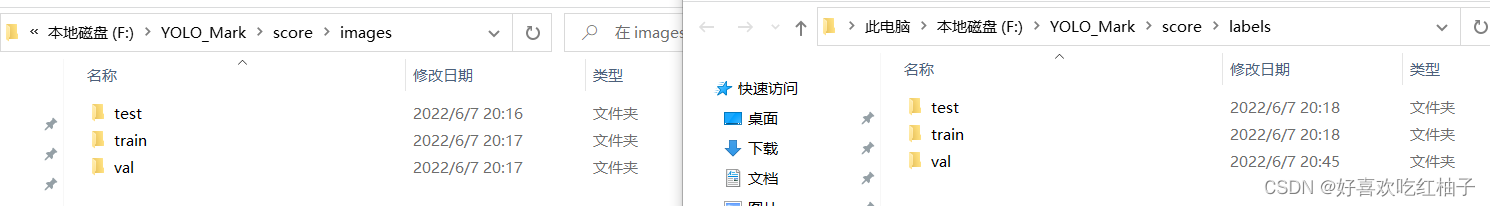

有images和labels两个包下每个都有训练集、测试集和验证集(验证集的标签是我自己打的,如果后续能用的话再传上来)

三、模型的训练检验和使用

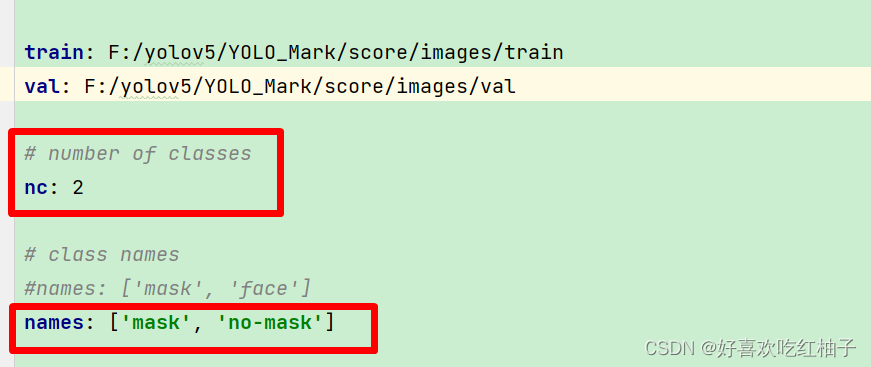

1. mask_data.yaml文件

- train和val代表自己数据集的train和val的地址

- names数组代表两个类的名字

- # Custom data for safety helmet

-

-

- # train and val data as 1) directory: path/images/, 2) file: path/images.txt, or 3) list: [path1/images/, path2/images/]

- #train: F:/up/1212/YOLO_Mask/score/images/train

- #val: F:/up/1212/YOLO_Mask/score/images/val

-

- train: F:/YOLO_Mark/score/images/train

- val: F:/YOLO_Mark/score/images/val

-

- # number of classes

- nc: 2

-

- # class names

- #names: ['mask', 'face']

- names: ['mask', 'no-mask']

2. yolov5s.yaml

需要改一下nc的值,有几个类就是几。

3. train.py文件

在train.py中使用python训练数据集

- 数据集的配置文件是mask_data.yaml,根据该文件找到该口罩数据集,

- 再调用模型的配置文件mask_yolov5s.yaml,训练一个yolov5的small的模型,

- weights:所要使用到的预训练的模型,是已经下载好的yolov5s的模型

- epoch:在数据集上跑100轮

- batch:输入的图片数据是4张一批(可以修改batch大小,如果显存不够改为1/2,显存够大改为16)

-

- # python train.py --data mask_data.yaml --cfg mask_yolov5s.yaml --weights pretrained/yolov5s.pt --epoch 100 --batch-size 4 --device cpu

- # python train.py --data mask_data.yaml --cfg mask_yolov5l.yaml --weights pretrained/yolov5l.pt --epoch 100 --batch-size 4

- # python train.py --data mask_data.yaml --cfg mask_yolov5m.yaml --weights pretrained/yolov5m.pt --epoch 100 --batch-size 4

把命令粘贴在pycharm命令行中运行。

4. 出现问题

1. proco版本过低

TypeError: Descriptors cannot not be created directly 解决方法:

(88条消息) TypeError: Descriptors cannot not be created directly 解决方法_zyrant丶的博客-CSDN博客

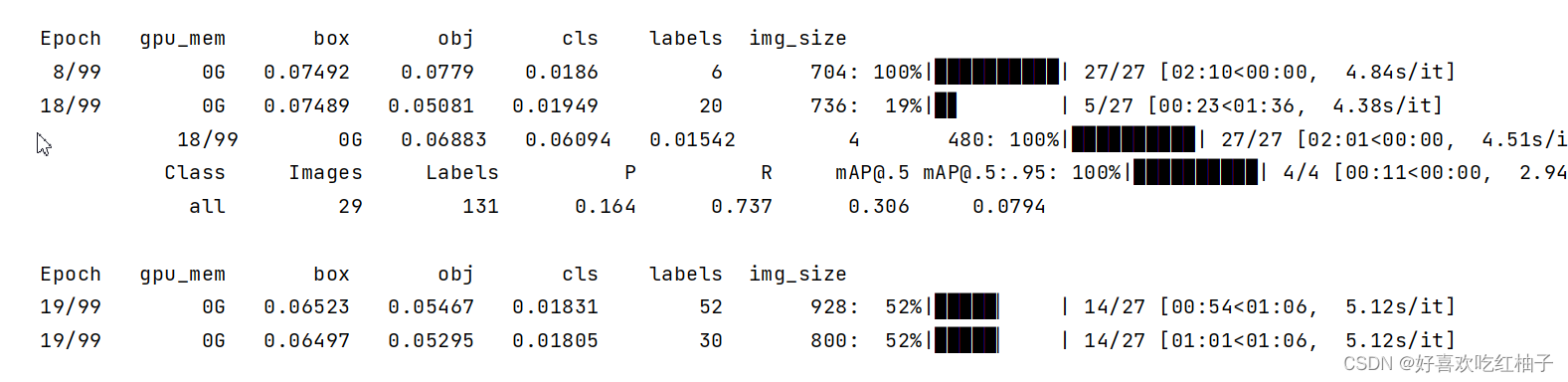

模型已经开始训练了,现在就是等着啦

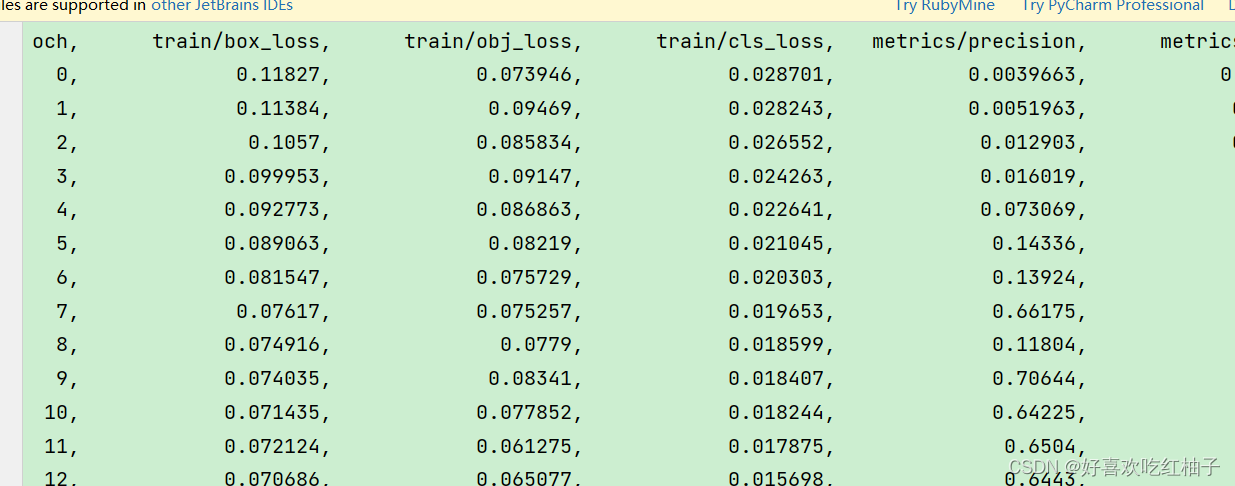

results.csv文件中可以看到训练的损失值、准确率、召回率等等

5. 训练结束

结束!100轮跑完了,我看命令行里说用了3.37小时,我记得开始训练的时候是晚上九点半,这样的话应该是凌晨一点左右跑完的,我今天早上来可以验收成功啦!

我用的训练集只有105张图片,所以准确度很低,主要是为了完成这个过程来学习用的,所以就不要求高的精度值啦

四、 模型产生的文件解读

训练结束后产生的文件

在train/runs/exp的目录下可以找到训练得到的模型和日志文件

1. weights

weights目录下会产生两个权重文件,分别是最好的模型和最后的模型

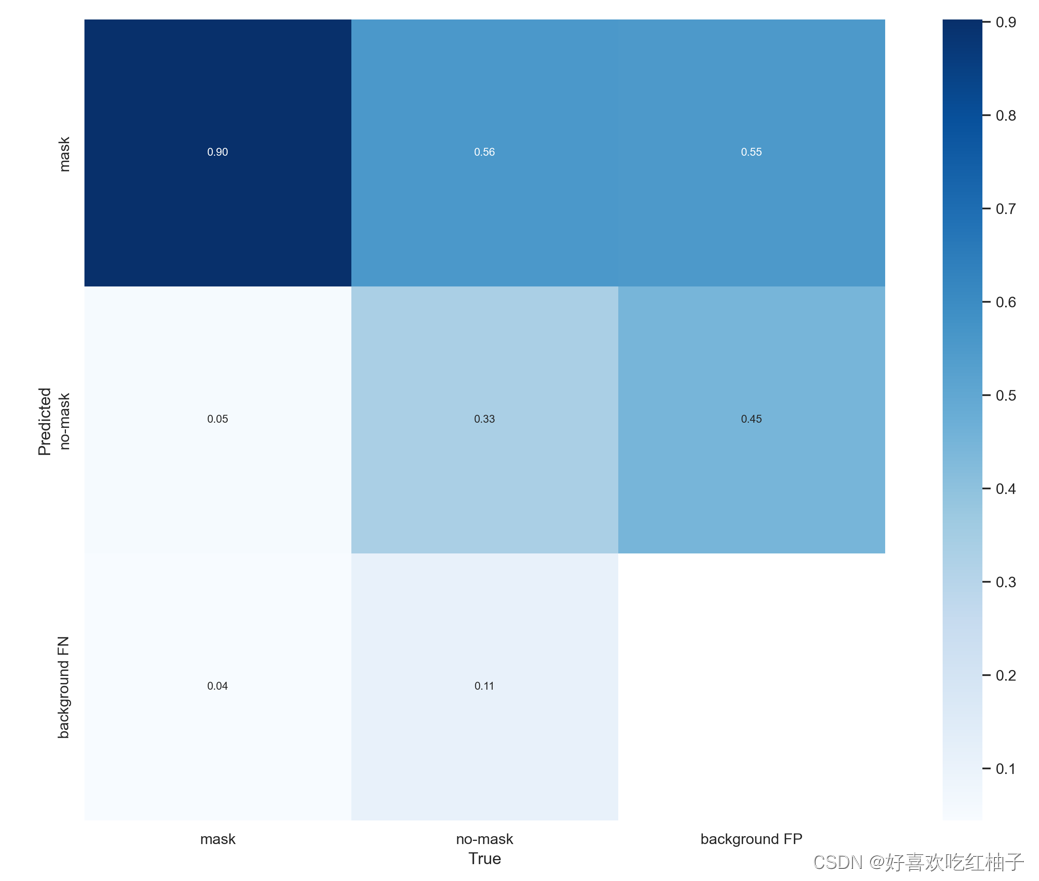

2. confusion_matrix 混淆矩阵

指明在类别上的精度,可以看到在我训练的模型中mask类的准确度较高,能达到0.9,但是no-mask类只有0.33的准确度,非常低。

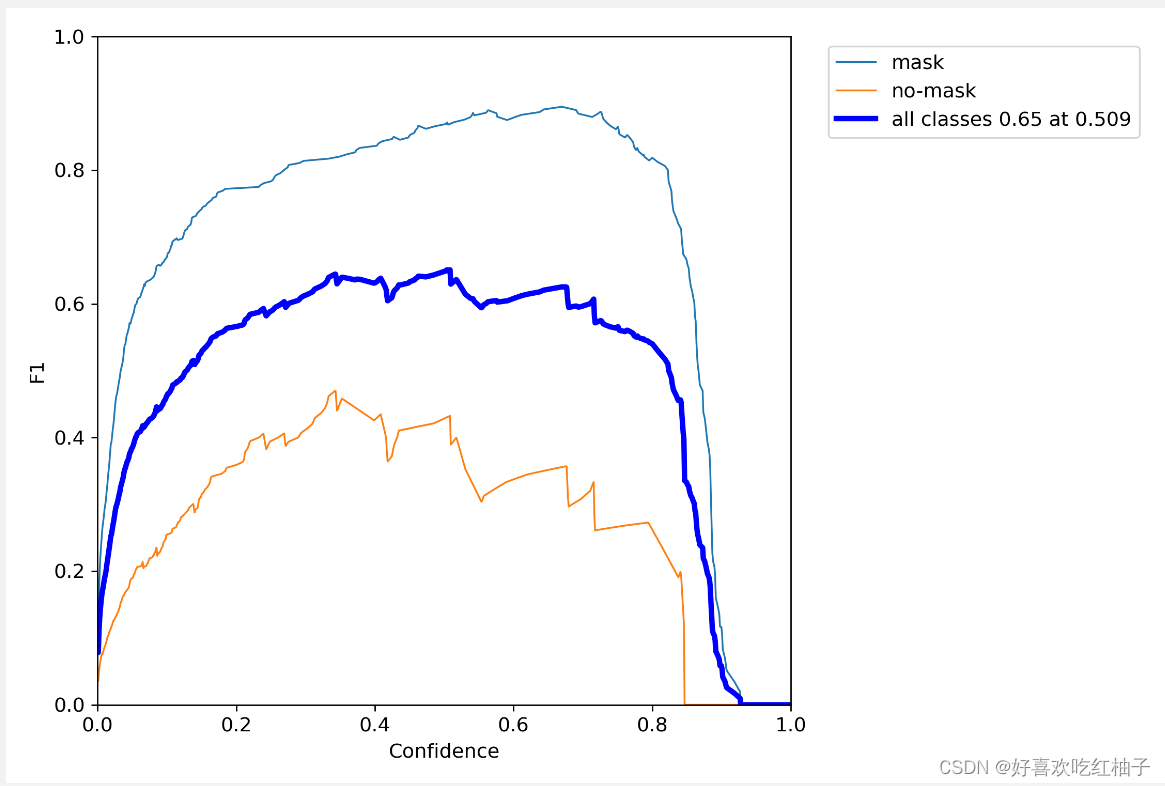

3. F1_cure.png

F1是衡量指标,可以看到all class0.65 at 0.509,即所有类别的判断精度大约是在0.65左右

4. hypl.yaml

表明超参数的文件

- 学习率 lr0:0.01

- 动量(学习率衰减)momentum:0.937

- lr0: 0.01

- lrf: 0.1

- momentum: 0.937

- weight_decay: 0.0005

- warmup_epochs: 3.0

- warmup_momentum: 0.8

- warmup_bias_lr: 0.1

- box: 0.05

- cls: 0.5

- cls_pw: 1.0

- obj: 1.0

- obj_pw: 1.0

- iou_t: 0.2

- anchor_t: 4.0

- fl_gamma: 0.0

- hsv_h: 0.015

- hsv_s: 0.7

- hsv_v: 0.4

- degrees: 0.0

- translate: 0.1

- scale: 0.5

- shear: 0.0

- perspective: 0.0

- flipud: 0.0

- fliplr: 0.5

- mosaic: 1.0

- mixup: 0.0

- copy_paste: 0.0

5. P_cure.png

精度曲线

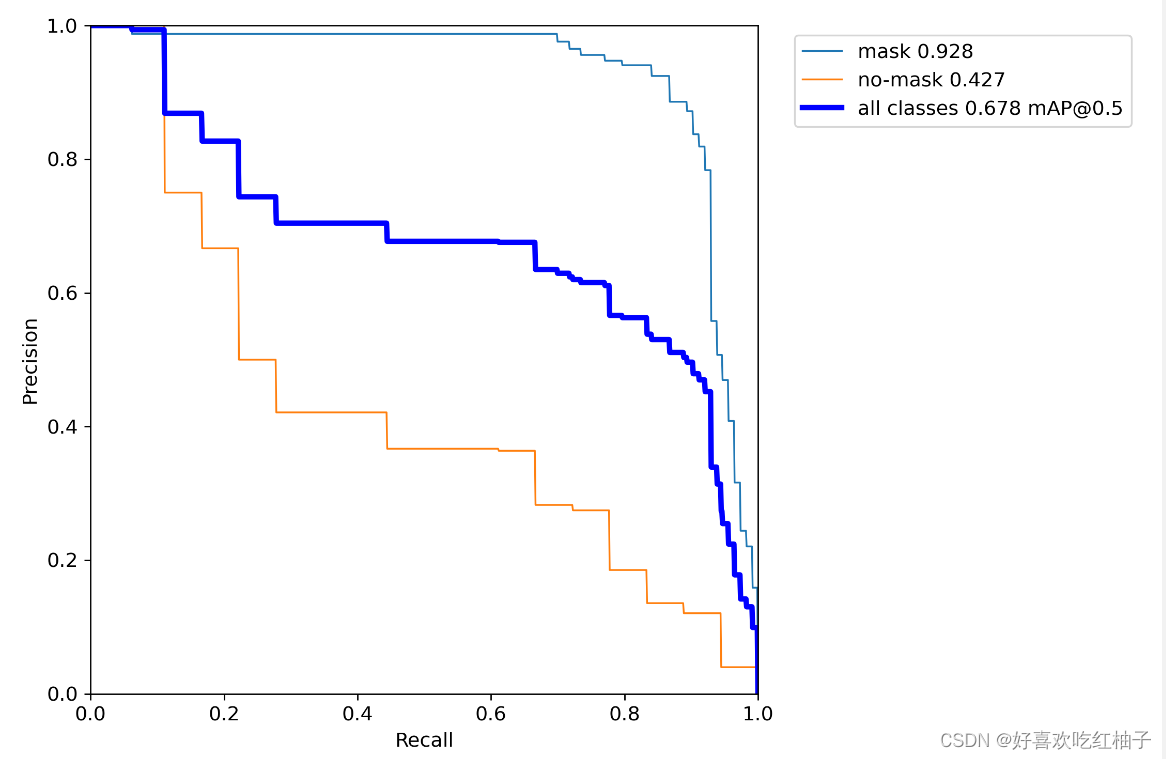

6. PR_cure.png

mAP 是 Mean Average Precision 的缩写,即 均值平均精度。作为 object dection 中衡量检测精度的指标。

计算公式为: mAP = 所有类别的平均精度求和除以所有类别。

(88条消息) 【深度学习小常识】什么是mAP?_水亦心的博客-CSDN博客_map是什么

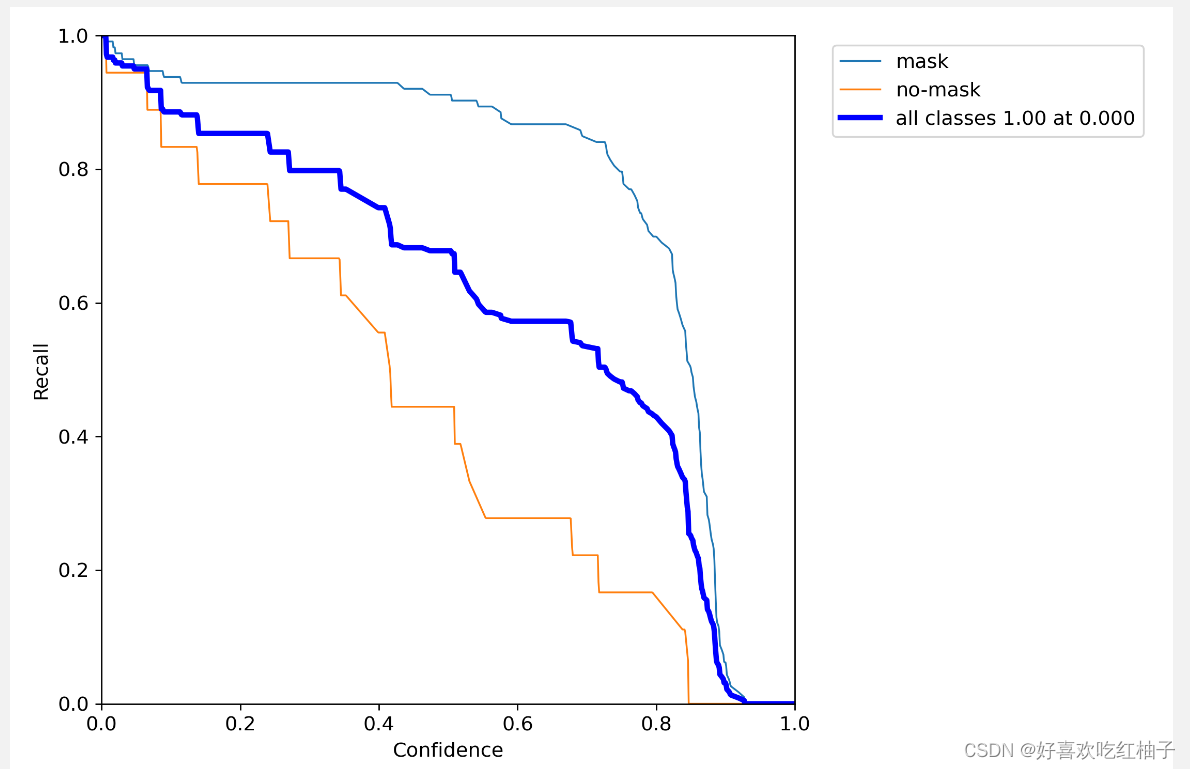

7. R_cure.png

召回率曲线

8. results.csv

记录了从0-99轮所有的相关数值,如损失率、准确率等等

- epoch, train/box_loss, train/obj_loss, train/cls_loss, metrics/precision, metrics/recall, metrics/mAP_0.5,metrics/mAP_0.5:0.95, val/box_loss, val/obj_loss, val/cls_loss, x/lr0, x/lr1, x/lr2

- 0, 0.11827, 0.073946, 0.028701, 0.0039663, 0.0088496, 0.0010384, 0.00022928, 0.1126, 0.04107, 0.027971, 0.00026, 0.00026, 0.09766

- 1, 0.11384, 0.09469, 0.028243, 0.0051963, 0.045477, 0.0015374, 0.00035155, 0.10621, 0.042533, 0.026938, 0.00052988, 0.00052988, 0.09523

- 2, 0.1057, 0.085834, 0.026552, 0.012903, 0.041052, 0.0048862, 0.00094498, 0.098147, 0.045064, 0.025205, 0.00079929, 0.00079929, 0.092799

- 3, 0.099953, 0.09147, 0.024263, 0.016019, 0.11185, 0.0093479, 0.0019994, 0.090472, 0.046923, 0.02335, 0.0010679, 0.0010679, 0.090368

- 4, 0.092773, 0.086863, 0.022641, 0.073069, 0.206, 0.044384, 0.0082303, 0.082259, 0.047229, 0.020974, 0.0013352, 0.0013352, 0.087935

- 5, 0.089063, 0.08219, 0.021045, 0.14336, 0.15929, 0.10416, 0.021328, 0.074287, 0.045153, 0.019245, 0.0016011, 0.0016011, 0.085501

- 6, 0.081547, 0.075729, 0.020303, 0.13924, 0.22124, 0.14065, 0.029077, 0.069554, 0.042244, 0.017914, 0.001865, 0.001865, 0.083065

- 7, 0.07617, 0.075257, 0.019653, 0.66175, 0.19027, 0.16194, 0.035453, 0.0682, 0.040773, 0.017001, 0.0021267, 0.0021267, 0.080627

- 8, 0.074916, 0.0779, 0.018599, 0.11804, 0.71444, 0.20915, 0.047715, 0.06213, 0.039259, 0.016546, 0.0023858, 0.0023858, 0.078186

- 9, 0.074035, 0.08341, 0.018407, 0.70644, 0.22566, 0.22019, 0.045716, 0.064939, 0.035718, 0.016504, 0.0026419, 0.0026419, 0.075742

- 10, 0.071435, 0.077852, 0.018244, 0.64225, 0.30791, 0.17581, 0.04388, 0.062539, 0.035256, 0.016511, 0.0028948, 0.0028948, 0.073295

- 11, 0.072124, 0.061275, 0.017875, 0.6504, 0.2646, 0.20819, 0.056578, 0.063117, 0.034939, 0.016554, 0.0031441, 0.0031441, 0.070844

- 12, 0.070686, 0.065077, 0.015698, 0.6443, 0.36259, 0.18392, 0.039542, 0.065903, 0.030739, 0.016197, 0.0033894, 0.0033894, 0.068389

- 13, 0.07227, 0.068656, 0.014215, 0.69753, 0.24779, 0.24174, 0.052705, 0.064055, 0.028667, 0.01606, 0.0036305, 0.0036305, 0.06593

- 14, 0.073751, 0.063407, 0.015677, 0.14771, 0.61676, 0.20236, 0.063066, 0.068077, 0.028618, 0.016239, 0.003867, 0.003867, 0.063467

- 15, 0.070441, 0.069869, 0.016322, 0.31469, 0.65005, 0.37826, 0.13011, 0.06401, 0.028028, 0.016283, 0.0040986, 0.0040986, 0.060999

- 16, 0.068428, 0.05836, 0.01625, 0.40733, 0.59784, 0.34434, 0.1077, 0.063769, 0.028005, 0.01628, 0.0043251, 0.0043251, 0.058525

- 17, 0.07147, 0.058589, 0.017207, 0.34527, 0.53589, 0.34856, 0.11075, 0.062767, 0.027597, 0.016055, 0.0045461, 0.0045461, 0.056046

- 18, 0.06883, 0.06094, 0.015417, 0.16423, 0.73673, 0.30564, 0.079353, 0.067952, 0.026534, 0.015758, 0.0047613, 0.0047613, 0.053561

- 19, 0.068752, 0.062422, 0.018029, 0.17281, 0.65339, 0.25524, 0.077221, 0.062195, 0.027298, 0.015416, 0.0049706, 0.0049706, 0.051071

- 20, 0.070327, 0.059319, 0.017845, 0.66294, 0.45698, 0.33813, 0.096921, 0.05992, 0.027039, 0.016189, 0.0051736, 0.0051736, 0.048574

- 21, 0.068288, 0.057712, 0.017123, 0.19065, 0.68559, 0.31681, 0.096766, 0.070108, 0.024293, 0.015921, 0.00537, 0.00537, 0.04607

- 22, 0.068325, 0.072588, 0.016803, 0.27846, 0.69654, 0.41265, 0.14594, 0.057593, 0.02801, 0.015518, 0.0055597, 0.0055597, 0.04356

- 23, 0.069229, 0.061088, 0.015701, 0.36501, 0.58014, 0.36567, 0.13024, 0.057249, 0.025246, 0.015572, 0.0057424, 0.0057424, 0.041042

- 24, 0.06736, 0.05221, 0.017174, 0.21078, 0.64454, 0.33496, 0.099098, 0.061012, 0.02649, 0.015009, 0.005918, 0.005918, 0.038518

- 25, 0.074049, 0.075404, 0.01449, 0.27087, 0.62561, 0.40405, 0.11375, 0.054189, 0.025911, 0.015053, 0.0060861, 0.0060861, 0.035986

- 26, 0.058839, 0.050327, 0.014765, 0.20948, 0.68945, 0.35042, 0.10948, 0.060506, 0.027191, 0.014996, 0.0062466, 0.0062466, 0.033447

- 27, 0.067345, 0.070826, 0.015696, 0.20182, 0.68559, 0.31744, 0.10897, 0.061892, 0.026819, 0.015467, 0.0063993, 0.0063993, 0.030899

- 28, 0.070905, 0.072069, 0.017019, 0.16653, 0.68117, 0.23469, 0.074507, 0.062715, 0.025221, 0.015132, 0.0065441, 0.0065441, 0.028344

- 29, 0.068223, 0.06158, 0.015939, 0.78393, 0.36726, 0.36203, 0.14323, 0.056891, 0.024924, 0.015147, 0.0066808, 0.0066808, 0.025781

- 30, 0.066498, 0.066809, 0.014634, 0.8394, 0.32743, 0.3869, 0.089883, 0.063115, 0.025785, 0.015087, 0.0068092, 0.0068092, 0.023209

- 31, 0.066933, 0.049077, 0.017202, 0.19672, 0.56686, 0.27103, 0.069863, 0.0675, 0.026634, 0.015079, 0.0069294, 0.0069294, 0.020629

- 32, 0.068608, 0.063936, 0.017622, 0.33182, 0.58899, 0.3113, 0.091792, 0.06029, 0.025533, 0.015215, 0.007041, 0.007041, 0.018041

- 33, 0.065241, 0.047265, 0.016083, 0.22816, 0.65123, 0.36312, 0.13558, 0.060908, 0.024666, 0.014723, 0.0071441, 0.0071441, 0.015444

- 34, 0.070225, 0.0496, 0.017066, 0.25633, 0.55801, 0.21068, 0.071231, 0.064753, 0.025012, 0.015049, 0.0072385, 0.0072385, 0.012838

- 35, 0.060453, 0.061236, 0.01583, 0.1689, 0.61234, 0.24364, 0.076019, 0.062829, 0.024263, 0.014583, 0.0073242, 0.0073242, 0.010224

- 36, 0.063953, 0.061289, 0.015241, 0.31847, 0.6231, 0.40346, 0.10105, 0.052562, 0.024883, 0.014127, 0.0074012, 0.0074012, 0.0076012

- 37, 0.060006, 0.0524, 0.014625, 0.35952, 0.60349, 0.36902, 0.1122, 0.058348, 0.025517, 0.01388, 0.0072872, 0.0072872, 0.0072872

- 38, 0.056348, 0.059489, 0.014069, 0.88224, 0.22979, 0.37532, 0.15297, 0.059744, 0.024865, 0.014094, 0.0072872, 0.0072872, 0.0072872

- 39, 0.061262, 0.048377, 0.014491, 0.25862, 0.63127, 0.31974, 0.08272, 0.056094, 0.025057, 0.014122, 0.0071566, 0.0071566, 0.0071566

- 40, 0.066728, 0.080006, 0.013925, 0.78884, 0.30531, 0.29944, 0.10856, 0.056627, 0.029518, 0.01513, 0.0070243, 0.0070243, 0.0070243

- 41, 0.057282, 0.070652, 0.015018, 0.84558, 0.36726, 0.42601, 0.19688, 0.048028, 0.028732, 0.014637, 0.0068906, 0.0068906, 0.0068906

- 42, 0.051025, 0.059585, 0.014957, 0.88895, 0.42035, 0.46279, 0.1917, 0.044764, 0.027758, 0.014586, 0.0067555, 0.0067555, 0.0067555

- 43, 0.056562, 0.050542, 0.014718, 0.75545, 0.35841, 0.32844, 0.091805, 0.053533, 0.026234, 0.013923, 0.0066191, 0.0066191, 0.0066191

- 44, 0.05128, 0.050714, 0.012445, 0.83994, 0.37611, 0.44403, 0.17927, 0.045354, 0.02589, 0.014554, 0.0064816, 0.0064816, 0.0064816

- 45, 0.046539, 0.069677, 0.014711, 0.91026, 0.36726, 0.45327, 0.18943, 0.044647, 0.025463, 0.014898, 0.0063432, 0.0063432, 0.0063432

- 46, 0.047577, 0.058224, 0.013631, 0.24286, 0.61676, 0.38939, 0.13361, 0.047555, 0.024975, 0.014549, 0.006204, 0.006204, 0.006204

- 47, 0.048531, 0.058935, 0.013855, 0.26402, 0.58456, 0.42828, 0.15189, 0.046562, 0.025329, 0.014774, 0.006064, 0.006064, 0.006064

- 48, 0.04566, 0.049603, 0.013348, 0.91374, 0.40708, 0.48891, 0.22959, 0.043677, 0.024474, 0.014314, 0.0059235, 0.0059235, 0.0059235

- 49, 0.050058, 0.059226, 0.013598, 0.29818, 0.64897, 0.49434, 0.14328, 0.048268, 0.025074, 0.014423, 0.0057826, 0.0057826, 0.0057826

- 50, 0.050137, 0.040702, 0.01179, 0.93191, 0.39381, 0.48446, 0.20568, 0.045885, 0.025535, 0.014546, 0.0056413, 0.0056413, 0.0056413

- 51, 0.045032, 0.070952, 0.012342, 0.34258, 0.65306, 0.50306, 0.23871, 0.040142, 0.025838, 0.0139, 0.0055, 0.0055, 0.0055

- 52, 0.041642, 0.051994, 0.012271, 0.35905, 0.62119, 0.50214, 0.23969, 0.042127, 0.025545, 0.013267, 0.0053587, 0.0053587, 0.0053587

- 53, 0.044805, 0.05751, 0.013511, 0.46594, 0.55984, 0.52771, 0.19312, 0.045933, 0.024737, 0.013548, 0.0052174, 0.0052174, 0.0052174

- 54, 0.044743, 0.052347, 0.012212, 0.53665, 0.48918, 0.50818, 0.21893, 0.044703, 0.02444, 0.014096, 0.0050765, 0.0050765, 0.0050765

- 55, 0.044736, 0.057142, 0.012814, 0.77027, 0.48033, 0.56781, 0.2424, 0.043069, 0.024358, 0.014416, 0.004936, 0.004936, 0.004936

- 56, 0.043671, 0.05097, 0.01306, 0.63739, 0.47425, 0.54878, 0.25517, 0.042334, 0.024461, 0.014416, 0.004796, 0.004796, 0.004796

- 57, 0.040343, 0.058296, 0.012172, 0.4839, 0.67137, 0.59761, 0.27372, 0.041664, 0.024222, 0.013611, 0.0046568, 0.0046568, 0.0046568

- 58, 0.037285, 0.050664, 0.010976, 0.48987, 0.64454, 0.58022, 0.27709, 0.039894, 0.024669, 0.013371, 0.0045184, 0.0045184, 0.0045184

- 59, 0.040715, 0.067929, 0.013086, 0.40427, 0.70452, 0.55269, 0.21571, 0.043384, 0.024927, 0.013063, 0.0043809, 0.0043809, 0.0043809

- 60, 0.038697, 0.0509, 0.010661, 0.49591, 0.73833, 0.59121, 0.27684, 0.03928, 0.024557, 0.0127, 0.0042445, 0.0042445, 0.0042445

- 61, 0.03642, 0.055975, 0.012875, 0.39364, 0.64897, 0.53356, 0.27788, 0.039718, 0.025578, 0.013435, 0.0041094, 0.0041094, 0.0041094

- 62, 0.034472, 0.045737, 0.010772, 0.41212, 0.67675, 0.54766, 0.25564, 0.04032, 0.025577, 0.013627, 0.0039757, 0.0039757, 0.0039757

- 63, 0.037448, 0.047154, 0.010439, 0.42494, 0.67232, 0.53497, 0.25987, 0.041361, 0.025718, 0.013672, 0.0038434, 0.0038434, 0.0038434

- 64, 0.038587, 0.058352, 0.010351, 0.41545, 0.65462, 0.512, 0.23896, 0.040999, 0.026176, 0.012687, 0.0037128, 0.0037128, 0.0037128

- 65, 0.039968, 0.054732, 0.0099146, 0.4643, 0.68117, 0.57008, 0.2703, 0.041119, 0.026233, 0.012212, 0.003584, 0.003584, 0.003584

- 66, 0.038102, 0.042889, 0.011308, 0.45492, 0.6679, 0.56347, 0.28168, 0.041374, 0.025945, 0.011885, 0.003457, 0.003457, 0.003457

- 67, 0.035614, 0.045882, 0.010174, 0.49739, 0.72345, 0.58561, 0.29875, 0.038708, 0.024997, 0.011485, 0.0033321, 0.0033321, 0.0033321

- 68, 0.03435, 0.051877, 0.011103, 0.47934, 0.70452, 0.57385, 0.29845, 0.038263, 0.025442, 0.011421, 0.0032093, 0.0032093, 0.0032093

- 69, 0.032034, 0.03505, 0.0085354, 0.48633, 0.70001, 0.58086, 0.29281, 0.039562, 0.025576, 0.011483, 0.0030888, 0.0030888, 0.0030888

- 70, 0.035074, 0.069821, 0.0094962, 0.49138, 0.78786, 0.60411, 0.32341, 0.037168, 0.024791, 0.010555, 0.0029706, 0.0029706, 0.0029706

- 71, 0.033903, 0.045108, 0.0090304, 0.49288, 0.81337, 0.60005, 0.30997, 0.037606, 0.024819, 0.010493, 0.002855, 0.002855, 0.002855

- 72, 0.034674, 0.058453, 0.0084299, 0.48919, 0.80236, 0.59975, 0.30505, 0.039405, 0.025095, 0.010677, 0.0027419, 0.0027419, 0.0027419

- 73, 0.035418, 0.058167, 0.009096, 0.48303, 0.80674, 0.61423, 0.30122, 0.039118, 0.025532, 0.011023, 0.0026316, 0.0026316, 0.0026316

- 74, 0.032954, 0.035803, 0.0078539, 0.53935, 0.74238, 0.6279, 0.32421, 0.038515, 0.024935, 0.010618, 0.0025241, 0.0025241, 0.0025241

- 75, 0.034768, 0.057479, 0.0087766, 0.52438, 0.69125, 0.61701, 0.32876, 0.037768, 0.025436, 0.010624, 0.0024195, 0.0024195, 0.0024195

- 76, 0.032023, 0.044387, 0.0073166, 0.54293, 0.68682, 0.62101, 0.33014, 0.038265, 0.02557, 0.010101, 0.002318, 0.002318, 0.002318

- 77, 0.033379, 0.052682, 0.0079826, 0.56289, 0.69567, 0.62618, 0.32756, 0.037961, 0.02547, 0.0095888, 0.0022196, 0.0022196, 0.0022196

- 78, 0.032989, 0.049031, 0.0077676, 0.50676, 0.80634, 0.62793, 0.32638, 0.037864, 0.025741, 0.009531, 0.0021245, 0.0021245, 0.0021245

- 79, 0.036435, 0.070149, 0.0085562, 0.51859, 0.77364, 0.63817, 0.31516, 0.039418, 0.02553, 0.0092248, 0.0020327, 0.0020327, 0.0020327

- 80, 0.034297, 0.056937, 0.0075546, 0.51911, 0.82571, 0.65606, 0.33026, 0.039132, 0.02568, 0.0090519, 0.0019443, 0.0019443, 0.0019443

- 81, 0.030353, 0.044885, 0.0079435, 0.53369, 0.83456, 0.65735, 0.3361, 0.038348, 0.025584, 0.0089138, 0.0018594, 0.0018594, 0.0018594

- 82, 0.031562, 0.048363, 0.006391, 0.57755, 0.69125, 0.6413, 0.32771, 0.038439, 0.025732, 0.0090517, 0.0017781, 0.0017781, 0.0017781

- 83, 0.03023, 0.044663, 0.0077737, 0.52885, 0.74667, 0.61712, 0.31247, 0.03826, 0.025933, 0.0099889, 0.0017005, 0.0017005, 0.0017005

- 84, 0.031993, 0.042127, 0.0074711, 0.54067, 0.76961, 0.62372, 0.31279, 0.038384, 0.025838, 0.0092811, 0.0016267, 0.0016267, 0.0016267

- 85, 0.034058, 0.069304, 0.0078305, 0.51686, 0.80236, 0.62381, 0.31117, 0.038734, 0.025922, 0.0093382, 0.0015566, 0.0015566, 0.0015566

- 86, 0.031397, 0.054115, 0.0074804, 0.50381, 0.80236, 0.60823, 0.30825, 0.037925, 0.025972, 0.0098775, 0.0014905, 0.0014905, 0.0014905

- 87, 0.032812, 0.053529, 0.0064338, 0.49371, 0.80236, 0.60179, 0.30719, 0.037996, 0.026082, 0.0099742, 0.0014283, 0.0014283, 0.0014283

- 88, 0.030087, 0.045519, 0.0065488, 0.50928, 0.74631, 0.60312, 0.30383, 0.037711, 0.026214, 0.010212, 0.0013701, 0.0013701, 0.0013701

- 89, 0.031549, 0.051331, 0.0057826, 0.50756, 0.77812, 0.59977, 0.30403, 0.038007, 0.026048, 0.010538, 0.001316, 0.001316, 0.001316

- 90, 0.029909, 0.044738, 0.0058295, 0.52291, 0.77458, 0.60493, 0.30465, 0.03801, 0.026054, 0.01066, 0.001266, 0.001266, 0.001266

- 91, 0.032071, 0.054327, 0.0065371, 0.55263, 0.74238, 0.6132, 0.31097, 0.038026, 0.025726, 0.01046, 0.0012202, 0.0012202, 0.0012202

- 92, 0.030226, 0.049584, 0.0057191, 0.55546, 0.74238, 0.62076, 0.31411, 0.038199, 0.025868, 0.010043, 0.0011787, 0.0011787, 0.0011787

- 93, 0.029781, 0.041098, 0.0054073, 0.59837, 0.71415, 0.64338, 0.32448, 0.03835, 0.02592, 0.0096366, 0.0011414, 0.0011414, 0.0011414

- 94, 0.029206, 0.047728, 0.0056247, 0.61807, 0.7146, 0.64706, 0.3308, 0.038554, 0.025797, 0.0095149, 0.0011084, 0.0011084, 0.0011084

- 95, 0.03066, 0.045028, 0.0065227, 0.58791, 0.77016, 0.66239, 0.33488, 0.038489, 0.025461, 0.0093016, 0.0010797, 0.0010797, 0.0010797

- 96, 0.029913, 0.054846, 0.0052682, 0.58472, 0.74238, 0.66402, 0.3469, 0.037872, 0.025386, 0.0091582, 0.0010554, 0.0010554, 0.0010554

- 97, 0.028202, 0.031975, 0.0055008, 0.59876, 0.74201, 0.66649, 0.3482, 0.037768, 0.025535, 0.0091494, 0.0010355, 0.0010355, 0.0010355

- 98, 0.029025, 0.042972, 0.0064648, 0.62657, 0.67797, 0.67745, 0.35293, 0.037822, 0.02554, 0.0091181, 0.00102, 0.00102, 0.00102

- 99, 0.030325, 0.037819, 0.0047815, 0.64056, 0.68157, 0.66853, 0.34725, 0.037966, 0.025703, 0.0090817, 0.0010089, 0.0010089, 0.0010089

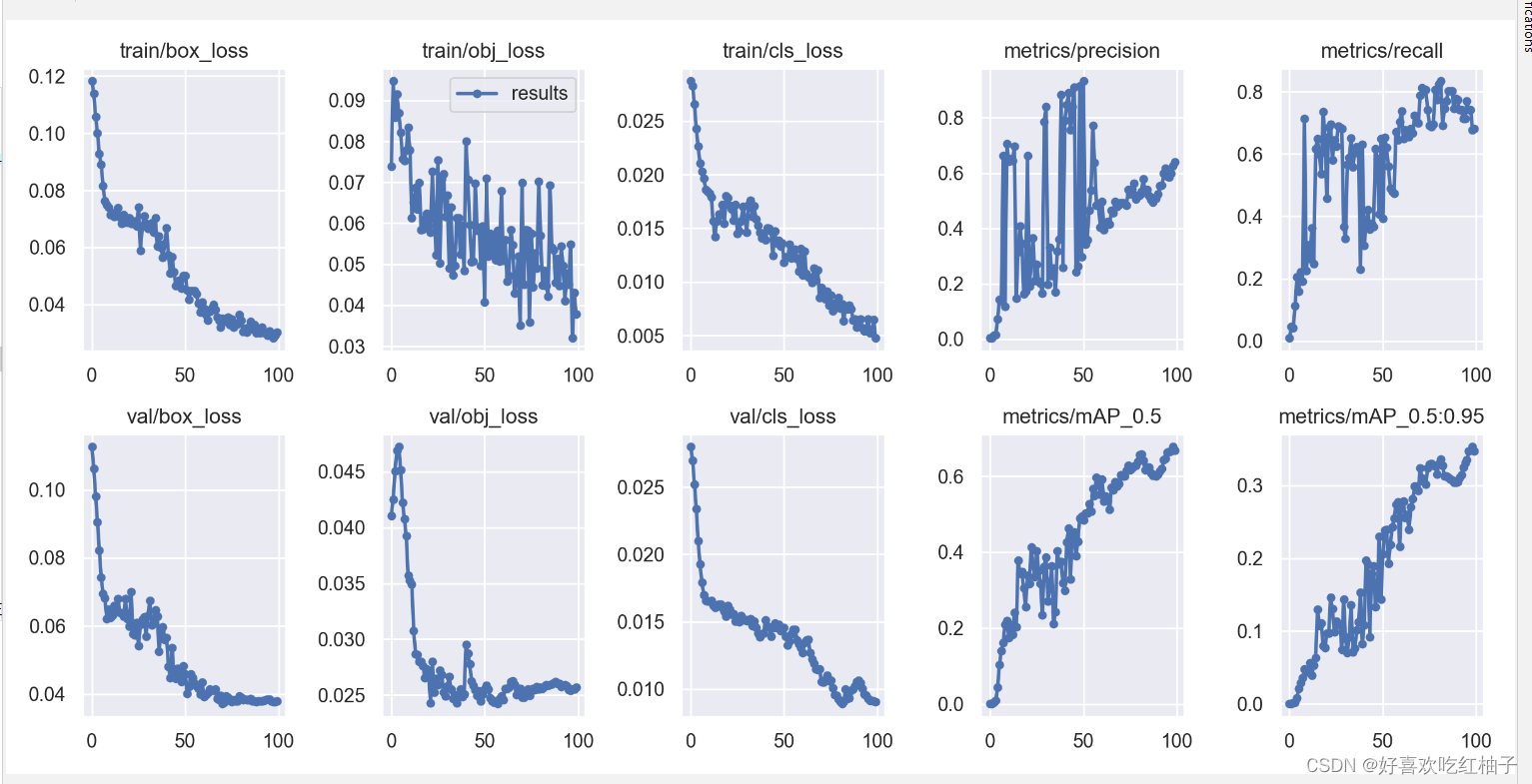

9. results.png

可视化了上面数值的结果,可以大体看出误差是在不断下降的,准确率是在不断提高的

10. 标准结果和预测结果

可以看到图中标出了标注框的位置,也给了对应的预测值

对产生的权重文件进行单独验证

使用val.py文件来进行对best.pt进行单独验证

- val.py和train.py使用方法类似,需要给出数据集的配置文件和权重文件的配置文件

- # python val.py --data data/mask_data.yaml --weights runs/train/exp_yolov5s/weights/best.pt --img 640

- if __name__ == "__main__":

- opt = parse_opt()

- main(opt)

- 把下面这个命令输入到命令框中,注意weights要改成自己的best.pt的路径

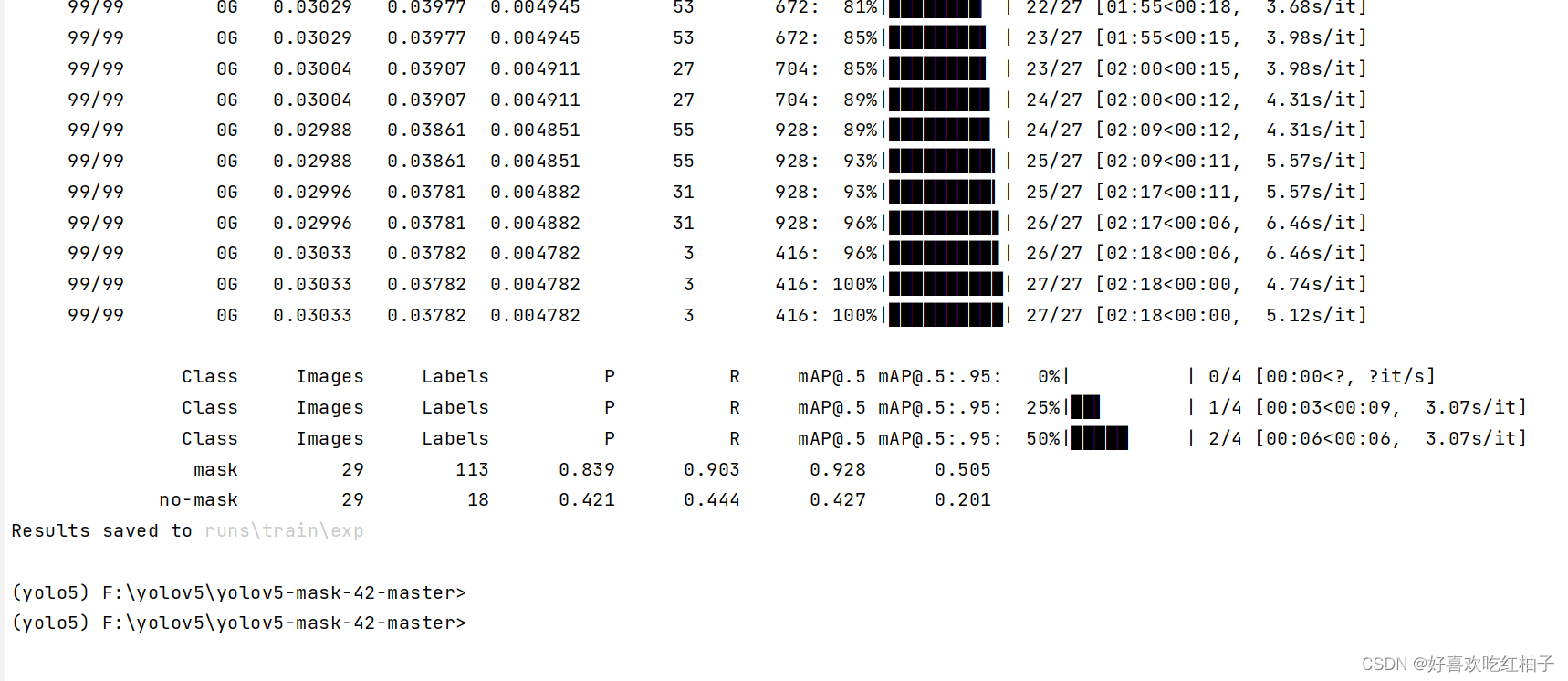

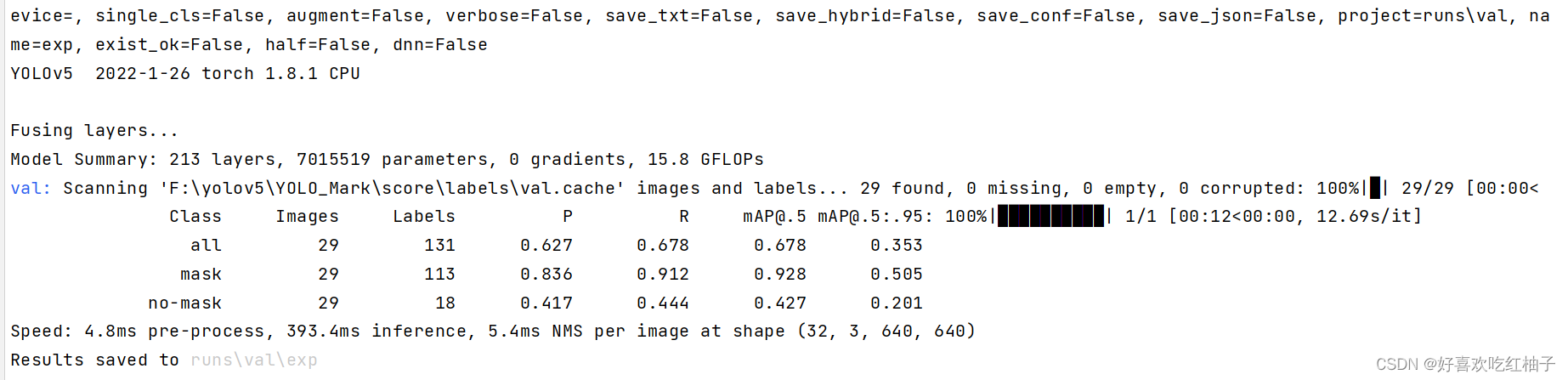

python val.py --data data/mask_data.yaml --weights runs/train/exp/weights/best.pt --img 640- 可以看出他扫描到了验证集中的29张图片,然后进行验证,验证结束

可以看到该模型在全部的类别准确率可以达到0.627,口罩类可以达到0.836,无口罩类可达到0.417

还可以看出处理一张图片需要的预处理时间是4.8ms,393.4ms的推理时间和5.4ms的后处理时间

验证结果保存在runs\val\exp目录下,下图是结果

图形化界面验证

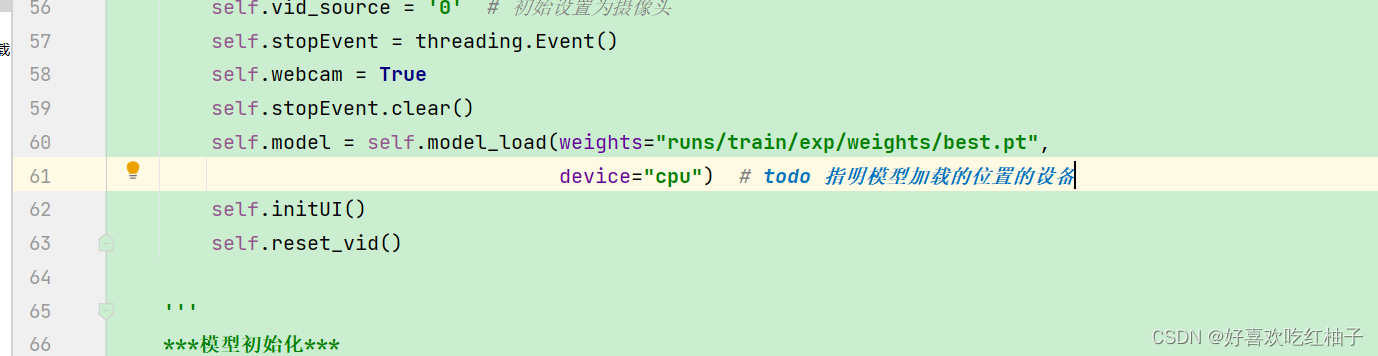

windows.py

把weights路径改为自己训练出来的模型的路径,device写CPU

点击run即可开始运行,弹出GUI界面

检测结果:可以看到我训练的模型的检测结果是不准确的。最大的人脸被识别成了mask类

我又尝试了一下摄像头实时监测自己的脸,发现我没戴口罩,还是有0.8的可能性把我识别成了mask类,所以我的模型由于数据集太小的原因是非常不准确的。

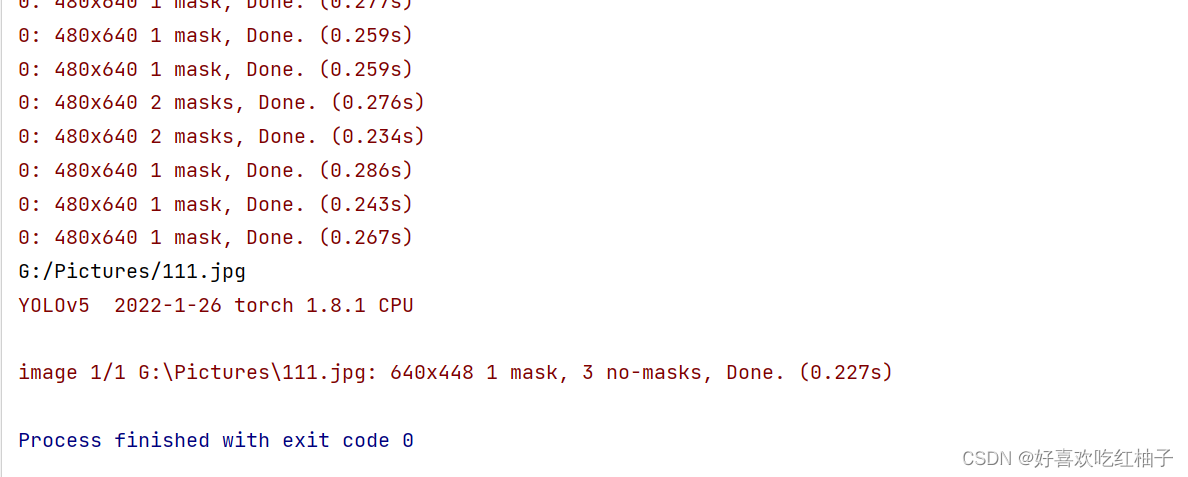

下图是对应命令窗的输出

五、代码详解

项目结构

代码和数据集要放在一个目录下,YOLO_Mask是数据集,下面的是代码包

代码结构

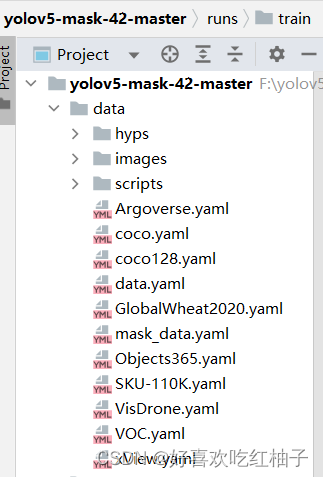

1. data目录

主要是用来指明数据集的配置文件,在mask_data.yaml文件中就说明了数据集的类别个数、类别名等等

(data_mask.yaml需要修改成自己对应的数据集类别和名称)

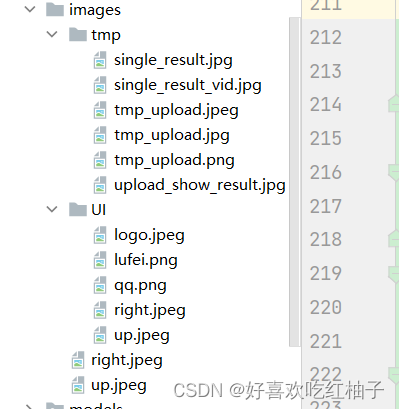

2. image目录

存放 图形化界面相关的文件和中间图片

3. models目录

存放YOLO模型的配置文件,因为yolov5模型有许多不同大小的模型,如s/m/l等三个大中小模型,他们的配置文件都放在这里

(yolov5s.yaml需要修改成自己对应的数据集类别的个数)

4. pretrained目录

存放预训练模型,在CoCo数据集中提前训练好的模型,预训练模型在后续应用到具体类的识别过程中可以提供一定的辅助作用

5. runs目录

存放在运行代码的过程产生的中间的或者最终的结果文件

detect就是最开始进行验证时存放的结果

train就是后来经过自己的数据集训练之后得到的结果

val存放的是训练过程中产生的一些结果,如果模型没有训练完被中断了之后想要验证模型,就可以从val中看结果

6. utils工具包

7. 主文件

- data_gen.py,转换vico格式数据

- detect.py做可视化

- export和hubconf没用

- licence声明版权

- requirement:项目运行需要安装的依赖包,在代码运行前就先通过cmd安装好

- train.py:训练用到的

- val.py:验证用到的

- window.py:运行可视化界面用到的