热门标签

热门文章

- 1Python 教学 | Pandas 分组聚合与数据排序_python聚合并按聚合值从大到小排序

- 2unity 粒子插件_收藏就完事了!Oculus Quest和Unity创意开源项目,你不码住嘛?!...

- 3乌班图初识命令小字典(欢迎补充)_乌班图指令

- 4has been injected into other beans[XXXXXXXXXX] in its raw version as part of a circular reference

- 5anaconda创建并切换多版本python环境_python是3.8,anaconda中是3.10

- 6微服务--实用篇-笔记大全_给黑马旅游添加排序功能

- 7推荐几款便宜幻兽帕鲁(Palworld)联机服务专用服务器_幻兽帕鲁 华为云服务器

- 8Xss(跨站脚本攻击)漏洞解析_xss 攻击, 转义

- 9Java 18中简单 Web 服务器

- 10编程笔记 Golang基础 026 函数

当前位置: article > 正文

使用cloudera-manager CDH大数据集群运维工作记录_hive报创建同一张表 failed to move to trash: hdfs://m

作者:IT小白 | 2024-03-06 02:06:20

赞

踩

hive报创建同一张表 failed to move to trash: hdfs://m

工作中遇到的问题记录

kafka异常退出:

解决思路:

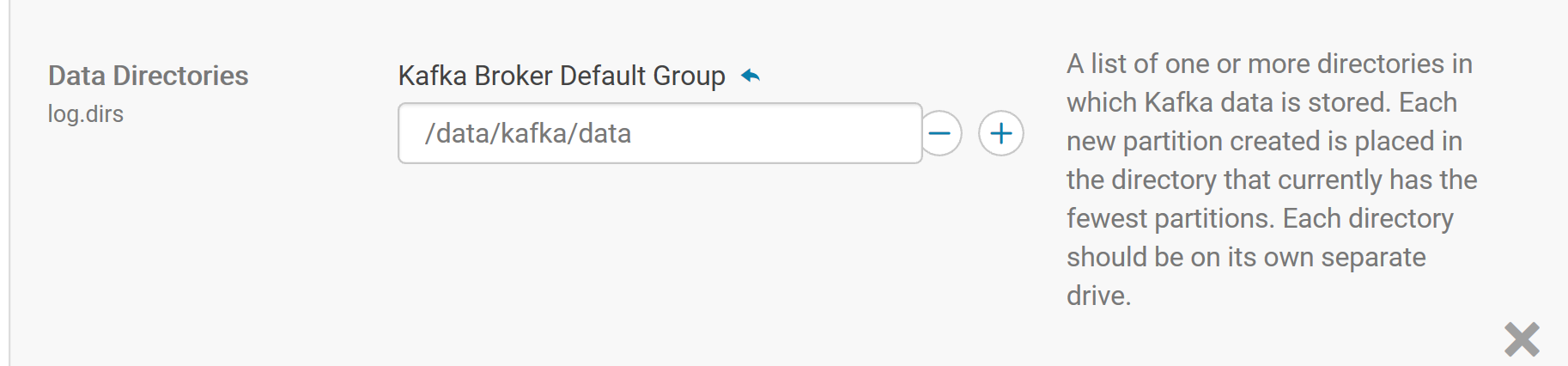

1、排查kafka log,log.dirs:

2、日志如下:

# pwd

/data/log/kafka

# ls -lh

总用量 78M

-rw-r--r-- 1 kafka kafka 78M 9月 18 14:18 kafka-broker-rc-nmg-kfk-rds-woasis1.log

drwxr-xr-x 2 kafka kafka 10 5月 18 18:29 stacks 183308 2017-09-16 08:16:00,397 INFO kafka.controller.RequestSendThread: [Controller-115-to-broker-115-send-thread], Controller 115 connected to rc-n mg-kfk-rds-woasis1:9092 (id: 115 rack: null) for sending state change requests

183309 2017-09-16 08:16:28,180 ERROR kafka.network.Acceptor: Error while accepting connection

183310 java.io.IOException: 打开的文件过多

183311 at sun.nio.ch.ServerSocketChannelImpl.accept0(Native Method)

183312 at sun.nio.ch.ServerSocketChannelImpl.accept(ServerSocketChannelImpl.java:241)

183313 at kafka.network.Acceptor.accept(SocketServer.scala:332)

183314 at kafka.network.Acceptor.run(SocketServer.scala:275)

183315 at java.lang.Thread.run(Thread.java:745)

提示打开文件过多。

解决方法:查看机器文件描述符大小。

# ulimit -ncdh集群中,使用root用户登陆,操作hdfs,无法删除hdfs上的文件:

图片如下:

排查原因是由于权限问题,排查思路和log如下:

# hadoop fs -ls /tmp

Found 3 items

drwxrwxrwx - hdfs supergroup 0 2017-09-18 11:07 /tmp/.cloudera_health_monitoring_canary_files

-rw-r--r-- 3 root supergroup 0 2017-09-18 10:55 /tmp/a.txt

drwx--x--x - hbase supergroup 0 2017-08-02 19:05 /tmp/hbase-staging# hadoop fs -rm -r /tmp/a.txt

17/09/18 11:06:05 WARN fs.TrashPolicyDefault: Can't create trash directory: hdfs://nameservice1/user/root/.Trash/Current/tmp

org.apache.hadoop.security.AccessControlException: Permission denied: user=root, access=WRITE, inode="/":hdfs:supergroup:drwxr-xr-x

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkFsPermission(DefaultAuthorizationProvider.java:281)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:262)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:242)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkPermission(DefaultAuthorizationProvider.java:169)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:152)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkPermission(FSNamesystem.java:6632)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkPermission(FSNamesystem.java:6614)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkAncestorAccess(FSNamesystem.java:6566)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInternal(FSNamesystem.java:4359)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInt(FSNamesystem.java:4329)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:4302)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:869)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.mkdirs(AuthorizationProviderProxyClientProtocol.java:323)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:608)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2086)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2082)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2080)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:526)

at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:106)

at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:73)

at org.apache.hadoop.hdfs.DFSClient.primitiveMkdir(DFSClient.java:3104)

at org.apache.hadoop.hdfs.DFSClient.mkdirs(DFSClient.java:3069)

at org.apache.hadoop.hdfs.DistributedFileSystem$18.doCall(DistributedFileSystem.java:957)

at org.apache.hadoop.hdfs.DistributedFileSystem$18.doCall(DistributedFileSystem.java:953)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.mkdirsInternal(DistributedFileSystem.java:953)

at org.apache.hadoop.hdfs.DistributedFileSystem.mkdirs(DistributedFileSystem.java:946)

at org.apache.hadoop.fs.TrashPolicyDefault.moveToTrash(TrashPolicyDefault.java:144)

at org.apache.hadoop.fs.Trash.moveToTrash(Trash.java:109)

at org.apache.hadoop.fs.Trash.moveToAppropriateTrash(Trash.java:95)

at org.apache.hadoop.fs.shell.Delete$Rm.moveToTrash(Delete.java:118)

at org.apache.hadoop.fs.shell.Delete$Rm.processPath(Delete.java:105)

at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:317)

at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:289)

at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:271)

at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:255)

at org.apache.hadoop.fs.shell.FsCommand.processRawArguments(FsCommand.java:118)

at org.apache.hadoop.fs.shell.Command.run(Command.java:165)

at org.apache.hadoop.fs.FsShell.run(FsShell.java:315)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:84)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:372)

Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.AccessControlException): Permission denied: user=root, access=WRITE, inode="/":hdfs:supergroup:drwxr-xr-x

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkFsPermission(DefaultAuthorizationProvider.java:281)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:262)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:242)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkPermission(DefaultAuthorizationProvider.java:169)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:152)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkPermission(FSNamesystem.java:6632)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkPermission(FSNamesystem.java:6614)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkAncestorAccess(FSNamesystem.java:6566)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInternal(FSNamesystem.java:4359)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInt(FSNamesystem.java:4329)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:4302)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:869)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.mkdirs(AuthorizationProviderProxyClientProtocol.java:323)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:608)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2086)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2082)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2080)

at org.apache.hadoop.ipc.Client.call(Client.java:1471)

at org.apache.hadoop.ipc.Client.call(Client.java:1408)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:230)

at com.sun.proxy.$Proxy14.mkdirs(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.mkdirs(ClientNamenodeProtocolTranslatorPB.java:549)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:256)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:104)

at com.sun.proxy.$Proxy15.mkdirs(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.primitiveMkdir(DFSClient.java:3102)

... 21 more

rm: Failed to move to trash: hdfs://nameservice1/tmp/a.txt: Permission denied: user=root, access=WRITE, inode="/":hdfs:supergroup:drwxr-xr-x

解决方法:

1、使用root用户,转到hdfs用户,执行删除命令:

# su hdfs

$ cd

$ pwd

/var/lib/hadoop-hdfs

$ hadoop fs -ls /tmp

Found 3 items

drwxrwxrwx - hdfs supergroup 0 2017-09-18 11:32 /tmp/.cloudera_health_monitoring_canary_files

-rw-r--r-- 3 root supergroup 0 2017-09-18 10:55 /tmp/a.txt

drwx--x--x - hbase supergroup 0 2017-08-02 19:05 /tmp/hbase-staging

$ hadoop fs -rm -r /tmp/a.txt

17/09/18 11:33:06 INFO fs.TrashPolicyDefault: Moved: 'hdfs://nameservice1/tmp/a.txt' to trash at: hdfs://nameservice1/user/hdfs/.Trash/Current/tmp/a.txt

$ hadoop fs -ls /tmp

Found 2 items

drwxrwxrwx - hdfs supergroup 0 2017-09-18 11:32 /tmp/.cloudera_health_monitoring_canary_files

drwx--x--x - hbase supergroup 0 2017-08-02 19:05 /tmp/hbase-staging

成功删除/tmp/a.txt文件。

2、在cm管理界面中, 查看权限配置情况,dfs.permissions:

Hbase写入变慢的问题排查:

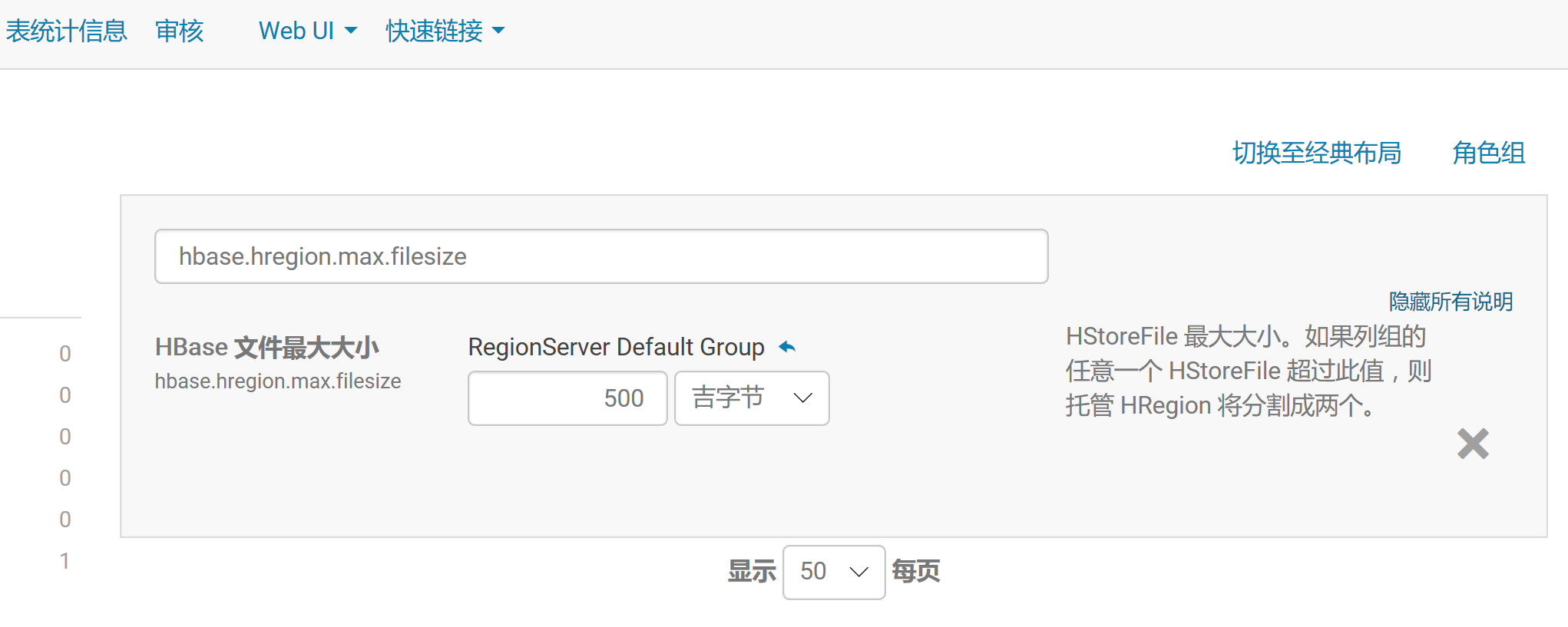

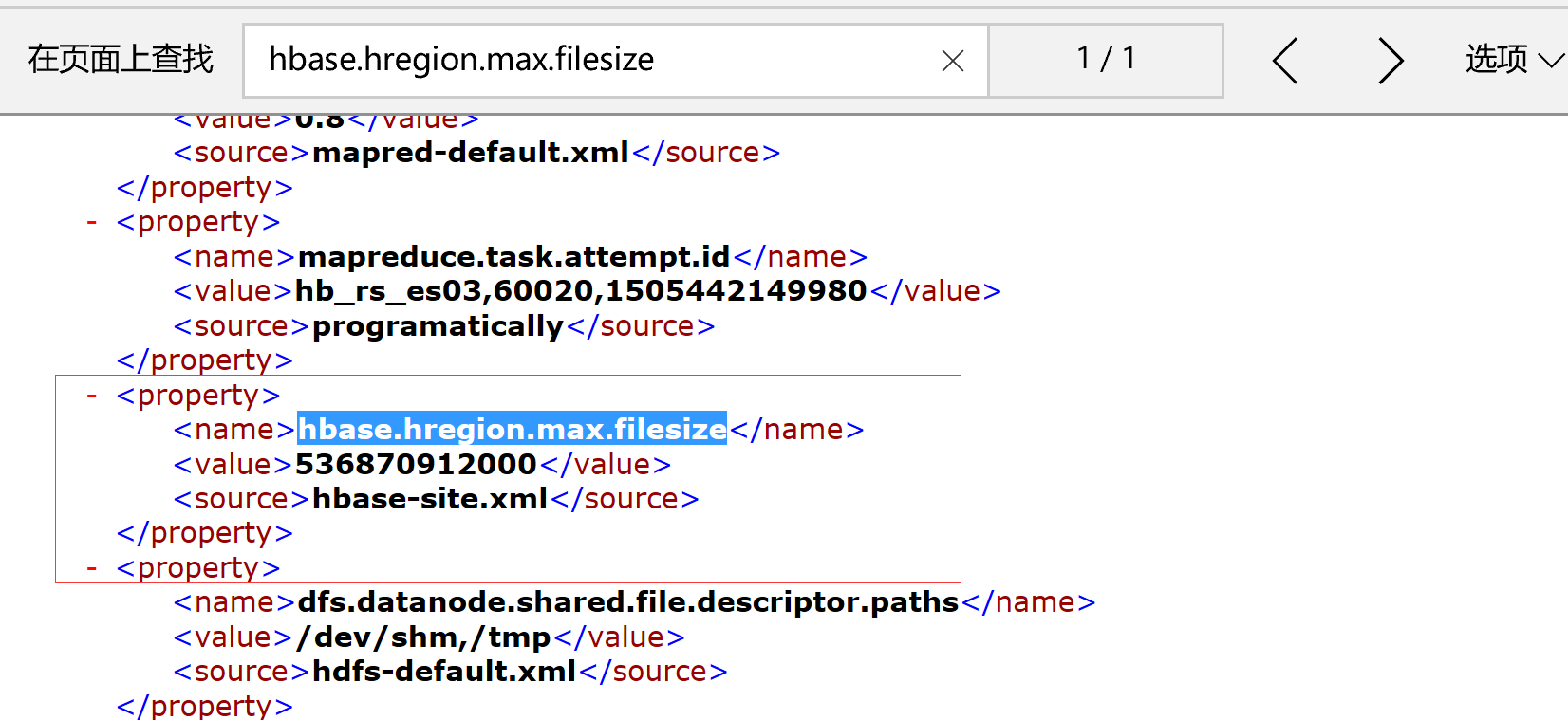

目前配置硬件配置内存32G,:memstore:256M,hbase.hregion.max.filesize:10G (一个region最多管理10G的HFile)

当写入的数据总量超过一定数量(1T)时,写入速度变慢。写入方式rowkey前加hash

推测原因:

对表预建了20个Region,随着数据量膨胀分裂到了160个,

由于写入方式是完全随机写入到各个region中,因为region数量过多,大量时间浪费在等待region释放资源,获取region连接,释放连接。

现在修改hbase.hregion.max.filesize为500G,避免region频繁分裂。使之恢复初始写入速度,修改后进行测试,查看写入慢的问题是否得到解决。

当写入的数据总量超过一定数量(1T)时,写入速度变慢。写入方式rowkey前加hash

推测原因:

对表预建了20个Region,随着数据量膨胀分裂到了160个,

由于写入方式是完全随机写入到各个region中,因为region数量过多,大量时间浪费在等待region释放资源,获取region连接,释放连接。

现在修改hbase.hregion.max.filesize为500G,避免region频繁分裂。使之恢复初始写入速度,修改后进行测试,查看写入慢的问题是否得到解决。

查看hbase 某个rs的配置文件,已经修改成了500G:

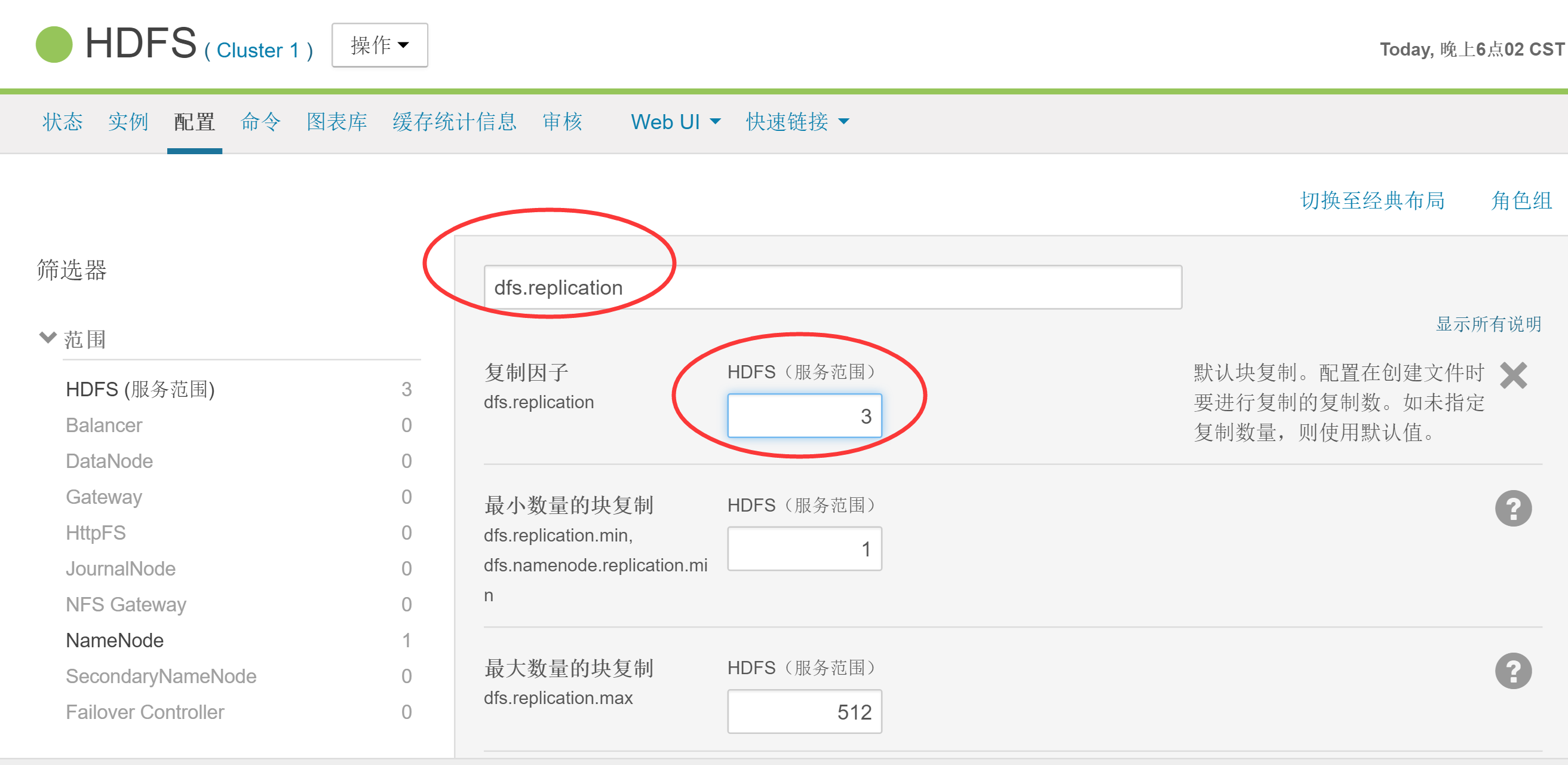

修改hadoop hdfs存储的副本数:

默认情况下,hadoop存储的副本数为3,如果想节省磁盘空间,可以将副本数调小,hdfs-配置-dfs.replication

cdh常用功能目录,方便日志排查:

cdh安装日志目录:

# pwd

/var/log/cloudera-manager-installer

# ls -lh

total 28K

-rw-r--r-- 1 root root 0 Sep 8 15:04 0.check-selinux.log

-rw-r--r-- 1 root root 105 Sep 8 15:04 1.install-repo-pkg.log

-rw-r--r-- 1 root root 1.5K Sep 8 15:04 2.install-oracle-j2sdk1.7.log

-rw-r--r-- 1 root root 2.0K Sep 8 15:05 3.install-cloudera-manager-server.log

-rw-r--r-- 1 root root 33 Sep 8 15:05 4.check-for-systemd.log

-rw-r--r-- 1 root root 3.0K Sep 8 15:05 5.install-cloudera-manager-server-db-2.log

-rw-r--r-- 1 root root 2.0K Sep 8 15:05 6.start-embedded-db.log

-rw-r--r-- 1 root root 59 Sep 8 15:05 7.start-scm-server.logcloudera-scm-server-db数据库目录,密码文件:

# pwd

/var/lib/cloudera-scm-server-db/data

# ls -lh

total 88K

drwx------ 10 cloudera-scm cloudera-scm 4.0K Sep 8 15:05 base

-rw------- 1 cloudera-scm cloudera-scm 264 Sep 8 15:05 generated_password.txt

drwx------ 2 cloudera-scm cloudera-scm 4.0K Sep 8 16:55 global

drwx------ 2 cloudera-scm cloudera-scm 4.0K Sep 8 15:05 pg_clog

-rw------- 1 cloudera-scm cloudera-scm 3.7K Sep 8 15:05 pg_hba.conf

-rw------- 1 cloudera-scm cloudera-scm 1.6K Sep 8 15:05 pg_ident.conf

drwx------ 2 cloudera-scm cloudera-scm 4.0K Sep 8 15:05 pg_log

drwx------ 4 cloudera-scm cloudera-scm 4.0K Sep 8 15:05 pg_multixact

drwx------ 2 cloudera-scm cloudera-scm 4.0K Sep 8 16:55 pg_stat_tmp

drwx------ 2 cloudera-scm cloudera-scm 4.0K Sep 8 15:05 pg_subtrans

drwx------ 2 cloudera-scm cloudera-scm 4.0K Sep 8 15:05 pg_tblspc

drwx------ 2 cloudera-scm cloudera-scm 4.0K Sep 8 15:05 pg_twophase

-rw------- 1 cloudera-scm cloudera-scm 4 Sep 8 15:05 PG_VERSION

drwx------ 3 cloudera-scm cloudera-scm 4.0K Sep 8 15:14 pg_xlog

-rw------- 1 cloudera-scm cloudera-scm 17K Sep 8 15:05 postgresql.conf

-rw------- 1 cloudera-scm cloudera-scm 62 Sep 8 15:05 postmaster.opts

-rw-r--r-- 1 cloudera-scm cloudera-scm 24 Sep 8 15:05 scm.db.list

-rw-r--r-- 1 cloudera-scm cloudera-scm 4 Sep 8 15:05 scm.db.list.20170908-150526

# cat generated_password.txt

W8gEj3gZe1

The password above was generated by /usr/share/cmf/bin/initialize_embedded_db.sh (part of the cloudera-manager-server-db package)

and is the password for the user 'cloudera-scm' for the database in the current directory.

Generated at 20170908-150526.

# cat scm.db.list.20170908-150526

scm

# cat scm.db.list

scm

amon

rman

nav

navms

# cat PG_VERSION

8.4

cloudera-scm-agent配置文件目录:

# pwd

/etc/cloudera-scm-agent

# ls

config.ini

# cat config.ini

[General]

# Hostname of the CM server.

server_host=10.27.166.13

# Port that the CM server is listening on.

server_port=7182

## It should not normally be necessary to modify these.

# Port that the CM agent should listen on.

# listening_port=9000

# IP Address that the CM agent should listen on.

# listening_ip=

# Hostname that the CM agent reports as its hostname. If unset, will be

# obtained in code through something like this:

#

# python -c 'import socket; \

# print socket.getfqdn(), \

# socket.gethostbyname(socket.getfqdn())'

#

# listening_hostname=

# An alternate hostname to report as the hostname for this host in CM.

# Useful when this agent is behind a load balancer or proxy and all

# inbound communication must connect through that proxy.

# reported_hostname=

# Port that supervisord should listen on.

# NB: This only takes effect if supervisord is restarted.

# supervisord_port=19001

# Log file. The supervisord log file will be placed into

# the same directory. Note that if the agent is being started via the

# init.d script, /var/log/cloudera-scm-agent/cloudera-scm-agent.out will

# also have a small amount of output (from before logging is initialized).

# log_file=/var/log/cloudera-scm-agent/cloudera-scm-agent.log

# Persistent state directory. Directory to store CM agent state that

# persists across instances of the agent process and system reboots.

# Particularly, the agent's UUID is stored here.

# lib_dir=/var/lib/cloudera-scm-agent

# Parcel directory. Unpacked parcels will be stored in this directory.

# Downloaded parcels will be stored in <parcel_dir>/../parcel-cache

# parcel_dir=/opt/cloudera/parcels

# Enable supervisord event monitoring. Used in eager heartbeating, amongst

# other things.

# enable_supervisord_events=true

# Maximum time to wait (in seconds) for all metric collectors to finish

# collecting data.

max_collection_wait_seconds=10.0

# Maximum time to wait (in seconds) when connecting to a local role's

# webserver to fetch metrics.

metrics_url_timeout_seconds=30.0

# Maximum time to wait (in seconds) when connecting to a local TaskTracker

# to fetch task attempt data.

task_metrics_timeout_seconds=5.0

# The list of non-device (nodev) filesystem types which will be monitored.

monitored_nodev_filesystem_types=nfs,nfs4,tmpfs

# The list of filesystem types which are considered local for monitoring purposes.

# These filesystems are combined with the other local filesystem types found in

# /proc/filesystems

local_filesystem_whitelist=ext2,ext3,ext4

# The largest size impala profile log bundle that this agent will serve to the

# CM server. If the CM server requests more than this amount, the bundle will

# be limited to this size. All instances of this limit being hit are logged to

# the agent log.

impala_profile_bundle_max_bytes=1073741824

# The largest size stacks log bundle that this agent will serve to the CM

# server. If the CM server requests more than this amount, the bundle will be

# limited to this size. All instances of this limit being hit are logged to the

# agent log.

stacks_log_bundle_max_bytes=1073741824

# The size to which the uncompressed portion of a stacks log can grow before it

# is rotated. The log will then be compressed during rotation.

stacks_log_max_uncompressed_file_size_bytes=5242880

# The orphan process directory staleness threshold. If a diretory is more stale

# than this amount of seconds, CM agent will remove it.

orphan_process_dir_staleness_threshold=5184000

# The orphan process directory refresh interval. The CM agent will check the

# staleness of the orphan processes config directory every this amount of

# seconds.

orphan_process_dir_refresh_interval=3600

# A knob to control the agent logging level. The options are listed as follows:

# 1) DEBUG (set the agent logging level to 'logging.DEBUG')

# 2) INFO (set the agent logging level to 'logging.INFO')

scm_debug=INFO

# The DNS resolution collecion interval in seconds. A java base test program

# will be executed with at most this frequency to collect java DNS resolution

# metrics. The test program is only executed if the associated health test,

# Host DNS Resolution, is enabled.

dns_resolution_collection_interval_seconds=60

# The maximum time to wait (in seconds) for the java test program to collect

# java DNS resolution metrics.

dns_resolution_collection_timeout_seconds=30

# The directory location in which the agent-wide kerberos credential cache

# will be created.

# agent_wide_credential_cache_location=/var/run/cloudera-scm-agent

[Security]

# Use TLS and certificate validation when connecting to the CM server.

use_tls=0

# The maximum allowed depth of the certificate chain returned by the peer.

# The default value of 9 matches the default specified in openssl's

# SSL_CTX_set_verify.

max_cert_depth=9

# A file of CA certificates in PEM format. The file can contain several CA

# certificates identified by

#

# -----BEGIN CERTIFICATE-----

# ... (CA certificate in base64 encoding) ...

# -----END CERTIFICATE-----

#

# sequences. Before, between, and after the certificates text is allowed which

# can be used e.g. for descriptions of the certificates.

#

# The file is loaded once, the first time an HTTPS connection is attempted. A

# restart of the agent is required to pick up changes to the file.

#

# Note that if neither verify_cert_file or verify_cert_dir is set, certificate

# verification will not be performed.

# verify_cert_file=

# Directory containing CA certificates in PEM format. The files each contain one

# CA certificate. The files are looked up by the CA subject name hash value,

# which must hence be available. If more than one CA certificate with the same

# name hash value exist, the extension must be different (e.g. 9d66eef0.0,

# 9d66eef0.1 etc). The search is performed in the ordering of the extension

# number, regardless of other properties of the certificates. Use the c_rehash

# utility to create the necessary links.

#

# The certificates in the directory are only looked up when required, e.g. when

# building the certificate chain or when actually performing the verification

# of a peer certificate. The contents of the directory can thus be changed

# without an agent restart.

#

# When looking up CA certificates, the verify_cert_file is first searched, then

# those in the directory. Certificate matching is done based on the subject name,

# the key identifier (if present), and the serial number as taken from the

# certificate to be verified. If these data do not match, the next certificate

# will be tried. If a first certificate matching the parameters is found, the

# verification process will be performed; no other certificates for the same

# parameters will be searched in case of failure.

#

# Note that if neither verify_cert_file or verify_cert_dir is set, certificate

# verification will not be performed.

# verify_cert_dir=

# PEM file containing client private key.

# client_key_file=

# A command to run which returns the client private key password on stdout

# client_keypw_cmd=

# If client_keypw_cmd isn't specified, instead a text file containing

# the client private key password can be used.

# client_keypw_file=

# PEM file containing client certificate.

# client_cert_file=

## Location of Hadoop files. These are the CDH locations when installed by

## packages. Unused when CDH is installed by parcels.

[Hadoop]

#cdh_crunch_home=/usr/lib/crunch

#cdh_flume_home=/usr/lib/flume-ng

#cdh_hadoop_bin=/usr/bin/hadoop

#cdh_hadoop_home=/usr/lib/hadoop

#cdh_hbase_home=/usr/lib/hbase

#cdh_hbase_indexer_home=/usr/lib/hbase-solr

#cdh_hcat_home=/usr/lib/hive-hcatalog

#cdh_hdfs_home=/usr/lib/hadoop-hdfs

#cdh_hive_home=/usr/lib/hive

#cdh_httpfs_home=/usr/lib/hadoop-httpfs

#cdh_hue_home=/usr/share/hue

#cdh_hue_plugins_home=/usr/lib/hadoop

#cdh_impala_home=/usr/lib/impala

#cdh_llama_home=/usr/lib/llama

#cdh_mr1_home=/usr/lib/hadoop-0.20-mapreduce

#cdh_mr2_home=/usr/lib/hadoop-mapreduce

#cdh_oozie_home=/usr/lib/oozie

#cdh_parquet_home=/usr/lib/parquet

#cdh_pig_home=/usr/lib/pig

#cdh_solr_home=/usr/lib/solr

#cdh_spark_home=/usr/lib/spark

#cdh_sqoop_home=/usr/lib/sqoop

#cdh_sqoop2_home=/usr/lib/sqoop2

#cdh_yarn_home=/usr/lib/hadoop-yarn

#cdh_zookeeper_home=/usr/lib/zookeeper

#hive_default_xml=/etc/hive/conf.dist/hive-default.xml

#webhcat_default_xml=/etc/hive-webhcat/conf.dist/webhcat-default.xml

#jsvc_home=/usr/libexec/bigtop-utils

#tomcat_home=/usr/lib/bigtop-tomcat

## Location of Cloudera Management Services files.

[Cloudera]

#mgmt_home=/usr/share/cmf

## Location of JDBC Drivers.

[JDBC]

#cloudera_mysql_connector_jar=/usr/share/java/mysql-connector-java.jar

#cloudera_oracle_connector_jar=/usr/share/java/oracle-connector-java.jar

#By default, postgres jar is found dynamically in $MGMT_HOME/lib

#cloudera_postgresql_jdbc_jar=

cloudera-scm-server配置文件目录:

# pwd

/etc/cloudera-scm-server

# ls

db.mgmt.properties db.properties db.properties.~1~ db.properties.~2~ db.properties.20170908-150526 db.properties.bak log4j.properties#默认元数据库用户名密码配置# cat db.properties

# Auto-generated by scm_prepare_database.sh on Fri Sep 8 17:28:27 CST 2017

#

# For information describing how to configure the Cloudera Manager Server

# to connect to databases, see the "Cloudera Manager Installation Guide."

#

com.cloudera.cmf.db.type=mysql

com.cloudera.cmf.db.host=10.27.166.13

com.cloudera.cmf.db.name=cmf

com.cloudera.cmf.db.user=用户名

com.cloudera.cmf.db.password=密码

com.cloudera.cmf.db.setupType=EXTERNAL

# cat db.mgmt.properties

# Auto-generated by initialize_embedded_db.sh

#

# 20170908-150526

#

# These are database credentials for databases

# created by "cloudera-scm-server-db" for

# Cloudera Manager Management Services,

# to be used during the installation wizard if

# the embedded database route is taken.

#

# The source of truth for these settings

# is the Cloudera Manager databases and

# changes made here will not be reflected

# there automatically.

#

com.cloudera.cmf.ACTIVITYMONITOR.db.type=postgresql

com.cloudera.cmf.ACTIVITYMONITOR.db.host=hostname:7432

com.cloudera.cmf.ACTIVITYMONITOR.db.name=amon

com.cloudera.cmf.ACTIVITYMONITOR.db.user=amon

com.cloudera.cmf.ACTIVITYMONITOR.db.password=密码

com.cloudera.cmf.REPORTSMANAGER.db.type=postgresql

com.cloudera.cmf.REPORTSMANAGER.db.host=hostname:7432

com.cloudera.cmf.REPORTSMANAGER.db.name=rman

com.cloudera.cmf.REPORTSMANAGER.db.user=rman

com.cloudera.cmf.REPORTSMANAGER.db.password=密码

com.cloudera.cmf.NAVIGATOR.db.type=postgresql

com.cloudera.cmf.NAVIGATOR.db.host=hostname:7432

com.cloudera.cmf.NAVIGATOR.db.name=nav

com.cloudera.cmf.NAVIGATOR.db.user=nav

com.cloudera.cmf.NAVIGATOR.db.password=密码

com.cloudera.cmf.NAVIGATORMETASERVER.db.type=postgresql

com.cloudera.cmf.NAVIGATORMETASERVER.db.host=hostname:7432

com.cloudera.cmf.NAVIGATORMETASERVER.db.name=navms

com.cloudera.cmf.NAVIGATORMETASERVER.db.user=navms

com.cloudera.cmf.NAVIGATORMETASERVER.db.password=密码

集群组件安装目录:

# pwd

/opt/cloudera/parcels

# ls -lh

total 8.0K

lrwxrwxrwx 1 root root 26 Sep 8 18:02 CDH -> CDH-5.9.0-1.cdh5.9.0.p0.23

drwxr-xr-x 11 root root 4.0K Oct 21 2016 CDH-5.9.0-1.cdh5.9.0.p0.23

lrwxrwxrwx 1 root root 25 Sep 11 09:57 KAFKA -> KAFKA-2.2.0-1.2.2.0.p0.68

drwxr-xr-x 6 root root 4.0K Jul 8 07:09 KAFKA-2.2.0-1.2.2.0.p0.68parcel软件包的存放位置:

# pwd

/opt/cloudera/parcel-repo

# ls -lh

total 1.5G

-rw-r----- 1 cloudera-scm cloudera-scm 1.4G Sep 8 16:12 CDH-5.9.0-1.cdh5.9.0.p0.23-el6.parcel

-rw-r----- 1 cloudera-scm cloudera-scm 41 Sep 8 16:12 CDH-5.9.0-1.cdh5.9.0.p0.23-el6.parcel.sha

-rw-r----- 1 cloudera-scm cloudera-scm 56K Sep 8 16:13 CDH-5.9.0-1.cdh5.9.0.p0.23-el6.parcel.torrent

-rw-r----- 1 cloudera-scm cloudera-scm 70M Sep 8 19:55 KAFKA-2.2.0-1.2.2.0.p0.68-el6.parcel

-rw-r----- 1 cloudera-scm cloudera-scm 41 Sep 8 19:55 KAFKA-2.2.0-1.2.2.0.p0.68-el6.parcel.sha

-rw-r----- 1 cloudera-scm cloudera-scm 2.9K Sep 8 19:55 KAFKA-2.2.0-1.2.2.0.p0.68-el6.parcel.torrent

hbase gc时间过长警告:

解决方法:

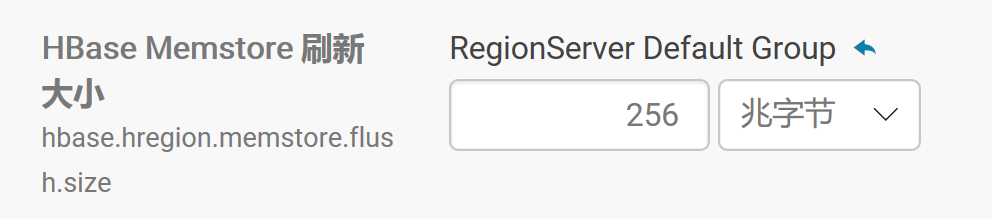

查看hbase-配置-hbase.hregion.memstore.flush.size,该值默认为128MB,如果报gc警告,可适当调小。

声明:本文内容由网友自发贡献,不代表【wpsshop博客】立场,版权归原作者所有,本站不承担相应法律责任。如您发现有侵权的内容,请联系我们。转载请注明出处:https://www.wpsshop.cn/w/IT小白/article/detail/195468

推荐阅读

相关标签