First Impressions of the Jetson TX2

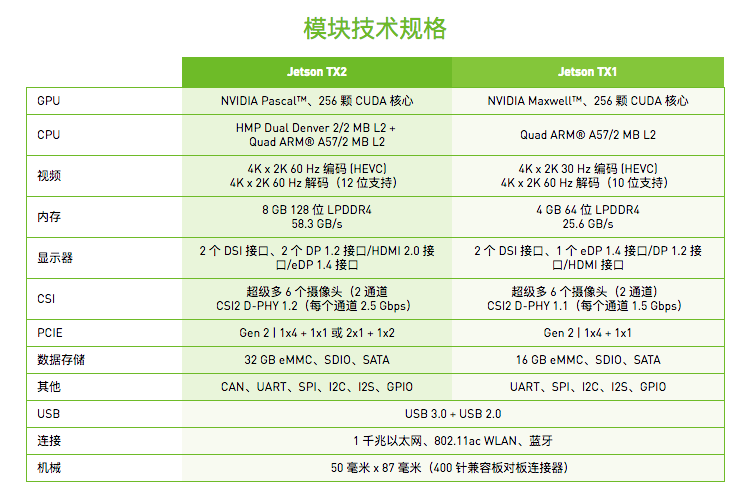

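0. Introduction

The Jetson TX2【1】 is an AI supercomputer-on-a-module built on the NVIDIA Pascal™ architecture. It is powerful (1 TFLOPS), compact, and energy efficient (7.5 W), which makes it a good fit for intelligent edge devices such as robots, drones, smart cameras, and portable medical devices.

Compared with the TX1, the Jetson TX2 doubles the memory and eMMC capacity, moves the CUDA architecture up to Pascal, and doubles performance per watt. It supports every feature of the Jetson TX1 module and can run larger, deeper, and more complex deep neural networks.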

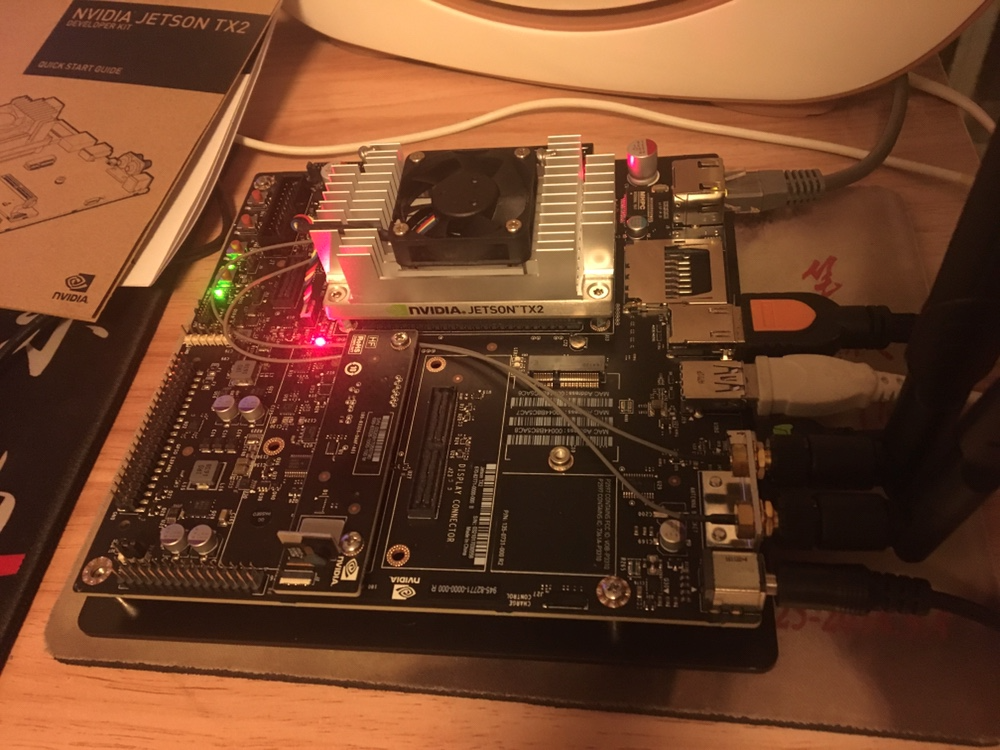

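1. Unboxing

2. Flashing

The TX2 ships with Ubuntu 16.04 preinstalled and boots out of the box. Most people reflash it anyway, to update to the latest JetPack L4T and have the latest drivers, CUDA Toolkit, cuDNN, and TensorRT installed automatically.

Keep the following in mind when flashing:

- The host needs Ubuntu 14.04 with at least 15 GB of free disk space. Do not run JetPack-${VERSION}.run as root. I used JetPack-L4T-3.1-linux-x64.run.

- The TX2 must be put into Recovery Mode; follow the steps in the manual that ships with the board.

- Flashing takes roughly 1 to 2 hours and formats the eMMC, so back up your data first.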
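
Two of the host-side conditions above (not running as root, and at least 15 GB of free disk space) are easy to check before launching the installer. The following is a minimal sketch of such a pre-flight check, not part of JetPack itself. The function names are hypothetical, the 15 GB figure is treated as 15 GiB, and the Ubuntu version and Recovery Mode requirements are not checked.

```python
import os
import shutil

MIN_FREE_GB = 15  # free space the article says the host needs (treated as GiB here)


def preflight_problems(is_root, free_bytes, min_free_gb=MIN_FREE_GB):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    if is_root:
        problems.append("running as root; run the JetPack installer as a normal user")
    if free_bytes < min_free_gb * 1024**3:
        problems.append(
            f"only {free_bytes / 1024**3:.1f} GiB free; need at least {min_free_gb}"
        )
    return problems


def check_host(path="."):
    """Collect the real values from the current host (POSIX only)."""
    is_root = os.geteuid() == 0
    free_bytes = shutil.disk_usage(path).free
    return preflight_problems(is_root, free_bytes)


if __name__ == "__main__":
    for problem in check_host():
        print("WARNING:", problem)
```

Separating `preflight_problems` from `check_host` keeps the decision logic testable without touching the real filesystem or user ID.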

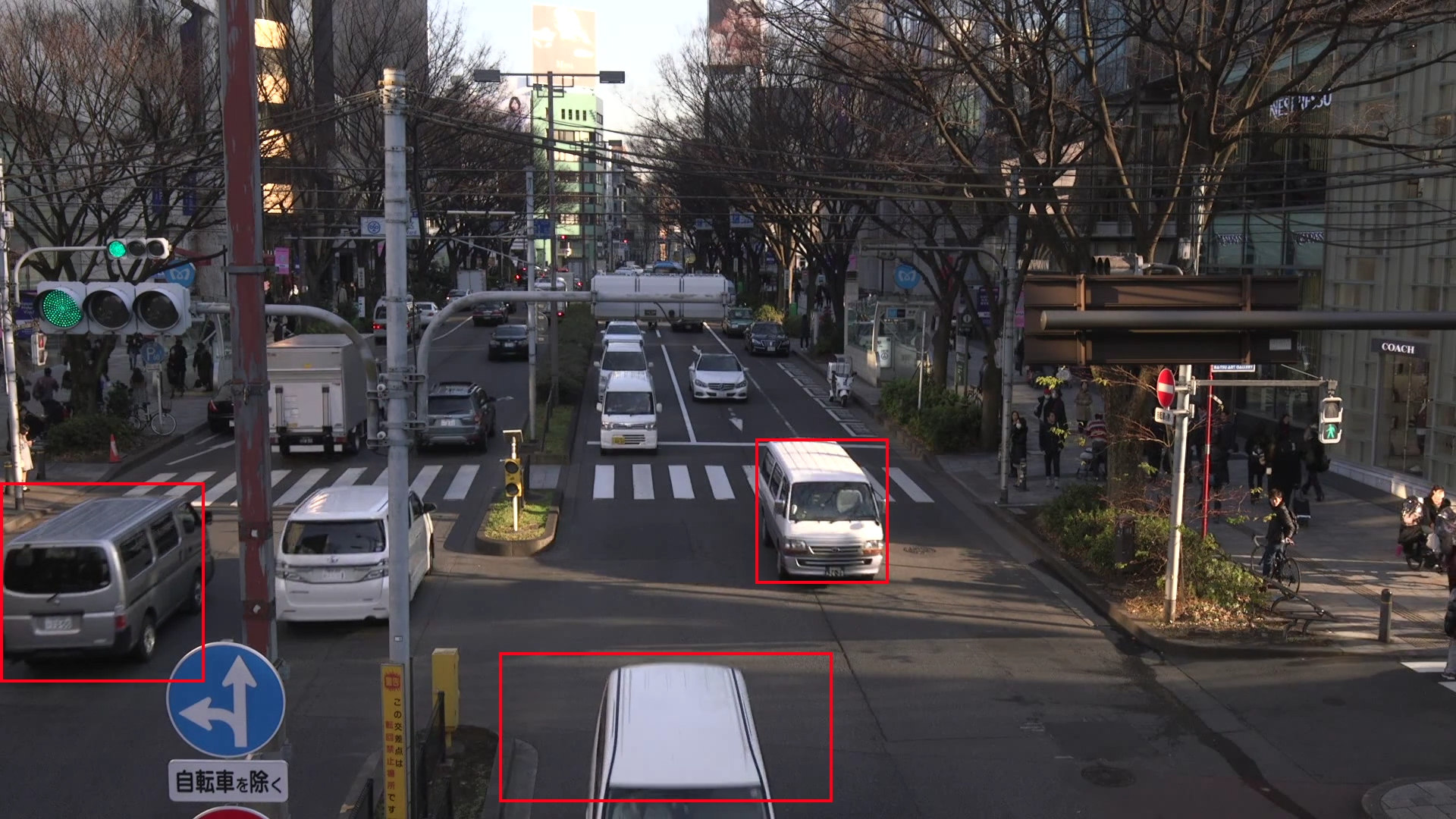

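3. Running the video object detection demo

Once flashing succeeds, reboot the TX2, connect a keyboard, mouse, and monitor, and the demo is ready to run.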

nvidia@tegra-ubuntu:~/tegra_multimedia_api/samples/backend$ ./backend 1 ../../data/Video/sample_outdoor_car_1080p_10fps.h264 H264 --trt-deployfile ../../data/Model/GoogleNet_one_class/GoogleNet_modified_oneClass_halfHD.prototxt --trt-modelfile ../../data/Model/GoogleNet_one_class/GoogleNet_modified_oneClass_halfHD.caffemodel --trt-forcefp32 0 --trt-proc-interval 1 -fps 10
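
The command above is long enough that a typo in one of the model paths is easy to miss. As a readability aid, the sketch below assembles the same argument list piece by piece and prints it. The helper name `build_backend_command` and the constants are hypothetical; the arguments are copied verbatim from the command above, and the meaning of each flag is not described here because the article does not state it.

```python
import shlex

# Paths copied from the command above; they are relative to
# ~/tegra_multimedia_api/samples/backend on the TX2.
DATA = "../../data"
MODEL_DIR = f"{DATA}/Model/GoogleNet_one_class"
MODEL_STEM = "GoogleNet_modified_oneClass_halfHD"


def build_backend_command():
    """Return the demo command as an argument list, in the article's order."""
    return [
        "./backend",
        # Positional arguments, exactly as in the article.
        "1",
        f"{DATA}/Video/sample_outdoor_car_1080p_10fps.h264",
        "H264",
        # Options, exactly as in the article.
        "--trt-deployfile", f"{MODEL_DIR}/{MODEL_STEM}.prototxt",
        "--trt-modelfile", f"{MODEL_DIR}/{MODEL_STEM}.caffemodel",
        "--trt-forcefp32", "0",
        "--trt-proc-interval", "1",
        "-fps", "10",
    ]


if __name__ == "__main__":
    # shlex.join (Python 3.8+) renders the list as a single shell command line.
    print(shlex.join(build_backend_command()))
```

The same list could be passed to `subprocess.run(..., cwd=...)` on the TX2 itself, with the working directory set to the `backend` sample folder.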

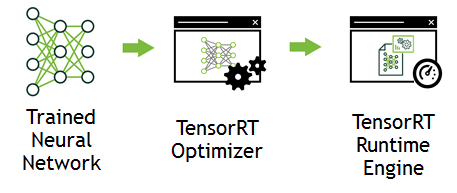

4. Running the TensorRT benchmark

TensorRT【3】 is a deep learning inference optimization library for NVIDIA GPUs. Its optimizer turns a trained model into an inference engine.

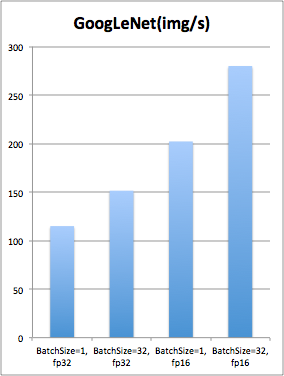

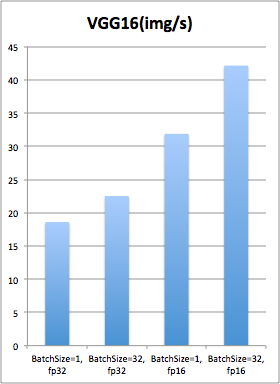

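With the TX2 set to MAXP (maximum performance) mode, running TensorRT-accelerated GoogLeNet and VGG16 gives the following throughput:

5. Features the TX2 does not support

- INT8 is not supported

- Others still to be discovered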

References

【1】Embedded Systems Developer Kits and Modules | NVIDIA Jetson | NVIDIA

【2】Download and Install JetPack L4T

【3】TensorRT

Appendix

deviceQuery

- nvidia@tegra-ubuntu:~/work/TensorRT/tmp/usr/src/tensorrt$ cd /usr/local/cuda/samples/1_Utilities/deviceQuery

- nvidia@tegra-ubuntu:/usr/local/cuda/samples/1_Utilities/deviceQuery$ ls

- deviceQuery deviceQuery.cpp deviceQuery.o Makefile NsightEclipse.xml readme.txt

- nvidia@tegra-ubuntu:/usr/local/cuda/samples/1_Utilities/deviceQuery$ ./deviceQuery

- ./deviceQuery Starting...

-

- CUDA Device Query (Runtime API) version (CUDART static linking)

-

- Detected 1 CUDA Capable device(s)

-

- Device 0: "NVIDIA Tegra X2"

- CUDA Driver Version / Runtime Version 8.0 / 8.0

- CUDA Capability Major/Minor version number: 6.2

- Total amount of global memory: 7851 MBytes (8232062976 bytes)

- ( 2) Multiprocessors, (128) CUDA Cores/MP: 256 CUDA Cores

- GPU Max Clock rate: 1301 MHz (1.30 GHz)

- Memory Clock rate: 1600 Mhz

- Memory Bus Width: 128-bit

- L2 Cache Size: 524288 bytes

- Maximum Texture Dimension Size (x,y,z) 1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)

- Maximum Layered 1D Texture Size, (num) layers 1D=(32768), 2048 layers

- Maximum Layered 2D Texture Size, (num) layers 2D=(32768, 32768), 2048 layers

- Total amount of constant memory: 65536 bytes

- Total amount of shared memory per block: 49152 bytes

- Total number of registers available per block: 32768

- Warp size: 32

- Maximum number of threads per multiprocessor: 2048

- Maximum number of threads per block: 1024

- Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

- Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

- Maximum memory pitch: 2147483647 bytes

- Texture alignment: 512 bytes

- Concurrent copy and kernel execution: Yes with 1 copy engine(s)

- Run time limit on kernels: No

- Integrated GPU sharing Host Memory: Yes

- Support host page-locked memory mapping: Yes

- Alignment requirement for Surfaces: Yes

- Device has ECC support: Disabled

- Device supports Unified Addressing (UVA): Yes

- Device PCI Domain ID / Bus ID / location ID: 0 / 0 / 0

- Compute Mode:

- < Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

-

- deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 8.0, CUDA Runtime Version = 8.0, NumDevs = 1, Device0 = NVIDIA Tegra X2

- Result = PASS
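
The deviceQuery numbers are enough for a rough estimate of theoretical peak FP32 throughput. The sketch below assumes each CUDA core can retire one fused multiply-add (counted as 2 floating-point operations) per clock at the reported maximum clock; that assumption is mine, not something deviceQuery reports. The result is well below the 1 TFLOPS headline figure, which presumably refers to a lower precision such as FP16, although the article does not say so.

```python
# Values read from the deviceQuery output above.
MULTIPROCESSORS = 2
CORES_PER_MP = 128
GPU_MAX_CLOCK_HZ = 1301e6

# Assumption: one FP32 fused multiply-add per core per cycle,
# and an FMA counts as 2 floating-point operations.
FLOPS_PER_CORE_PER_CYCLE = 2


def peak_fp32_gflops(
    mps=MULTIPROCESSORS,
    cores_per_mp=CORES_PER_MP,
    clock_hz=GPU_MAX_CLOCK_HZ,
    flops_per_cycle=FLOPS_PER_CORE_PER_CYCLE,
):
    """Theoretical peak FP32 throughput in GFLOPS under the stated assumption."""
    return mps * cores_per_mp * flops_per_cycle * clock_hz / 1e9


if __name__ == "__main__":
    print(f"{peak_fp32_gflops():.1f} GFLOPS")  # prints "666.1 GFLOPS"
```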

Memory bandwidth test

- nvidia@tegra-ubuntu:/usr/local/cuda/samples/1_Utilities/bandwidthTest$ ./bandwidthTest

- [CUDA Bandwidth Test] - Starting...

- Running on...

-

- Device 0: NVIDIA Tegra X2

- Quick Mode

-

- Host to Device Bandwidth, 1 Device(s)

- PINNED Memory Transfers

- Transfer Size (Bytes) Bandwidth(MB/s)

- 33554432 20215.8

-

- Device to Host Bandwidth, 1 Device(s)

- PINNED Memory Transfers

- Transfer Size (Bytes) Bandwidth(MB/s)

- 33554432 20182.2

-

- Device to Device Bandwidth, 1 Device(s)

- PINNED Memory Transfers

- Transfer Size (Bytes) Bandwidth(MB/s)

- 33554432 35742.8

-

- Result = PASS

-

- NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.
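
To put these figures in context, they can be compared with a theoretical peak derived from the deviceQuery output earlier in this appendix (1600 MHz memory clock, 128-bit bus). The sketch below uses the common estimate of 2 × memory clock × bus width in bytes, which assumes double-data-rate memory. It also treats the sample's MB/s as 10^6 bytes per second, which is an approximation. As the sample's own note says, these numbers are not a rigorous performance measurement.

```python
# From the deviceQuery output: "Memory Clock rate" and "Memory Bus Width".
MEMORY_CLOCK_HZ = 1600e6
BUS_WIDTH_BITS = 128
DATA_RATE_MULTIPLIER = 2  # assumption: double data rate memory

# Measured values from the bandwidthTest output above, in MB/s.
MEASURED_MB_S = {
    "host_to_device": 20215.8,
    "device_to_host": 20182.2,
    "device_to_device": 35742.8,
}


def peak_bandwidth_mb_s():
    """Theoretical peak memory bandwidth in MB/s (1 MB = 10**6 bytes)."""
    bytes_per_transfer = BUS_WIDTH_BITS / 8
    return MEMORY_CLOCK_HZ * DATA_RATE_MULTIPLIER * bytes_per_transfer / 1e6


def fraction_of_peak(measured_mb_s):
    """Measured bandwidth as a fraction of the theoretical peak."""
    return measured_mb_s / peak_bandwidth_mb_s()


if __name__ == "__main__":
    print(f"peak: {peak_bandwidth_mb_s():.0f} MB/s")
    for name, value in MEASURED_MB_S.items():
        print(f"{name}: {fraction_of_peak(value):.1%} of peak")
```

Host-to-device and device-to-host land close to each other, which is consistent with deviceQuery reporting an integrated GPU that shares memory with the host.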

GEMM test

- nvidia@tegra-ubuntu:/usr/local/cuda/samples/7_CUDALibraries/batchCUBLAS$ ./batchCUBLAS -m1024 -n1024 -k1024

- batchCUBLAS Starting...

-

- GPU Device 0: "NVIDIA Tegra X2" with compute capability 6.2

-

-

- ==== Running single kernels ====

-

- Testing sgemm

- #### args: ta=0 tb=0 m=1024 n=1024 k=1024 alpha = (0xbf800000, -1) beta= (0x40000000, 2)

- #### args: lda=1024 ldb=1024 ldc=1024

- ^^^^ elapsed = 0.00372291 sec GFLOPS=576.83

- @@@@ sgemm test OK

- Testing dgemm

- #### args: ta=0 tb=0 m=1024 n=1024 k=1024 alpha = (0x0000000000000000, 0) beta= (0x0000000000000000, 0)

- #### args: lda=1024 ldb=1024 ldc=1024

- ^^^^ elapsed = 0.10940003 sec GFLOPS=19.6296

- @@@@ dgemm test OK

-

- ==== Running N=10 without streams ====

-

- Testing sgemm

- #### args: ta=0 tb=0 m=1024 n=1024 k=1024 alpha = (0xbf800000, -1) beta= (0x00000000, 0)

- #### args: lda=1024 ldb=1024 ldc=1024

- ^^^^ elapsed = 0.03462315 sec GFLOPS=620.245

- @@@@ sgemm test OK

- Testing dgemm

- #### args: ta=0 tb=0 m=1024 n=1024 k=1024 alpha = (0xbff0000000000000, -1) beta= (0x0000000000000000, 0)

- #### args: lda=1024 ldb=1024 ldc=1024

- ^^^^ elapsed = 1.09212208 sec GFLOPS=19.6634

- @@@@ dgemm test OK

-

- ==== Running N=10 with streams ====

-

- Testing sgemm

- #### args: ta=0 tb=0 m=1024 n=1024 k=1024 alpha = (0x40000000, 2) beta= (0x40000000, 2)

- #### args: lda=1024 ldb=1024 ldc=1024

- ^^^^ elapsed = 0.03504515 sec GFLOPS=612.776

- @@@@ sgemm test OK

- Testing dgemm

- #### args: ta=0 tb=0 m=1024 n=1024 k=1024 alpha = (0xbff0000000000000, -1) beta= (0x0000000000000000, 0)

- #### args: lda=1024 ldb=1024 ldc=1024

- ^^^^ elapsed = 1.09177494 sec GFLOPS=19.6697

- @@@@ dgemm test OK

-

- ==== Running N=10 batched ====

-

- Testing sgemm

- #### args: ta=0 tb=0 m=1024 n=1024 k=1024 alpha = (0x3f800000, 1) beta= (0xbf800000, -1)

- #### args: lda=1024 ldb=1024 ldc=1024

- ^^^^ elapsed = 0.03766394 sec GFLOPS=570.17

- @@@@ sgemm test OK

- Testing dgemm

- #### args: ta=0 tb=0 m=1024 n=1024 k=1024 alpha = (0xbff0000000000000, -1) beta= (0x4000000000000000, 2)

- #### args: lda=1024 ldb=1024 ldc=1024

- ^^^^ elapsed = 1.09389901 sec GFLOPS=19.6315

- @@@@ dgemm test OK

-

- Test Summary

- 0 error(s)
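
The GFLOPS figures in this log are consistent with the usual GEMM operation count of 2·m·n·k per matrix product, multiplied by the number of products and divided by the elapsed time. The sketch below recomputes a few of them from the logged elapsed times as a sanity check. The helper `gemm_gflops` is hypothetical and is not taken from the batchCUBLAS source.

```python
def gemm_gflops(m, n, k, elapsed_s, count=1):
    """GFLOPS for `count` GEMMs of size m x n x k, at 2*m*n*k operations each."""
    return 2.0 * m * n * k * count / elapsed_s / 1e9


# (label, number of GEMMs, elapsed seconds, GFLOPS reported in the log above)
RUNS = [
    ("sgemm, single kernel", 1, 0.00372291, 576.83),
    ("dgemm, single kernel", 1, 0.10940003, 19.6296),
    ("sgemm, N=10 without streams", 10, 0.03462315, 620.245),
    ("dgemm, N=10 batched", 10, 1.09389901, 19.6315),
]

if __name__ == "__main__":
    for label, count, elapsed, reported in RUNS:
        computed = gemm_gflops(1024, 1024, 1024, elapsed, count)
        print(f"{label}: computed {computed:.2f}, reported {reported}")
```

Single precision peaks at roughly 620 GFLOPS in these runs, while double precision stays near 19.6 GFLOPS, about 30 times lower.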