Fixing "User class threw exception: org.apache.spark.SparkException: Task not serializable"

Author: Monodyee | 2024-04-23 15:28:15


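Problem description:

I planned to write the results of a Spark SQL query to MySQL through the Alibaba Druid connection pool (alibaba.druid.pool), but the job failed with a serialization error: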

    User class threw exception: org.apache.spark.SparkException: Task not serializable
        at org.apache.spark.util.ClosureCleaner$.ensureSerializable(ClosureCleaner.scala:345)
        at org.apache.spark.util.ClosureCleaner$.org$apache$spark$util$ClosureCleaner$$clean(ClosureCleaner.scala:335)
        at org.apache.spark.util.ClosureCleaner$.clean(ClosureCleaner.scala:159)
        at org.apache.spark.SparkContext.clean(SparkContext.scala:2304)
        at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1.apply(RDD.scala:934)
        at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1.apply(RDD.scala:933)
        at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)
        at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)
        at org.apache.spark.rdd.RDD.withScope(RDD.scala:363)
        at org.apache.spark.rdd.RDD.foreachPartition(RDD.scala:933)
        at org.apache.spark.sql.Dataset$$anonfun$foreachPartition$1.apply$mcV$sp(Dataset.scala:2680)
        at org.apache.spark.sql.Dataset$$anonfun$foreachPartition$1.apply(Dataset.scala:2680)
        at org.apache.spark.sql.Dataset$$anonfun$foreachPartition$1.apply(Dataset.scala:2680)
        ...


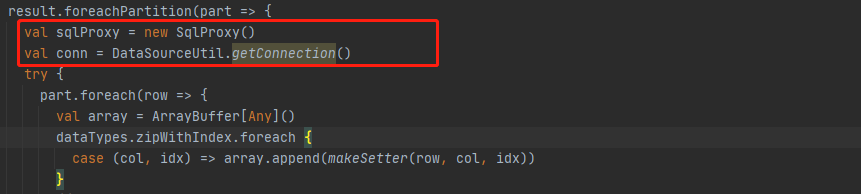

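Solutions:

- Create conn inside the operator (here, inside the foreachPartition closure), so that it is built on the executor and never has to be serialized. Tested, works.

- Make the class serializable and use a broadcast variable: create conn outside the operator, then call sparkSession.sparkContext.broadcast(conn).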
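
The first fix can be sketched roughly as follows. This is a minimal illustration, not the original job: the object name `MonthlyStatsWriter`, the table `monthly_stats`, its two columns, and the connection parameters are all assumptions, and plain `java.sql.DriverManager` stands in for the Druid pool to keep the example to JDK calls. It needs spark-sql and a MySQL JDBC driver on the classpath.

```scala
import java.sql.DriverManager

import org.apache.spark.sql.DataFrame

object MonthlyStatsWriter {
  // Hypothetical target table and columns, for illustration only.
  val insertSql = "INSERT INTO monthly_stats (stat_month, total) VALUES (?, ?)"

  // Assumes df has exactly two columns: a String month and a Long total.
  def save(df: DataFrame, url: String, user: String, password: String): Unit = {
    val sql = insertSql // local copy, so the closure captures only Strings
    df.rdd.foreachPartition { rows =>
      // This body runs on an executor, so the connection is created there
      // and never has to be serialized.
      val conn = DriverManager.getConnection(url, user, password)
      try {
        val stmt = conn.prepareStatement(sql)
        try {
          rows.foreach { row =>
            stmt.setString(1, row.getString(0))
            stmt.setLong(2, row.getLong(1))
            stmt.addBatch()
          }
          stmt.executeBatch() // one batch per partition
        } finally stmt.close()
      } finally conn.close()
    }
  }
}
```

The closure captures only the three connection strings and the SQL text, all of which are serializable. Opening one connection per partition, rather than per row, keeps the connection count bounded by the number of partitions.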

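The second approach did not work for me. Even after making DataSourceUtil serializable, the broadcast failed with the error below, presumably because DruidPooledConnection itself is not serializable. The serialization stack in the trace names that class as the object that could not be serialized.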

    User class threw exception: java.io.NotSerializableException: com.alibaba.druid.pool.DruidPooledConnection
    Serialization stack:
        - object not serializable (class: com.alibaba.druid.pool.DruidPooledConnection, value: com.mysql.jdbc.JDBC4Connection@194812b9)
        at org.apache.spark.serializer.SerializationDebugger$.improveException(SerializationDebugger.scala:40)
        at org.apache.spark.serializer.JavaSerializationStream.writeObject(JavaSerializer.scala:46)
        at org.apache.spark.broadcast.TorrentBroadcast$$anonfun$blockifyObject$2.apply(TorrentBroadcast.scala:291)
        at org.apache.spark.broadcast.TorrentBroadcast$$anonfun$blockifyObject$2.apply(TorrentBroadcast.scala:291)
        at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1381)
        at org.apache.spark.broadcast.TorrentBroadcast$.blockifyObject(TorrentBroadcast.scala:292)
        at org.apache.spark.broadcast.TorrentBroadcast.writeBlocks(TorrentBroadcast.scala:127)
        at org.apache.spark.broadcast.TorrentBroadcast.<init>(TorrentBroadcast.scala:88)
        at org.apache.spark.broadcast.TorrentBroadcastFactory.newBroadcast(TorrentBroadcastFactory.scala:34)
        at org.apache.spark.broadcast.BroadcastManager.newBroadcast(BroadcastManager.scala:62)
        at org.apache.spark.SparkContext.broadcast(SparkContext.scala:1487)
        at com.aisino.service.DwdDataService$.monthlyStatistics_1(DwdDataService.scala:61)
        at com.aisino.controller.Test11$.main(Test11.scala:27)
        at com.aisino.controller.Test11.main(Test11.scala)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:498)
        at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$4.run(ApplicationMaster.scala:721)
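
Both failures come from Java serialization refusing an object that holds a live connection. The sketch below reproduces that with the JDK alone, no Spark required. `FakeConnection`, `ConnectionHolder`, and `isSerializable` are hypothetical names invented for this illustration; `FakeConnection` merely stands in for a non-serializable class such as DruidPooledConnection. It also shows one common workaround, a serializable holder with a `@transient lazy val`, which is an alternative to broadcasting the connection and not something the original post tried.

```scala
import java.io.{ByteArrayOutputStream, NotSerializableException, ObjectOutputStream}

object SerializationDemo {
  // Hypothetical stand-in for a live JDBC connection: not Serializable.
  class FakeConnection {
    def write(s: String): Unit = println(s"write $s")
  }

  // Serializable wrapper. The field is @transient, so it is skipped during
  // serialization, and lazy, so it is created on first use in whichever
  // JVM touches it.
  class ConnectionHolder extends Serializable {
    @transient lazy val conn: FakeConnection = new FakeConnection
  }

  // Attempts Java serialization and reports whether it succeeded.
  def isSerializable(obj: AnyRef): Boolean =
    try {
      val out = new ObjectOutputStream(new ByteArrayOutputStream())
      out.writeObject(obj)
      out.close()
      true
    } catch {
      case _: NotSerializableException => false
    }

  def main(args: Array[String]): Unit = {
    val conn = new FakeConnection
    val holder = new ConnectionHolder

    // Captures the connection itself, like a closure using a driver-side conn.
    val capturing: Iterator[String] => Unit =
      rows => rows.foreach(r => conn.write(r))
    // Captures only the serializable holder.
    val viaHolder: Iterator[String] => Unit =
      rows => rows.foreach(r => holder.conn.write(r))

    println(isSerializable(conn))      // false
    println(isSerializable(capturing)) // false
    println(isSerializable(holder))    // true
    println(isSerializable(viaHolder)) // true
  }
}
```

Marking a wrapper class such as DataSourceUtil as Serializable does not help when the value handed to broadcast is the connection itself, because every object actually written must be serializable.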


Copyright belongs to the original author. When reposting, please cite the source: https://www.wpsshop.cn/w/Monodyee/article/detail/474620
