
Hadoop 3.1.3 Detailed Installation Tutorial


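Setting Up the Hadoop Runtime Environment

Preparing the Virtual Machines (run on all three VMs)

1. Prepare three virtual machines configured as follows:

(1) Each VM: 4 GB RAM and a 50 GB disk, with the required packages installed: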

sudo yum install -y epel-release

sudo yum install -y psmisc nc net-tools rsync vim lrzsz ntp libzstd openssl-static tree iotop git


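(2) Set a static IP on each cloned VM (192.168.43.153, 192.168.43.154 and 192.168.43.155 for hadoop001, hadoop002 and hadoop003 respectively):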

sudo vim /etc/sysconfig/network-scripts/ifcfg-ens33


DEVICE=ens33

TYPE=Ethernet

ONBOOT=yes

BOOTPROTO=static

NAME="ens33"

IPADDR=192.168.43.153

PREFIX=24

GATEWAY=192.168.43.2

DNS1=192.168.43.2
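The three clones differ only in IPADDR, so the file can be generated from one template instead of being edited by hand on each VM. This is a minimal sketch. render_ifcfg is a hypothetical helper, and the interface name, prefix, gateway and DNS values are simply the ones shown above.

```bash
#!/usr/bin/env bash
# render_ifcfg IP: print an ifcfg-ens33 file for the given static IP.
# Hypothetical helper; DEVICE, PREFIX, GATEWAY and DNS1 are this
# tutorial's values and may need changing for another network.
render_ifcfg() {
  local ip="$1"
  # Unquoted heredoc delimiter so ${ip} is expanded
  cat <<EOF
DEVICE=ens33
TYPE=Ethernet
ONBOOT=yes
BOOTPROTO=static
NAME="ens33"
IPADDR=${ip}
PREFIX=24
GATEWAY=192.168.43.2
DNS1=192.168.43.2
EOF
}
```

For example, on hadoop002 you would run `render_ifcfg 192.168.43.154 | sudo tee /etc/sysconfig/network-scripts/ifcfg-ens33`. Then restart networking. On CentOS 7, which is assumed here, the command is `sudo systemctl restart network`.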

2. Change the hostname

(1) Set the hostname (hadoop001, hadoop002 or hadoop003, matching the VM's IP):

sudo hostnamectl --static set-hostname hadoop001
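Hadoop embeds these hostnames in URIs, for example the hdfs:// address in core-site.xml. Java's URI parser does not accept a host name that contains an underscore, so a name like hadoop_001 tends to cause confusing failures later. A quick check before running hostnamectl can catch this early. valid_hostname and set_cluster_hostname are hypothetical helpers. They accept a single RFC 1123 label: letters, digits and hyphens, at most 63 characters, not starting or ending with a hyphen.

```bash
#!/usr/bin/env bash
# valid_hostname NAME: succeed if NAME is a single RFC 1123 label.
# Hypothetical helper; dotted FQDNs are intentionally not accepted here.
valid_hostname() {
  local re='^[A-Za-z0-9]([A-Za-z0-9-]{0,61}[A-Za-z0-9])?$'
  [[ "$1" =~ $re ]]
}

# set_cluster_hostname NAME: only call hostnamectl if NAME passes the check
set_cluster_hostname() {
  local name="$1"
  if ! valid_hostname "$name"; then
    echo "invalid hostname: $name" >&2
    return 1
  fi
  sudo hostnamectl --static set-hostname "$name"
}
```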

(2) Configure the hostname mappings in /etc/hosts:

sudo vim /etc/hosts


192.168.43.153 hadoop001

192.168.43.154 hadoop002

192.168.43.155 hadoop003
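Re-running a setup step should not duplicate lines in /etc/hosts. Below is a minimal idempotent sketch: it appends each mapping only if that exact line is not already present. add_host_entries is a hypothetical name, and writing /etc/hosts requires root.

```bash
#!/usr/bin/env bash
# Cluster mappings, copied from the hosts entries above
CLUSTER_HOSTS=(
  "192.168.43.153 hadoop001"
  "192.168.43.154 hadoop002"
  "192.168.43.155 hadoop003"
)

# add_host_entries [FILE]: append each mapping to FILE (default /etc/hosts)
# unless an identical line is already there, so re-running is harmless.
add_host_entries() {
  local hosts_file="${1:-/etc/hosts}" entry
  for entry in "${CLUSTER_HOSTS[@]}"; do
    # -q quiet, -x whole-line match, -F fixed string (dots are not regex)
    if ! grep -qxF "$entry" "$hosts_file" 2>/dev/null; then
      printf '%s\n' "$entry" >> "$hosts_file"
    fi
  done
}
```

The helper only detects exact duplicates. An older line that maps the same hostname to a different IP still has to be removed by hand.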

(3) Update the Windows hosts file

Open C:\Windows\System32\drivers\etc\hosts (as administrator) and add:

192.168.43.153 hadoop001

192.168.43.154 hadoop002

192.168.43.155 hadoop003

3. Disable the firewall

sudo systemctl stop firewalld

sudo systemctl disable firewalld

4. Create a software directory under /opt

cd /opt

sudo mkdir software

5. Install the JDK

(1) Uninstall any existing JDK

rpm -qa | grep -i java | xargs -n1 sudo rpm -e --nodeps

(2) Install the JDK (upload all tarballs to /opt/software first)

cd /opt/software

sudo tar -zxvf jdk-8u201-linux-x64.tar.gz

sudo vi /etc/profile

Append to the end of the file:

export JAVA_HOME=/opt/software/jdk1.8.0_201

export PATH=$JAVA_HOME/bin:$PATH

Apply the environment variables (source is a shell builtin, so it cannot be run through sudo):

source /etc/profile

java -version
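Hadoop 3.1.x runs on Java 8. Java 11 runtime support only arrived in later 3.x releases. It is therefore worth confirming that java -version reports 1.8, and not some other JDK left on the PATH. The sketch below extracts the major version. Both helper names are hypothetical.

```bash
#!/usr/bin/env bash
# java_major_version VERSION: 1.8.0_201 -> 8, 11.0.2 -> 11
java_major_version() {
  local v="$1"
  # Legacy scheme "1.x": drop the leading "1."
  if [[ "$v" == 1.* ]]; then
    v="${v#1.}"
  fi
  # Keep everything before the first '.', '_' or '-'
  echo "${v%%[._-]*}"
}

# installed_java_major: parse the first line of `java -version`
# (java prints it on stderr, e.g.: java version "1.8.0_201")
installed_java_major() {
  command -v java >/dev/null 2>&1 || return 1
  local line
  line=$(java -version 2>&1 | head -n 1)
  line="${line#*\"}"     # drop everything up to the first quote
  line="${line%%\"*}"    # drop everything from the closing quote
  java_major_version "$line"
}
```

Usage: `[ "$(installed_java_major)" = 8 ] || echo "expected Java 8"`.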

6. Install Hadoop

(1) Download the Hadoop installation package and upload it to /opt/software

(2) Extract hadoop-3.1.3.tar.gz in /opt/software

tar -zxvf hadoop-3.1.3.tar.gz

(3) Configure the environment variables

sudo vi /etc/profile

Append to the end of the file:

export HADOOP_HOME=/opt/software/hadoop-3.1.3

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

Apply the environment variables (again without sudo, since source is a shell builtin):

source /etc/profile

hadoop version
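If hadoop version reports "command not found", the usual cause is that /etc/profile was not sourced in the current shell, or that a PATH line has a typo. A small sketch can report which Hadoop directories are missing from PATH. path_contains and check_hadoop_path are hypothetical helpers.

```bash
#!/usr/bin/env bash
# path_contains DIR: succeed if DIR is one of the entries in $PATH
path_contains() {
  case ":${PATH}:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# check_hadoop_path: report whether $HADOOP_HOME/bin and sbin are on PATH
check_hadoop_path() {
  if [ -z "${HADOOP_HOME:-}" ]; then
    echo "HADOOP_HOME is not set (was /etc/profile sourced?)" >&2
    return 1
  fi
  local dir rc=0
  for dir in "$HADOOP_HOME/bin" "$HADOOP_HOME/sbin"; do
    if ! path_contains "$dir"; then
      echo "not on PATH: $dir" >&2
      rc=1
    fi
  done
  return "$rc"
}
```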

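Configuring the Cluster (run on all three VMs)

1. Configure passwordless SSH login

(1) Generate a public/private key pair

Run the command below and press Enter three times to accept the defaults. It creates two files in ~/.ssh: id_rsa (private key) and id_rsa.pub (public key).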

ssh-keygen -t rsa

(2) Copy the public key to every machine that should accept passwordless logins:

ssh-copy-id hadoop001

ssh-copy-id hadoop002

ssh-copy-id hadoop003
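The start scripts log in to every node over SSH, so it helps to confirm that no password prompt remains. With the real ssh option BatchMode=yes, ssh fails instead of prompting. ConnectTimeout keeps an unreachable host from hanging the check. check_passwordless_ssh is a hypothetical helper. The SSH_CMD override is an assumption added only so the function can be tested without real hosts.

```bash
#!/usr/bin/env bash
# check_passwordless_ssh HOST...: try a no-op command on each HOST.
# SSH_CMD can be overridden (e.g. for testing); it defaults to the ssh client.
check_passwordless_ssh() {
  local ssh_cmd="${SSH_CMD:-ssh}" host failed=0
  for host in "$@"; do
    # BatchMode=yes: fail instead of asking for a password
    if "$ssh_cmd" -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
      echo "ok: $host"
    else
      echo "FAILED: $host" >&2
      failed=1
    fi
  done
  return "$failed"
}
```

Run `check_passwordless_ssh hadoop001 hadoop002 hadoop003` on each of the three VMs, because each one must be able to reach all the others.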

2. Cluster configuration

(1) Cluster deployment plan

| Component | hadoop001 | hadoop002 | hadoop003 |
|---|---|---|---|
| HDFS | NameNode, DataNode | DataNode | DataNode, SecondaryNameNode |
| YARN | NodeManager | ResourceManager, NodeManager | NodeManager |

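(2) Configure the cluster

Configure core-site.xml

cd $HADOOP_HOME/etc/hadoop

vim core-site.xml

File contents:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- NameNode RPC address; 9870 is the NameNode web UI port, so it must not be used here -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop001:8020</value>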

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/software/hadoop-3.1.3/data</value>

</property>

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.http.staticuser.user</name>

<value>root</value>

</property>

</configuration>
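Once Hadoop is on the PATH, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop actually resolved. Before that, a rough text-based check can read a property straight from a site file such as core-site.xml. For example, it can confirm that fs.defaultFS does not point at the 9870 web UI port. The sketch below is hypothetical. It assumes the one-tag-per-line layout used in this tutorial and is not a real XML parser.

```bash
#!/usr/bin/env bash
# get_conf_value FILE KEY: print the <value> of the property named KEY.
# Hypothetical helper; a line-based approximation that assumes each
# <name> and <value> tag sits on one line, as in the files above.
get_conf_value() {
  local file="$1" key="$2"
  awk -v key="$key" '
    /<name>/ {
      n = $0
      sub(/.*<name>[ \t]*/, "", n)
      sub(/[ \t]*<\/name>.*/, "", n)
      found = (n == key)          # remember whether this property matches
    }
    found && /<value>/ {
      v = $0
      sub(/.*<value>[ \t]*/, "", v)
      sub(/[ \t]*<\/value>.*/, "", v)
      print v
      exit
    }
  ' "$file"
}
```

Usage: `get_conf_value "$HADOOP_HOME/etc/hadoop/core-site.xml" fs.defaultFS`.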

Configure hdfs-site.xml

vim hdfs-site.xml

File contents:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>hadoop003:9868</value>

</property>

</configuration>

Configure yarn-site.xml

vim yarn-site.xml

File contents:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop002</value>

</property>

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>512</value>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>4096</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>4096</value>

</property>

<property>

<name>yarn.nodemanager.pmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

</configuration>
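The three memory settings above interact. The ResourceManager is expected to reject a configuration where the minimum allocation is not positive or exceeds the maximum. A container larger than yarn.nodemanager.resource.memory-mb cannot be placed on any NodeManager. Also note that giving YARN all 4096 MB on a 4 GB VM may leave little headroom for the OS and the HDFS daemons. check_yarn_memory is a hypothetical arithmetic check, shown here with the values above.

```bash
#!/usr/bin/env bash
# check_yarn_memory MIN MAX NM_MEM (all in MB):
#   MIN    = yarn.scheduler.minimum-allocation-mb
#   MAX    = yarn.scheduler.maximum-allocation-mb
#   NM_MEM = yarn.nodemanager.resource.memory-mb
check_yarn_memory() {
  local min="$1" max="$2" nm="$3"
  if (( min <= 0 || max < min )); then
    echo "invalid: need 0 < minimum-allocation-mb <= maximum-allocation-mb" >&2
    return 1
  fi
  if (( max > nm )); then
    echo "maximum-allocation-mb ($max) exceeds nodemanager memory ($nm)" >&2
    return 1
  fi
  # How many minimum-size containers one NodeManager can hold
  echo "ok: up to $(( nm / min )) containers of ${min} MB per node"
}
```

With this tutorial's values, `check_yarn_memory 512 4096 4096` prints `ok: up to 8 containers of 512 MB per node`.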

Configure mapred-site.xml

vim mapred-site.xml

File contents:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

Configure workers

vim /opt/software/hadoop-3.1.3/etc/hadoop/workers

File contents:

hadoop001

hadoop002

hadoop003

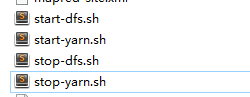
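These files must be identical on all three VMs. Once passwordless SSH works, one option is to edit them only on hadoop001 and copy them with rsync, which was installed in step 1. sync_to_hosts is a hypothetical helper that copies a path to the same absolute location on each host. Setting DRY_RUN=1 only prints the commands.

```bash
#!/usr/bin/env bash
# sync_to_hosts SRC HOST...: copy SRC (file or directory) to the same
# absolute path on every HOST using rsync over SSH.
# Hypothetical helper; set DRY_RUN=1 to print the commands instead.
sync_to_hosts() {
  if [ "$#" -lt 2 ]; then
    echo "usage: sync_to_hosts SRC HOST..." >&2
    return 1
  fi
  local src="$1"; shift
  local parent name host
  # Resolve the parent directory to an absolute path
  parent=$(cd "$(dirname "$src")" && pwd) || return 1
  name=$(basename "$src")
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "rsync -a $parent/$name $host:$parent/"
    else
      # -a keeps permissions and timestamps (and ownership when run as root)
      rsync -a "$parent/$name" "$host:$parent/" || return 1
    fi
  done
}
```

For example, on hadoop001: `sync_to_hosts /opt/software/hadoop-3.1.3/etc/hadoop hadoop002 hadoop003`.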

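3. Start the cluster

(1) If the cluster is being started for the first time, format the NameNode on hadoop001. (Note: before formatting again, always stop all NameNode and DataNode processes from the previous run, then delete the data and logs directories on every node.)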

hdfs namenode -format
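The cleanup in the note above can be scripted, but rm -rf deserves a guard. reset_hadoop_dirs is a hypothetical sketch with two assumptions. The data directory is $HADOOP_HOME/data, as set by hadoop.tmp.dir in core-site.xml. Logs go to the default $HADOOP_HOME/logs. Run it on every node, and only after stop-yarn.sh (on hadoop002) and stop-dfs.sh (on hadoop001) have finished.

```bash
#!/usr/bin/env bash
# reset_hadoop_dirs [HADOOP_HOME]: delete the data and logs directories
# before re-formatting the NameNode. Hypothetical helper; assumes
# hadoop.tmp.dir=$HADOOP_HOME/data (as in core-site.xml) and default logs.
reset_hadoop_dirs() {
  local home="${1:-${HADOOP_HOME:-}}"
  # Refuse anything that does not look like a Hadoop install, so a typo
  # or an unset variable can never turn into rm -rf on the wrong path
  if [ -z "$home" ] || [ "$home" = "/" ] || [ ! -d "$home/etc/hadoop" ]; then
    echo "refusing to clean '$home': not a Hadoop install" >&2
    return 1
  fi
  rm -rf -- "$home/data" "$home/logs"
  echo "removed $home/data and $home/logs"
}
```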

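(2) Start HDFS

If startup fails with errors mentioning the variables below (this happens when the scripts are run as root), add them at the top of the HDFS scripts in sbin/ (start-dfs.sh and stop-dfs.sh):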

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

start-dfs.sh
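An alternative to editing the sbin scripts is to export the same variables from $HADOOP_HOME/etc/hadoop/hadoop-env.sh, which the start scripts load. This differs from the approach described above, and the original steps do not use it. add_hdfs_user_vars is a hypothetical helper that appends each export only once. If start-yarn.sh is also run as root, it similarly expects YARN_RESOURCEMANAGER_USER and YARN_NODEMANAGER_USER.

```bash
#!/usr/bin/env bash
# The variables listed above, written as exports
HDFS_USER_VARS=(
  "HDFS_DATANODE_USER=root"
  "HADOOP_SECURE_DN_USER=hdfs"
  "HDFS_NAMENODE_USER=root"
  "HDFS_SECONDARYNAMENODE_USER=root"
)

# add_hdfs_user_vars [ENV_FILE]: append each export once to ENV_FILE
# (default: $HADOOP_HOME/etc/hadoop/hadoop-env.sh). Hypothetical helper.
add_hdfs_user_vars() {
  local env_file="${1:-${HADOOP_HOME:-}/etc/hadoop/hadoop-env.sh}" var line
  if [ ! -f "$env_file" ]; then
    echo "no such file: $env_file" >&2
    return 1
  fi
  for var in "${HDFS_USER_VARS[@]}"; do
    line="export $var"
    # Skip lines that are already present so re-running is harmless
    grep -qxF "$line" "$env_file" || printf '%s\n' "$line" >> "$env_file"
  done
  return 0
}
```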

(3) Start YARN on the node configured as the ResourceManager (hadoop002)

start-yarn.sh
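After both start scripts finish, jps (shipped with the JDK) lists the Java processes on a node. Its output can be compared with the deployment plan. In this sketch the role lists are transcribed from the plan table, and expected_roles and missing_roles are hypothetical names.

```bash
#!/usr/bin/env bash
# expected_roles HOST: daemons the deployment plan assigns to HOST
expected_roles() {
  case "$1" in
    hadoop001) echo "NameNode DataNode NodeManager" ;;
    hadoop002) echo "DataNode ResourceManager NodeManager" ;;
    hadoop003) echo "DataNode SecondaryNameNode NodeManager" ;;
    *) return 1 ;;
  esac
}

# missing_roles HOST JPS_OUTPUT: print each expected role absent from JPS_OUTPUT
missing_roles() {
  local host="$1" jps_out="$2" role roles
  roles=$(expected_roles "$host") || { echo "unknown host: $host" >&2; return 2; }
  for role in $roles; do
    # jps prints "<pid> <MainClassName>"; match the class name (2nd field)
    if ! printf '%s\n' "$jps_out" | awk -v r="$role" '$2 == r { f = 1 } END { exit !f }'; then
      echo "$role"
    fi
  done
}
```

For example: `missing_roles hadoop003 "$(ssh hadoop003 /opt/software/jdk1.8.0_201/bin/jps)"`. The full path is used because a non-interactive ssh command normally does not read /etc/profile. Empty output means every planned daemon is running.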

(4) View the web UIs

NameNode: http://hadoop001:9870

YARN ResourceManager: http://hadoop002:8088