Docker + Spring Boot with ELK 7.6.1: per-level log monitoring (including TLS and SSL)

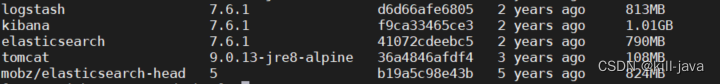

Pull the images

docker pull elasticsearch:7.6.1

docker pull kibana:7.6.1

docker pull logstash:7.6.1

docker pull mobz/elasticsearch-head:5

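On a cloud server, open the corresponding ports in the firewall or security group

- 4560 (Logstash TCP input)

- 5601 (Kibana)

- 9100 (es-head)

- 9200 (Elasticsearch)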
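Once the services are running, the open ports can be checked from another machine. The following is a minimal sketch, not part of the original setup: check_ports is a helper name introduced here, and it assumes a netcat (nc) that supports the -z and -w options.

```shell
# check_ports HOST PORT...
# Reports each port as open or closed; returns 1 if any port is unreachable.
check_ports() {
    host="$1"
    shift
    failed=0
    for port in "$@"; do
        # -z: only test the connection, -w 3: give up after 3 seconds
        if nc -z -w 3 "$host" "$port" 2>/dev/null; then
            echo "$port open"
        else
            echo "$port closed or filtered"
            failed=1
        fi
    done
    return "$failed"
}

# Example (replace ip with the server address):
# check_ports ip 4560 5601 9100 9200
```

A port that shows as closed may simply mean the container behind it has not been started yet.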

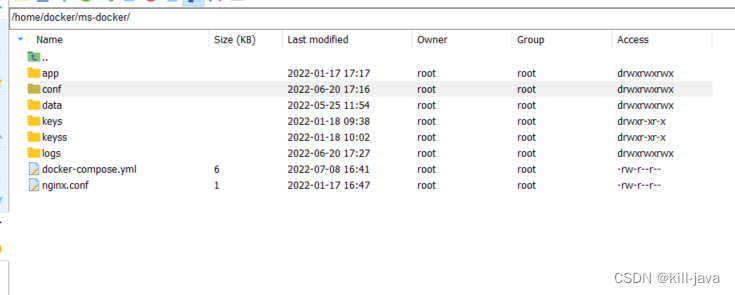

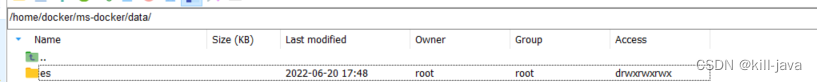

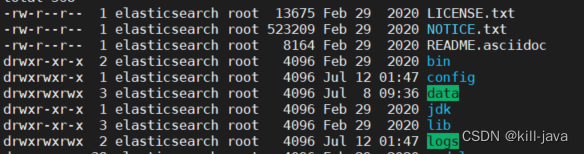

新建目录

- docker-compose.yml目录位置

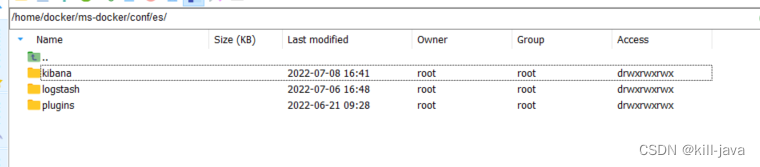

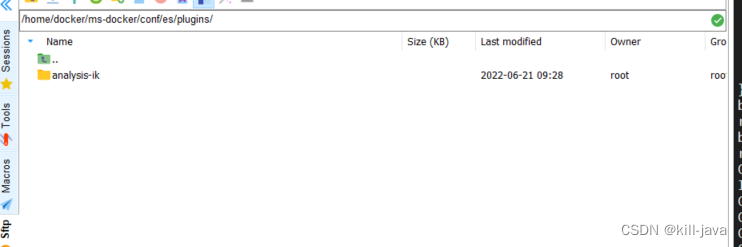

- 在conf目录下新建es

- es目录下新建kibana,logstash,plugins目录

- 给新建好的目录赋予权限chmod 777

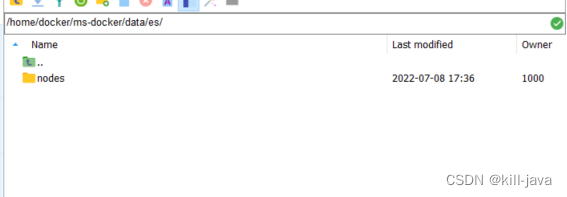

- data目录下新建es

- 给es目录赋予权限 chmod 777

- 后续elasticsearch的数据会存在这个地方

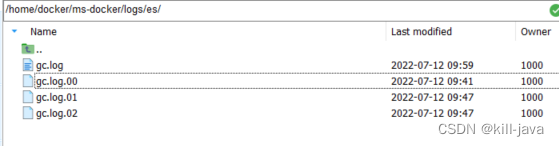

- logs目录下新建es

- 给es目录赋予权限 chmod 777

- 后续elasticsearch的日志会存放在这个地方
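The directory steps above can be done in one pass. This is a minimal sketch under one assumption: BASE is a variable introduced here for the directory that holds docker-compose.yml (the article later uses /home/ms-docker).

```shell
# BASE is an assumption for illustration: the directory that holds docker-compose.yml.
BASE="${BASE:-$PWD/ms-docker}"

# conf/es holds the kibana, logstash and plugins configuration directories.
mkdir -p "$BASE/conf/es/kibana" "$BASE/conf/es/logstash" "$BASE/conf/es/plugins"

# data/es and logs/es are mounted into the Elasticsearch container.
mkdir -p "$BASE/data/es" "$BASE/logs/es"

# The article grants 777 so that the container user can write to the mounts.
chmod -R 777 "$BASE/conf/es" "$BASE/data/es" "$BASE/logs/es"

ls -R "$BASE"
```

chmod 777 follows the article; on a shared host a narrower permission or a matching owner is the safer choice.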

docker-compose

    version: '3'
    services:
      es:
        image: elasticsearch:7.6.1
        container_name: es
        privileged: true
        ports:
          - "9200:9200"
        restart: always
        networks:
          - front-ms
        environment:
          - discovery.type=single-node
          - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
        volumes:
          - $PWD/data/es:/usr/share/elasticsearch/data
          - $PWD/logs/es:/usr/share/elasticsearch/logs
          - $PWD/conf/es/plugins:/usr/share/elasticsearch/plugins
      es-head:
        image: mobz/elasticsearch-head:5
        container_name: es-head
        ports:
          - "9100:9100"
      kibana:
        image: kibana:7.6.1
        container_name: kibana
        ports:
          - "5601:5601"
        depends_on:
          - es
        networks:
          - front-ms
        privileged: true
        volumes:
          - $PWD/conf/es/kibana/kibana.yml:/usr/share/kibana/config/kibana.yml
      logstash:
        image: logstash:7.6.1
        container_name: logstash
        volumes:
          - $PWD/conf/es/logstash/logstash.conf:/usr/share/logstash/pipeline/logstash.conf
        depends_on:
          - es
        ports:
          - 4560:4560
        networks:
          - front-ms
    networks:
      front-ms:
        driver: bridge

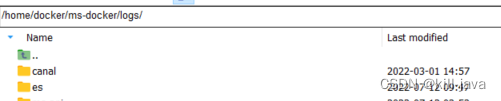

启动es

- docker-compose up -d es

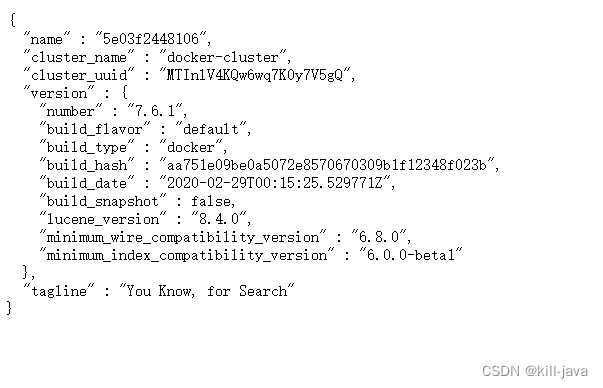

- 通过 ip:9200访问得到以下页面即为成功
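The same check can be scripted instead of done in a browser. The sketch below assumes curl is installed; wait_for_es is a helper name introduced here, not something shipped with Elasticsearch.

```shell
# wait_for_es URL [RETRIES] [DELAY_SECONDS]
# Polls URL with curl until it answers or the retries run out.
wait_for_es() {
    url="$1"
    retries="${2:-30}"
    delay="${3:-2}"
    i=0
    while [ "$i" -lt "$retries" ]; do
        # -s: quiet, -f: treat HTTP errors as failure, -o /dev/null: discard the body
        if curl -sf -o /dev/null "$url"; then
            echo "Elasticsearch is up at $url"
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    echo "Elasticsearch did not answer at $url" >&2
    return 1
}

# Example (replace ip with the server address, as elsewhere in this article):
# wait_for_es "http://ip:9200"
```

Elasticsearch usually needs some time after the container starts before it answers, which is why the helper retries rather than failing on the first attempt.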

If es started normally and the mounted directories now contain log and data files, skip the rest of this part

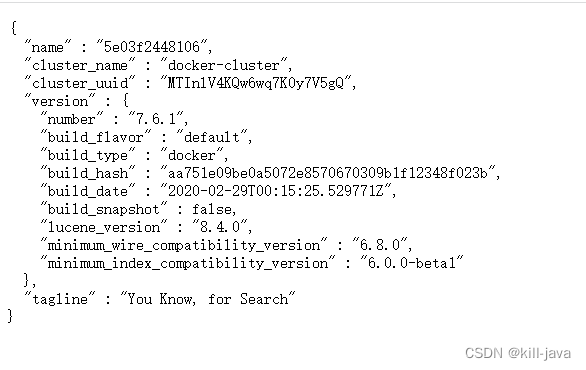

如果es挂载data或者logs导致无法启动

- 在es的-e参数里添加

- "TAKE_FILE_OWNERSHIP=true"

- 1

-

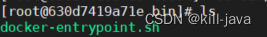

进入elasticsearch容器内部,在/usr/local/bin目录有一个启动文件

-

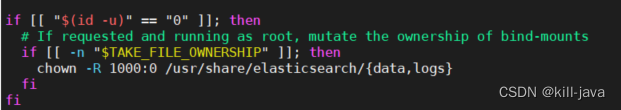

查看此文件可得知

-

TAKE_FILE_OWNERSHIP 变量为ture后会执行 chown -R 1000:0/usr/share/elasticsearch/{data,logs}命令

-

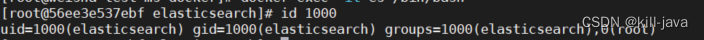

通过命令可得知,1000对应elasticsearch对象,0为root对象,root有些情况无法执行操作

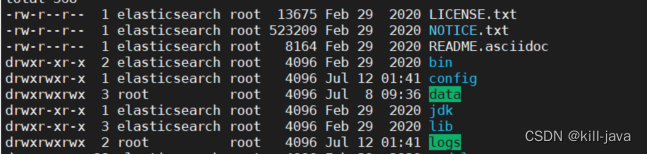

添加参数前

添加参数后

- 挂载成功示意图

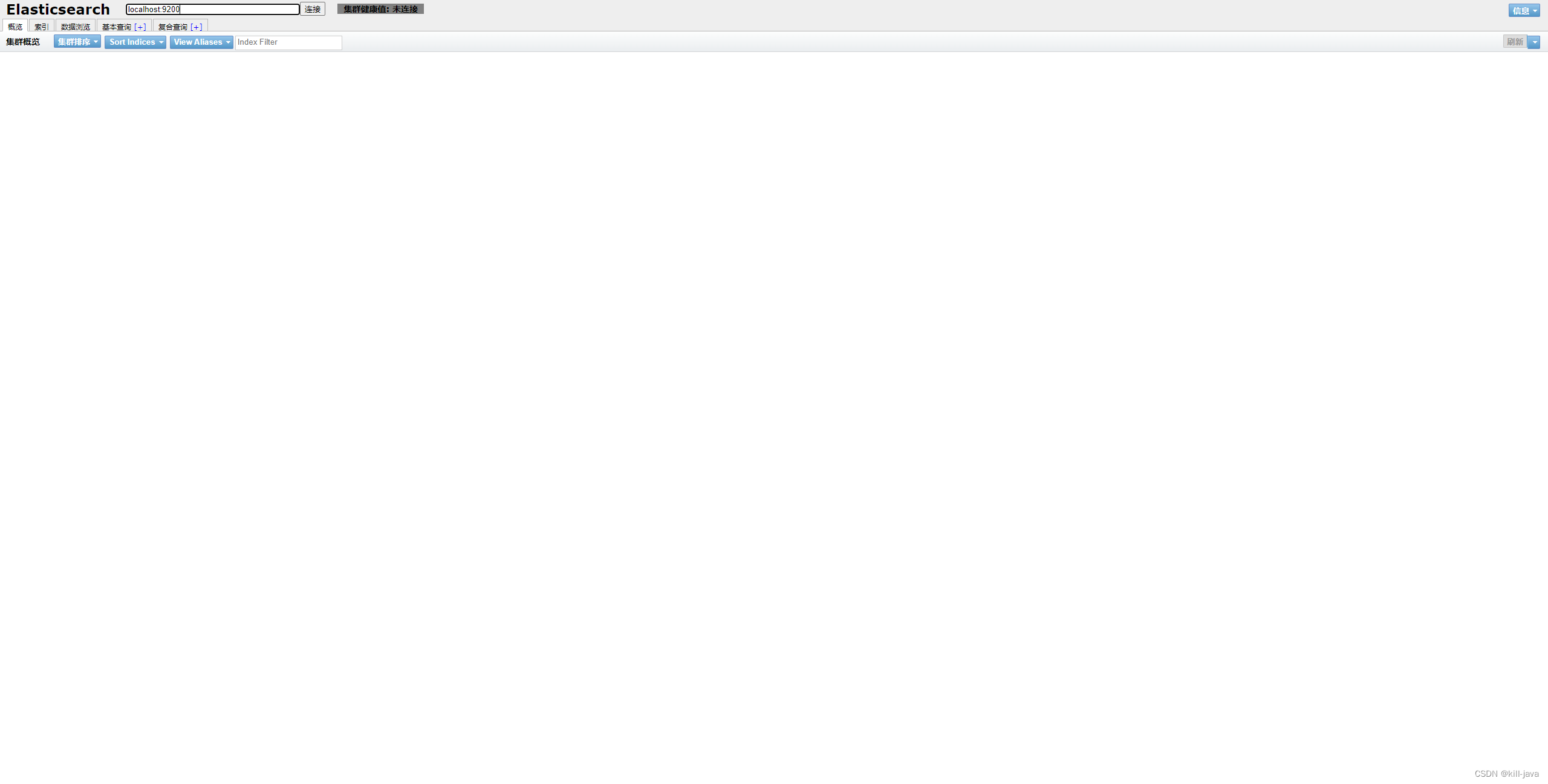

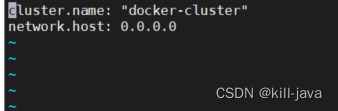

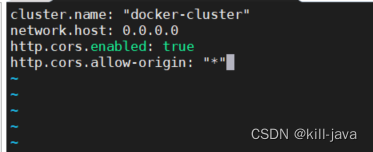

Start es-head

- docker-compose up -d es-head

- Open ip:9100 to get the page below

- Press F12 to see why it is not connected

- No 'Access-Control-Allow-Origin'

- It turns out to be a cross-origin (CORS) problem

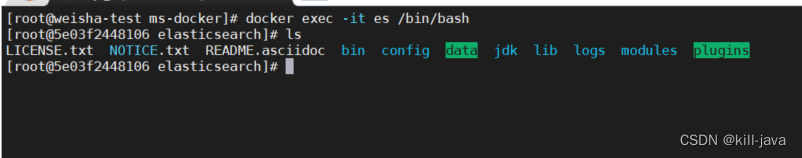

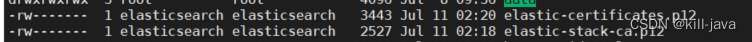

docker exec -it es /bin/bash

- 1

- 进入es容器内部

- ls找到config目录 cd config

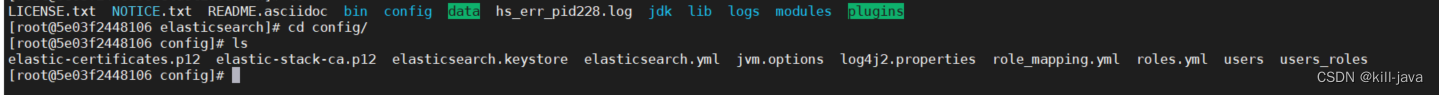

- vi elasticsearch.yml文件

- i 编写

http.cors.enabled: true

http.cors.allow-origin: "*"

- 1

- 2

- esc :wq退出

- exit退出容器

- 重启容器

docker-compose restart es

- 1

- 重启完毕继续访问9100端口
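The vi edit above can also be scripted so that it is repeatable. A minimal sketch, assuming flat top-level keys as in elasticsearch.yml: ensure_setting and ES_YML are names introduced here for illustration. Edits made only inside a container are lost when the container is recreated, so a script like this is easy to rerun.

```shell
# ensure_setting FILE KEY VALUE
# Appends "KEY: VALUE" to FILE unless a line starting with "KEY:" already exists.
ensure_setting() {
    file="$1"
    key="$2"
    value="$3"
    # Nothing to do if the key is already present.
    # (The dots in KEY act as regex wildcards here, which is close enough for a sketch.)
    if grep -q "^${key}:" "$file" 2>/dev/null; then
        return 0
    fi
    # Make sure the file ends with a newline before appending.
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
        echo >> "$file"
    fi
    printf '%s: %s\n' "$key" "$value" >> "$file"
}

# ES_YML is a hypothetical variable; point it at elasticsearch.yml.
# It defaults to a temporary file so the sketch can be tried safely.
ES_YML="${ES_YML:-$(mktemp)}"
ensure_setting "$ES_YML" http.cors.enabled true
ensure_setting "$ES_YML" http.cors.allow-origin '"*"'
cat "$ES_YML"
```

Running it twice leaves the file unchanged the second time, since existing keys are skipped rather than duplicated.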

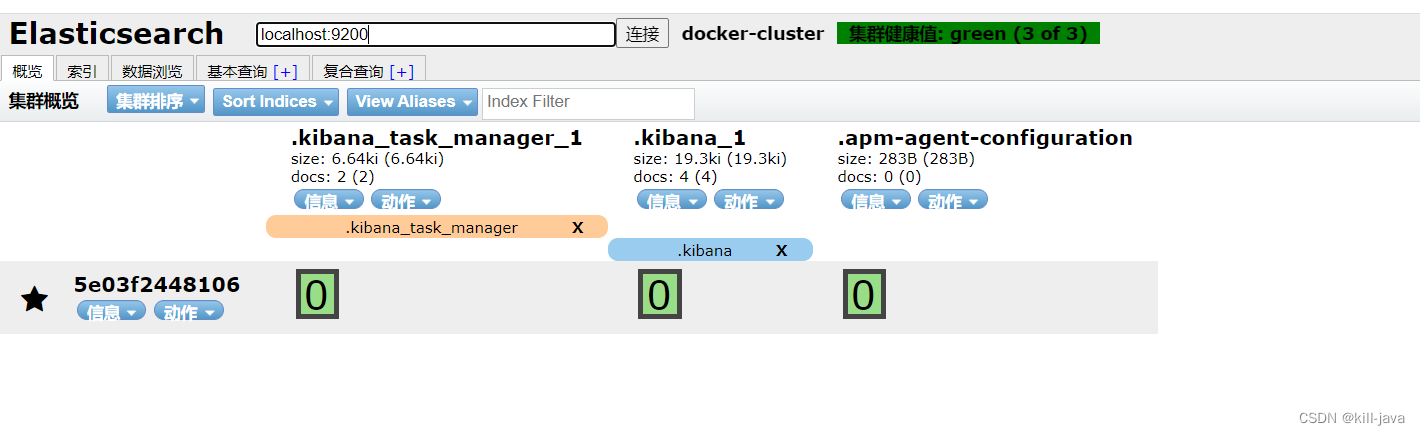

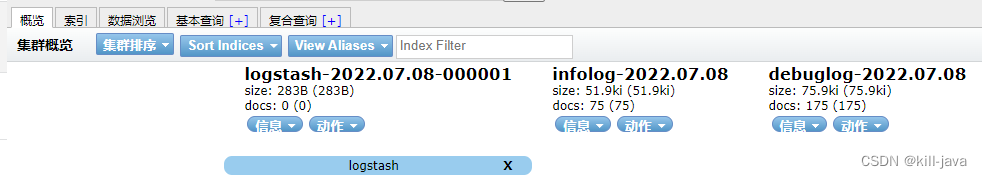

- The page below means the connection succeeded

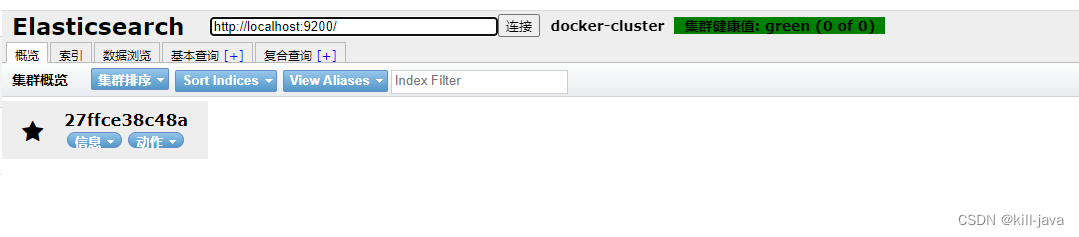

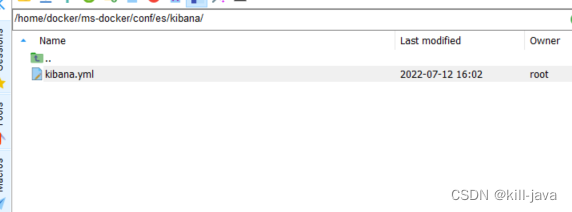

启动kibana

- 第一次启动时不要挂载kibana.yml,直接挂载是个空文件

- docker-compose up -d kibana

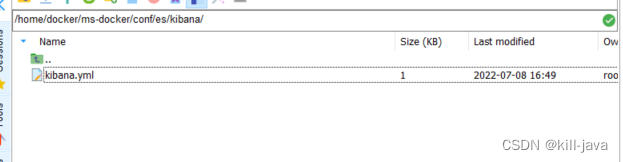

- 镜像启动后,把kibana的yml文件拷贝到宿主机的挂载目录/conf/es/kibana下

- docker cp 镜像id:/usr/share/kibana/config/kibana.yml /home/ms-docker/conf/es/kibana

- 编辑内容

#

# ** THIS IS AN AUTO-GENERATED FILE **

#

# Default Kibana configuration for docker target

server.name: kibana

server.host: "0"

elasticsearch.hosts: [ "http://es:9200" ]

xpack.monitoring.ui.container.elasticsearch.enabled: true

# Display the UI in Chinese

i18n.locale: zh-CN

- docker-compose up -d kibana 重启镜像

- 通过 ip:5601访问得到以下页面即为成功

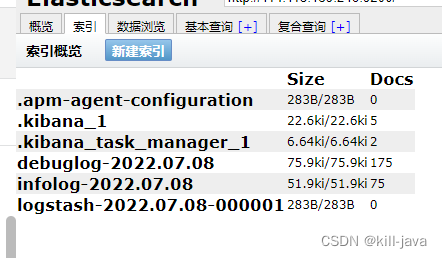

- 查看es-head中也出现了创建kibana时出现的索引

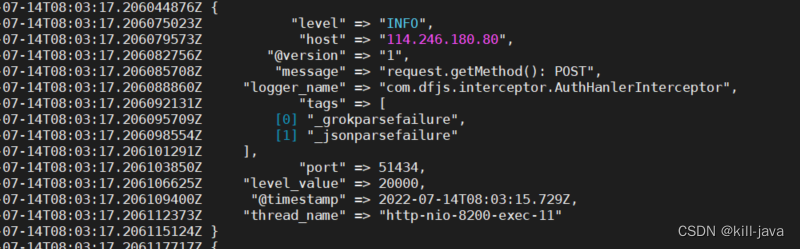

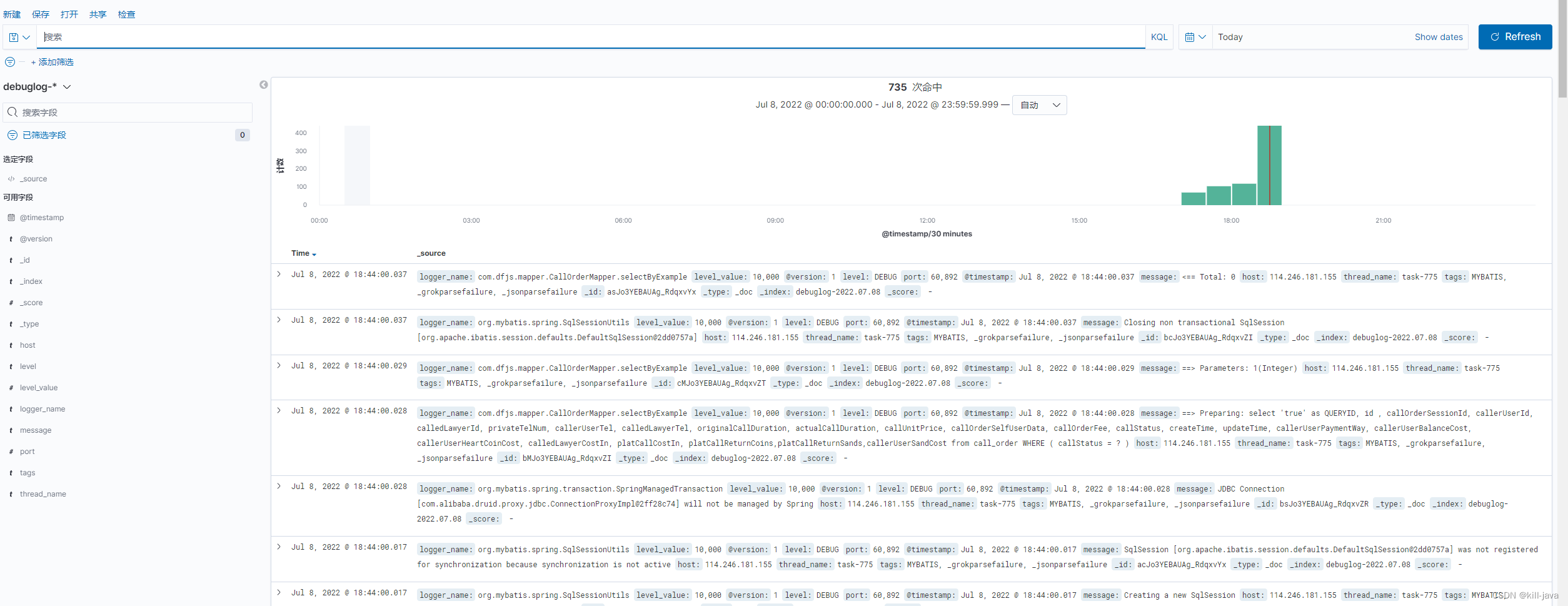

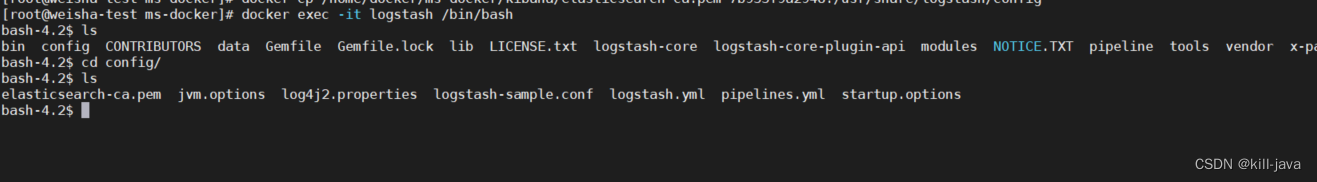

启动logstash

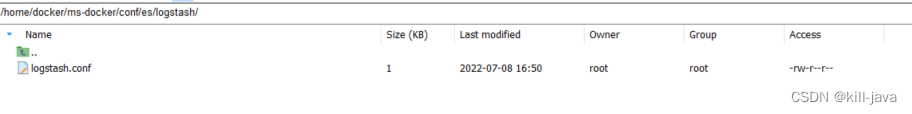

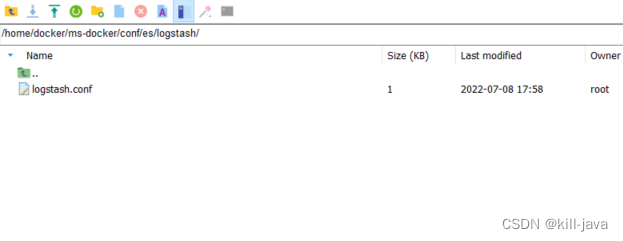

- 在上面创建好的conf/es/logstash目录下新建文件logstash.conf

- 使用tcp形式获取数据流并用json接收

- filter配置@timestamp使用java日志中的时间,而非接收日志时间,只接收ERROR,INFO,DEBUG级别日志

- 以json形式输出到message中

- output 配置要输出的index和hosts,并把收到的数据进行自定义过滤

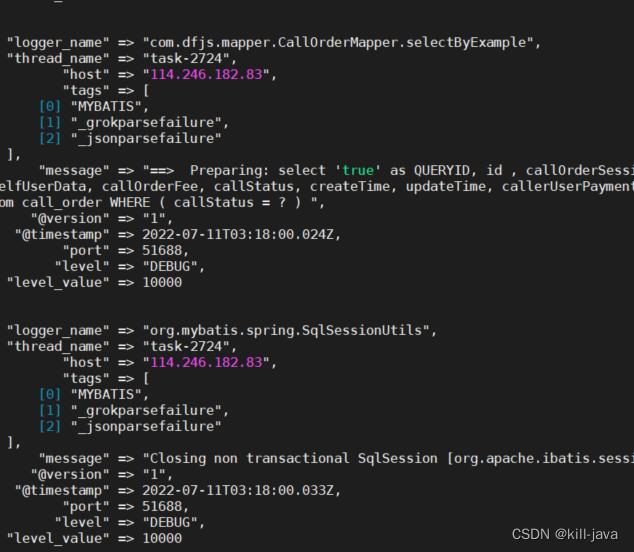

- stdout 开启控制台日志

    input {
      tcp {
        mode => "server"
        host => "0.0.0.0"
        port => 4560
        codec => json_lines
      }
    }
    filter {
      grok {
        match => ["message", "(?<customer_time>%{YEAR}\-%{MONTH}\-%{MONTHDAY}\s+%{TIME})"]
      }
      date {
        match => ["customer_time", "yyyy-MM-dd HH:mm:ss,SSS", "ISO8601"]
        locale => "en"
        target => ["@timestamp"]
        timezone => "Asia/Shanghai"
      }
      if [level] !~ "(ERROR|INFO|DEBUG)" {
        drop {}
      }
      json {
        source => "message"
      }
    }
    output {
      if [level] == "INFO" {
        elasticsearch {
          hosts => "ip:9200"
          index => "infolog-%{+YYYY.MM.dd}"
        }
      } else if [level] == "ERROR" {
        elasticsearch {
          hosts => "ip:9200"
          index => "errorlog-%{+YYYY.MM.dd}"
        }
      } else if [level] == "DEBUG" {
        elasticsearch {
          hosts => "ip:9200"
          index => "debuglog-%{+YYYY.MM.dd}"
        }
      }
      stdout {
        codec => rubydebug
      }
    }

- docker-compose up -d logstash
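Before wiring up the Java application, the TCP input can be exercised by hand. This is a rough sketch, assuming nc (netcat) is available on the sending machine; make_log_line and send_log are helper names introduced here. The json_lines codec expects one JSON document per line, which is what make_log_line prints.

```shell
# make_log_line LEVEL MESSAGE
# Prints one JSON document on a single line, the framing json_lines expects.
# No JSON escaping is done: keep MESSAGE free of quotes and backslashes.
make_log_line() {
    printf '{"level":"%s","message":"%s"}\n' "$1" "$2"
}

# send_log HOST PORT LEVEL MESSAGE
# Sends one log line to the Logstash TCP input. -w 2 stops nc after 2 idle seconds.
send_log() {
    make_log_line "$3" "$4" | nc -w 2 "$1" "$2"
}

# Example (replace ip with the server address):
# send_log ip 4560 INFO "hello from the shell"
make_log_line INFO "hello from the shell"
```

If the pipeline is working, the event should show up in the Logstash container output through the stdout block. It may carry parse-failure tags, because a hand-written message does not match the format the filter expects.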

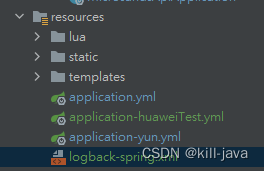

logback-spring.xml

- In the resources directory, create logback-spring.xml with the content below. The LOGSTASH appender class comes from the logstash-logback-encoder library (net.logstash.logback), which must be on the project's classpath

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration>
        <!-- Variables: name is the variable name, value is its value; reference them as ${name} -->
        <property name="DIR" value="log"/>
        <property name="LOG_HOME" value="logs"/>
        <property name="INFO_NAME" value="info_log"/>
        <property name="INFO_LOG_NAME" value="info"/>
        <property name="ERROR_NAME" value="error_log"/>
        <property name="ERROR_LOG_NAME" value="error"/>
        <property name="DEBUG_NAME" value="debug_log"/>
        <property name="DEBUG_LOG_NAME" value="debug"/>
        <property name="MDC_LOG_PATTERN" value="%red(%d{yyyy-MM-dd'T'HH:mm:ss.SSS}) %green(%p api %t) %blue(%logger{50}) %yellow([line:%L %msg]%n)"></property>

        <!-- Runtime log appender, rolled by date -->
        <appender name="info" class="ch.qos.logback.core.rolling.RollingFileAppender">
            <!-- Path and name of the log file currently being written -->
            <file>${LOG_HOME}/${INFO_NAME}/${INFO_LOG_NAME}.log</file>
            <!-- Rolling policy: by date and by size -->
            <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
                <fileNamePattern>${LOG_HOME}/${INFO_NAME}/${INFO_LOG_NAME}-%d{yyyy-MM-dd}.%i.log</fileNamePattern>
                <!-- Besides rolling by date, a log file may not exceed 50MB; beyond that,
                     files are numbered starting from index 0, e.g. bizlog-biz-20181219.0.log -->
                <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                    <maxFileSize>50MB</maxFileSize>
                    <!-- Keep for 3 days -->
                    <!--<MaxHistory>3</MaxHistory>-->
                </timeBasedFileNamingAndTriggeringPolicy>
            </rollingPolicy>
            <!-- Append to the log file -->
            <append>true</append>
            <!-- Log file format -->
            <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
                <pattern>%d{yyyy/MM/dd' 'HH:mm:ss.SSS} %X{req.requestId}[line:%L %msg] %n</pattern>
                <charset>utf-8</charset>
            </encoder>
            <!-- This file records info-level logs only -->
            <filter class="ch.qos.logback.classic.filter.LevelFilter">
                <level>info</level>
                <onMatch>ACCEPT</onMatch>
                <onMismatch>DENY</onMismatch>
            </filter>
        </appender>

        <appender name="error" class="ch.qos.logback.core.rolling.RollingFileAppender">
            <!-- Path and name of the log file currently being written -->
            <file>${LOG_HOME}/${ERROR_NAME}/${ERROR_LOG_NAME}.log</file>
            <!-- Rolling policy: by date and by size -->
            <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
                <fileNamePattern>${LOG_HOME}/${ERROR_NAME}/${ERROR_LOG_NAME}-%d{yyyy-MM-dd}.%i.log</fileNamePattern>
                <!-- Besides rolling by date, a log file may not exceed 50MB; beyond that,
                     files are numbered starting from index 0, e.g. bizlog-biz-20181219.0.log -->
                <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                    <maxFileSize>50MB</maxFileSize>
                    <!-- Keep for 3 days -->
                    <!--<MaxHistory>3</MaxHistory>-->
                </timeBasedFileNamingAndTriggeringPolicy>
            </rollingPolicy>
            <!-- Append to the log file -->
            <append>true</append>
            <!-- Log file format -->
            <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
                <pattern>%d{yyyy/MM/dd' 'HH:mm:ss.SSS} %X{req.requestId}[line:%L %msg] %n</pattern>
                <charset>utf-8</charset>
            </encoder>
            <!-- This file records error-level logs only -->
            <filter class="ch.qos.logback.classic.filter.LevelFilter">
                <level>error</level>
                <onMatch>ACCEPT</onMatch>
                <onMismatch>DENY</onMismatch>
            </filter>
        </appender>

        <appender name="debug" class="ch.qos.logback.core.rolling.RollingFileAppender">
            <!-- Path and name of the log file currently being written -->
            <file>${LOG_HOME}/${DEBUG_NAME}/${DEBUG_LOG_NAME}.log</file>
            <!-- Rolling policy: by date and by size -->
            <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
                <fileNamePattern>${LOG_HOME}/${DEBUG_NAME}/${DEBUG_LOG_NAME}-%d{yyyy-MM-dd}.%i.log</fileNamePattern>
                <!-- Besides rolling by date, a log file may not exceed 50MB; beyond that,
                     files are numbered starting from index 0, e.g. bizlog-biz-20181219.0.log -->
                <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                    <maxFileSize>50MB</maxFileSize>
                    <!-- Keep for 3 days -->
                    <!--<MaxHistory>3</MaxHistory>-->
                </timeBasedFileNamingAndTriggeringPolicy>
            </rollingPolicy>
            <!-- Append to the log file -->
            <append>true</append>
            <!-- Log file format -->
            <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
                <pattern>%d{yyyy/MM/dd' 'HH:mm:ss.SSS} %X{req.requestId}[line:%L %msg] %n</pattern>
                <charset>utf-8</charset>
            </encoder>
            <!-- This file records debug-level logs only -->
            <filter class="ch.qos.logback.classic.filter.LevelFilter">
                <level>debug</level>
                <onMatch>ACCEPT</onMatch>
                <onMismatch>DENY</onMismatch>
            </filter>
        </appender>

        <!-- ConsoleAppender prints to the console -->
        <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
            <!-- The encoder defaults to PatternLayoutEncoder -->
            <encoder>
                <pattern>${MDC_LOG_PATTERN}</pattern>
                <charset>utf-8</charset>
            </encoder>
            <!-- This appender is for development; it sets the lowest level, and the console
                 prints every log at or above that level -->
            <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
                <level>all</level>
            </filter>
        </appender>

        <!-- Logstash transport -->
        <appender name="LOGSTASH" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
            <destination>ip:4560</destination>
            <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder"/>
        </appender>

        <!-- Root logger. It has only a level attribute, since it is already named "root" -->
        <root level="info">
            <appender-ref ref="info"/>
            <appender-ref ref="STDOUT"/>
            <appender-ref ref="error"/>
            <appender-ref ref="debug"/>
            <appender-ref ref="LOGSTASH"/>
        </root>
    </configuration>

- Add the following to application.yml

    logging:
      level:
        root: INFO
      config: classpath:logback-spring.xml

- Run the Java project to generate logs

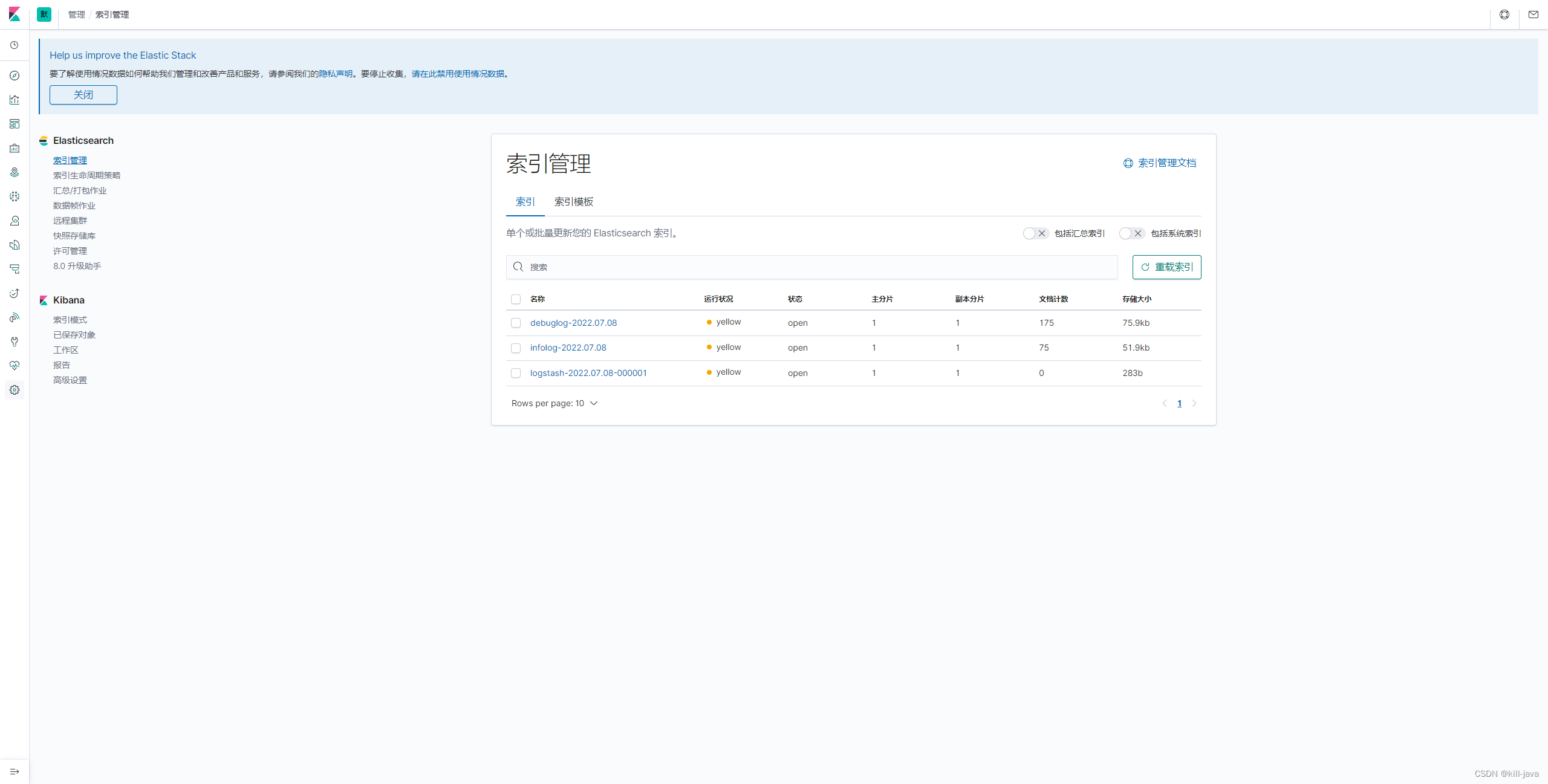

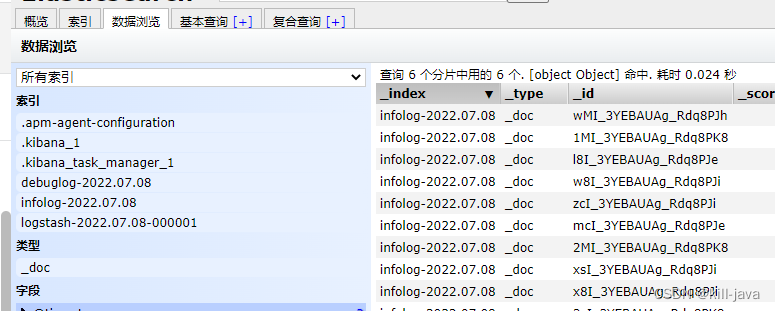

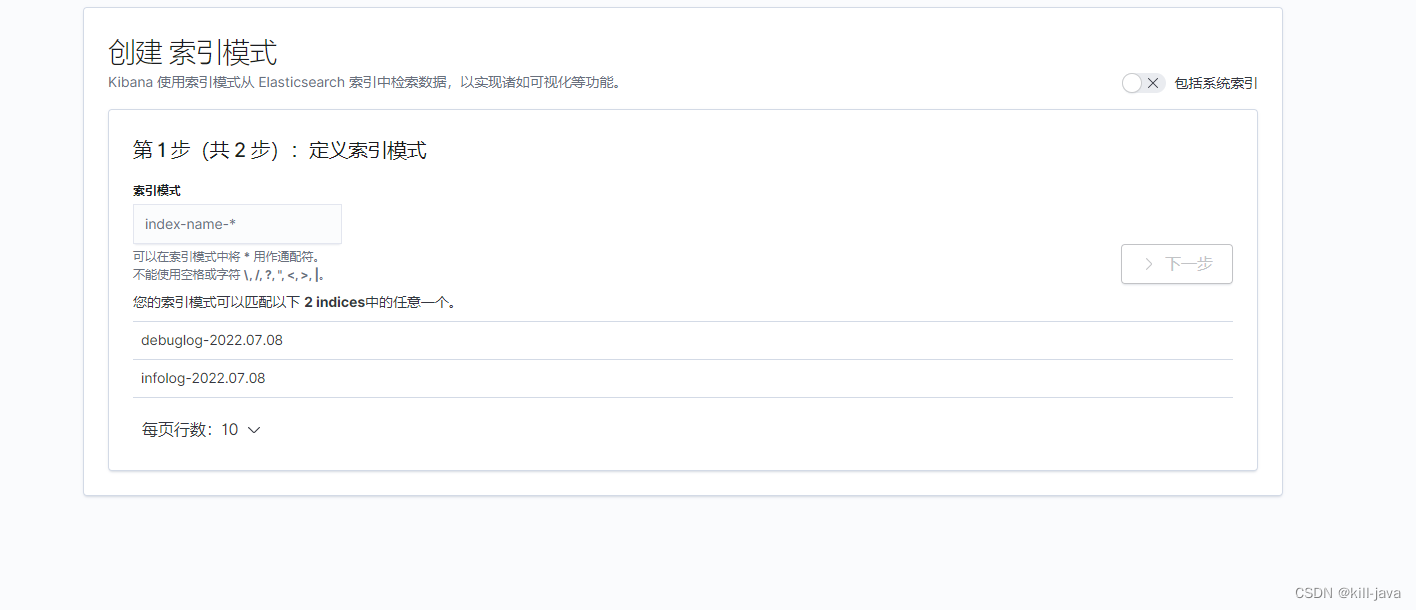

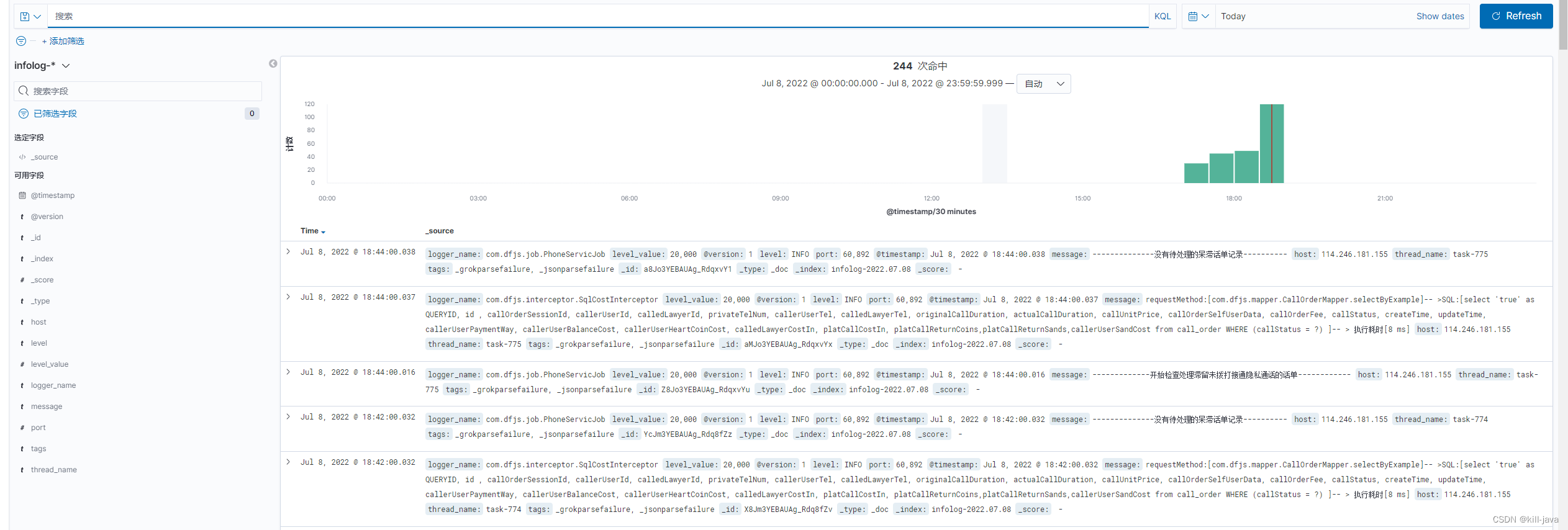

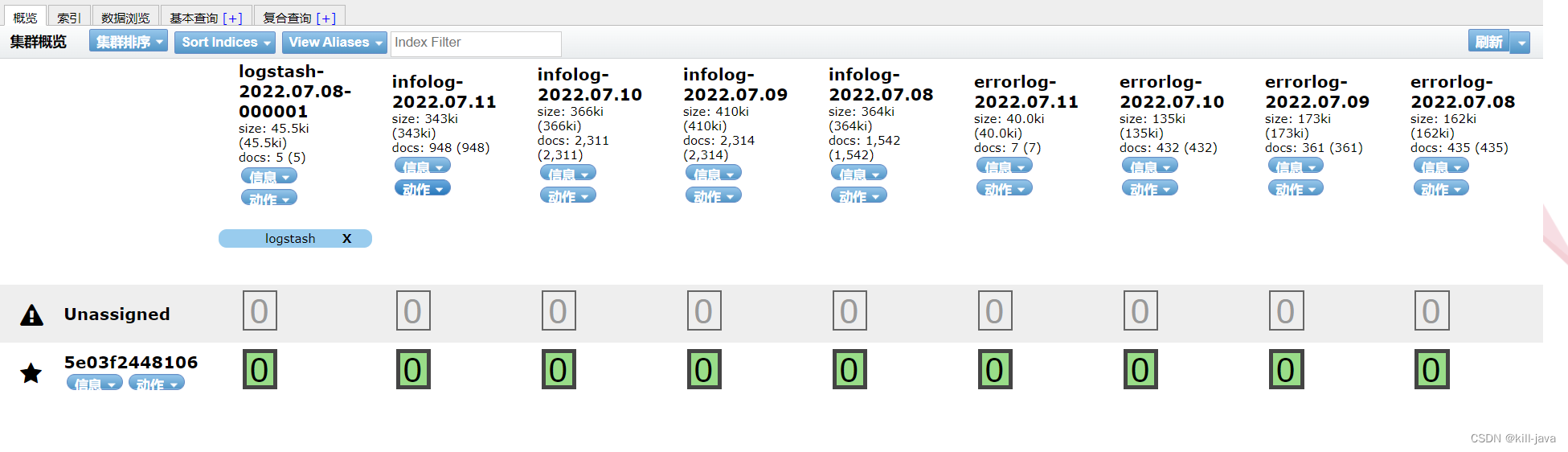

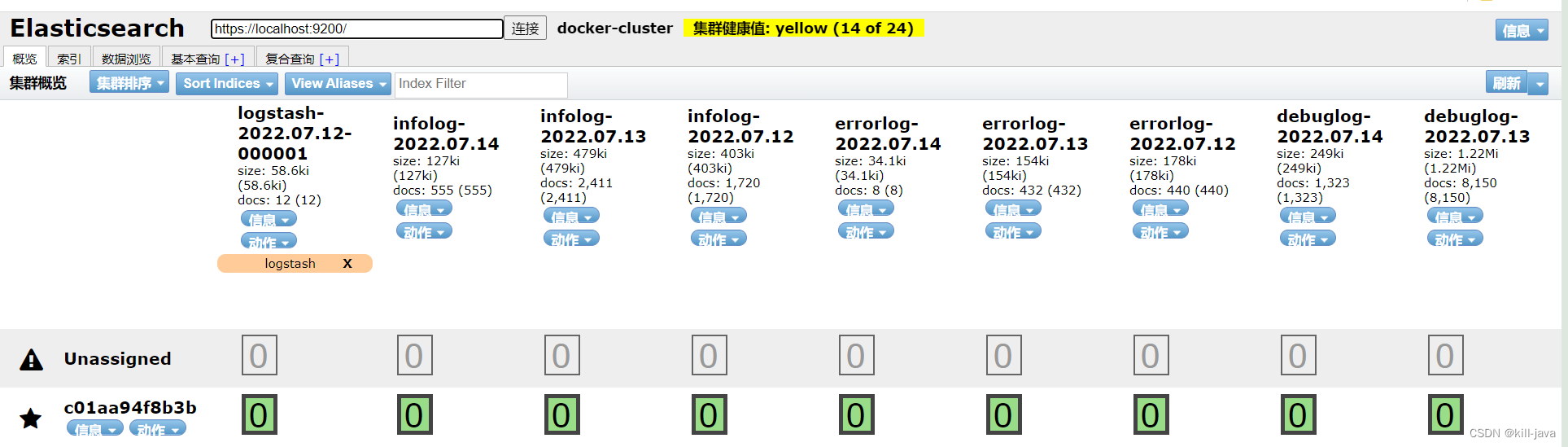

kibana配置索引模式

- 点击Management,打开索引管理,加载索引,可以发现已经收集到了日志

- 在es-head中查证

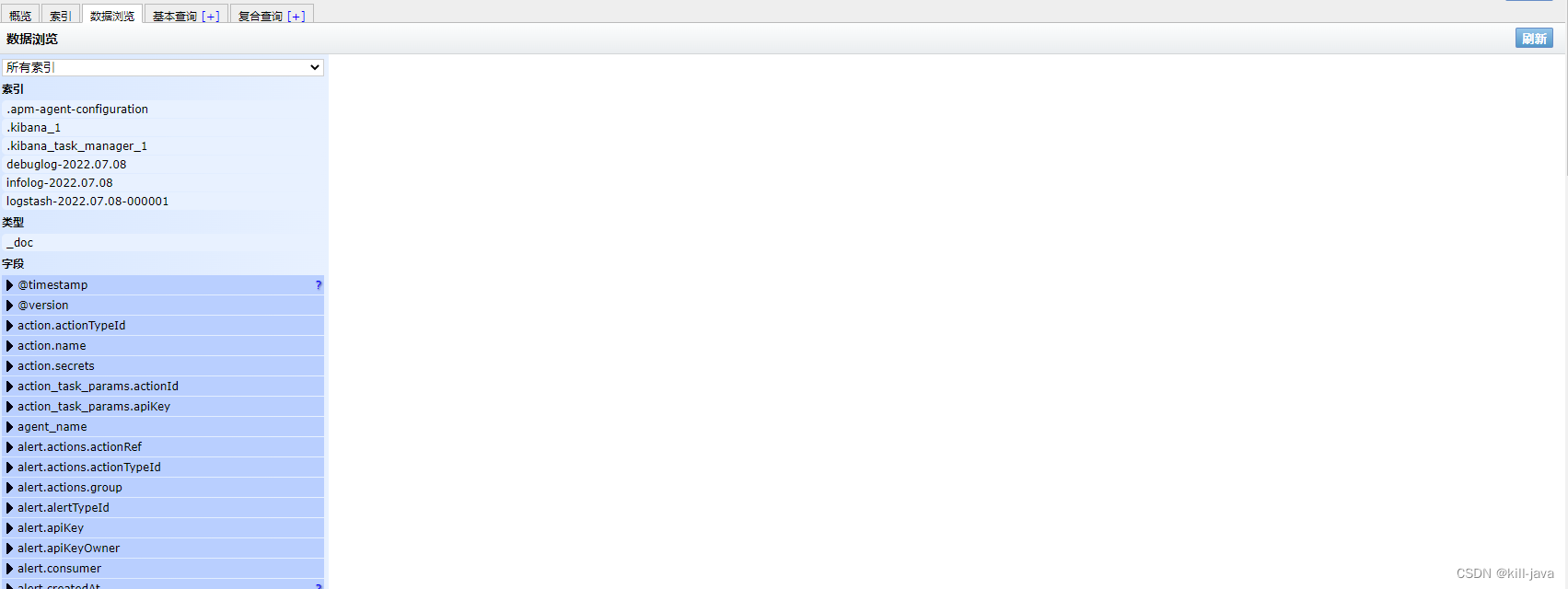

查看数据浏览发现数据没有加载出来

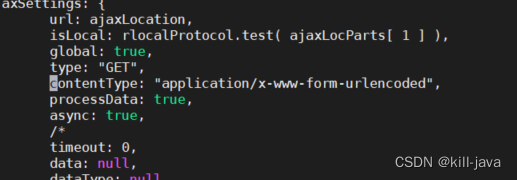

- F12查看报错 406

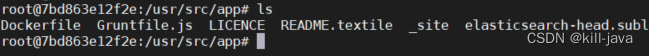

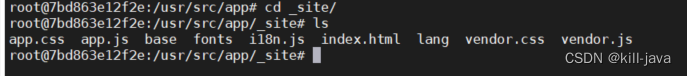

- docker exec -it es-head /bin/bash

- 进入es-head容器,找到_site/目录

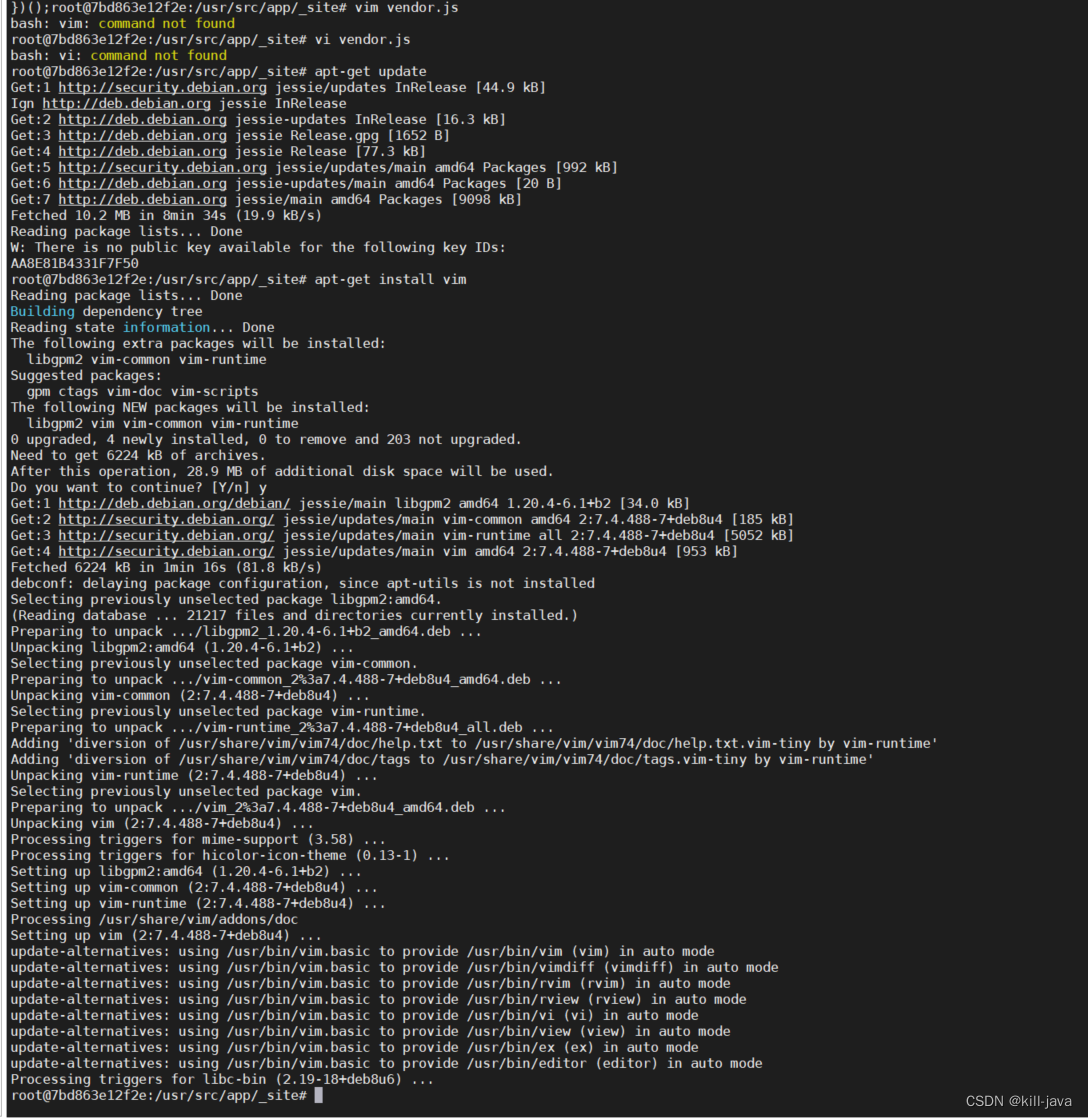

- 编辑目录下的vendor.js文件

- vi vendor.js

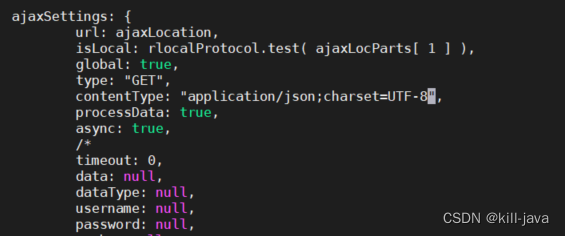

- :6886

- :7574

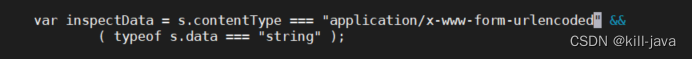

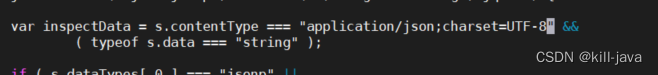

- 把这两行中 contentType: "application/x-www-form-urlencoded,改成:contentType: “application/json;charset=UTF-8”

contentType: "application/x-www-form-urlencoded

contentType: "application/json;charset=UTF-8"

- 1

- 2

- 如果报错 vi/vim command not found,直接在容器内部执行以下两条命令

- apt-get update

- apt-get install vim

- 安装完毕

- 第一处

- 第二处

- 保存退出 :wq

- 不需要重启es-head容器,刷新9100页面点击数据浏览
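The manual vi edit above can be replaced by a small sed script. A minimal sketch, assuming sed is available in the es-head container: patch_vendor_js is a helper name introduced here, and the line numbers 6886 and 7574 are the ones quoted in this article, so they may differ in other es-head builds. Only the MIME type string is rewritten, and only on the lines given.

```shell
# patch_vendor_js FILE LINE...
# On each given line number, replaces the form-urlencoded MIME type with JSON.
patch_vendor_js() {
    file="$1"
    shift
    # Keep an untouched copy next to the file.
    cp "$file" "$file.orig"
    for line in "$@"; do
        # "-i.bak" (suffix attached) is accepted by both GNU and BSD sed.
        sed -i.bak "${line}s|application/x-www-form-urlencoded|application/json;charset=UTF-8|" "$file"
    done
    rm -f "$file.bak"
}

# Example, run inside the es-head container from the directory that contains _site/:
# patch_vendor_js _site/vendor.js 6886 7574
```

Restricting the substitution to explicit line numbers avoids touching other occurrences of the string; the untouched copy is kept as vendor.js.orig.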

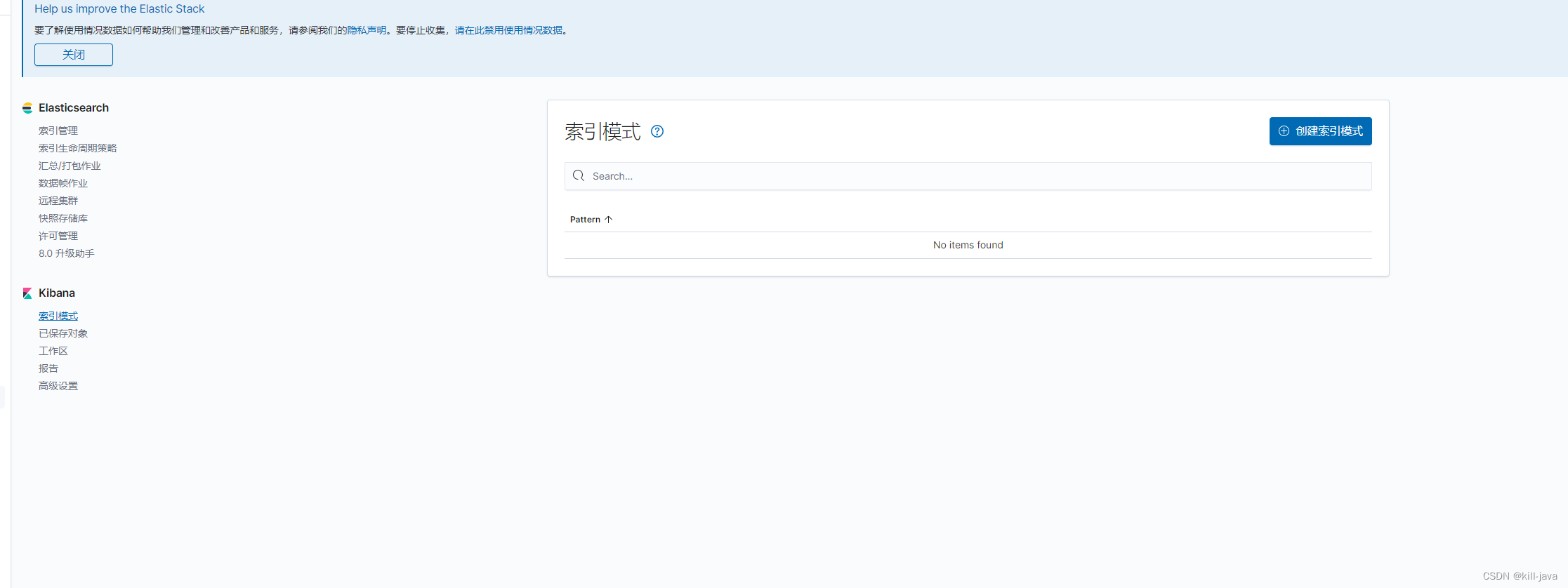

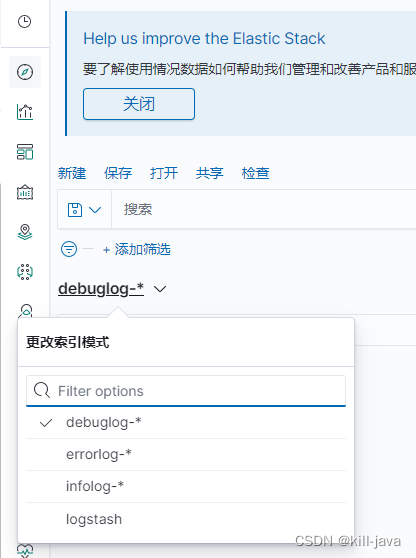

回到kibana页面

- 点击Management,在下面kibana中点击索引模式

- 创建索引

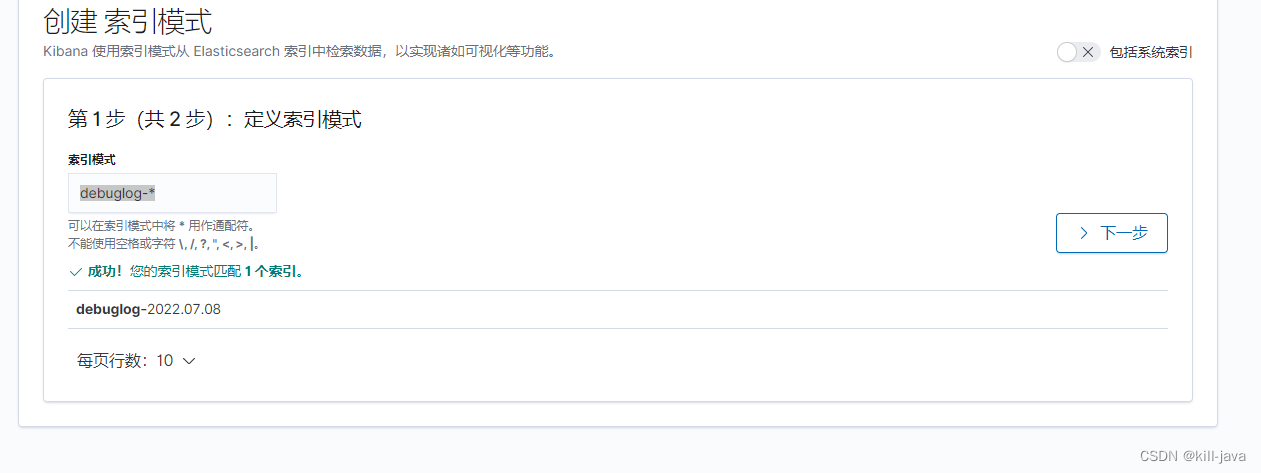

- 首先输入 debuglog-*

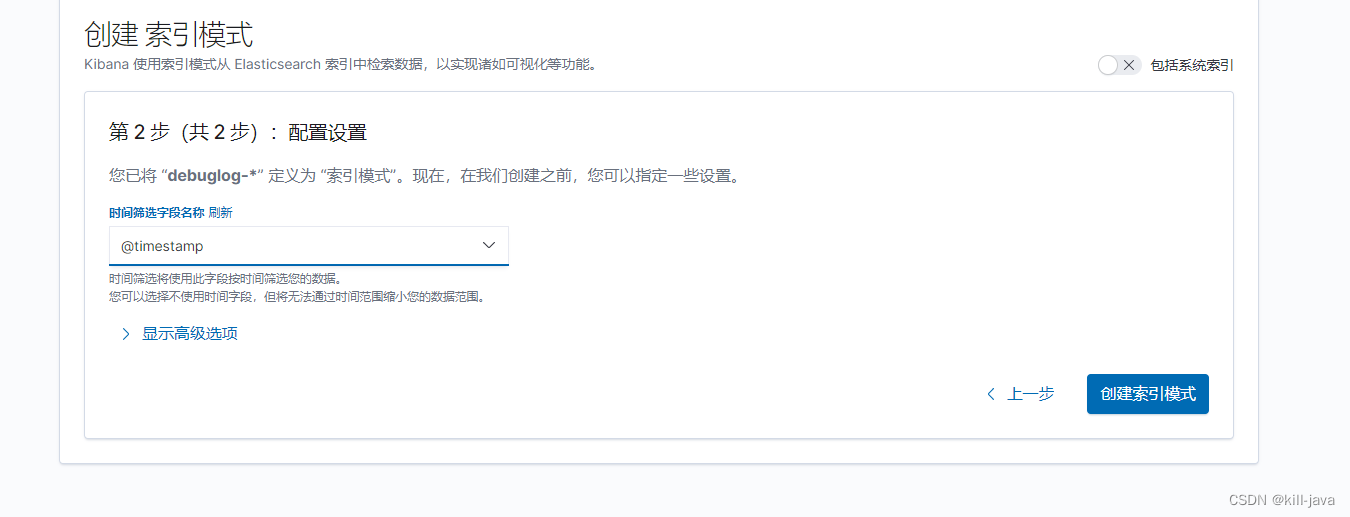

- 下一步选择@timestamp字段继续点击创建索引模式

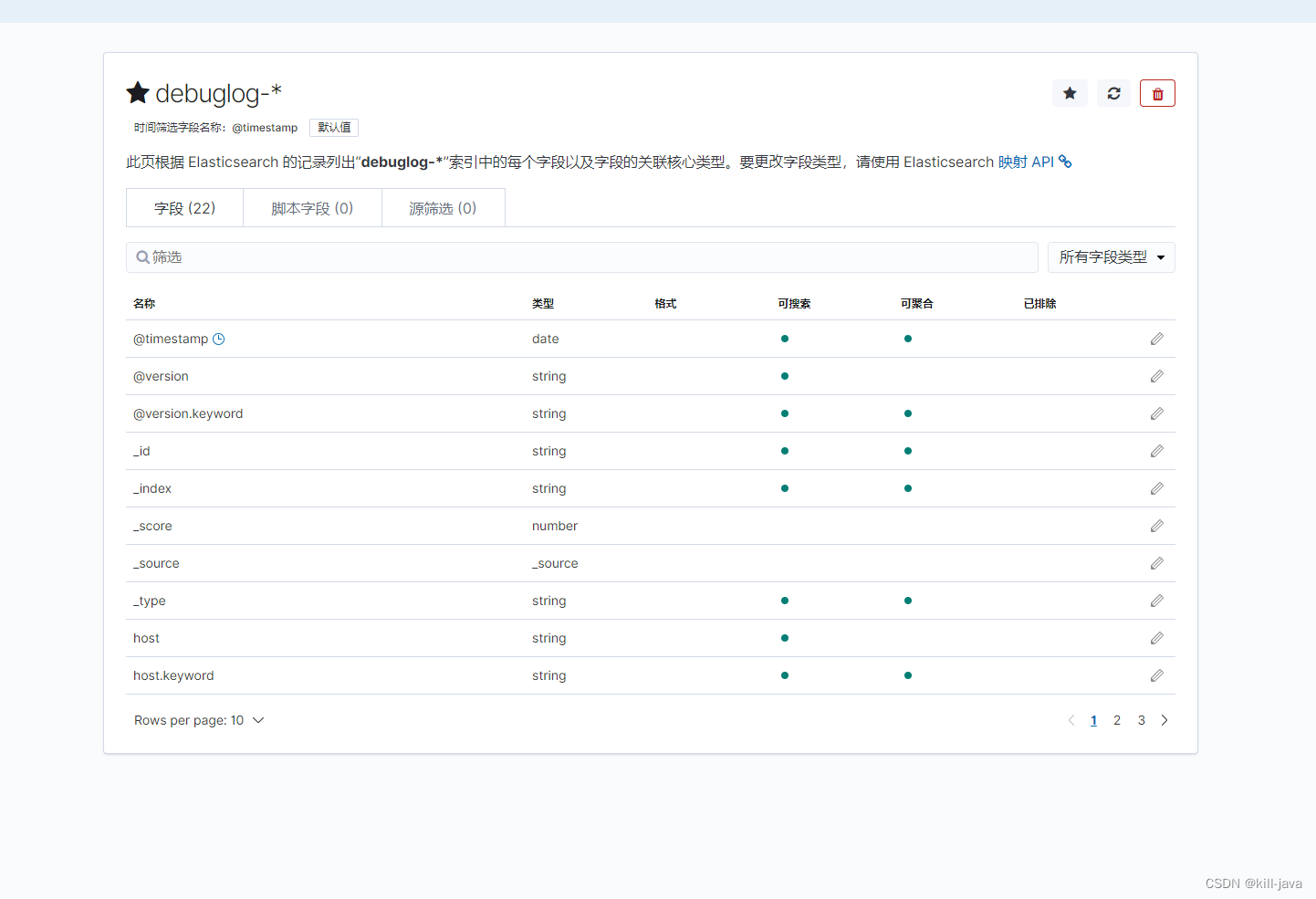

- 创建成功

- 再以同样的方式创建索引 infolog-* errorlog-*

- 如果没有办法创建errorlog索引,就在代码里写一个错误方法抛出异常,logstash接收到然后刷新索引就可以创建了

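    try {
        String s = null;
        // s is null, so this call throws a NullPointerException
        System.out.println(s.substring(0, 1));
    } catch (Exception e) {
        logger.error("error", e);
    }

- If e.printStackTrace() is not picked up by Logstash (it writes to stderr and bypasses Logback), replace it with logger.error("error", e) from the slf4j package

- Open the Discover tab to check

- All three kinds of logs are there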

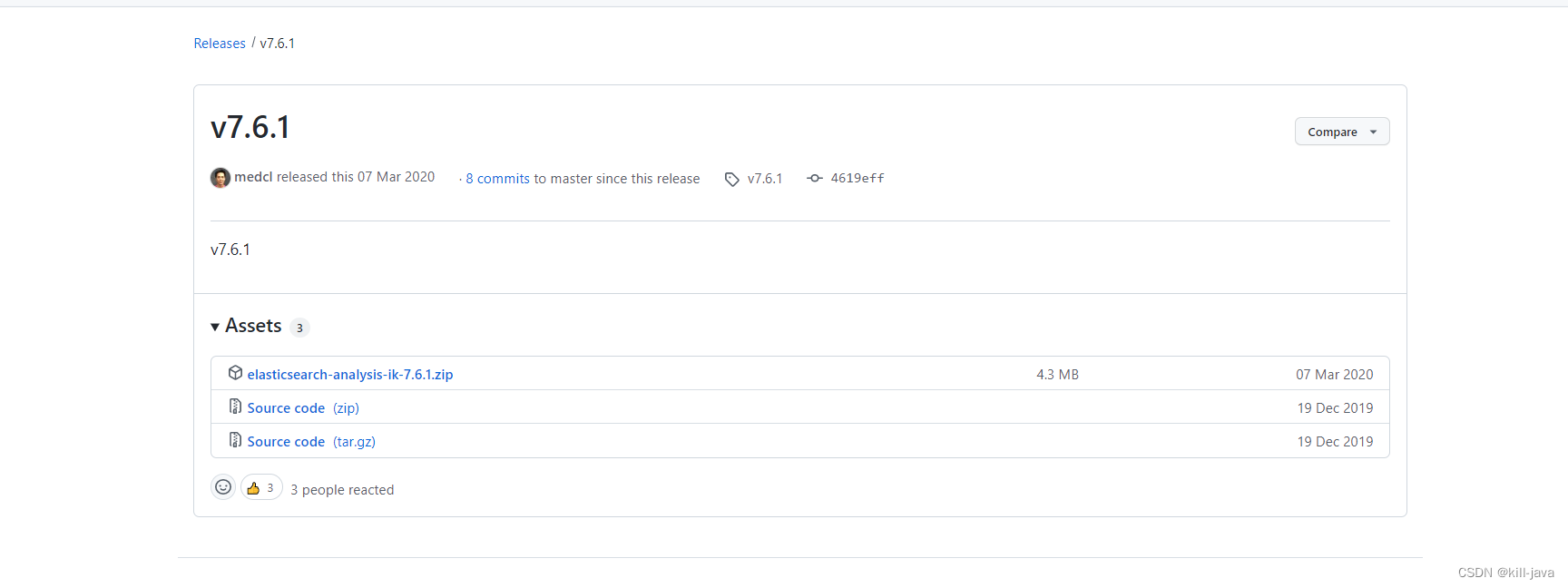

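Add the IK analyzer

- Download the zip file (the plugin version must match the Elasticsearch version, 7.6.1 here)

- Upload it and unzip it into the mounted directory /conf/es/plugins

- Restart es

- Test it in Kibana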

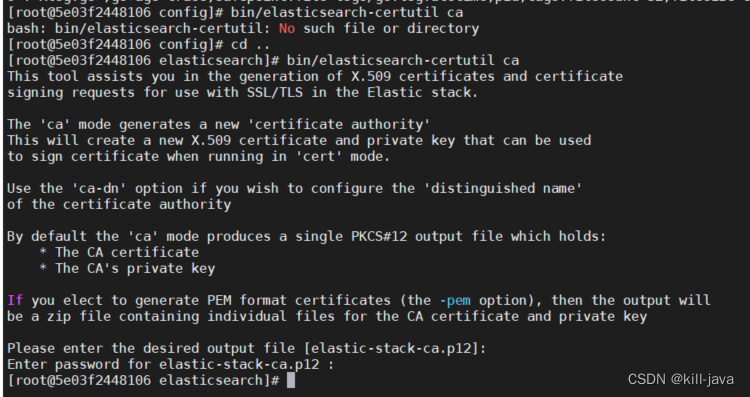

配置TLS

es

-

docker exec -it es /bin/bash

-

进入容器内部,bin目录上一级

-

依次执行以下命令

-

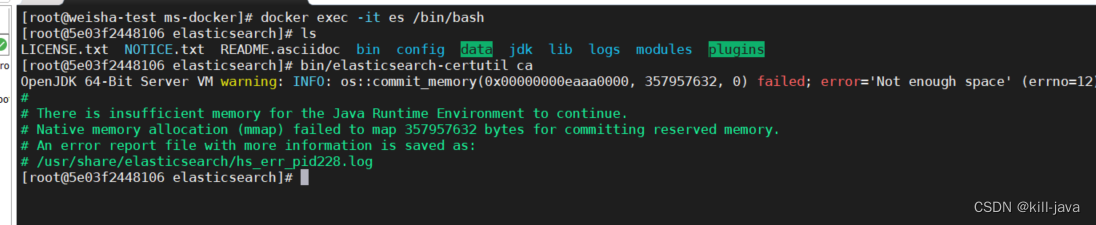

命令1

-

bin/elasticsearch-certutil ca

-

报错没有足够的内存了。

-

docker-compose中调大内存参数 “ES_JAVA_OPTS=-Xms2g -Xmx2g”

-

退出容器restart一下es

-

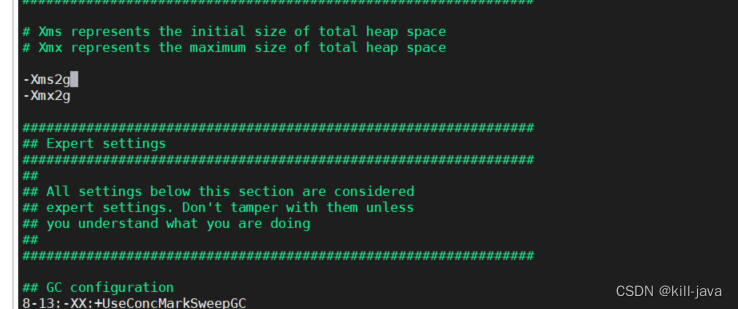

进入容器查看config目录下的jvm.options ,cat jvm.options

-

生效了,重新执行命令1创建ca证书

-

如果不生效,就进入容器内部修改jvm.options文件然后重启

-

如果宿主机内存不够 创建SWAP分区

-

此处会出现Please enter the … 和 Enter password for …

-

什么也不需要输入,直接按两下回车

-

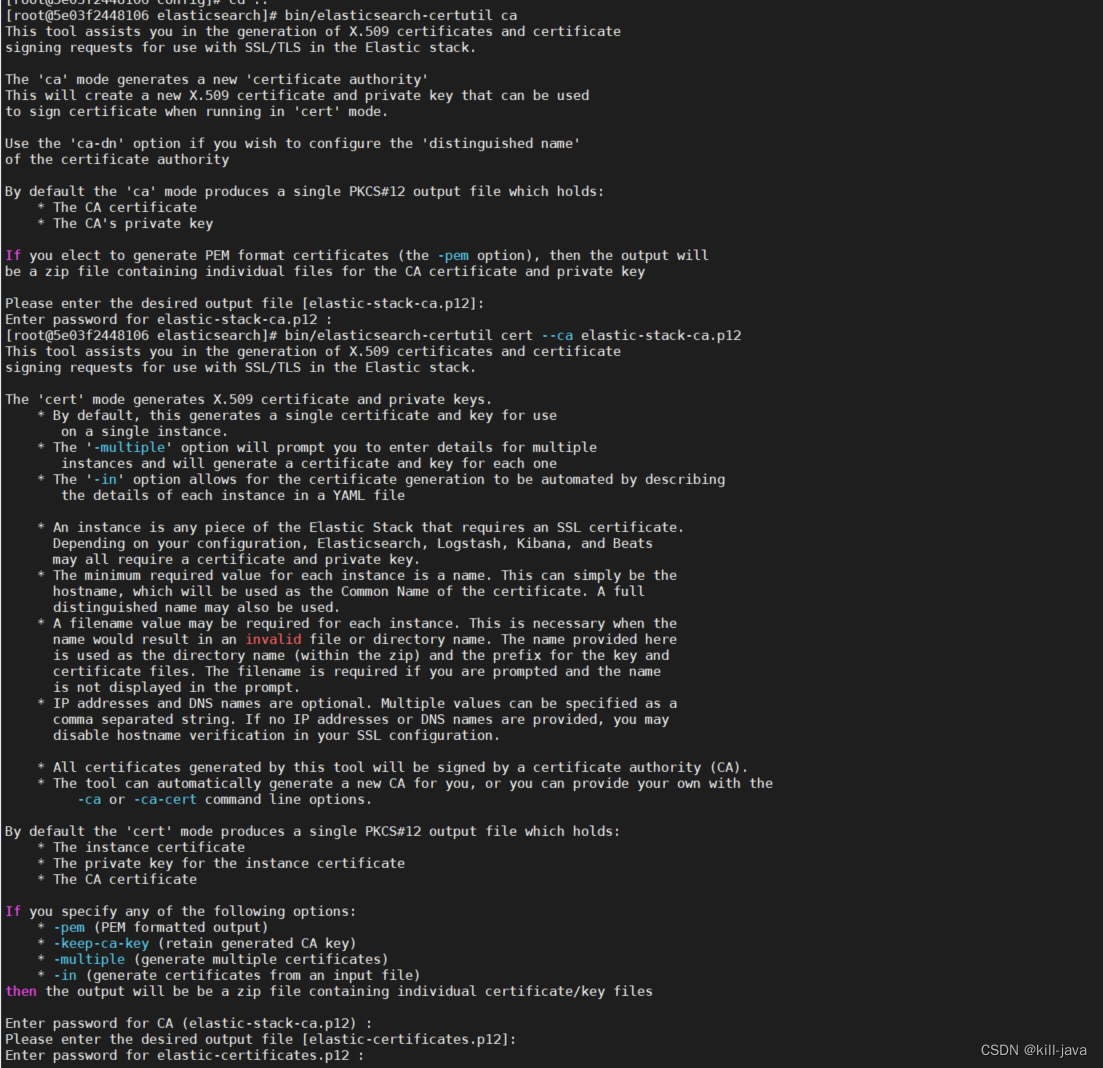

执行命令2

-

bin/elasticsearch-certutil cert --ca elastic-stack-ca.p12

-

图太长了分了两块儿截屏

-

和上一步一样,会出现三行 Enter password… Please enter the… Enter password for…

-

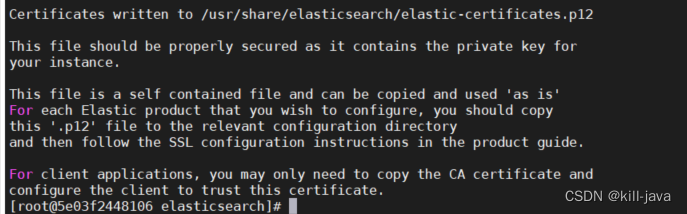

什么也不要输,直接按回车,出现下图

-

继续下一步,这一步很关键!!!!!!

-

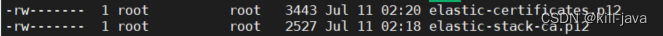

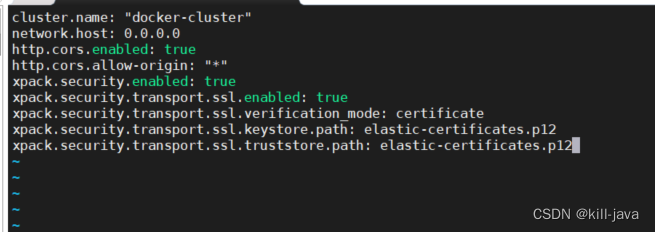

ll 查看会发现刚创建好的这两个文件是root所有,需要更改所有者

-

命令3

-

chown elasticsearch.elasticsearch elastic-*.p12

-

ll查看命令是否生效

-

命令4

-

mv elastic-*.p12 config/

-

移动到config目录下

-

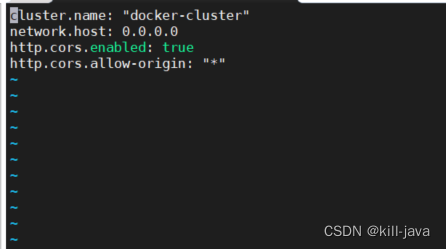

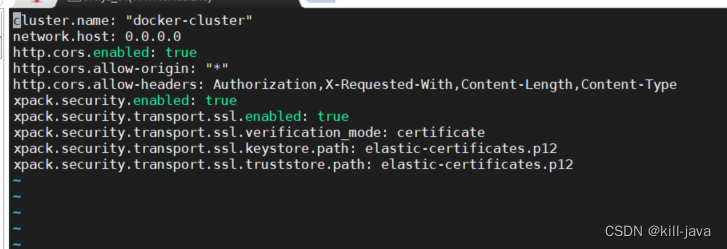

vi elasticsearch.yml

- 添加以下配置

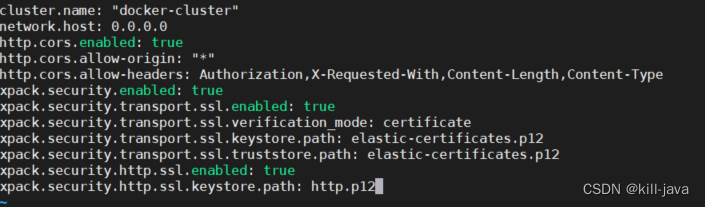

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.transport.ssl.verification_mode: certificate

xpack.security.transport.ssl.keystore.path: elastic-certificates.p12

xpack.security.transport.ssl.truststore.path: elastic-certificates.p12

-

:wq 保存并退出

-

退出容器并重启es

-

此时es已经需要账号密码了

-

进入容器内部继续设置密码

-

命令5

-

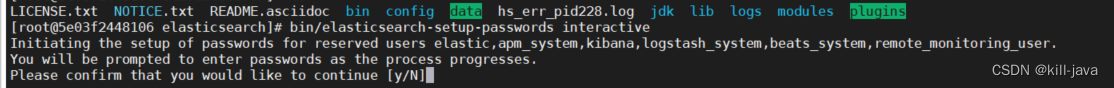

bin/elasticsearch-setup-passwords interactive

-

y 进入下一步

-

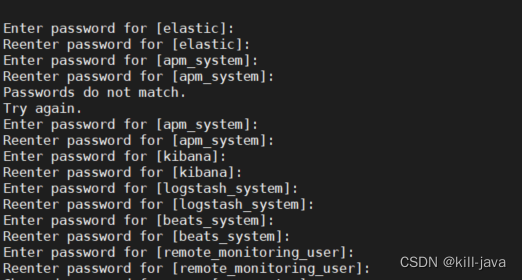

给以下这么多账户设置并确认密码。。。

-

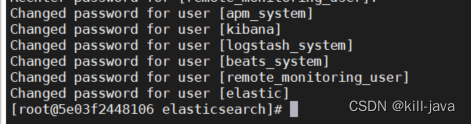

设置完成

-

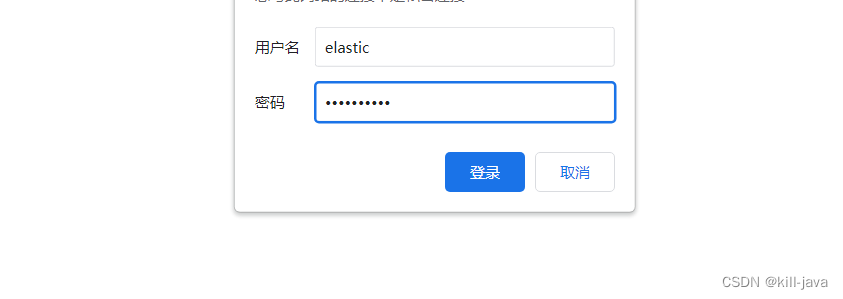

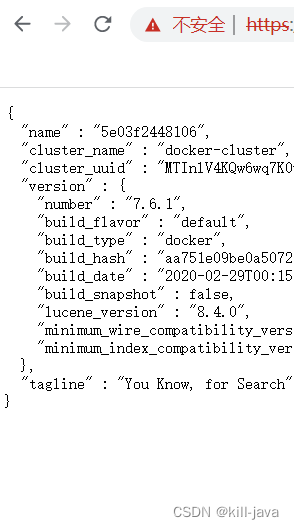

访问9200页面

-

账号elastic 密码输入刚才设置的那个

-

es配置完成

kibana

- Open the 5601 page: Kibana now requires a username and password

- Open the kibana.yml mounted earlier

#

# ** THIS IS AN AUTO-GENERATED FILE **

#

# Default Kibana configuration for docker target

server.name: kibana

server.host: "0"

elasticsearch.hosts: [ "http://es:9200" ]

xpack.monitoring.ui.container.elasticsearch.enabled: true

elasticsearch.username: "kibana"

elasticsearch.password: "the password you just set"

i18n.locale: zh-CN

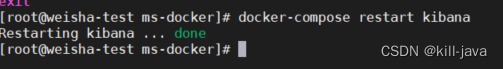

- 保存好配置文件,restart重启kibana容器

- 重启完成在kibana页面输入刚才配置好的账号密码

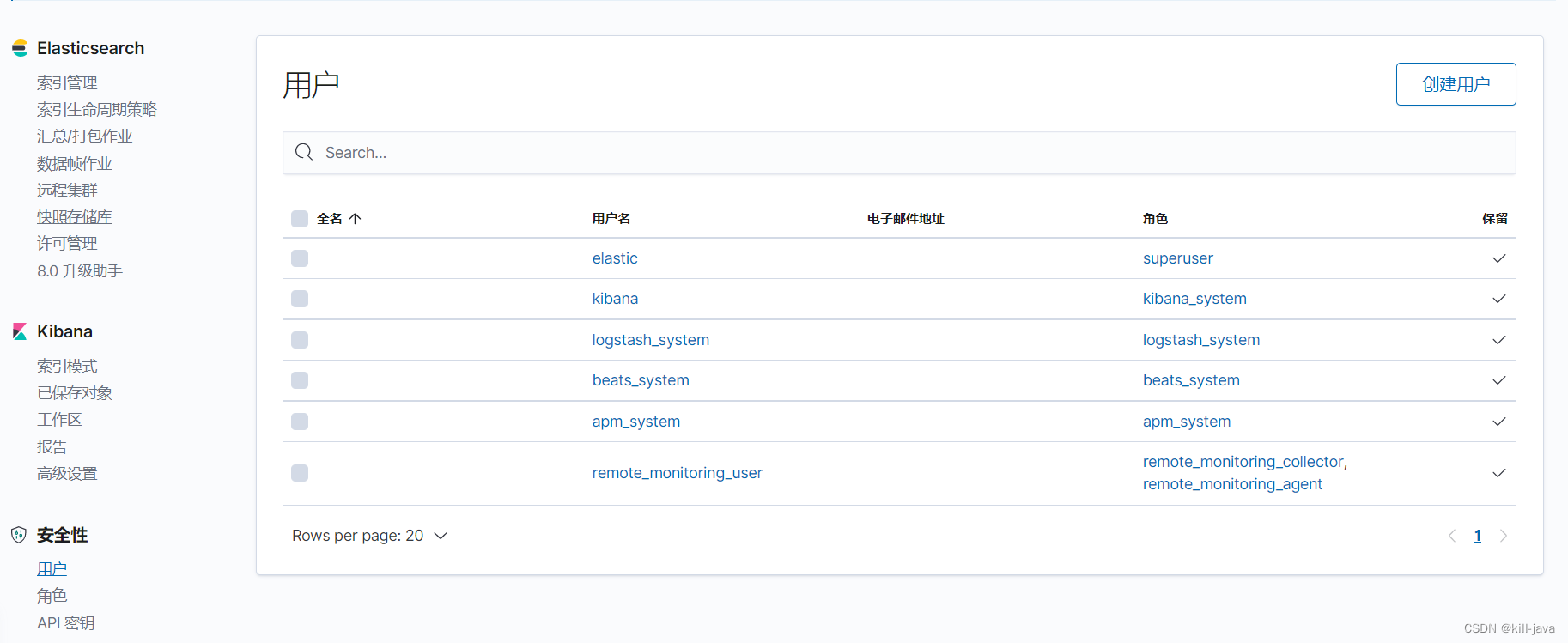

- 这里只有使用elastic超级账户才能访问成功,其它账户是没有权限的

- 通过超级账户登录后查看已有用户信息

- 这些默认的用户和角色信息都无法修改

- 如果有别的需求可以创建一个新的角色和用户,并赋予某些(全部)索引读写创建权限

- 自己点进去玩吧,我没这个需求就不写了。。。

- kibana配置完成

logstash

- Check the Logstash logs with

- docker-compose logs -f -t --tail 100 logstash

- The following error is reported

{:url=>"http://ip:9200/", :error_type=>LogStash::Outputs::ElasticSearch::HttpClient::Pool::BadResponseCodeError, :error=>"Got response code '401' contacting Elasticsearch at URL 'http://ip:9200/'"}

- Logstash cannot authenticate against es, so no logs are delivered

- Open logstash.conf

- Add the username and password to every elasticsearch block

    output {
      if [level] == "INFO" {
        elasticsearch {
          hosts => "ip:9200"
          index => "infolog-%{+YYYY.MM.dd}"
          user => "elastic"
          password => "your-password"
        }
      } else if [level] == "ERROR" {
        elasticsearch {
          hosts => "ip:9200"
          index => "errorlog-%{+YYYY.MM.dd}"
          user => "elastic"
          password => "your-password"
        }
      } else if [level] == "DEBUG" {
        elasticsearch {
          hosts => "ip:9200"
          index => "debuglog-%{+YYYY.MM.dd}"
          user => "elastic"
          password => "your-password"
        }
      }
      # unchanged from the original logstash.conf
      stdout {
        codec => rubydebug
      }
    }

- Save the configuration file and restart Logstash

- docker-compose restart logstash

- After the restart, check the logs

- Logstash configuration is complete

es-head

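- Refresh the 9100 page

- It reports 401 XHR Error Unauthorized

- Enter the es container, run vi elasticsearch.yml, and add the line below

http.cors.allow-headers: Authorization,X-Requested-With,Content-Length,Content-Type

- Save, quit, and restart the es container

- Access es by passing the credentials in the URL

- http://ip:9100/?auth_user=elastic&auth_password=mima (replace mima with your password)

- es-head configuration is complete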

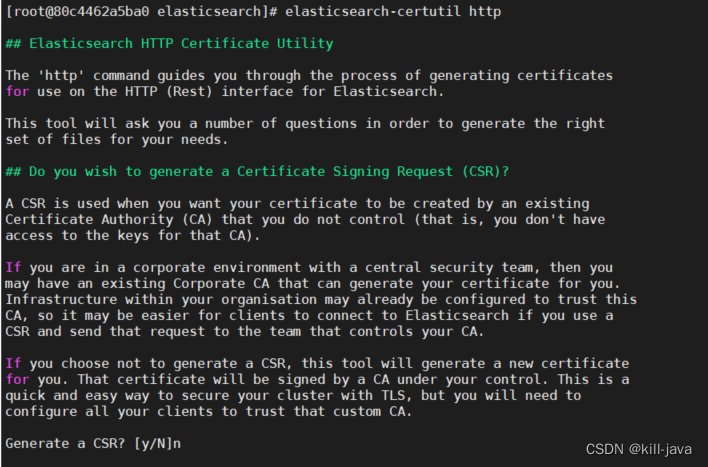

HTTPS 访问es

- 执行命令

elasticsearch-certutil http

- 1

- 生成一个CSR (N)

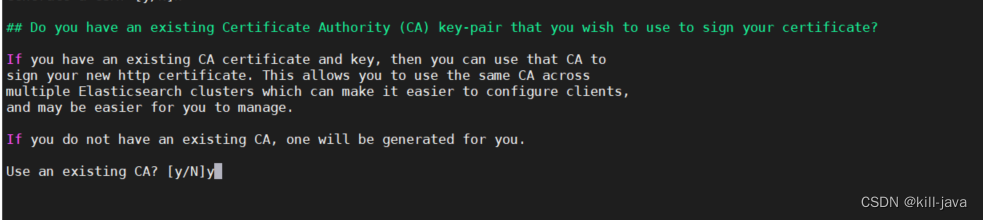

- use an existing CA (Y)

- 输入CA证书绝对路径

/usr/share/elasticsearch/config/elastic-stack-ca.p12

- 1

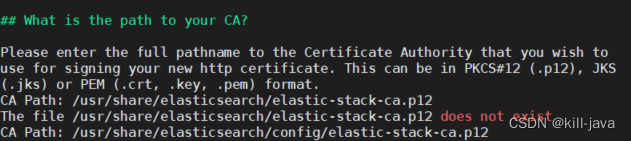

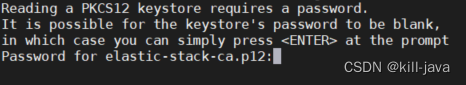

- 输入密码,按回车跳过

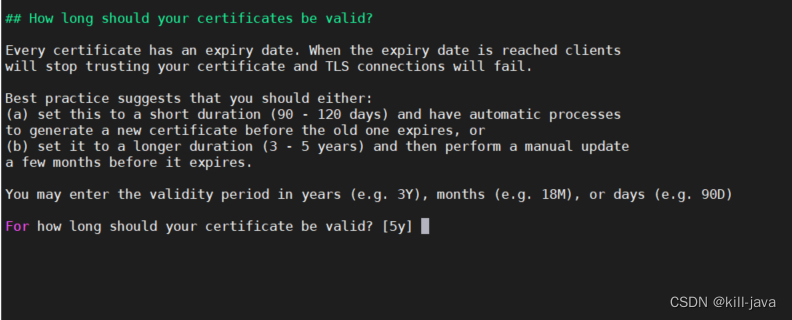

- 输入证书有效期,默认5年

-

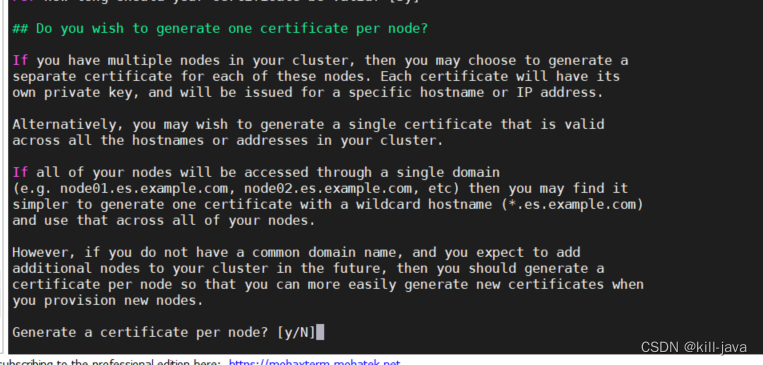

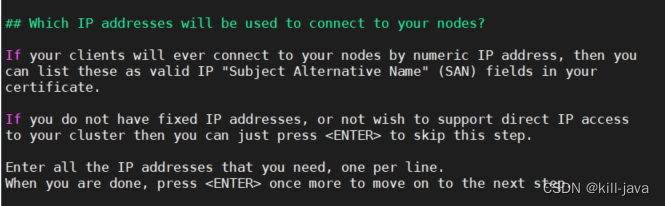

是否为每个节点创建证书 n

-

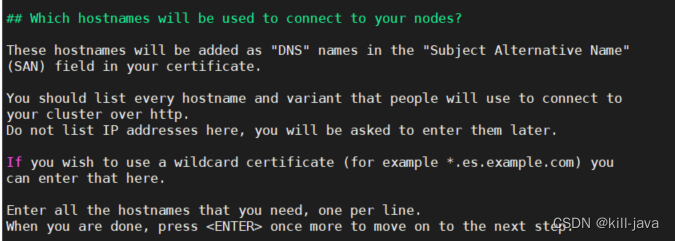

输入节点hostnames(域名),一行一个,两次回车下一步

-

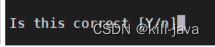

确认输入完的域名(y)

-

输入节点ip地址

- 一行一个,两次回车下一步

- 确认输入完的ip地址

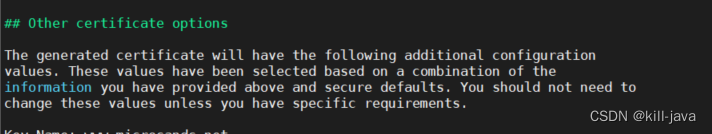

- 生成内容

Key name : www.baidu.com

Subject DN: CN=www,DC=baidu,DC=com

KeySize: 2048

- 1

- 2

- 3

-

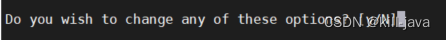

是否修改上述任意内容 (N)

-

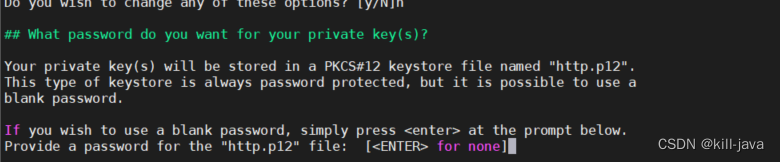

设置密码,回车跳过

-

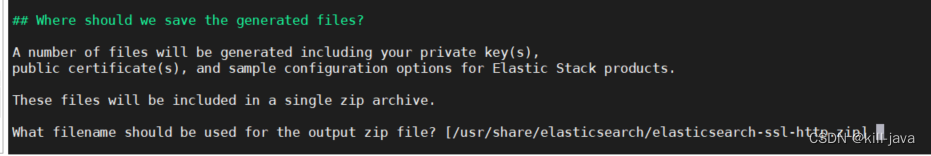

输入压缩文件的文件名,回车确认

-

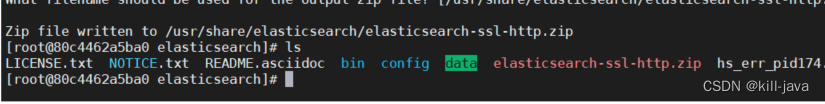

查看生成的zip文件

-

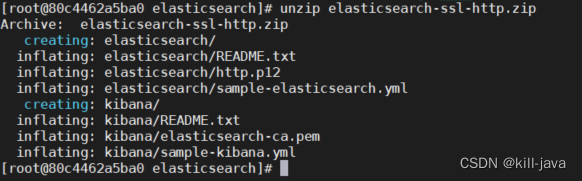

unzip elasticsearch-ssl-http.zip

- 可以看到里面包含elasticsear和kibana两个文件夹,并且包含以下内容

/elasticsearch

|_ README.txt

|_ http.p12

|_ sample-elasticsearch.yml

/kibana

|_ README.txt

|_ elasticsearch-ca.pem

|_ sample-kibana.yml

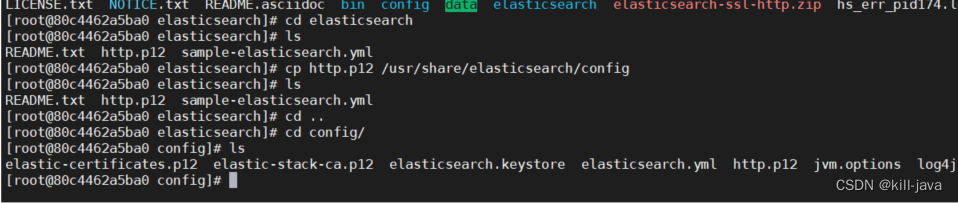

- Go into the extracted elasticsearch directory and copy http.p12 to the config directory

- cp http.p12 /usr/share/elasticsearch/config

- Edit elasticsearch.yml with vi and add the following

xpack.security.http.ssl.enabled: true

xpack.security.http.ssl.keystore.path: http.p12

- If the private key has a password, add it to Elasticsearch's secure settings

./bin/elasticsearch-keystore add xpack.security.http.ssl.keystore.secure_password

- Restart es

- Plain http no longer works at this point; access es over https
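The https endpoint can be checked from the command line as well. A minimal sketch, assuming curl is installed and that elasticsearch-ca.pem (from the kibana folder of the zip above) has been copied to the machine running the check; es_get is a helper name introduced here.

```shell
# es_get BASE_URL CA_FILE USER PASSWORD [PATH]
# Sends an authenticated GET request over https, trusting only the given CA file.
es_get() {
    # --cacert: CA certificate used to verify the server, -u: basic-auth credentials
    curl -s --cacert "$2" -u "$3:$4" "$1${5:-/}"
}

# Example (replace ip and the password with your own values):
# es_get "https://ip:9200" kibana/elasticsearch-ca.pem elastic "your-password" /_cluster/health
```

curl also verifies that the host in the URL matches a hostname or IP address entered when the certificate was generated, so a mismatch there shows up as a certificate error rather than an authentication error. A password passed on the command line is visible to other users of the machine, which is acceptable for a quick check only.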

kibana连接 es https

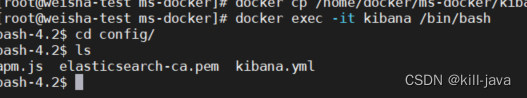

- 使用docker cp命令把解压出来的kibana文件拷贝出来,再把elasticsearch-ca.pem文件放到kibana容器的config目录下

docker cp es镜像id:/usr/share/elasticsearch/kibana /home/docker/ms-docker

docker cp /home/docker/ms-docker/kibana/elasticsearch-ca.pem kibana镜像id:/usr/share/kibana/config

- 1

- 2

- 3

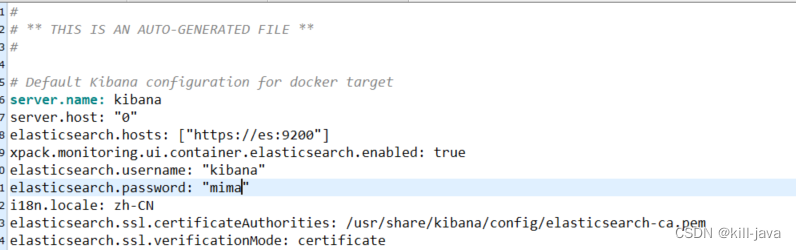

- 修改挂载的kibana.yml

//添加行

//如果重启报错找不到的话就写elasticsearch-ca.pem存放位置的全路径

elasticsearch.ssl.certificateAuthorities: /config/elasticsearch-ca.pem

//添加行

//默认是full,会校验主机名,如果在生成证书的时候没有设置主机名,这里可以改成certificate

elasticsearch.ssl.verificationMode: certificate

//修改行

elasticsearch.host: ["https://es:9200"]

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- restart重启kibana镜像

https访问kibana

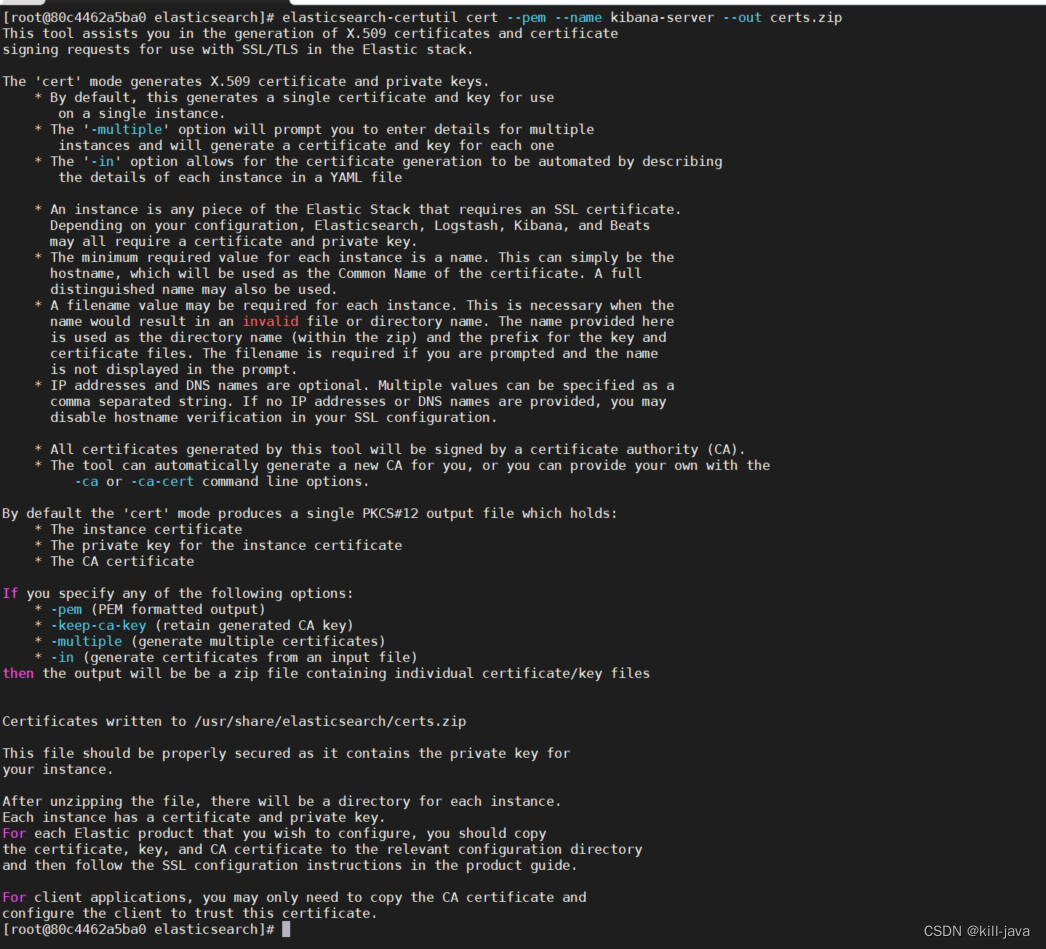

- 在es容器中生成kibana-server证书

elasticsearch-certutil cert --pem --name kibana-server --out certs.zip

- 1

- 把生成的zip拷贝到宿主机

docker cp es容器id:/usr/share/elasticsearch/certs.zip /home/docker/ms-docker

- 1

- certs.zip包含以下内容

./certs

├── ca

│ └── ca.crt

└── kibana-server

├── kibana-server.crt

└── kibana-server.key

- 1

- 2

- 3

- 4

- 5

- 6

- unzip certs.zip 解压文件

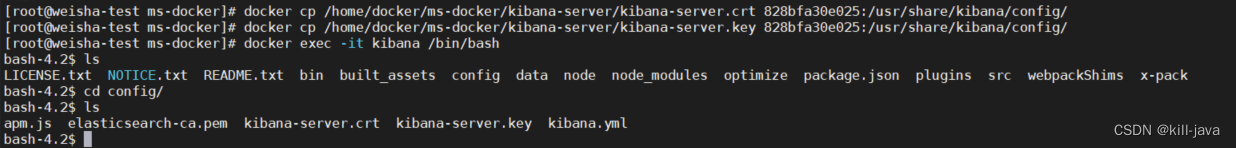

- 把kibana-server中的两个文件拷贝到kibana容器的config目录下

docker cp /home/docker/ms-docker/kibana-server.crt kibana容器id:/usr/share/kibana/config

docker cp /home/docker/ms-docker/kibana-server.key kibana容器id:/usr/share/kibana/config

- 1

- 2

- 3

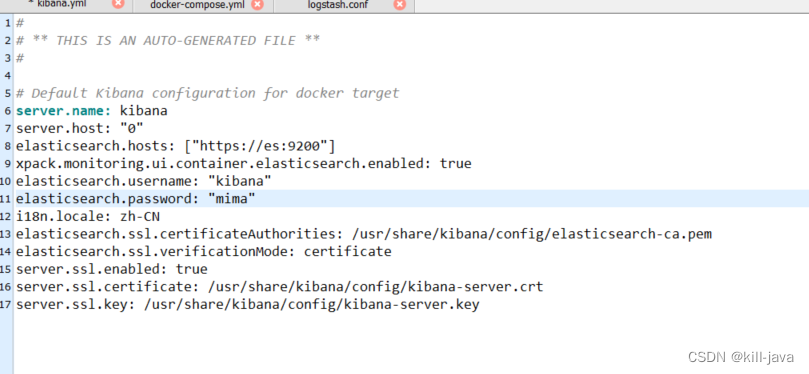

- 挂载的kibana.yml添加以下内容

server.ssl.enabled: true

server.ssl.certificate: /usr/share/kibana/config/kibana-server.crt

server.ssl.key: /usr/share/kibana/config/kibana-server.key

- 1

- 2

- 3

- restart kibana 容器

- 访问5601,此时http已经不能用了,只能用https

es-head access to es over https

- Use https://ip:9200 in the connection box

Connect Logstash to es over https

- Copy elasticsearch-ca.pem, extracted from elasticsearch-ssl-http.zip, into the config directory of the logstash container

docker cp /home/docker/ms-docker/kibana/elasticsearch-ca.pem <logstash-container-id>:/usr/share/logstash/config

- Add the following to every elasticsearch block in the output section

ssl => true

cacert => "config/elasticsearch-ca.pem"

    output {
      if [level] == "INFO" {
        elasticsearch {
          hosts => "ip:9200"
          index => "infolog-%{+YYYY.MM.dd}"
          # the credentials added in the previous section are still required
          user => "elastic"
          password => "your-password"
          ssl => true
          cacert => "config/elasticsearch-ca.pem"
        }
      } else if [level] == "ERROR" {
        elasticsearch {
          hosts => "ip:9200"
          index => "errorlog-%{+YYYY.MM.dd}"
          user => "elastic"
          password => "your-password"
          ssl => true
          cacert => "config/elasticsearch-ca.pem"
        }
      } else if [level] == "DEBUG" {
        elasticsearch {
          hosts => "ip:9200"
          index => "debuglog-%{+YYYY.MM.dd}"
          user => "elastic"
          password => "your-password"
          ssl => true
          cacert => "config/elasticsearch-ca.pem"
        }
      }
      # unchanged from the original logstash.conf
      stdout {
        codec => rubydebug
      }
    }

- Restart the logstash container and you are done