
Linux + Qt + FFmpeg 6.0: combining multiple QImages into one video

Author: 不正经 | 2024-03-02 19:23:59


Straight to the code:

recordingthread.h:

```cpp
#ifndef RECORDINGTHREAD_H
#define RECORDINGTHREAD_H

#include <QObject>
#include <QImage>
#include <QQueue>
#include <QMutex>
#include <atomic>

extern "C" {
// FFmpeg is a C library, so Qt/C++ code must include its headers inside extern "C"
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include <libavformat/avio.h>
#include <libswscale/swscale.h>
#include <libavutil/pixdesc.h>
}

class RecordingThread : public QObject
{
    Q_OBJECT

public:
    void FFmpegInit();
    void saveMp4(QImage image);
    void stopMp4();

    void setImage(QImage image);

public slots:
    void recordInit();

signals:
    void send(QString);

private:
    bool encodeFrame(AVFrame* frame); // sends a frame (nullptr = flush) and writes every resulting packet
    void finish();                    // flushes the encoder, writes the trailer, frees everything

    AVFormatContext* formatContext = nullptr;
    AVCodecParameters* codecParameters = nullptr;
    const AVCodec* codec = nullptr;
    AVCodecContext* codecContext = nullptr;
    AVStream* stream = nullptr;
    const AVPixelFormat* pixFmt = nullptr;

    int64_t num = 0;               // index of the next frame, also its pts in codec time_base units

    QMutex queueMutex;             // setImage() runs on the caller's thread, recordInit() on the worker
    QQueue<QImage> gQdata;

    std::atomic<int> isRecord{-1}; // -1 idle, 1 recording, 0 stop requested
};

#endif // RECORDINGTHREAD_H
```

recordingthread.cpp:

```cpp
#include "recordingthread.h"
#include <QDebug>
#include <QDateTime>
#include <QMutexLocker>
#include <unistd.h>

void RecordingThread::FFmpegInit(){
    isRecord = -1;
    num = 0;
    int ret;

    qint64 timeT = QDateTime::currentMSecsSinceEpoch(); // millisecond timestamp
    QByteArray fileName = QString("/sdcard/ffmpeg%1.mp4").arg(timeT).toUtf8();

    // Allocate the output context; with a nullptr format name the muxer is chosen from the ".mp4" suffix
    ret = avformat_alloc_output_context2(&formatContext, nullptr, nullptr, fileName.constData());
    if (ret < 0 || !formatContext) {
        qDebug() << "avformat_alloc_output_context2 failed:" << ret;
        return;
    }

    // Open the output file (muxers flagged AVFMT_NOFILE do their own I/O)
    if (!(formatContext->oformat->flags & AVFMT_NOFILE) &&
        avio_open(&formatContext->pb, fileName.constData(), AVIO_FLAG_WRITE) < 0) {
        qDebug() << "Failed to open output file";
        return;
    }

    // Create the video stream
    stream = avformat_new_stream(formatContext, nullptr);
    if (!stream) {
        qDebug() << "Failed to create output stream";
        return;
    }

    // Find and configure the H.264 encoder
    codec = avcodec_find_encoder(AV_CODEC_ID_H264);
    codecContext = codec ? avcodec_alloc_context3(codec) : nullptr;
    if (!codecContext) {
        qDebug() << "No H.264 encoder available";
        return;
    }
    codecContext->width = 400;
    codecContext->height = 400;
    codecContext->pix_fmt = AV_PIX_FMT_YUV420P;
    codecContext->time_base = {1, 25}; // one pts tick = 1/25 s
    codecContext->framerate = {25, 1}; // 25 fps
    //codecContext->thread_count = 4;
    // MP4 keeps SPS/PPS in the container header, so ask the encoder for global headers
    if (formatContext->oformat->flags & AVFMT_GLOBALHEADER)
        codecContext->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;

    ret = avcodec_open2(codecContext, codec, nullptr);
    if (ret < 0) {
        qDebug() << "Failed to avcodec_open2";
        avcodec_free_context(&codecContext);
        return;
    }

    // Copy the encoder settings INTO the stream (context -> codecpar).
    // avcodec_parameters_to_context() copies the other way and would reset pix_fmt to -1.
    codecParameters = stream->codecpar;
    avcodec_parameters_from_context(codecParameters, codecContext);
    stream->time_base = codecContext->time_base; // only a hint; the muxer may change it

    // Print the pixel formats the encoder supports
    for (pixFmt = codec->pix_fmts; pixFmt && *pixFmt != AV_PIX_FMT_NONE; ++pixFmt)
        qDebug() << av_get_pix_fmt_name(*pixFmt);

    // Write the container header
    ret = avformat_write_header(formatContext, nullptr);
    if (ret < 0) {
        qDebug() << "avformat_write_header failed:" << ret;
        avcodec_free_context(&codecContext); // saveMp4() and finish() skip encoding when this is null
    }
}

// Send one frame to the encoder (nullptr = flush) and write every packet it returns
bool RecordingThread::encodeFrame(AVFrame* frame){
    int ret = avcodec_send_frame(codecContext, frame);
    if (ret < 0) {
        qDebug() << "avcodec_send_frame failed:" << ret;
        return false;
    }
    AVPacket* packet = av_packet_alloc();
    if (!packet)
        return false;
    // One frame can yield zero or several packets, so keep receiving until EAGAIN/EOF
    while ((ret = avcodec_receive_packet(codecContext, packet)) == 0) {
        // Convert timestamps from the encoder time base to the stream time base
        av_packet_rescale_ts(packet, codecContext->time_base, stream->time_base);
        packet->stream_index = stream->index;
        if (av_interleaved_write_frame(formatContext, packet) < 0) // takes over the packet data
            qDebug() << "av_interleaved_write_frame failed";
    }
    av_packet_free(&packet);
    // EAGAIN = encoder wants more input, EOF = fully drained; anything else is a real error
    return ret == AVERROR(EAGAIN) || ret == AVERROR_EOF;
}

void RecordingThread::saveMp4(QImage image){
    if (!codecContext)
        return; // FFmpegInit() failed or was not called

    AVFrame* frame = av_frame_alloc();
    if (!frame) {
        qDebug() << "Failed to allocate frame.";
        return;
    }
    // The frame must have the encoder's size; sws_scale below rescales the image if it differs
    frame->format = AV_PIX_FMT_YUV420P;
    frame->width = codecContext->width;
    frame->height = codecContext->height;
    // pts in codecContext->time_base units (1/25 s): frame n is shown at n/25 s.
    // Without a pts the result may be a 0-second video.
    frame->pts = num;

    if (av_frame_get_buffer(frame, 0) < 0) {
        qDebug() << "Failed to allocate frame buffer.";
        av_frame_free(&frame);
        return;
    }

    // QImage::Format_RGB32 has the same memory layout as AV_PIX_FMT_RGB32 (native-endian 0xffRRGGBB)
    image = image.convertToFormat(QImage::Format_RGB32);
    SwsContext* swsContext = sws_getContext(image.width(), image.height(), AV_PIX_FMT_RGB32,
                                            frame->width, frame->height, AV_PIX_FMT_YUV420P,
                                            SWS_BICUBIC, nullptr, nullptr, nullptr);
    if (!swsContext) {
        qDebug() << "Failed to create SwsContext.";
        av_frame_free(&frame);
        return;
    }

    const uint8_t* srcData[1] = { image.constBits() };
    const int srcLinesize[1] = { static_cast<int>(image.bytesPerLine()) };
    // Convert RGB32 -> YUV420P directly into the frame's Y/U/V planes (returns the number of output rows)
    sws_scale(swsContext, srcData, srcLinesize, 0, image.height(), frame->data, frame->linesize);
    // Free the conversion context created by sws_getContext
    sws_freeContext(swsContext);

    encodeFrame(frame);
    av_frame_free(&frame);
    ++num;
}

// Flush the encoder, write the trailer and release everything FFmpegInit() allocated
void RecordingThread::finish(){
    if (codecContext && formatContext) {
        encodeFrame(nullptr); // drain frames still buffered in the encoder, otherwise the tail is lost
        int ret = av_write_trailer(formatContext);
        qDebug() << "av_write_trailer(formatContext) ret===" << ret;
    }
    avcodec_free_context(&codecContext);
    if (formatContext && !(formatContext->oformat->flags & AVFMT_NOFILE))
        avio_closep(&formatContext->pb);
    avformat_free_context(formatContext);
    formatContext = nullptr;
    stream = nullptr;
    codecParameters = nullptr;
    num = 0;
}

void RecordingThread::stopMp4(){
    isRecord = 0; // handled by recordInit() once the queue is empty
}

void RecordingThread::setImage(QImage image){
    QMutexLocker locker(&queueMutex);
    isRecord = 1;
    gQdata.enqueue(image);
}

void RecordingThread::recordInit(){
    while (true) {
        QImage qimage;
        bool hasImage = false;
        {
            QMutexLocker locker(&queueMutex);
            if (!gQdata.isEmpty()) {
                qimage = gQdata.dequeue();
                hasImage = true;
            }
        }
        if (hasImage) {
            saveMp4(qimage); // encode outside the lock so setImage() never waits on the encoder
        } else if (isRecord == 0) {
            isRecord = -1;
            finish(); // flush the encoder and write the trailer
            emit send("stopRecode");
        } else {
            usleep(5000); // idle: poll again in 5 ms
        }
    }
}
```
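saveMp4() converts each QImage from RGB32 to AV_PIX_FMT_YUV420P with sws_scale(). As a rough sketch of what that conversion produces: a YUV420P frame has a full-resolution Y plane plus U and V planes subsampled by 2 in both directions, and each pixel follows the BT.601 limited-range matrix that swscale uses by default. The names below (`rgb32ToYuv601`, `yuv420pPlaneSizes`, `Yuv`, `PlaneSizes`) are hypothetical, the integer coefficients are the common 8-bit approximation of BT.601 (swscale's own rounding may differ slightly), and the plane sizes ignore any row padding that `frame->linesize` may add.

```cpp
#include <cstddef>
#include <cstdint>

// One pixel in YUV, 8-bit limited range (Y 16..235, U/V 16..240).
struct Yuv {
    int y;
    int u;
    int v;
};

// Converts a pixel stored as QImage::Format_RGB32 does (the 32-bit value 0xffRRGGBB)
// using the common integer approximation of BT.601 limited range.
// Note: >> on a negative int is an arithmetic shift in C++20 and on mainstream compilers.
Yuv rgb32ToYuv601(uint32_t argb) {
    const int r = static_cast<int>((argb >> 16) & 0xff);
    const int g = static_cast<int>((argb >> 8) & 0xff);
    const int b = static_cast<int>(argb & 0xff);
    Yuv out;
    out.y = ((66 * r + 129 * g + 25 * b + 128) >> 8) + 16;
    out.u = ((-38 * r - 74 * g + 112 * b + 128) >> 8) + 128;
    out.v = ((112 * r - 94 * g - 18 * b + 128) >> 8) + 128;
    return out;
}

// Byte sizes of the three YUV420P planes without row padding.
struct PlaneSizes {
    std::size_t y;
    std::size_t u;
    std::size_t v;
};

PlaneSizes yuv420pPlaneSizes(std::size_t width, std::size_t height) {
    // Chroma is subsampled 2x horizontally and vertically, rounding up for odd sizes.
    const std::size_t chroma = ((width + 1) / 2) * ((height + 1) / 2);
    return {width * height, chroma, chroma};
}
```

For the 400x400 frames used above this gives 160000 bytes of Y and 40000 bytes each of U and V, i.e. width * height * 3 / 2 bytes per frame, which is why the conversion writes into three separate plane pointers.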

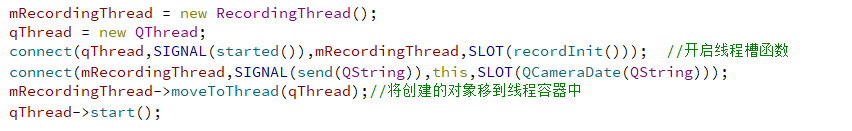

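I wrapped all of this in a dedicated class and use it as a thread: when the program starts, recordInit() is launched directly in a worker thread.

Then, whenever I need to build a video, I first call the initializer:

`mRecordingThread->FFmpegInit();`

then pass in each QImage:

`mRecordingThread->setImage(rotatedImage);`

and when recording should end, call:

`mRecordingThread->stopMp4();`

Because encoding runs on the worker thread, the main thread never freezes.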
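The setImage() / recordInit() / stopMp4() hand-off is a producer/consumer queue. Below is a minimal sketch of the same pattern using only the C++ standard library: std::thread, std::mutex and std::condition_variable stand in for QThread, QQueue and the usleep() polling. `Frame`, `FrameQueue` and `runRecorder` are hypothetical names, and appending an index to a vector stands in for the real encoding work. Every queue access happens under the mutex, and the consumer sleeps on a condition variable instead of polling.

```cpp
#include <condition_variable>
#include <deque>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical stand-in for a QImage: only carries its position in the sequence.
struct Frame {
    int index;
};

// Thread-safe hand-off between the UI thread (producer) and the recorder thread (consumer).
class FrameQueue {
public:
    // Producer side, the role setImage() plays.
    void push(Frame frame) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            frames_.push_back(frame);
        }
        ready_.notify_one();
    }

    // Producer side, the role stopMp4() plays: frames already queued are still delivered.
    void stop() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            stopped_ = true;
        }
        ready_.notify_one();
    }

    // Consumer side: blocks until a frame arrives; returns false once stopped and drained.
    bool pop(Frame& out) {
        std::unique_lock<std::mutex> lock(mutex_);
        ready_.wait(lock, [this] { return stopped_ || !frames_.empty(); });
        if (frames_.empty()) {
            return false;
        }
        out = frames_.front();
        frames_.pop_front();
        return true;
    }

private:
    std::mutex mutex_;
    std::condition_variable ready_;
    std::deque<Frame> frames_;
    bool stopped_ = false;
};

// Starts a consumer thread (the role recordInit() plays), feeds it `count` frames,
// stops it, and returns the frame indices in the order the consumer handled them.
std::vector<int> runRecorder(int count) {
    FrameQueue queue;
    std::vector<int> encoded;
    std::thread worker([&queue, &encoded] {
        Frame frame{};
        while (queue.pop(frame)) {
            encoded.push_back(frame.index);  // saveMp4(image) would go here
        }
        // flushing the encoder and av_write_trailer() would go here
    });
    for (int i = 0; i < count; ++i) {
        queue.push(Frame{i});
    }
    queue.stop();
    worker.join();
    return encoded;
}
```

Order is preserved because a single consumer always pops from the front, and stop() only ends the loop once the queue is empty, which matches recordInit() checking isRecord only when gQdata has no images left.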

While using FFmpeg I ran into two notable problems:

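1. pix_fmt is -1. The cause: once the encoding format has been set, the encoder has to be opened right away; otherwise calling avcodec_parameters_to_context resets the encoding settings.

The underlying reason is the copy direction: avcodec_parameters_to_context(codecContext, codecParameters) copies from the stream's codecpar into the codec context, so it overwrites pix_fmt with codecpar->format, which was never set and still holds -1 (AV_PIX_FMT_NONE). Opening the encoder first only sidesteps this; for an encoder the intended call is avcodec_parameters_from_context(stream->codecpar, codecContext), made after avcodec_open2().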

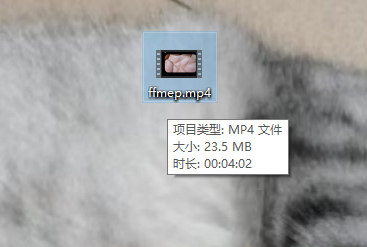

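2. The resulting video contains only one frame.

This is mainly caused by the frame timestamp; adjust it to your own needs:

```cpp
// Set each frame's timestamp in the loop; without it you may get a 0-second video
frame->pts = ((baseTimestamp + (num * (AV_TIME_BASE / 30)))/10);
```

The general rule: pts is counted in codecContext->time_base units and must advance by one frame duration per frame, so with time_base = {1, 25} it is simply the frame index num. Before av_interleaved_write_frame(), each encoded packet also has to be converted to stream->time_base with av_packet_rescale_ts(), because avformat_write_header() may replace the stream time base with one the muxer prefers. Finally, after each avcodec_send_frame() keep calling avcodec_receive_packet() until it returns AVERROR(EAGAIN), and flush with avcodec_send_frame(codecContext, nullptr) before av_write_trailer(); otherwise frames still buffered in the encoder never reach the file.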
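The timestamp bookkeeping can be checked with plain arithmetic. The sketch below uses a hypothetical `Rational` struct in place of FFmpeg's AVRational and a hypothetical `rescaleTs` that mimics the default rounding of av_rescale_q() (nearest, halves away from zero). The stream time base of 1/12800 is an assumed example; the real value is whatever `stream->time_base` holds after avformat_write_header(). For comparison, a pts step of 4000 per frame (what `(AV_TIME_BASE / 25) / 10` works out to) would mean 160 s between frames in a 1/25 time base.

```cpp
#include <cstdint>

// Hypothetical stand-in for FFmpeg's AVRational, to keep the sketch dependency-free.
struct Rational {
    int64_t num;
    int64_t den;
};

// ts * from / to, rounded to nearest with halves away from zero (like av_rescale_q()).
// No overflow protection: fine for the small demo values only.
int64_t rescaleTs(int64_t ts, Rational from, Rational to) {
    const int64_t numer = ts * from.num * to.den;
    const int64_t denom = from.den * to.num;  // assumed > 0
    if (numer >= 0) {
        return (numer + denom / 2) / denom;
    }
    return -((-numer + denom / 2) / denom);
}

// With the encoder time base set to 1/fps, frame n simply gets pts = n.
int64_t framePts(int64_t frameIndex) {
    return frameIndex;
}

// Converts a timestamp to seconds, i.e. when a player will show that frame.
double toSeconds(int64_t ts, Rational timeBase) {
    return static_cast<double>(ts) * timeBase.num / timeBase.den;
}
```

With a codec time base of 1/25 and the assumed stream time base of 1/12800, frame 1 lands at 512 stream ticks and frame 25 at 12800 ticks, exactly 1 s; that per-packet conversion is what av_packet_rescale_ts() does in the real code.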

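Disclaimer: this article was contributed by a user and does not represent the position of the wpsshop blog; copyright belongs to the original author, and the site assumes no legal liability for it. If you find infringing content, please contact us. When reposting, please credit the source: https://www.wpsshop.cn/w/不正经/article/detail/182029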
