Scraping Dianping Reviews

Author: 不正经 | 2024-04-05 22:52:42


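1. Background

The review section of Dianping deserves attention, because reviews are the main thing people visit the site to read. Scraping and analyzing the reviews also helps build a clearer picture of how a shop is positioned.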

2. Scraping Analysis

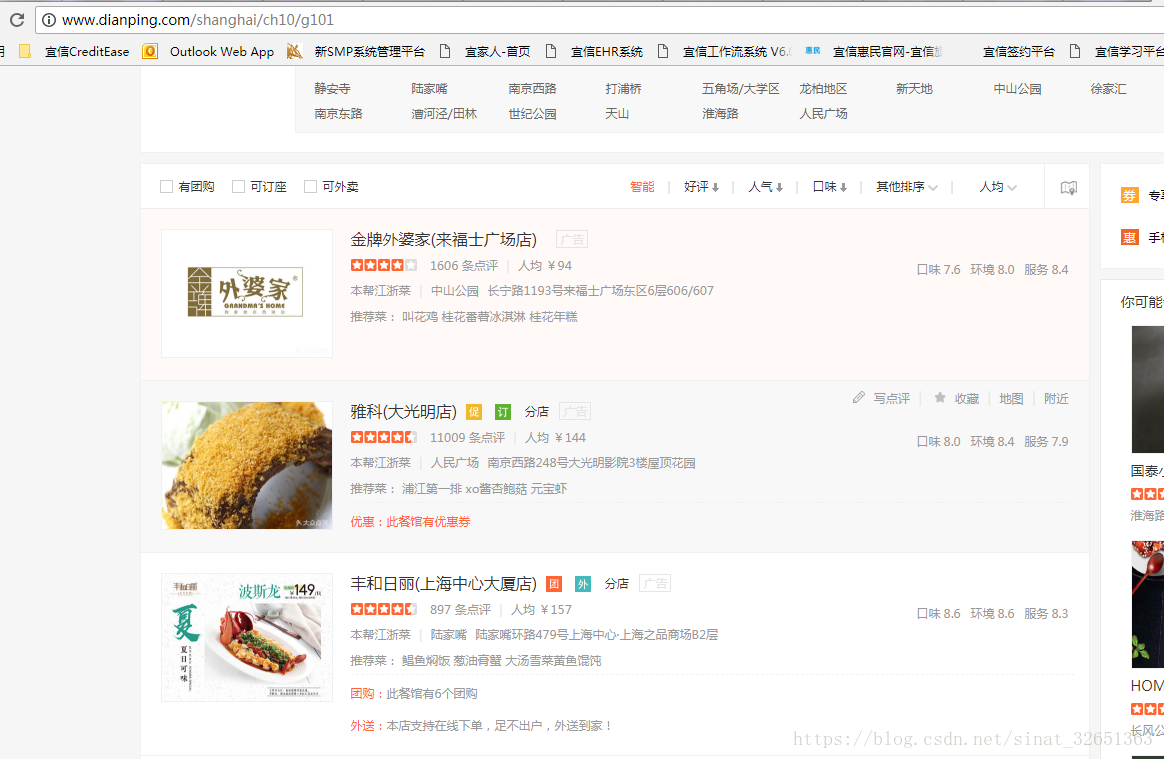

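First, the shop list pages yield a list of shop URLs, or equivalently shop IDs, since a shop's detail page URL is assembled from its ID. For example: http://www.dianping.com/shop/2972056/review_all/p. The first key part is the shop ID (2972056), the second (review_all) selects the review detail page, and the third (p) handles pagination. Note that review_all is a fixed keyword.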
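The URL pattern above can be sketched as a small helper. This is only an illustration: the names `build_review_url` and `review_urls` are hypothetical, and appending the page number directly after `p` (for example `p2`) is an assumption based on the description of the third part as the pagination key.

```python
BASE_URL = "http://www.dianping.com/shop"


def build_review_url(shop_id, page=1):
    """Return the review-list URL for one shop and one page.

    Assumption: the page number is appended directly after "p".
    """
    if page < 1:
        raise ValueError("page must be >= 1")
    return f"{BASE_URL}/{shop_id}/review_all/p{page}"


def review_urls(shop_ids, max_pages):
    """Yield review URLs for every shop ID, page by page."""
    for shop_id in shop_ids:
        for page in range(1, max_pages + 1):
            yield build_review_url(shop_id, page)
```

For example, `build_review_url(2972056, 2)` produces a URL ending in `/shop/2972056/review_all/p2`.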

From the detail page we scrape the user name, the link to the user's profile, and the review the user left for the shop.
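As a rough sketch of how these three fields might be collected, the example below parses a made-up HTML fragment with the standard-library `html.parser`. The markup and the class names `name` and `review-words` are assumptions chosen for illustration, not the site's confirmed structure. The parser is deliberately simple and would need adjusting for nested markup inside the review text.

```python
from dataclasses import dataclass
from html.parser import HTMLParser


@dataclass
class Review:
    user_name: str
    user_url: str
    text: str


class ReviewParser(HTMLParser):
    """Collect (user name, user URL, review text) from hypothetical markup."""

    def __init__(self):
        super().__init__()
        self.reviews = []
        self._current = None  # fields of the review being read
        self._field = None    # field that incoming text belongs to

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        if tag == "a" and "name" in classes:
            # A user link starts a new review record.
            self._current = {"user_name": "", "user_url": attrs.get("href") or "", "text": ""}
            self._field = "user_name"
        elif tag == "div" and "review-words" in classes and self._current is not None:
            self._field = "text"

    def handle_data(self, data):
        if self._field and self._current is not None:
            self._current[self._field] += data

    def handle_endtag(self, tag):
        if self._field == "user_name" and tag == "a":
            self._field = None
        elif self._field == "text" and tag == "div":
            # The review text is complete: store the cleaned record.
            cleaned = {key: value.strip() for key, value in self._current.items()}
            self.reviews.append(Review(**cleaned))
            self._current = None
            self._field = None


def parse_reviews(html):
    parser = ReviewParser()
    parser.feed(html)
    return parser.reviews


# Hypothetical fragment, not copied from the real site.
SAMPLE = (
    '<div class="review-item">'
    '<a class="name" href="/member/123"> Alice </a>'
    '<div class="review-words"> Great noodles. </div>'
    '</div>'
)
```

Calling `parse_reviews(SAMPLE)` returns a list with one `Review` holding the name, the profile link, and the review text.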

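3. Data Scraping

Much of this site's data is delivered through Ajax requests, but I could not find one that carries the review data, so the reviews are scraped by requesting the page directly. The site is also picky about request headers: the user-agent is handled in an unusual way, and the request must not carry cookie or Referer information, otherwise nothing is returned. When crawling a large number of pages, however, the Referer has to be changed on every iteration to avoid triggering the anti-scraping measures.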

Code:
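The following is a minimal sketch, not the author's original listing, of one way to read the header rules described above: send no cookie, omit the Referer on the first request, and on later pages set the Referer to the previously requested page so that it changes on every iteration. The user-agent string is a placeholder, the helper names (`build_headers`, `page_plan`, `fetch`) are hypothetical, and the Referer scheme and the `p{page}` URL suffix are assumptions.

```python
import urllib.request

# Placeholder value; the user-agent the site actually accepts is not given here.
USER_AGENT = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"


def build_headers(referer=None):
    """Build request headers with no cookie; Referer only when given."""
    headers = {"User-Agent": USER_AGENT}
    if referer is not None:
        headers["Referer"] = referer
    return headers


def page_plan(shop_id, max_pages):
    """Yield (url, headers) pairs, changing the Referer on every page."""
    previous_url = None
    for page in range(1, max_pages + 1):
        url = f"http://www.dianping.com/shop/{shop_id}/review_all/p{page}"
        yield url, build_headers(previous_url)
        previous_url = url  # assumption: the next page refers back to this one


def fetch(url, headers, timeout=10):
    """Download one page and return its decoded HTML."""
    request = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

A crawl loop would then iterate over `page_plan(shop_id, max_pages)` and pass each pair to `fetch`, ideally with a pause between requests.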

4. Full Code

The full code is available on GitHub: https://github.com/Liangchengdeye/DaZhongdianping.git

5. Parsing and Scraping the Encrypted Fonts

New post:

Scraping Dianping reviews, with full capture of the encrypted review text: https://blog.csdn.net/sinat_32651363/article/details/85123876

