
Deploying and installing Ollama on an Ubuntu 22.04 server, and using it with Open WebUI

Author: 人工智能uu | 2024-08-08 14:10:22


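I. Basic Ubuntu and Docker environment setup

1. Update the package list and upgrade installed packages:

- Open a terminal and run the following commands:

sudo apt-get update

sudo apt upgrade

The upgrade can take a while, so please be patient.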

2. Install the packages Docker depends on

sudo apt-get install ca-certificates curl gnupg lsb-release

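3. Add the Docker GPG key

The key is fetched over HTTPS so that it cannot be tampered with in transit. Note that apt-key is deprecated on Ubuntu 22.04; it still works but prints a warning.

curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -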

4. Add the Aliyun Docker package repository

sudo add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"
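Because apt-key is deprecated, steps 3 and 4 can also be done with a dedicated keyring file and a signed-by entry, the same pattern the NVIDIA repository uses later in this article. The following is only a sketch under assumptions: the keyring path /etc/apt/keyrings/docker.gpg, the list file name docker.list, and both function names are choices made for this example, not something the article specifies.

```shell
# Sketch: keyring-based alternative to `apt-key add` for the Aliyun Docker mirror.
# KEYRING and the function names are assumptions made for this example.
MIRROR="https://mirrors.aliyun.com/docker-ce/linux/ubuntu"
KEYRING="/etc/apt/keyrings/docker.gpg"

# Print the apt source line for an architecture ($1) and Ubuntu codename ($2).
docker_repo_line() {
  echo "deb [arch=$1 signed-by=${KEYRING}] ${MIRROR} $2 stable"
}

# Install the key and the source list. Needs sudo rights and network access.
install_docker_repo() {
  sudo install -m 0755 -d "$(dirname "$KEYRING")"
  curl -fsSL "${MIRROR}/gpg" | sudo gpg --dearmor -o "$KEYRING"
  docker_repo_line "$(dpkg --print-architecture)" "$(lsb_release -cs)" \
    | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
  sudo apt-get update
}

# Usage (on the server): install_docker_repo
```

With this variant the key is trusted only for the Docker repository rather than for every configured source.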

5. Install Docker

sudo apt-get install docker-ce docker-ce-cli containerd.io

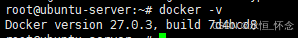

6. Verify the Docker installation

docker -v

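7. Configure domestic (China) registry mirrors for Docker

- 7.1 Edit the configuration file

sudo vi /etc/docker/daemon.json

Press i to enter insert mode.

Add the following content: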

{
  "registry-mirrors": [
    "https://docker.mirrors.ustc.edu.cn",
    "https://hub-mirror.c.163.com",
    "https://docker.m.daocloud.io",
    "https://ghcr.io",
    "https://mirror.baidubce.com",
    "https://docker.nju.edu.cn"
  ]
}

Press ESC to leave insert mode, then type :wq to save and exit.

- 7.2 Reload the daemon configuration

sudo systemctl daemon-reload

- 7.3 Restart Docker

sudo systemctl restart docker
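A syntax error in daemon.json stops Docker from starting, so it can help to write the file non-interactively and check that it parses before restarting. The sketch below assumes python3 is installed (it is only used as a JSON checker); the function names are made up for this example, and the two mirrors are simply taken from the list above.

```shell
# Sketch: write daemon.json without an editor and validate it before restarting Docker.
# Function names are hypothetical; python3 is assumed to be available.

# Write a registry-mirrors config to the given path (default: the real Docker path).
write_daemon_json() {
  target="${1:-/etc/docker/daemon.json}"
  cat > "$target" <<'EOF'
{
  "registry-mirrors": [
    "https://docker.m.daocloud.io",
    "https://docker.nju.edu.cn"
  ]
}
EOF
}

# Succeed only if the file is valid JSON.
validate_json() {
  python3 -m json.tool "$1" > /dev/null 2>&1
}

# Usage (as root):
#   write_daemon_json /etc/docker/daemon.json \
#     && validate_json /etc/docker/daemon.json \
#     && systemctl restart docker
```

After the restart, docker info lists the configured mirrors under "Registry Mirrors".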

II. Install NVIDIA GPU support (NVIDIA Container Toolkit)

The steps below install the NVIDIA Container Toolkit, which lets Docker containers use the GPU. The NVIDIA driver itself must already be installed on the host.

1. Install with Apt

- Configure the repository

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey \
| sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg

curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list \
| sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' \
| sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

- Update the package list

sudo apt-get update

- Install the NVIDIA Container Toolkit package

sudo apt-get install -y nvidia-container-toolkit

- Configure Docker to use the NVIDIA runtime

sudo nvidia-ctk runtime configure --runtime=docker

- Restart Docker

sudo systemctl restart docker
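Before moving on, it is worth confirming that a container can actually see the GPU. A minimal sketch, assuming the ubuntu image can be pulled from your registry or mirror; the DOCKER variable and the function name are conveniences introduced for this example so the command can be printed instead of executed.

```shell
# Sketch: check that containers can see the GPU after the toolkit is configured.
# Set DOCKER=echo to print the command instead of running it (dry run).
DOCKER="${DOCKER:-docker}"

# Run nvidia-smi inside a throwaway Ubuntu container with all GPUs attached.
gpu_smoke_test() {
  $DOCKER run --rm --gpus all ubuntu nvidia-smi
}

# Usage (on the server): gpu_smoke_test
```

If the toolkit is set up correctly, the container prints the same GPU table that nvidia-smi shows on the host.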

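III. Install Ollama with Docker

1. Pull the Ollama image with Docker

docker pull ollama/ollama:latest

Domestic mirror, for when Docker Hub is slow or unreachable:

docker pull dhub.kubesre.xyz/ollama/ollama:latest

An image pulled this way is named dhub.kubesre.xyz/ollama/ollama, so either use that name in the docker run command or retag it with docker tag dhub.kubesre.xyz/ollama/ollama:latest ollama/ollama:latest.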

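2. Start the Ollama container with the following command

The host directory /root/project/docker/ollama is mounted at /root/.ollama, where Ollama stores downloaded models inside the container, so the models survive container restarts.

docker run -d --gpus=all --restart=always -v /root/project/docker/ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama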

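Keeping the model loaded in memory (VRAM)

- Reference article: "How to keep an Ollama model loaded in memory (VRAM) or unload it immediately"

- To keep models loaded indefinitely, start the container with OLLAMA_KEEP_ALIVE=-1 instead of the command above. If a container named ollama already exists, remove it first with docker rm -f ollama.

docker run -d --gpus=all -e OLLAMA_KEEP_ALIVE=-1 -v /root/project/docker/ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama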
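Once the container is started, the Ollama HTTP API should answer on the published port 11434. The sketch below polls it until it responds; the OLLAMA_URL variable and both function names are hypothetical helpers for this example, and curl is assumed to be installed.

```shell
# Sketch: wait until the Ollama API answers on the published port.
# OLLAMA_URL and the function names are assumptions made for this example.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Build the URL of an API path, e.g. ollama_endpoint /api/tags
ollama_endpoint() {
  echo "${OLLAMA_URL}$1"
}

# Poll the root endpoint up to $1 times (default 10), one second apart.
wait_for_ollama() {
  tries="${1:-10}"
  while [ "$tries" -gt 0 ]; do
    if curl -fsS "$(ollama_endpoint /)" > /dev/null 2>&1; then
      return 0
    fi
    tries=$((tries - 1))
    sleep 1
  done
  return 1
}

# Usage: wait_for_ollama 30 && curl -s "$(ollama_endpoint /api/tags)"
```

The /api/tags endpoint returns the locally available models as JSON, so an empty list right after installation is expected.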

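3. Download a model with Ollama

- This example downloads Alibaba's Tongyi Qianwen model (qwen2). On first use, ollama run pulls the model and then opens an interactive chat prompt.

docker exec -it ollama ollama run qwen2

- When it finishes, the command output looks as shown in the screenshot.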

Model library

| Model | Parameters | Size | Download command |
|---|---|---|---|
| Llama 2 | 7B | 3.8GB | docker exec -it ollama ollama run llama2 |
| Mistral | 7B | 4.1GB | docker exec -it ollama ollama run mistral |
| Dolphin Phi | 2.7B | 1.6GB | docker exec -it ollama ollama run dolphin-phi |
| Phi-2 | 2.7B | 1.7GB | docker exec -it ollama ollama run phi |
| Neural Chat | 7B | 4.1GB | docker exec -it ollama ollama run neural-chat |
| Starling | 7B | 4.1GB | docker exec -it ollama ollama run starling-lm |
| Code Llama | 7B | 3.8GB | docker exec -it ollama ollama run codellama |
| Llama 2 Uncensored | 7B | 3.8GB | docker exec -it ollama ollama run llama2-uncensored |
| Llama 2 | 13B | 7.3GB | docker exec -it ollama ollama run llama2:13b |
| Llama 2 | 70B | 39GB | docker exec -it ollama ollama run llama2:70b |
| Orca Mini | 3B | 1.9GB | docker exec -it ollama ollama run orca-mini |
| Vicuna | 7B | 3.8GB | docker exec -it ollama ollama run vicuna |
| LLaVA | 7B | 4.5GB | docker exec -it ollama ollama run llava |
| Gemma | 2B | 1.4GB | docker exec -it ollama ollama run gemma:2b |
| Gemma | 7B | 4.8GB | docker exec -it ollama ollama run gemma:7b |

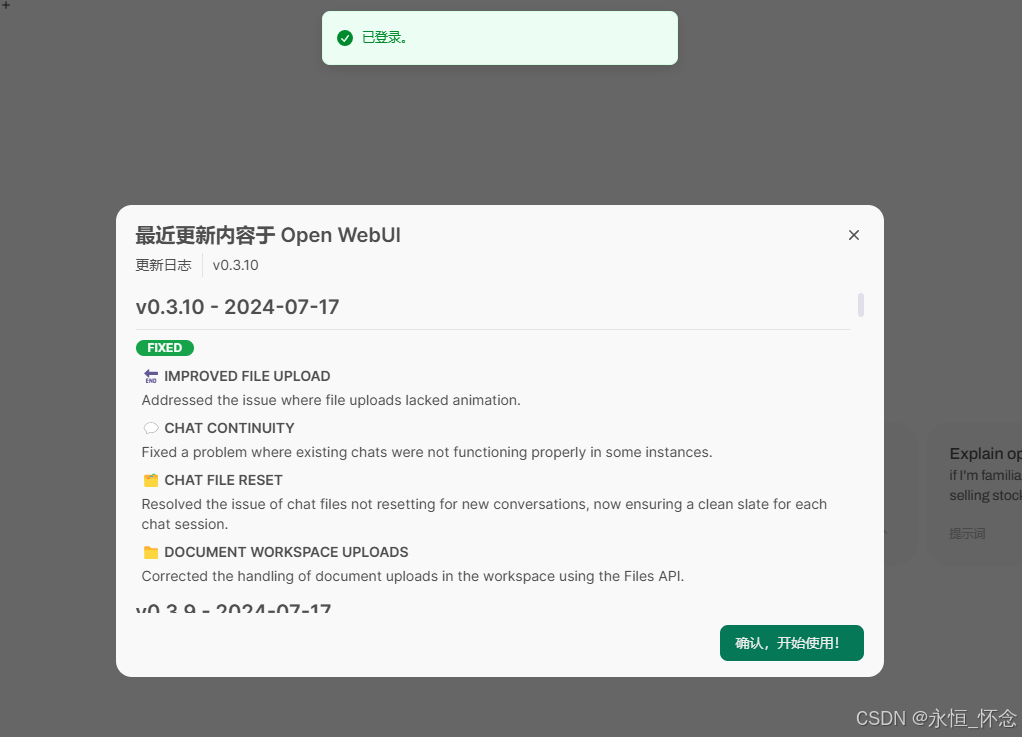
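Besides the interactive prompt, a downloaded model can be queried over the HTTP API, which is also how Open WebUI talks to Ollama. The following sketch sends one non-streaming request to /api/generate; the function names are invented for this example, and the payload builder does no JSON escaping, so it only suits simple prompts without quotes or backslashes.

```shell
# Sketch: send a single prompt to a model through the Ollama HTTP API.
# Function names are hypothetical; the prompt must not contain quotes or backslashes.

# Build the JSON body for POST /api/generate from a model name ($1) and a prompt ($2).
generate_payload() {
  printf '{"model": "%s", "prompt": "%s", "stream": false}\n' "$1" "$2"
}

# Post the request to the Ollama server published on localhost:11434.
ask_ollama() {
  curl -s http://localhost:11434/api/generate -d "$(generate_payload "$1" "$2")"
}

# Usage (after the model has been downloaded):
#   ask_ollama qwen2 "Why is the sky blue?"
```

To download a model without opening a chat session, docker exec ollama ollama pull qwen2 can be used instead of ollama run, and docker exec ollama ollama list shows what is already present.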

IV. Install Open WebUI with Docker

1. Deploy Open WebUI with Docker

Check which ports your server exposes for services; ours are 30131-30140, so host port 30131 is mapped to port 8080 in the container. Run only one of the two commands below, since both use the container name open-webui.

main version

docker run -d -p 30131:8080 --gpus all --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.nju.edu.cn/open-webui/open-webui:main

cuda version

docker run -d -p 30131:8080 --gpus all --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.nju.edu.cn/open-webui/open-webui:cuda
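The commands above do not say where Open WebUI should look for Ollama. One way to make that explicit is the OLLAMA_BASE_URL environment variable. This is a sketch rather than the author's setup: the function name, its two parameters, and the DOCKER dry-run variable are assumptions for this example, while the image, volume, and flags are copied from the main-version command.

```shell
# Sketch: start Open WebUI with an explicit Ollama address.
# Set DOCKER=echo to print the command instead of running it (dry run).
DOCKER="${DOCKER:-docker}"

# $1: host port to publish (default 30131)
# $2: Ollama base URL as seen from inside the container
start_open_webui() {
  host_port="${1:-30131}"
  ollama_url="${2:-http://host.docker.internal:11434}"
  $DOCKER run -d -p "${host_port}:8080" --gpus all \
    --add-host=host.docker.internal:host-gateway \
    -e OLLAMA_BASE_URL="${ollama_url}" \
    -v open-webui:/app/backend/data \
    --name open-webui --restart always \
    ghcr.nju.edu.cn/open-webui/open-webui:main
}

# Usage (on the server): start_open_webui 30131
```

Because of the --add-host flag, host.docker.internal resolves to the Docker host, where the Ollama container publishes port 11434.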

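- Once the container is running, Open WebUI can be reached from another computer at http://<server-ip>:30131.

2. Register an account

- By default, the first account registered becomes the administrator.

3. Successful login

4. Chat interface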
