
[Resolved] Sharing files on a single node in Kubernetes using hostPath_0/1 nodes are available: 1 pod has unbound immedia


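Introduction: I have a requirement where a key file must be mounted into several containers, updated from code, and the affected containers restarted afterwards. Back when the file never changed and no restart was needed, a ConfigMap did the job. Now that several containers must share one file that also gets updated, a ConfigMap no longer works, so I looked at using a PV and a PVC.

With the PV and PVC in place, I kept getting the following errors: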

Warning FailedScheduling 28s default-scheduler 0/1 nodes are available: 1 pod has unbound immediate

1 node(s) didn’t find available persistent volumes to bind.

operation cannot be fulfilled on persistentvolumeclaims

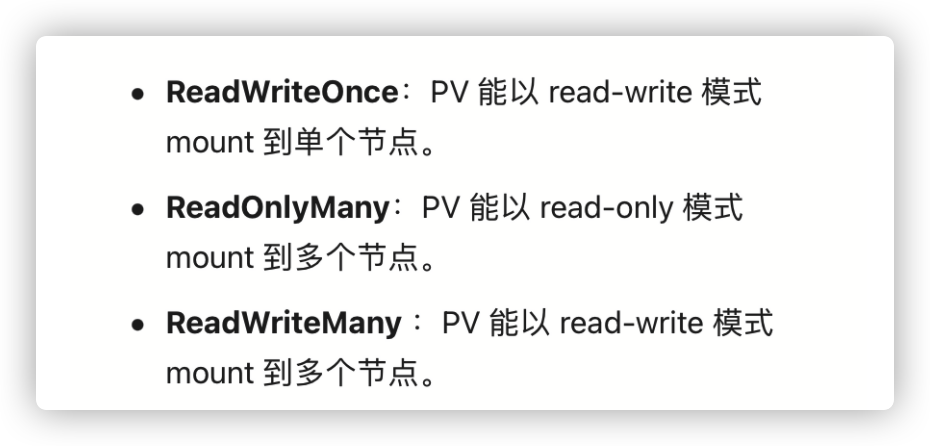

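At first I thought ReadWriteOnce was the cause, but the official documentation shows that it is not. The documentation states explicitly that multiple pods can access the volume when they run on the same node. ReadWriteOnce means the volume can be mounted read-write by a single cluster node only, so the distinction is between a single node and multiple nodes, not between pods.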
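For reference, the access modes that a volume or claim can declare look roughly like the fragment below. It is only an illustration and not part of the manifests used in this post; not every volume type supports every mode.

```yaml
# Illustrative fragment only: the accessModes field of a PV or PVC spec.
spec:
  accessModes:
    - ReadWriteOnce        # read-write by a single node; pods on that node can share it
    # - ReadOnlyMany       # read-only by many nodes
    # - ReadWriteMany      # read-write by many nodes
    # - ReadWriteOncePod   # read-write by a single pod (newer releases, CSI volumes)
```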

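Later I found a way: back the PersistentVolume with hostPath and give the PV and PVC the same storage class name.

The requirement: container A has two files, a and b. File a must be shared with file a in container B, and file b must be shared with file b in container C.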

```shell
# Create the /data2 directory
mkdir /data2

# Copy the corresponding config files into /data2; if you have none, echo works
echo a > /data2/sign.conf
echo b > /data2/path.conf
```


Create the first set of manifests, used for file a.

pv-claim.yaml

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: task-pv-claim
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 3Gi
```


pv-volume.yaml

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: task-pv-volume
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/data2"
```


Create the second set of manifests, used for file b.

pv-claim2.yaml

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: lung-pv-claim
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```


pv-volume2.yaml

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: lung-pv-volume
  labels:
    type: lung
spec:
  storageClassName: manual
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/data2"
```
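Both PersistentVolumes above share the same storageClassName, access mode, and capacity, so either claim may end up bound to either volume. Since both point at /data2, that makes no practical difference here. If a specific pairing matters, a claim can name its volume through `spec.volumeName`. The following is a sketch that reuses the names from the manifests above; the `volumeName` line is an addition and was not part of the original setup.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: lung-pv-claim
spec:
  storageClassName: manual
  volumeName: lung-pv-volume   # assumption: pin this claim to the PV defined above
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```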


Apply the YAML manifests above:

```shell
kubectl apply -f ./
```

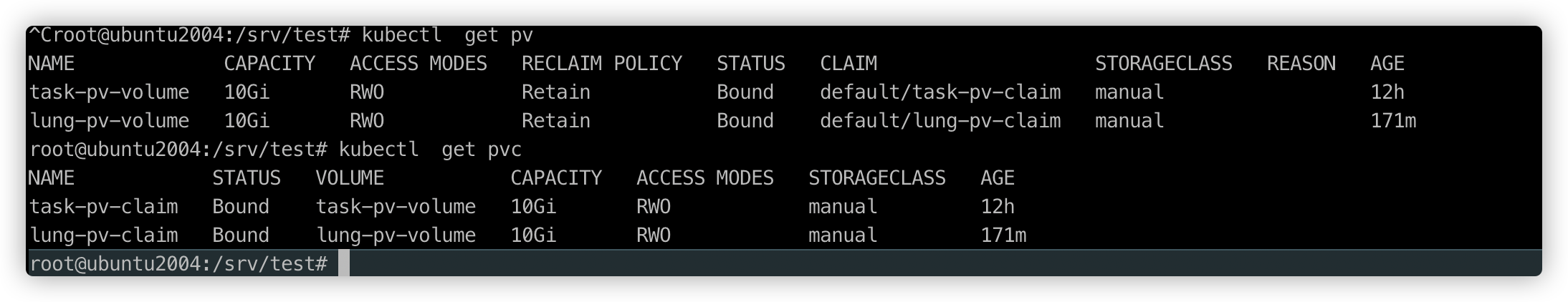

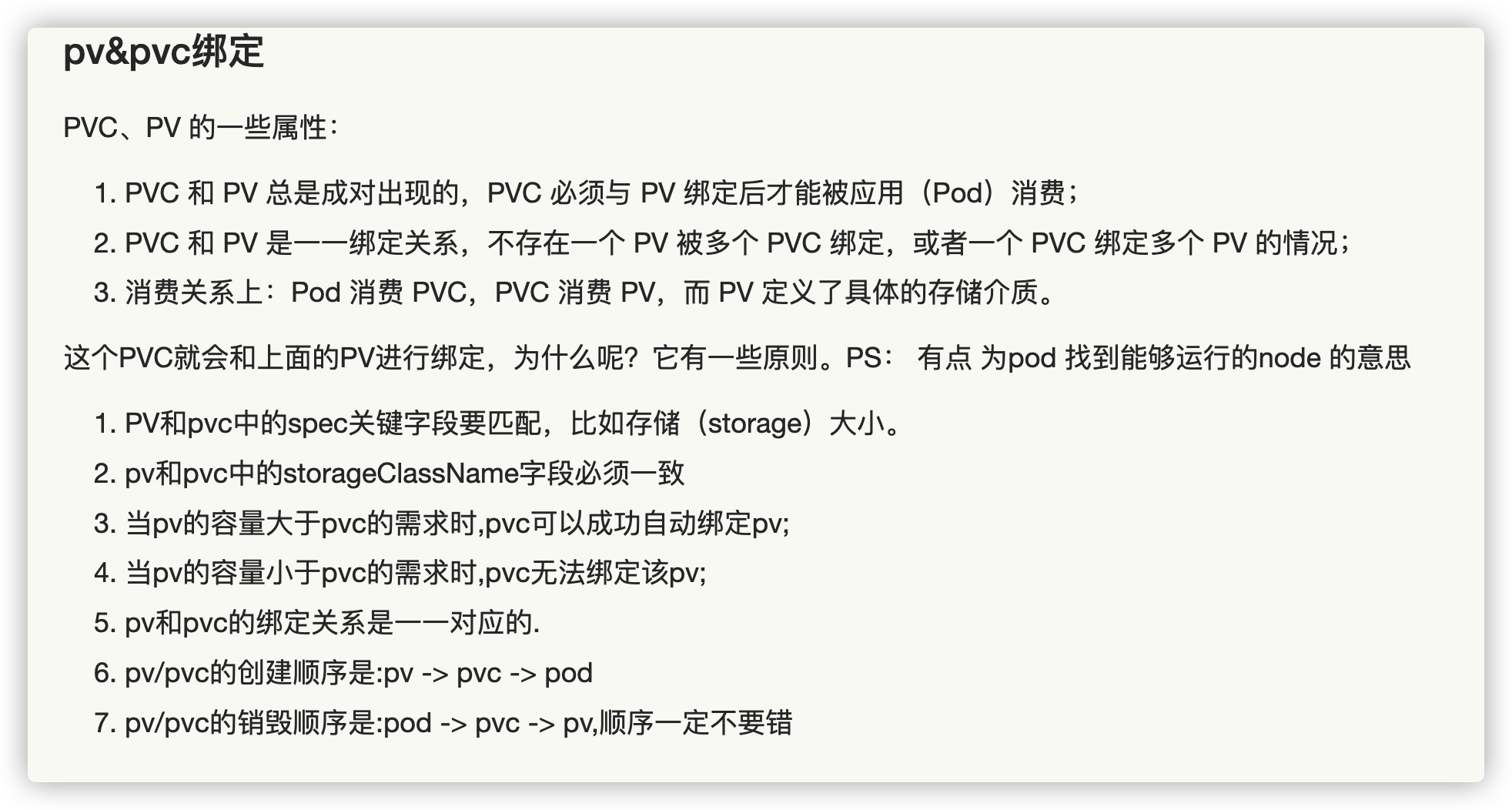

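Screenshots of the PVs and PVCs. While things were broken, the PVCs all seemed to be stuck in the Pending state. That violated the rule that a PVC and a PV always come as a pair: a PVC must be bound to a PV before an application (Pod) can consume it.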

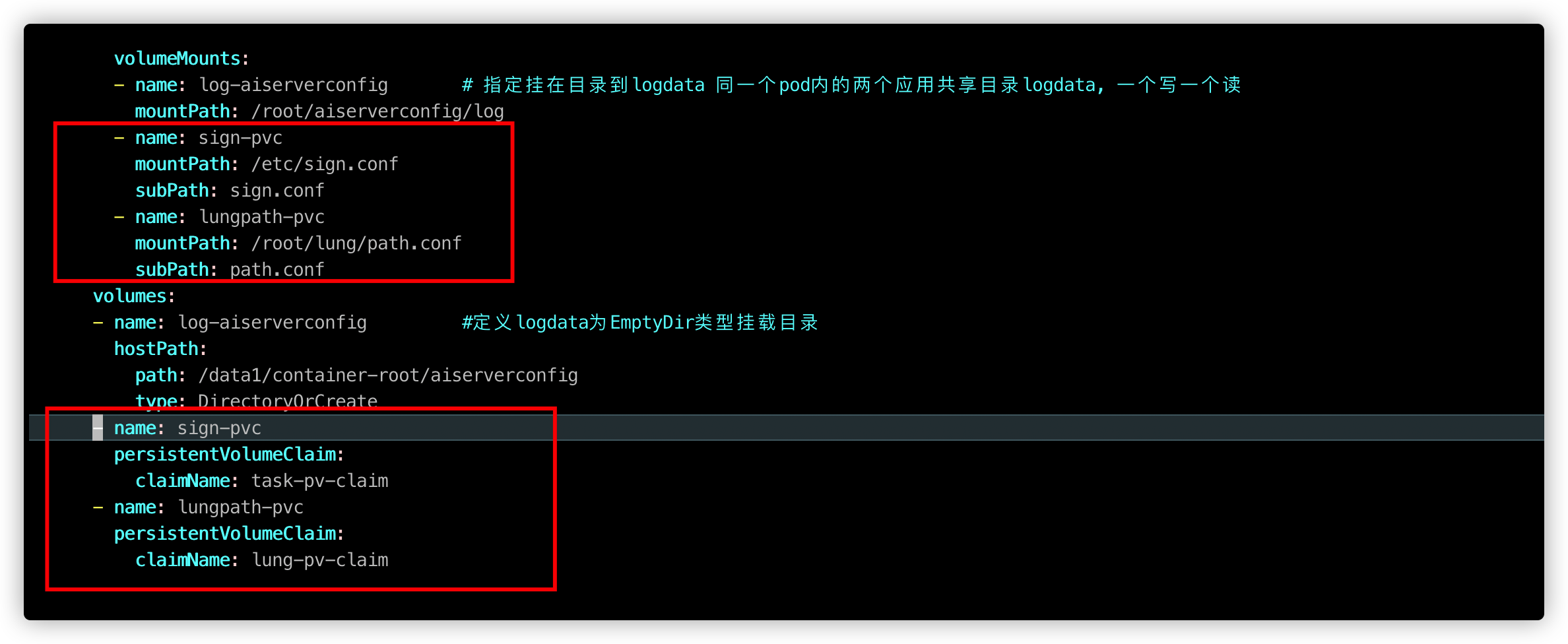

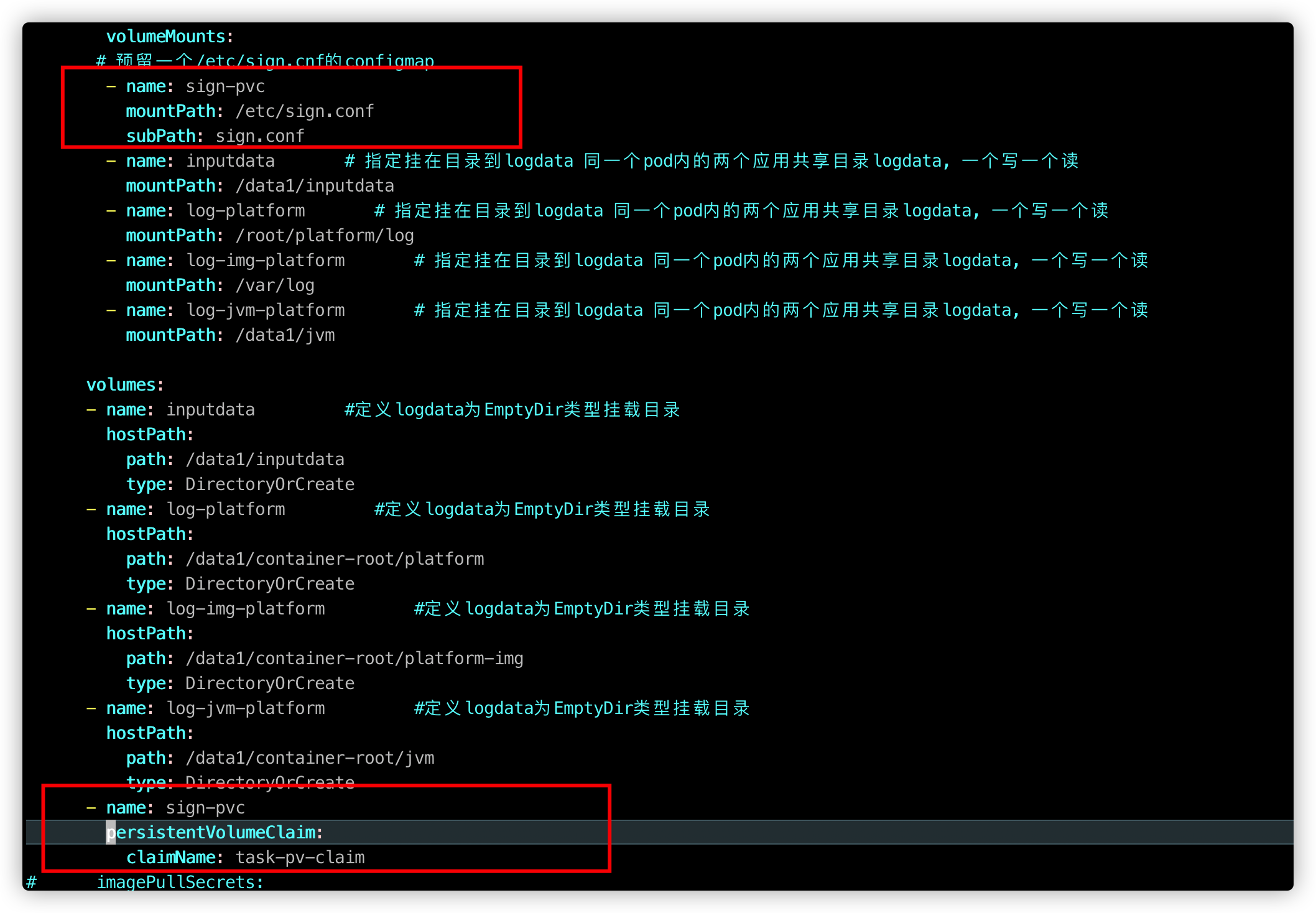

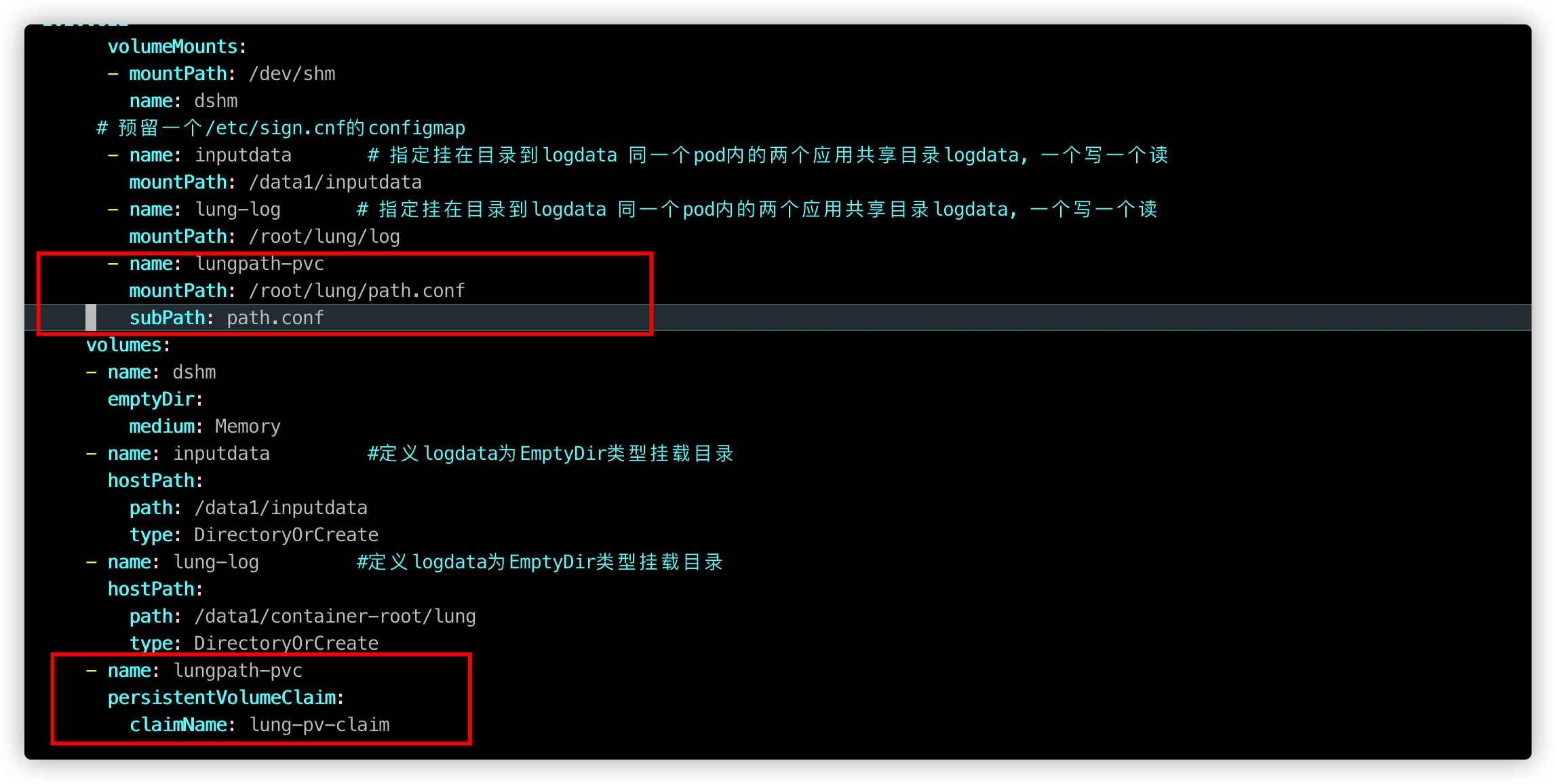

Add the corresponding mount configuration to the Deployment file:

```yaml
# Fragment of the Deployment's pod template; surrounding fields omitted.
        volumeMounts:
        - name: sign-pvc
          mountPath: /etc/sign.conf
          subPath: sign.conf
        - name: lungpath-pvc
          mountPath: /root/lung/path.conf
          subPath: path.conf
      volumes:
      - name: sign-pvc
        persistentVolumeClaim:
          claimName: task-pv-claim
      - name: lungpath-pvc
        persistentVolumeClaim:
          claimName: lung-pv-claim
```


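The configuration was copied from elsewhere, and its comments were not updated.

The other two pods are configured in a similar way.

Creating the Deployment finally stopped producing errors.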

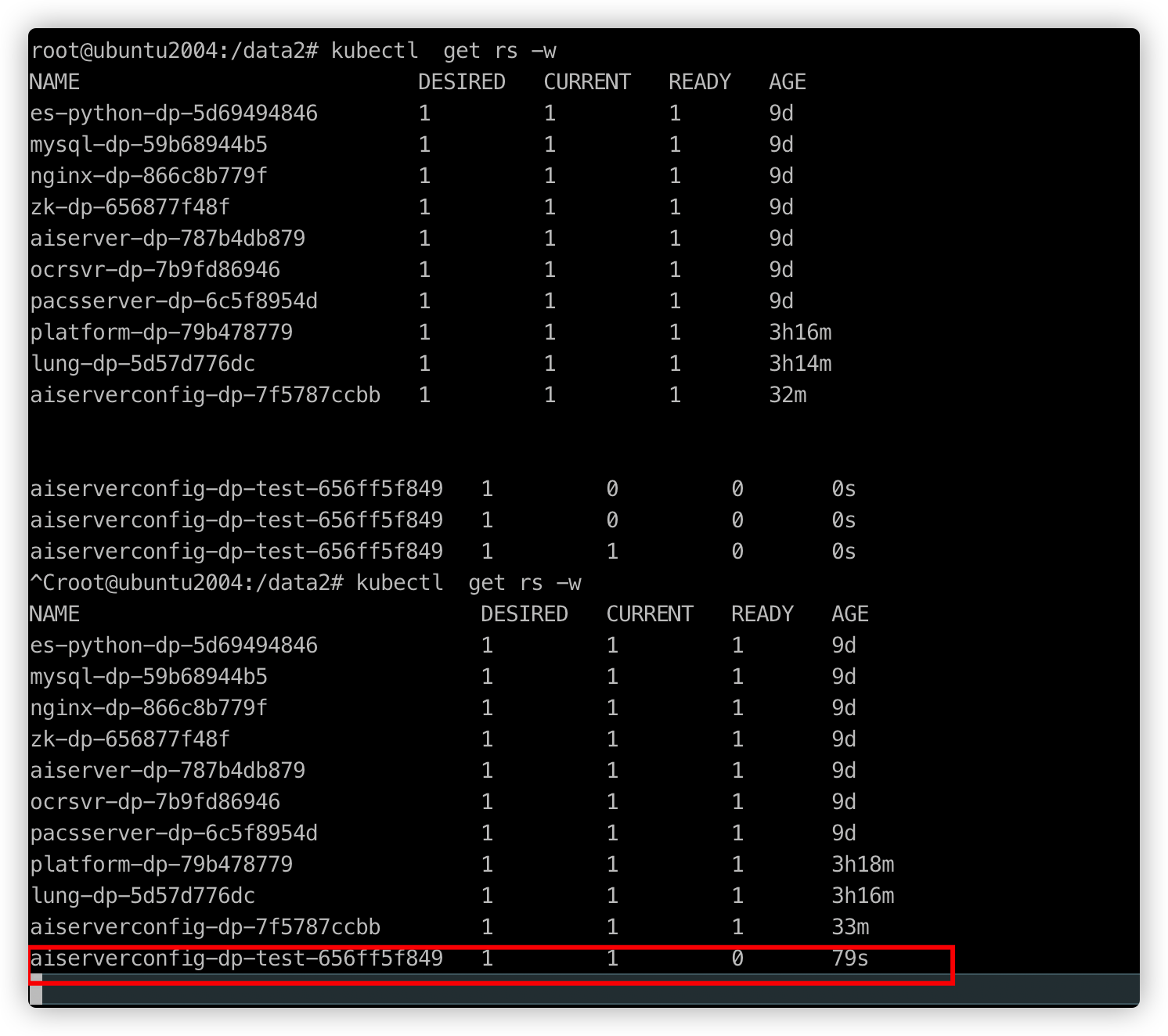

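A pitfall I ran into during testing: I tried having both files, a and b, use the same PVC within one pod, that is, two volumes entries with the same claimName. This fails. The symptom is that the pod stays in the Pending state and the corresponding ReplicaSet is not ready. The error looks somewhat like the one described here: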

https://www.lmlphp.com/user/151313/article/item/3657524/

```yaml
# Fragment of the Deployment's pod template; surrounding fields omitted.
        volumeMounts:
        - name: sign-pvc
          mountPath: /etc/sign.conf
          subPath: sign.conf
        - name: lungpath-pvc
          mountPath: /root/lung/path.conf
          subPath: path.conf
      volumes:
      - name: sign-pvc
        persistentVolumeClaim:
          claimName: task-pv-claim
      - name: lungpath-pvc
        persistentVolumeClaim:
          claimName: task-pv-claim
```


Reproducing the problem

Pod status

ReplicaSet status

Here is the corresponding k3s log output:

```text
Apr 16 13:31:01 ubuntu2004 k3s[1468]: E0416 13:31:01.411275 1468 kubelet.go:1730] "Unable to attach or mount volumes for pod; skipping pod" err="unmounted volumes=[sign-pvc], unattached volumes=[kube-api-access-9cr49 log-aiserverconfig sign-pvc lungpath-pvc]: timed out waiting for the condition" pod="default/aiserverconfig-dp-test-656ff5f849-xsvtd"
Apr 16 13:31:01 ubuntu2004 k3s[1468]: E0416 13:31:01.411353 1468 pod_workers.go:918] "Error syncing pod, skipping" err="unmounted volumes=[sign-pvc], unattached volumes=[kube-api-access-9cr49 log-aiserverconfig sign-pvc lungpath-pvc]: timed out waiting for the condition" pod="default/aiserverconfig-dp-test-656ff5f849-xsvtd" podUID=10031c70-d06b-4b80-8482-7a76c87ba7df
Apr 16 13:31:02 ubuntu2004 k3s[1468]: I0416 13:31:02.228026 1468 reconciler.go:192] "operationExecutor.UnmountVolume started for volume \"lungpath-pvc\" (UniqueName: \"kubernetes.io/host-path/10031c70-d06b-4b80-8482-7a76c87ba7df-task-pv-volume\") pod \"10031c70-d06b-4b80-8482-7a76c87ba7df\" (UID: \"10031c70-d06b-4b80-8482-7a76c87ba7df\") "
Apr 16 13:31:02 ubuntu2004 k3s[1468]: I0416 13:31:02.228057 1468 reconciler.go:192] "operationExecutor.UnmountVolume started for volume \"log-aiserverconfig\" (UniqueName: \"kubernetes.io/host-path/10031c70-d06b-4b80-8482-7a76c87ba7df-log-aiserverconfig\") pod \"10031c70-d06b-4b80-8482-7a76c87ba7df\" (UID: \"10031c70-d06b-4b80-8482-7a76c87ba7df\") "
Apr 16 13:31:02 ubuntu2004 k3s[1468]: I0416 13:31:02.228080 1468 reconciler.go:192] "operationExecutor.UnmountVolume started for volume \"kube-api-access-9cr49\" (UniqueName: \"kubernetes.io/projected/10031c70-d06b-4b80-8482-7a76c87ba7df-kube-api-access-9cr49\") pod \"10031c70-d06b-4b80-8482-7a76c87ba7df\" (UID: \"10031c70-d06b-4b80-8482-7a76c87ba7df\") "
Apr 16 13:31:02 ubuntu2004 k3s[1468]: I0416 13:31:02.228137 1468 operation_generator.go:909] UnmountVolume.TearDown succeeded for volume "kubernetes.io/host-path/10031c70-d06b-4b80-8482-7a76c87ba7df-task-pv-volume" (OuterVolumeSpecName: "lungpath-pvc") pod "10031c70-d06b-4b80-8482-7a76c87ba7df" (UID: "10031c70-d06b-4b80-8482-7a76c87ba7df"). InnerVolumeSpecName "task-pv-volume". PluginName "kubernetes.io/host-path", VolumeGidValue ""
Apr 16 13:31:02 ubuntu2004 k3s[1468]: I0416 13:31:02.228154 1468 operation_generator.go:909] UnmountVolume.TearDown succeeded for volume "kubernetes.io/host-path/10031c70-d06b-4b80-8482-7a76c87ba7df-log-aiserverconfig" (OuterVolumeSpecName: "log-aiserverconfig") pod "10031c70-d06b-4b80-8482-7a76c87ba7df" (UID: "10031c70-d06b-4b80-8482-7a76c87ba7df"). InnerVolumeSpecName "log-aiserverconfig". PluginName "kubernetes.io/host-path", VolumeGidValue ""
Apr 16 13:31:02 ubuntu2004 k3s[1468]: I0416 13:31:02.229023 1468 operation_generator.go:909] UnmountVolume.TearDown succeeded for volume "kubernetes.io/projected/10031c70-d06b-4b80-8482-7a76c87ba7df-kube-api-access-9cr49" (OuterVolumeSpecName: "kube-api-access-9cr49") pod "10031c70-d06b-4b80-8482-7a76c87ba7df" (UID: "10031c70-d06b-4b80-8482-7a76c87ba7df"). InnerVolumeSpecName "kube-api-access-9cr49". PluginName "kubernetes.io/projected", VolumeGidValue ""
Apr 16 13:31:02 ubuntu2004 k3s[1468]: I0416 13:31:02.328733 1468 reconciler.go:295] "Volume detached for volume \"task-pv-volume\" (UniqueName: \"kubernetes.io/host-path/10031c70-d06b-4b80-8482-7a76c87ba7df-task-pv-volume\") on node \"ubuntu2004\" DevicePath \"\""
Apr 16 13:31:02 ubuntu2004 k3s[1468]: I0416 13:31:02.328824 1468 reconciler.go:295] "Volume detached for volume \"log-aiserverconfig\" (UniqueName: \"kubernetes.io/host-path/10031c70-d06b-4b80-8482-7a76c87ba7df-log-aiserverconfig\") on node \"ubuntu2004\" DevicePath \"\""
Apr 16 13:31:02 ubuntu2004 k3s[1468]: I0416 13:31:02.328861 1468 reconciler.go:295] "Volume detached for volume \"kube-api-access-9cr49\" (UniqueName: \"kubernetes.io/projected/10031c70-d06b-4b80-8482-7a76c87ba7df-kube-api-access-9cr49\") on node \"ubuntu2004\" DevicePath \"\""
Apr 16 13:31:03 ubuntu2004 k3s[1468]: I0416 13:31:03.417384 1468 kubelet_volumes.go:160] "Cleaned up orphaned pod volumes dir" podUID=10031c70-d06b-4b80-8482-7a76c87ba7df path="/var/lib/kubelet/pods/10031c70-d06b-4b80-8482-7a76c87ba7df/volumes"
Apr 16 13:31:27 ubuntu2004 k3s[1468]: I0416 13:31:27.704233 1468 event.go:294] "Event occurred" object="default/aiserverconfig-dp-test" kind="Deployment" apiVersion="apps/v1" type="Normal" reason="ScalingReplicaSet" message="Scaled up replica set aiserverconfig-dp-test-656ff5f849 to 1"
Apr 16 13:31:27 ubuntu2004 k3s[1468]: I0416 13:31:27.707811 1468 event.go:294] "Event occurred" object="default/aiserverconfig-dp-test-656ff5f849" kind="ReplicaSet" apiVersion="apps/v1" type="Normal" reason="SuccessfulCreate" message="Created pod: aiserverconfig-dp-test-656ff5f849-npjcm"
Apr 16 13:31:27 ubuntu2004 k3s[1468]: I0416 13:31:27.710682 1468 topology_manager.go:200] "Topology Admit Handler"
Apr 16 13:31:27 ubuntu2004 k3s[1468]: I0416 13:31:27.808484 1468 reconciler.go:216] "operationExecutor.VerifyControllerAttachedVolume started for volume \"log-aiserverconfig\" (UniqueName: \"kubernetes.io/host-path/af3bc87d-392a-4890-92aa-19fcdb8a7895-log-aiserverconfig\") pod \"aiserverconfig-dp-test-656ff5f849-npjcm\" (UID: \"af3bc87d-392a-4890-92aa-19fcdb8a7895\") " pod="default/aiserverconfig-dp-test-656ff5f849-npjcm"
Apr 16 13:31:27 ubuntu2004 k3s[1468]: I0416 13:31:27.808551 1468 reconciler.go:216] "operationExecutor.VerifyControllerAttachedVolume started for volume \"task-pv-volume\" (UniqueName: \"kubernetes.io/host-path/af3bc87d-392a-4890-92aa-19fcdb8a7895-task-pv-volume\") pod \"aiserverconfig-dp-test-656ff5f849-npjcm\" (UID: \"af3bc87d-392a-4890-92aa-19fcdb8a7895\") " pod="default/aiserverconfig-dp-test-656ff5f849-npjcm"
Apr 16 13:31:27 ubuntu2004 k3s[1468]: I0416 13:31:27.909963 1468 reconciler.go:216] "operationExecutor.VerifyControllerAttachedVolume started for volume \"kube-api-access-nt5w9\" (UniqueName: \"kubernetes.io/projected/af3bc87d-392a-4890-92aa-19fcdb8a7895-kube-api-access-nt5w9\") pod \"aiserverconfig-dp-test-656ff5f849-npjcm\" (UID: \"af3bc87d-392a-4890-92aa-19fcdb8a7895\") " pod="default/aiserverconfig-dp-test-656ff5f849-npjcm"
```


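I suspect that the same persistentVolumeClaim cannot be referenced twice within a single pod. The log shows both teardowns and timeouts, so did mounting the second volume unmount the first one?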
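One arrangement that sidesteps the question is to declare the claim once under volumes and mount that single volume twice, each time with its own subPath. The fragment below is a sketch under that assumption: the volume name `shared-conf` is hypothetical, and the layout has not been verified against the k3s setup described in this post.

```yaml
# Fragment of a pod template: one volume backed by the claim, mounted twice.
        volumeMounts:
        - name: shared-conf              # hypothetical volume name
          mountPath: /etc/sign.conf
          subPath: sign.conf
        - name: shared-conf              # same volume, different file
          mountPath: /root/lung/path.conf
          subPath: path.conf
      volumes:
      - name: shared-conf
        persistentVolumeClaim:
          claimName: task-pv-claim       # referenced only once in the pod
```

This relies on both sign.conf and path.conf living in the same host directory (/data2), which is the case for the volumes defined earlier.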

References

https://stackoverflow.com/questions/63970511/1-pod-has-unbound-immediate-persistentvolumeclaims-on-minikube

https://access.redhat.com/documentation/zh-cn/openshift_container_platform/4.4/html/storage/persistent-storage-using-hostpath
