CVPR 2021 Papers and Open-Source Projects Collection

14. Adaptive Class Suppression Loss for Long-Tail Object Detection

- Affiliations: CAS, UCAS, ObjectEye, Peking University, Peng Cheng Laboratory, Nexwise
- Paper: https://arxiv.org/abs/2104.00885
- Code: https://github.com/CASIA-IVA-Lab/ACSL

15. VarifocalNet: An IoU-aware Dense Object Detector

- Affiliations: Queensland University of Technology, University of Queensland
- Paper (Oral): https://arxiv.org/abs/2008.13367
- Code: https://github.com/hyz-xmaster/VarifocalNet

16. OTA: Optimal Transport Assignment for Object Detection

- Affiliations: Waseda University, Megvii
- Paper: https://arxiv.org/abs/2103.14259
- Code: https://github.com/Megvii-BaseDetection/OTA

17. Distilling Object Detectors via Decoupled Features

- Affiliations: Huawei Noah's Ark Lab, University of Sydney
- Paper: https://arxiv.org/abs/2103.14475
- Code: https://github.com/ggjy/DeFeat.pytorch

18. Robust and Accurate Object Detection via Adversarial Learning

- Affiliations: Google, UCLA, UCSC
- Paper: https://arxiv.org/abs/2103.13886
- Code: None

19. OPANAS: One-Shot Path Aggregation Network Architecture Search for Object Detection

- Affiliations: Peking University, Anyvision, Stony Brook University
- Paper: https://arxiv.org/abs/2103.04507
- Code: https://github.com/VDIGPKU/OPANAS

20. Multiple Instance Active Learning for Object Detection

- Affiliations: UCAS, Huawei Noah's Ark Lab, Tsinghua University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/papers/Yuan_Multiple_Instance_Active_Learning_for_Object_Detection_CVPR_2021_paper.pdf
- Code: https://github.com/yuantn/MI-AOD

21. Towards Open World Object Detection

- Affiliations: Indian Institute of Technology, MBZUAI, Australian National University, Linköping University
- Paper (Oral): https://arxiv.org/abs/2103.02603
- Code: https://github.com/JosephKJ/OWOD

22. RankDetNet: Delving Into Ranking Constraints for Object Detection

- Affiliations: Xilinx
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Liu_RankDetNet_Delving_Into_Ranking_Constraints_for_Object_Detection_CVPR_2021_paper.html
- Code: None

23. Dense Label Encoding for Boundary Discontinuity Free Rotation Detection

- Affiliations: Shanghai Jiao Tong University, UCAS
- Paper: https://arxiv.org/abs/2011.09670
- Code1: https://github.com/Thinklab-SJTU/DCL_RetinaNet_Tensorflow
- Code2: https://github.com/yangxue0827/RotationDetection

24. ReDet: A Rotation-equivariant Detector for Aerial Object Detection

- Affiliations: Wuhan University
- Paper: https://arxiv.org/abs/2103.07733
- Code: https://github.com/csuhan/ReDet

25. Beyond Bounding-Box: Convex-Hull Feature Adaptation for Oriented and Densely Packed Object Detection

- Affiliations: UCAS, Tsinghua University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Guo_Beyond_Bounding-Box_Convex-Hull_Feature_Adaptation_for_Oriented_and_Densely_Packed_CVPR_2021_paper.html
- Code: https://github.com/SDL-GuoZonghao/BeyondBoundingBox

26. Accurate Few-Shot Object Detection With Support-Query Mutual Guidance and Hybrid Loss

- Affiliations: Fudan University, Tongji University, Zhejiang University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Zhang_Accurate_Few-Shot_Object_Detection_With_Support-Query_Mutual_Guidance_and_Hybrid_CVPR_2021_paper.html
- Code: None

27. Adaptive Image Transformer for One-Shot Object Detection

- Affiliations: Academia Sinica, Taiwan AI Labs
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Chen_Adaptive_Image_Transformer_for_One-Shot_Object_Detection_CVPR_2021_paper.html
- Code: None

28. Dense Relation Distillation with Context-aware Aggregation for Few-Shot Object Detection

- Affiliations: Peking University, BUPT
- Paper: https://arxiv.org/abs/2103.17115
- Code: https://github.com/hzhupku/DCNet

29. Semantic Relation Reasoning for Shot-Stable Few-Shot Object Detection

- Affiliations: Carnegie Mellon University (CMU)
- Paper: https://arxiv.org/abs/2103.01903
- Code: None

30. FSCE: Few-Shot Object Detection via Contrastive Proposal Encoding

- Affiliations: University of Southern California, Megvii
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Sun_FSCE_Few-Shot_Object_Detection_via_Contrastive_Proposal_Encoding_CVPR_2021_paper.html
- Code: https://github.com/MegviiDetection/FSCE

31. Hallucination Improves Few-Shot Object Detection

- Affiliations: University of Illinois Urbana-Champaign
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Zhang_Hallucination_Improves_Few-Shot_Object_Detection_CVPR_2021_paper.html
- Code: https://github.com/pppplin/HallucFsDet

32. Few-Shot Object Detection via Classification Refinement and Distractor Retreatment

- Affiliations: National University of Singapore, SIMTech
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Li_Few-Shot_Object_Detection_via_Classification_Refinement_and_Distractor_Retreatment_CVPR_2021_paper.html
- Code: None

33. Generalized Few-Shot Object Detection Without Forgetting

- Affiliations: Megvii
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Fan_Generalized_Few-Shot_Object_Detection_Without_Forgetting_CVPR_2021_paper.html
- Code: None

34. Transformation Invariant Few-Shot Object Detection

- Affiliations: Huawei Noah's Ark Lab
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Li_Transformation_Invariant_Few-Shot_Object_Detection_CVPR_2021_paper.html
- Code: None

35. UniT: Unified Knowledge Transfer for Any-Shot Object Detection and Segmentation

- Affiliations: University of British Columbia, Vector AI, CIFAR AI Chair
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Khandelwal_UniT_Unified_Knowledge_Transfer_for_Any-Shot_Object_Detection_and_Segmentation_CVPR_2021_paper.html
- Code: https://github.com/ubc-vision/UniT

36. Beyond Max-Margin: Class Margin Equilibrium for Few-Shot Object Detection

- Affiliations: UCAS, Xiamen University, Peng Cheng Laboratory
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Li_Beyond_Max-Margin_Class_Margin_Equilibrium_for_Few-Shot_Object_Detection_CVPR_2021_paper.html
- Code: https://github.com/Bohao-Lee/CME

37. Points As Queries: Weakly Semi-Supervised Object Detection by Points

- Affiliations: Megvii, Fudan University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Chen_Points_As_Queries_Weakly_Semi-Supervised_Object_Detection_by_Points_CVPR_2021_paper.html
- Code: None

38. Data-Uncertainty Guided Multi-Phase Learning for Semi-Supervised Object Detection

- Affiliations: Tsinghua University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Wang_Data-Uncertainty_Guided_Multi-Phase_Learning_for_Semi-Supervised_Object_Detection_CVPR_2021_paper.html
- Code: None

39. Positive-Unlabeled Data Purification in the Wild for Object Detection

- Affiliations: Huawei Noah's Ark Lab, University of Sydney, Peking University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Guo_Positive-Unlabeled_Data_Purification_in_the_Wild_for_Object_Detection_CVPR_2021_paper.html
- Code: None

40. Interactive Self-Training With Mean Teachers for Semi-Supervised Object Detection

- Affiliations: Alibaba, Hong Kong Polytechnic University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Yang_Interactive_Self-Training_With_Mean_Teachers_for_Semi-Supervised_Object_Detection_CVPR_2021_paper.html
- Code: None

41. Instant-Teaching: An End-to-End Semi-Supervised Object Detection Framework

- Affiliations: Alibaba
- Paper: https://arxiv.org/abs/2103.11402
- Code: None

42. Humble Teachers Teach Better Students for Semi-Supervised Object Detection

- Affiliations: Carnegie Mellon University (CMU), Amazon
- Homepage: https://yihet.com/humble-teacher
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Tang_Humble_Teachers_Teach_Better_Students_for_Semi-Supervised_Object_Detection_CVPR_2021_paper.html
- Code: https://github.com/lryta/HumbleTeacher

43. Interpolation-Based Semi-Supervised Learning for Object Detection

- Affiliations: Seoul National University, Aalto University, etc.
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Jeong_Interpolation-Based_Semi-Supervised_Learning_for_Object_Detection_CVPR_2021_paper.html
- Code: https://github.com/soo89/ISD-SSD

44. Domain-Specific Suppression for Adaptive Object Detection

- Affiliations: CAS, Cambricon, UCAS
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Wang_Domain-Specific_Suppression_for_Adaptive_Object_Detection_CVPR_2021_paper.html
- Code: None

45. MeGA-CDA: Memory Guided Attention for Category-Aware Unsupervised Domain Adaptive Object Detection

- Affiliations: Johns Hopkins University, Mercedes-Benz
- Paper: https://arxiv.org/abs/2103.04224
- Code: None

46. Unbiased Mean Teacher for Cross-Domain Object Detection

- Affiliations: University of Electronic Science and Technology of China, ETH Zurich
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Deng_Unbiased_Mean_Teacher_for_Cross-Domain_Object_Detection_CVPR_2021_paper.html
- Code: https://github.com/kinredon/umt

47. I^3Net: Implicit Instance-Invariant Network for Adapting One-Stage Object Detectors

- Affiliations: University of Hong Kong, Xiamen University, Deepwise AI Lab
- Paper: https://arxiv.org/abs/2103.13757
- Code: None

48. There Is More Than Meets the Eye: Self-Supervised Multi-Object Detection and Tracking With Sound by Distilling Multimodal Knowledge

- Affiliations: University of Freiburg
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Valverde_There_Is_More_Than_Meets_the_Eye_Self-Supervised_Multi-Object_Detection_CVPR_2021_paper.html
- Code: http://rl.uni-freiburg.de/research/multimodal-distill

49. Instance Localization for Self-supervised Detection Pretraining

- Affiliations: Chinese University of Hong Kong, Microsoft Research Asia
- Paper: https://arxiv.org/abs/2102.08318
- Code: https://github.com/limbo0000/InstanceLoc

50. Informative and Consistent Correspondence Mining for Cross-Domain Weakly Supervised Object Detection

- Affiliations: Beihang University, Peng Cheng Laboratory, SenseTime
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Hou_Informative_and_Consistent_Correspondence_Mining_for_Cross-Domain_Weakly_Supervised_Object_CVPR_2021_paper.html
- Code: None

51. DAP: Detection-Aware Pre-training with Weak Supervision

- Affiliations: UIUC, Microsoft
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Zhong_DAP_Detection-Aware_Pre-Training_With_Weak_Supervision_CVPR_2021_paper.html
- Code: None

52. Open-Vocabulary Object Detection Using Captions

- Affiliations: Snap, Columbia University
- Paper (Oral): https://openaccess.thecvf.com/content/CVPR2021/html/Zareian_Open-Vocabulary_Object_Detection_Using_Captions_CVPR_2021_paper.html
- Code: https://github.com/alirezazareian/ovr-cnn

53. Depth From Camera Motion and Object Detection

- Affiliations: University of Michigan, SIAI
- Paper: https://arxiv.org/abs/2103.01468
- Code: https://github.com/griffbr/ODMD
- Dataset: https://github.com/griffbr/ODMD

54. Unsupervised Object Detection With LIDAR Clues

- Affiliations: SenseTime, UCAS, USTC
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Tian_Unsupervised_Object_Detection_With_LIDAR_Clues_CVPR_2021_paper.html
- Code: None

55. GAIA: A Transfer Learning System of Object Detection That Fits Your Needs

- Affiliations: UCAS, BIT, CAS, SenseTime
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Bu_GAIA_A_Transfer_Learning_System_of_Object_Detection_That_Fits_CVPR_2021_paper.html
- Code: https://github.com/GAIA-vision/GAIA-det

56. General Instance Distillation for Object Detection

- Affiliations: Megvii, Beihang University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Dai_General_Instance_Distillation_for_Object_Detection_CVPR_2021_paper.html
- Code: None

57. AQD: Towards Accurate Quantized Object Detection

- Affiliations: Monash University, University of Adelaide, South China University of Technology
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Chen_AQD_Towards_Accurate_Quantized_Object_Detection_CVPR_2021_paper.html
- Code: https://github.com/aim-uofa/model-quantization

58. Scale-Aware Automatic Augmentation for Object Detection

- Affiliations: Chinese University of Hong Kong, ByteDance AI Lab, SmartMore
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Chen_Scale-Aware_Automatic_Augmentation_for_Object_Detection_CVPR_2021_paper.html
- Code: https://github.com/Jia-Research-Lab/SA-AutoAug

59. Equalization Loss v2: A New Gradient Balance Approach for Long-Tailed Object Detection

- Affiliations: Tongji University, SenseTime, Tsinghua University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Tan_Equalization_Loss_v2_A_New_Gradient_Balance_Approach_for_Long-Tailed_CVPR_2021_paper.html
- Code: https://github.com/tztztztztz/eqlv2

60. Class-Aware Robust Adversarial Training for Object Detection

- Affiliations: Columbia University, Academia Sinica
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Chen_Class-Aware_Robust_Adversarial_Training_for_Object_Detection_CVPR_2021_paper.html
- Code: None

61. Improved Handling of Motion Blur in Online Object Detection

- Affiliations: University College London
- Homepage: http://visual.cs.ucl.ac.uk/pubs/handlingMotionBlur/
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Sayed_Improved_Handling_of_Motion_Blur_in_Online_Object_Detection_CVPR_2021_paper.html
- Code: None

62. Multiple Instance Active Learning for Object Detection

- Affiliations: UCAS, Huawei Noah's Ark Lab
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Yuan_Multiple_Instance_Active_Learning_for_Object_Detection_CVPR_2021_paper.html
- Code: https://github.com/yuantn/MI-AOD

63. Neural Auto-Exposure for High-Dynamic Range Object Detection

- Affiliations: Algolux, Princeton University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Onzon_Neural_Auto-Exposure_for_High-Dynamic_Range_Object_Detection_CVPR_2021_paper.html
- Code: None

64. Generalizable Pedestrian Detection: The Elephant in the Room

- Affiliations: IIAI, Aalto University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Hasan_Generalizable_Pedestrian_Detection_The_Elephant_in_the_Room_CVPR_2021_paper.html
- Code: https://github.com/hasanirtiza/Pedestron

Object Tracking
===================================================================================

LightTrack: Finding Lightweight Neural Networks for Object Tracking via One-Shot Architecture Search

- Paper: https://arxiv.org/abs/2104.14545
- Code: https://github.com/researchmm/LightTrack

Towards More Flexible and Accurate Object Tracking with Natural Language: Algorithms and Benchmark

- Homepage: https://sites.google.com/view/langtrackbenchmark/
- Paper: https://arxiv.org/abs/2103.16746
- Evaluation Toolkit: https://github.com/wangxiao5791509/TNL2K_evaluation_toolkit
- Demo Video: https://www.youtube.com/watch?v=7lvVDlkkff0&ab_channel=XiaoWang

IoU Attack: Towards Temporally Coherent Black-Box Adversarial Attack for Visual Object Tracking

- Paper: https://arxiv.org/abs/2103.14938
- Code: https://github.com/VISION-SJTU/IoUattack

Graph Attention Tracking

- Paper: https://arxiv.org/abs/2011.11204
- Code: https://github.com/ohhhyeahhh/SiamGAT

Rotation Equivariant Siamese Networks for Tracking

- Paper: https://arxiv.org/abs/2012.13078
- Code: None

Track to Detect and Segment: An Online Multi-Object Tracker

- Homepage: https://jialianwu.com/projects/TraDeS.html
- Paper: None
- Code: None

Transformer Meets Tracker: Exploiting Temporal Context for Robust Visual Tracking

- Paper (Oral): https://arxiv.org/abs/2103.11681
- Code: https://github.com/594422814/TransformerTrack

Transformer Tracking

- Paper: https://arxiv.org/abs/2103.15436
- Code: https://github.com/chenxin-dlut/TransT

Tracking Pedestrian Heads in Dense Crowd

- Homepage: https://project.inria.fr/crowdscience/project/dense-crowd-head-tracking/
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Sundararaman_Tracking_Pedestrian_Heads_in_Dense_Crowd_CVPR_2021_paper.html
- Code1: https://github.com/Sentient07/HeadHunter
- Code2: https://github.com/Sentient07/HeadHunter--T
- Dataset: https://project.inria.fr/crowdscience/project/dense-crowd-head-tracking/

Multiple Object Tracking with Correlation Learning

- Paper: https://arxiv.org/abs/2104.03541
- Code: None

Probabilistic Tracklet Scoring and Inpainting for Multiple Object Tracking

- Paper: https://arxiv.org/abs/2012.02337
- Code: None

Learning a Proposal Classifier for Multiple Object Tracking

- Paper: https://arxiv.org/abs/2103.07889
- Code: https://github.com/daip13/LPC_MOT.git

Track to Detect and Segment: An Online Multi-Object Tracker

- Homepage: https://jialianwu.com/projects/TraDeS.html
- Paper: https://arxiv.org/abs/2103.08808
- Code: https://github.com/JialianW/TraDeS

Semantic Segmentation
======================================================================================

1. HyperSeg: Patch-wise Hypernetwork for Real-time Semantic Segmentation

- Affiliations: Facebook AI, Bar-Ilan University, Tel Aviv University
- Homepage: https://nirkin.com/hyperseg/
- Paper: https://openaccess.thecvf.com/content/CVPR2021/papers/Nirkin_HyperSeg_Patch-Wise_Hypernetwork_for_Real-Time_Semantic_Segmentation_CVPR_2021_paper.pdf
- Code: https://github.com/YuvalNirkin/hyperseg

2. Rethinking BiSeNet For Real-time Semantic Segmentation

- Affiliations: Meituan
- Paper: https://arxiv.org/abs/2104.13188
- Code: https://github.com/MichaelFan01/STDC-Seg

3. Progressive Semantic Segmentation

- Affiliations: VinAI Research, VinUniversity, University of Arkansas, Stony Brook University
- Paper: https://arxiv.org/abs/2104.03778
- Code: https://github.com/VinAIResearch/MagNet

4. Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers

- Affiliations: Fudan University, University of Oxford, University of Surrey, Tencent Youtu Lab, Facebook AI
- Homepage: https://fudan-zvg.github.io/SETR
- Paper: https://arxiv.org/abs/2012.15840
- Code: https://github.com/fudan-zvg/SETR

5. Capturing Omni-Range Context for Omnidirectional Segmentation

- Affiliations: Karlsruhe Institute of Technology, Carl Zeiss, Huawei
- Paper: https://arxiv.org/abs/2103.05687
- Code: None

6. Learning Statistical Texture for Semantic Segmentation

- Affiliations: Beihang University, SenseTime
- Paper: https://arxiv.org/abs/2103.04133
- Code: None

7. InverseForm: A Loss Function for Structured Boundary-Aware Segmentation

- Affiliations: Qualcomm AI Research
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Borse_InverseForm_A_Loss_Function_for_Structured_Boundary-Aware_Segmentation_CVPR_2021_paper.html
- Code: None

8. DCNAS: Densely Connected Neural Architecture Search for Semantic Image Segmentation

- Affiliations: Joyy Inc, Kuaishou, Beihang University, etc.
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Zhang_DCNAS_Densely_Connected_Neural_Architecture_Search_for_Semantic_Image_Segmentation_CVPR_2021_paper.html
- Code: None

9. Railroad Is Not a Train: Saliency As Pseudo-Pixel Supervision for Weakly Supervised Semantic Segmentation

- Affiliations: Yonsei University, Sungkyunkwan University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Lee_Railroad_Is_Not_a_Train_Saliency_As_Pseudo-Pixel_Supervision_for_CVPR_2021_paper.html
- Code: https://github.com/halbielee/EPS

10. Background-Aware Pooling and Noise-Aware Loss for Weakly-Supervised Semantic Segmentation

- Affiliations: Yonsei University
- Homepage: https://cvlab.yonsei.ac.kr/projects/BANA/
- Paper: https://arxiv.org/abs/2104.00905
- Code: None

11. Non-Salient Region Object Mining for Weakly Supervised Semantic Segmentation

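- Affiliations: Nanjing University of Science and Technology, MBZUAI, University of Electronic Science and Technology of China, University of Adelaide, University of Technology Sydney
- Paper: https://arxiv.org/abs/2103.14581
- Code: https://github.com/NUST-Machine-Intelligence-Laboratory/nsrom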

12. Embedded Discriminative Attention Mechanism for Weakly Supervised Semantic Segmentation

- Affiliations: Beijing Institute of Technology, Meituan
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Wu_Embedded_Discriminative_Attention_Mechanism_for_Weakly_Supervised_Semantic_Segmentation_CVPR_2021_paper.html
- Code: https://github.com/allenwu97/EDAM

13. BBAM: Bounding Box Attribution Map for Weakly Supervised Semantic and Instance Segmentation

- Affiliations: Seoul National University
- Paper: https://arxiv.org/abs/2103.08907
- Code: https://github.com/jbeomlee93/BBAM

14. Semi-Supervised Semantic Segmentation with Cross Pseudo Supervision

- Affiliations: Peking University, Microsoft Research Asia
- Paper: https://arxiv.org/abs/2106.01226
- Code: https://github.com/charlesCXK/TorchSemiSeg

15. Semi-supervised Domain Adaptation based on Dual-level Domain Mixing for Semantic Segmentation

- Affiliations: Huawei, Dalian University of Technology, Peking University
- Paper: https://arxiv.org/abs/2103.04705
- Code: None

16. Semi-Supervised Semantic Segmentation With Directional Context-Aware Consistency

- Affiliations: Chinese University of Hong Kong, SmartMore, University of Oxford
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Lai_Semi-Supervised_Semantic_Segmentation_With_Directional_Context-Aware_Consistency_CVPR_2021_paper.html
- Code: None

17. Semantic Segmentation With Generative Models: Semi-Supervised Learning and Strong Out-of-Domain Generalization

- Affiliations: NVIDIA, University of Toronto, Yale University, MIT, Vector Institute
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Li_Semantic_Segmentation_With_Generative_Models_Semi-Supervised_Learning_and_Strong_Out-of-Domain_CVPR_2021_paper.html
- Code: https://nv-tlabs.github.io/semanticGAN/

18. Three Ways To Improve Semantic Segmentation With Self-Supervised Depth Estimation

- Affiliations: ETH Zurich, University of Bern, KU Leuven
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Hoyer_Three_Ways_To_Improve_Semantic_Segmentation_With_Self-Supervised_Depth_Estimation_CVPR_2021_paper.html
- Code: https://github.com/lhoyer/improving_segmentation_with_selfsupervised_depth

19. Cluster, Split, Fuse, and Update: Meta-Learning for Open Compound Domain Adaptive Semantic Segmentation

- Affiliations: ETH Zurich, KU Leuven, University of Electronic Science and Technology of China
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Gong_Cluster_Split_Fuse_and_Update_Meta-Learning_for_Open_Compound_Domain_CVPR_2021_paper.html
- Code: None

20. Source-Free Domain Adaptation for Semantic Segmentation

- Affiliations: East China Normal University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Liu_Source-Free_Domain_Adaptation_for_Semantic_Segmentation_CVPR_2021_paper.html
- Code: None

21. Uncertainty Reduction for Model Adaptation in Semantic Segmentation

- Affiliations: Idiap Research Institute, EPFL, University of Geneva
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/S_Uncertainty_Reduction_for_Model_Adaptation_in_Semantic_Segmentation_CVPR_2021_paper.html
- Code: https://git.io/JthPp

22. Self-Supervised Augmentation Consistency for Adapting Semantic Segmentation

- Affiliations: TU Darmstadt, hessian.AI
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Araslanov_Self-Supervised_Augmentation_Consistency_for_Adapting_Semantic_Segmentation_CVPR_2021_paper.html
- Code: https://github.com/visinf/da-sac

23. RobustNet: Improving Domain Generalization in Urban-Scene Segmentation via Instance Selective Whitening

- Affiliations: LG AI Research, KAIST, etc.
- Paper: https://arxiv.org/abs/2103.15597
- Code: https://github.com/shachoi/RobustNet

24. Coarse-to-Fine Domain Adaptive Semantic Segmentation with Photometric Alignment and Category-Center Regularization

- Affiliations: University of Hong Kong, Deepwise Healthcare
- Paper: https://arxiv.org/abs/2103.13041
- Code: None

25. MetaCorrection: Domain-aware Meta Loss Correction for Unsupervised Domain Adaptation in Semantic Segmentation

- Affiliations: City University of Hong Kong, Baidu
- Paper: https://arxiv.org/abs/2103.05254
- Code: https://github.com/cyang-cityu/MetaCorrection

26. Multi-Source Domain Adaptation with Collaborative Learning for Semantic Segmentation

- Affiliations: Huawei Cloud, Huawei Noah's Ark Lab, Dalian University of Technology
- Paper: https://arxiv.org/abs/2103.04717
- Code: None

27. Prototypical Pseudo Label Denoising and Target Structure Learning for Domain Adaptive Semantic Segmentation

- Affiliations: University of Science and Technology of China, Microsoft Research Asia
- Paper: https://arxiv.org/abs/2101.10979
- Code: https://github.com/microsoft/ProDA

28. DANNet: A One-Stage Domain Adaptation Network for Unsupervised Nighttime Semantic Segmentation

- Affiliations: University of South Carolina, Farsee2 Technology
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Wu_DANNet_A_One-Stage_Domain_Adaptation_Network_for_Unsupervised_Nighttime_Semantic_CVPR_2021_paper.html
- Code: https://github.com/W-zx-Y/DANNet

29. Scale-Aware Graph Neural Network for Few-Shot Semantic Segmentation

- Affiliations: MBZUAI, IIAI, HIT
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Xie_Scale-Aware_Graph_Neural_Network_for_Few-Shot_Semantic_Segmentation_CVPR_2021_paper.html
- Code: None

30. Anti-Aliasing Semantic Reconstruction for Few-Shot Semantic Segmentation

- Affiliations: UCAS, Tsinghua University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Liu_Anti-Aliasing_Semantic_Reconstruction_for_Few-Shot_Semantic_Segmentation_CVPR_2021_paper.html
- Code: https://github.com/Bibkiller/ASR

31. PiCIE: Unsupervised Semantic Segmentation Using Invariance and Equivariance in Clustering

- Affiliations: UT-Austin, Cornell University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Cho_PiCIE_Unsupervised_Semantic_Segmentation_Using_Invariance_and_Equivariance_in_Clustering_CVPR_2021_paper.html
- Code: https://github.com/janghyuncho/PiCIE

32. VSPW: A Large-scale Dataset for Video Scene Parsing in the Wild

- Affiliations: Zhejiang University, Baidu, University of Technology Sydney
- Homepage: https://www.vspwdataset.com/
- Paper: https://www.vspwdataset.com/CVPR2021__miao.pdf
- GitHub: https://github.com/sssdddwww2/vspw_dataset_download

33. Continual Semantic Segmentation via Repulsion-Attraction of Sparse and Disentangled Latent Representations

- Affiliations: University of Padova
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Michieli_Continual_Semantic_Segmentation_via_Repulsion-Attraction_of_Sparse_and_Disentangled_Latent_CVPR_2021_paper.html
- Code: https://lttm.dei.unipd.it/paper_data/SDR/

34. Exploit Visual Dependency Relations for Semantic Segmentation

- Affiliations: University of Illinois Chicago
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Liu_Exploit_Visual_Dependency_Relations_for_Semantic_Segmentation_CVPR_2021_paper.html
- Code: None

35. Revisiting Superpixels for Active Learning in Semantic Segmentation With Realistic Annotation Costs

- Affiliations: Institute for Infocomm Research, National University of Singapore
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Cai_Revisiting_Superpixels_for_Active_Learning_in_Semantic_Segmentation_With_Realistic_CVPR_2021_paper.html
- Code: None

36. PLOP: Learning without Forgetting for Continual Semantic Segmentation

- Affiliations: Sorbonne University, Heuritech, Datakalab, Valeo.ai
- Paper: https://arxiv.org/abs/2011.11390
- Code: https://github.com/arthurdouillard/CVPR2021_PLOP

37. 3D-to-2D Distillation for Indoor Scene Parsing

- Affiliations: Chinese University of Hong Kong, University of Hong Kong
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Liu_3D-to-2D_Distillation_for_Indoor_Scene_Parsing_CVPR_2021_paper.html
- Code: None

38. Bidirectional Projection Network for Cross Dimension Scene Understanding

- Affiliations: Chinese University of Hong Kong, University of Oxford, etc.
- Paper (Oral): https://arxiv.org/abs/2103.14326
- Code: https://github.com/wbhu/BPNet

39. PointFlow: Flowing Semantics Through Points for Aerial Image Segmentation

- Affiliations: Peking University, CAS, UCAS, ETH Zurich, SenseTime, etc.
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Li_PointFlow_Flowing_Semantics_Through_Points_for_Aerial_Image_Segmentation_CVPR_2021_paper.html
- Code: https://github.com/lxtGH/PFSegNets

Instance Segmentation
======================================================================================

DCT-Mask: Discrete Cosine Transform Mask Representation for Instance Segmentation

- Paper: https://arxiv.org/abs/2011.09876
- Code: https://github.com/aliyun/DCT-Mask

Incremental Few-Shot Instance Segmentation

- Paper: https://arxiv.org/abs/2105.05312
- Code: https://github.com/danganea/iMTFA

A^2-FPN: Attention Aggregation based Feature Pyramid Network for Instance Segmentation

- Paper: https://arxiv.org/abs/2105.03186
- Code: None

RefineMask: Towards High-Quality Instance Segmentation with Fine-Grained Features

- Paper: https://arxiv.org/abs/2104.08569
- Code: https://github.com/zhanggang001/RefineMask/

Look Closer to Segment Better: Boundary Patch Refinement for Instance Segmentation

- Paper: https://arxiv.org/abs/2104.05239
- Code: https://github.com/tinyalpha/BPR

Multi-Scale Aligned Distillation for Low-Resolution Detection

- Paper: https://jiaya.me/papers/ms_align_distill_cvpr21.pdf
- Code: https://github.com/Jia-Research-Lab/MSAD

Boundary IoU: Improving Object-Centric Image Segmentation Evaluation

- Homepage: https://bowenc0221.github.io/boundary-iou/
- Paper: https://arxiv.org/abs/2103.16562
- Code: https://github.com/bowenc0221/boundary-iou-api

Deep Occlusion-Aware Instance Segmentation with Overlapping BiLayers

- Paper: https://arxiv.org/abs/2103.12340
- Code: https://github.com/lkeab/BCNet

Zero-shot instance segmentation (not sure)

- Paper: None
- Code: https://github.com/CVPR2021-pape-id-1395/CVPR2021-paper-id-1395

STMask: Spatial Feature Calibration and Temporal Fusion for Effective One-stage Video Instance Segmentation

- Paper: http://www4.comp.polyu.edu.hk/~cslzhang/papers.htm
- Code: https://github.com/MinghanLi/STMask

End-to-End Video Instance Segmentation with Transformers

- Paper (Oral): https://arxiv.org/abs/2011.14503
- Code: https://github.com/Epiphqny/VisTR

Panoptic Segmentation
======================================================================================

ViP-DeepLab: Learning Visual Perception with Depth-aware Video Panoptic Segmentation

- Paper: https://arxiv.org/abs/2012.05258
- Code: https://github.com/joe-siyuan-qiao/ViP-DeepLab
- Dataset: https://github.com/joe-siyuan-qiao/ViP-DeepLab

Part-aware Panoptic Segmentation

- Paper: https://arxiv.org/abs/2106.06351
- Code: https://github.com/tue-mps/panoptic_parts
- Dataset: https://github.com/tue-mps/panoptic_parts

Exemplar-Based Open-Set Panoptic Segmentation Network

- Homepage: https://cv.snu.ac.kr/research/EOPSN/
- Paper: https://arxiv.org/abs/2105.08336
- Code: https://github.com/jd730/EOPSN

MaX-DeepLab: End-to-End Panoptic Segmentation With Mask Transformers

- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Wang_MaX-DeepLab_End-to-End_Panoptic_Segmentation_With_Mask_Transformers_CVPR_2021_paper.html
- Code: None

Panoptic Segmentation Forecasting

- Paper: https://arxiv.org/abs/2104.03962
- Code: https://github.com/nianticlabs/panoptic-forecasting

Fully Convolutional Networks for Panoptic Segmentation

- Paper: https://arxiv.org/abs/2012.00720
- Code: https://github.com/yanwei-li/PanopticFCN

Cross-View Regularization for Domain Adaptive Panoptic Segmentation

- Paper: https://arxiv.org/abs/2103.02584
- Code: None

Medical Image Segmentation
=================================================================

1. Learning Calibrated Medical Image Segmentation via Multi-Rater Agreement Modeling

- Affiliations: Tencent Jarvis Lab, Beijing Tongren Hospital
- Paper (Best Paper Candidate): https://openaccess.thecvf.com/content/CVPR2021/html/Ji_Learning_Calibrated_Medical_Image_Segmentation_via_Multi-Rater_Agreement_Modeling_CVPR_2021_paper.html
- Code: https://github.com/jiwei0921/MRNet/

2. Every Annotation Counts: Multi-Label Deep Supervision for Medical Image Segmentation

- Affiliations: Karlsruhe Institute of Technology, Carl Zeiss, etc.
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Reiss_Every_Annotation_Counts_Multi-Label_Deep_Supervision_for_Medical_Image_Segmentation_CVPR_2021_paper.html
- Code: None

3. FedDG: Federated Domain Generalization on Medical Image Segmentation via Episodic Learning in Continuous Frequency Space

- Affiliations: Chinese University of Hong Kong, Hong Kong Polytechnic University
- Paper: https://arxiv.org/abs/2103.06030
- Code: https://github.com/liuquande/FedDG-ELCFS

4. DiNTS: Differentiable Neural Network Topology Search for 3D Medical Image Segmentation

- Affiliations: Johns Hopkins University, NVIDIA
- Paper (Oral): https://arxiv.org/abs/2103.15954
- Code: None

5. DARCNN: Domain Adaptive Region-Based Convolutional Neural Network for Unsupervised Instance Segmentation in Biomedical Images

- Affiliations: Stanford University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Hsu_DARCNN_Domain_Adaptive_Region-Based_Convolutional_Neural_Network_for_Unsupervised_Instance_CVPR_2021_paper.html
- Code: None

Video Object Segmentation
============================================================================================

Learning Position and Target Consistency for Memory-based Video Object Segmentation

-

Paper: https://arxiv.org/abs/2104.04329

-

Code: None

SSTVOS: Sparse Spatiotemporal Transformers for Video Object Segmentation

-

Paper(Oral): https://arxiv.org/abs/2101.08833

-

Code: https://github.com/dukebw/SSTVOS

Interactive Video Object Segmentation

===========================================================================================================

Modular Interactive Video Object Segmentation: Interaction-to-Mask, Propagation and Difference-Aware Fusion

-

Homepage: https://hkchengrex.github.io/MiVOS/

-

Paper: https://arxiv.org/abs/2103.07941

-

Code: https://github.com/hkchengrex/MiVOS

-

Demo: https://hkchengrex.github.io/MiVOS/video.html#partb

Learning to Recommend Frame for Interactive Video Object Segmentation in the Wild

-

Paper: https://arxiv.org/abs/2103.10391

-

Code: https://github.com/svip-lab/IVOS-W

Salient Object Detection

====================================================================================

Uncertainty-aware Joint Salient Object and Camouflaged Object Detection

-

Paper: https://arxiv.org/abs/2104.02628

-

Code: https://github.com/JingZhang617/Joint_COD_SOD

Deep RGB-D Saliency Detection with Depth-Sensitive Attention and Automatic Multi-Modal Fusion

-

Paper(Oral): https://arxiv.org/abs/2103.11832

-

Code: https://github.com/sunpeng1996/DSA2F

Camouflaged Object Detection

===============================================================================================

Uncertainty-aware Joint Salient Object and Camouflaged Object Detection

-

Paper: https://arxiv.org/abs/2104.02628

-

Code: https://github.com/JingZhang617/Joint_COD_SOD

Co-Salient Object Detection

===============================================================================================

Group Collaborative Learning for Co-Salient Object Detection

-

Paper: https://arxiv.org/abs/2104.01108

-

Code: https://github.com/fanq15/GCoNet

Image Matting

=================================================================================

Semantic Image Matting

-

Paper: https://arxiv.org/abs/2104.08201

-

Code: https://github.com/nowsyn/SIM

-

Dataset: https://github.com/nowsyn/SIM

Person Re-identification

==========================================================================================

Generalizable Person Re-identification with Relevance-aware Mixture of Experts

-

Paper: https://arxiv.org/abs/2105.09156

-

Code: None

Unsupervised Multi-Source Domain Adaptation for Person Re-Identification

-

Paper: https://arxiv.org/abs/2104.12961

-

Code: None

Combined Depth Space based Architecture Search For Person Re-identification

-

Paper: https://arxiv.org/abs/2104.04163

-

Code: None

Person Search

==============================================================================

Anchor-Free Person Search

-

Paper: https://arxiv.org/abs/2103.11617

-

Code: https://github.com/daodaofr/AlignPS

-

Interpretation: The first anchor-free person search framework | CVPR 2021

Video Understanding / Action Recognition

=========================================================================================

Temporal-Relational CrossTransformers for Few-Shot Action Recognition

-

Paper: https://arxiv.org/abs/2101.06184

-

Code: https://github.com/tobyperrett/trx

FrameExit: Conditional Early Exiting for Efficient Video Recognition

-

Paper(Oral): https://arxiv.org/abs/2104.13400

-

Code: None

No frame left behind: Full Video Action Recognition

-

Paper: https://arxiv.org/abs/2103.15395

-

Code: None

Learning Salient Boundary Feature for Anchor-free Temporal Action Localization

-

Paper: https://arxiv.org/abs/2103.13137

-

Code: None

Temporal Context Aggregation Network for Temporal Action Proposal Refinement

-

Paper: https://arxiv.org/abs/2103.13141

-

Code: None

-

Interpretation: CVPR 2021 | TCANet: The Strongest Temporal Action Proposal Refinement Network

ACTION-Net: Multipath Excitation for Action Recognition

-

Paper: https://arxiv.org/abs/2103.07372

-

Code: https://github.com/V-Sense/ACTION-Net

Removing the Background by Adding the Background: Towards Background Robust Self-supervised Video Representation Learning

-

Homepage: https://fingerrec.github.io/index_files/jinpeng/papers/CVPR2021/project_website.html

-

Paper: https://arxiv.org/abs/2009.05769

-

Code: https://github.com/FingerRec/BE

TDN: Temporal Difference Networks for Efficient Action Recognition

-

Paper: https://arxiv.org/abs/2012.10071

-

Code: https://github.com/MCG-NJU/TDN

Face Recognition

=================================================================================

A 3D GAN for Improved Large-pose Facial Recognition

-

Paper: https://arxiv.org/abs/2012.10545

-

Code: None

MagFace: A Universal Representation for Face Recognition and Quality Assessment

-

Paper(Oral): https://arxiv.org/abs/2103.06627

-

Code: https://github.com/IrvingMeng/MagFace

WebFace260M: A Benchmark Unveiling the Power of Million-Scale Deep Face Recognition

-

Homepage: https://www.face-benchmark.org/

-

Paper: https://arxiv.org/abs/2103.04098

-

Dataset: https://www.face-benchmark.org/

When Age-Invariant Face Recognition Meets Face Age Synthesis: A Multi-Task Learning Framework

-

Paper(Oral): https://arxiv.org/abs/2103.01520

-

Code: https://github.com/Hzzone/MTLFace

-

Dataset: https://github.com/Hzzone/MTLFace

Face Detection

===============================================================================

HLA-Face: Joint High-Low Adaptation for Low Light Face Detection

-

Homepage: https://daooshee.github.io/HLA-Face-Website/

-

Paper: https://arxiv.org/abs/2104.01984

-

Code: https://github.com/daooshee/HLA-Face-Code

CRFace: Confidence Ranker for Model-Agnostic Face Detection Refinement

-

Paper: https://arxiv.org/abs/2103.07017

-

Code: None

Face Anti-Spoofing

=====================================================================================

Cross Modal Focal Loss for RGBD Face Anti-Spoofing

-

Paper: https://arxiv.org/abs/2103.00948

-

Code: None
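The title builds on focal loss (Lin et al., 2017, introduced with RetinaNet); the paper's cross-modal modulation between RGB and depth is not reproduced here. As background only, a minimal sketch of the standard binary focal loss, assuming one predicted probability per sample. The defaults α = 0.25 and γ = 2 are the common RetinaNet settings, not values taken from this paper.

```python
import math


def binary_focal_loss(p: float, y: int, alpha: float = 0.25, gamma: float = 2.0) -> float:
    """Standard binary focal loss for one sample (not the cross-modal variant).

    p: predicted probability of the positive class; y: ground-truth label in {0, 1}.
    """
    eps = 1e-12  # guards against log(0)
    p_t = p if y == 1 else 1.0 - p              # probability assigned to the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
    # (1 - p_t) ** gamma shrinks the loss of well-classified (easy) samples
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, eps))


if __name__ == "__main__":
    for p in (0.9, 0.6, 0.1):
        ce = -math.log(p)
        fl = binary_focal_loss(p, 1)
        print(f"p={p}: cross-entropy={ce:.4f}, focal={fl:.4f}")
```

With γ = 0 and α = 0.5 the expression reduces to half the ordinary cross-entropy, which makes a convenient sanity check; increasing γ pushes the loss of confident, correct predictions toward zero.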

Deepfake Detection

=========================================================================================

Spatial-Phase Shallow Learning: Rethinking Face Forgery Detection in Frequency Domain

-

Paper: https://arxiv.org/abs/2103.01856

-

Code: None

Multi-attentional Deepfake Detection

-

Paper: https://arxiv.org/abs/2103.02406

-

Code: None

Face Aging and Age Estimation

=================================================================================

Continuous Face Aging via Self-estimated Residual Age Embedding

-

Paper: https://arxiv.org/abs/2105.00020

-

Code: None

PML: Progressive Margin Loss for Long-tailed Age Classification

-

Paper: https://arxiv.org/abs/2103.02140

-

Code: None

Facial Expression Recognition

================================================================================================

Affective Processes: stochastic modelling of temporal context for emotion and facial expression recognition

-

Paper: https://arxiv.org/abs/2103.13372

-

Code: None

Deepfake Defense

====================================================================

MagDR: Mask-guided Detection and Reconstruction for Defending Deepfakes

-

Paper: https://arxiv.org/abs/2103.14211

-

Code: None

Human Parsing

==============================================================================

Differentiable Multi-Granularity Human Representation Learning for Instance-Aware Human Semantic Parsing

-

Paper: https://arxiv.org/abs/2103.04570

-

Code: https://github.com/tfzhou/MG-HumanParsing

2D/3D Human Pose Estimation

===================================================================================================

ViPNAS: Efficient Video Pose Estimation via Neural Architecture Search

-

Paper: https://arxiv.org/abs/2105.10154

-

Code: None

When Human Pose Estimation Meets Robustness: Adversarial Algorithms and Benchmarks

-

Paper: https://arxiv.org/abs/2105.06152

-

Code: None

Pose Recognition with Cascade Transformers

-

Paper: https://arxiv.org/abs/2104.06976

-

Code: https://github.com/mlpc-ucsd/PRTR

DCPose: Deep Dual Consecutive Network for Human Pose Estimation

-

Paper: https://arxiv.org/abs/2103.07254

-

Code: https://github.com/Pose-Group/DCPose

End-to-End Human Pose and Mesh Reconstruction with Transformers

-

Paper: https://arxiv.org/abs/2012.09760

-

Code: https://github.com/microsoft/MeshTransformer

PoseAug: A Differentiable Pose Augmentation Framework for 3D Human Pose Estimation

-

Paper(Oral): https://arxiv.org/abs/2105.02465

-

Code: https://github.com/jfzhang95/PoseAug

Camera-Space Hand Mesh Recovery via Semantic Aggregation and Adaptive 2D-1D Registration

-

Paper: https://arxiv.org/abs/2103.02845

-

Code: https://github.com/SeanChenxy/HandMesh

Monocular 3D Multi-Person Pose Estimation by Integrating Top-Down and Bottom-Up Networks

-

Paper: https://arxiv.org/abs/2104.01797

-

Code: https://github.com/3dpose/3D-Multi-Person-Pose

HybrIK: A Hybrid Analytical-Neural Inverse Kinematics Solution for 3D Human Pose and Shape Estimation

-

Homepage: https://jeffli.site/HybrIK/

-

Paper: https://arxiv.org/abs/2011.14672

-

Code: https://github.com/Jeff-sjtu/HybrIK

Animal Pose Estimation

=========================================================================================

From Synthetic to Real: Unsupervised Domain Adaptation for Animal Pose Estimation

-

Paper: https://arxiv.org/abs/2103.14843

-

Code: None

Hand-Object Pose Estimation

=======================================================================================

Semi-Supervised 3D Hand-Object Poses Estimation with Interactions in Time

-

Homepage: https://stevenlsw.github.io/Semi-Hand-Object/

-

Paper: https://arxiv.org/abs/2106.05266

-

Code: https://github.com/stevenlsw/Semi-Hand-Object

Human Volumetric Capture

===================================================================================

POSEFusion: Pose-guided Selective Fusion for Single-view Human Volumetric Capture

-

Homepage: http://www.liuyebin.com/posefusion/posefusion.html

-

Paper(Oral): https://arxiv.org/abs/2103.15331

-

Code: None

Scene Text Detection

=======================================================================================

Fourier Contour Embedding for Arbitrary-Shaped Text Detection

-

Paper: https://arxiv.org/abs/2104.10442

-

Code: None

Scene Text Recognition

=========================================================================================

Read Like Humans: Autonomous, Bidirectional and Iterative Language Modeling for Scene Text Recognition

-

Paper: https://arxiv.org/abs/2103.06495

-

Code: https://github.com/FangShancheng/ABINet

Image Compression

===============================================================

Checkerboard Context Model for Efficient Learned Image Compression

-

Paper: https://arxiv.org/abs/2103.15306

-

Code: None
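A checkerboard context model replaces serial, position-by-position autoregressive context with a two-pass scheme: latent positions are split into two interleaved groups, the first group ("anchors") is decoded without spatial context, and the second group is decoded with the already-decoded anchors as context, so each pass can run in parallel. A minimal sketch of that split on an H×W grid; treating positions with even i + j as anchors, and the 4-neighbour helper, are illustrative assumptions rather than details taken from the paper.

```python
def checkerboard_masks(h: int, w: int):
    """Split an h x w latent grid into two interleaved checkerboard groups.

    Returns (anchor, non_anchor) boolean grids. Anchors (even i + j here, an
    illustrative choice) are decoded first; non-anchors are decoded second,
    with the already-decoded anchors available as spatial context.
    """
    anchor = [[(i + j) % 2 == 0 for j in range(w)] for i in range(h)]
    non_anchor = [[not a for a in row] for row in anchor]
    return anchor, non_anchor


def context_neighbors(i: int, j: int, h: int, w: int):
    """4-connected neighbours of (i, j) that lie inside the grid (hypothetical helper)."""
    candidates = [(i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1)]
    return [(a, b) for a, b in candidates if 0 <= a < h and 0 <= b < w]


if __name__ == "__main__":
    anchor, _ = checkerboard_masks(4, 4)
    for row in anchor:
        print("".join("A" if a else "." for a in row))
```

Every non-anchor's immediate neighbours are anchors, which is why the second pass has dense spatial context even though half the grid was decoded without any.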

Slimmable Compressive Autoencoders for Practical Neural Image Compression

-

Paper: https://arxiv.org/abs/2103.15726

-

Code: None

Attention-guided Image Compression by Deep Reconstruction of Compressive Sensed Saliency Skeleton

-

Paper: https://arxiv.org/abs/2103.15368

-

Code: None

Model Compression and Quantization

=====================================================================

Teachers Do More Than Teach: Compressing Image-to-Image Models

-

Paper: https://arxiv.org/abs/2103.03467

-

Code: https://github.com/snap-research/CAT

Dynamic Slimmable Network

-

Paper: https://arxiv.org/abs/2103.13258

-

Code: https://github.com/changlin31/DS-Net

Network Quantization with Element-wise Gradient Scaling

-

Paper: https://arxiv.org/abs/2104.00903

-

Code: None

Zero-shot Adversarial Quantization

-

Paper(Oral): https://arxiv.org/abs/2103.15263

-

Code: https://git.io/Jqc0y

Learnable Companding Quantization for Accurate Low-bit Neural Networks

-

Paper: https://arxiv.org/abs/2103.07156

-

Code: None
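For context, a common baseline that quantization-aware training methods such as the two papers above aim to improve on is uniform quantization trained with the straight-through estimator (STE), which treats the non-differentiable rounding step as the identity in the backward pass. A minimal sketch for a single scalar; the [0, 1] clipping range and 2-bit width are illustrative assumptions, and neither element-wise gradient scaling nor learnable companding is implemented here.

```python
def quantize(x: float, bits: int = 2, lo: float = 0.0, hi: float = 1.0) -> float:
    """Forward pass: clip x to [lo, hi] and round it onto 2**bits uniform levels."""
    levels = 2 ** bits - 1
    step = (hi - lo) / levels
    x_c = min(max(x, lo), hi)
    # Python's round() breaks exact ties toward the even integer
    return lo + round((x_c - lo) / step) * step


def ste_backward(x: float, grad_out: float, lo: float = 0.0, hi: float = 1.0) -> float:
    """Backward pass under the STE: rounding is treated as the identity, so the
    upstream gradient passes through unchanged inside the clipping range and
    is zeroed where the input was clipped."""
    return grad_out if lo <= x <= hi else 0.0


if __name__ == "__main__":
    for x in (-0.2, 0.1, 0.4, 0.8, 1.3):
        print(x, "->", round(quantize(x), 4), "grad:", ste_backward(x, 1.0))
```

The STE ignores the gap between x and its quantized value when passing gradients back; that discretization error is the kind of information more refined quantizer-training schemes try to exploit.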

Knowledge Distillation

=======================================================================================

Distilling Knowledge via Knowledge Review

-

Paper: https://arxiv.org/abs/2104.09044

-

Code: https://github.com/Jia-Research-Lab/ReviewKD

Distilling Object Detectors via Decoupled Features

-

Paper: https://arxiv.org/abs/2103.14475

-

Code: https://github.com/ggjy/DeFeat.pytorch
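Both entries build on knowledge distillation, where a compact student network is trained to mimic a larger teacher. Neither paper's feature-level method is reproduced here; as background, a minimal sketch of the classic soft-target distillation loss of Hinton et al. (2015), with an illustrative temperature T = 4.

```python
import math


def softmax(logits, T=1.0):
    """Temperature-scaled softmax; subtracting the max keeps exp() numerically stable."""
    m = max(logits)
    exps = [math.exp((z - m) / T) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, T=4.0):
    """Soft-target distillation loss: T^2 * KL(teacher || student) on
    temperature-softened class distributions. The T^2 factor keeps gradient
    magnitudes comparable across temperatures."""
    p_teacher = softmax(teacher_logits, T)
    p_student = softmax(student_logits, T)
    kl = sum(pt * (math.log(pt) - math.log(ps))
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    return T * T * kl


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1]
    print(kd_loss([2.0, 1.0, 0.1], teacher))  # student matches teacher
    print(kd_loss([0.1, 1.0, 2.0], teacher))  # student disagrees
```

In practice this term is usually added to the ordinary cross-entropy on the hard labels, with a weight balancing the two.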

Super-Resolution

=================================================================================

Image Super-Resolution with Non-Local Sparse Attention

-

Paper: https://openaccess.thecvf.com/content/CVPR2021/papers/Mei_Image_Super-Resolution_With_Non-Local_Sparse_Attention_CVPR_2021_paper.pdf

-

Code: https://github.com/HarukiYqM/Non-Local-Sparse-Attention

Towards Fast and Accurate Real-World Depth Super-Resolution: Benchmark Dataset and Baseline

-

Homepage: http://mepro.bjtu.edu.cn/resource.html

-

Paper: https://arxiv.org/abs/2104.06174

-

Code: None

ClassSR: A General Framework to Accelerate Super-Resolution Networks by Data Characteristic

-

Paper: https://arxiv.org/abs/2103.04039

-

Code: https://github.com/Xiangtaokong/ClassSR

AdderSR: Towards Energy Efficient Image Super-Resolution

-

Paper: https://arxiv.org/abs/2009.08891

-

Code: None

Dehazing and Video Super-Resolution

=======================================================================

Contrastive Learning for Compact Single Image Dehazing

-

Paper: https://arxiv.org/abs/2104.09367

-

Code: https://github.com/GlassyWu/AECR-Net

Temporal Modulation Network for Controllable Space-Time Video Super-Resolution

-

Paper: None

-

Code: https://github.com/CS-GangXu/TMNet

Image Restoration

==================================================================================

Multi-Stage Progressive Image Restoration

-

Paper: https://arxiv.org/abs/2102.02808

-

Code: https://github.com/swz30/MPRNet

Image Inpainting

=================================================================================

PD-GAN: Probabilistic Diverse GAN for Image Inpainting

-

Paper: https://arxiv.org/abs/2105.02201

-

Code: https://github.com/KumapowerLIU/PD-GAN

TransFill: Reference-guided Image Inpainting by Merging Multiple Color and Spatial Transformations

-

Homepage: https://yzhouas.github.io/projects/TransFill/index.html

-

Paper: https://arxiv.org/abs/2103.15982

-

Code: None

Image Editing

==============================================================================

StyleMapGAN: Exploiting Spatial Dimensions of Latent in GAN for Real-time Image Editing

-

Paper: https://arxiv.org/abs/2104.14754

-

Code: https://github.com/naver-ai/StyleMapGAN

-

Demo Video: https://youtu.be/qCapNyRA_Ng

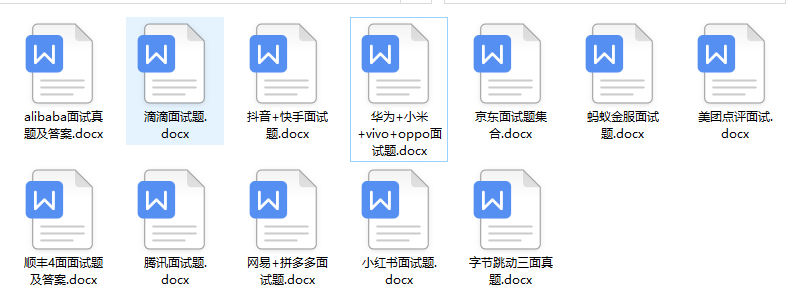

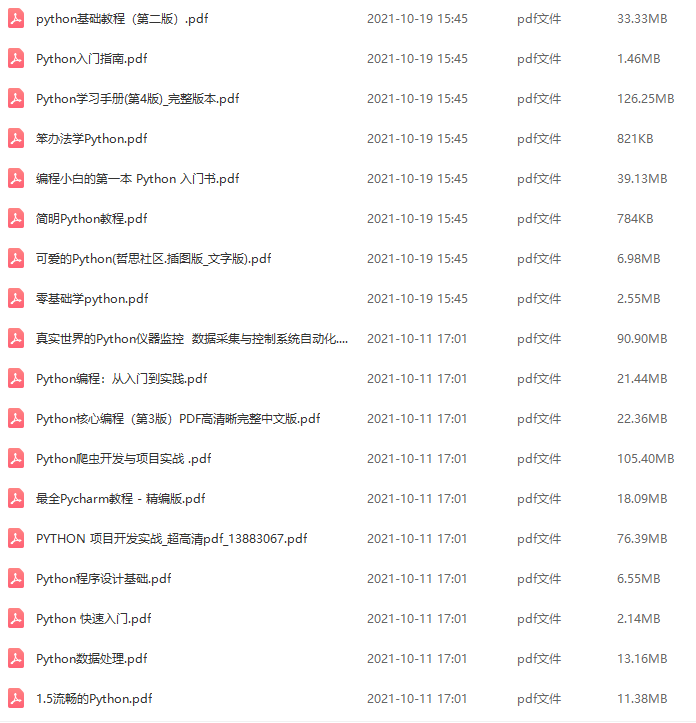

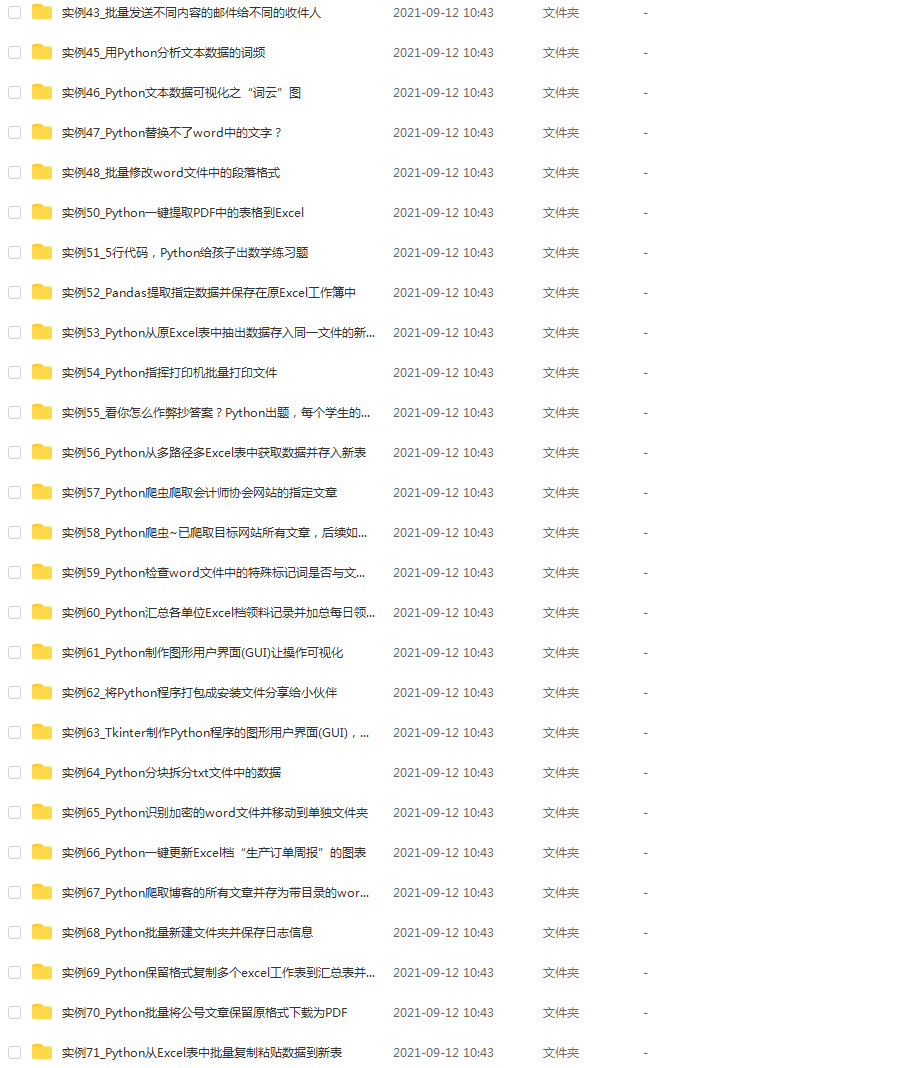

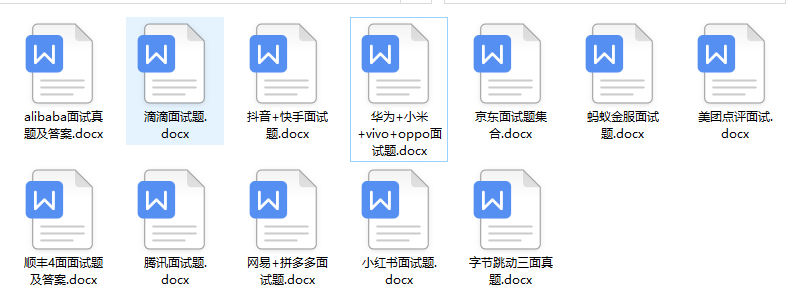

自我介绍一下,小编13年上海交大毕业,曾经在小公司待过,也去过华为、OPPO等大厂,18年进入阿里一直到现在。

深知大多数Python工程师,想要提升技能,往往是自己摸索成长或者是报班学习,但对于培训机构动则几千的学费,着实压力不小。自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!

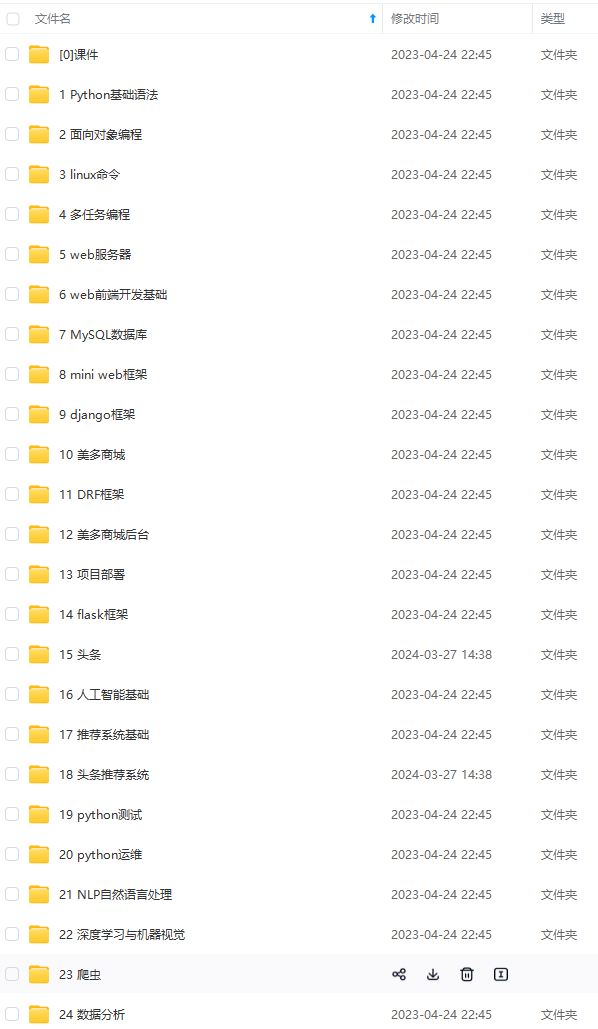

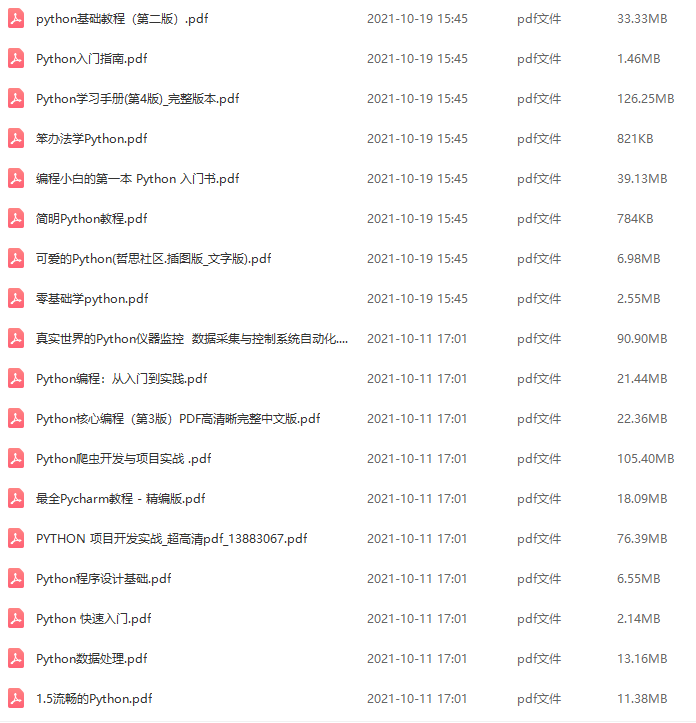

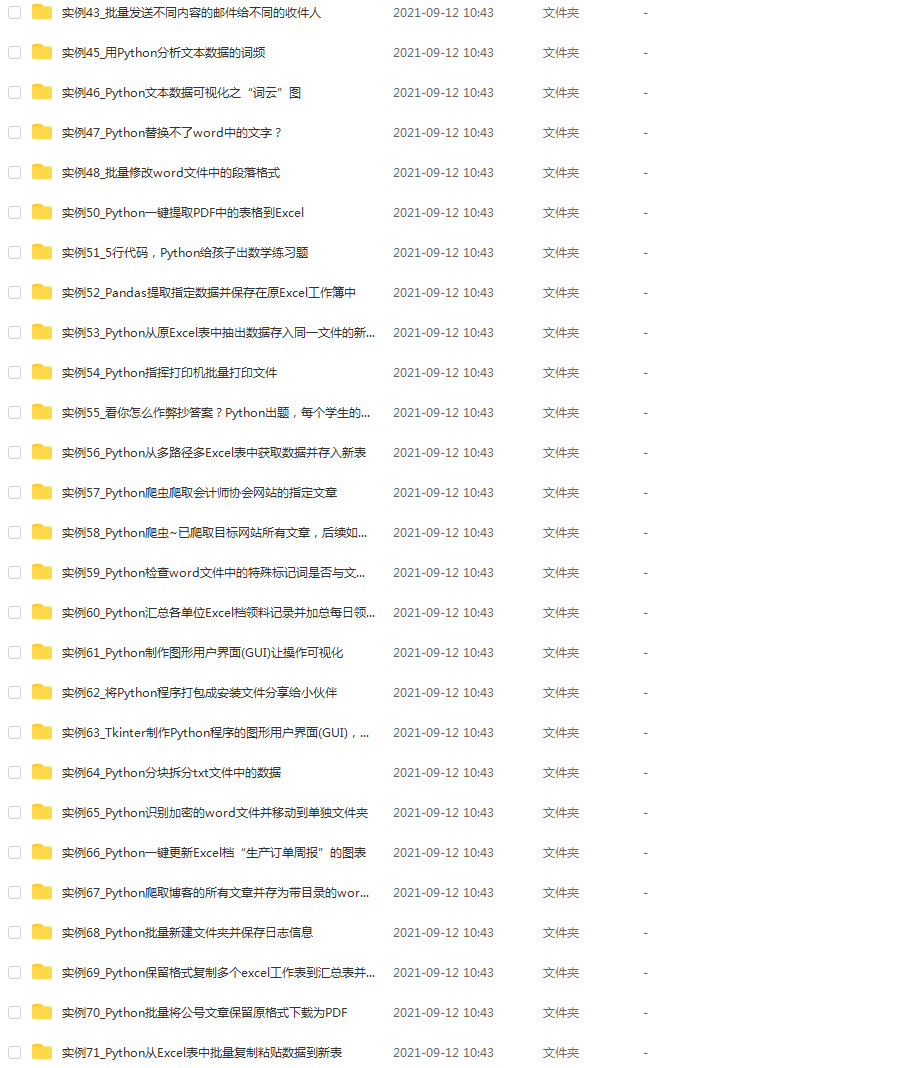

因此收集整理了一份《2024年Python开发全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友,同时减轻大家的负担。

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上Python开发知识点,真正体系化!

由于文件比较大,这里只是将部分目录大纲截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且后续会持续更新

如果你觉得这些内容对你有帮助,可以添加V获取:vip1024c (备注Python)

一个人可以走的很快,但一群人才能走的更远。如果你从事以下工作或对以下感兴趣,欢迎戳这里加入程序员的圈子,让我们一起学习成长!

AI人工智能、Android移动开发、AIGC大模型、C C#、Go语言、Java、Linux运维、云计算、MySQL、PMP、网络安全、Python爬虫、UE5、UI设计、Unity3D、Web前端开发、产品经理、车载开发、大数据、鸿蒙、计算机网络、嵌入式物联网、软件测试、数据结构与算法、音视频开发、Flutter、IOS开发、PHP开发、.NET、安卓逆向、云计算
