- 1【Linux更新驱动、cuda和cuda toolkit】_linux系统如何更新主机的cuda版本

- 2新能源汽车2022智能化,感知方案的终极答案是?

- 3使用rust学习基本算法(四)

- 4C# Stopwatch计时器 记录方法执行时间_stopwatch stopwatch = new stopwatch();

- 5blinker小白入门学习_esp32_#include

- 6每个私域运营者都必须掌握的 5 大关键流量运营核心打法!

- 7精准测试:代码覆盖率与测试覆盖率

- 8像素与分辨率_像素与分辨率对照表

- 9「iOS」怎么修改去掉Navigation Bar上的返回按钮文本颜色,箭头颜色以及导航栏按钮的颜色_消除颜色type_navigation_bar

- 10如何快速开发一个自己的微信小程序_小程序怎么开发

NLP tokenizer (分词器) 介绍_文本分类tokenizer分词器

赞

踩

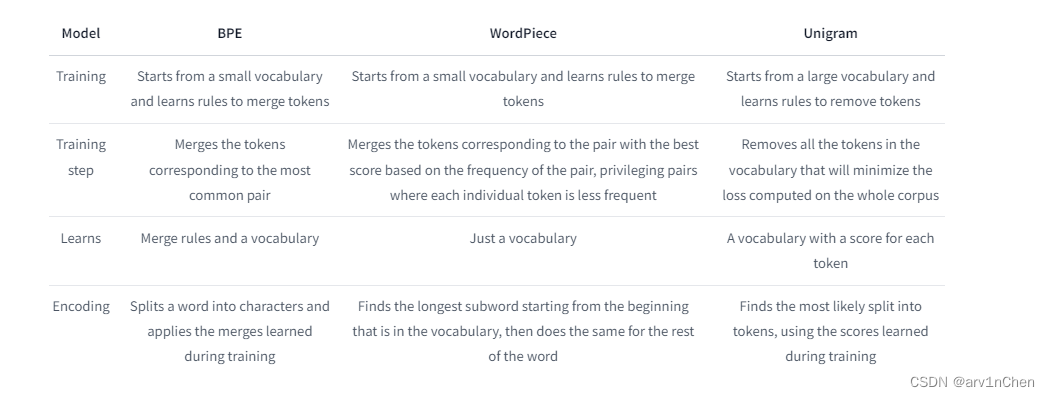

主流的三种分词算法

Byte-Pair Encoding tokenization

使用BPE的模型:GPT、GPT-2、RoBERTa、BART 和 DeBERTa

训练算法(Training algorithm)

1. 获得基础词表(base vocabulary),由语料的所有单个字符组成(是在标准化和预处理之后)

假设我们的语料为

"hug", "pug", "pun", "bun", "hugs"

那么我们的基础词表就是 ["b", "g", "h", "n", "p", "s", "u"],

2. 统计词表中,任意两个token的组合次数,次数最大的加入词表,直到词表达到设定大小(vocabulary size)

3.保存词表和合并顺序

分词算法(Tokenization algorithm)

分词算法和训练算法类似,步骤如下

Tokenization follows the training process closely, in the sense that new inputs are tokenized by applying the following steps:

- Normalization

- Pre-tokenization

- Splitting the words into individual characters

- Applying the merge rules learned in order on those splits

首先将一个单词拆成单个字符,然后应用训练时学习到的合并顺序,依次合并字符。

WordPiece tokenization

使用WordPiece的模型:BERT, DistilBERT

WordPiece的训练算法和BPE类似,但是分词算法则不一样。

训练算法(Training algorithm)

1.获得初始词表

假设我的语料如下

("hug", 10), ("pug", 5), ("pun", 12), ("bun", 4), ("hugs", 5)

那么初始词表就是

["b", "h", "p", "##g", "##n", "##s", "##u"]

注意,WordPiece会为非开头的token添加##前缀

2. 根据如下公式,计算每个组合的score,score最高的组合添加到词表中,直到词表达到设定大小(vocabulary size)

score=(freq_of_pair)/(freq_of_first_element×freq_of_second_element)

合并后的词表

["b", "h", "p", "##g", "##n", "##s", "##u", "##gs", "hu", "hug"]

分词算法(Tokenization algorithm)

WordPiece 只保存最终词表,不保存合并规则(这点和BPE不一样)

从要标记化的单词开始,WordPiece 找到词汇表中最长的子词,然后对其进行分割。例如,如果我们使用在上面的示例中学习的词汇表,对于单词,"hugs"词汇表中从头开始的最长子词是"hug",因此我们在那里进行分割并得到["hug", "##s"]。然后我们继续"##s",它在词汇表中,所以 的标记化"hugs"是["hug", "##s"]。

如果使用 BPE,按照合并规则的顺序,最红将获得["hu", "##gs"],因此编码是不同的。

另一个例子,让我们看看如何对单词"bugs"进行标记。"b"是从词汇表中单词开头开始的最长子词,因此我们在那里拆分并得到["b", "##ugs"]。然后"##u"是词汇表中从其开头开始的最长子词,因此我们将其拆分并得到["b", "##u, "##gs"]。最后,"##gs"是 在词汇表中,所以最终分词结果是["b", "##u, "##gs"]。

wordPiece 和BPE的不同

1. BPE保存合并顺序,而wordPiece不保存,总是从单词起始搜索在词表中出现的最长子词。

2. 合并规则不同,BPE简单计算共现次数,而wordPiece是计算score(计算联合概率除以边缘概率)

3. 当分某个单词分词后,只要有一个token不在词表,wordPiece将整个单词标记为UNK,而BPE只将不在词表的token标记为UNK

例如:"bum","b" and "##u"都在词表,但是"##m"不在词表,那最终的分词结果就是["[UNK]"], 而不是["b", "##u", "[UNK]"],而如果使用BPE,则结果为["b", "##u", "[UNK]"]。

Unigram tokenization

Unigram tokenization also starts with setting a desired vocabulary size. However, the main difference between unigram and the previous 2 approaches is that we don’t start with a base vocabulary of characters only. Instead, the base vocabulary has all the words and symbols. And tokens are gradually removed to arrive at the final vocabulary.

The way that tokens are removed is key to the unigram tokenizer. It uses a language model at each step and keeps removing x% of the pair (definition of pair is same as in word piece) which have the highest loss. Loss is generally defined as the log likelihood over the vocabulary at that training step.

The Unigram algorithm always keeps the base characters so that any word can be tokenized.

Unigram is mostly used in conjunction with the SentencePiece.

演示代码

- corpus = [

- "This is the Hugging Face Course.",

- "This chapter is about tokenization.",

- "This section shows several tokenizer algorithms.",

- "Hopefully, you will be able to understand how they are trained and generate tokens.",

- ]

-

- from transformers import AutoTokenizer

-

- tokenizer = AutoTokenizer.from_pretrained("/home/chenjq/model/xlnet-base-cased/")

-

-

- from collections import defaultdict

-

- word_freqs = defaultdict(int)

- for text in corpus:

- words_with_offsets = tokenizer.backend_tokenizer.pre_tokenizer.pre_tokenize_str(text)

- new_words = [word for word, offset in words_with_offsets]

- for word in new_words:

- word_freqs[word] += 1

-

- word_freqs

-

-

-

- char_freqs = defaultdict(int)

- subwords_freqs = defaultdict(int)

- for word, freq in word_freqs.items():

- for i in range(len(word)):

- char_freqs[word[i]] += freq

- # Loop through the subwords of length at least 2

- for j in range(i + 2, len(word) + 1):

- subwords_freqs[word[i:j]] += freq

-

- # Sort subwords by frequency

- sorted_subwords = sorted(subwords_freqs.items(), key=lambda x: x[1], reverse=True)

- sorted_subwords[:10]

-

-

-

- token_freqs = list(char_freqs.items()) + sorted_subwords[: 300 - len(char_freqs)]

- token_freqs = {token: freq for token, freq in token_freqs}

-

-

-

- from math import log

-

- total_sum = sum([freq for token, freq in token_freqs.items()])

- model = {token: -log(freq / total_sum) for token, freq in token_freqs.items()}

-

-

-

- def encode_word(word, model):

- best_segmentations = [{"start": 0, "score": 1}] + [

- {"start": None, "score": None} for _ in range(len(word))

- ]

- for start_idx in range(len(word)):

- # This should be properly filled by the previous steps of the loop

- best_score_at_start = best_segmentations[start_idx]["score"]

- for end_idx in range(start_idx + 1, len(word) + 1):

- token = word[start_idx:end_idx]

- if token in model and best_score_at_start is not None:

- score = model[token] + best_score_at_start

- # If we have found a better segmentation ending at end_idx, we update

- if (

- best_segmentations[end_idx]["score"] is None

- or best_segmentations[end_idx]["score"] > score

- ):

- best_segmentations[end_idx] = {"start": start_idx, "score": score}

-

- segmentation = best_segmentations[-1]

- if segmentation["score"] is None:

- # We did not find a tokenization of the word -> unknown

- return ["<unk>"], None

-

- score = segmentation["score"]

- start = segmentation["start"]

- end = len(word)

- tokens = []

- while start != 0:

- tokens.insert(0, word[start:end])

- next_start = best_segmentations[start]["start"]

- end = start

- start = next_start

- tokens.insert(0, word[start:end])

- return tokens, score

-

-

- print(encode_word("Hopefully", model))

- print(encode_word("This", model))

-

-

-

- def compute_loss(model):

- loss = 0

- for word, freq in word_freqs.items():

- _, word_loss = encode_word(word, model)

- loss += freq * word_loss

- return loss

-

-

-

- compute_loss(model)

-

-

-

- import copy

-

-

- def compute_scores(model):

- scores = {}

- model_loss = compute_loss(model)

- for token, score in model.items():

- # We always keep tokens of length 1

- if len(token) == 1:

- continue

- model_without_token = copy.deepcopy(model)

- _ = model_without_token.pop(token)

- scores[token] = compute_loss(model_without_token) - model_loss

- return scores

-

-

-

- scores = compute_scores(model)

- print(scores["ll"])

- print(scores["his"])

-

-

- percent_to_remove = 0.1

- while len(model) > 100:

- scores = compute_scores(model)

- sorted_scores = sorted(scores.items(), key=lambda x: x[1])

- # Remove percent_to_remove tokens with the lowest scores.

- for i in range(int(len(model) * percent_to_remove)):

- _ = token_freqs.pop(sorted_scores[i][0])

-

- total_sum = sum([freq for token, freq in token_freqs.items()])

- model = {token: -log(freq / total_sum) for token, freq in token_freqs.items()}

-

-

-

- def tokenize(text, model):

- words_with_offsets = tokenizer.backend_tokenizer.pre_tokenizer.pre_tokenize_str(text)

- pre_tokenized_text = [word for word, offset in words_with_offsets]

- encoded_words = [encode_word(word, model)[0] for word in pre_tokenized_text]

- return sum(encoded_words, [])

-

-

- tokenize("This is the Hugging Face course.", model)

SentencePiece

All the tokenizers discussed above assume that space separates words. This is true except for a few languages like Chinese, Japanese etc. SentencePiece does not treat space as a separator, instead, it takes the string as input in its original raw format, i.e. along with all spaces. It then uses BPE or unigram as its tokenizers to construct the vocabulary.

Example: “I just got a funky phone case!”

Tokenized: [“_I”, “_just”, “_got”, “_a”, “_fun”, “ky”, “_phone”, “_case”]

The tokens can be joined to form a string and “_” can be replaced with space to get the original string back.

https://towardsdatascience.com/a-comprehensive-guide-to-subword-tokenisers-4bbd3bad9a7c

仅训练词表

场景:当我们使用如bert(wordPiece分词)预训练模型的时候,由于预训练的bert语料可能和我们手上的不一样,导致很多token被分成[unk],这时候我们希望对词表重新训练,但是使用的分词算法保持不变(依旧是wordPiece)

https://huggingface.co/learn/nlp-course/chapter6/2

- import pandas as pd

- from datasets import Dataset

- from transformers import AutoTokenizer

-

- # 加载原有的bert tokenizer

- old_tokenizer = AutoTokenizer.from_pretrained('../models/bert-base-chinese')

-

- # 读取数据

- src = pd.read_csv('./test.src', names=['src'])

- tgt = pd.read_csv('./test.tgt', names=['tgt'])

- src_tgt = pd.concat([src[['src']], tgt[['tgt']]], axis=1)

- src_tgt.columns = ['document', 'summary']

- raw_datasets = Dataset.from_pandas(src_tgt)

- print(raw_datasets)

-

- # 构建一个生成器读取语料

- def get_training_corpus():

- dataset = raw_datasets

- for start_idx in range(0, len(dataset), 1000):

- samples = dataset[start_idx: start_idx + 1000]

- yield samples["document"]

-

-

- training_corpus = get_training_corpus()

-

- # 训练一个和原来一样的分词器,但是词表不一样,是基于我们自己的语料生成的

- tokenizer = old_tokenizer.train_new_from_iterator(training_corpus, 52000) # 设置新的词表大小为52000

-

- # 保存到test-tok目录

- tokenizer.save_pretrained("test-tok")

重新训练分词器

https://huggingface.co/learn/nlp-course/chapter6/8?fw=pt#building-a-wordpiece-tokenizer-from-scratch

训练

- from tokenizers import Tokenizer

- from tokenizers.models import BPE

- tokenizer = Tokenizer(BPE(unk_token="[UNK]"))

-

- from tokenizers.trainers import BpeTrainer

- trainer = BpeTrainer(special_tokens=["[UNK]", "[CLS]", "[SEP]", "[PAD]", "[MASK]"])

-

- from tokenizers.pre_tokenizers import Whitespace

- tokenizer.pre_tokenizer = Whitespace()

-

- files = ['./train_sample.txt']

- tokenizer.train(files, trainer)

- tokenizer.save("./my-vocab.json")

-

-

使用分词器

- from tokenizers import Tokenizer

- from tokenizers.models import BPE

-

- sent1 = "有感伤才完美 声明:本文内容由网友自发贡献,不代表【wpsshop博客】立场,版权归原作者所有,本站不承担相应法律责任。如您发现有侵权的内容,请联系我们。转载请注明出处:https://www.wpsshop.cn/w/从前慢现在也慢/article/detail/527425推荐阅读

相关标签

Copyright © 2003-2013 www.wpsshop.cn 版权所有,并保留所有权利。