Deploying a highly available ELK + Kafka logging system with docker-compose on three Ubuntu 22.04 servers, and ingesting nginx logs

Author: 从前慢现在也慢 | 2024-05-14 18:04:41

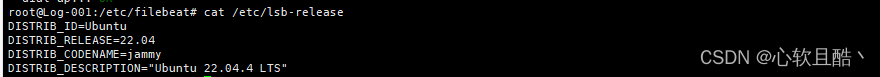

1. System version: Ubuntu 22.04

2. Deployment environment:

| Node name | IP | CPU | Memory | Storage |
|---|---|---|---|---|
| Log-001 | 10.10.100.1 | 2*1c/16cores | 62 GB | 50 GB system disk, 800 GB data disk |
| Log-002 | 10.10.100.2 | 2*1c/16cores | 62 GB | 50 GB system disk, 800 GB data disk |
| Log-003 | 10.10.100.3 | 2*1c/16cores | 62 GB | 50 GB system disk, 800 GB data disk |

All three nodes run the same components:

| Component | Version | Data directory |
|---|---|---|
| zookeeper | 3.4.13 | /data/zookeeper |
| kafka | 2.8.1 | /data/kafka |
| elasticsearch | 7.7.0 | /data/es |
| logstash | 7.7.0 | /data/logstash |
| kibana | 7.7.0 | /data/kibana |

3. Deployment procedure:

(1) Install docker and docker-compose
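One way to do this is sketched below. It assumes the stock Ubuntu 22.04 packages `docker.io` and `docker-compose` are good enough; that is an assumption, and Docker's own apt repository would work as well. The function name `install_docker` is only illustrative.

```shell
# Assumption: use the distro packages from the Ubuntu 22.04 archive,
# not Docker's upstream apt repository.
install_docker() {
    apt-get update || return 1
    apt-get install -y docker.io docker-compose || return 1
    # Start Docker now and on every boot.
    systemctl enable --now docker || return 1
    # Print the installed versions as a quick sanity check.
    docker --version
    docker-compose --version
}
```

Run `install_docker` as root on each of the three nodes.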

(2) Pull the required images in advance
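A sketch of the pull step follows. The versions come from the environment table, but the image names are assumptions: the official `zookeeper` image, the community `wurstmeister/kafka` image (tag `2.13-2.8.1` bundles Kafka 2.8.1), and the official Elastic images. Substitute whichever images you actually use.

```shell
# Assumed image names; only the versions are taken from the environment table.
IMAGES="
zookeeper:3.4.13
wurstmeister/kafka:2.13-2.8.1
docker.elastic.co/elasticsearch/elasticsearch:7.7.0
docker.elastic.co/logstash/logstash:7.7.0
docker.elastic.co/kibana/kibana:7.7.0
"

# Pull every image in the list, stopping at the first failure.
pull_images() {
    for image in $IMAGES; do
        docker pull "$image" || return 1
    done
}
```

Run `pull_images` on each node, so that the later `docker-compose up` does not have to wait for downloads.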

(3) Prepare the applications' configuration files
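The configuration and data for each component live under the directories listed in the environment table, so a reasonable first step is to create them. This is a minimal sketch: the `pipeline` sub-directory and the function name are hypothetical, not taken from the original setup.

```shell
# Create the per-component directories listed in the environment table.
# The optional first argument overrides the /data root (handy for a trial run).
prepare_dirs() {
    data_root="${1:-/data}"
    for name in zookeeper kafka es logstash kibana; do
        mkdir -p "$data_root/$name" || return 1
    done
    # Hypothetical sub-directory for Logstash pipeline files.
    mkdir -p "$data_root/logstash/pipeline"
}
```

On the real nodes, run `prepare_dirs` as root. If you use the official Elastic images, note that Elasticsearch runs inside the container as UID 1000, so `/data/es` must be writable by that user (for example `chown -R 1000:0 /data/es`), and the host needs `vm.max_map_count` set to at least 262144 (`sysctl -w vm.max_map_count=262144`).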

(4) Edit each component's configuration file
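As one example of such a configuration, here is a sketch of a Logstash pipeline that consumes from Kafka and writes to Elasticsearch. The topic name `nginx-log`, the index name, the consumer group, the file name `nginx.conf`, and the default ports 9092 and 9200 are all assumptions; the IPs come from the environment table.

```shell
# Write a Kafka -> Elasticsearch pipeline file for Logstash.
# The optional first argument is the target directory.
write_logstash_pipeline() {
    pipeline_dir="${1:-/data/logstash/pipeline}"
    mkdir -p "$pipeline_dir" || return 1
    cat > "$pipeline_dir/nginx.conf" <<'EOF'
input {
  kafka {
    bootstrap_servers => "10.10.100.1:9092,10.10.100.2:9092,10.10.100.3:9092"
    topics            => ["nginx-log"]   # hypothetical topic name
    group_id          => "logstash"      # same group on all three Logstash nodes
    codec             => "json"          # Filebeat publishes events as JSON
  }
}
output {
  elasticsearch {
    hosts => ["http://10.10.100.1:9200", "http://10.10.100.2:9200", "http://10.10.100.3:9200"]
    index => "nginx-log-%{+YYYY.MM.dd}"  # hypothetical daily index name
  }
}
EOF
}
```

Because all three Logstash instances share one `group_id`, Kafka divides the topic's partitions among them, and if one instance goes down the others take over its partitions.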

(5) All components are orchestrated with docker-compose. The docker-compose.yml file is located in /root/elk_docker_compose/, and its content is:
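The sketch below generates one possible docker-compose.yml for a given node; it is not the author's file. Assumptions: the image names, host networking (so the containers reach each other on the node IPs), the default ports, the cluster and node names, the heap size, and the container-side mount paths. The helper `write_compose` is hypothetical and takes the node number (1 to 3) and that node's IP.

```shell
# Usage: write_compose <node_id> <node_ip> [output_dir]
write_compose() {
    node_id="$1"
    node_ip="$2"
    out_dir="${3:-/root/elk_docker_compose}"
    mkdir -p "$out_dir" || return 1
    cat > "$out_dir/docker-compose.yml" <<EOF
version: "3"
services:
  zookeeper:
    image: zookeeper:3.4.13
    network_mode: host
    restart: always
    environment:
      ZOO_MY_ID: ${node_id}
      ZOO_SERVERS: server.1=10.10.100.1:2888:3888 server.2=10.10.100.2:2888:3888 server.3=10.10.100.3:2888:3888
    volumes:
      - /data/zookeeper:/data
  kafka:
    image: wurstmeister/kafka:2.13-2.8.1
    network_mode: host
    restart: always
    depends_on:
      - zookeeper
    environment:
      KAFKA_BROKER_ID: ${node_id}
      KAFKA_ZOOKEEPER_CONNECT: 10.10.100.1:2181,10.10.100.2:2181,10.10.100.3:2181
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9092
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://${node_ip}:9092
      KAFKA_LOG_DIRS: /kafka/data
    volumes:
      - /data/kafka:/kafka
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.7.0
    network_mode: host
    restart: always
    environment:
      - cluster.name=elk-cluster            # hypothetical cluster name
      - node.name=es-${node_id}
      - network.host=0.0.0.0
      - network.publish_host=${node_ip}
      - discovery.seed_hosts=10.10.100.1,10.10.100.2,10.10.100.3
      - cluster.initial_master_nodes=es-1,es-2,es-3
      - ES_JAVA_OPTS=-Xms8g -Xmx8g          # heap size is an assumption
    volumes:
      - /data/es:/usr/share/elasticsearch/data
  logstash:
    image: docker.elastic.co/logstash/logstash:7.7.0
    network_mode: host
    restart: always
    volumes:
      - /data/logstash/pipeline:/usr/share/logstash/pipeline
  kibana:
    image: docker.elastic.co/kibana/kibana:7.7.0
    network_mode: host
    restart: always
    environment:
      ELASTICSEARCH_HOSTS: http://${node_ip}:9200
    volumes:
      - /data/kibana:/usr/share/kibana/data
EOF
}
```

For example, `write_compose 1 10.10.100.1` on Log-001, `write_compose 2 10.10.100.2` on Log-002, and `write_compose 3 10.10.100.3` on Log-003. Only the ZooKeeper ID, the Kafka broker ID, the Elasticsearch node name, and the advertised addresses differ between nodes.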

(6) Start the services
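Starting the stack is a matter of running docker-compose from the directory that holds docker-compose.yml. A minimal sketch, with a hypothetical helper name:

```shell
# Start all services in the background and list their state.
# The body runs in a subshell so the caller's working directory is unchanged.
start_stack() (
    cd "${1:-/root/elk_docker_compose}" || exit 1
    docker-compose up -d && docker-compose ps
)
```

Run `start_stack` on each node. ZooKeeper needs a majority of its three members up before it serves requests, so the Kafka brokers may log connection retries until at least two nodes have been started.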

(7) Verify the state of each component in the cluster
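A few checks that could be used here, as a sketch. It assumes the default ports (2181, 9092, 9200), that `nc` and `curl` are installed on the host, and that the Kafka service in docker-compose.yml is named `kafka`; the helper names are hypothetical.

```shell
# Succeeds only if the cluster-health JSON on stdin reports status "green".
is_green() {
    grep -q '"status" *: *"green"'
}

verify_cluster() {
    # ZooKeeper: one node should report "Mode: leader", the others "Mode: follower".
    for ip in 10.10.100.1 10.10.100.2 10.10.100.3; do
        echo srvr | nc -w 3 "$ip" 2181 | grep Mode
    done
    # Elasticsearch: all three nodes listed, cluster status green.
    curl -s "http://10.10.100.1:9200/_cat/nodes?v"
    curl -s "http://10.10.100.1:9200/_cluster/health" | is_green \
        && echo "elasticsearch: green"
    # Kafka: listing topics succeeds only if a broker is reachable.
    ( cd /root/elk_docker_compose \
        && docker-compose exec -T kafka kafka-topics.sh \
            --bootstrap-server 10.10.100.1:9092 --list )
}
```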

4. Log collection

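(1) Taking nginx logs as the example, install the Filebeat log shipper (the package comes from Elastic's apt repository, which must already be configured on the host)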

apt-get install filebeat

(2) Configure Filebeat to write data to Kafka
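A minimal Filebeat configuration for this step might look like the sketch below. Assumptions: Filebeat 7.x, nginx logs in the default `/var/log/nginx/` location, Kafka on its default port 9092, and a hypothetical topic name `nginx-log`. The helper `write_filebeat_config` is illustrative only.

```shell
# Write a Filebeat config that tails the nginx logs and ships them to Kafka.
# The optional first argument is the target file.
write_filebeat_config() {
    config_file="${1:-/etc/filebeat/filebeat.yml}"
    cat > "$config_file" <<'EOF'
filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /var/log/nginx/access.log
      - /var/log/nginx/error.log
    fields:
      log_source: nginx        # hypothetical tag for filtering downstream

output.kafka:
  hosts: ["10.10.100.1:9092", "10.10.100.2:9092", "10.10.100.3:9092"]
  topic: "nginx-log"           # hypothetical topic name
  required_acks: 1
  compression: gzip
EOF
}
```

After writing the file, restart the shipper with `systemctl restart filebeat`. Listing all three brokers lets Filebeat keep publishing if one of them is down.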

(3) Validate the configuration: use Filebeat's config test command to check that the configuration file is correct:

filebeat test config

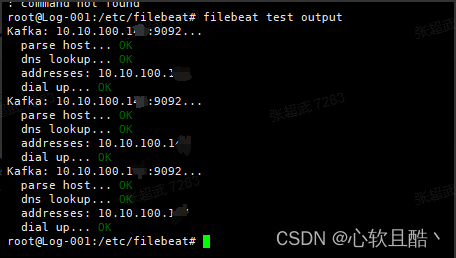

(4) Connection test: test the connection from Filebeat to Kafka:

filebeat test output

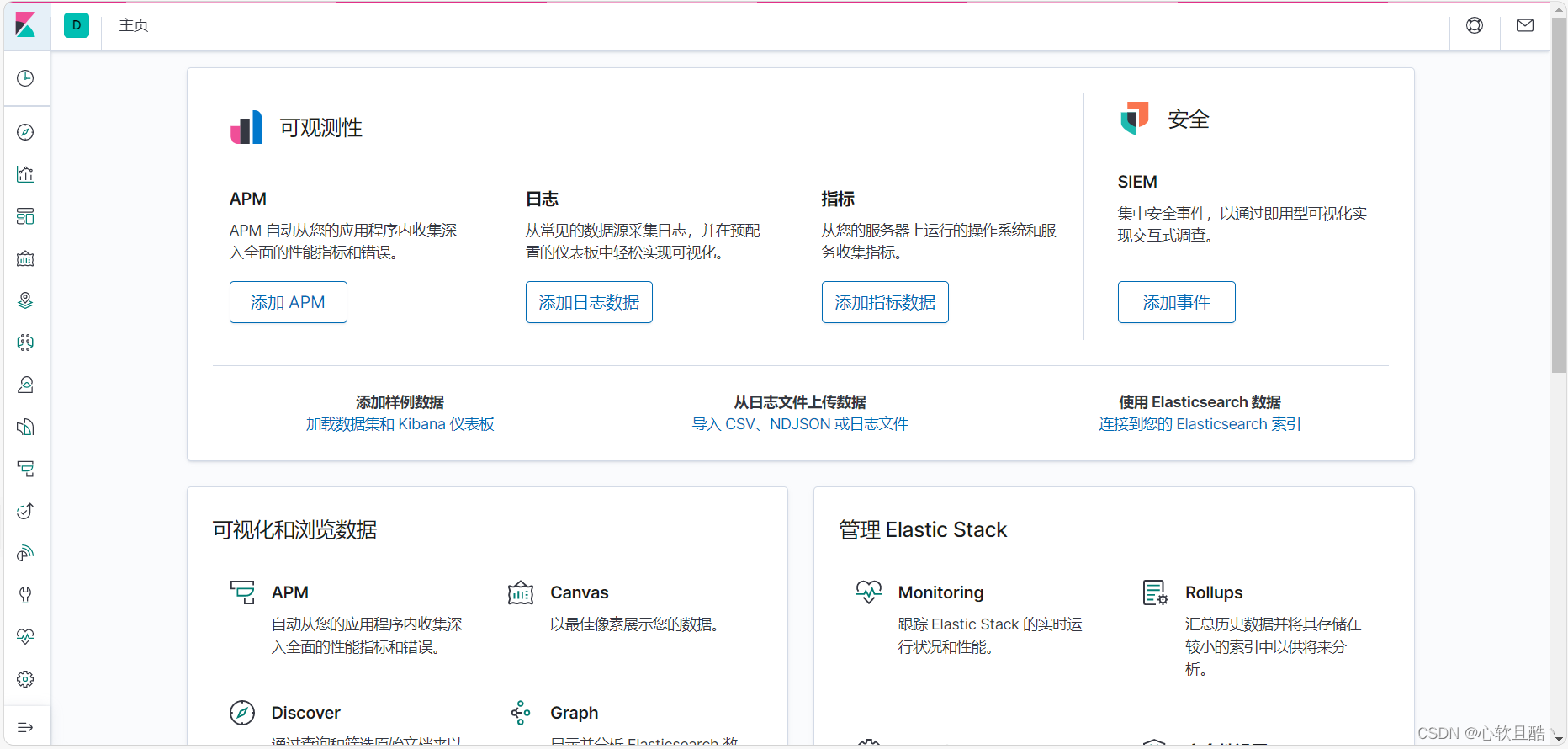

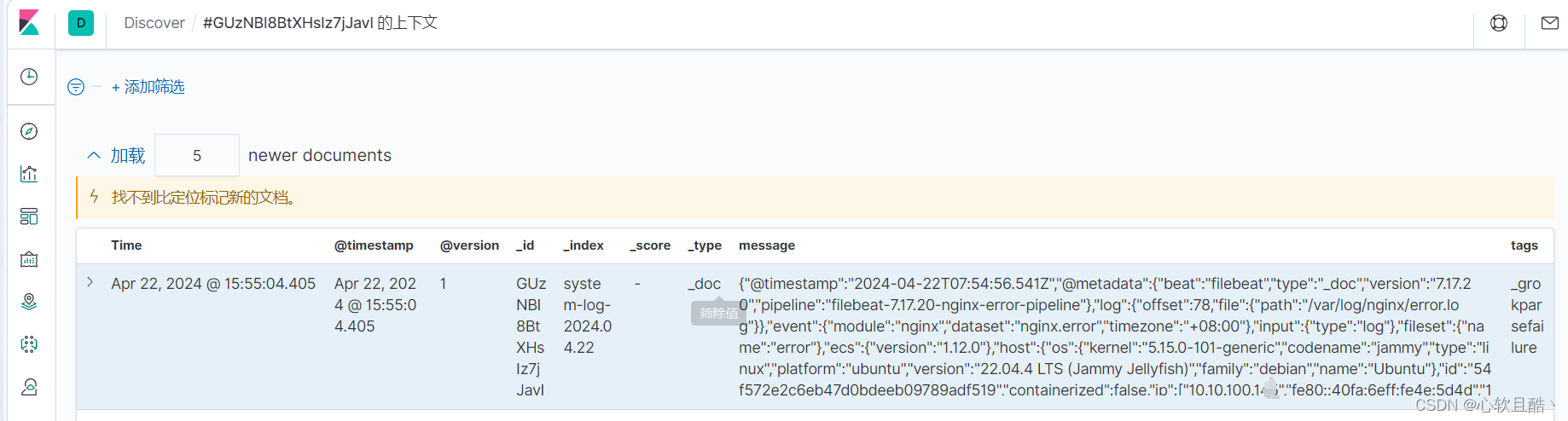

(5) Log in to the Kibana console and check whether the nginx logs are being collected.
