- 1【LLM 论文】UPRISE:使用 prompt retriever 检索 prompt 来让 LLM 实现 zero-shot 解决 task

- 2队列的顺序、链式表示与实现_1. 创建队列的接口。 2. 队列的顺序实现。 3. 队列的链式实现。 4. 用队列求解素

- 3基于安卓的视频遥控小车——红外遥控部分_android音频转红外

- 4[Flask] Cookie与Session_flask cookies session

- 5转载:分布式文件存储系统MinIO入门教程_文件存储 minio

- 6uni_modules/uview-ui/components/u-icon/u-icon]错误: TypeError: Cannot read property ‘props‘ of undefi_页面【uni_modules/uview-ui/components/u--form/u--form

- 7mysql 安装_mysql安装

- 8UA大全

- 9西北工业大学noj_西工大noj

- 102024年 第15届蓝桥杯 省赛 python 研究生组 部分题解_蓝桥杯15届研究生组题目

LLMs:OpenAI 官方文档发布提高 GPT 使用效果指南—GPT最佳实践(GPT best practices)翻译与解读_收藏|openai最新官方gpt最佳实践指南

赞

踩

LLMs:OpenAI 官方文档发布提高 GPT 使用效果指南—GPT最佳实践(GPT best practices)翻译与解读

导读:为了获得优质输出,需要遵循几点基本原则:

>> 写清楚指令:将任务和期望输出描述得尽可能清楚。GPT 无法读取您的思维,需要明确的指令。

>> 提供参考文本:让GPT基于参考文本回答问题,可以减少错误信息。

>> 将复杂任务分解为短小简洁的子任务:这可以降低错误率。

>> 让GPT思考一段时间:显式告知GPT需要细思密 practice。让GPT先解决问题再回答,可以有更准确的答案。

>> 结合外部工具:如果外部工具能更有效完成任务,则可以利用其优化GPT输出。

>> 有系统地测试变化:通过广泛的测试集系统地评估效果。这有助于优化系统设计。

总的来说,直接询问GPT通常得到粗略的答案。只有通过改变上下文和提问方式,才能取得理想效果。所以只要有足够的时间和最新强大的GPT模型,情况通常会更好。

目录

OpenAI 官方文档发布提高 GPT 使用效果指南—GPT最佳实践GPT best practices翻译与解读

获得更好结果的六个策略Six strategies for getting better results—清晰指令/提供参考文本/拆分任务/给予思考/外部工具/量化性能

1、编写清晰的指示Write clear instructions——包含详细信息/人物角色/分隔符/划分步骤/提供示例/指定输出长度

2、提供参考文本Provide reference text——提供参考文本/提供参考引用

3、将复杂任务拆分为简单子任务Split complex tasks into simpler subtasks——意图分类/先前对话压缩为摘要再继续/长文档逐步摘要并递归建全

4、给予 GPT "思考" 的时间Give GPTs time to "think"——始于原理逐步推理/构建一系列内心独白/是否遗漏内容

5、使用外部工具Use external tools——嵌入外部知识/借鉴代码或API示例

6、系统化地测试变化Test changes systematically——黄金标准答案评估输出

1、策略:编写清晰的指示Strategy: Write clear instructions

1.1、战术:在查询中包含详细信息以获得更相关的答案Tactic: Include details in your query to get more relevant answers

1.2、战术:要求模型采用一种人物角色Tactic: Ask the model to adopt a persona

1.3、战术:使用分隔符清晰标示输入的不同部分Tactic: Use delimiters to clearly indicate distinct parts of the input

1.4、战术:指定完成任务所需的步骤Tactic: Specify the steps required to complete a task

1.5、战术:提供示例Tactic: Provide examples

1.6、战术:指定输出的期望长度Tactic: Specify the desired length of the output

2、战略:提供参考文本Strategy: Provide reference text

2.1、战术:指示模型使用参考文本回答问题Tactic: Instruct the model to answer using a reference text

2.2、战术:指示模型使用参考文本中的引用来回答问题Tactic: Instruct the model to answer with citations from a reference text

3、战略:将复杂任务拆分为更简单的子任务Strategy: Split complex tasks into simpler subtasks

4、战略:给予 GPT 模型"思考"的时间Strategy: Give GPTs time to "think"

4.3、战术:询问模型在之前的步骤中是否遗漏了任何内容Tactic: Ask the model if it missed anything on previous passes

5、策略:使用外部工具Strategy: Use external tools

6、策略:系统化地测试更改Strategy: Test changes systematically

6.1、战术:根据黄金标准答案评估模型输出Tactic: Evaluate model outputs with reference to gold-standard answers

OpenAI 官方文档发布提高 GPT 使用效果指南—GPT最佳实践GPT best practices翻译与解读

| 时间 | 2023-06-11 |

| 地址 | https://platform.openai.com/docs/guides/gpt-best-practices/six-strategies-for-getting-better-results |

| 作者 | OpenAI |

| This guide shares strategies and tactics for getting better results from GPTs. The methods described here can sometimes be deployed in combination for greater effect. We encourage experimentation to find the methods that work best for you. | 本指南分享了从 GPT 中获得更好结果的策略和战术。这里描述的方法有时可以组合使用以增加效果。我们鼓励尝试不同的方法,找出最适合您的方法。 |

| Some of the examples demonstrated here currently work only with our most capable model, gpt-4. If you don't yet have access to gpt-4 consider joining the waitlist. In general, if you find that a GPT model fails at a task and a more capable model is available, it's often worth trying again with the more capable model. | 这里演示的一些示例目前仅适用于我们最强大的模型 gpt-4。如果您还没有访问 gpt-4 的权限,请考虑加入等待名单。通常情况下,如果您发现 GPT 模型在一个任务上失败,并且有一个更强大的模型可用,重新尝试使用更强大的模型往往是值得的。 |

获得更好结果的六个策略Six strategies for getting better results—清晰指令/提供参考文本/拆分任务/给予思考/外部工具/量化性能

1、编写清晰的指示Write clear instructions——包含详细信息/人物角色/分隔符/划分步骤/提供示例/指定输出长度

| GPTs can’t read your mind. If outputs are too long, ask for brief replies. If outputs are too simple, ask for expert-level writing. If you dislike the format, demonstrate the format you’d like to see. The less GPTs have to guess at what you want, the more likely you’ll get it. | GPT 无法读取您的思想。如果输出过长,请要求简短回复。如果输出过于简单,请要求专家级的写作。如果您不喜欢的格式,请演示您想要看到的格式。GPT 越少猜测您的意图,您得到所需结果的可能性就越大。 |

| Tactics: Include details in your query to get more relevant answers Ask the model to adopt a persona Use delimiters to clearly indicate distinct parts of the input Specify the steps required to complete a task Provide examples Specify the desired length of the output | 战术: 在查询中包含详细信息以获得更相关的答案 要求模型采用一种人物角色 使用分隔符清晰地表示输入的不同部分 指定完成任务所需的步骤 提供示例 指定所需的输出长度 |

2、提供参考文本Provide reference text——提供参考文本/提供参考引用

| GPTs can confidently invent fake answers, especially when asked about esoteric topics or for citations and URLs. In the same way that a sheet of notes can help a student do better on a test, providing reference text to GPTs can help in answering with fewer fabrications. | GPT 可以自信地虚构答案,尤其是在问及奇特的主题、引用和 URL 的情况下。就像一张笔记纸可以帮助学生在考试中表现更好一样,向 GPT 提供参考文本可以帮助其以更少的虚构回答。 |

| Tactics: Instruct the model to answer using a reference text Instruct the model to answer with citations from a reference text | 战术: 指示模型使用参考文本回答问题 指示模型使用参考文本中的引用回答问题 |

3、将复杂任务拆分为简单子任务Split complex tasks into simpler subtasks——意图分类/先前对话压缩为摘要再继续/长文档逐步摘要并递归建全

| Just as it is good practice in software engineering to decompose a complex system into a set of modular components, the same is true of tasks submitted to GPTs. Complex tasks tend to have higher error rates than simpler tasks. Furthermore, complex tasks can often be re-defined as a workflow of simpler tasks in which the outputs of earlier tasks are used to construct the inputs to later tasks. | 就像软件工程中将复杂系统分解为一组模块化组件一样,提交给 GPT 的任务也是如此。复杂任务的错误率往往比简单任务高。此外,复杂任务通常可以重新定义为由较简单任务的输出构成后续任务的输入的工作流。 |

| Tactics: Use intent classification to identify the most relevant instructions for a user query For dialogue applications that require very long conversations, summarize or filter previous dialogue Summarize long documents piecewise and construct a full summary recursively | 战术: 使用意图分类来识别用户查询的最相关指令 对于需要非常长对话的对话应用程序,对先前对话进行总结或筛选 将长文档逐段摘要并递归地构建完整摘要 |

4、给予 GPT "思考" 的时间Give GPTs time to "think"——始于原理逐步推理/构建一系列内心独白/是否遗漏内容

| If asked to multiply 17 by 28, you might not know it instantly, but can still work it out with time. Similarly, GPTs make more reasoning errors when trying to answer right away, rather than taking time to work out an answer. Asking for a chain of reasoning before an answer can help GPTs reason their way toward correct answers more reliably. | 如果被要求计算 17 乘以 28,您可能不会立即知道答案,但可以通过时间来计算出来。同样,GPT 在试图立即回答问题时会出现更多的推理错误,而不是花时间思考答案。在回答之前要求一系列推理过程可以帮助 GPT 更可靠地推理出正确答案。 |

| Tactics: Instruct the model to work out its own solution before rushing to a conclusion Use inner monologue or a sequence of queries to hide the model's reasoning process Ask the model if it missed anything on previous passes | 战术: 指示模型在匆忙得出结论之前解决问题 使用内心独白或一系列查询隐藏模型的推理过程 询问模型是否在之前的处理中遗漏了任何内容 |

5、使用外部工具Use external tools——嵌入外部知识/借鉴代码或API示例

| Compensate for the weaknesses of GPTs by feeding them the outputs of other tools. For example, a text retrieval system can tell GPTs about relevant documents. A code execution engine can help GPTs do math and run code. If a task can be done more reliably or efficiently by a tool rather than by a GPT, offload it to get the best of both. | 通过将其他工具的输出提供给 GPT,可以弥补 GPT 的不足之处。例如,文本检索系统可以告诉 GPT 相关文档的信息。代码执行引擎可以帮助 GPT 进行数学计算和运行代码。如果一个任务可以通过工具而不是 GPT 更可靠或更高效地完成,可以将其卸载以获得更好的效果。 |

| Tactics: Use embeddings-based search to implement efficient knowledge retrieval Use code execution to perform more accurate calculations or call external APIs | 战术: 使用基于嵌入的搜索实现高效的知识检索 使用代码执行来进行更准确的计算或调用外部 API |

6、系统化地测试变化Test changes systematically——黄金标准答案评估输出

| Improving performance is easier if you can measure it. In some cases a modification to a prompt will achieve better performance on a few isolated examples but lead to worse overall performance on a more representative set of examples. Therefore to be sure that a change is net positive to performance it may be necessary to define a comprehensive test suite (also known an as an "eval"). | 如果能够对性能进行测量,改进性能就会更容易。在某些情况下,对提示进行修改可能会在一些孤立的示例上取得更好的性能,但在更具代表性的一组示例上导致整体性能变差。因此,为了确保改变对性能的总体影响是积极的,可能需要定义一个全面的测试套件(也称为“评估”)。 |

| Tactic: Evaluate model outputs with reference to gold-standard answers | 战术: 根据与黄金标准答案的对比评估模型输出 |

Tactics战术

| Each of the strategies listed above can be instantiated with specific tactics. These tactics are meant to provide ideas for things to try. They are by no means fully comprehensive, and you should feel free to try creative ideas not represented here. | 上述列出的每个策略都可以用具体的战术来实施。这些战术旨在提供一些可以尝试的思路。它们绝不是全面详尽的,您可以随意尝试不在此处表示的创造性思路。 |

1、策略:编写清晰的指示Strategy: Write clear instructions

1.1、战术:在查询中包含详细信息以获得更相关的答案Tactic: Include details in your query to get more relevant answers

| In order to get a highly relevant response, make sure that requests provide any important details or context. Otherwise you are leaving it up to the model to guess what you mean. | 为了获得高度相关的回答,请确保请求提供任何重要的细节或背景。否则,您将让模型去猜测您的意思。 |

| Worse | Better |

| How do I add numbers in Excel? 如何在 Excel 中相加数字? | How do I add up a row of dollar amounts in Excel? I want to do this automatically for a whole sheet of rows with all the totals ending up on the right in a column called "Total". 如何在 Excel 中将一行美元金额相加?我希望为整个工作表的每一行自动执行此操作,所有的总计都出现在右侧的名为“Total”的列中。 |

| Who’s president? 谁是总统? | Who was the president of Mexico in 2021, and how frequently are elections held? 2021年墨西哥的总统是谁,选举多久举行一次? |

| Write code to calculate the Fibonacci sequence. 编写计算斐波那契数列的代码。 | Write a TypeScript function to efficiently calculate the Fibonacci sequence. Comment the code liberally to explain what each piece does and why it's written that way. 编写一个高效计算斐波那契数列的 TypeScript 函数。对代码进行详细注释,解释每个部分的作用及其编写方式的原因。 |

| Summarize the meeting notes. 总结会议记录。 | Summarize the meeting notes in a single paragraph. Then write a markdown list of the speakers and each of their key points. Finally, list the next steps or action items suggested by the speakers, if any. 用一段文字总结会议记录。然后以 Markdown 列表的形式列出发言人及其关键观点。最后,列出发言人提出的下一步行动或建议的行动项(如果有)。 |

1.2、战术:要求模型采用一种人物角色Tactic: Ask the model to adopt a persona

| The system message can be used to specify the persona used by the model in its replies. | 可以使用系统消息来指定模型在回复中所使用的角色。 |

| SYSTEM When I ask for help to write something, you will reply with a document that contains at least one joke or playful comment in every paragraph. USER Write a thank you note to my steel bolt vendor for getting the delivery in on time and in short notice. This made it possible for us to deliver an important order. | 系统 当我请求你帮忙写东西时,你会回复我一份文件,其中每一段至少包含一个笑话或有趣的评论。 用户 给我的螺栓供应商写一封感谢信,感谢他们及时交货并在短时间内完成。这使我们能够交付一份重要订单。 |

1.3、战术:使用分隔符清晰标示输入的不同部分Tactic: Use delimiters to clearly indicate distinct parts of the input

| Delimiters like triple quotation marks, XML tags, section titles, etc. can help demarcate sections of text to be treated differently. | 像三重引号、XML 标签、节标题等分隔符可以帮助划分文本的部分,以便以不同的方式处理。 |

| USER Summarize the text delimited by triple quotes with a haiku. """insert text here""" | 用户 用一个俳句总结由三重引号包围的文本。 """在此处插入文本""" |

| SYSTEM You will be provided with a pair of articles (delimited with XML tags) about the same topic. First summarize the arguments of each article. Then indicate which of them makes a better argument and explain why. USER <article> insert first article here </article> <article> insert second article here </article> | 系统 将为您提供一对关于同一主题的文章(由 XML 标签分隔)。首先总结每篇文章的论点。然后指出哪篇文章提出了更好的论点,并解释原因。 用户 <article>在此处插入第一篇文章</article> <article>在此处插入第二篇文章</article> |

| SYSTEM You will be provided with a thesis abstract and a suggested title for it. The thesis title should give the reader a good idea of the topic of the thesis but should also be eye-catching. If the title does not meet these criteria, suggest 5 alternatives. USER Abstract: insert abstract here Title: insert title here | 系统 将为您提供一篇论文摘要和一个建议的标题。论文标题应该给读者一个关于论文主题的好主意,同时也应该引人注目。如果标题不符合这些标准,请提供5个备选项。 用户 摘要:在此处插入摘要 标题:在此处插入标题 |

| For straightforward tasks such as these, using delimiters might not make a difference in the output quality. However, the more complex a task is the more important it is to disambiguate task details. Don’t make GPTs work to understand exactly what you are asking of them. | 对于像这样的简单任务,使用分隔符可能不会对输出质量产生影响。然而,任务越复杂,将任务细节明确化就越重要。不要让 GPT 难以理解您确切的要求。 |

1.4、战术:指定完成任务所需的步骤Tactic: Specify the steps required to complete a task

| Some tasks are best specified as a sequence of steps. Writing the steps out explicitly can make it easier for the model to follow them. | 有些任务最好被指定为一系列步骤。明确写出这些步骤可以使模型更容易地遵循它们。 |

| SYSTEM Use the following step-by-step instructions to respond to user inputs. Step 1 - The user will provide you with text in triple quotes. Summarize this text in one sentence with a prefix that says "Summary: ". Step 2 - Translate the summary from Step 1 into Spanish, with a prefix that says "Translation: ". USER """insert text here""" | 系统 使用以下逐步说明来回应用户输入。 第一步 - 用户将向您提供由三重引号括起来的文本。用一个前缀为"Summary: "的句子总结这段文本。 第二步 - 将第一步中的摘要翻译成西班牙语,并用一个前缀为"Translation: "的句子进行说明。 用户 """在此处插入文本""" |

1.5、战术:提供示例Tactic: Provide examples

| Providing general instructions that apply to all examples is generally more efficient than demonstrating all permutations of a task by example, but in some cases providing examples may be easier. For example, if you intend for the model to copy a particular style of responding to user queries which is difficult to describe explicitly. This is known as "few-shot" prompting. | 提供适用于所有示例的通用指令通常比通过示例演示任务的所有排列组合更有效,但在某些情况下,提供示例可能更容易。例如,如果您打算让模型复制一种难以明确描述的特定样式来回应用户查询,这被称为"few-shot"提示。 |

| SYSTEM Answer in a consistent style. USER Teach me about patience. ASSISTANT The river that carves the deepest valley flows from a modest spring; the grandest symphony originates from a single note; the most intricate tapestry begins with a solitary thread. USER Teach me about the ocean. | 系统 以一种一致的风格回答。 用户 教我耐心。 助手 雕刻最深谷的河流源于一处谦逊的泉眼;最壮丽的交响乐由一声孤独的音符奏响;最精妙的壁毯始于一根孤寂的线头。 用户 教我关于海洋。 |

1.6、战术:指定输出的期望长度Tactic: Specify the desired length of the output

| You can ask the model to produce outputs that are of a given target length. The targeted output length can be specified in terms of the count of words, sentences, paragraphs, bullet points, etc. Note however that instructing the model to generate a specific number of words does not work with high precision. The model can more reliably generate outputs with a specific number of paragraphs or bullet points. | 您可以要求模型生成具有给定目标长度的输出。目标输出长度可以以词数、句子数、段落数、项目符号等方式指定。然而,请注意,指示模型生成特定数量的单词不具有高精确性。模型可以更可靠地生成具有特定段落数或项目符号数的输出。 |

| USER Summarize the text delimited by triple quotes in about 50 words. """insert text here""" | 用户 用大约50个词总结由三重引号分隔的文本。 """在此处插入文本""" |

| USER Summarize the text delimited by triple quotes in 2 paragraphs. """insert text here""" | 用户 用2个段落总结由三重引号分隔的文本。 """在此处插入文本""" |

| USER Summarize the text delimited by triple quotes in 3 bullet points. """insert text here""" | 用户 用3个项目符号总结由三重引号分隔的文本。 """在此处插入文本""" |

2、战略:提供参考文本Strategy: Provide reference text

2.1、战术:指示模型使用参考文本回答问题Tactic: Instruct the model to answer using a reference text

| If we can provide a model with trusted information that is relevant to the current query, then we can instruct the model to use the provided information to compose its answer. | 如果我们能为模型提供与当前查询相关的可信信息,那么我们可以指示模型使用提供的信息来组织回答。 |

| SYSTEM Use the provided articles delimited by triple quotes to answer questions. If the answer cannot be found in the articles, write "I could not find an answer." USER <insert articles, each delimited by triple quotes> Question: <insert question here> | 系统 使用由三重引号分隔的提供的文章来回答问题。如果在文章中找不到答案,则写下"I could not find an answer."。 用户 <插入文章,每篇文章用三重引号分隔> 问题:<在此处插入问题> |

| Given that GPTs have limited context windows, in order to apply this tactic we need some way to dynamically lookup information that is relevant to the question being asked. Embeddings can be used to implement efficient knowledge retrieval. See the tactic "Use embeddings-based search to implement efficient knowledge retrieval" for more details on how to implement this. | 考虑到 GPTs 的有限上下文窗口,在应用这一战术时,我们需要一种动态查找与所提问题相关的信息的方法。嵌入可以用于实现高效的知识检索。有关如何实施这一点的详细信息,请参阅战术"使用基于嵌入的搜索实现高效的知识检索"。 |

2.2、战术:指示模型使用参考文本中的引用来回答问题Tactic: Instruct the model to answer with citations from a reference text

| If the input has been supplemented with relevant knowledge, it's straightforward to request that the model add citations to its answers by referencing passages from provided documents. Note that citations in the output can then be verified programmatically by string matching within the provided documents. | 如果输入已经补充了相关知识,那么可以直接要求模型通过引用所提供文档中的段落来为其答案添加引用。请注意,输出中的引用可以通过在提供的文档中进行字符串匹配来进行程序验证。 |

| SYSTEM You will be provided with a document delimited by triple quotes and a question. Your task is to answer the question using only the provided document and to cite the passage(s) of the document used to answer the question. If the document does not contain the information needed to answer this question then simply write: "Insufficient information." If an answer to the question is provided, it must be annotated with a citation. Use the following format for to cite relevant passages ({"citation": …}). USER """<insert document here>""" Question: <insert question here> | 系统 您将获得一个由三重引号分隔的文档和一个问题。您的任务是仅使用所提供的文档回答问题,并引用回答问题所使用的文档段落。如果文档中不包含回答该问题所需的信息,则简单写下:"Insufficient information." 如果提供了问题的答案,则必须注明引用。请使用以下格式来引用相关段落({"citation": …})。 用户 """<在此处插入文档>""" 问题:<在此处插入问题> |

3、战略:将复杂任务拆分为更简单的子任务Strategy: Split complex tasks into simpler subtasks

3.1、战术:使用意图分类来识别用户查询的最相关指令Tactic: Use intent classification to identify the most relevant instructions for a user query

| For tasks in which lots of independent sets of instructions are needed to handle different cases, it can be beneficial to first classify the type of query and to use that classification to determine which instructions are needed. This can be achieved by defining fixed categories and hardcoding instructions that are relevant for handling tasks in a given category. This process can also be applied recursively to decompose a task into a sequence of stages. The advantage of this approach is that each query will contain only those instructions that are required to perform the next stage of a task which can result in lower error rates compared to using a single query to perform the whole task. This can also result in lower costs since larger prompts cost more to run (see pricing information). | 对于需要处理不同情况下的许多独立指令集的任务,首先对查询的类型进行分类并使用该分类来确定所需的指令可能是有益的。这可以通过定义固定的类别并硬编码与处理给定类别任务相关的指令来实现。该过程也可以递归地应用于将任务分解为一系列阶段。这种方法的优点是,每个查询将仅包含执行任务的下一个阶段所需的指令,这可能导致错误率较低,与使用单个查询执行整个任务相比。这也可能导致更低的成本,因为较大的提示会增加运行成本(请参阅定价信息)。 |

| Suppose for example that for a customer service application, queries could be usefully classified as follows: SYSTEM You will be provided with customer service queries. Classify each query into a primary category and a secondary category. Provide your output in json format with the keys: primary and secondary. Primary categories: Billing, Technical Support, Account Management, or General Inquiry. Billing secondary categories: - Unsubscribe or upgrade - Add a payment method - Explanation for charge - Dispute a charge Technical Support secondary categories: - Troubleshooting - Device compatibility - Software updates Account Management secondary categories: - Password reset - Update personal information - Close account - Account security General Inquiry secondary categories: - Product information - Pricing - Feedback - Speak to a human USER I need to get my internet working again. | 例如,假设对于客户服务应用程序,查询可以有以下有用的分类: 系统 您将获得客户服务查询。将每个查询分类为主要类别和次要类别。以json格式提供输出,使用键:primary 和 secondary。 主要类别:计费(Billing)、技术支持(Technical Support)、账户管理(Account Management)或一般查询(General Inquiry)。 计费的次要类别: --退订或升级 --添加付款方式 --解释费用 --纠纷费用 技术支持的次要类别: --故障排除 --设备兼容性 --软件更新 账户管理的次要类别: --密码重置 --更新个人信息 --关闭账户 --账户安全 一般查询的次要类别: --产品信息 --价格 --反馈 --联系客服人员 用户 我需要让我的互联网再次工作。 |

| Based on the classification of the customer query, a set of more specific instructions can be provided to a GPT model to handle next steps. For example, suppose the customer requires help with "troubleshooting". | 根据客户查询的分类,可以向 GPT 模型提供一组更具体的指令来处理下一步。例如,假设客户需要帮助进行"故障排除"。 |

| SYSTEM You will be provided with customer service inquiries that require troubleshooting in a technical support context. Help the user by: - Ask them to check that all cables to/from the router are connected. Note that it is common for cables to come loose over time. - If all cables are connected and the issue persists, ask them which router model they are using - Now you will advise them how to restart their device: -- If the model number is MTD-327J, advise them to push the red button and hold it for 5 seconds, then wait 5 minutes before testing the connection. -- If the model number is MTD-327S, advise them to unplug and replug it, then wait 5 minutes before testing the connection. - If the customer's issue persists after restarting the device and waiting 5 minutes, connect them to IT support by outputting {"IT support requested"}. - If the user starts asking questions that are unrelated to this topic then confirm if they would like to end the current chat about troubleshooting and classify their request according to the following scheme: <insert primary/secondary classification scheme from above here> USER I need to get my internet working again. | 系统 您将获得需要在技术支持环境中进行故障排除的客户服务查询。通过以下方式帮助用户: >>要求他们检查路由器与所有连接的电缆是否连接正常。请注意,随着时间的推移,电缆松动是常见的情况。 >>如果所有电缆连接正常且问题仍然存在,请询问他们正在使用哪个路由器型号。 >>现在,您将告诉他们如何重新启动设备: >>如果型号为 MTD-327J,请建议他们按下红色按钮并保持5秒钟,然后在测试连接之前等待5分钟。 >>如果型号为 MTD-327S,请建议他们拔下电源并重新插入,然后在测试连接之前等待5分钟。 >>如果客户在重启设备并等待5分钟后问题仍然存在,请通过输出{"IT support requested"}将其连接到 IT 支持。 >>如果用户开始提出与此主题无关的问题,请确认他们是否希望结束当前有关故障排除的聊天,并根据上述方案对其请求进行分类。 <从上面插入主/次分类方案> 用户 我需要让我的互联网再次工作。 |

| Notice that the model has been instructed to emit special strings to indicate when the state of the conversation changes. This enables us to turn our system into a state machine where the state determines which instructions are injected. By keeping track of state, what instructions are relevant at that state, and also optionally what state transitions are allowed from that state, we can put guardrails around the user experience that would be hard to achieve with a less structured approach. | 请注意,已指示模型发出特殊字符串以指示对话状态何时改变。这使我们能够将系统转变为状态机,其中状态确定要注入的指令。通过跟踪状态、该状态下相关的指令以及可选的从该状态允许的状态转换,我们可以在用户体验周围设置防护栏,这在非结构化方法中很难实现。 |

3.2、战术:对于需要非常长对话的对话应用,对先前对话进行摘要或过滤Tactic: For dialogue applications that require very long conversations, summarize or filter previous dialogue

| Since GPTs have a fixed context length, dialogue between a user and an assistant in which the entire conversation is included in the context window cannot continue indefinitely. There are various workarounds to this problem, one of which is to summarize previous turns in the conversation. Once the size of the input reaches a predetermined threshold length, this could trigger a query that summarizes part of the conversation and the summary of the prior conversation could be included as part of the system message. Alternatively, prior conversation could be summarized asynchronously in the background throughout the entire conversation. | 由于 GPT 模型有固定的上下文长度,用户和助手之间的对话如果整个对话都包含在上下文窗口中,就无法无限进行下去。 有多种解决这个问题的方法,其中之一是对先前的对话进行摘要。一旦输入的大小达到预定的阈值长度,就可以触发一个查询来摘要对话的一部分,并将先前对话的摘要作为系统消息的一部分包含进来。或者,可以在整个对话过程中异步地对先前的对话进行摘要。 |

| An alternative solution is to dynamically select previous parts of the conversation that are most relevant to the current query. See the tactic "Use embeddings-based search to implement efficient knowledge retrieval". | 另一种解决方案是动态选择与当前查询最相关的先前对话部分。参见战术"使用基于嵌入的搜索来实现高效的知识检索"。 |

3.3、战术:将长文档逐部分进行摘要,并递归构建完整摘要Tactic: Summarize long documents piecewise and construct a full summary recursively

| Since GPTs have a fixed context length, they cannot be used to summarize a text longer than the context length minus the length of the generated summary in a single query. | 由于 GPT 模型有固定的上下文长度,无法在单个查询中用于摘要长度超过上下文长度减去生成摘要长度的文本。 |

| To summarize a very long document such as a book we can use a sequence of queries to summarize each section of the document. Section summaries can be concatenated and summarized producing summaries of summaries. This process can proceed recursively until an entire document is summarized. If it’s necessary to use information about earlier sections in order to make sense of later sections, then a further trick that can be useful is to include a running summary of the text that precedes any given point in the book while summarizing content at that point. The effectiveness of this procedure for summarizing books has been studied in previous research by OpenAI using variants of GPT-3. | 要摘要非常长的文档(如书籍),可以使用一系列查询来摘要文档的每个部分。将部分摘要连接并进行摘要,从而产生摘要的摘要。此过程可以递归进行,直到对整个文档进行摘要。如果需要使用早期部分的信息来理解后续部分,那么在摘要后续内容时,包含书中给定点之前文本的运行摘要可以是一个有用的技巧。OpenAI 以前使用 GPT-3 的变体进行研究,研究了使用此方法摘要书籍的有效性。 |

4、战略:给予 GPT 模型"思考"的时间Strategy: Give GPTs time to "think"

4.1、战术:指示模型在得出结论之前从基本原理开始推理Tactic: Instruct the model to work out its own solution before rushing to a conclusion

| Sometimes we get better results when we explicitly instruct the model to reason from first principles before coming to a conclusion. Suppose for example we want a model to evaluate a student’s solution to a math problem. The most obvious way to approach this is to simply ask the model if the student's solution is correct or not. | 有时,我们明确指示模型在得出结论之前从基本原理开始推理,会获得更好的结果。例如,假设我们希望模型评估学生对数学问题的解答。最明显的方法是简单地问模型学生的解答是否正确。 |

| SYSTEM Determine if the student's solution is correct or not. USER Problem Statement: I'm building a solar power installation and I need help working out the financials. - Land costs $100 / square foot - I can buy solar panels for $250 / square foot - I negotiated a contract for maintenance that will cost me a flat $100k per year, and an additional $10 / square foot What is the total cost for the first year of operations as a function of the number of square feet. Student's Solution: Let x be the size of the installation in square feet. 1. Land cost: 100x 2. Solar panel cost: 250x 3. Maintenance cost: 100,000 + 100x Total cost: 100x + 250x + 100,000 + 100x = 450x + 100,000 ASSISTANT The student's solution is correct. | 系统 判断学生的解答是否正确。 用户 问题描述:我正在建设一个太阳能发电装置,需要帮助计算财务情况。 土地每平方英尺 100 美元 我可以以每平方英尺 250 美元的价格购买太阳能电池板 我谈判了一份维护合同,每年固定费用为 10 万美元,再加上每平方英尺 10 美元 第一年的运营总成本是作为平方英尺数的函数是多少? 学生的解答:设 x 为装置的面积(平方英尺)。 土地成本:100x 太阳能电池板成本:250x 维护成本:100,000 + 100x 总成本:100x + 250x + 100,000 + 100x = 450x + 100,000 助手 学生的解答是正确的。 |

| But the student's solution is actually not correct! We can get the model to successfully notice this by prompting the model to generate its own solution first. | 但实际上,学生的解答是不正确的!我们可以让模型通过提示模型先生成自己的解答来成功注意到这一点。 |

| SYSTEM First work out your own solution to the problem. Then compare your solution to the student's solution and evaluate if the student's solution is correct or not. Don't decide if the student's solution is correct until you have done the problem yourself. USER Problem Statement: I'm building a solar power installation and I need help working out the financials. - Land costs $100 / square foot - I can buy solar panels for $250 / square foot - I negotiated a contract for maintenance that will cost me a flat $100k per year, and an additional $10 / square foot What is the total cost for the first year of operations as a function of the number of square feet. Student's Solution: Let x be the size of the installation in square feet. 1. Land cost: 100x 2. Solar panel cost: 250x 3. Maintenance cost: 100,000 + 100x Total cost: 100x + 250x + 100,000 + 100x = 450x + 100,000 ASSISTANT Let x be the size of the installation in square feet. 1. Land cost: 100x 2. Solar panel cost: 250x 3. Maintenance cost: 100,000 + 10x Total cost: 100x + 250x + 100,000 + 10x = 360x + 100,000 The student's solution is incorrect. They made an error in the maintenance cost calculation, using 100x instead of 10x. The correct total cost for the first year of operations is 360x + 100,000. | 系统 首先自己解答这个问题。然后将你的解答与学生的解答进行比较,并评估学生的解答是否正确。在自己完成问题之前,请不要确定学生的解答是否正确。 用户 问题描述:我正在建设一个太阳能发电装置,需要帮助计算财务情况。 土地每平方英尺 100 美元 我可以以每平方英尺 250 美元的价格购买太阳能电池板 我谈判了一份维护合同,每年固定费用为 10 万美元,再加上每平方英尺 10 美元 第一年的运营总成本是作为平方英尺数的函数是多少? 学生的解答:设 x 为装置的面积(平方英尺)。 土地成本:100x 太阳能电池板成本:250x 维护成本:100,000 + 100x 总成本:100x + 250x + 100,000 + 100x = 450x + 100,000 助手 设 x 为装置的面积(平方英尺)。 土地成本:100x 太阳能电池板成本:250x 维护成本:100,000 + 10x 总成本:100x + 250x + 100,000 + 10x = 360x + 100,000 学生的解答是不正确的。他们在计算维护成本时犯了错误,使用了 100x 而不是 10x。第一年的运营总成本的正确公式是 360x + 100,000。 |

4.2、战术:使用内心独白或一系列查询来隐藏模型的推理过程Tactic: Use inner monologue or a sequence of queries to hide the model's reasoning process

| The previous tactic demonstrates that it is sometimes important for the model to reason in detail about a problem before answering a specific question. For some applications, the reasoning process that a model uses to arrive at a final answer would be inappropriate to share with the user. For example, in tutoring applications we may want to encourage students to work out their own answers, but a model’s reasoning process about the student’s solution could reveal the answer to the student. | 前面的战术表明,对于模型在回答特定问题之前对问题进行详细推理有时很重要。对于某些应用程序来说,模型用于得出最终答案的推理过程可能不适合与用户分享。例如,在辅导应用程序中,我们可能希望鼓励学生自己解决问题,但模型关于学生解决方案的推理过程可能会向学生透露答案。 |

| Inner monologue is a tactic that can be used to mitigate this. The idea of inner monologue is to instruct the model to put parts of the output that are meant to be hidden from the user into a structured format that makes parsing them easy. Then before presenting the output to the user, the output is parsed and only part of the output is made visible. | 内心独白是一种可以用来减轻这种情况的战术。内心独白的思路是指示模型将要向用户隐藏的部分输出放入一个结构化格式中,以便于解析。然后,在将输出呈现给用户之前,对输出进行解析,仅将部分输出显示给用户。 |

| SYSTEM Follow these steps to answer the user queries. Step 1 - First work out your own solution to the problem. Don't rely on the student's solution since it may be incorrect. Enclose all your work for this step within triple quotes ("""). Step 2 - Compare your solution to the student's solution and evaluate if the student's solution is correct or not. Enclose all your work for this step within triple quotes ("""). Step 3 - If the student made a mistake, determine what hint you could give the student without giving away the answer. Enclose all your work for this step within triple quotes ("""). Step 4 - If the student made a mistake, provide the hint from the previous step to the student (outside of triple quotes). Instead of writing "Step 4 - ..." write "Hint:". USER Problem Statement: <insert problem statement> Student Solution: <insert student solution> | 系统 按照以下步骤回答用户的查询。 步骤 1 - 首先独立解决问题。不要依赖学生的解答,因为它可能是不正确的。在这一步中,使用三引号(""")将所有工作括起来。 步骤 2 - 将你的解答与学生的解答进行比较,并评估学生的解答是否正确。在这一步中,使用三引号(""")将所有工作括起来。 步骤 3 - 如果学生犯了错误,确定你可以给予学生什么提示,而不透露答案。在这一步中,使用三引号(""")将所有工作括起来。 步骤 4 - 如果学生犯了错误,向学生提供上一步的提示(在三引号之外)。而不是写"步骤 4 - ...",写"提示:"。 用户 问题描述: <插入问题描述> 学生的解答: <插入学生的解答> |

| Alternatively, this can be achieved with a sequence of queries in which all except the last have their output hidden from the end user. First, we can ask the model to solve the problem on its own. Since this initial query doesn't require the student’s solution, it can be omitted. This provides the additional advantage that there is no chance that the model’s solution will be biased by the student’s attempted solution. | 另外,可以通过一系列查询来实现,其中除最后一个之外的所有查询的输出对最终用户是隐藏的。 首先,我们可以要求模型自行解决问题。由于此初始查询不需要学生的解答,可以省略该部分。这还具有额外的优势,即模型的解决方案不会受到学生尝试解答的影响。 |

| USER <insert problem statement> | 用户 <插入问题描述> |

| Next, we can have the model use all available information to assess the correctness of the student’s solution. SYSTEM Compare your solution to the student's solution and evaluate if the student's solution is correct or not. USER Problem statement: """<insert problem statement>""" Your solution: """<insert model generated solution>""" Student’s solution: """<insert student's solution>""" | 接下来,我们可以让模型利用所有可用信息来评估学生的解答的正确性。 系统 将你的解答与学生的解答进行比较,并评估学生的解答是否正确。 用户 问题描述:"""<插入问题描述>""" 你的解答:"""<插入模型生成的解答>""" 学生的解答:"""<插入学生的解答>""" |

| Finally, we can let the model use its own analysis to construct a reply in the persona of a helpful tutor. SYSTEM You are a math tutor. If the student made an error, offer a hint to the student in a way that does not reveal the answer. If the student did not make an error, simply offer them an encouraging comment. USER Problem statement: """<insert problem statement>""" Your solution: """<insert model generated solution>""" Student’s solution: """<insert student's solution>""" Analysis: """<insert model generated analysis from previous step>""" | 最后,我们可以让模型使用自己的分析,以友好辅导员的角色构建回复。 系统 你是一位数学辅导员。如果学生犯了错误,以不透露答案的方式向学生提供提示。如果学生没有犯错误,只需给他们一个鼓励的评论。 用户 问题描述:"""<插入问题描述>""" 你的解答:"""<插入模型生成的解答>""" 学生的解答:"""<插入学生的解答>""" 分析:"""<插入前一步模型生成的分析>""" |

4.3、战术:询问模型在之前的步骤中是否遗漏了任何内容Tactic: Ask the model if it missed anything on previous passes

| Suppose that we are using a model to list excerpts from a source which are relevant to a particular question. After listing each excerpt the model needs to determine if it should start writing another or if it should stop. If the source document is large, it is common for a model to stop too early and fail to list all relevant excerpts. In that case, better performance can often be obtained by prompting the model with followup queries to find any excerpts it missed on previous passes. | 假设我们正在使用一个模型列出与特定问题相关的源文件摘录。在列出每个摘录后,模型需要确定是继续写另一个摘录还是停止。如果源文件很大,模型往往会过早停止,没有列出所有相关的摘录。在这种情况下,通过提示模型使用后续查询来查找之前遗漏的任何摘录,可以获得更好的性能。 |

| SYSTEM You will be provided with a document delimited by triple quotes. Your task is to select excerpts which pertain to the following question: "What significant paradigm shifts have occurred in the history of artificial intelligence." Ensure that excerpts contain all relevant context needed to interpret them - in other words don't extract small snippets that are missing important context. Provide output in JSON format as follows: [{"excerpt": "..."}, ... {"excerpt": "..."}] USER """<insert document here>""" ASSISTANT [{"excerpt": "the model writes an excerpt here"}, ... {"excerpt": "the model writes another excerpt here"}] USER Are there more relevant excerpts? Take care not to repeat excerpts. Also ensure that excerpts contain all relevant context needed to interpret them - in other words don't extract small snippets that are missing important context. | 系统 您将获得一个由三引号分隔的文档。您的任务是选择与以下问题相关的摘录:"人工智能的历史中发生了哪些重大范式转变。" 确保摘录包含解释它们所需的所有相关上下文 - 换句话说,不要提取缺少重要上下文的小片段。以以下 JSON 格式提供输出: [{"excerpt": "..."}, ... {"excerpt": "..."}] 用户 """<插入文档>""" 助手 [{"excerpt": "模型在此处写摘录"}, ... {"excerpt": "模型在此处再写一个摘录"}] 用户 是否还有更多相关的摘录?请注意不要重复摘录。还要确保摘录包含解释它们所需的所有相关上下文 - 换句话说,不要提取缺少重要上下文的小片段。 |

5、策略:使用外部工具Strategy: Use external tools

5.1、战术:使用基于嵌入的搜索来实现高效的知识检索Tactic: Use embeddings-based search to implement efficient knowledge retrieval

| A model can leverage external sources of information if provided as part of its input. This can help the model to generate more informed and up-to-date responses. For example, if a user asks a question about a specific movie, it may be useful to add high quality information about the movie (e.g. actors, director, etc…) to the model’s input. Embeddings can be used to implement efficient knowledge retrieval, so that relevant information can be added to the model input dynamically at run-time. | 如果模型可以获得外部信息作为其输入的一部分,那么它可以利用外部信息来生成更具信息和更新的回答。例如,如果用户询问有关特定电影的问题,将有关该电影的高质量信息(如演员、导演等)添加到模型的输入中可能很有用。嵌入可以用于实现高效的知识检索,以便在运行时动态地将相关信息添加到模型输入中。 |

| A text embedding is a vector that can measure the relatedness between text strings. Similar or relevant strings will be closer together than unrelated strings. This fact, along with the existence of fast vector search algorithms means that embeddings can be used to implement efficient knowledge retrieval. In particular, a text corpus can be split up into chunks, and each chunk can be embedded and stored. Then a given query can be embedded and vector search can be performed to find the embedded chunks of text from the corpus that are most related to the query (i.e. closest together in the embedding space). Example implementations can be found in the OpenAI Cookbook. See the tactic “Instruct the model to use retrieved knowledge to answer queries” for an example of how to use knowledge retrieval to minimize the likelihood that a model will make up incorrect facts. | 文本嵌入是一种可以衡量文本字符串之间相关性的向量。相似或相关的字符串将比不相关的字符串更接近。由此可知,结合快速向量搜索算法的存在,可以使用嵌入来实现高效的知识检索。特别是,文本语料库可以被分成多个块,每个块可以被嵌入和存储。然后,可以将给定的查询进行嵌入,执行向量搜索,以找到与查询最相关的语料库中嵌入的文本块(即在嵌入空间中最接近的文本块)。 在 OpenAI Cookbook 中可以找到示例实现。参见战术“指示模型使用检索到的知识来回答查询”的示例,了解如何使用知识检索来减少模型捏造错误事实的可能性。 |

5.2、战术:使用代码执行进行更准确的计算或调用外部 APITactic: Use code execution to perform more accurate calculations or call external APIs

| GPTs cannot be relied upon to perform arithmetic or long calculations accurately on their own. In cases where this is needed, a model can be instructed to write and run code instead of making its own calculations. In particular, a model can be instructed to put code that is meant to be run into a designated format such as triple backtics. After an output is produced, the code can be extracted and run. Finally, if necessary, the output from the code execution engine (i.e. Python interpreter) can be provided as an input to the model for the next query. | GPT 模型不能单独依赖于进行精确的算术运算或长时间的计算。在需要这样做的情况下,可以指示模型编写和运行代码,而不是进行自己的计算。特别是,可以指示模型将要运行的代码放入指定的格式(如三个反引号)。在产生输出之后,可以提取并运行代码。最后,如果需要,可以将代码执行引擎(如 Python 解释器)的输出作为下一个查询的模型输入。 |

| SYSTEM You can write and execute Python code by enclosing it in triple backticks, e.g. ```code goes here```. Use this to perform calculations. USER Find all real-valued roots of the following polynomial: 3*x**5 - 5*x**4 - 3*x**3 - 7*x - 10. | 系统 您可以使用三个反引号括起来的方式编写和执行 Python 代码,例如code goes here。使用它来进行计算。 用户 找到以下多项式的所有实根:3x**5 - 5x4 - 3*x3 - 7*x - 10。 |

| Another good use case for code execution is calling external APIs. If a model is instructed in the proper use of an API, it can write code that makes use of it. A model can be instructed in how to use an API by providing it with documentation and/or code samples showing how to use the API. | 使用代码执行的另一个很好的用例是调用外部 API。如果正确使用 API 的方式对模型进行了指示,它可以编写使用该 API 的代码。可以通过提供文档和/或展示如何使用 API 的代码示例来指示模型如何使用 API。 |

| SYSTEM You can write and execute Python code by enclosing it in triple backticks. Also note that you have access to the following module to help users send messages to their friends: ```python import message message.write(to="John", message="Hey, want to meetup after work?")``` | 系统 您可以使用三个反引号括起来的方式编写和执行 Python 代码。此外,请注意,您可以访问以下模块,以帮助用户向朋友发送消息: Python import message message.write(to="John", message="Hey, want to meetup after work?")``` |

| WARNING: Executing code produced by a model is not inherently safe and precautions should be taken in any application that seeks to do this. In particular, a sandboxed code execution environment is needed to limit the harm that untrusted code could cause. | 警告:执行模型生成的代码在本质上并不安全,因此在任何试图这样做的应用程序中应采取预防措施。特别是,需要使用沙箱式代码执行环境来限制不受信任的代码可能造成的损害。 |

6、策略:系统化地测试更改Strategy: Test changes systematically

| Sometimes it can be hard to tell whether a change — e.g., a new instruction or a new design — makes your system better or worse. Looking at a few examples may hint at which is better, but with small sample sizes it can be hard to distinguish between a true improvement or random luck. Maybe the change helps performance on some inputs, but hurts performance on others. | 有时候很难判断一项改变(如新指令或新设计)是否会使系统变得更好或更差。查看几个示例可能会暗示哪个更好,但是对于样本数量较小的情况下,很难区分是真正的改进还是偶然的运气。也许该改变在某些输入上有助于性能,但对其他输入有害。 |

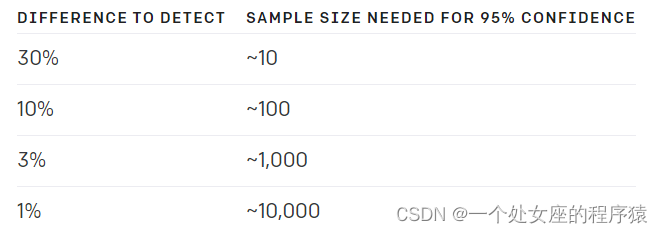

| Evaluation procedures (or "evals") are useful for optimizing system designs. Good evals are: >> Representative of real-world usage (or at least diverse) >> Contain many test cases for greater statistical power (see table below for guidelines) >> Easy to automate or repeat | 评估过程(或“评估”)对于优化系统设计是有用的。良好的评估应具备以下特点: >> 代表实际使用情况(或至少具备多样性); >> 包含许多测试案例以提高统计功效(有关准则的指导,请参见下表); >> 易于自动化或重复进行。 |

| Evaluation of outputs can be done by computers, humans, or a mix. Computers can automate evals with objective criteria (e.g., questions with single correct answers) as well as some subjective or fuzzy criteria, in which model outputs are evaluated by other model queries. OpenAI Evals is an open-source software framework that provides tools for creating automated evals. | 对输出进行评估可以由计算机、人类或两者混合进行。计算机可以通过客观标准自动化评估,例如具有单一正确答案的问题,以及一些主观或模糊的标准,在这些标准中,模型的输出由其他模型查询进行评估。OpenAI Evals 是一个开源软件框架,提供了创建自动化评估工具的工具。 |

| Model-based evals can be useful when there exists a range of possible outputs that would be considered equally high in quality (e.g. for questions with long answers). The boundary between what can be realistically evaluated with a model-based eval and what requires a human to evaluate is fuzzy and is constantly shifting as models become more capable. We encourage experimentation to figure out how well model-based evals can work for your use case. | 当存在一系列可能被视为质量相等的输出时(例如对于具有长答案的问题),基于模型的评估可以非常有用。在基于模型的评估和需要人类评估之间,可以进行模糊的界限,随着模型的能力增强,这个界限也在不断变化。我们鼓励进行实验,以了解基于模型的评估在您的使用情况下的可行性如何。 |

6.1、战术:根据黄金标准答案评估模型输出Tactic: Evaluate model outputs with reference to gold-standard answers

| Suppose it is known that the correct answer to a question should make reference to a specific set of known facts. Then we can use a model query to count how many of the required facts are included in the answer. For example, using the following system message: | 假设已知问题的正确答案应涉及特定的已知事实集。然后,我们可以使用模型查询来计算答案中包含了多少必需的事实。 例如,使用以下系统消息: |

| SYSTEM You will be provided with text delimited by triple quotes that is supposed to be the answer to a question. Check if the following pieces of information are directly contained in the answer: - Neil Armstrong was the first person to walk on the moon. - The date Neil Armstrong first walked on the moon was July 21, 1969. For each of these points perform the following steps: 1 - Restate the point. 2 - Provide a citation from the answer which is closest to this point. 3 - Consider if someone reading the citation who doesn't know the topic could directly infer the point. Explain why or why not before making up your mind. 4 - Write "yes" if the answer to 3 was yes, otherwise write "no". Finally, provide a count of how many "yes" answers there are. Provide this count as {"count": <insert count here>}. | 系统 您将获得由三个引号分隔的文本,这应该是一个问题的答案。检查以下信息是否直接包含在答案中: 尼尔·阿姆斯特朗是第一个登上月球的人。 尼尔·阿姆斯特朗首次登上月球的日期是1969年7月21日。 针对每个观点执行以下步骤: 1 - 重述观点。 2 - 提供与此观点最接近的答案中的引文。 3 - 考虑如果一个不了解该主题的人阅读引文是否能够直接推断出观点。在下定决心之前解释为什么或为什么不。 4 - 如果问题3的答案是“是”,则写入“是”,否则写入“否”。 最后,提供“是”答案的计数。将此计数提供为{"count": <插入计数值>}。 |

| Here's an example input where both points are satisfied: SYSTEM <insert system message above> USER """Neil Armstrong is famous for being the first human to set foot on the Moon. This historic event took place on July 21, 1969, during the Apollo 11 mission.""" | 下面是一个满足两个观点的示例输入: 系统 <插入上述系统消息> 用户 """尼尔·阿姆斯特朗以成为第一位登上月球的人而闻名。这一历史事件发生在1969年7月21日,阿波罗11号任务期间。""" 下面是只满足一个观点的示例输入: |

| Here's an example input where only one point is satisfied: SYSTEM <insert system message above> USER """Neil Armstrong made history when he stepped off the lunar module, becoming the first person to walk on the moon.""" | 系统 <插入上述系统消息> 用户 """尼尔·阿姆斯特朗踏出登月舱时创造了历史,成为第一个踏上月球的人。""" |

| Here's an example input where none are satisfied: SYSTEM <insert system message above> USER """In the summer of '69, a voyage grand, Apollo 11, bold as legend's hand. Armstrong took a step, history unfurled, "One small step," he said, for a new world.""" | 下面是一个没有满足任何观点的示例输入: 系统 <插入上述系统消息> 用户 """在'69的夏天,一个伟大的航程, 阿波罗11号,勇敢如传说之手。 阿姆斯特朗迈出了一步,历史展开, 他说:“迈出一小步,为了一个新世界。”""" |

| There are many possible variants on this type of model-based eval. Consider the following variation which tracks the kind of overlap between the candidate answer and the gold-standard answer, and also tracks whether the candidate answer contradicts any part of the gold-standard answer. | 这种基于模型的评估类型有很多可能的变体。考虑以下变体,它跟踪候选答案与黄金标准答案之间的重叠类型,并跟踪候选答案是否与黄金标准答案的任何部分相矛盾。 |

| SYSTEM Use the following steps to respond to user inputs. Fully restate each step before proceeding. i.e. "Step 1: Reason...". Step 1: Reason step-by-step about whether the information in the submitted answer compared to the expert answer is either: disjoint, equal, a subset, a superset, or overlapping (i.e. some intersection but not subset/superset). Step 2: Reason step-by-step about whether the submitted answer contradicts any aspect of the expert answer. Step 3: Output a JSON object structured like: {"type_of_overlap": "disjoint" or "equal" or "subset" or "superset" or "overlapping", "contradiction": true or false} | 系统 使用以下步骤回答用户输入。在继续之前完整重述每个步骤。即“第1步:推理...”。 第1步:逐步推理提交的答案与专家答案之间的信息是:不交集、相等、子集、超集还是重叠(即一些交集但不是子集/超集)。 第2步:逐步推理提交的答案是否与专家答案的任何方面相矛盾。 第3步:输出一个结构化的JSON对象,如:{"type_of_overlap": "不交集"或"相等"或"子集"或"超集"或"重叠","contradiction": true或false} |

| Here's an example input with a substandard answer which nonetheless does not contradict the expert answer: SYSTEM <insert system message above> USER Question: """What event is Neil Armstrong most famous for and on what date did it occur? Assume UTC time.""" Submitted Answer: """Didn't he walk on the moon or something?""" Expert Answer: """Neil Armstrong is most famous for being the first person to walk on the moon. This historic event occurred on July 21, 1969.""" | 下面是一个答案质量不佳但仍然不与专家答案相矛盾的示例输入: 系统 <插入上述系统消息> 用户 问题:"""尼尔·阿姆斯特朗最出名的事件是什么,它发生在什么日期?假设为UTC时间。""" 提交的答案:"""难道他不是在月球上行走之类的吗?""" 专家答案:"""尼尔·阿姆斯特朗最出名的是成为第一个在月球上行走的人。这一历史事件发生在1969年7月21日。""" |

| Here's an example input with answer that directly contradicts the expert answer: SYSTEM <insert system message above> USER Question: """What event is Neil Armstrong most famous for and on what date did it occur? Assume UTC time.""" Submitted Answer: """On the 21st of July 1969, Neil Armstrong became the second person to walk on the moon, following after Buzz Aldrin.""" Expert Answer: """Neil Armstrong is most famous for being the first person to walk on the moon. This historic event occurred on July 21, 1969.""" | 下面是一个直接与专家答案相矛盾的答案的示例输入: 系统 <插入上述系统消息> 用户 问题:"""尼尔·阿姆斯特朗最出名的事件是什么,它发生在什么日期?假设为UTC时间。""" 提交的答案:"""在1969年7月21日,尼尔·阿姆斯特朗成为第二个踏上月球的人,紧随在Buzz Aldrin之后。""" 专家答案:"""尼尔·阿姆斯特朗最出名的是成为第一个在月球上行走的人。这一历史事件发生在1969年7月21日。""" |

| Here's an example input with a correct answer that also provides a bit more detail than is necessary: SYSTEM <insert system message above> USER Question: """What event is Neil Armstrong most famous for and on what date did it occur? Assume UTC time.""" Submitted Answer: """At approximately 02:56 UTC on July 21st 1969, Neil Armstrong became the first human to set foot on the lunar surface, marking a monumental achievement in human history.""" Expert Answer: """Neil Armstrong is most famous for being the first person to walk on the moon. This historic event occurred on July 21, 1969.""" | 下面是一个正确的答案,提供了比必要的更多细节的示例输入: 系统 <插入上述系统消息> 用户 问题:"""尼尔·阿姆斯特朗最出名的事件是什么,它发生在什么日期?假设为UTC时间。""" 提交的答案:"""大约在UTC时间02:56于1969年7月21日,尼尔·阿姆斯特朗成为第一个踏上月球表面的人,标志着人类历史上的重大成就。""" 专家答案:"""尼尔·阿姆斯特朗最出名的是成为第一个在月球上行走的人。这一历史事件发生在1969年7月21日。""" |

其他资源Other resources

| For more inspiration, visit the OpenAI Cookbook, which contains example code and also links to third-party resources such as: Prompting libraries & tools Prompting guides Video courses Papers on advanced prompting to improve reasoning | 有关更多灵感,请访问OpenAI Cookbook,其中包含示例代码,还提供了第三方资源的链接,例如: 提示库和工具 提示指南 视频课程 改进推理的高级提示论文 |