

Big Data from Beginner to Practice - HBase Development: Operating HBase with Java

Author: 你好赵伟 | 2024-05-16 21:57:36


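Ding-dong! These are 小啊呜's organized course notes. Writing things down beats relying on memory, and today is another day of progress. Let's keep leveling up together!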

I. About This Exercise

1. Overview

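Like Hadoop, HBase is written in Java. In this exercise we learn how to write Java code that operates on an HBase database: creating tables, adding data, reading data, and deleting tables.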

Lab environment:

- Hadoop 2.7
- JDK 8
- HBase 2.1.1
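All four levels below follow the same client lifecycle. A `Connection` is created from the configuration, and `Admin` (for DDL) or `Table` (for reads and writes) is obtained from it. Everything is closed when done. Per the HBase client documentation, a `Connection` is heavyweight and thread-safe, while `Admin` and `Table` are lightweight and not thread-safe.

The sketch below does not touch HBase. It uses a hypothetical `TrackedResource` class as a stand-in for those three types. It only shows that Java's try-with-resources closes resources in the reverse order they were opened, which is the same order the nested `try/finally` blocks in Level 1 achieve by hand.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for Connection, Admin or Table: it only records
// when it is closed and does not talk to HBase.
class TrackedResource implements AutoCloseable {
    private final String name;
    private final List<String> closeLog;

    TrackedResource(String name, List<String> closeLog) {
        this.name = name;
        this.closeLog = closeLog;
    }

    @Override
    public void close() {
        closeLog.add(name); // remember the order in which resources are closed
    }
}

class LifecycleDemo {
    // Opens resources in the order the levels below use them
    // (connection first, then admin and table) and returns the close order.
    static List<String> run() {
        List<String> closeLog = new ArrayList<>();
        try (TrackedResource connection = new TrackedResource("connection", closeLog);
             TrackedResource admin = new TrackedResource("admin", closeLog);
             TrackedResource table = new TrackedResource("table", closeLog)) {
            // real code would use admin/table here
        }
        return closeLog;
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints [table, admin, connection]
    }
}
```

Closing in reverse order means the `Table` and `Admin` are released before the `Connection` they depend on.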


2. All Tasks

II. Walkthrough

1. Level 1: Create Tables

```java
package step1;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.ColumnFamilyDescriptor;
import org.apache.hadoop.hbase.client.ColumnFamilyDescriptorBuilder;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.TableDescriptorBuilder;
import org.apache.hadoop.hbase.util.Bytes;

/**
 * Creates the {@code dept} and {@code emp} tables through the
 * {@code Connection}/{@code Admin} client API, using the HBase 2.x
 * descriptor builders.
 */
public class Task {

    public void createTable() throws Exception {
        /********* Begin *********/
        // Reads hbase-site.xml from the classpath (ZooKeeper quorum, etc.)
        Configuration config = HBaseConfiguration.create();
        Connection connection = ConnectionFactory.createConnection(config);
        try {
            // Admin performs DDL operations such as creating tables
            Admin admin = connection.getAdmin();
            try {
                // Table "dept" with one column family "data".
                // New API: the TableDescriptor is built with TableDescriptorBuilder.
                TableName tableName = TableName.valueOf("dept");
                TableDescriptorBuilder tableDescriptor = TableDescriptorBuilder.newBuilder(tableName);
                ColumnFamilyDescriptor family =
                        ColumnFamilyDescriptorBuilder.newBuilder(Bytes.toBytes("data")).build(); // build the column family
                tableDescriptor.setColumnFamily(family);    // attach the column family
                admin.createTable(tableDescriptor.build()); // create the table

                // Table "emp" with one column family "emp", built the same way
                TableName emp = TableName.valueOf("emp");
                TableDescriptorBuilder empDescriptor = TableDescriptorBuilder.newBuilder(emp);
                ColumnFamilyDescriptor empFamily =
                        ColumnFamilyDescriptorBuilder.newBuilder(Bytes.toBytes("emp")).build();
                empDescriptor.setColumnFamily(empFamily);
                admin.createTable(empDescriptor.build());
            } finally {
                admin.close();
            }
        } finally {
            connection.close();
        }
        /********* End *********/
    }
}
```


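Before running the evaluation, start the services from the command line, pressing Enter after each command:

```shell
start-dfs.sh     # start Hadoop (HDFS)
start-hbase.sh   # start HBase
```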


Evaluation:

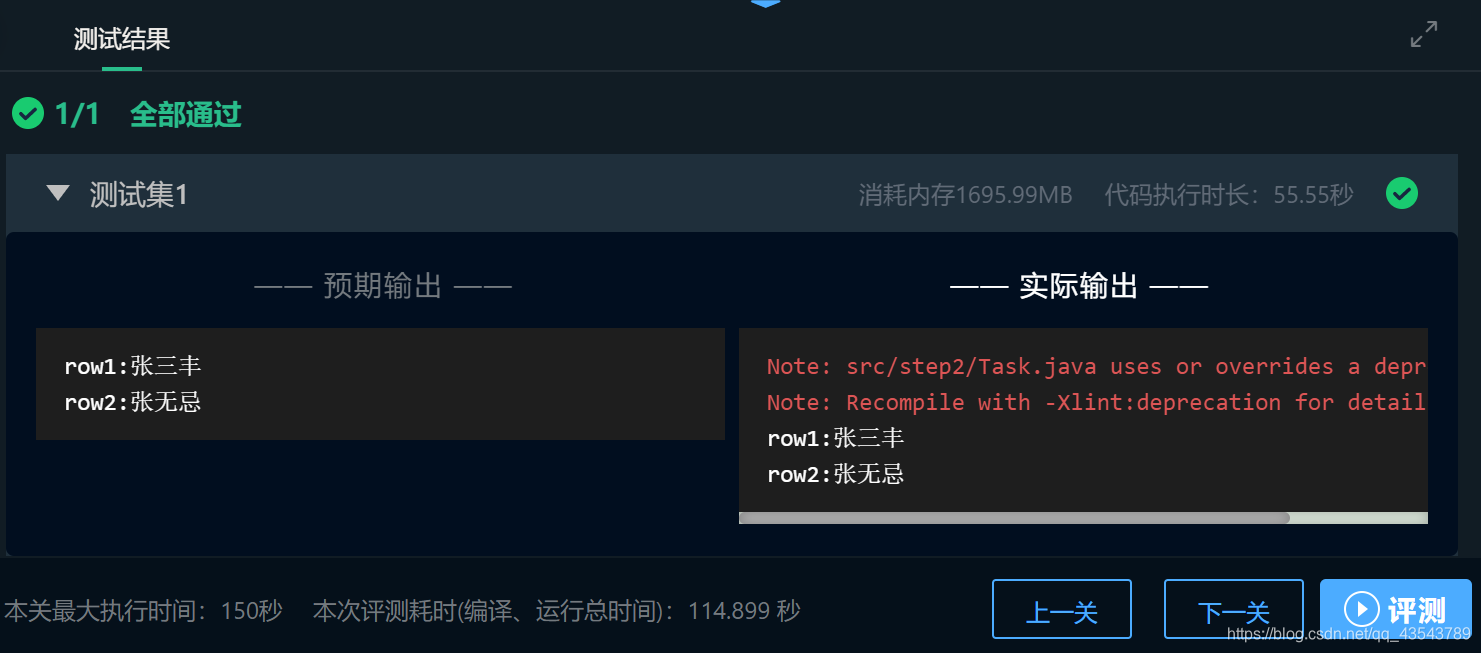
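Level 1 declares only table names and column families: `data` for `dept` and `emp` for `emp`. No individual columns are declared. In HBase the column families are part of the table schema, while column qualifiers are chosen freely on each write, and a write to a family the table does not have is rejected.

The toy model below is a sketch of that rule under stated assumptions. The `ToyTable` class is hypothetical and does not use the HBase API. It keeps cells as row key, then `family:qualifier`, then value.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

// Toy model of an HBase table schema, NOT the HBase client API.
class ToyTable {
    private final Set<String> families;
    // row key -> "family:qualifier" -> value, rows kept sorted by key
    private final Map<String, Map<String, String>> cells = new TreeMap<>();

    // Column families are fixed when the table is created
    ToyTable(String... families) {
        this.families = new HashSet<>(Arrays.asList(families));
    }

    // Any qualifier is accepted, but the family must have been declared
    void put(String row, String family, String qualifier, String value) {
        if (!families.contains(family)) {
            throw new IllegalArgumentException("undeclared column family: " + family);
        }
        cells.computeIfAbsent(row, k -> new TreeMap<>()).put(family + ":" + qualifier, value);
    }

    // Returns null when the row or the column does not exist
    String get(String row, String family, String qualifier) {
        Map<String, String> columns = cells.get(row);
        return columns == null ? null : columns.get(family + ":" + qualifier);
    }

    public static void main(String[] args) {
        ToyTable dept = new ToyTable("data");         // same shape as the "dept" table
        dept.put("row1", "data", "1", "value1");      // qualifier "1" was never declared, and that is fine
        System.out.println(dept.get("row1", "data", "1")); // prints value1
        try {
            dept.put("row1", "info", "name", "x");   // family "info" was never declared
        } catch (IllegalArgumentException e) {
            System.out.println(e.getMessage());
        }
    }
}
```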

2. Level 2: Add Data

```java
package step2;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.ColumnFamilyDescriptor;
import org.apache.hadoop.hbase.client.ColumnFamilyDescriptorBuilder;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.client.TableDescriptorBuilder;
import org.apache.hadoop.hbase.util.Bytes;

public class Task {

    public void insertInfo() throws Exception {
        /********* Begin *********/
        Configuration config = HBaseConfiguration.create();
        // try-with-resources closes the admin and connection even if an exception is thrown
        try (Connection connection = ConnectionFactory.createConnection(config);
             Admin admin = connection.getAdmin()) {
            // Create table "tb_step2" with one column family "data"
            TableName tableName = TableName.valueOf("tb_step2");
            TableDescriptorBuilder tableDescriptor = TableDescriptorBuilder.newBuilder(tableName);
            ColumnFamilyDescriptor family =
                    ColumnFamilyDescriptorBuilder.newBuilder(Bytes.toBytes("data")).build(); // build the column family
            tableDescriptor.setColumnFamily(family);    // attach the column family
            admin.createTable(tableDescriptor.build()); // create the table

            // Row "row1": column data:1 = 张三丰
            Put put1 = new Put(Bytes.toBytes("row1"));            // row key
            byte[] columnFamily1 = Bytes.toBytes("data");          // column family
            byte[] qualifier1 = Bytes.toBytes(String.valueOf(1));  // column qualifier "1"
            byte[] value1 = Bytes.toBytes("张三丰");                // cell value
            put1.addColumn(columnFamily1, qualifier1, value1);

            // Row "row2": column data:2 = 张无忌
            Put put2 = new Put(Bytes.toBytes("row2"));
            byte[] columnFamily2 = Bytes.toBytes("data");          // column family
            byte[] qualifier2 = Bytes.toBytes(String.valueOf(2));  // column qualifier "2"
            byte[] value2 = Bytes.toBytes("张无忌");                // cell value
            put2.addColumn(columnFamily2, qualifier2, value2);

            // Table is lightweight: obtain it from the connection and close it when done
            try (Table table = connection.getTable(tableName)) {
                table.put(put1);
                table.put(put2);
            }
        }
        /********* End *********/
    }
}
```


Evaluation:

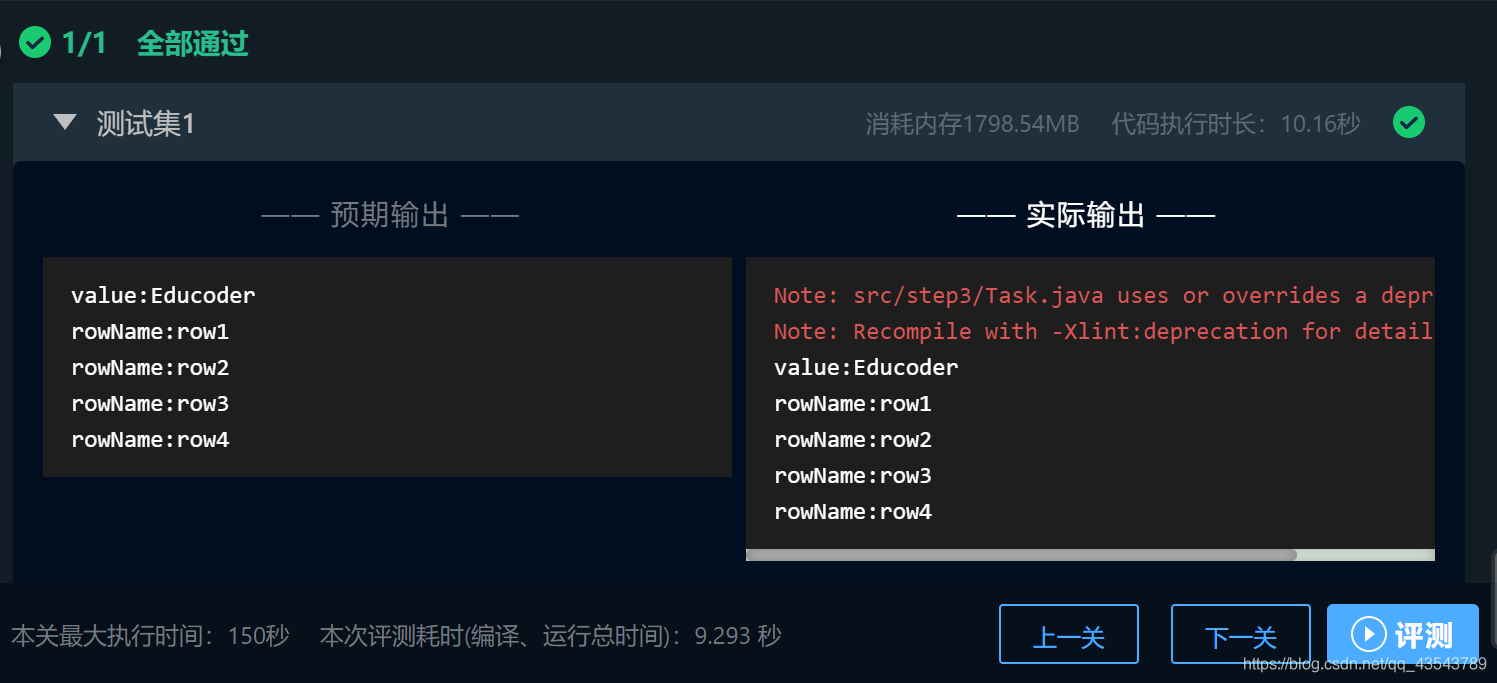
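Level 2 writes the qualifier as `Bytes.toBytes(String.valueOf(1))`, that is, the text "1". Level 3 reads it back with `Bytes.toBytes("1")`. The overload matters. Per the HBase `Bytes` documentation, `Bytes.toBytes(String)` produces UTF-8 bytes, while `Bytes.toBytes(int)` produces four big-endian bytes, so a lookup with `Bytes.toBytes(1)` would address a different column.

The sketch below reproduces both encodings with plain JDK classes. The `QualifierBytes` helper is hypothetical and not part of HBase. It also shows that a three-character Chinese value such as 张三丰 takes 9 bytes in UTF-8.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Plain-JDK stand-ins (assumed equivalent) for two HBase Bytes.toBytes overloads.
class QualifierBytes {
    // Like Bytes.toBytes(String): UTF-8 encoding of the text
    static byte[] fromString(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }

    // Like Bytes.toBytes(int): 4 bytes, big-endian (ByteBuffer's default order)
    static byte[] fromInt(int v) {
        return ByteBuffer.allocate(4).putInt(v).array();
    }

    public static void main(String[] args) {
        byte[] asString = fromString(String.valueOf(1)); // what Level 2 writes as the qualifier
        byte[] asInt = fromInt(1);                       // what Bytes.toBytes(1) would produce
        System.out.println(Arrays.toString(asString));   // prints [49]
        System.out.println(Arrays.toString(asInt));      // prints [0, 0, 0, 1]
        System.out.println(Arrays.equals(asString, asInt)); // prints false: different columns

        // The value written for row1, given as Unicode escapes to keep this source ASCII-only
        byte[] name = fromString("\u5F20\u4E09\u4E30");
        System.out.println(name.length); // prints 9: three CJK characters, 3 UTF-8 bytes each
    }
}
```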

3. Level 3: Get Data

```java
package step3;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.util.Bytes;

public class Task {

    public void queryTableInfo() throws Exception {
        /********* Begin *********/
        Configuration config = HBaseConfiguration.create();
        try (Connection connection = ConnectionFactory.createConnection(config)) {
            // Single-row read: fetch row "row1" from table "t_step3"
            try (Table table = connection.getTable(TableName.valueOf("t_step3"))) {
                Get get = new Get(Bytes.toBytes("row1")); // define the Get
                Result result = table.get(get);           // fetch the row through the Table
                // Usually only the value is needed: read the cell in column data:1
                // (family "data", qualifier "1"). It comes back as a byte array, or null if absent.
                byte[] valueBytes = result.getValue(Bytes.toBytes("data"), Bytes.toBytes("1"));
                if (valueBytes != null) {
                    // Bytes.toString decodes UTF-8, matching how Bytes.toBytes(String) encoded it
                    System.out.println("value:" + Bytes.toString(valueBytes));
                }
            }

            // Batch read: scan all of "table_step3" and print every row key
            try (Table step3Table = connection.getTable(TableName.valueOf("table_step3"));
                 ResultScanner scanner = step3Table.getScanner(new Scan())) {
                for (Result scannerResult : scanner) {
                    System.out.println("rowName:" + Bytes.toString(scannerResult.getRow()));
                }
            }
        }
        /********* End *********/
    }
}
```


Evaluation:

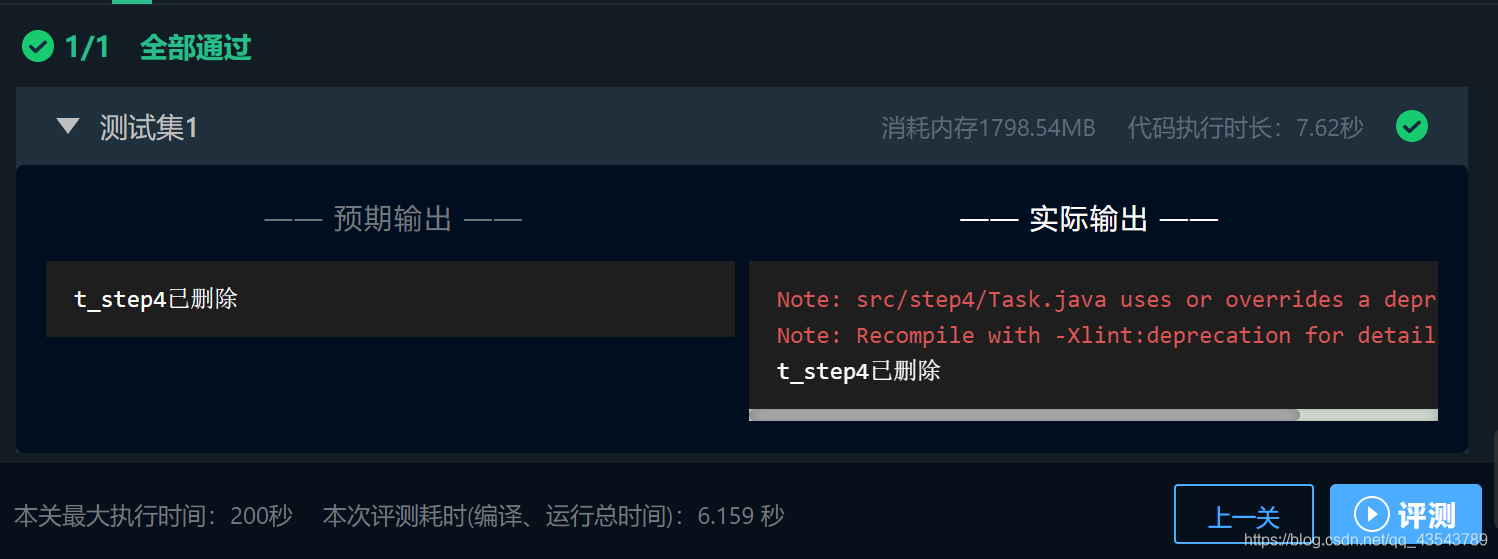
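Level 3 prints row keys in the order the `Scan` returns them. HBase keeps rows sorted by row key, comparing the key bytes lexicographically as unsigned values, so `row10` comes before `row2`.

The sketch below reproduces only that ordering rule in plain Java. The `RowOrder` class is hypothetical, and its comparator is written by hand to mirror the unsigned lexicographic comparison HBase's `Bytes.compareTo` performs. A common way to make lexicographic order match numeric order is to zero-pad the numeric part of keys, for example `row01`, `row02`, `row10`.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.TreeMap;

// Sketch of HBase row ordering: row keys compare as unsigned bytes, lexicographically.
class RowOrder {
    static final Comparator<byte[]> UNSIGNED_LEXICOGRAPHIC = (a, b) -> {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int x = a[i] & 0xff; // treat each byte as 0..255, not -128..127
            int y = b[i] & 0xff;
            if (x != y) {
                return Integer.compare(x, y);
            }
        }
        return Integer.compare(a.length, b.length); // a shorter prefix sorts first
    };

    // Returns the row keys in the order a full-table Scan would visit them
    static List<String> scanOrder(String... rowKeys) {
        TreeMap<byte[], String> sorted = new TreeMap<>(UNSIGNED_LEXICOGRAPHIC);
        for (String key : rowKeys) {
            sorted.put(key.getBytes(StandardCharsets.UTF_8), key);
        }
        return new ArrayList<>(sorted.values());
    }

    public static void main(String[] args) {
        System.out.println(scanOrder("row2", "row10", "row1"));    // prints [row1, row10, row2]
        System.out.println(scanOrder("row02", "row10", "row01")); // prints [row01, row02, row10]
    }
}
```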

4. Level 4: Delete a Table

```java
package step4;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;

public class Task {

    public void deleteTable() throws Exception {
        /********* Begin *********/
        Configuration config = HBaseConfiguration.create();
        // try-with-resources closes the admin and connection even if an exception is thrown
        try (Connection connection = ConnectionFactory.createConnection(config);
             Admin admin = connection.getAdmin()) {
            TableName tableName = TableName.valueOf("t_step4");
            if (admin.tableExists(tableName)) {
                // HBase only deletes disabled tables, so disable first (skip if already disabled)
                if (admin.isTableEnabled(tableName)) {
                    admin.disableTable(tableName);
                }
                admin.deleteTable(tableName);
            }
        }
        /********* End *********/
    }
}
```


Evaluation:
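Level 4 disables the table before deleting it because HBase enforces two state rules. Only a disabled table can be deleted, and only an enabled table can be disabled. In HBase, breaking these rules surfaces as `TableNotDisabledException` and `TableNotEnabledException` respectively.

The sketch below models just those two rules. The `ToyAdmin` class is hypothetical and is NOT the HBase `Admin` API, though its method names echo the real calls used above.

```java
import java.util.HashMap;
import java.util.Map;

// Toy model of table states, NOT the HBase Admin API.
class ToyAdmin {
    enum State { ENABLED, DISABLED }

    private final Map<String, State> tables = new HashMap<>();

    void createTable(String name) {
        tables.put(name, State.ENABLED); // new tables start out enabled
    }

    boolean tableExists(String name) {
        return tables.containsKey(name);
    }

    boolean isTableEnabled(String name) {
        return stateOf(name) == State.ENABLED;
    }

    // Only an enabled table can be disabled
    void disableTable(String name) {
        if (stateOf(name) != State.ENABLED) {
            throw new IllegalStateException(name + " is not enabled");
        }
        tables.put(name, State.DISABLED);
    }

    // Only a disabled table can be deleted
    void deleteTable(String name) {
        if (stateOf(name) != State.DISABLED) {
            throw new IllegalStateException(name + " must be disabled before it is deleted");
        }
        tables.remove(name);
    }

    private State stateOf(String name) {
        State state = tables.get(name);
        if (state == null) {
            throw new IllegalArgumentException("no such table: " + name);
        }
        return state;
    }

    public static void main(String[] args) {
        ToyAdmin admin = new ToyAdmin();
        admin.createTable("t_step4");
        try {
            admin.deleteTable("t_step4"); // still enabled, so this is refused
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
        admin.disableTable("t_step4");    // the order Level 4 uses: disable, then delete
        admin.deleteTable("t_step4");
        System.out.println(admin.tableExists("t_step4")); // prints false
    }
}
```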

Ending!

More course notes coming later!

That's all for now, bye!


Note:

Life rewards diligence; without seeking, what can be gained?

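Disclaimer: This article was contributed by a user and does not represent the position of 【wpsshop博客】 (wpsshop blog). Copyright belongs to the original author, and this site assumes no legal liability for it. If you find infringing content, please contact us. When reposting, please cite the source: https://www.wpsshop.cn/w/你好赵伟/article/detail/580655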
