- 1程序员入门培训班多少钱?可以学到哪些东西?_程序员培训班要多少钱

- 2jwt 介绍_怎么查看一个浏览器网页的jwt

- 32021年危险化学品生产单位安全生产管理人员考试题库及危险化学品生产单位安全生产管理人员考试内容_人体是导体,在静电场中可能接触起电

- 4Vivado工程经验与时序收敛技巧_vivado fix cell

- 5算法——动态规划_完全加括号是什么意思

- 6git代码库迁移保留commit历史_Git 如何迁移仓库并保留 commit 记录

- 7RK3328 Debian安装OpenMediaVault

- 8git 拉取代码时显示Filename too long的解决办法_git file name too long

- 9系统盘50G,数据盘100G(需求:将数据磁盘扩容至1T)_系统盘和数据盘配比

- 10WooCommerce入门指南:简介

从建表语句带你学习doris_表索引_doris 建表

赞

踩

1、doris建表概述

1.1、doris建表模板

- CREATE [EXTERNAL] TABLE [IF NOT EXISTS] [DATABASE.]table_name

- (

- column_definition1

- [,column_deinition2,......]

- [,index_definition1,[,index_definition2,]]

- )

- [ENGINE = [olap|mysql|broker|hive]]

- [key_desc]

- [COMMENT "table comment"];

- [partition_desc]

- [distribute_desc]

- [rollup_index]

- [PROPERTIES("key"="value",...)]

- [BROKER PROPERTIES("key"="value",...)];

1.2、doris建表结构

doris建表包含以下部分:

- 内外部表定义

- 数据列定义

- 索引字段定义

- 数据模型定义

- 执行引擎定义

- 数据分区定义

- 数据分桶定义

- 表属性定义

- broker属性定义

本篇博客我们来学习索引字段部分,索引字段对于提升表的查询性能具有重要的意义,本篇文章我们将介绍表索引作用、表索引类型、表索引基本原理、表索引实践案例四部分内容

2、doris建表剖析_索引介绍

索引是所有数据库系统提高查询性能的一个重要手段,简单的说其作用相当于我们查询汉语词典的目录,通过部首或者拼音快速帮助我们定位到某个汉字。索引通过一定的组织格式能够快速定位到数据表中的数据。doris目前支持两种索引:

- 内建的智能索引,包括前缀索引和 ZoneMap 索引。

- 用户手动创建的二级索引,包括 倒排索引、 bloomfilter索引 和 ngram bloomfilter索引 。

3、前缀索引详解

3.1、前缀索引背景介绍

不同于传统的数据库设计,Doris 不支持在任意列上创建索引。Doris 这类 MPP 架构的 OLAP 数据库,通常都是通过提高并发,来处理大量数据的。本质上Doris 的数据存储在类似 SSTable(Sorted String Table)的数据结构中。该结构是一种有序的数据结构,可以按照指定的列进行排序存储。在这种数据结构上,以排序列作为条件进行查找,会非常的高效。在 Aggregate、Unique 和 Duplicate 三种数据模型中。底层的数据存储,是按照各自建表语句中,AGGREGATE KEY、UNIQUE KEY 和 DUPLICATE KEY 中指定的列进行排序存储的。前缀索引,即在排序的基础上,实现的一种根据给定前缀列,快速查询数据的索引方式。

3.2、前缀索引案例讲解

我们将一行数据的前 36 个字节 作为这行数据的前缀索引。当遇到 VARCHAR 类型时,前缀索引会直接截断。我们举例说明:

-

以下表结构的前缀索引为 user_id(8 Bytes) + age(4 Bytes) + message(prefix 24 Bytes)。

ColumnName Type user_id BIGINT age INT message VARCHAR(100) max_dwell_time DATETIME min_dwell_time DATETIME -

以下表结构的前缀索引为 user_name(20 Bytes)。即使没有达到 36 个字节,因为遇到 VARCHAR,所以直接截断,不再往后继续。

ColumnName Type user_name VARCHAR(20) age INT message VARCHAR(100) max_dwell_time DATETIME min_dwell_time DATETIME

当我们的查询条件,是前缀索引的前缀时,可以极大的加快查询速度。比如在第一个例子中,我们执行如下查询:

- --查询方式1

- SELECT * FROM table WHERE user_id=1829239 and age=20;

-

- --查询方式2

- SELECT * FROM table WHERE age=20;

查找速度方面,查询方式1会远高于查询方式2,所以在建表时,正确的选择列顺序,能够极大地提高查询效率。

3.3、前缀索引调整方法

由于建表时已指定列顺序,所以一个表只有一种前缀索引。这对于使用其他不能命中前缀索引的列作为条件进行的查询来说,效率上可能无法满足需求。因此,我们可以通过创建 ROLLUP 来人为的调整列顺序。详情可参考 ROLLUP。

4、zonemap索引详解

ZoneMap 索引是在列存格式上,对每一列自动维护的索引信息,包括 Min/Max,Null 值个数等等。这种索引对用户透明,我们在日常并不直接使用。

5、倒排索引详解

从2.0.0版本开始,Doris支持倒排索引,可以用来进行文本类型的全文检索、普通数值日期类型的等值范围查询,快速从海量数据中过滤出满足条件的行。接下来我们主要介绍倒排索引实现原理、如何倒排索引的创建、删除、查询等使用方式。

5.1、 原理介绍

倒排索引是信息检索领域常用的索引技术,其本质是将文本分割成一个个词,构建 词 -> 文档编号 的索引,可以快速查找一个词在哪些文档出现。在Doris的倒排索引实现中,table的一行对应一个文档、一列对应文档中的一个字段,因此利用倒排索引可以根据关键词快速定位包含它的行,达到WHERE子句加速的目的。且与Doris中其他索引不同的是,在存储层倒排索引使用独立的文件,跟segment文件有逻辑对应关系、但存储的文件相互独立。这样的好处是可以做到创建、删除索引不用重写tablet和segment文件,大幅降低处理开销。

5.2、功能介绍

Doris倒排索引的功能简要介绍如下:

- 增加了字符串类型的全文检索

- 支持字符串全文检索,包括同时匹配多个关键字MATCH_ALL、匹配任意一个关键字MATCH_ANY

- 支持短语查询 MATCH_PHRASE

- 支持短语+前缀 MATCH_PHRASE_PREFIX

- 支持正则查询 MATCH_REGEXP

- 支持字符串数组类型的全文检索

- 支持英文、中文以及Unicode多语言分词

- 加速普通等值、范围查询,覆盖bitmap索引的功能,可代替bitmap索引

- 支持字符串、数值、日期时间类型的 =, !=, >, >=, <, <= 快速过滤

- 支持字符串、数字、日期时间数组类型的 =, !=, >, >=, <, <=

- 支持完善的逻辑组合

- 新增索引对OR NOT逻辑的下推

- 支持多个条件的任意AND OR NOT组合

- 灵活、快速的索引管理

- 支持在创建表上定义倒排索引

- 支持在已有的表上增加倒排索引,而且支持增量构建倒排索引,无需重写表中的已有数据

- 支持删除已有表上的倒排索引,无需重写表中的已有数据

5.3、倒排索引属性介绍

5.3.1、分词器属性

parser指定分词器(默认不指定代表不分词)

- english是英文分词,适合被索引列是英文的情况,用空格和标点符号分词,性能高

- chinese是中文分词,适合被索引列主要是中文的情况,性能比english分词低

- unicode是多语言混合类型分词,适用于中英文混合、多语言混合的情况。它能够对邮箱前缀和后缀、IP地址以及字符数字混合进行分词,并且可以对中文按字符分词。

5.3.2、分词模式属性

目前parser = chinese时支持如下几种模式(默认coarse_grained):

- fine_grained:细粒度模式,倾向于分出比较短的词,比如 '武汉市长江大桥' 会分成 '武汉', '武汉市', '市长', '长江', '长江大桥', '大桥' 6个词

- coarse_grained:粗粒度模式,倾向于分出比较长的词,,比如 '武汉市长江大桥' 会分成 '武汉市' '长江大桥' 2个词

5.3.3、char_filter属性

- 功能主要在分词前对字符串提前处理

- char_filter_type:指定使用不同功能的char_filter(目前仅支持char_replace)

- char_replace 将pattern中每个char替换为一个replacement中的char

- char_filter_pattern:需要被替换掉的字符数组

- char_filter_replacement:替换后的字符数组,可以不用配置,默认为一个空格字符

- char_replace 将pattern中每个char替换为一个replacement中的char

5.3.4、lower_case属性

- lower_case: 是否将分词进行小写转换,从而在匹配的时候实现忽略大小写

- true: 转换小写

- false:不转换小写

5.3.5、ignore_above属性

- ignore_above:控制字符串是否建索引。

- 长度超过 ignore_above 设置的字符串(默认256字节)不会被索引。对于字符串数组,ignore_above 将分别应用于每个数组元素,长度超过 ignore_above 的字符串元素将不被索引。

5.3.6、support_phrase属性

用于指定索引是否支持MATCH_PHRASE短语查询加速

- true为支持,但是索引需要更多的存储空间

- false为不支持,更省存储空间,可以用MATCH_ALL查询多个关键字

- 默认false

- CREATE TABLE table_name

- (

- columns_difinition,

- INDEX idx_name1(column_name1) USING INVERTED [PROPERTIES("parser" = "english|unicode|chinese")] [COMMENT 'your comment']

- INDEX idx_name2(column_name2) USING INVERTED [PROPERTIES("parser" = "english|unicode|chinese")] [COMMENT 'your comment']

- INDEX idx_name3(column_name3) USING INVERTED [PROPERTIES("parser" = "chinese", "parser_mode" = "fine_grained|coarse_grained")] [COMMENT 'your comment']

- INDEX idx_name4(column_name4) USING INVERTED [PROPERTIES("parser" = "english|unicode|chinese", "support_phrase" = "true|false")] [COMMENT 'your comment']

- INDEX idx_name5(column_name4) USING INVERTED [PROPERTIES("char_filter_type" = "char_replace", "char_filter_pattern" = "._"), "char_filter_replacement" = " "] [COMMENT 'your comment']

- INDEX idx_name5(column_name4) USING INVERTED [PROPERTIES("char_filter_type" = "char_replace", "char_filter_pattern" = "._")] [COMMENT 'your comment']

- )

- table_properties;

5.4、使用限制

倒排索引在不同数据模型中有不同的使用限制:

- Aggregate 模型:只能为 Key 列建立倒排索引。

- Unique 模型:需要开启 merge on write 特性,开启后,可以为任意列建立倒排索引。

- Duplicate 模型:可以为任意列建立倒排索引。

- 已有表增加倒排索引

2.0-beta版本之前:

- -- 语法1

- CREATE INDEX idx_name ON table_name(column_name) USING INVERTED [PROPERTIES("parser" = "english|unicode|chinese")] [COMMENT 'your comment'];

- -- 语法2

- ALTER TABLE table_name ADD INDEX idx_name(column_name) USING INVERTED [PROPERTIES("parser" = "english|unicode|chinese")] [COMMENT 'your comment'];

2.0-beta版本(含2.0-beta)之后:

上述create/add index操作只对增量数据生成倒排索引,增加了BUILD INDEX的语法用于对存量数据加倒排索引:

- -- 语法1,默认给全表的存量数据加上倒排索引

- BUILD INDEX index_name ON table_name;

- -- 语法2,可指定partition,可指定一个或多个

- BUILD INDEX index_name ON table_name PARTITIONS(partition_name1, partition_name2);

5.5、倒排索引操作语法介绍

倒排索引定义的语法为:index index_name(column_name) using inverted [properties];

using inverted用于声明索引类型为倒排索引;properties用于声明倒排索引的额外属性,其属性主要包含分词器、分词模式、是否支持MATCH_PHRASE短语查询加速。

5.5.1、创建倒排索引

5.5.2、删除倒排索引

- -- 语法1

- DROP INDEX idx_name ON table_name;

-

- -- 语法2

- ALTER TABLE table_name DROP INDEX idx_name;

5.5.3、修改倒排索引

5.5.4、查询倒排索引

- SHOW BUILD INDEX [FROM db_name];

- -- 示例1,查看所有的BUILD INDEX任务进展

- SHOW BUILD INDEX;

- -- 示例2,查看指定table的BUILD INDEX任务进展

- SHOW BUILD INDEX where TableName = "table1";

取消 BUILD INDEX, 可通过以下语句进行

- CANCEL BUILD INDEX ON table_name;

- CANCEL BUILD INDEX ON table_name (job_id1,jobid_2,...);

- 利用倒排索引加速查询

- -- 1. 全文检索关键词匹配,通过MATCH_ANY MATCH_ALL完成

- SELECT * FROM table_name WHERE column_name MATCH_ANY | MATCH_ALL 'keyword1 ...';

-

- -- 1.1 logmsg中包含keyword1的行

- SELECT * FROM table_name WHERE logmsg MATCH_ANY 'keyword1';

-

- -- 1.2 logmsg中包含keyword1或者keyword2的行,后面还可以添加多个keyword

- SELECT * FROM table_name WHERE logmsg MATCH_ANY 'keyword1 keyword2';

-

- -- 1.3 logmsg中同时包含keyword1和keyword2的行,后面还可以添加多个keyword

- SELECT * FROM table_name WHERE logmsg MATCH_ALL 'keyword1 keyword2';

-

- -- 1.4 logmsg中同时包含keyword1和keyword2的行,并且按照keyword1在前,keyword2在后的顺序

- SELECT * FROM table_name WHERE logmsg MATCH_PHRASE 'keyword1 keyword2';

-

- -- 1.5 在保持词顺序的前提下,对最后一个词keyword2做前缀匹配,默认找50个前缀词(session变量inverted_index_max_expansions控制)

- SELECT * FROM table_name WHERE logmsg MATCH_PHRASE_PREFIX 'keyword1 keyword2';

-

- -- 1.6 如果只填一个词会退化为前缀查询,默认找50个前缀词(session变量inverted_index_max_expansions控制)

- SELECT * FROM table_name WHERE logmsg MATCH_PHRASE_PREFIX 'keyword1';

-

- -- 1.7 对分词后的词进行正则匹配,默认匹配50个(session变量inverted_index_max_expansions控制)

- SELECT * FROM table_name WHERE logmsg MATCH_REGEXP 'key*';

-

-

- -- 2. 普通等值、范围、IN、NOT IN,正常的SQL语句即可,例如

- SELECT * FROM table_name WHERE id = 123;

- SELECT * FROM table_name WHERE ts > '2023-01-01 00:00:00';

- SELECT * FROM table_name WHERE op_type IN ('add', 'delete');

- 分词函数

如果想检查分词实际效果或者对一段文本进行分词的话,可以使用tokenize函数

- mysql> SELECT TOKENIZE('武汉长江大桥','"parser"="chinese","parser_mode"="fine_grained"');

- +-----------------------------------------------------------------------------------+

- | tokenize('武汉长江大桥', '"parser"="chinese","parser_mode"="fine_grained"') |

- +-----------------------------------------------------------------------------------+

- | ["武汉", "武汉长江大桥", "长江", "长江大桥", "大桥"] |

- +-----------------------------------------------------------------------------------+

- 1 row in set (0.02 sec)

-

- mysql> SELECT TOKENIZE('武汉市长江大桥','"parser"="chinese","parser_mode"="fine_grained"');

- +--------------------------------------------------------------------------------------+

- | tokenize('武汉市长江大桥', '"parser"="chinese","parser_mode"="fine_grained"') |

- +--------------------------------------------------------------------------------------+

- | ["武汉", "武汉市", "市长", "长江", "长江大桥", "大桥"] |

- +--------------------------------------------------------------------------------------+

- 1 row in set (0.02 sec)

-

- mysql> SELECT TOKENIZE('武汉市长江大桥','"parser"="chinese","parser_mode"="coarse_grained"');

- +----------------------------------------------------------------------------------------+

- | tokenize('武汉市长江大桥', '"parser"="chinese","parser_mode"="coarse_grained"') |

- +----------------------------------------------------------------------------------------+

- | ["武汉市", "长江大桥"] |

- +----------------------------------------------------------------------------------------+

- 1 row in set (0.02 sec)

-

- mysql> SELECT TOKENIZE('I love CHINA','"parser"="english"');

- +------------------------------------------------+

- | tokenize('I love CHINA', '"parser"="english"') |

- +------------------------------------------------+

- | ["i", "love", "china"] |

- +------------------------------------------------+

- 1 row in set (0.02 sec)

-

- mysql> SELECT TOKENIZE('I love CHINA 我爱我的祖国','"parser"="unicode"');

- +-------------------------------------------------------------------+

- | tokenize('I love CHINA 我爱我的祖国', '"parser"="unicode"') |

- +-------------------------------------------------------------------+

- | ["i", "love", "china", "我", "爱", "我", "的", "祖", "国"] |

- +-------------------------------------------------------------------+

- 1 row in set (0.02 sec)

6、倒排索引实践案例

用hackernews 100万条数据展示倒排索引的创建、全文检索、普通查询,包括跟无索引的查询性能进行简单对比。

建表

- CREATE DATABASE test_inverted_index;

-

- USE test_inverted_index;

-

- -- 创建表的同时创建了comment的倒排索引idx_comment

- -- USING INVERTED 指定索引类型是倒排索引

- -- PROPERTIES("parser" = "english") 指定采用english分词,还支持"chinese"中文分词和"unicode"中英文多语言混合分词,如果不指定"parser"参数表示不分词

- CREATE TABLE hackernews_1m

- (

- `id` BIGINT,

- `deleted` TINYINT,

- `type` String,

- `author` String,

- `timestamp` DateTimeV2,

- `comment` String,

- `dead` TINYINT,

- `parent` BIGINT,

- `poll` BIGINT,

- `children` Array<BIGINT>,

- `url` String,

- `score` INT,

- `title` String,

- `parts` Array<INT>,

- `descendants` INT,

- INDEX idx_comment (`comment`) USING INVERTED PROPERTIES("parser" = "english") COMMENT 'inverted index for comment'

- )

- DUPLICATE KEY(`id`)

- DISTRIBUTED BY HASH(`id`) BUCKETS 10

- PROPERTIES ("replication_num" = "1");

-

导入数据

- 通过stream load导入数据

- wget https://doris-build-1308700295.cos.ap-beijing.myqcloud.com/regression/index/hacknernews_1m.csv.gz

-

- curl --location-trusted -u root: -H "compress_type:gz" -T hacknernews_1m.csv.gz http://127.0.0.1:8030/api/test_inverted_index/hackernews_1m/_stream_load

- {

- "TxnId": 2,

- "Label": "a8a3e802-2329-49e8-912b-04c800a461a6",

- "TwoPhaseCommit": "false",

- "Status": "Success",

- "Message": "OK",

- "NumberTotalRows": 1000000,

- "NumberLoadedRows": 1000000,

- "NumberFilteredRows": 0,

- "NumberUnselectedRows": 0,

- "LoadBytes": 130618406,

- "LoadTimeMs": 8988,

- "BeginTxnTimeMs": 23,

- "StreamLoadPutTimeMs": 113,

- "ReadDataTimeMs": 4788,

- "WriteDataTimeMs": 8811,

- "CommitAndPublishTimeMs": 38

- }

- SQL运行count()确认导入数据成功

- mysql> SELECT count() FROM hackernews_1m;

- +---------+

- | count() |

- +---------+

- | 1000000 |

- +---------+

- 1 row in set (0.02 sec)

查询

全文检索

- 用LIKE匹配计算comment中含有'OLAP'的行数,耗时0.18s

- mysql> SELECT count() FROM hackernews_1m WHERE comment LIKE '%OLAP%';

- +---------+

- | count() |

- +---------+

- | 34 |

- +---------+

- 1 row in set (0.18 sec)

- 用基于倒排索引的全文检索MATCH_ANY计算comment中含有'OLAP'的行数,耗时0.02s,加速9倍,在更大的数据集上效果会更加明显

- 这里结果条数的差异,是因为倒排索引对comment分词后,还会对词进行进行统一成小写等归一化处理,因此MATCH_ANY比LIKE的结果多一些

- mysql> SELECT count() FROM hackernews_1m WHERE comment MATCH_ANY 'OLAP';

- +---------+

- | count() |

- +---------+

- | 35 |

- +---------+

- 1 row in set (0.02 sec)

- 同样的对比统计'OLTP'出现次数的性能,0.07s vs 0.01s,由于缓存的原因LIKE和MATCH_ANY都有提升,倒排索引仍然有7倍加速

- mysql> SELECT count() FROM hackernews_1m WHERE comment LIKE '%OLTP%';

- +---------+

- | count() |

- +---------+

- | 48 |

- +---------+

- 1 row in set (0.07 sec)

-

- mysql> SELECT count() FROM hackernews_1m WHERE comment MATCH_ANY 'OLTP';

- +---------+

- | count() |

- +---------+

- | 51 |

- +---------+

- 1 row in set (0.01 sec)

- 同时出现'OLAP'和'OLTP'两个词,0.13s vs 0.01s,13倍加速

- 要求多个词同时出现时(AND关系)使用 MATCH_ALL 'keyword1 keyword2 ...'

- mysql> SELECT count() FROM hackernews_1m WHERE comment LIKE '%OLAP%' AND comment LIKE '%OLTP%';

- +---------+

- | count() |

- +---------+

- | 14 |

- +---------+

- 1 row in set (0.13 sec)

-

- mysql> SELECT count() FROM hackernews_1m WHERE comment MATCH_ALL 'OLAP OLTP';

- +---------+

- | count() |

- +---------+

- | 15 |

- +---------+

- 1 row in set (0.01 sec)

- 任意出现'OLAP'和'OLTP'其中一个词,0.12s vs 0.01s,12倍加速

- 只要求多个词任意一个或多个出现时(OR关系)使用 MATCH_ANY 'keyword1 keyword2 ...'

- mysql> SELECT count() FROM hackernews_1m WHERE comment LIKE '%OLAP%' OR comment LIKE '%OLTP%';

- +---------+

- | count() |

- +---------+

- | 68 |

- +---------+

- 1 row in set (0.12 sec)

-

- mysql> SELECT count() FROM hackernews_1m WHERE comment MATCH_ANY 'OLAP OLTP';

- +---------+

- | count() |

- +---------+

- | 71 |

- +---------+

- 1 row in set (0.01 sec)

普通等值、范围查询

- DataTime类型的列范围查询

- mysql> SELECT count() FROM hackernews_1m WHERE timestamp > '2007-08-23 04:17:00';

- +---------+

- | count() |

- +---------+

- | 999081 |

- +---------+

- 1 row in set (0.03 sec)

- 为timestamp列增加一个倒排索引

- -- 对于日期时间类型USING INVERTED,不用指定分词

- -- CREATE INDEX 是第一种建索引的语法,另外一种在后面展示

- mysql> CREATE INDEX idx_timestamp ON hackernews_1m(timestamp) USING INVERTED;

- Query OK, 0 rows affected (0.03 sec)

2.0-beta(含2.0-beta)后,需要再执行BUILD INDEX才能给存量数据加上倒排索引:

- mysql> BUILD INDEX idx_timestamp ON hackernews_1m;

- Query OK, 0 rows affected (0.01 sec)

- 查看索引创建进度,通过FinishTime和CreateTime的差值,可以看到100万条数据对timestamp列建倒排索引只用了1s

- mysql> SHOW ALTER TABLE COLUMN;

- +-------+---------------+-------------------------+-------------------------+---------------+---------+---------------+---------------+---------------+----------+------+----------+---------+

- | JobId | TableName | CreateTime | FinishTime | IndexName | IndexId | OriginIndexId | SchemaVersion | TransactionId | State | Msg | Progress | Timeout |

- +-------+---------------+-------------------------+-------------------------+---------------+---------+---------------+---------------+---------------+----------+------+----------+---------+

- | 10030 | hackernews_1m | 2023-02-10 19:44:12.929 | 2023-02-10 19:44:13.938 | hackernews_1m | 10031 | 10008 | 1:1994690496 | 3 | FINISHED | | NULL | 2592000 |

- +-------+---------------+-------------------------+-------------------------+---------------+---------+---------------+---------------+---------------+----------+------+----------+---------+

- 1 row in set (0.00 sec)

2.0-beta(含2.0-beta)后,可通过show builde index来查看存量数据创建索引进展:

- -- 若table没有分区,PartitionName默认就是TableName

- mysql> SHOW BUILD INDEX;

- +-------+---------------+---------------+----------------------------------------------------------+-------------------------+-------------------------+---------------+----------+------+----------+

- | JobId | TableName | PartitionName | AlterInvertedIndexes | CreateTime | FinishTime | TransactionId | State | Msg | Progress |

- +-------+---------------+---------------+----------------------------------------------------------+-------------------------+-------------------------+---------------+----------+------+----------+

- | 10191 | hackernews_1m | hackernews_1m | [ADD INDEX idx_timestamp (`timestamp`) USING INVERTED], | 2023-06-26 15:32:33.894 | 2023-06-26 15:32:34.847 | 3 | FINISHED | | NULL |

- +-------+---------------+---------------+----------------------------------------------------------+-------------------------+-------------------------+---------------+----------+------+----------+

- 1 row in set (0.04 sec)

- 索引创建后,范围查询用同样的查询方式,Doris会自动识别索引进行优化,但是这里由于数据量小性能差别不大

- mysql> SELECT count() FROM hackernews_1m WHERE timestamp > '2007-08-23 04:17:00';

- +---------+

- | count() |

- +---------+

- | 999081 |

- +---------+

- 1 row in set (0.01 sec)

- 在数值类型的列parent进行类似timestamp的操作,这里查询使用等值匹配

- mysql> SELECT count() FROM hackernews_1m WHERE parent = 11189;

- +---------+

- | count() |

- +---------+

- | 2 |

- +---------+

- 1 row in set (0.01 sec)

-

- -- 对于数值类型USING INVERTED,不用指定分词

- -- ALTER TABLE t ADD INDEX 是第二种建索引的语法

- mysql> ALTER TABLE hackernews_1m ADD INDEX idx_parent(parent) USING INVERTED;

- Query OK, 0 rows affected (0.01 sec)

-

- -- 2.0-beta(含2.0-beta)后,需要再执行BUILD INDEX才能给存量数据加上倒排索引:

- mysql> BUILD INDEX idx_parent ON hackernews_1m;

- Query OK, 0 rows affected (0.01 sec)

-

- mysql> SHOW ALTER TABLE COLUMN;

- +-------+---------------+-------------------------+-------------------------+---------------+---------+---------------+---------------+---------------+----------+------+----------+---------+

- | JobId | TableName | CreateTime | FinishTime | IndexName | IndexId | OriginIndexId | SchemaVersion | TransactionId | State | Msg | Progress | Timeout |

- +-------+---------------+-------------------------+-------------------------+---------------+---------+---------------+---------------+---------------+----------+------+----------+---------+

- | 10030 | hackernews_1m | 2023-02-10 19:44:12.929 | 2023-02-10 19:44:13.938 | hackernews_1m | 10031 | 10008 | 1:1994690496 | 3 | FINISHED | | NULL | 2592000 |

- | 10053 | hackernews_1m | 2023-02-10 19:49:32.893 | 2023-02-10 19:49:33.982 | hackernews_1m | 10054 | 10008 | 1:378856428 | 4 | FINISHED | | NULL | 2592000 |

- +-------+---------------+-------------------------+-------------------------+---------------+---------+---------------+---------------+---------------+----------+------+----------+---------+

-

- mysql> SHOW BUILD INDEX;

- +-------+---------------+---------------+----------------------------------------------------+-------------------------+-------------------------+---------------+----------+------+----------+

- | JobId | TableName | PartitionName | AlterInvertedIndexes | CreateTime | FinishTime | TransactionId | State | Msg | Progress |

- +-------+---------------+---------------+----------------------------------------------------+-------------------------+-------------------------+---------------+----------+------+----------+

- | 11005 | hackernews_1m | hackernews_1m | [ADD INDEX idx_parent (`parent`) USING INVERTED], | 2023-06-26 16:25:10.167 | 2023-06-26 16:25:10.838 | 1002 | FINISHED | | NULL |

- +-------+---------------+---------------+----------------------------------------------------+-------------------------+-------------------------+---------------+----------+------+----------+

- 1 row in set (0.01 sec)

-

- mysql> SELECT count() FROM hackernews_1m WHERE parent = 11189;

- +---------+

- | count() |

- +---------+

- | 2 |

- +---------+

- 1 row in set (0.01 sec)

- 对字符串类型的author建立不分词的倒排索引,等值查询也可以利用索引加速

- mysql> SELECT count() FROM hackernews_1m WHERE author = 'faster';

- +---------+

- | count() |

- +---------+

- | 20 |

- +---------+

- 1 row in set (0.03 sec)

-

- -- 这里只用了USING INVERTED,不对author分词,整个当做一个词处理

- mysql> ALTER TABLE hackernews_1m ADD INDEX idx_author(author) USING INVERTED;

- Query OK, 0 rows affected (0.01 sec)

-

- -- 2.0-beta(含2.0-beta)后,需要再执行BUILD INDEX才能给存量数据加上倒排索引:

- mysql> BUILD INDEX idx_author ON hackernews_1m;

- Query OK, 0 rows affected (0.01 sec)

-

- -- 100万条author数据增量建索引仅消耗1.5s

- mysql> SHOW ALTER TABLE COLUMN;

- +-------+---------------+-------------------------+-------------------------+---------------+---------+---------------+---------------+---------------+----------+------+----------+---------+

- | JobId | TableName | CreateTime | FinishTime | IndexName | IndexId | OriginIndexId | SchemaVersion | TransactionId | State | Msg | Progress | Timeout |

- +-------+---------------+-------------------------+-------------------------+---------------+---------+---------------+---------------+---------------+----------+------+----------+---------+

- | 10030 | hackernews_1m | 2023-02-10 19:44:12.929 | 2023-02-10 19:44:13.938 | hackernews_1m | 10031 | 10008 | 1:1994690496 | 3 | FINISHED | | NULL | 2592000 |

- | 10053 | hackernews_1m | 2023-02-10 19:49:32.893 | 2023-02-10 19:49:33.982 | hackernews_1m | 10054 | 10008 | 1:378856428 | 4 | FINISHED | | NULL | 2592000 |

- | 10076 | hackernews_1m | 2023-02-10 19:54:20.046 | 2023-02-10 19:54:21.521 | hackernews_1m | 10077 | 10008 | 1:1335127701 | 5 | FINISHED | | NULL | 2592000 |

- +-------+---------------+-------------------------+-------------------------+---------------+---------+---------------+---------------+---------------+----------+------+----------+---------+

-

- mysql> SHOW BUILD INDEX order by CreateTime desc limit 1;

- +-------+---------------+---------------+----------------------------------------------------+-------------------------+-------------------------+---------------+----------+------+----------+

- | JobId | TableName | PartitionName | AlterInvertedIndexes | CreateTime | FinishTime | TransactionId | State | Msg | Progress |

- +-------+---------------+---------------+----------------------------------------------------+-------------------------+-------------------------+---------------+----------+------+----------+

- | 13006 | hackernews_1m | hackernews_1m | [ADD INDEX idx_author (`author`) USING INVERTED], | 2023-06-26 17:23:02.610 | 2023-06-26 17:23:03.755 | 3004 | FINISHED | | NULL |

- +-------+---------------+---------------+----------------------------------------------------+-------------------------+-------------------------+---------------+----------+------+----------+

- 1 row in set (0.01 sec)

-

- -- 创建索引后,字符串等值匹配也有明显加速

- mysql> SELECT count() FROM hackernews_1m WHERE author = 'faster';

- +---------+

- | count() |

- +---------+

- | 20 |

- +---------+

6、bloomfilter索引详解

BloomFilter是由Bloom在1970年提出的一种多哈希函数映射的快速查找算法。通常应用在一些需要快速判断某个元素是否属于集合,但是并不严格要求100%正确的场合,BloomFilter有以下特点:

- 空间效率高的概率型数据结构,用来检查一个元素是否在一个集合中。

- 对于一个元素检测是否存在的调用,BloomFilter会告诉调用者两个结果之一:可能存在或者一定不存在。

- 缺点是存在误判,告诉你可能存在,不一定真实存在。

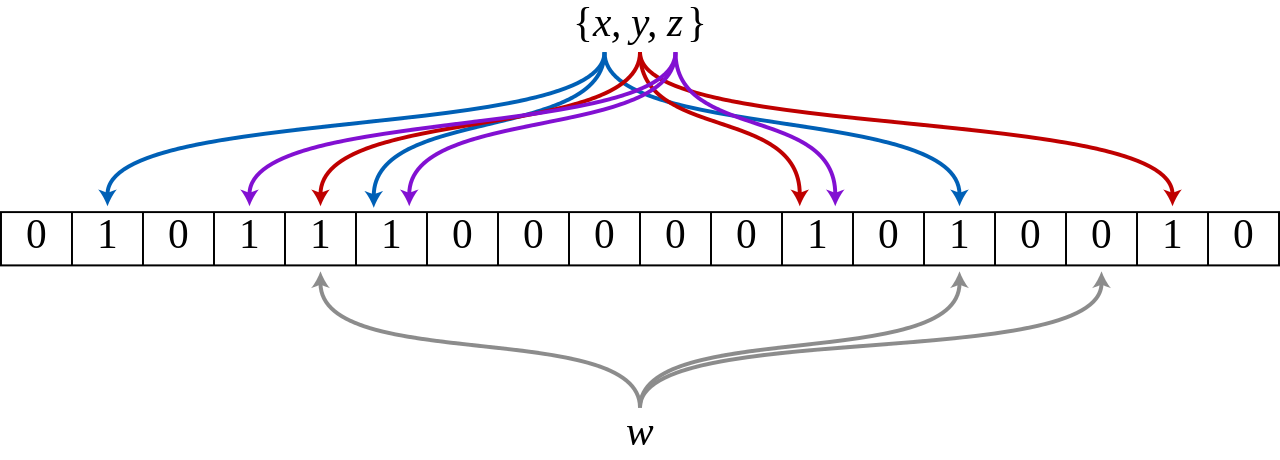

布隆过滤器实际上是由一个超长的二进制位数组和一系列的哈希函数组成。二进制位数组初始全部为0,当给定一个待查询的元素时,这个元素会被一系列哈希函数计算映射出一系列的值,所有的值在位数组的偏移量处置为1。

下图所示出一个 m=18, k=3 (m是该Bit数组的大小,k是Hash函数的个数)的Bloom Filter示例。集合中的 x、y、z 三个元素通过 3 个不同的哈希函数散列到位数组中。当查询元素w时,通过Hash函数计算之后因为有一个比特为0,因此w不在该集合中。

那么怎么判断某个元素是否在集合中呢?同样是这个元素经过哈希函数计算后得到所有的偏移位置,若这些位置全都为1,则判断这个元素在这个集合中,若有一个不为1,则判断这个元素不在这个集合中。就是这么简单!

Doris BloomFilter索引及使用使用场景

举个例子:如果要查找一个占用100字节存储空间大小的短行,一个64KB的HFile数据块应该包含(64 * 1024)/100 = 655.53 = ~700行,如果仅能在整个数据块的起始行键上建立索引,那么它是无法给你提供细粒度的索引信息的。因为要查找的行数据可能会落在该数据块的行区间上,也可能行数据没在该数据块上,也可能是表中根本就不存在该行数据,也或者是行数据在另一个HFile里,甚至在MemStore里。以上这几种情况,都会导致从磁盘读取数据块时带来额外的IO开销,也会滥用数据块的缓存,当面对一个巨大的数据集且处于高并发读时,会严重影响性能。

因此,HBase提供了布隆过滤器,它允许你对存储在每个数据块的数据做一个反向测试。当某行被请求时,通过布隆过滤器先检查该行是否不在这个数据块,布隆过滤器要么确定回答该行不在,要么回答它不知道。这就是为什么我们称它是反向测试。布隆过滤器同样也可以应用到行里的单元上,当访问某列标识符时可以先使用同样的反向测试。

但布隆过滤器也不是没有代价。存储这个额外的索引层次会占用额外的空间。布隆过滤器随着它们的索引对象数据增长而增长,所以行级布隆过滤器比列标识符级布隆过滤器占用空间要少。当空间不是问题时,它们可以帮助你榨干系统的性能潜力。 Doris的BloomFilter索引可以通过建表的时候指定,或者通过表的ALTER操作来完成。Bloom Filter本质上是一种位图结构,用于快速的判断一个给定的值是否在一个集合中。这种判断会产生小概率的误判。即如果返回false,则一定不在这个集合内。而如果范围true,则有可能在这个集合内。

BloomFilter索引也是以Block为粒度创建的。每个Block中,指定列的值作为一个集合生成一个BloomFilter索引条目,用于在查询是快速过滤不满足条件的数据。

下面我们通过实例来看看Doris怎么创建BloomFilter索引。

创建BloomFilter索引

Doris BloomFilter索引的创建是通过在建表语句的PROPERTIES里加上"bloom_filter_columns"="k1,k2,k3",这个属性,k1,k2,k3是你要创建的BloomFilter索引的Key列名称,例如下面我们对表里的saler_id,category_id创建了BloomFilter索引。

- CREATE TABLE IF NOT EXISTS sale_detail_bloom (

- sale_date date NOT NULL COMMENT "销售时间",

- customer_id int NOT NULL COMMENT "客户编号",

- saler_id int NOT NULL COMMENT "销售员",

- sku_id int NOT NULL COMMENT "商品编号",

- category_id int NOT NULL COMMENT "商品分类",

- sale_count int NOT NULL COMMENT "销售数量",

- sale_price DECIMAL(12,2) NOT NULL COMMENT "单价",

- sale_amt DECIMAL(20,2) COMMENT "销售总金额"

- )

- Duplicate KEY(sale_date, customer_id,saler_id,sku_id,category_id)

- PARTITION BY RANGE(sale_date)

- (

- PARTITION P_202111 VALUES [('2021-11-01'), ('2021-12-01'))

- )

- DISTRIBUTED BY HASH(saler_id) BUCKETS 10

- PROPERTIES (

- "replication_num" = "3",

- "bloom_filter_columns"="saler_id,category_id",

- "dynamic_partition.enable" = "true",

- "dynamic_partition.time_unit" = "MONTH",

- "dynamic_partition.time_zone" = "Asia/Shanghai",

- "dynamic_partition.start" = "-2147483648",

- "dynamic_partition.end" = "2",

- "dynamic_partition.prefix" = "P_",

- "dynamic_partition.replication_num" = "3",

- "dynamic_partition.buckets" = "3"

- );

查看BloomFilter索引

查看我们在表上建立的BloomFilter索引是使用:

SHOW CREATE TABLE <table_name>;

删除BloomFilter索引

删除索引即为将索引列从bloom_filter_columns属性中移除:

ALTER TABLE <db.table_name> SET ("bloom_filter_columns" = "");

修改BloomFilter索引

修改索引即为修改表的bloom_filter_columns属性:

ALTER TABLE <db.table_name> SET ("bloom_filter_columns" = "k1,k3");

Doris BloomFilter使用场景

满足以下几个条件时可以考虑对某列建立Bloom Filter 索引:

- 首先BloomFilter适用于非前缀过滤。

- 查询会根据该列高频过滤,而且查询条件大多是 in 和 = 过滤。

- BloomFilter适用于高基数列。比如UserID。因为如果创建在低基数的列上,比如 “性别” 列,则每个Block几乎都会包含所有取值,导致BloomFilter索引失去意义。

Doris BloomFilter使用注意事项

- 不支持对Tinyint、Float、Double 类型的列建Bloom Filter索引。

- Bloom Filter索引只对 in 和 = 过滤查询有加速效果。

- 如果要查看某个查询是否命中了Bloom Filter索引,可以通过查询的Profile信息查看。

7、ngram bloomfilter索引详解

NGram BloomFilter 索引

SinceVersion 2.0.0

为了提升like的查询性能,增加了NGram BloomFilter索引。

NGram BloomFilter创建

表创建时指定:

- CREATE TABLE `table3` (

- `siteid` int(11) NULL DEFAULT "10" COMMENT "",

- `citycode` smallint(6) NULL COMMENT "",

- `username` varchar(32) NULL DEFAULT "" COMMENT "",

- INDEX idx_ngrambf (`username`) USING NGRAM_BF PROPERTIES("gram_size"="3", "bf_size"="256") COMMENT 'username ngram_bf index'

- ) ENGINE=OLAP

- AGGREGATE KEY(`siteid`, `citycode`, `username`) COMMENT "OLAP"

- DISTRIBUTED BY HASH(`siteid`) BUCKETS 10

- PROPERTIES (

- "replication_num" = "1"

- );

-

- -- PROPERTIES("gram_size"="3", "bf_size"="256"),分别表示gram的个数和bloom filter的字节数。

- -- gram的个数跟实际查询场景相关,通常设置为大部分查询字符串的长度,bloom filter字节数,可以通过测试得出,通常越大过滤效果越好,可以从256开始进行验证测试看看效果。当然字节数越大也会带来索引存储、内存cost上升。

- -- 如果数据基数比较高,字节数可以不用设置过大,如果基数不是很高,可以通过增加字节数来提升过滤效果。

查看NGram BloomFilter索引

查看我们在表上建立的NGram BloomFilter索引是使用:

show index from example_db.table3;

删除NGram BloomFilter索引

alter table example_db.table3 drop index idx_ngrambf;

修改NGram BloomFilter索引

为已有列新增NGram BloomFilter索引:

alter table example_db.table3 add index idx_ngrambf(username) using NGRAM_BF PROPERTIES("gram_size"="2", "bf_size"="512")comment 'username ngram_bf index'

Doris NGram BloomFilter使用注意事项

- NGram BloomFilter只支持字符串列

- NGram BloomFilter索引和BloomFilter索引为互斥关系,即同一个列只能设置两者中的一个

- NGram大小和BloomFilter的字节数,可以根据实际情况调优,如果NGram比较小,可以适当增加BloomFilter大小

- 如果要查看某个查询是否命中了NGram Bloom Filter索引,可以通过查询的Profile信息查看