- 1原生微信小程序全流程(基础知识+项目全流程)_微信小程序原生开发

- 2【转载】EEG中常用的功能连接指标汇总_eegx

- 3MyBatis全局配置属性_mybatis org.apache.ibatis.parsing.propertyparser.e

- 4在windows通过VS Code开发Linux内核驱动程序_vscode如何编译虚拟机的linux驱动

- 5LaTeX绘图GUI工具介绍_latex draw

- 6第一次机器学习经历_tensorflow how_many_training_steps

- 7史上最全的Unity面试题(含答案)_unity 面试题

- 8(二进制安装)k8s1.9 证书过期及开启自动续期方案.md_10250端口证书过期了怎么处理呢

- 9Android Canvas的使用_android canvas.drawcolor

- 10软考中级系统集成项目管理工程师自学好不好过,怎么备考,给点经验

kubernetes K8s的监控系统Prometheus安装使用(一)

赞

踩

简单介绍

Prometheus 是一款基于时序数据库的开源监控告警系统,非常适合Kubernetes集群的监控。Prometheus的基本原理是通过HTTP协议周期性抓取被监控组件的状态,任意组件只要提供对应的HTTP接口就可以接入监控。不需要任何SDK或者其他的集成过程。这样做非常适合做虚拟化环境监控系统,比如VM、Docker、Kubernetes等。输出被监控组件信息的HTTP接口被叫做exporter 。目前互联网公司常用的组件大部分都有exporter可以直接使用,比如Varnish、Haproxy、Nginx、MySQL、Linux系统信息(包括磁盘、内存、CPU、网络等等)。

安装K8s

这次我们安装的版本是K8s是20.10.7 ,docker是20.10.5。 这里分配三台机器,分别是master(10.10.162.35),worker1(10.10.162.36),worker2(10.10.162.37)

关闭防火墙,selinux

setenforce 0

systemctl stop firewalld.service ; systemctl disable firewalld.service

- 1

- 2

1.安装docker

三台机器分别安装

yum -y install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager \

--add-repo \

https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

安装docker

yum install docker-ce-20.10.5 docker-ce-cli-20.10.5 docker-ce-rootless-extras-20.10.5 -y

//启动docker

systemctl start docker

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

修改docker的镜像源为国内镜像,修改/etc/docker/daemon.json

{

"registry-mirrors": ["https://mirror.ccs.tencentyun.com"]

}

- 1

- 2

- 3

修改完成后,重启docker

systemctl daemon-reload

systemctl restart docker.service

- 1

- 2

2.安装K8S

配置阿里云kubernetes源

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum clean all

yum -y makecache

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

新增br_netfilter模块,要求iptables对bridge的数据进行处理

modprobe br_netfilter

echo "1" > /proc/sys/net/bridge/bridge-nf-call-iptables

echo "1" > /proc/sys/net/ipv4/ip_forward

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl -p /etc/sysctl.d/k8s.conf

禁用swap

swapoff -a

sed -i.bak '/swap/s/^/#/' /etc/fstab

安装20版本k8s,三台机器都开始安装

yum install -y kubelet-1.20.9 kubeadm-1.20.9 kubectl-1.20.9

mkdir -p /etc/cni/net.d

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

分别设置三台主机的hostname

master主机设置

hostnamectl set-hostname master

worker主机设置

hostnamectl set-hostname worker1

worker2主机设置

hostnamectl set-hostname worker2

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

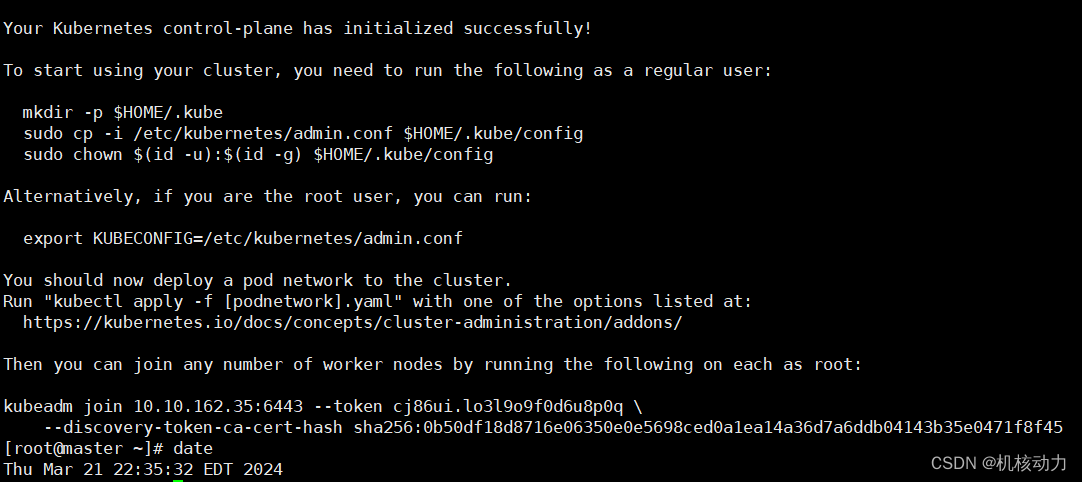

初始化Master主机

#service-cidr设置的service的主机ip范围,pod-network-cidr设置的是pod的ip范围,为什么要用10.244这个网段呢,因为10.244.0.0/16 这个网段这是flannel指定的网段.

kubeadm init --kubernetes-version=1.20.9 --apiserver-advertise-address=10.10.162.35 --image-repository registry.aliyuncs.com/google_containers --service-cidr=10.1.0.0/16 --pod-network-cidr=10.244.0.0/16

- 1

- 2

- 3

- 4

运行完成后会master主机会显示如下内容,代表主节点初始化成功。

这里有一个语句特别要记住,这是工作节点主机加入master的关键执行命令。

kubeadm join 10.10.162.35:6443 --token cj86ui.lo3l9o9f0d6u8p0q \

--discovery-token-ca-cert-hash sha256:0b50df18d8716e06350e0e5698ced0a1ea14a36d7a6ddb04143b35e0471f8f45

- 1

- 2

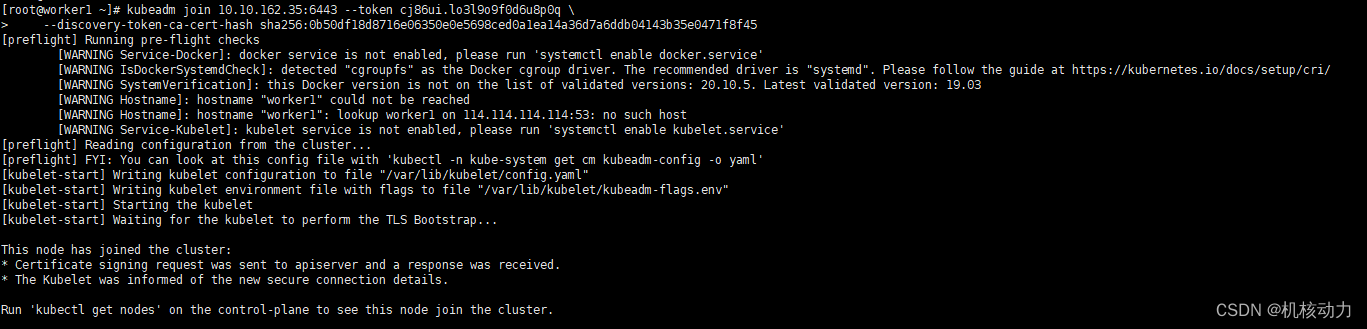

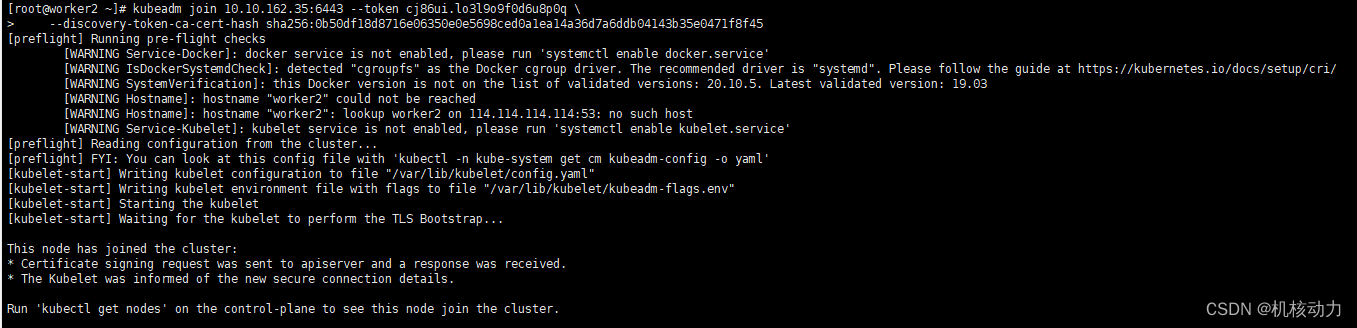

接下来的worker1,worker2分别运行上面的语句加入master主节点集群

worker1加入

worker2加入

master主节点增加kubectl密钥访问

mkdir -p $HOME/.kube

/usr/bin/cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

- 1

- 2

- 3

- 4

- 5

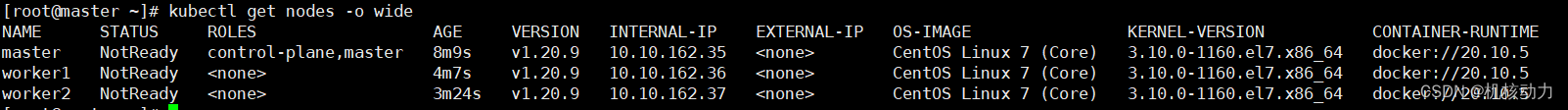

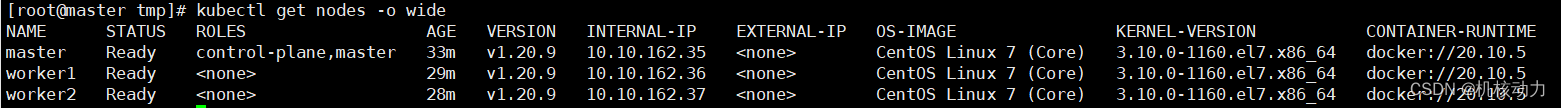

然后我们运行系统命令,看整个集群是否已经正常

kubectl get nodes -o wide

- 1

从上面的状态看,各个节点还没有网络启动,所以都是notReady状态。

3.安装flannel网络插件

下面命令用到的flannel插件可以 网盘下载到。https://pan.baidu.com/s/1yL_7EM-kEoqss12E1UDFXA 提取码: uikr

三台机器分别执行

master主机执行

拷贝cni plug执行程序

cp flannel /opt/cni/bin/

chmod 755 /opt/cni/bin/flannel

docker load < flanneld-v0.11.0-amd64.docker

kubectl delete -f kube-flannel.yml

kubectl apply -f kube-flannel.yml

worker主机执行

cp flannel /opt/cni/bin/

chmod 755 /opt/cni/bin/flannel

docker load < flanneld-v0.11.0-amd64.docker

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

这时候所有节点运行基本正常了,这样K8s安装成功

安装Prometheus

安装这个我们会启用Grafana的UI来查看Prometheus的数据展示。prometheus我们用的2.0.0,Grafana用的是4.2.0,node-exporter是收集所有节点信息的Pod。

为了安装方便,可以先把镜像拿下来

docker pull prom/node-exporter

docker pull prom/prometheus:v2.0.0

docker pull grafana/grafana:4.2.0

- 1

- 2

- 3

在拖完镜像后,现在我们就是要执行yaml来建立镜像了。

kubectl create -f node-exporter.yaml

kubectl create -f rbac-setup.yaml

kubectl create -f configmap.yaml

kubectl create -f prometheus.deploy.yaml

kubectl create -f prometheus.svc.yaml

kubectl create -f grafana-deploy.yaml

kubectl create -f grafana-svc.yaml --validate=false

kubectl create -f grafana-ing.yaml

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

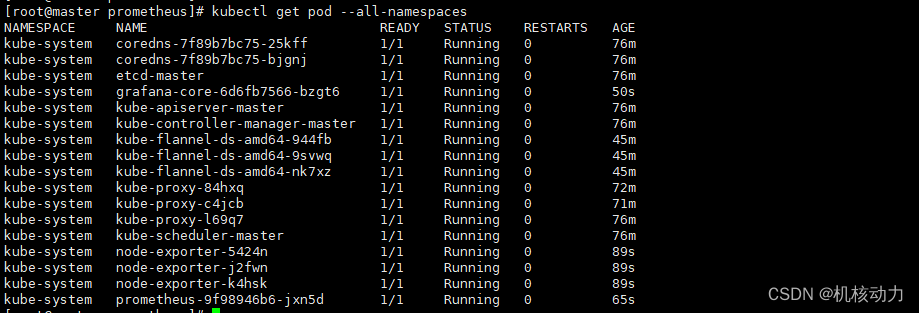

成功后执行kubectl get pod --all-namespaces,显示如下

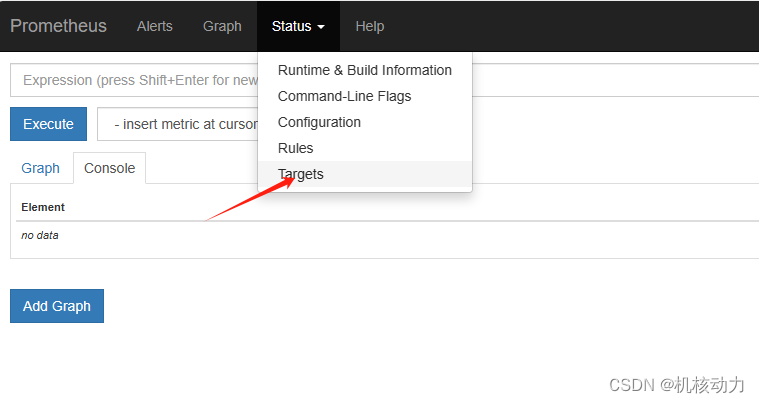

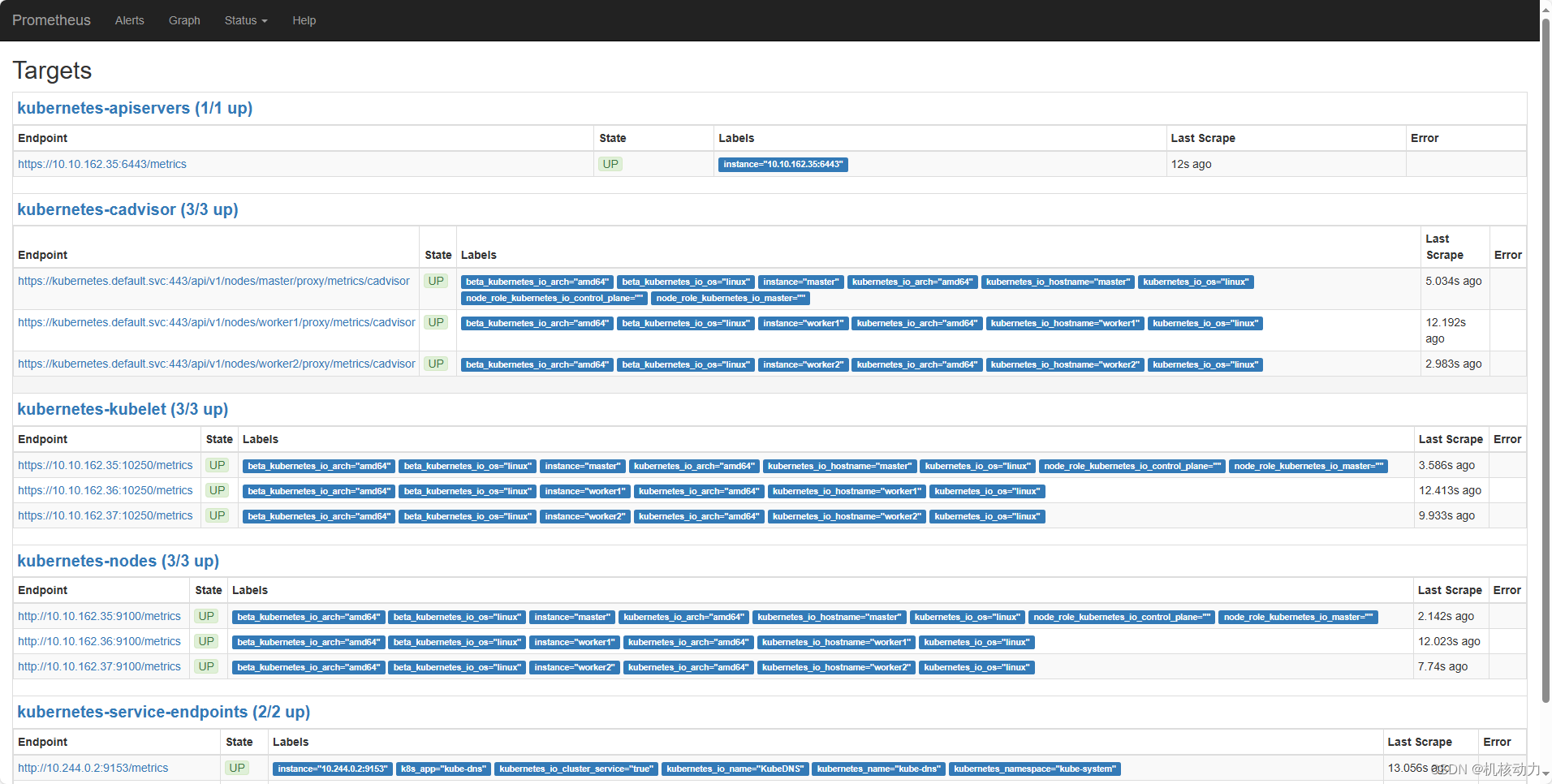

先访问http://10.10.162.35:30003/ 查看Prometheus,看看target是否都是成功的。

如果这样就是,全部成功了

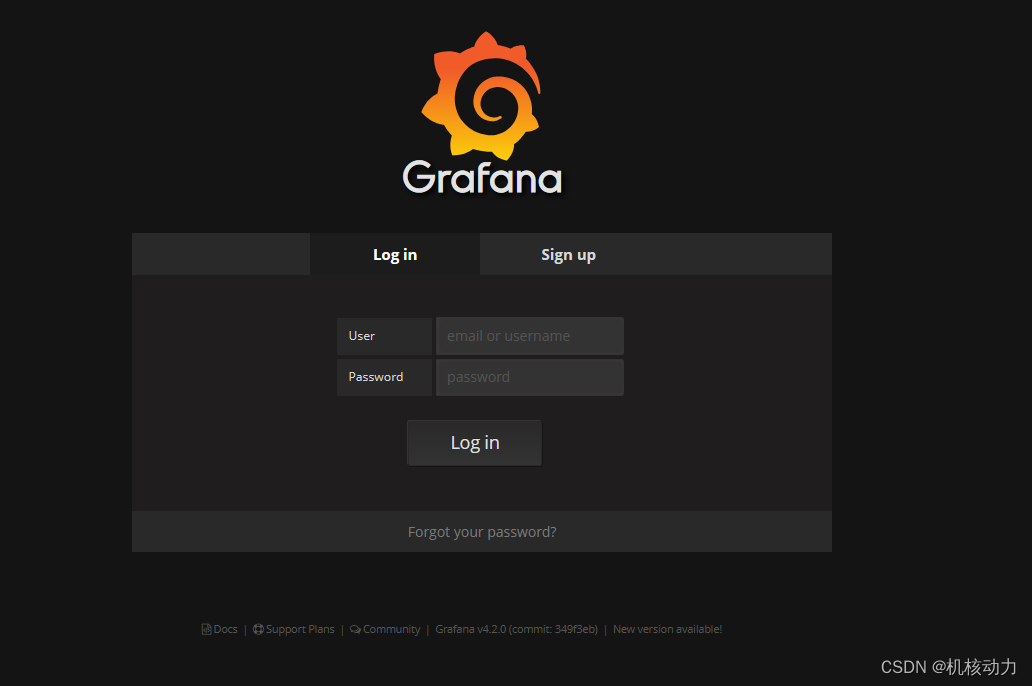

接下来我们配置,Grafana来查看Prometheus的数据内容。因为这里Grafana的nodeport是随机的,所以你可以看看哪些端口是开放的,可以访问一下,我这里是Grafana地址是http://10.10.162.35:32154/login,输入admin,admin默认用户名和密码

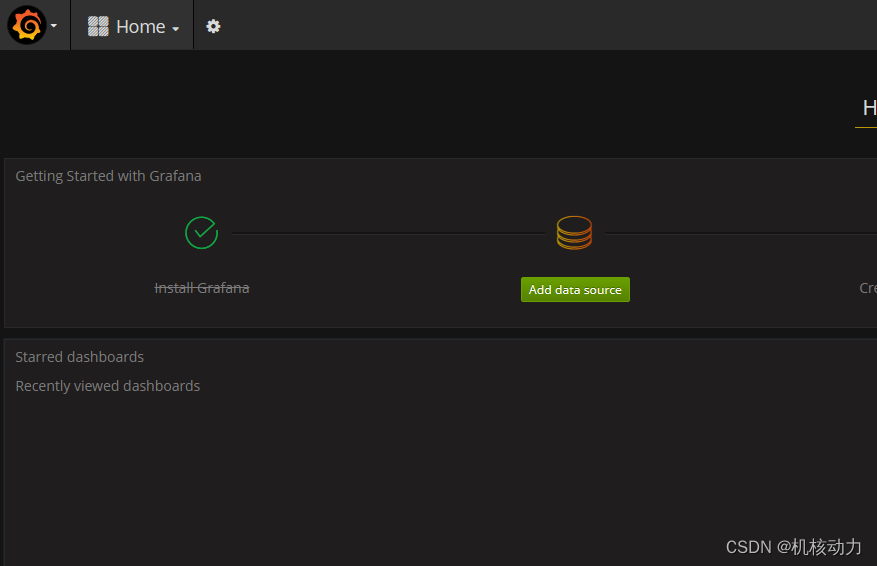

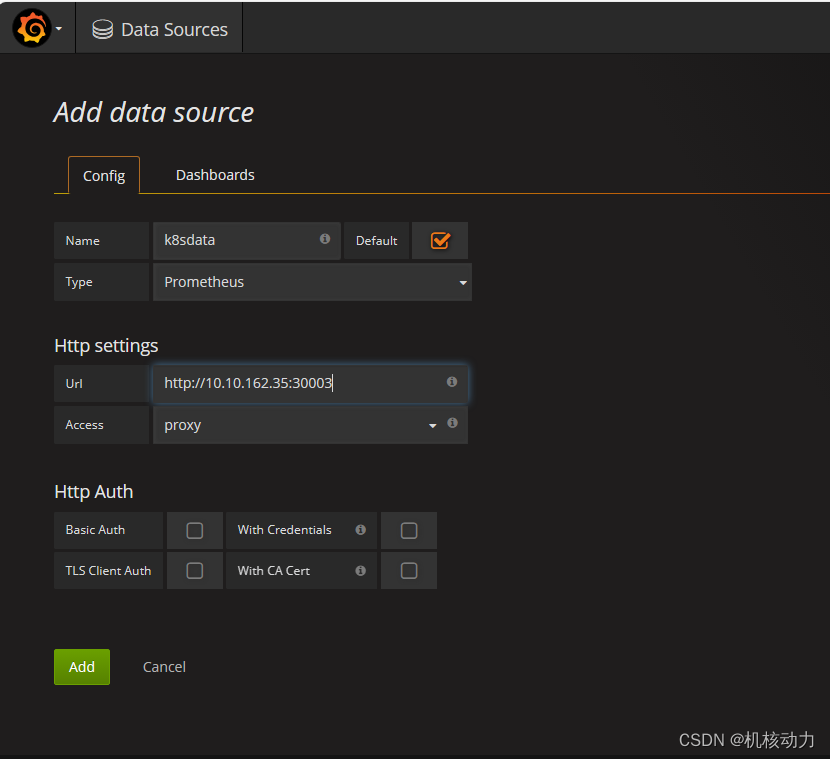

进入Grafana先配置数据源

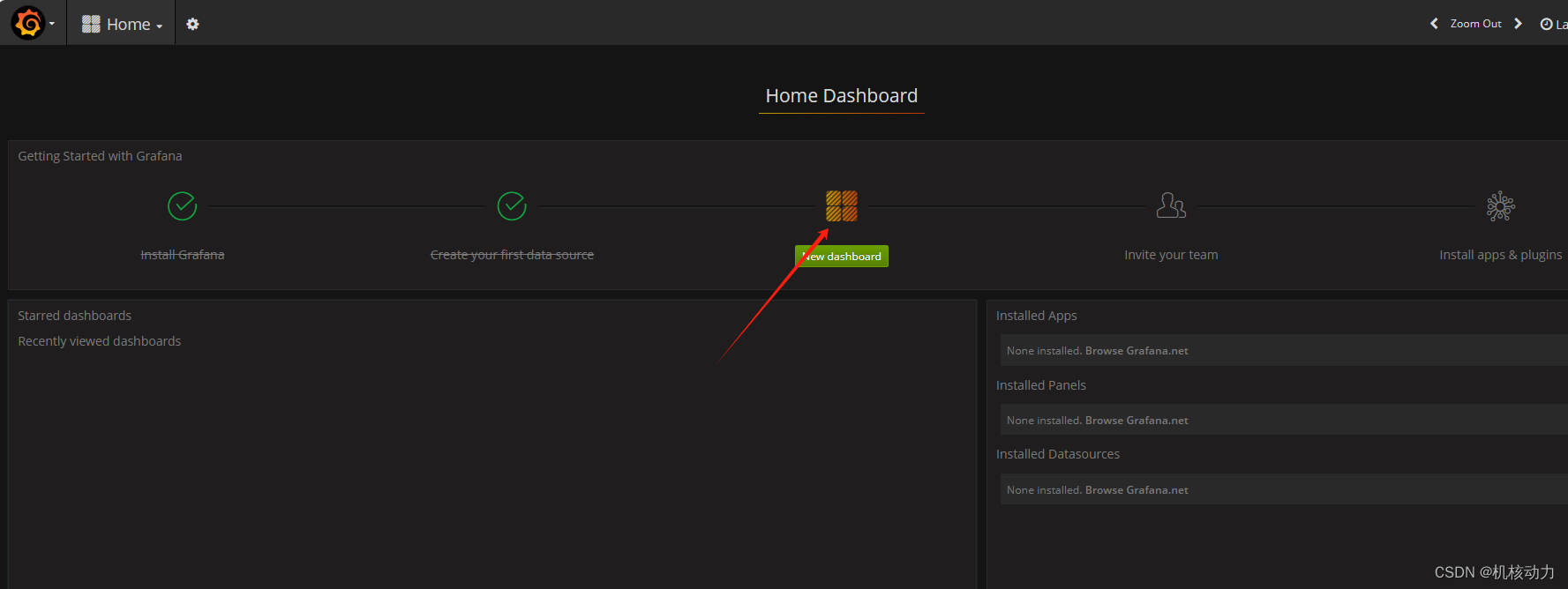

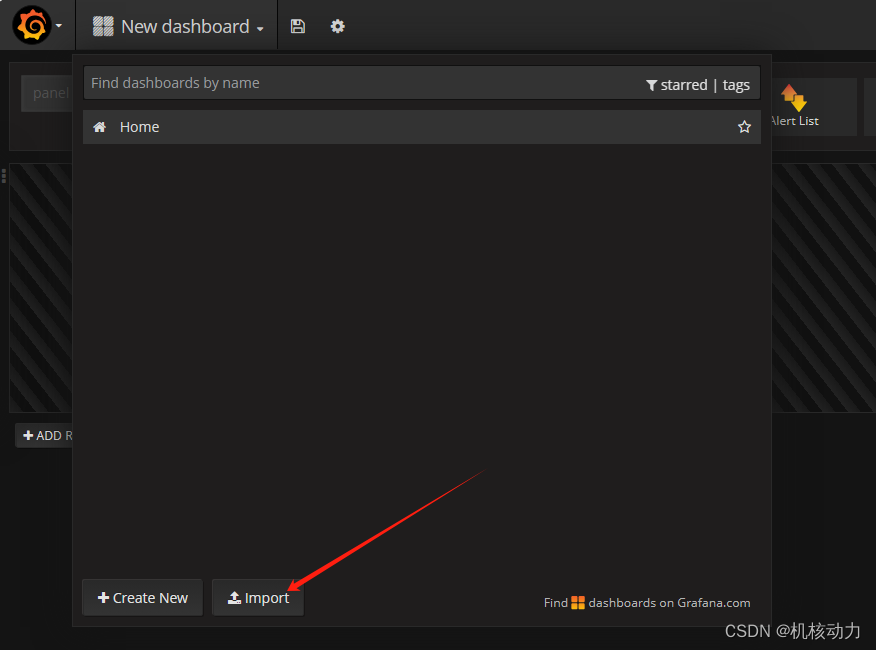

然后配置dashboard

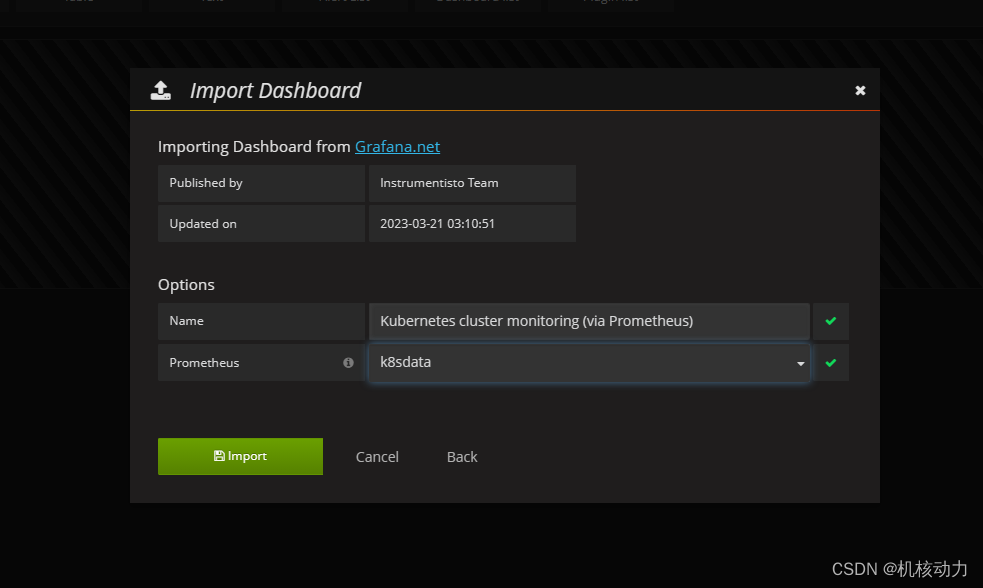

我这里导入的模板是

https://grafana.com/grafana/dashboards/315-kubernetes-cluster-monitoring-via-prometheus/

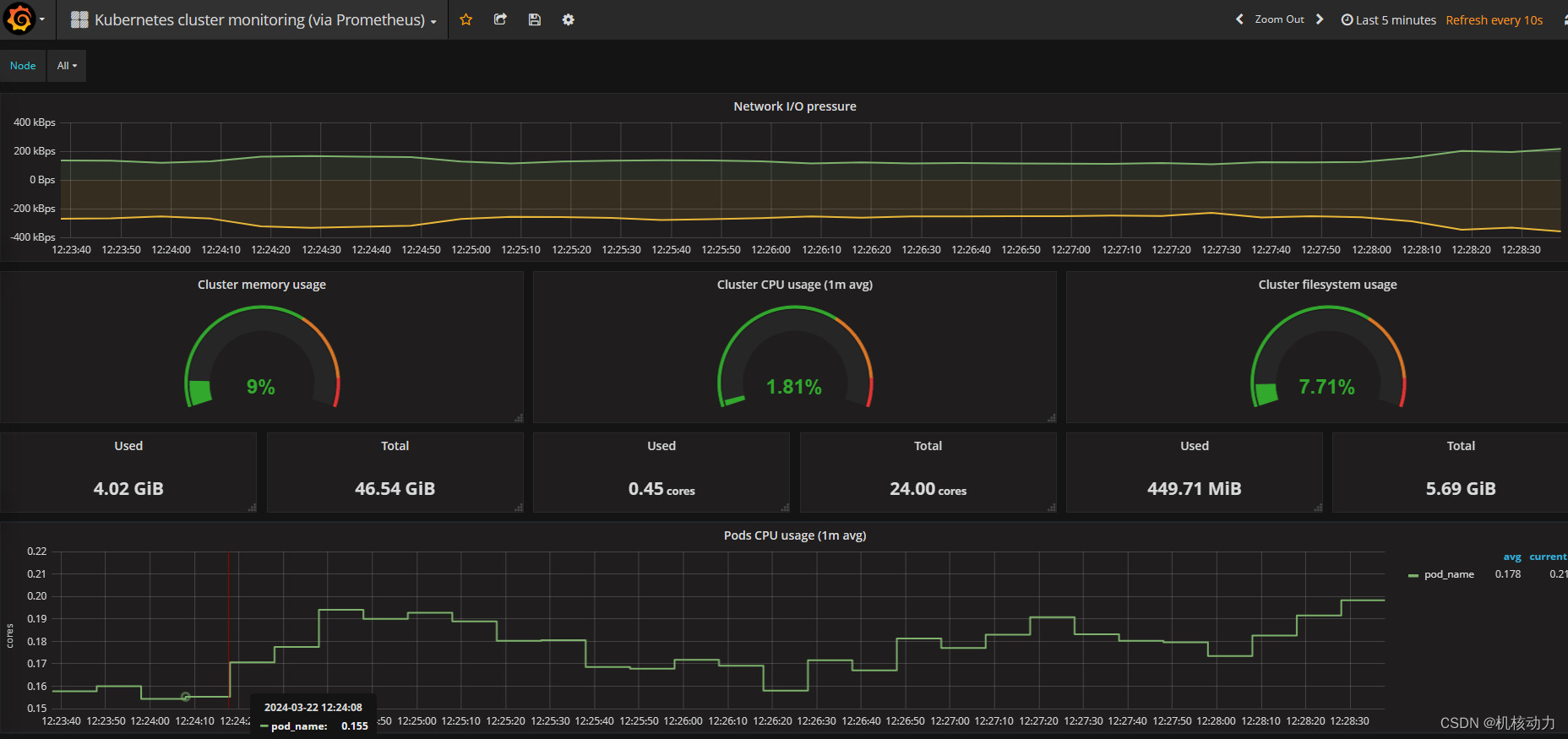

接下来就可以观看指标参数了。接下来我们会自己来定义其他的监控项。

node-exporter.yaml 内容如下

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: node-exporter

namespace: kube-system

labels:

k8s-app: node-exporter

spec:

selector:

matchLabels:

k8s-app: node-exporter

template:

metadata:

labels:

k8s-app: node-exporter

spec:

hostPID: true

hostIPC: true

hostNetwork: true

containers:

- image: prom/node-exporter

name: node-exporter

ports:

- containerPort: 9100

protocol: TCP

name: http

securityContext:

privileged: true

args:

- --path.procfs

- /host/proc

- --path.sysfs

- /host/sys

- --collector.filesystem.ignored-mount-points

- '"^/(sys|proc|dev|host|etc)($|/)"'

volumeMounts:

- name: dev

mountPath: /host/dev

- name: proc

mountPath: /host/proc

- name: sys

mountPath: /host/sys

- name: rootfs

mountPath: /rootfs

tolerations:

- key: "node-role.kubernetes.io/master"

operator: "Exists"

effect: "NoSchedule"

volumes:

- name: proc

hostPath:

path: /proc

- name: dev

hostPath:

path: /dev

- name: sys

hostPath:

path: /sys

- name: rootfs

hostPath:

path: /

---

apiVersion: v1

kind: Service

metadata:

labels:

k8s-app: node-exporter

name: node-exporter

namespace: kube-system

spec:

ports:

- name: http

port: 9100

nodePort: 31672

protocol: TCP

type: NodePort

selector:

k8s-app: node-exporter

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

rbac-setup.yaml内容如下

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: prometheus

rules:

- apiGroups: [""]

resources:

- nodes

- nodes/proxy

- nodes/metrics

- services

- endpoints

- pods

verbs: ["get", "list", "watch"]

- apiGroups:

- extensions

resources:

- ingresses

verbs: ["get", "list", "watch"]

- nonResourceURLs: ["/metrics"]

verbs: ["get"]

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: prometheus

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: prometheus

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: prometheus

subjects:

- kind: ServiceAccount

name: prometheus

namespace: kube-system

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: prometheus-config

namespace: kube-system

data:

prometheus.yml: |

global:

scrape_interval: 15s

evaluation_interval: 15s

scrape_configs:

- job_name: 'kubernetes-apiservers'

kubernetes_sd_configs:

- role: endpoints

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

relabel_configs:

- source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]

action: keep

regex: default;kubernetes;https

- job_name: 'kubernetes-nodes'

kubernetes_sd_configs:

- role: node

scheme: http

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- source_labels: [__address__]

regex: '(.*):10250'

replacement: '${1}:9100'

target_label: __address__

action: replace

- source_labels: [__meta_kubernetes_node_name]

regex: (.+)

target_label: __metrics_path__

replacement: /metrics

- job_name: 'kubernetes-cadvisor'

kubernetes_sd_configs:

- role: node

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- target_label: __address__

replacement: kubernetes.default.svc:443

- source_labels: [__meta_kubernetes_node_name]

regex: (.+)

target_label: __metrics_path__

replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

- job_name: 'kubernetes-service-endpoints'

kubernetes_sd_configs:

- role: endpoints

relabel_configs:

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]

action: keep

regex: true

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]

action: replace

target_label: __scheme__

regex: (https?)

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]

action: replace

target_label: __metrics_path__

regex: (.+)

- source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]

action: replace

target_label: __address__

regex: ([^:]+)(?::\d+)?;(\d+)

replacement: $1:$2

- action: labelmap

regex: __meta_kubernetes_service_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

action: replace

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_service_name]

action: replace

target_label: kubernetes_name

- job_name: 'kubernetes-services'

kubernetes_sd_configs:

- role: service

metrics_path: /probe

params:

module: [http_2xx]

relabel_configs:

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]

action: keep

regex: true

- source_labels: [__address__]

target_label: __param_target

- target_label: __address__

replacement: blackbox-exporter.example.com:9115

- source_labels: [__param_target]

target_label: instance

- action: labelmap

regex: __meta_kubernetes_service_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_service_name]

target_label: kubernetes_name

- job_name: 'kubernetes-ingresses'

kubernetes_sd_configs:

- role: ingress

relabel_configs:

- source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]

action: keep

regex: true

- source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]

regex: (.+);(.+);(.+)

replacement: ${1}://${2}${3}

target_label: __param_target

- target_label: __address__

replacement: blackbox-exporter.example.com:9115

- source_labels: [__param_target]

target_label: instance

- action: labelmap

regex: __meta_kubernetes_ingress_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_ingress_name]

target_label: kubernetes_name

- job_name: 'kubernetes-pods'

kubernetes_sd_configs:

- role: pod

relabel_configs:

- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]

action: keep

regex: true

- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]

action: replace

target_label: __metrics_path__

regex: (.+)

- source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]

action: replace

regex: ([^:]+)(?::\d+)?;(\d+)

replacement: $1:$2

target_label: __address__

- action: labelmap

regex: __meta_kubernetes_pod_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

action: replace

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_pod_name]

action: replace

target_label: kubernetes_pod_name

- job_name: 'kubernetes-kubelet'

kubernetes_sd_configs:

- role: node

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

insecure_skip_verify: true

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

- 144

- 145

- 146

- 147

- 148

- 149

- 150

- 151

- 152

- 153

- 154

- 155

- 156

- 157

- 158

- 159

- 160

- 161

- 162

- 163

- 164

- 165

- 166

- 167

- 168

- 169

- 170

- 171

- 172

prometheus.deploy.yaml

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

name: prometheus-deployment

name: prometheus

namespace: kube-system

spec:

replicas: 1

selector:

matchLabels:

app: prometheus

template:

metadata:

labels:

app: prometheus

spec:

containers:

- image: prom/prometheus:v2.0.0

name: prometheus

command:

- "/bin/prometheus"

args:

- "--config.file=/etc/prometheus/prometheus.yml"

- "--storage.tsdb.path=/prometheus"

- "--storage.tsdb.retention=7d"

- "--web.enable-admin-api"

- "--web.enable-lifecycle"

ports:

- containerPort: 9090

protocol: TCP

volumeMounts:

- mountPath: "/prometheus"

name: data

- mountPath: "/etc/prometheus"

name: config-volume

resources:

requests:

cpu: 100m

memory: 100Mi

limits:

cpu: 500m

memory: 2500Mi

serviceAccountName: prometheus

volumes:

- name: data

emptyDir: {}

- name: config-volume

configMap:

name: prometheus-config

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

prometheus.svc.yaml

---

kind: Service

apiVersion: v1

metadata:

labels:

app: prometheus

name: prometheus

namespace: kube-system

spec:

type: NodePort

ports:

- port: 9090

targetPort: 9090

nodePort: 30003

selector:

app: prometheus

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

grafana-deploy.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: grafana-core

namespace: kube-system

labels:

app: grafana

component: core

spec:

replicas: 1

selector:

matchLabels:

app: grafana

component: core

template:

metadata:

labels:

app: grafana

component: core

spec:

containers:

- image: grafana/grafana:4.2.0

name: grafana-core

imagePullPolicy: IfNotPresent

# env:

resources:

# keep request = limit to keep this container in guaranteed class

limits:

cpu: 100m

memory: 100Mi

requests:

cpu: 100m

memory: 100Mi

env:

# The following env variables set up basic auth twith the default admin user and admin password.

- name: GF_AUTH_BASIC_ENABLED

value: "true"

- name: GF_AUTH_ANONYMOUS_ENABLED

value: "false"

# - name: GF_AUTH_ANONYMOUS_ORG_ROLE

# value: Admin

# does not really work, because of template variables in exported dashboards:

# - name: GF_DASHBOARDS_JSON_ENABLED

# value: "true"

readinessProbe:

httpGet:

path: /login

port: 3000

# initialDelaySeconds: 30

# timeoutSeconds: 1

volumeMounts:

- name: grafana-persistent-storage

mountPath: /var

volumes:

- name: grafana-persistent-storage

emptyDir: {}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

grafana-svc.yaml

apiVersion: v1

kind: Service

metadata:

name: grafana

namespace: kube-system

labels:

app: grafana

component: core

spec:

type: NodePort

ports:

- port: 3000

selector:

app: grafana

component: core

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

grafana-ing.yaml

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: grafana

namespace: kube-system

spec:

rules:

- host: k8s.grafana

http:

paths:

- path: /

backend:

serviceName: grafana

servicePort: 3000

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15