CV, DL, YOLOv3: Translation and Commentary on "YOLOv3: An Incremental Improvement"

Contents

"YOLOv3: An Incremental Improvement": Translation and Commentary

Related Papers

"YOLOv3: An Incremental Improvement": Translation and Commentary

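| Field | Value |
| --- | --- |
| Link | |
| Date | April 8, 2018 |
| Authors | Joseph Redmon, Ali Farhadi |
| Summary | This paper describes YOLOv3, an updated version of the YOLO object detector. Background: object detection has to balance detection speed against accuracy. Pain point: the YOLOv2 architecture limited further accuracy gains. Approach: >> a larger feature extractor, Darknet-53, with 53 convolutional layers and shortcut connections. >> Box predictions at 3 scales, using a concept similar to feature pyramid networks. >> Independent logistic classifiers trained with binary cross-entropy loss instead of a softmax. Key results: >> At 320 × 320 YOLOv3 runs in 22 ms at 28.2 mAP, as accurate as SSD but three times faster. >> It reaches 57.9 mAP@50 in 51 ms on a Titan X, compared with 57.5 mAP@50 in 198 ms for RetinaNet. Strengths and caveats: >> It is strong on the .5 IOU metric but less so on the COCO AP averaged between .5 and .95 IOU. >> The code is available online. Overall, a series of small changes to YOLOv2 improves accuracy while keeping the detector fast. |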

Abstract

| We present some updates to YOLO! We made a bunch of little design changes to make it better. We also trained this new network that's pretty swell. It's a little bigger than last time but more accurate. It's still fast though, don't worry. At 320 × 320 YOLOv3 runs in 22 ms at 28.2 mAP, as accurate as SSD but three times faster. When we look at the old .5 IOU mAP detection metric YOLOv3 is quite good. It achieves 57.9 mAP@50 in 51 ms on a Titan X, compared to 57.5 mAP@50 in 198 ms by RetinaNet, similar performance but 3.8× faster. As always, all the code is online at this https URL |

What This All Means

| YOLOv3 is a good detector. It’s fast, it’s accurate. It’s not as great on the COCO average AP between .5 and .95 IOU metric. But it’s very good on the old detection metric of .5 IOU. Why did we switch metrics anyway? The original COCO paper just has this cryptic sentence: “A full discussion of evaluation metrics will be added once the evaluation server is complete”. Russakovsky et al report that humans have a hard time distinguishing an IOU of .3 from .5! “Training humans to visually inspect a bounding box with IOU of 0.3 and distinguish it from one with IOU 0.5 is surprisingly difficult.” [18] If humans have a hard time telling the difference, how much does it matter? But maybe a better question is: “What are we going to do with these detectors now that we have them?” A lot of the people doing this research are at Google and Facebook. I guess at least we know the technology is in good hands and definitely won’t be used to harvest your personal information and sell it to.... wait, you’re saying that’s exactly what it will be used for?? Oh. Well the other people heavily funding vision research are the military and they’ve never done anything horrible like killing lots of people with new technology oh wait..... I have a lot of hope that most of the people using computer vision are just doing happy, good stuff with it, like counting the number of zebras in a national park [13], or tracking their cat as it wanders around their house [19]. But computer vision is already being put to questionable use and as researchers we have a responsibility to at least consider the harm our work might be doing and think of ways to mitigate it. We owe the world that much. In closing, do not @ me. (Because I finally quit Twitter). |

YOLOv3 Paper: Translation and Commentary

Abstract

We present some updates to YOLO! We made a bunch of little design changes to make it better. We also trained this new network that’s pretty swell. It’s a little bigger than last time but more accurate. It’s still fast though, don’t worry. At 320 × 320 YOLOv3 runs in 22 ms at 28.2 mAP, as accurate as SSD but three times faster. When we look at the old .5 IOU mAP detection metric YOLOv3 is quite good. It achieves 57.9 AP50 in 51 ms on a Titan X, compared to 57.5 AP50 in 198 ms by RetinaNet, similar performance but 3.8× faster. As always, all the code is online at YOLO: Real-Time Object Detection


1. Introduction

Sometimes you just kinda phone it in for a year, you know? I didn’t do a whole lot of research this year. Spent a lot of time on Twitter. Played around with GANs a little. I had a little momentum left over from last year [12] [1]; I managed to make some improvements to YOLO. But, honestly, nothing like super interesting, just a bunch of small changes that make it better. I also helped out with other people’s research a little. Actually, that’s what brings us here today. We have a camera-ready deadline [4] and we need to cite some of the random updates I made to YOLO but we don’t have a source. So get ready for a TECH REPORT! The great thing about tech reports is that they don’t need intros, y’all know why we’re here. So the end of this introduction will signpost for the rest of the paper. First we’ll tell you what the deal is with YOLOv3. Then we’ll tell you how we do. We’ll also tell you about some things we tried that didn’t work. Finally we’ll contemplate what this all means.


2. The Deal

So here’s the deal with YOLOv3: We mostly took good ideas from other people. We also trained a new classifier network that’s better than the other ones. We’ll just take you through the whole system from scratch so you can understand it all.


Figure 1. We adapt this figure from the Focal Loss paper [9]. YOLOv3 runs significantly faster than other detection methods with comparable performance. Times from either an M40 or Titan X, they are basically the same GPU.


2.1. Bounding Box Prediction

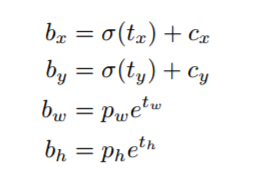

Following YOLO9000 our system predicts bounding boxes using dimension clusters as anchor boxes [15]. The network predicts 4 coordinates for each bounding box, $t_x$, $t_y$, $t_w$, $t_h$. If the cell is offset from the top left corner of the image by $(c_x, c_y)$ and the bounding box prior has width and height $p_w$, $p_h$, then the predictions correspond to:

$$
\begin{aligned}
b_x &= \sigma(t_x) + c_x \\
b_y &= \sigma(t_y) + c_y \\
b_w &= p_w e^{t_w} \\
b_h &= p_h e^{t_h}
\end{aligned}
$$

During training we use sum of squared error loss. If the ground truth for some coordinate prediction is $\hat{t}_*$, our gradient is the ground truth value (computed from the ground truth box) minus our prediction: $\hat{t}_* - t_*$. This ground truth value can be easily computed by inverting the equations above.
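As a rough illustration of the box parameterization and its inverse, here is a minimal Python sketch. The function names `decode_box` and `encode_box`, the choice of grid-cell units for every quantity, and the small clamp `eps` are assumptions made for this example; they are not taken from the paper or from the Darknet code.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def decode_box(t, cell, prior):
    """Map raw outputs (tx, ty, tw, th) to a box (bx, by, bw, bh).

    Assumption: cell offsets, prior sizes and the resulting box are all
    expressed in grid-cell units.
    """
    tx, ty, tw, th = t
    cx, cy = cell
    pw, ph = prior
    bx = sigmoid(tx) + cx      # centre stays inside the predicting cell
    by = sigmoid(ty) + cy
    bw = pw * math.exp(tw)     # width and height scale the prior
    bh = ph * math.exp(th)
    return bx, by, bw, bh

def encode_box(box, cell, prior, eps=1e-9):
    """Invert decode_box to obtain regression targets from a ground truth box."""
    bx, by, bw, bh = box
    cx, cy = cell
    pw, ph = prior
    # Clamp the in-cell offsets away from 0 and 1 so the logit stays finite.
    ox = min(max(bx - cx, eps), 1.0 - eps)
    oy = min(max(by - cy, eps), 1.0 - eps)
    tx = math.log(ox / (1.0 - ox))
    ty = math.log(oy / (1.0 - oy))
    tw = math.log(bw / pw)
    th = math.log(bh / ph)
    return tx, ty, tw, th

# Round trip: decoding and then encoding recovers the raw values.
raw = (0.2, -0.5, 0.1, 0.3)
box = decode_box(raw, cell=(6, 4), prior=(3.625, 2.8125))
recovered = encode_box(box, cell=(6, 4), prior=(3.625, 2.8125))
```

Because the sigmoid bounds the centre offset to (0, 1), a box centre can never leave the cell that predicts it, which is the reason for the clamp in `encode_box`.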

Figure 2. Bounding boxes with dimension priors and location prediction. We predict the width and height of the box as offsets from cluster centroids. We predict the center coordinates of the box relative to the location of filter application using a sigmoid function. This figure blatantly self-plagiarized from [15].


YOLOv3 predicts an objectness score for each bounding box using logistic regression. This should be 1 if the bounding box prior overlaps a ground truth object by more than any other bounding box prior. If the bounding box prior is not the best but does overlap a ground truth object by more than some threshold we ignore the prediction, following [17]. We use the threshold of .5. Unlike [17] our system only assigns one bounding box prior for each ground truth object. If a bounding box prior is not assigned to a ground truth object it incurs no loss for coordinate or class predictions, only objectness.

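The assignment rule can be sketched for a single ground truth object as follows. This is an illustrative simplification: the helper names, the corner-format boxes, the use of `None` to mark an ignored prediction, and the target of 0 for all remaining priors are assumptions made here, and a real implementation has to handle many objects per image.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)

def objectness_targets(ious, ignore_thresh=0.5):
    """ious[i] is the overlap of prior i with one ground truth object.

    Returns one entry per prior: 1.0 for the best prior, None for priors
    that are ignored, and 0.0 for everything else.
    """
    best = max(range(len(ious)), key=lambda i: ious[i])
    targets = []
    for i, overlap in enumerate(ious):
        if i == best:
            targets.append(1.0)    # the single prior assigned to the object
        elif overlap > ignore_thresh:
            targets.append(None)   # good but not best: no loss at all
        else:
            targets.append(0.0)    # background: objectness loss only
    return targets

targets = objectness_targets([0.3, 0.7, 0.55, 0.1])
```

Only the prior with target 1.0 would also contribute coordinate and class losses; the others contribute objectness loss or nothing.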

2.2. Class Prediction

Each box predicts the classes the bounding box may contain using multilabel classification. We do not use a softmax as we have found it is unnecessary for good performance, instead we simply use independent logistic classifiers. During training we use binary cross-entropy loss for the class predictions. This formulation helps when we move to more complex domains like the Open Images Dataset [7]. In this dataset there are many overlapping labels (i.e. Woman and Person). Using a softmax imposes the assumption that each box has exactly one class which is often not the case. A multilabel approach better models the data.

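To see why independent logistic classifiers suit overlapping labels, the sketch below compares them with a softmax on made-up logits for three hypothetical classes (person, woman, car). The logit values and function names are invented for illustration only.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def bce_with_logits(logits, targets):
    """Sum of binary cross-entropy terms, one independent term per class."""
    loss = 0.0
    for z, y in zip(logits, targets):
        # Numerically stable form of -[y*log(p) + (1-y)*log(1-p)], p = sigmoid(z)
        loss += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
    return loss

logits = [3.0, 2.5, -4.0]                   # person, woman, car (made up)
independent = [sigmoid(z) for z in logits]  # each class scored on its own
exclusive = softmax(logits)                 # scores forced to sum to 1

multi_label_loss = bce_with_logits(logits, [1, 1, 0])   # person AND woman
single_label_loss = bce_with_logits(logits, [1, 0, 0])  # person only
```

With the sigmoid both "person" and "woman" can score close to 1 at the same time, while the softmax makes them compete for the same probability mass.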

2.3. Predictions Across Scales

YOLOv3 predicts boxes at 3 different scales. Our system extracts features from those scales using a similar concept to feature pyramid networks [8]. From our base feature extractor we add several convolutional layers. The last of these predicts a 3-d tensor encoding bounding box, objectness, and class predictions. In our experiments with COCO [10] we predict 3 boxes at each scale so the tensor is N × N × [3 ∗ (4 + 1 + 80)] for the 4 bounding box offsets, 1 objectness prediction, and 80 class predictions.

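The output depth follows directly from the formula above. The short sketch below works through the arithmetic; the grid sizes 13, 26 and 52 are an assumption chosen for illustration (the text only says that each later scale is twice the size of the previous one), and `head_shape` is a hypothetical helper name.

```python
def head_shape(n, boxes_per_scale=3, num_classes=80):
    """Shape of the prediction tensor for an n x n grid."""
    depth = boxes_per_scale * (4 + 1 + num_classes)  # offsets + objectness + classes
    return (n, n, depth)

grids = [13, 26, 52]  # assumed grid sizes, each twice the previous one
shapes = [head_shape(n) for n in grids]
total_boxes = sum(n * n * 3 for n in grids)
```

For COCO the depth is 3 × 85 = 255 at every scale, and under the assumed grid sizes the three scales together predict 10,647 boxes per image.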

Next we take the feature map from 2 layers previous and upsample it by 2×. We also take a feature map from earlier in the network and merge it with our upsampled features using concatenation. This method allows us to get more meaningful semantic information from the upsampled features and finer-grained information from the earlier feature map. We then add a few more convolutional layers to process this combined feature map, and eventually predict a similar tensor, although now twice the size. We perform the same design one more time to predict boxes for the final scale. Thus our predictions for the 3rd scale benefit from all the prior computation as well as fine-grained features from early on in the network. We still use k-means clustering to determine our bounding box priors. We just sort of chose 9 clusters and 3 scales arbitrarily and then divide up the clusters evenly across scales. On the COCO dataset the 9 clusters were: (10 × 13), (16 × 30), (33 × 23), (30 × 61), (62 × 45), (59 × 119), (116 × 90), (156 × 198), (373 × 326).
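A small sketch of the two mechanical steps described here: dividing the 9 priors evenly across 3 scales, and merging an upsampled feature map with an earlier one by concatenation. Sorting the priors by area, using nearest-neighbour upsampling, and representing feature maps as nested `[channels][rows][cols]` lists are all assumptions made for this example; the text does not specify them.

```python
# Priors (width, height) listed in the text for COCO.
PRIORS = [(10, 13), (16, 30), (33, 23), (30, 61), (62, 45),
          (59, 119), (116, 90), (156, 198), (373, 326)]

def split_priors(priors, num_scales=3):
    """Divide priors evenly across scales, smallest areas first (assumed order)."""
    ordered = sorted(priors, key=lambda wh: wh[0] * wh[1])
    per_scale = len(ordered) // num_scales
    return [ordered[i * per_scale:(i + 1) * per_scale] for i in range(num_scales)]

def upsample2x(fmap):
    """Nearest-neighbour 2x upsampling of a [channels][rows][cols] nested list."""
    out = []
    for channel in fmap:
        rows = []
        for row in channel:
            wide = [v for v in row for _ in range(2)]  # repeat each column
            rows.append(wide)
            rows.append(list(wide))                    # repeat each row
        out.append(rows)
    return out

def concat_channels(a, b):
    """Concatenate two feature maps of equal spatial size along the channel axis."""
    assert len(a[0]) == len(b[0]) and len(a[0][0]) == len(b[0][0])
    return a + b

groups = split_priors(PRIORS)
coarse = [[[1, 2], [3, 4]]]                # 1 channel, 2 x 2 (later layer)
fine = [[[0] * 4 for _ in range(4)]]       # 1 channel, 4 x 4 (earlier layer)
merged = concat_channels(upsample2x(coarse), fine)
```

In a real network the merged map would then pass through further convolutional layers before the next prediction; that part is omitted here.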

2.4. Feature Extractor

We use a new network for performing feature extraction. Our new network is a hybrid approach between the network used in YOLOv2, Darknet-19, and that newfangled residual network stuff. Our network uses successive 3 × 3 and 1 × 1 convolutional layers but now has some shortcut connections as well and is significantly larger. It has 53 convolutional layers so we call it.... wait for it..... Darknet-53!


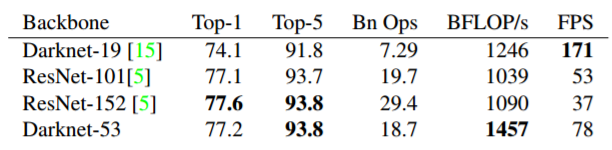

This new network is much more powerful than Darknet-19 but still more efficient than ResNet-101 or ResNet-152. Here are some ImageNet results:

Table 2. Comparison of backbones. Accuracy, billions of operations, billion floating point operations per second, and FPS for various networks.


Each network is trained with identical settings and tested at 256×256, single crop accuracy. Run times are measured on a Titan X at 256 × 256. Thus Darknet-53 performs on par with state-of-the-art classifiers but with fewer floating point operations and more speed. Darknet-53 is better than ResNet-101 and 1.5× faster. Darknet-53 has similar performance to ResNet-152 and is 2× faster. Darknet-53 also achieves the highest measured floating point operations per second. This means the network structure better utilizes the GPU, making it more efficient to evaluate and thus faster. That’s mostly because ResNets have just way too many layers and aren’t very efficient.


2.5. Training

We still train on full images with no hard negative mining or any of that stuff. We use multi-scale training, lots of data augmentation, batch normalization, all the standard stuff. We use the Darknet neural network framework for training and testing [14].
