Setting Up a Serving Environment for the GLM2 Large Language Model

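I. Model Overview

ChatGLM2-6B is the second-generation version of the open-source Chinese-English bilingual chat model ChatGLM-6B. It retains the strengths of the first generation, such as fluent dialogue and a low deployment threshold, and introduces the following new features:

Stronger performance: Drawing on the experience of developing the first-generation ChatGLM model, the ChatGLM team comprehensively upgraded the base model of ChatGLM2-6B. ChatGLM2-6B uses GLM's hybrid objective function and was pre-trained on 1.4T Chinese and English tokens, followed by human preference alignment training. Evaluation results show substantial improvements over the first-generation model on MMLU (+23%), CEval (+33%), GSM8K (+571%), and BBH (+60%), making it highly competitive among open-source models of the same size.

Longer context: Based on FlashAttention, the context length of the base model was extended from 2K in ChatGLM-6B to 32K, with an 8K context length used during dialogue-stage training. For even longer contexts there is the ChatGLM2-6B-32K model. LongBench evaluation results indicate that ChatGLM2-6B-32K has a fairly clear competitive advantage among open-source models of comparable scale.

More efficient inference: Based on Multi-Query Attention, ChatGLM2-6B offers faster inference and lower GPU memory usage. With the official model implementation, inference is 42% faster than the first generation, and under INT4 quantization the dialogue length supported by 6 GB of GPU memory increases from 1K to 8K.

More open license: The ChatGLM2-6B weights are fully open for academic research, and free commercial use is also permitted after registering via a questionnaire.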
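The 6 GB figure quoted for INT4 can be put in context with rough arithmetic on weight storage alone. The sketch below assumes that "6B" means about 6 billion parameters and ignores activations, the KV cache, and runtime overhead, so it is an order-of-magnitude estimate rather than a measurement. The function name `weight_memory_gib` is made up for this illustration.

```python
def weight_memory_gib(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed just to store the weights, in GiB."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 2**30


# Assumption: "6B" is read as 6 billion parameters.
ASSUMED_PARAMS = 6e9

if __name__ == "__main__":
    for label, bits in (("FP16", 16), ("INT8", 8), ("INT4", 4)):
        size = weight_memory_gib(ASSUMED_PARAMS, bits)
        print(f"{label}: about {size:.1f} GiB of weights")
```

Under these assumptions the INT4 weights take roughly 2.8 GiB, which leaves part of a 6 GB budget for the context that grows with dialogue length.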

II. Base Environment

Chip: Ascend 910A

Operating system: openEuler

三、环境搭建

1、下载与芯片型号版本相应的驱动

加速卡的话是910的包:

2)修改权限:

chmod +x Ascend-hdk-910-npu-driver_23.0.rc3_linux-aarch64.run

3)安装驱动:

./Ascend-hdk-910-npu-driver_23.0.rc3_linux-aarch64.run --full --install-for-all

4) 重启:

Reboot

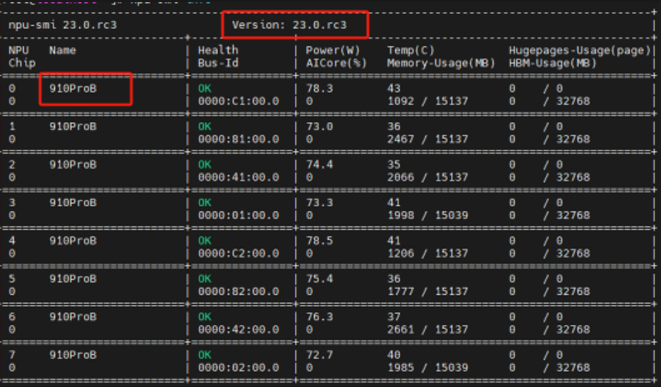

重启后可以查看驱动信息:npu-smi info
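The post-reboot check can also be scripted, for example as part of a provisioning job. The following is a minimal sketch that assumes only that `npu-smi` is on `PATH` once the driver is installed. The helper name `npu_smi_info` is hypothetical and not part of any Ascend tooling.

```python
import shutil
import subprocess
from typing import Optional


def npu_smi_info(command: str = "npu-smi") -> Optional[str]:
    """Return the output of `<command> info`, or None if it is unavailable."""
    executable = shutil.which(command)
    if executable is None:
        # The driver tools are not installed or not on PATH.
        return None
    result = subprocess.run(
        [executable, "info"], capture_output=True, text=True, check=False
    )
    if result.returncode != 0:
        return None
    return result.stdout


if __name__ == "__main__":
    output = npu_smi_info()
    if output is None:
        print("npu-smi not found or failed; check the driver installation")
    else:
        print(output)
```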

2. Install dependency libraries

# Install gcc, make, and other build dependencies.

yum install -y gcc gcc-c++ make cmake unzip pciutils net-tools gcc-gfortran

sudo yum install openssl-devel

sudo yum install libffi-devel

sudo yum install zlib-devel

sudo yum install sqlite-devel

sudo yum install blas-devel

sudo yum install blas
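Before moving on to the Python build, it can help to confirm that the compilers and tools installed above are actually reachable. This is a small sketch using only the standard library. The list of executables is an assumption about what the packages provide, so adjust it as needed.

```python
import shutil
from typing import Iterable, List

# Executables expected after the yum install step (assumed names of the
# binaries shipped by the gcc, gcc-c++, make, cmake, unzip and
# gcc-gfortran packages).
EXPECTED_TOOLS = ["gcc", "g++", "make", "cmake", "unzip", "gfortran"]


def missing_tools(tools: Iterable[str]) -> List[str]:
    """Return the tools that cannot be found on PATH, in input order."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools(EXPECTED_TOOLS)
    if missing:
        print("Missing tools:", ", ".join(missing))
    else:
        print("All expected build tools were found on PATH")
```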


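3. Install Python

Build Python from source:

Download the source archive from the official Python site: Python Source Releases | Python.org

Both the 3.8.10 and 3.9.4 archives are listed here. The commands below build 3.9.4, which matches the cp39 MindSpore wheel installed in step 6:

https://www.python.org/ftp/python/3.8.10/Python-3.8.10.tgz

https://www.python.org/ftp/python/3.9.4/Python-3.9.4.tgz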

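Once the download finishes, build and install:

tar -zxvf Python-3.9.4.tgz

cd Python-3.9.4

./configure --prefix=/usr/local/python3.9.4 --enable-optimizations --enable-shared --with-ssl

make && make install

If the build fails because of an environment problem and you need to start over, be sure to run make clean first to clear the cached build output.

ln -s /usr/local/python3.9.4/bin/python3.9 /usr/bin/python

ln -s /usr/local/python3.9.4/bin/pip3 /usr/bin/pip3

ln -s /usr/local/python3.9.4/lib/libpython3.9.so.1.0 /usr/lib64/

mv /usr/bin/python /usr/bin/python.bak

ln -s /usr/bin/python3 /usr/bin/python

export LD_LIBRARY_PATH=/usr/local/python3.9.4/lib:$LD_LIBRARY_PATH

As written, the mv and the ln that follows it replace the /usr/bin/python link created earlier with one that points to /usr/bin/python3, so confirm with python --version that python resolves to the interpreter you intend.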
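A source build silently skips extension modules whose development headers were missing, so it is worth checking the result before installing packages on top of it. The sketch below, run with the newly built interpreter, reports the version, whether the shared library was enabled, and whether the modules that depend on the -devel packages from step 2 can be imported. The module-to-package mapping is the usual one but is stated here as an assumption.

```python
import importlib
import sys
import sysconfig
from typing import Dict

# Standard-library modules that are only built when the matching -devel
# package was present at compile time (assumed mapping).
OPTIONAL_MODULES = {
    "ssl": "openssl-devel",
    "ctypes": "libffi-devel",
    "zlib": "zlib-devel",
    "sqlite3": "sqlite-devel",
}


def build_report() -> Dict[str, object]:
    """Summarize the running interpreter's version and optional modules."""
    modules = {}
    for name in OPTIONAL_MODULES:
        try:
            importlib.import_module(name)
            modules[name] = True
        except ImportError:
            modules[name] = False
    return {
        "version": "{}.{}.{}".format(*sys.version_info[:3]),
        "prefix": sys.prefix,
        "shared_library": bool(sysconfig.get_config_var("Py_ENABLE_SHARED")),
        "modules": modules,
    }


if __name__ == "__main__":
    report = build_report()
    print("Python", report["version"], "installed under", report["prefix"])
    print("Built with --enable-shared:", report["shared_library"])
    for name, available in report["modules"].items():
        status = "ok" if available else "missing, needs " + OPTIONAL_MODULES[name]
        print("  {}: {}".format(name, status))
```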


4. Install Python packages

pip install attrs

pip install numpy

pip install decorator

pip install sympy

pip install cffi

pip install pyyaml

pip install pathlib2

pip install psutil

pip install protobuf

pip install scipy

pip install requests

pip install absl-py

pip install loguru

Service dependencies:

pip install fastapi

pip install "uvicorn[standard]"

pip install requests

Add a symlink for uvicorn (adjust the path to the --prefix used when building Python):

ln -s /usr/local/python3.9.4/bin/uvicorn /usr/bin/uvicorn

pip uninstall te topi hccl -y

pip install sympy

pip install /usr/local/Ascend/ascend-toolkit/latest/lib64/te-*-py3-none-any.whl

pip install /usr/local/Ascend/ascend-toolkit/latest/lib64/hccl-*-py3-none-any.whl

The te and hccl wheels ship with the CANN toolkit, so the last two commands only succeed once CANN (step 5) is installed. They are repeated in step 6.
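After a long run of pip commands it is easy to miss one that failed. The following sketch checks that each package from the list above is importable. The mapping from pip names to module names (for example pyyaml to yaml) reflects the usual import names and is an assumption of this example, and `missing_packages` is a hypothetical helper.

```python
import importlib.util
from typing import Dict, List

# pip distribution name -> importable module name (assumed mapping).
PIP_TO_MODULE = {
    "attrs": "attr",
    "numpy": "numpy",
    "decorator": "decorator",
    "sympy": "sympy",
    "cffi": "cffi",
    "pyyaml": "yaml",
    "pathlib2": "pathlib2",
    "psutil": "psutil",
    "protobuf": "google.protobuf",
    "scipy": "scipy",
    "requests": "requests",
    "absl-py": "absl",
    "loguru": "loguru",
    "fastapi": "fastapi",
    "uvicorn": "uvicorn",
}


def missing_packages(mapping: Dict[str, str]) -> List[str]:
    """Return the pip names whose module cannot be located."""
    missing = []
    for dist, module in mapping.items():
        try:
            found = importlib.util.find_spec(module) is not None
        except (ImportError, ValueError):
            # Raised when a parent package of a dotted name is absent.
            found = False
        if not found:
            missing.append(dist)
    return missing


if __name__ == "__main__":
    missing = missing_packages(PIP_TO_MODULE)
    print("Missing packages:", ", ".join(missing) if missing else "none")
```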


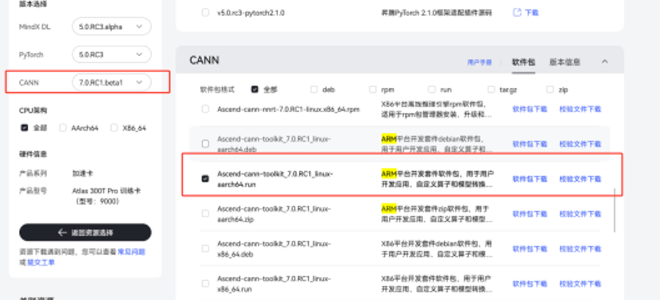

5. Install CANN

CANN does not support Python versions later than 3.9.7.
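A quick guard against the version limit can be run before starting the CANN installer. This is a minimal sketch that takes the 3.9.7 ceiling from the sentence above as given. It has not been checked against the CANN release notes, and treating 3.9.7 itself as supported is an assumption.

```python
import sys
from typing import Sequence, Tuple

# Ceiling quoted in this guide; treated as the author's observation.
ASSUMED_MAX_SUPPORTED: Tuple[int, int, int] = (3, 9, 7)


def python_within_limit(
    version: Sequence[int], limit: Sequence[int] = ASSUMED_MAX_SUPPORTED
) -> bool:
    """True if `version` is not newer than `limit` (tuple comparison)."""
    return tuple(version[:3]) <= tuple(limit[:3])


if __name__ == "__main__":
    current = sys.version_info[:3]
    verdict = "within" if python_within_limit(current) else "above"
    print("Python {}.{}.{} is {} the assumed limit".format(*current, verdict))
```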

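Reference: Installation steps (openEuler 22.03) - Installing dependencies - Installing the development environment - … - Documentation home - Ascend Community (hiascend.com)

- Install CANN: download the matching CANN package from the Resource Download Center: Resource Download Center - Ascend Community (hiascend.com)

- The latest CANN release for the Arm architecture:

- Download:

wget https://ascend-repo.obs.cn-east-2.myhuaweicloud.com/CANN/CANN%207.0.RC1/Ascend-cann-toolkit_7.0.RC1_linux-aarch64.run

- After downloading it to the npu directory, make it executable:

chmod +x Ascend-cann-toolkit_7.0.RC1_linux-aarch64.run

- Run the installer, setting the install directory to /usr/local/Ascend:

./Ascend-cann-toolkit_7.0.RC1_linux-aarch64.run --install-path=/usr/local/Ascend --full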

6. Install MindSpore

Reference: MindSpore official website

Install gcc:

sudo yum install gcc -y

Uninstall the previously installed packages:

pip uninstall te topi hccl -y

Install:

pip install sympy

pip install /usr/local/Ascend/ascend-toolkit/latest/lib64/te-*-py3-none-any.whl

pip install /usr/local/Ascend/ascend-toolkit/latest/lib64/hccl-*-py3-none-any.whl


Install MindSpore:

pip install https://ms-release.obs.cn-north-4.myhuaweicloud.com/2.2.0/MindSpore/unified/aarch64/mindspore-2.2.0-cp39-cp39-linux_aarch64.whl --trusted-host ms-release.obs.cn-north-4.myhuaweicloud.com -i https://pypi.tuna.tsinghua.edu.cn/simple


Configure the environment variables:

# control log level. 0-DEBUG, 1-INFO, 2-WARNING, 3-ERROR, 4-CRITICAL, default level is WARNING.

export GLOG_v=2

# Conda environmental options

LOCAL_ASCEND=/usr/local/Ascend # the root directory of run package

# lib libraries that the run package depends on

export LD_LIBRARY_PATH=${LOCAL_ASCEND}/ascend-toolkit/latest/lib64:${LOCAL_ASCEND}/driver/lib64:${LOCAL_ASCEND}/ascend-toolkit/latest/opp/built-in/op_impl/ai_core/tbe/op_tiling:${LD_LIBRARY_PATH}

# Environment variables that must be configured

## TBE operator implementation tool path

export TBE_IMPL_PATH=${LOCAL_ASCEND}/ascend-toolkit/latest/opp/built-in/op_impl/ai_core/tbe

## OPP path

export ASCEND_OPP_PATH=${LOCAL_ASCEND}/ascend-toolkit/latest/opp

## AICPU path

export ASCEND_AICPU_PATH=${ASCEND_OPP_PATH}/..

## TBE operator compilation tool path

export PATH=${LOCAL_ASCEND}/ascend-toolkit/latest/compiler/ccec_compiler/bin/:${PATH}

## Python library that TBE implementation depends on

export PYTHONPATH=${TBE_IMPL_PATH}:${PYTHONPATH}
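Most failures at this stage come from one of these directories not existing, for example because CANN was installed to a different root. The sketch below rebuilds the same paths from the install root and lists the ones that are missing on disk. The keys `CCEC_COMPILER_BIN` and `DRIVER_LIB64` are labels invented for this example, not real environment variable names.

```python
import os
import posixpath
from typing import Dict, List


def ascend_paths(root: str = "/usr/local/Ascend") -> Dict[str, str]:
    """Derive the directories referenced by the exports above from `root`."""
    toolkit = posixpath.join(root, "ascend-toolkit", "latest")
    opp = posixpath.join(toolkit, "opp")
    return {
        "TBE_IMPL_PATH": posixpath.join(opp, "built-in", "op_impl", "ai_core", "tbe"),
        "ASCEND_OPP_PATH": opp,
        # The shell snippet uses ${ASCEND_OPP_PATH}/.., i.e. the toolkit directory.
        "ASCEND_AICPU_PATH": toolkit,
        # Hypothetical labels for the directories added to PATH and LD_LIBRARY_PATH.
        "CCEC_COMPILER_BIN": posixpath.join(toolkit, "compiler", "ccec_compiler", "bin"),
        "DRIVER_LIB64": posixpath.join(root, "driver", "lib64"),
    }


def missing_dirs(paths: Dict[str, str]) -> List[str]:
    """Return the keys whose directory does not exist on this machine."""
    return [name for name, path in paths.items() if not os.path.isdir(path)]


if __name__ == "__main__":
    paths = ascend_paths()
    for name in missing_dirs(paths):
        print("Missing directory for {}: {}".format(name, paths[name]))
```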


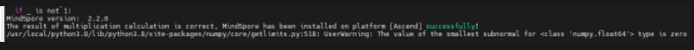

7. Verify the installation

python -c "import mindspore;mindspore.set_context(device_target='Ascend');mindspore.run_check()"


验证没问题

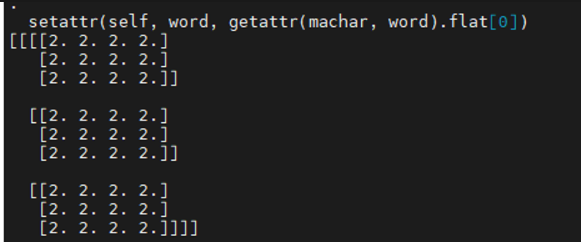

在python命令行中键入下列语句,输出正确,没问题

import numpy as np

import mindspore as ms

import mindspore.ops as ops

ms.set_context(device_target="Ascend")

x = ms.Tensor(np.ones([1,3,3,4]).astype(np.float32))

y = ms.Tensor(np.ones([1,3,3,4]).astype(np.float32))

print(ops.add(x, y))


8. Download the project source code and model files

scp -r -P 25322 ./models root@180.169.210.135:/var/lib/docker/models
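Model files are large, and a copy that was interrupted can fail much later in confusing ways. The original steps do not include a verification pass, so the following is an optional sketch: it builds a SHA-256 manifest of a directory that can be generated on both machines and compared. The function names and the default `./models` path are assumptions of this example.

```python
import hashlib
import os
from typing import Dict


def file_sha256(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large checkpoints do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def directory_manifest(root: str) -> Dict[str, str]:
    """Map each file's path relative to `root` to its SHA-256 digest."""
    manifest = {}
    for current, _dirs, files in os.walk(root):
        for name in files:
            full_path = os.path.join(current, name)
            manifest[os.path.relpath(full_path, root)] = file_sha256(full_path)
    return manifest


if __name__ == "__main__":
    # Run on both sides of the copy and diff the two outputs.
    for relative, digest in sorted(directory_manifest("./models").items()):
        print(digest, relative)
```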

9. Install MindPet

cd /usr/local/mindpet_code

wget https://gitee.com/mindspore-lab/mindpet/repository/archive/master.zip

unzip master.zip

cd mindpet-master/

python set_up.py bdist_wheel

pip install dist/mindpet-1.0.2-py3-none-any.whl

Installation is complete.

10. Install MindFormers

cd /usr/local/mindformers_code

wget https://gitee.com/mindspore/mindformers/repository/archive/dev.zip

unzip dev.zip

cd mindformers-dev

bash build.sh
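Once the builds finish, the installed versions can be confirmed without importing the packages (importing MindSpore initializes the device runtime). This is a minimal sketch using `importlib.metadata` from the standard library. It assumes the distributions are registered under the names mindspore, mindpet, and mindformers.

```python
from importlib import metadata
from typing import Optional


def installed_version(distribution: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if absent."""
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Assumed distribution names for the three packages installed above.
    for name in ("mindspore", "mindpet", "mindformers"):
        version = installed_version(name)
        print("{}: {}".format(name, version if version else "not installed"))
```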