Deploying a Kafka Cluster with Docker Compose

Author: 小舞很执着 | 2024-07-14 18:54:57

Kafka cluster deployment

1. ZooKeeper preparation

mkdir data/zookeeper-{1,2,3}/{data,datalog,logs,conf} -p

cat >data/zookeeper-1/conf/zoo.cfg<<EOF
clientPort=2181
dataDir=/data
dataLogDir=/datalog
tickTime=2000
initLimit=5
syncLimit=2
autopurge.snapRetainCount=3
autopurge.purgeInterval=0
maxClientCnxns=60
standaloneEnabled=true
admin.enableServer=true
# These server entries match the container names created by docker-compose below
server.1=zookeeper-1:2888:3888
server.2=zookeeper-2:2888:3888
server.3=zookeeper-3:2888:3888
EOF

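# Quote the delimiter so the shell does not try to expand ${zookeeper.log.dir} and similar placeholders
cat >data/zookeeper-1/conf/log4j.properties<<'EOF'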

# Copyright 2012 The Apache Software Foundation

#

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# Define some default values that can be overridden by system properties

zookeeper.root.logger=INFO, CONSOLE

zookeeper.console.threshold=INFO

zookeeper.log.dir=/logs

zookeeper.log.file=zookeeper.log

zookeeper.log.threshold=INFO

zookeeper.log.maxfilesize=256MB

zookeeper.log.maxbackupindex=20

zookeeper.tracelog.dir=${zookeeper.log.dir}

zookeeper.tracelog.file=zookeeper_trace.log

log4j.rootLogger=${zookeeper.root.logger}

#

# console

# Add "console" to rootlogger above if you want to use this

#

log4j.appender.CONSOLE=org.apache.log4j.ConsoleAppender

log4j.appender.CONSOLE.Threshold=${zookeeper.console.threshold}

log4j.appender.CONSOLE.layout=org.apache.log4j.PatternLayout

log4j.appender.CONSOLE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L] - %m%n

#

# Add ROLLINGFILE to rootLogger to get log file output

#

log4j.appender.ROLLINGFILE=org.apache.log4j.RollingFileAppender

log4j.appender.ROLLINGFILE.Threshold=${zookeeper.log.threshold}

log4j.appender.ROLLINGFILE.File=${zookeeper.log.dir}/${zookeeper.log.file}

log4j.appender.ROLLINGFILE.MaxFileSize=${zookeeper.log.maxfilesize}

log4j.appender.ROLLINGFILE.MaxBackupIndex=${zookeeper.log.maxbackupindex}

log4j.appender.ROLLINGFILE.layout=org.apache.log4j.PatternLayout

log4j.appender.ROLLINGFILE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L] - %m%n

#

# Add TRACEFILE to rootLogger to get log file output

# Log TRACE level and above messages to a log file

#

log4j.appender.TRACEFILE=org.apache.log4j.FileAppender

log4j.appender.TRACEFILE.Threshold=TRACE

log4j.appender.TRACEFILE.File=${zookeeper.tracelog.dir}/${zookeeper.tracelog.file}

log4j.appender.TRACEFILE.layout=org.apache.log4j.PatternLayout

### Notice we are including log4j's NDC here (%x)

log4j.appender.TRACEFILE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L][%x] - %m%n

#

# zk audit logging

#

zookeeper.auditlog.file=zookeeper_audit.log

zookeeper.auditlog.threshold=INFO

audit.logger=INFO, RFAAUDIT

log4j.logger.org.apache.zookeeper.audit.Log4jAuditLogger=${audit.logger}

log4j.additivity.org.apache.zookeeper.audit.Log4jAuditLogger=false

log4j.appender.RFAAUDIT=org.apache.log4j.RollingFileAppender

log4j.appender.RFAAUDIT.File=${zookeeper.log.dir}/${zookeeper.auditlog.file}

log4j.appender.RFAAUDIT.layout=org.apache.log4j.PatternLayout

log4j.appender.RFAAUDIT.layout.ConversionPattern=%d{ISO8601} %p %c{2}: %m%n

log4j.appender.RFAAUDIT.Threshold=${zookeeper.auditlog.threshold}

# Max log file size of 10MB

log4j.appender.RFAAUDIT.MaxFileSize=10MB

log4j.appender.RFAAUDIT.MaxBackupIndex=10

EOF
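
The two heredocs write their files only under data/zookeeper-1/conf, while the compose file mounts a separate conf directory into each of the three nodes. A minimal sketch of one way to keep the nodes consistent, assuming the same zoo.cfg and log4j.properties are meant for all three (the node ID comes from ZOO_MY_ID in the compose file, not from these files). The helper name `copy_zk_conf` is hypothetical.

```shell
# Copy the node-1 config files into the conf directories of nodes 2 and 3.
# $1 is the directory that contains data/ (defaults to the current directory).
copy_zk_conf() (
  base="${1:-.}"
  src="$base/data/zookeeper-1/conf"
  for i in 2 3; do
    dest="$base/data/zookeeper-$i/conf"
    mkdir -p "$dest"
    for f in zoo.cfg log4j.properties; do
      # Copy only files that exist, so a partial setup does not abort the loop.
      if [ -f "$src/$f" ]; then
        cp "$src/$f" "$dest/$f"
      fi
    done
  done
)

copy_zk_conf .
```

Run it from the directory where the mkdir command above was executed.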

2. Kafka preparation
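
No commands are listed for this step. A minimal sketch, assuming it mirrors the ZooKeeper step and only needs the host directories that the compose file bind-mounts into k1, k2 and k3 (./data/kafka1 to ./data/kafka3):

```shell
# Create one host directory per broker for the /bitnami/kafka bind mounts.
for i in 1 2 3; do
  mkdir -p "data/kafka$i"
done
```

Docker would also create missing bind-mount directories on its own, so this mainly makes the layout explicit before the first start.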

3. Edit the docker-compose.yml file

version: '3'
# ZooKeeper cluster
# Each entry under services corresponds to one Docker container (one ZooKeeper node)
# The cluster shares one network, named zookeeper-net
networks:
  zookeeper-net:
    name: zookeeper-net
    driver: bridge
services:

  zookeeper-1:
    image: zookeeper
    container_name: zookeeper-1
    restart: always
    # Port mappings between the container and the host
    ports:
      - 2181:2181
      - 8081:8080
    # Bind-mount host directories into the container so data is shared with the host
    volumes:
      - "./data/zookeeper-1/data:/data"
      - "./data/zookeeper-1/datalog:/datalog"
      - "./data/zookeeper-1/logs:/logs"
      - "./data/zookeeper-1/conf:/conf"
    # Environment variables for the container
    environment:
      # ID of this ZooKeeper instance
      ZOO_MY_ID: 1
      # Host and port list for the whole ZooKeeper ensemble
      ZOO_SERVERS: server.1=zookeeper-1:2888:3888 server.2=zookeeper-2:2888:3888 server.3=zookeeper-3:2888:3888
    networks:
      - zookeeper-net

  zookeeper-2:
    image: zookeeper
    container_name: zookeeper-2
    restart: always
    # Port mappings between the container and the host
    ports:
      - 2182:2181
      - 8082:8080
    # Bind-mount host directories into the container so data is shared with the host
    volumes:
      - "./data/zookeeper-2/data:/data"
      - "./data/zookeeper-2/datalog:/datalog"
      - "./data/zookeeper-2/logs:/logs"
      - "./data/zookeeper-2/conf:/conf"
    # Environment variables for the container
    environment:
      # ID of this ZooKeeper instance
      ZOO_MY_ID: 2
      # Host and port list for the whole ZooKeeper ensemble
      ZOO_SERVERS: server.1=zookeeper-1:2888:3888 server.2=zookeeper-2:2888:3888 server.3=zookeeper-3:2888:3888
    networks:
      - zookeeper-net

  zookeeper-3:
    image: zookeeper
    container_name: zookeeper-3
    restart: always
    # Port mappings between the container and the host
    ports:
      - 2183:2181
      - 8083:8080
    # Bind-mount host directories into the container so data is shared with the host
    volumes:
      - "./data/zookeeper-3/data:/data"
      - "./data/zookeeper-3/datalog:/datalog"
      - "./data/zookeeper-3/logs:/logs"
      - "./data/zookeeper-3/conf:/conf"
    # Environment variables for the container
    environment:
      # ID of this ZooKeeper instance
      ZOO_MY_ID: 3
      # Host and port list for the whole ZooKeeper ensemble
      ZOO_SERVERS: server.1=zookeeper-1:2888:3888 server.2=zookeeper-2:2888:3888 server.3=zookeeper-3:2888:3888
    networks:
      - zookeeper-net

  k1:
    image: 'bitnami/kafka:3.2.0'
    restart: always
    container_name: k1
    user: root
    ports:
      - "9092:9092"
      - "9999:9999"
    environment:
      - KAFKA_CFG_ZOOKEEPER_CONNECT=zookeeper-1:2181,zookeeper-2:2181,zookeeper-3:2181
      - ALLOW_PLAINTEXT_LISTENER=yes
      - KAFKA_BROKER_ID=0
      - KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://10.10.111.33:9092
      - KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092
      - KAFKA_CFG_NUM_PARTITIONS=3
      - KAFKA_CFG_OFFSETS_TOPIC_REPLICATION_FACTOR=3
      - KAFKA_HEAP_OPTS=-Xmx512M -Xms256M
      - JMX_PORT=9999
    volumes:
      - ./data/kafka1:/bitnami/kafka:rw
    networks:
      - zookeeper-net

  k2:
    image: 'bitnami/kafka:3.2.0'
    restart: always
    container_name: k2
    user: root
    ports:
      - "9093:9092"
    environment:
      - KAFKA_CFG_ZOOKEEPER_CONNECT=zookeeper-1:2181,zookeeper-2:2181,zookeeper-3:2181
      - ALLOW_PLAINTEXT_LISTENER=yes
      - KAFKA_BROKER_ID=1
      - KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://10.10.111.33:9093
      - KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092
      - KAFKA_CFG_NUM_PARTITIONS=3
      - KAFKA_CFG_OFFSETS_TOPIC_REPLICATION_FACTOR=3
      - KAFKA_HEAP_OPTS=-Xmx512M -Xms256M
    volumes:
      - ./data/kafka2:/bitnami/kafka:rw
    networks:
      - zookeeper-net

  k3:
    image: 'bitnami/kafka:3.2.0'
    restart: always
    container_name: k3
    user: root
    ports:
      - "9094:9092"
    environment:
      - KAFKA_CFG_ZOOKEEPER_CONNECT=zookeeper-1:2181,zookeeper-2:2181,zookeeper-3:2181
      - ALLOW_PLAINTEXT_LISTENER=yes
      - KAFKA_BROKER_ID=2
      - KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://10.10.111.33:9094
      - KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092
      # Same KAFKA_CFG_ prefix as on k1 and k2, so all three brokers get identical settings
      - KAFKA_CFG_NUM_PARTITIONS=3
      - KAFKA_CFG_OFFSETS_TOPIC_REPLICATION_FACTOR=3
      - KAFKA_HEAP_OPTS=-Xmx512M -Xms256M
    volumes:
      - ./data/kafka3:/bitnami/kafka:rw
    networks:
      - zookeeper-net

  kafka-manager:
    image: hlebalbau/kafka-manager
    restart: always
    container_name: kafka-manager
    hostname: kafka-manager
    network_mode: zookeeper-net
    ports:
      - 9000:9000
    environment:
      ZK_HOSTS: zookeeper-1:2181,zookeeper-2:2181,zookeeper-3:2181
      KAFKA_BROKERS: k1:9092,k2:9092,k3:9092
      APPLICATION_SECRET: letmein
      KAFKA_MANAGER_AUTH_ENABLED: "true" # enable authentication
      KAFKA_MANAGER_USERNAME: "admin" # username
      KAFKA_MANAGER_PASSWORD: "admin" # password
      KM_ARGS: -Djava.net.preferIPv4Stack=true
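
The three brokers hardcode 10.10.111.33 in KAFKA_ADVERTISED_LISTENERS. Compose substitutes `${VAR}` references with values from a `.env` file in the project directory, so one option is to keep the address in that file. A minimal sketch; the variable name `HOST_IP` and the helper `write_env` are hypothetical, not part of the original setup.

```shell
# Write the address that brokers should advertise into a Compose .env file.
# HOST_IP is a hypothetical variable name; it would be referenced in
# docker-compose.yml as:
#   KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://${HOST_IP}:9092
write_env() (
  ip="$1"                 # address clients use to reach the Docker host
  env_file="${2:-.env}"   # target file, defaults to .env in the current directory
  if [ -z "$ip" ]; then
    echo "usage: write_env <host-ip> [env-file]" >&2
    exit 1
  fi
  printf 'HOST_IP=%s\n' "$ip" > "$env_file"
)

write_env 10.10.111.33 .env
```

With this in place, moving the cluster to another host only requires changing the one line in `.env`.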

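Kafka configuration explained (these are KRaft-mode settings, where Kafka replaces ZooKeeper; the compose file above runs in ZooKeeper mode instead):

environment:
  ### General settings
  # Enable KRaft, i.e. Kafka manages its own metadata instead of using ZooKeeper
  - KAFKA_ENABLE_KRAFT=yes
  # Kafka roles: this node acts as both broker and controller
  - KAFKA_CFG_PROCESS_ROLES=broker,controller
  # Name of the listener used for controller requests
  - KAFKA_CFG_CONTROLLER_LISTENER_NAMES=CONTROLLER
  # Socket listeners the Kafka server binds to
  - KAFKA_CFG_LISTENERS=PLAINTEXT://:9092,CONTROLLER://:9093
  # Security protocol for each listener
  - KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT
  # Cluster ID used in KRaft mode; every Kafka node in the cluster must be initialized with the same ID. Generate a UUID for it.
  - KAFKA_KRAFT_CLUSTER_ID=LelMdIFQkiUFvXCEcqRWA
  # Controller quorum members, as id@host:port
  - KAFKA_CFG_CONTROLLER_QUORUM_VOTERS=1@kafka11:9093,2@kafka22:9093,3@kafka33:9093
  # Allow the PLAINTEXT listener (default: false); not recommended in production
  - ALLOW_PLAINTEXT_LISTENER=yes
  # Maximum and initial heap size for the broker
  - KAFKA_HEAP_OPTS=-Xmx512M -Xms256M
  # Do not create topics automatically
  - KAFKA_CFG_AUTO_CREATE_TOPICS_ENABLE=false
  ### Broker settings
  # Address advertised to external clients (host IP address and port)
  - KAFKA_CFG_ADVERTISED_LISTENERS=PLAINTEXT://192.168.1.54:9292
  # broker.id, must be unique within the cluster
  - KAFKA_BROKER_ID=1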
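
The cluster ID comment asks for a freshly generated UUID. Kafka ships `kafka-storage.sh random-uuid` for this. When that script is not at hand, the sketch below produces a value of the same shape: 16 random bytes encoded as 22 characters of URL-safe base64 without padding. The helper name `gen_cluster_id` is hypothetical, and the output is only an illustration of the format, not the value used by the author.

```shell
# Produce a 22-character URL-safe base64 string from 16 random bytes,
# the textual form Kafka uses for cluster IDs.
gen_cluster_id() (
  while :; do
    id=$(head -c 16 /dev/urandom | base64 | tr '+/' '-_' | tr -d '=\n')
    case "$id" in
      -*) ;;                                   # retry: avoid a leading dash
      *) printf '%s\n' "$id"; exit 0 ;;
    esac
  done
)

gen_cluster_id
```

The same generated value would then go into KAFKA_KRAFT_CLUSTER_ID on every node of the cluster.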

4. Start the services

docker compose up -d
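
Once the containers are up, `docker compose ps` lists their state and `docker compose logs k1` shows a broker's log. To confirm that the ZooKeeper ensemble actually formed, each node can be asked for its role. A minimal sketch, assuming `zkServer.sh` is on the PATH inside the official zookeeper image; the helper name `zk_status` is hypothetical.

```shell
# Print each ZooKeeper node's role (leader or follower) as reported by zkServer.sh.
zk_status() (
  for node in zookeeper-1 zookeeper-2 zookeeper-3; do
    echo "== $node"
    docker exec "$node" zkServer.sh status || echo "$node: status check failed" >&2
  done
)
```

Calling `zk_status` on a healthy three-node ensemble should report one leader and two followers.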

5. Test Kafka

# Enter a Kafka container, create a topic, then check that it exists. If it does, the Kafka cluster is up.
docker exec -it k1 bash
# Create the topic
kafka-topics.sh --create --bootstrap-server 10.10.111.33:9092 --replication-factor 1 --partitions 3 --topic ODSDataSync
# Describe the topic
kafka-topics.sh --bootstrap-server 10.10.111.33:9092 --describe --topic ODSDataSync
# Enter a second broker to confirm the topic is visible from there as well
docker exec -it k2 bash
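
The check above uses a replication factor of 1, so it does not exercise replication across the three brokers. A sketch of a fuller end-to-end check, assuming the container names k1 and k2 from the compose file and that the Kafka scripts are on the PATH inside the containers (as the commands above imply). The helper name `smoke_test` and the topic name in the usage note are hypothetical.

```shell
# Create a topic replicated to all three brokers, produce one message through k1,
# then read it back through k2.
# $1: bootstrap server, e.g. 10.10.111.33:9092   $2: topic name
smoke_test() (
  bootstrap="$1"
  topic="$2"
  docker exec k1 kafka-topics.sh --create --if-not-exists \
    --bootstrap-server "$bootstrap" \
    --replication-factor 3 --partitions 3 --topic "$topic" &&
  echo "hello" | docker exec -i k1 kafka-console-producer.sh \
    --bootstrap-server "$bootstrap" --topic "$topic" &&
  docker exec k2 kafka-console-consumer.sh \
    --bootstrap-server "$bootstrap" --topic "$topic" \
    --from-beginning --max-messages 1 --timeout-ms 10000
)
```

Usage: `smoke_test 10.10.111.33:9092 smoke-test-topic`. The function returns a non-zero status if any of the three steps fails.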

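Note:

The host port in each service's ports mapping must match the port in that service's KAFKA_ADVERTISED_LISTENERS under environment. For example, k2 maps 9093:9092 and advertises PLAINTEXT://10.10.111.33:9093.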

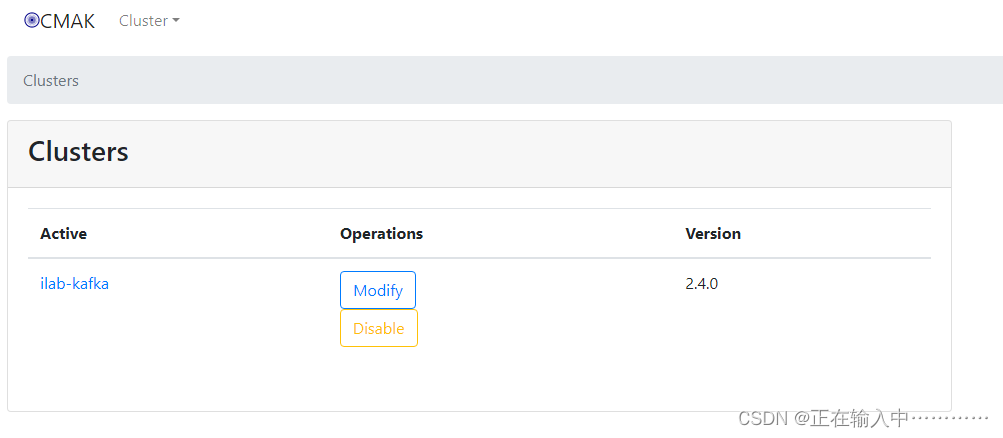
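
The port rule in the note can be checked mechanically. A minimal sketch, assuming two-part `host:container` mappings like the ones in the compose file (it does not handle the three-part `ip:host:container` form); the helper name `ports_match` is hypothetical.

```shell
# Return 0 when the host side of a "host:container" port mapping equals the
# port at the end of an advertised listener such as PLAINTEXT://10.10.111.33:9093.
ports_match() (
  mapping="$1"
  listener="$2"
  host_port="${mapping%%:*}"    # text before the first colon
  adv_port="${listener##*:}"    # text after the last colon
  [ "$host_port" = "$adv_port" ]
)

ports_match "9093:9092" "PLAINTEXT://10.10.111.33:9093" && echo "k2: ok"
ports_match "9094:9092" "PLAINTEXT://10.10.111.33:9092" || echo "mismatch"
```

The first call uses k2's actual values and succeeds; the second pairs k3's mapping with the wrong advertised port to show the failure case.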

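6. Web monitoring and management

Open http://192.168.1.36:9000 in a browser and enter the username and password to reach the monitoring page.

Follow the steps in the figure below to add the Kafka cluster to the monitor.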
