Production issue: disabling Kafka consumer auto-commit has no effect in Spring Boot

Author: 小蓝xlanll | 2024-03-01 11:39:32


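1 Reproducing the issue

Automatic offset commits are turned off on the consumer with enable-auto-commit: false. Even so, after the consumer processes messages, querying the offset for the group-id shows that it has still advanced.

Configuration profile: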

    spring:
      application:
        name: tutorial # service name registered with the registry server
      devtools:
        restart:
          enabled: true
      kafka:
        bootstrap-servers: 192.168.211.129:9092
        producer:
          retries: 0
          batch-size: 16384
          buffer-memory: 33554432
          key-serializer: org.apache.kafka.common.serialization.StringSerializer
          value-serializer: org.apache.kafka.common.serialization.StringSerializer
          properties:
            linger.ms: 1
        consumer:
          enable-auto-commit: false
          auto-commit-interval: 100ms
          key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
          value-deserializer: org.apache.kafka.common.serialization.StringDeserializer
          properties:
            session.timeout.ms: 15000
          group-id: tutorial

Consumer:

    package com.monkey.tutorial.modules.kafka;

    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.kafka.annotation.KafkaListener;
    import org.springframework.kafka.support.Acknowledgment;
    import org.springframework.stereotype.Service;

    /**
     * Kafka consumer example.
     *
     * @author xindaqi
     * @date 2022-01-20 12:01
     */
    @Service
    public class Consumer {

        private static final Logger logger = LoggerFactory.getLogger(Consumer.class);

        // The groupId given here ("g1") takes precedence over spring.kafka.consumer.group-id.
        @KafkaListener(topics = {"tutorial"}, groupId = "g1")
        public void consumer1(ConsumerRecord<String, String> consumerRecord) {
            String topic = consumerRecord.topic();
            String data = consumerRecord.value();
            logger.info(">>>>>>>>>>Kafka consumer1, topic:{}, data:{}", topic, data);
        }
    }

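2 Cause

In the Spring Kafka package, the consumer's enable-auto-commit setting cannot be used on its own. Setting only enable-auto-commit: false does not stop offsets from being committed: the listener container then commits them itself after the listener returns.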

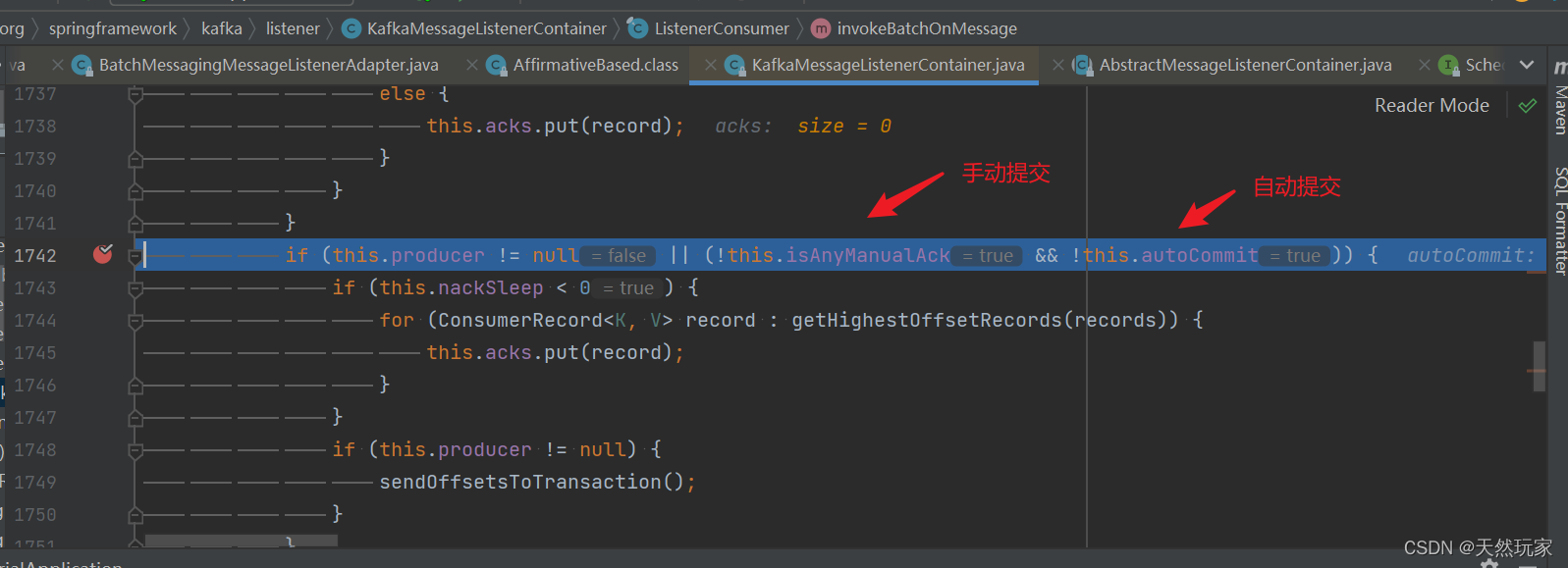

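When only enable-auto-commit: false is configured on the consumer, it appears to have no effect. Manual acknowledgment is also false at that point, so the expression !this.isAnyManualAck && !this.autoCommit evaluates to true and the offsets are committed anyway.

Method: org.springframework.kafka.listener.KafkaMessageListenerContainer.ListenerConsumer#invokeBatchOnMessage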
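The condition quoted above can be tabulated with a small standalone sketch. The class and method names (CommitDecision, containerCommits) are hypothetical and only mirror the boolean expression; they are not part of Spring Kafka, and the real container logic contains more than this one check.

```java
public class CommitDecision {

    /**
     * Mirrors the expression !isAnyManualAck && !autoCommit: the result is
     * true only when no manual ack mode is configured and the Kafka client's
     * own auto-commit is disabled.
     */
    public static boolean containerCommits(boolean isAnyManualAck, boolean autoCommit) {
        return !isAnyManualAck && !autoCommit;
    }

    public static void main(String[] args) {
        boolean[] flags = {false, true};
        // Print all four combinations of the two flags.
        for (boolean manualAck : flags) {
            for (boolean autoCommit : flags) {
                System.out.printf("isAnyManualAck=%-5b autoCommit=%-5b -> containerCommits=%b%n",
                        manualAck, autoCommit, containerCommits(manualAck, autoCommit));
            }
        }
    }
}
```

Only the first row (both flags false) yields true, which is the situation produced by setting enable-auto-commit: false without a manual ack mode.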

3 Solution

For automatic offset commits, the enable-auto-commit parameter does not need to be configured:

    spring:
      application:
        name: tutorial # service name registered with the registry server
      devtools:
        restart:
          enabled: true
      kafka:
        bootstrap-servers: 192.168.211.129:9092
        producer:
          retries: 0
          batch-size: 16384
          buffer-memory: 33554432
          key-serializer: org.apache.kafka.common.serialization.StringSerializer
          value-serializer: org.apache.kafka.common.serialization.StringSerializer
          properties:
            linger.ms: 1
        consumer:
          auto-commit-interval: 100ms
          key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
          value-deserializer: org.apache.kafka.common.serialization.StringDeserializer
          properties:
            session.timeout.ms: 15000
          group-id: tutorial

For manual offset commits, configure both enable-auto-commit: false and ack-mode: manual:

    spring:
      application:
        name: tutorial # service name registered with the registry server
      devtools:
        restart:
          enabled: true
      kafka:
        bootstrap-servers: 192.168.211.129:9092
        producer:
          retries: 0
          batch-size: 16384
          buffer-memory: 33554432
          key-serializer: org.apache.kafka.common.serialization.StringSerializer
          value-serializer: org.apache.kafka.common.serialization.StringSerializer
          properties:
            linger.ms: 1
        consumer:
          enable-auto-commit: false
          key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
          value-deserializer: org.apache.kafka.common.serialization.StringDeserializer
          properties:
            session.timeout.ms: 15000
          group-id: tutorial
        listener:
          missing-topics-fatal: false
          type: batch
          ack-mode: manual

With manual ACK, the offset is committed from your own business logic. A sample follows:

    package com.monkey.tutorial.modules.kafka;

    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.kafka.annotation.KafkaListener;
    import org.springframework.kafka.support.Acknowledgment;
    import org.springframework.stereotype.Service;

    /**
     * Kafka consumer example with manual acknowledgment.
     *
     * @author xindaqi
     * @date 2022-01-20 12:01
     */
    @Service
    public class Consumer {

        private static final Logger logger = LoggerFactory.getLogger(Consumer.class);

        @KafkaListener(topics = {"tutorial"}, groupId = "g1")
        public void consumer1(ConsumerRecords<String, String> consumerRecords, Acknowledgment acknowledgment) {
            try {
                for (ConsumerRecord<String, String> record : consumerRecords) {
                    String topic = record.topic();
                    String data = record.value();
                    logger.info(">>>>>>>>>>Kafka consumer1, topic:{}, data:{}", topic, data);
                    // TODO business logic
                }
                // With a batch listener the Acknowledgment covers the whole batch,
                // so acknowledge once, after every record has been processed.
                acknowledgment.acknowledge();
                logger.info(">>>>>>>>>>Success--Group g1 consumer manually committed offset");
            } catch (Exception ex) {
                logger.error(">>>>>>>>Failure--Group g1 consumer manual offset commit", ex);
            }
        }
    }
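To make the effect of manual acknowledgment concrete, here is a toy in-memory model of a single partition. Everything in it (ManualAckModel, Partition, consume) is hypothetical and written only for illustration; it is not how Spring Kafka or the Kafka client is implemented. It assumes that consumption resumes from the committed offset, which in a real deployment is what happens after a restart or rebalance.

```java
import java.util.ArrayList;
import java.util.List;

public class ManualAckModel {

    /** Toy stand-in for one partition: a record log plus one committed offset. */
    public static class Partition {
        private final List<String> log = new ArrayList<>();
        private long committedOffset = 0;

        public void append(String value) {
            log.add(value);
        }

        /** Returns every record from the committed offset onward. */
        public List<String> pollFromCommitted() {
            return new ArrayList<>(log.subList((int) committedOffset, log.size()));
        }

        /** Stores the offset of the next record to read. */
        public void commit(long nextOffset) {
            committedOffset = nextOffset;
        }

        public long committedOffset() {
            return committedOffset;
        }
    }

    /** Processes one batch and commits only if the business logic succeeded. */
    public static boolean consume(Partition partition, boolean businessLogicSucceeds) {
        long start = partition.committedOffset();
        List<String> batch = partition.pollFromCommitted();
        if (!businessLogicSucceeds) {
            return false; // no acknowledge: the committed offset stays where it was
        }
        // Plays the role of acknowledgment.acknowledge() for the whole batch.
        partition.commit(start + batch.size());
        return true;
    }

    public static void main(String[] args) {
        Partition p = new Partition();
        p.append("a");
        p.append("b");
        consume(p, false);
        System.out.println("after failed batch: " + p.committedOffset());       // prints 0
        consume(p, true);
        System.out.println("after acknowledged batch: " + p.committedOffset()); // prints 2
    }
}
```

In this model the failed batch leaves the offset at 0, so the same two records are read again on the next attempt; only the acknowledged batch moves the offset to 2.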

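4 Summary

In the Spring Kafka package, the consumer's enable-auto-commit setting cannot be used on its own: if it is the only setting changed, offsets are still committed automatically.

(1) enable-auto-commit: false is meant to be paired with manual commits;

(2) For manual acknowledgment, configure enable-auto-commit: false on the consumer together with ack-mode: manual on the listener, then acknowledge manually in the consumer code.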
