idm 假冒

An opinion piece on Deep fake AI, its ethical dilemmas, and how to mitigate them.

Preface

This is a more formal piece of writing. I wrote it this way because I want to make a real contribution to the discussion of Deep fake AI and the ethical issues surrounding it. As such, it is styled as an academic essay, complete with a bibliography, and some readability may have been sacrificed in the attempt at a more professional presentation of thought.

Introduction

René Descartes, a 17th-century philosopher, proposed a thought experiment in which a hypothetical demon could make one experience a reality that does not exist. From this, Descartes claimed that our perception of reality through the senses is unreliable and ought not to be trusted. Today, the advent of Deep fake AI affirms Descartes’ claim and delivers the power to distort reality not only to hypothetical demons but also to specialists with the right set of computer software. Deep fake AI, a relatively new process for editing video, has made media falsification more accessible. It allows video to be altered in a way that makes it indistinguishable from genuine media. While media falsification already exists, Deep fake AI changes the scope, scale, and sophistication of the technology involved. This technological advancement threatens the authenticity of video and compromises our grasp of reality through sensory experience, which further emphasizes the need for the general public to be aware of the veracity of political videos. Moreover, individual agency becomes increasingly important, not only regarding the content of the media we absorb but also the medium and mode in which it is delivered. In this article, I will examine Deep fake AI by exploring the historical precedents of media falsification and Deep fake AI’s socio-political relationship with users of technology, and I will explain how public deliberation grants clarity against the false narratives promoted by Deep fake AI.

Studying Historical Precedents and Reviewing Case Studies

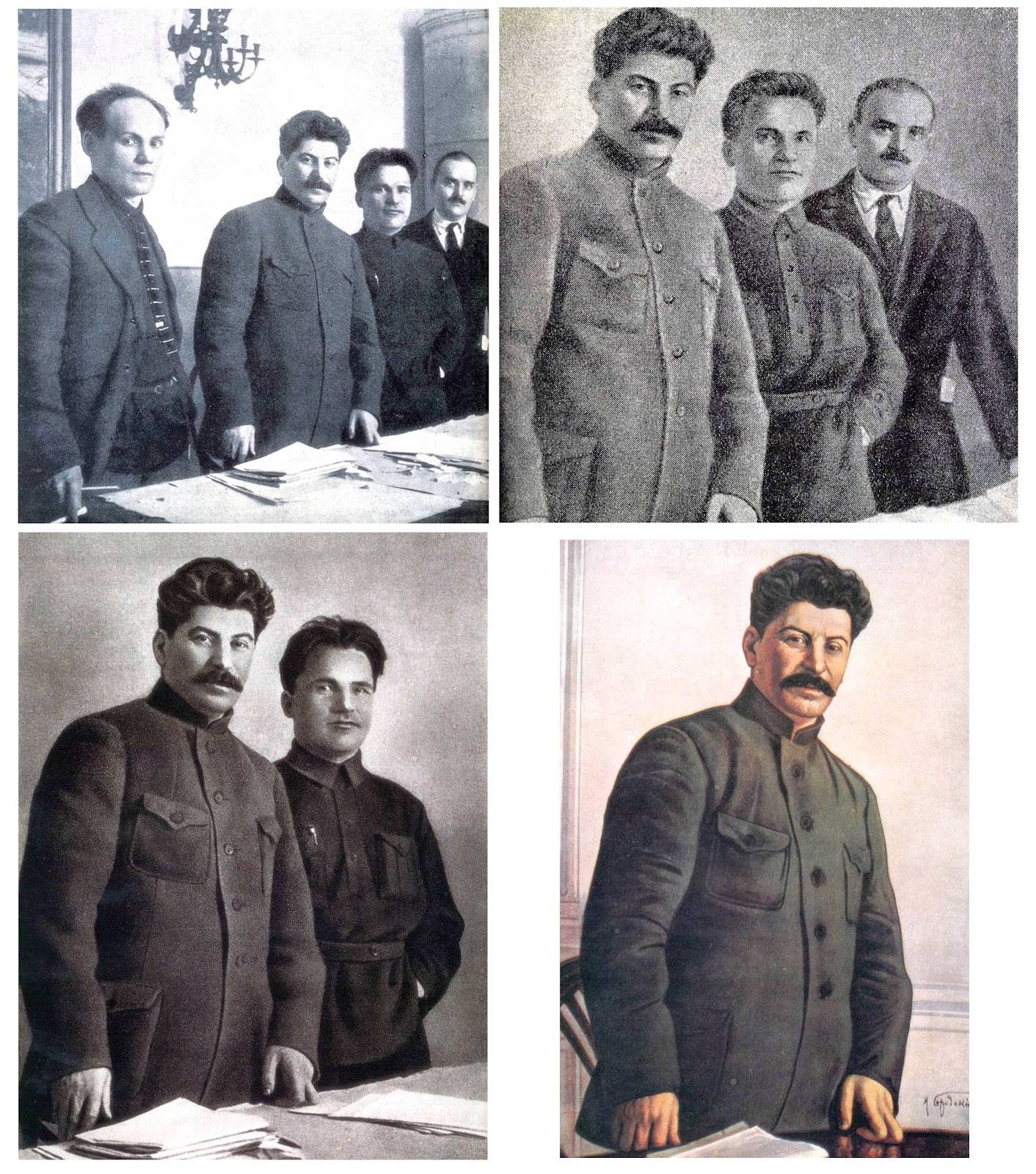

One specific historical precedent of media falsification is the rampant censorship used during the Soviet era to silence Joseph Stalin’s political rivals. In his book The Commissar Vanishes, David King notes that “So much falsification took place during the Stalin years…” (Ch. 1) and that the alteration of media was a means to control public perception and memory. Specifically, King finds Soviet dissidents, like Leon Trotsky, completely removed from official Soviet photographs through airbrushing and painting over faces. Stalin’s photographic vandalism was so effective at manipulating the general public largely because of the novelty of photography at the time. Awareness of photographic editing was almost non-existent, which meant no one would question the authenticity of a photo. This resulted in serious socio-political ramifications, like the oppression of the Left Opposition, a rival political faction within Russia.

The fundamental premise that underlies both Deep fake AI and Stalin’s use of photographic manipulation, and that allows both to be effective at spreading fake news, is novelty. A more relevant and modern example is Adobe Photoshop. Prior to the emergence of Photoshop in 1990, images were for the most part taken as reliable pieces of evidence. This was evident in their widespread use as evidence in courts of law, journalistic pieces, and even scientific literature. That changed with the introduction of Photoshop: part of the reason there is more skepticism surrounding digital images is how accessible Photoshop makes the manipulation of those photos. Simply being in possession of an incriminating photo or dubious image is no longer a reliable way of ascertaining the truth, since the photo could easily have been doctored. Likewise, Deep fake AI introduces that same accessibility but with a larger scope and greater sophistication. As John Fletcher notes in his article, Deep fakes, Artificial Intelligence, and Some Kind of Dystopia: The New Faces of Online Post-Fact Performance: “Deep fakes mean[s] that anyone with a sufficiently powerful laptop [can] fabricate videos practically indistinguishable from authentic documentation” (456).

On a side note, Fletcher’s article is very much worth a skim, if not a full read. It provides an excellent math-free introduction to AI and makes several excellent points about the evolving Deep fake dilemma.

I agree with Fletcher that the accessibility of Deep fake AI has allowed for the increased production of disingenuous media. However, I cannot agree with the overall point that anyone possesses that ability. Using the software requires a high level of technical understanding, which deters most users who don’t possess that skill. Much as making convincing Photoshop images is a skill set that must be learned and developed, being able to use Deep fake AI is a skill set unto its own: even without the mathematical understanding, simply implementing the algorithm with a desired data set takes a certain level of understanding. Even so, the implications of the availability that does exist are especially grim given its socio-political ramifications. Travis Wagner and Ashley Blewer demonstrate these disastrous consequences in their article, The Word Real Is No Longer Real, where they write: “[Deep fake AI] remains particularly troubling, primarily for its reification of women’s bodies as a thing to be visually consumed” (33). The article discusses how Deep fake AI is being used to superimpose images of women onto pornographic videos. This misogynistic reduction of women to sexual objects to be consumed is one of many troubling issues with Deep fake AI.

In essence, the novelty of Deep fake AI is a key reason for its efficacy as a tool for spreading fake news and creating socio-political discord, as King showed with Soviet-era photography. However, the audience also plays a critical role in deterring fake news.

The Complex Socio-Political Nature of Deep fake AI and How to Mitigate False Narratives

Individual agency becomes integral to any method of resolving the pervasive Deep fake AI issue. In recent discussions of Deep fake AI and media legitimacy, a controversial issue has been the use of Deep fake AI by deviants to spread doubt and manipulate the public. On one hand, scholars like Fletcher argue that Deep fake AI’s domination of new media is inevitable and imminent, and that “Traditional mechanisms of trust and epistemic rigor prove outclassed” (457). On the other hand, scholars with views similar to those of Wagner and Blewer argue that the problem of Deep fake AI, while concerning, can be addressed by promoting digital literacy among professionals across relevant fields. In the words of Wagner and Blewer: “[We] reject[] the inevitability of deep fakes… visual information literacy can stifle the distribution of violently sexist deep fakes” (32). In sum, the issue is whether Deep fake AI is unavoidable or whether a solution lies in technical literacy.

My own view is that the panic over Deep fake AI is slightly hyperbolized, but that this hyperbole is necessary in order to help inoculate the public against the technology’s influence. Understanding the malicious deeds that can be performed with this type of technology serves as a cautionary tale that alerts the public. That, in itself, is already a powerful means of mitigating Deep fake AI and the serious ramifications it comes with. For example, the credibility of photos was compromised once the public became aware of how easy it is to use Photoshop to doctor images. This might make it seem as though I favor Wagner and Blewer’s approach of technical literacy; however, I also agree with Fletcher that Deep fake AI is imminent and nearly impossible to combat. Additionally, I accept Fletcher’s main argument that “a critical awareness of online media and AI manipulations of the attention economy must similarly move from the margins to the center of our field’s awareness” (471). This differs from Wagner and Blewer’s argument, since they assert a technical literacy approach, whereas Fletcher simply advocates for awareness and attention. Still, I can see the merits of promoting visual information literacy as a viable solution, especially given the role an individual has in the spreadability of fake news.

Ultimately, I would argue that public awareness of Deep fake AI is a powerful enough mechanism to diminish its negative effects. I believe this mainly because anything more than awareness has diminishing returns: educating people on the dangers of Deep fake AI is akin to educating them on media deception in general. Some might object that public awareness could also incentivize individuals to exploit Deep fake AI. I would reply that the utilization of Deep fake AI requires deep mastery and technical understanding of the software, and that public awareness would still allow false media to be recognized. The objection is like saying that the public release of Photoshop invited deviants to spread misinformation. It simply isn’t true, since doing so requires more thought and effort than most deviants would be willing to put in.

Concluding Remarks

I have examined Deep fake AI by exploring previous methods of media falsification, Deep fake AI’s ramifications within a socio-political context, and how public awareness combats the false narratives promoted by Deep fake AI. The misrepresentation of information is a very pertinent issue, especially in a culture fixated on fake news, where personal recordings of controversial topics can instigate political turmoil. Deep fake AI introduces an entirely new tool of media falsification; however, these technological advancements are simply successors to the doctored and deceptive media that came before them. Broadcasters of disinformation, from Stalin’s regime in the Soviet era, as discussed by King, to those who doctored images after the introduction of Photoshop, were distorting reality long before the arrival of Deep fake AI. Moreover, widespread coverage of Deep fake AI in the media can help protect the public from the technology’s influence, as the public will be aware of its existence and better able to identify false narratives promoted by fake news. Overall, Deep fake AI threatens the credibility of the media we digest; however, historical precedents and public awareness can help us recognize the issue and mitigate these concerns.

Works Cited

Fletcher, John. “Deep fakes, Artificial Intelligence, and Some Kind of Dystopia: The New Faces of Online Post-Fact Performance.” Theatre Journal, vol. 70, no. 4, Dec. 2018, pp. 455–471, doi:10.1353/tj.2018.0097.

King, David. The Commissar Vanishes: The Falsification of Photographs and Art in Stalin’s Russia. 1997. Tate Publishing, 2014.

Wagner, Travis L., and Ashley Blewer. “‘The Word Real Is No Longer Real’: Deep fakes, Gender, and the Challenges of AI-Altered Video.” Open Information Science, vol. 3, no. 1, 10 July 2019, pp. 32–46, doi:10.1515/opis-2019-0003.
