
Wine (WINE) Dataset Classification (PyTorch Implementation)


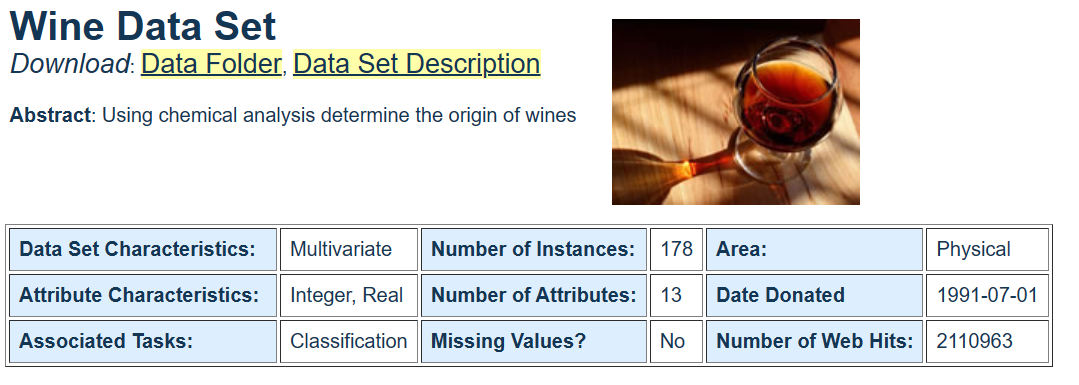

I. Dataset Overview

Data Set Information:

These data are the results of a chemical analysis of wines grown in the same region in Italy but derived from three different cultivars. The analysis determined the quantities of 13 constituents found in each of the three types of wines.

I think that the initial data set had around 30 variables, but for some reason I only have the 13 dimensional version. I had a list of what the 30 or so variables were, but a.) I lost it, and b.), I would not know which 13 variables are included in the set.

The attributes are (donated by Riccardo Leardi, riclea ‘@’ anchem.unige.it):

- Alcohol

- Malic acid

- Ash

- Alcalinity of ash

- Magnesium

- Total phenols

- Flavanoids

- Nonflavanoid phenols

- Proanthocyanins

- Color intensity

- Hue

- OD280/OD315 of diluted wines

- Proline

In a classification context, this is a well posed problem with “well behaved” class structures. A good data set for first testing of a new classifier, but not very challenging.

Attribute Information:

All attributes are continuous

No statistics available, but suggest to standardise variables for certain uses (e.g. for use with classifiers which are NOT scale invariant)

NOTE: 1st attribute is class identifier (1-3)
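To make the description above concrete, the following sketch loads the copy of this dataset that ships with scikit-learn and prints its basic shape. It assumes scikit-learn's `load_wine` loader (the one used in the later sections). Note that this loader returns the class label separately from the 13 features and encodes the three cultivars as 0 to 2 rather than 1 to 3.

```python
import numpy as np
from sklearn.datasets import load_wine

# Load the bundled copy of the dataset (assumption: scikit-learn is installed).
data = load_wine()
X, y = data.data, data.target

print(X.shape)                    # (number of samples, number of features)
print(list(data.feature_names))   # names of the 13 constituents

# Number of samples per cultivar (labels are 0, 1, 2 in this loader).
class_counts = np.bincount(y)
print(class_counts)
```

The classes are moderately imbalanced, which is why the naive Bayes section below estimates class priors from the training labels instead of assuming they are equal.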

II. Classification with Naive Bayes

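The code first loads the WINE dataset and preprocesses it, then splits it into training and test sets and converts them to PyTorch tensors. Next it computes the prior probability of each class along with the per-feature mean and standard deviation within each class, and defines a naive Bayes classifier. Finally it predicts on the test set and computes the accuracy. Note that this example uses the probability density function of PyTorch's normal distribution to compute the likelihood of each feature, because the features of the WINE dataset are continuous. If the features were discrete, we would instead compute the likelihood with a probability mass function such as that of the multinomial distribution.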
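As a small illustration of the likelihood computation described above, the sketch below checks `torch.distributions.Normal(...).log_prob` against the closed-form Gaussian log-density and then forms a single class score. The mean, standard deviation, sample, and prior are hypothetical toy values chosen for illustration, not numbers from the WINE dataset.

```python
import math
import torch

# Hypothetical toy values: one class described by two features.
mean = torch.tensor([13.0, 2.0])
std = torch.tensor([0.5, 1.0])
x = torch.tensor([[13.5, 1.0]])   # a single sample, shape (1, 2)

# Per-feature Gaussian log-density computed by PyTorch.
log_pdf = torch.distributions.Normal(mean, std).log_prob(x)

# The same quantity from the closed form:
# log N(x; mu, sigma) = -0.5 * ((x - mu) / sigma)^2 - log(sigma) - 0.5 * log(2 * pi)
manual = -0.5 * ((x - mean) / std) ** 2 - torch.log(std) - 0.5 * math.log(2 * math.pi)

# Naive Bayes treats features as conditionally independent given the class,
# so the per-feature log-likelihoods are summed and added to the log prior.
log_prior = math.log(0.4)         # hypothetical class prior
score = log_prior + log_pdf.sum(dim=1)
print(log_pdf, manual, score)
```

Working in log space turns the product of 13 small densities into a sum, which avoids numerical underflow; the predicted class is the one with the largest score.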

    import torch
    import numpy as np
    from sklearn.datasets import load_wine
    from sklearn.model_selection import train_test_split

    # Load the WINE dataset
    data = load_wine()

    # Preprocess: separate features and labels
    X = data.data
    y = data.target

    # Split into training and test sets
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

    # Convert to PyTorch tensors
    X_train = torch.from_numpy(X_train).float()
    y_train = torch.from_numpy(y_train).long()
    X_test = torch.from_numpy(X_test).float()
    y_test = torch.from_numpy(y_test).long()

    # Compute the prior probability of each class
    priors = []  # [0.31690140845070425, 0.4014084507042254, 0.28169014084507044]
    for c in range(3):
        priors.append((y_train == c).sum().item() / len(y_train))

    # Compute the per-feature mean and standard deviation of each class
    means = []
    stds = []
    for c in range(3):
        X_c = X_train[y_train == c]
        mean_c = X_c.mean(dim=0)
        std_c = X_c.std(dim=0)
        means.append(mean_c)
        stds.append(std_c)
    '''means:[tensor([1.3726e+01, 1.9773e+00, 2.4500e+00, 1.6744e+01, 1.0698e+02, 2.8398e+00,
            2.9776e+00, 2.9978e-01, 1.9244e+00, 5.4229e+00, 1.0640e+00, 3.1607e+00,
            1.0964e+03]),
     tensor([1.2285e+01, 1.9958e+00, 2.2412e+00, 2.0337e+01, 9.6175e+01, 2.2639e+00,
            2.0846e+00, 3.5842e-01, 1.6579e+00, 3.1089e+00, 1.0606e+00, 2.8016e+00,
            5.2558e+02]),
     tensor([1.3127e+01, 3.3575e+00, 2.4310e+00, 2.1312e+01, 9.9175e+01, 1.7055e+00,
            7.8725e-01, 4.5850e-01, 1.1810e+00, 7.4235e+00, 6.8675e-01, 1.6565e+00,
            6.2650e+02])]
    stds:[tensor([5.0639e-01, 6.2938e-01, 2.3979e-01, 2.5713e+00, 1.0699e+01, 3.6497e-01,
            3.9599e-01, 7.2221e-02, 4.3477e-01, 1.1963e+00, 1.1375e-01, 3.5512e-01,
            2.1585e+02]),
     tensor([5.6410e-01, 1.0495e+00, 3.2480e-01, 3.4544e+00, 1.7803e+01, 5.7815e-01,
            7.3121e-01, 1.2871e-01, 6.0875e-01, 8.6685e-01, 2.1260e-01, 4.9461e-01,
            1.6302e+02]),
     tensor([  0.5619,   1.1488,   0.1743,   2.3251,  10.6888,   0.3741,   0.2818,
              0.1261,   0.4243,   2.3462,   0.1217,   0.2385, 113.4099])]'''

    # Define the naive Bayes classifier
    def predict(X):
        scores = []
        for c in range(3):
            # Log prior plus the summed per-feature Gaussian log-likelihoods
            log_prior = np.log(priors[c])
            log_likelihood = torch.distributions.Normal(means[c], stds[c]).log_prob(X).sum(dim=1)
            score_c = log_prior + log_likelihood
            scores.append(score_c)
        scores = torch.stack(scores, dim=1)
        # Pick the class with the highest score for each sample
        _, predicted = torch.max(scores, 1)
        return predicted

    # Predict on the test set
    y_pred = predict(X_test)
    accuracy = (y_pred == y_test).sum().item() / len(y_test)
    print('Accuracy on test set: %.2f%%' % (accuracy * 100))
    '''scores:[tensor([-16.2063, -20.1661, -59.0722, -16.9989, -33.5326, -15.4014, -41.7632,
            -58.7885, -28.0045, -54.0653, -17.2750, -63.5537, -20.8636, -56.6549,
            -15.9323, -32.9764, -41.1679, -44.2954, -14.6590, -35.1883, -24.0837,
            -26.1084, -59.8588, -61.7415, -80.2487, -65.8907, -33.1282, -22.9195,
            -47.4456, -14.7912, -14.7452, -35.8007, -63.5418, -15.1896, -13.8471,
            -16.8022]),
     tensor([-27.7629, -33.8759, -24.7344, -37.6869, -19.3939, -41.7279, -19.0878,
            -38.3060, -19.7340, -28.5979, -22.3439, -49.1897, -25.1687, -22.6674,
            -44.3978, -17.5077, -17.9047, -18.3933, -39.4543, -17.3390, -72.5846,
            -25.4732, -24.6198, -47.5362, -56.7778, -54.3174, -16.6600, -18.8041,
            -17.9557, -34.2255, -28.7398, -17.4073, -27.6510, -47.9594, -35.9062,
            -36.2132]),
     tensor([ -85.3486,  -75.8018,  -18.5600,  -98.8156,  -68.1448,  -89.2539,
             -46.4184,  -12.5738,  -83.1620,  -21.1081,  -88.6656,  -23.2636,
             -64.7015,  -26.4976,  -93.1429,  -50.1631,  -43.4661,  -47.4876,
            -102.3652,  -59.2274, -154.9120, -101.8293,  -33.0033,  -19.1309,
             -16.7458,  -13.7131,  -51.7594,  -54.1711,  -31.7022,  -79.9978,
             -82.3085,  -53.0021,  -15.9740, -107.1665,  -84.9809, -119.2362])]
    predicted:tensor([0, 0, 2, 0, 1, 0, 1, 2, 1, 2, 0, 2, 0, 1, 0, 1, 1, 1, 0, 1, 0, 1, 1, 2,
            2, 2, 1, 1, 1, 0, 0, 1, 2, 0, 0, 0])'''


III. Classification with a Support Vector Machine

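The code first loads the WINE dataset and preprocesses it, then splits it into training and test sets and converts them to PyTorch tensors. It then trains a support vector machine classifier; here we choose a linear kernel and set the parameter C to 1.0. Finally it predicts on the test set and computes the accuracy.

PyTorch itself does not provide an SVM classifier, so we use the SVC class from scikit-learn to train one. Before training, we convert the PyTorch tensors back to NumPy arrays, because scikit-learn's SVC expects NumPy arrays as input. Likewise, at prediction time the test-set tensor must be converted to a NumPy array.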
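The conversions mentioned above can be sketched in isolation. The array below is a hypothetical toy input; the point is only to show which conversions copy data and which share memory, which matters if an array is modified after conversion.

```python
import numpy as np
import torch

arr = np.array([[1.0, 2.0], [3.0, 4.0]])   # float64 NumPy array (toy data)

# from_numpy shares memory with the source array; the .float() cast from
# float64 to float32 then produces a separate copy.
t = torch.from_numpy(arr).float()

# .numpy() on a CPU tensor returns a NumPy view of the same memory.
back = t.numpy()
print(t.dtype, back.dtype)

# Without a dtype cast, the tensor and the array share one buffer,
# so a write to the array is visible through the tensor.
shared = torch.from_numpy(arr)
arr[0, 0] = 10.0
print(shared[0, 0].item(), t[0, 0].item())
```

Because scikit-learn works on NumPy arrays throughout, the round trip through tensors is not required for the SVM itself; it mainly keeps the data handling consistent with the other sections.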

    import torch
    from sklearn.datasets import load_wine
    from sklearn.model_selection import train_test_split
    from sklearn.svm import SVC

    # Load the WINE dataset
    data = load_wine()

    # Preprocess: separate features and labels
    X = data.data
    y = data.target

    # Split into training and test sets
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

    # Convert to PyTorch tensors (y_test stays a NumPy array)
    X_train = torch.from_numpy(X_train).float()
    y_train = torch.from_numpy(y_train).long()
    X_test = torch.from_numpy(X_test).float()

    # Train the SVM classifier on NumPy views of the tensors
    clf = SVC(kernel='linear', C=1.0)
    clf.fit(X_train.numpy(), y_train.numpy())

    # Predict on the test set; y_pred and y_test are both NumPy arrays
    y_pred = clf.predict(X_test.numpy())
    accuracy = (y_pred == y_test).sum().item() / len(y_test)
    print('Accuracy on test set: %.2f%%' % (accuracy * 100))
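The dataset notes in Section I suggest standardising the variables for classifiers that are not scale invariant, and an SVM is one such classifier. The following is a sketch of one possible variation, not the procedure used in the listing above: it wraps scikit-learn's `StandardScaler` and the same `SVC` settings in a pipeline created with `make_pipeline`, so that the scaling statistics are learned from the training split only.

```python
from sklearn.datasets import load_wine
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Same data and split settings as the listing above.
X, y = load_wine(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# The pipeline fits the scaler on the training data, then trains the SVM
# on the scaled features; at prediction time the same scaling is reused.
clf = make_pipeline(StandardScaler(), SVC(kernel='linear', C=1.0))
clf.fit(X_train, y_train)

accuracy = clf.score(X_test, y_test)
print('Accuracy on test set: %.2f%%' % (accuracy * 100))
```

Whether scaling changes the result on this particular split is something to check by running both versions; features such as Proline have a much larger numeric range than the others, which is the usual motivation for scaling here.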


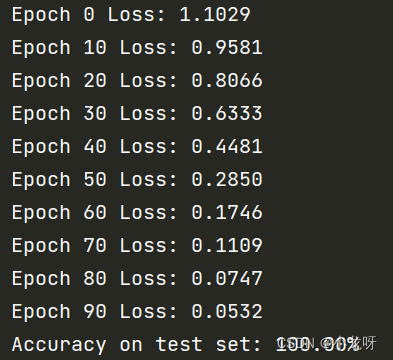

IV. Classification with a Neural Network

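The code first loads the WINE dataset and preprocesses it (here the features are standardized), then splits it into training and test sets and converts them to PyTorch tensors. It then defines a neural network with three fully connected layers, trains it with the cross-entropy loss and the Adam optimizer, and finally predicts on the test set and computes the accuracy.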
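One detail of this setup is worth illustrating: `nn.CrossEntropyLoss` expects raw, unnormalized scores (logits), since it applies log-softmax internally. That is why a network trained with it can return the output of its last linear layer directly, with no softmax. The sketch below checks this equivalence on hypothetical random logits and labels.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 3)             # hypothetical raw outputs: 4 samples, 3 classes
target = torch.tensor([0, 2, 1, 1])    # hypothetical class labels

# Cross-entropy computed directly on the logits.
ce = nn.CrossEntropyLoss()(logits, target)

# The same value from an explicit log-softmax followed by negative log-likelihood.
manual = F.nll_loss(F.log_softmax(logits, dim=1), target)
print(ce.item(), manual.item())

# Softmax is monotonic, so the predicted class is the same with or without it.
pred_logits = logits.argmax(dim=1)
pred_probs = F.softmax(logits, dim=1).argmax(dim=1)
```

For the same reason, taking `torch.max` over the raw outputs at test time gives the same predictions as taking it over softmax probabilities.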

    import torch
    import torch.nn as nn
    import torch.optim as optim
    from sklearn.datasets import load_wine
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import StandardScaler

    # Load the WINE dataset
    data = load_wine()

    # Preprocess the data
    X = data.data    # features: 178 samples, each a 13-dimensional vector
    y = data.target  # labels: three classes, encoded as 0, 1, 2
    scaler = StandardScaler()
    X = scaler.fit_transform(X)  # standardize the features

    # Split into training and test sets: 142 training samples, 36 test samples
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

    # Convert to PyTorch tensors
    X_train = torch.from_numpy(X_train).float()
    y_train = torch.from_numpy(y_train).long()
    X_test = torch.from_numpy(X_test).float()
    y_test = torch.from_numpy(y_test).long()

    # Define the neural network: three fully connected layers
    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.fc1 = nn.Linear(13, 64)
            self.fc2 = nn.Linear(64, 32)
            self.fc3 = nn.Linear(32, 3)

        def forward(self, x):
            x = torch.relu(self.fc1(x))
            x = torch.relu(self.fc2(x))
            x = self.fc3(x)  # raw logits; CrossEntropyLoss applies log-softmax itself
            return x

    net = Net()

    # Define the loss function and the optimizer
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(net.parameters(), lr=0.001)

    # Train the network (full-batch gradient steps)
    for epoch in range(100):
        optimizer.zero_grad()
        output = net(X_train)
        loss = criterion(output, y_train)
        loss.backward()
        optimizer.step()
        if epoch % 10 == 0:
            print('Epoch %d Loss: %.4f' % (epoch, loss.item()))

    # Predict on the test set
    with torch.no_grad():
        output = net(X_test)
        _, predicted = torch.max(output, 1)
        total = y_test.size(0)
        correct = (predicted == y_test).sum().item()
        accuracy = correct / total
        print('Accuracy on test set: %.2f%%' % (accuracy * 100))
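The listing above fits `StandardScaler` on all 178 samples before splitting, so statistics of the test samples influence the scaling. A common alternative, shown here as a sketch of a variation and not as the original procedure, is to split first and fit the scaler on the training split only. The names `X_train_std` and `X_test_std` are introduced for illustration.

```python
import numpy as np
from sklearn.datasets import load_wine
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

X, y = load_wine(return_X_y=True)

# Split first, using the same settings as the listing above.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Learn the mean and standard deviation from the training split only,
# then apply that same transformation to both splits.
scaler = StandardScaler().fit(X_train)
X_train_std = scaler.transform(X_train)
X_test_std = scaler.transform(X_test)

# Training features now have zero mean and unit variance per column;
# the test features are close to that but not exactly, which is expected.
print(np.round(X_train_std.mean(axis=0), 3))
print(np.round(X_test_std.mean(axis=0), 3))
```

The resulting arrays can be converted with `torch.from_numpy(...).float()` and passed to the network exactly as in the listing.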
