- 1我的参会记要:OceanBase第二届开发者大会

- 2用栈实现二叉树 C&java_根据栈建立树

- 3十、CentOS7安装HBase-2.1.0伪分布式_hbase2.1.0 伪分布式安装

- 4分布式一致性算法简介_持续一致性如何计算两个副本中的向量时钟

- 52021-03-11 php 获取文件/上传文 是否是图片格式(与后缀无关,取文件实际内容)_--enable-exif

- 6算法复杂度分析(3600字)_论文中为何要加复杂性分析

- 7rejected –non-fast-forward解决方法

- 8微信小程序与web-view网页进行通信的尝试

- 99、Flink 用户自定义 Functions 及 累加器详解

- 10使用Spring Boot实现大文件断点续传及文件校验_springboot整合文件断点续传

java爬虫---爬取某直聘招聘信息(超详细版)_招聘信息爬取

赞

踩

如有侵权,请联系删除。(求BOSS直聘放过)

前言:

我们爬取BOSS直聘的网站的方式是比较固定的,基本上爬取网站的方式都差不多。

静态网页的爬取比较简单,如下:

我们只需要获取网页的源代码,按照一些解析器,就可以完成对页面元素的获取。

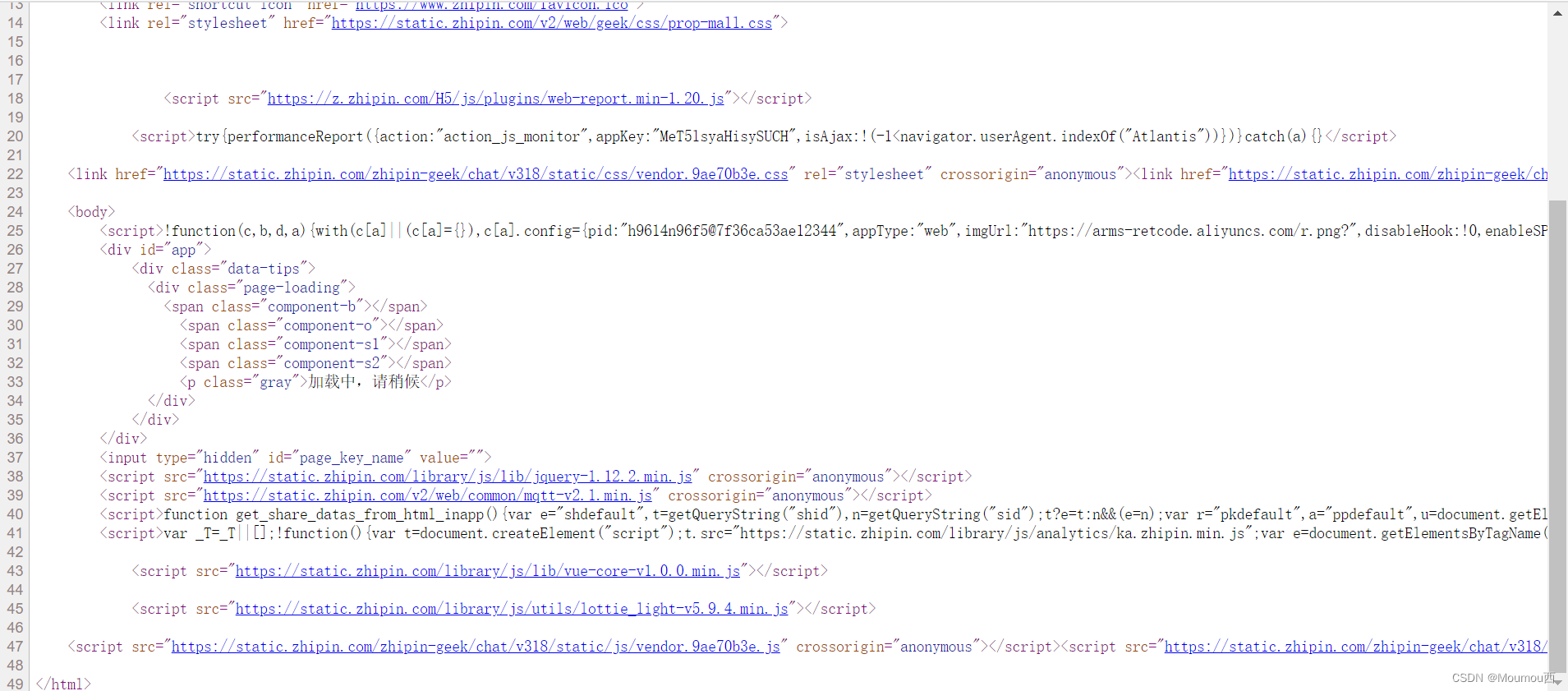

动态网页就比较麻烦了,如下:

就是一堆JS的链接。。。。。

不过既然我们正常浏览器可以访问得到我们想要的结果,那我们就一定有机会拿到我们想要的数据;

这次我采取的方案是,比较水、比较慢的一种方案;WebMagic+selenium爬取页面元素,POI实现Excel存储,最终结果没有入库,直接导出了;

前期准备:

- 下载驱动: 首先我们需要下载谷歌的驱动,可以去下面网址下载ChromeDriver - WebDriver for Chrome - Downloads (chromium.org)

- 下载Excel 本次使用的是xlsx后缀的新版本Excel

- Java的运行环境

- 使用Maven搭建项目,导入依赖

- <!-- https://mvnrepository.com/artifact/us.codecraft/webmagic-core -->

- <dependency>

- <groupId>us.codecraft</groupId>

- <artifactId>webmagic-core</artifactId>

- <version>0.8.0</version>

- </dependency>

- <!-- https://mvnrepository.com/artifact/us.codecraft/webmagic-extension -->

- <dependency>

- <groupId>us.codecraft</groupId>

- <artifactId>webmagic-extension</artifactId>

- <version>0.8.0</version>

- </dependency>

- <!--selenium依赖-->

- <dependency>

- <groupId>org.seleniumhq.selenium</groupId>

- <artifactId>selenium-java</artifactId>

- <version>3.13.0</version>

- </dependency>

- <!--配置POI-->

- <dependency>

- <groupId>org.apache.poi</groupId>

- <artifactId>poi</artifactId>

- <version>3.14</version>

- </dependency>

- <dependency>

- <groupId>org.apache.poi</groupId>

- <artifactId>poi-ooxml</artifactId>

- <version>3.14</version>

- </dependency>

- <dependency>

- <groupId>org.projectlombok</groupId>

- <artifactId>lombok</artifactId>

- <version>1.18.24</version>

- </dependency>

- 检索结果的pojo

- @Data

- public class WorkInf {

- //招聘链接

- private String url;

- //工作名

- private String workName;

- //薪水

- private String workSalary;

- //工作地址

- private String workAddress;

- //工作内容

- private String workContent;

- //要求工作年限

- private String workYear;

- //学历

- private String graduate;

- //招聘人什么时候活跃

- private String HRTime;

- //公司名

- private String companyName;

- }

代码:

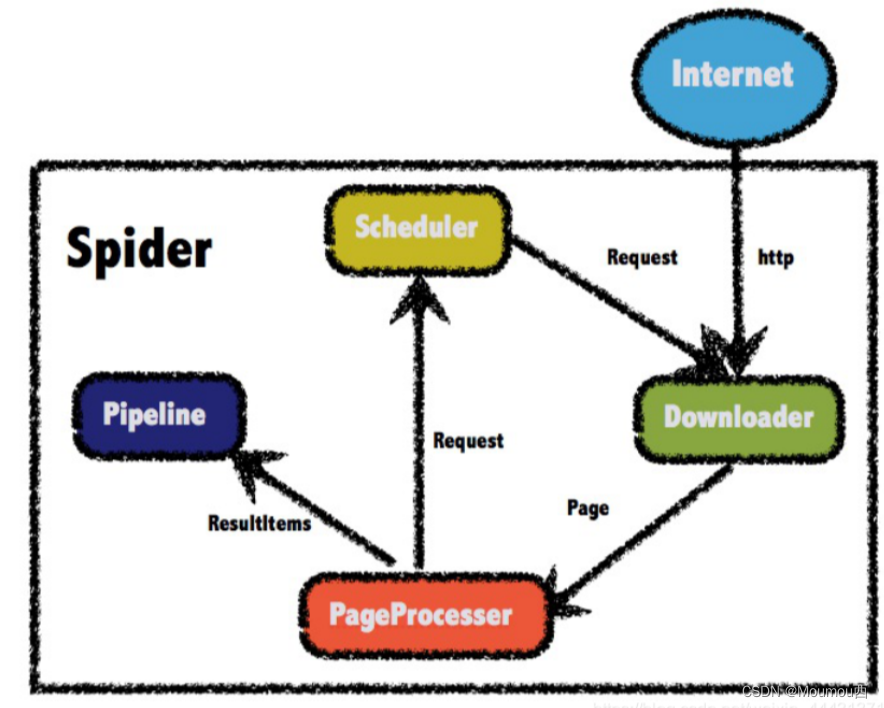

代码对着WebMagic来一步步实现;想学Java爬虫的兄弟一定要先记住这幅图,可以找教程学学;

浏览器模拟(Download部分):

上面讲了,动态页面没法直接通过获取源代码来实现爬取,所以我们要实现一个伪造的浏览器;selenium就帮我们很好的实现了这个技术(当然还有别的更好的解决方案,我感觉这个比较简单就用了这个)

首先我们需要下载谷歌的驱动,可以去下面网址下载ChromeDriver - WebDriver for Chrome - Downloads (chromium.org)

- public class ChromeDownloader implements Downloader {

-

- //声明驱动

- private RemoteWebDriver driver;

-

- public ChromeDownloader() {

- //第一个参数是使用哪种浏览器驱动

- //第二个参数是浏览器驱动的地址

- System.setProperty("webdriver.chrome.driver","谷歌驱动的下载地址,chromedriver.exe");

-

- //创建浏览器参数对象

- ChromeOptions chromeOptions = new ChromeOptions();

-

- // chromeOptions.addArguments("--headless");

- // 设置浏览器窗口打开大小

- chromeOptions.addArguments("--window-size=1280,700");

-

- //创建驱动

- this.driver = new ChromeDriver(chromeOptions);

- }

-

- @Override

- public Page download(Request request, Task task) {

- try {

- driver.get(request.getUrl());

- Thread.sleep(1500);

-

- //无论是搜索页还是详情页,都滚动到页面底部,所有该加载的资源都加载

- driver.executeScript("window.scrollTo(0, document.body.scrollHeight - 1000)");

- Thread.sleep(1500l);

-

- //获取页面对象

- Page page = createPage(request.getUrl(), driver.getPageSource());

-

- //关闭浏览器

- //driver.close();

-

- return page;

-

- } catch (InterruptedException e) {

- e.printStackTrace();

- }

-

-

- return null;

- }

-

- @Override

- public void setThread(int i) {

-

- }

-

- //构建page返回对象

- private Page createPage(String url, String content) {

- Page page = new Page();

- page.setRawText(content);

- page.setUrl(new PlainText(url));

- page.setRequest(new Request(url));

- page.setDownloadSuccess(true);

-

- /* System.out.println("==============page内容===============");

- System.out.println("1."+content);

- System.out.println("2."+new PlainText(url));

- System.out.println("3."+new Request(url));

- System.out.println("=====================================");*/

- return page;

- }

- }

这里的代码直接抄就好了;

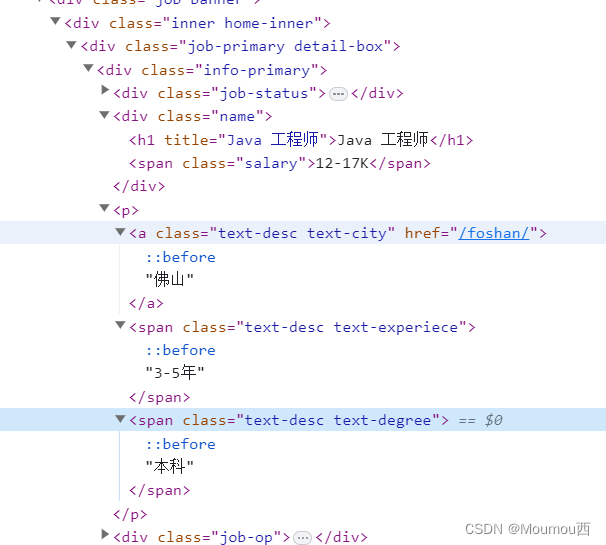

对页面进行解析(PageProcesser部分)

这里的逻辑分成两个部分,首先是BOSS的导航页,在这里我们可以拿到每一个工作的详情页的地址,然后把详情页地址添加到我们的Scheduler中,我们的爬虫程序有爬取的目标;

然后就是详情页,我们需要对其中的信息进行检索解析。

- public class BoosProcessor implements PageProcessor {

- public static AtomicInteger pageNum = new AtomicInteger(2);

- @Override

- public void process(Page page) {

- //去抓取职位列表

- List<Selectable> nodes = page.getHtml().css("li.job-card-wrapper").nodes();

-

- if(nodes != null && nodes.size() > 0 || page.getUrl().get().contains("geek/job")){

- //有值就是工作列表页

- //遍历所有的列表项,拿超链接

- for (Selectable node : nodes) {

- String s = node.css("a.job-card-left").links().get();

- page.addTargetRequest(s);

-

- }

- page.addTargetRequest("https://www.zhipin.com/web/geek/job?query=java实习&city=101280100&page="+pageNum.getAndIncrement());

-

- }else {

- //工作详情页 处理我们想要的信息,我这里都用了CSS选择器

- Selectable biggest = page.getHtml().css("div#wrap");

- WorkInf workInf = new WorkInf();

- Selectable primary = biggest.css("div.info-primary");

- String workName = primary.css("div.name h1").get();

- String salary = primary.css("div.name span").get();

- String year = primary.css("p span.text-experiece").get();

- String graduate = primary.css("p span.text-desc.text-degree").get();

- Selectable jobDetail = biggest.css("div.job-detail");

- String workContent = jobDetail.css("div.job-detail-section div.job-sec-text").get();

- String HrTime = jobDetail.css("h2.name span").get();

- Selectable jobSider = biggest.css("div.job-sider");

- String companyName = jobSider.css("div.sider-company a[ka=job-detail-company_custompage]").get();

- String workAddress = jobDetail.css("div.location-address").get();

-

- workInf.setWorkName(Jsoup.parse(workName).text());

- workInf.setWorkSalary(Jsoup.parse(salary).text());

- workInf.setWorkYear(Jsoup.parse(year).text());

- workInf.setGraduate(Jsoup.parse(graduate).text());

- workInf.setWorkContent(Jsoup.parse(workContent).text());

- workInf.setHRTime(Jsoup.parse(HrTime).text());

- workInf.setCompanyName(Jsoup.parse(companyName).text());

- workInf.setWorkAddress(Jsoup.parse(workAddress).text());

- workInf.setUrl(page.getUrl().get());

-

- page.putField("workInf",workInf);

- }

-

- }

-

- //可以对爬虫进行一些配置

- private Site site = Site.me()

- // 单位是秒

- .setCharset("UTF-8")//编码

- .setSleepTime(1)//抓取间隔时间,可以解决一些反爬限制

- .setTimeOut(1000 * 10)//超时时间

- .setRetrySleepTime(3000)//重试时间

- .setRetryTimes(3);//重试次数

-

-

- @Override

- public Site getSite() {

- return site;

- }

- }

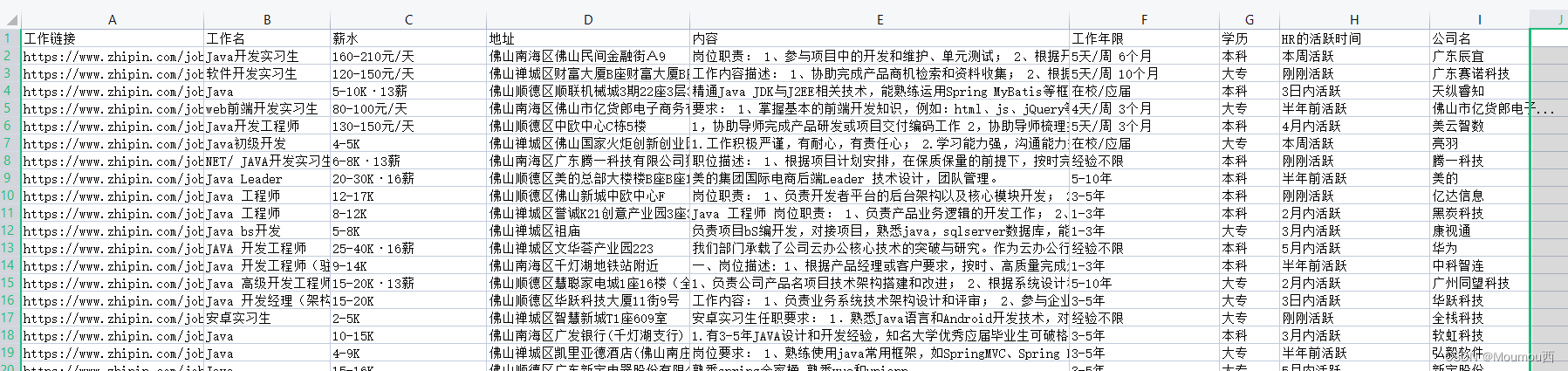

结果存储(Pipeline部分)

我没有让数据入库,而是直接导出到了Excel文件中。

- public class BoosPipeline implements Pipeline {

- private final static String excel2003L =".xls"; //2003- 版本的excel

- private final static String excel2007U =".xlsx"; //2007+ 版本的excel

- public static Integer integer= new Integer(0);

- public static List<WorkInf> workInfList = new ArrayList<>();

-

- @Override

- public void process(ResultItems result, Task task) {

- //设计存储过程

- WorkInf workInf = result.get("workInf");

- if(workInf==null){

- return;

- }

- synchronized (BoosPipeline.class){

- workInfList.add(workInf);

- /*try {

- Thread.sleep(500);

- } catch (InterruptedException e) {

- e.printStackTrace();

- }*/

- //多线程的话在这里获取一下锁

- int howManyWorkStart = 10;

- if(workInfList.size() >= howManyWorkStart){

- //追加存到Excel中

- try {

- String path = "Z:\\climbResult\\BOOSWork_java.xlsx";

- FileInputStream fileInputStream = new FileInputStream(path);

- XSSFWorkbook workbook=new XSSFWorkbook(fileInputStream);//得到文档对象

- Sheet sheet = workbook.getSheetAt(0);

- int lastRowNum = sheet.getLastRowNum();

- for(int i = 1 ; i<=howManyWorkStart ;i++){

- WorkInf inf = workInfList.get(i-1);

- Row row = sheet.createRow(lastRowNum+i);

- for(int j = 0 ; j < 9 ; j++){

- Cell cell = row.createCell(j);

- switch (j){

- case 0 : cell.setCellValue(inf.getUrl()); break;

- case 1 : cell.setCellValue(inf.getWorkName()); break;

- case 2 : cell.setCellValue(inf.getWorkSalary()); break;

- case 3 : cell.setCellValue(inf.getWorkAddress()); break;

- case 4 : cell.setCellValue(inf.getWorkContent()); break;

- case 5 : cell.setCellValue(inf.getWorkYear()); break;

- case 6 : cell.setCellValue(inf.getGraduate()); break;

- case 7 : cell.setCellValue(inf.getHRTime()); break;

- case 8 : cell.setCellValue(inf.getCompanyName()); break;

- default: break;

- }

- }

- }

- FileOutputStream fileOutputStream = new FileOutputStream(path);

- fileOutputStream.flush();

- workbook.write(fileOutputStream);

- fileOutputStream.close();

- //清空List

- workInfList.clear();

- System.out.println("===============workInfSize=======================");

- System.out.println("当前workInfList的长度为:"+workInfList.size()+"完成一轮爬取");

- System.out.println("==================================================");

- integer++;

- } catch (Exception e) {

- e.printStackTrace();

- }

- }

- if(integer>500){

- System.exit(0);

- }

- }

- }

-

- /*根据文件的后缀名去确定workbook的类型*/

- public static Workbook getWorkbook(InputStream inStr, String fileName) throws Exception{

- Workbook wb = null;

- String fileType = fileName.substring(fileName.lastIndexOf("."));

- if(excel2003L.equals(fileType)){

- wb = new HSSFWorkbook(inStr); //2003-

- }else if(excel2007U.equals(fileType)){

- wb = new XSSFWorkbook(inStr); //2007+

- }else{

- throw new Exception("解析的文件格式有误!");

- }

- return wb;

- }

- }

保存结果如下图所示:

启动类(程序入口):

将我们上面写的类,放入到Spider中,Spider这个类负责管理整个爬虫程序;

- public class StartClimb {

-

- public static void main(String[] args) {

- ChromeDownloader downloader = new ChromeDownloader();

- BoosPipeline boosPipeline = new BoosPipeline();

- //声明搜索页的初始地址

- String url = "https://www.zhipin.com/web/geek/job?query=java实习&city=101280100";

- Spider.create(new BoosProcessor())

- .addUrl(url)

- //设置下载器

- .setDownloader(downloader)

- //设置输出

- .addPipeline(boosPipeline)

- .run();

-

- }

- }

开始运行,大功告成,因为要模拟浏览器访问,所以速度是比较慢的,一分钟可能也就爬取4到5条想要的结果,不过有螃蟹吃,是不是已经很香了。