
Summary of Common Kafka Commands


1. Cluster management

1.1 Starting a broker

bin/kafka-server-start.sh config/server.properties &

## Or, to run it as a daemon:

bin/kafka-server-start.sh -daemon config/server.properties


1.2 Stopping a broker

bin/kafka-server-stop.sh


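2. Topic operations

2.1 Creating a topic

A topic can be created in several ways: with the kafka-topics.sh command-line tool, through the API, by writing a node directly under the /brokers/topics path in ZooKeeper, or implicitly by sending a message to a nonexistent topic when auto.create.topics.enable is true. The first two are the usual choices.

Creating a topic takes three parameters:

- --topic: the topic name

- --partitions: the number of partitions

- --replication-factor: the replication factor

The replication factor cannot exceed the number of brokers in the cluster.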

bin/kafka-topics.sh --zookeeper cluster101:2181 --create --topic demo --partitions 3 --replication-factor 3


2.2 Deleting a topic

Before deleting a topic, make sure delete.topic.enable is set to true on the brokers; otherwise the topic will not be deleted.

bin/kafka-topics.sh --zookeeper cluster:2181 --delete --topic del_topic

Topic del_topic is marked for deletion.

Note: This will have no impact if delete.topic.enable is not set to true.


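This command only marks the topic for deletion. The controller performs the actual deletion asynchronously in the background, and the user gets no progress feedback; the only way to confirm it is to list the topics and check whether the topic is still there.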
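Since the deletion runs in the background, one way to confirm it is to filter the topic list. The sketch below is a hypothetical helper (topic_listed is not part of Kafka). It reads the output of kafka-topics.sh --list on standard input and succeeds if the first field of any line equals the topic name, which is assumed to also cover lines of the form "NAME - marked for deletion" that some versions print.

```shell
# Hypothetical helper, not part of Kafka.
# Succeeds (exit status 0) if the topic name in $1 is the first field of any
# line on standard input; fails (exit status 1) otherwise.
topic_listed() {
  awk -v topic="$1" '$1 == topic { found = 1 } END { exit !found }'
}

# Example against a live cluster (left commented out; host name as in the text):
# bin/kafka-topics.sh --zookeeper cluster:2181 --list | topic_listed del_topic \
#   && echo "del_topic is still listed" || echo "del_topic is gone"
```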

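2.3 Deleting a topic manually

To delete a topic manually:

- Shut down all brokers

- Delete the ZooKeeper path /brokers/topics/TOPICNAME, using the command: rmr /brokers/topics/TOPICNAME

- On every broker, delete the partition directories that actually hold the data. They are typically named TOPICNAME-NUM and live under the location set by log.dirs in server.properties, which defaults to /tmp/kafka-logs

- Restart all brokers

2.4 Listing all topics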

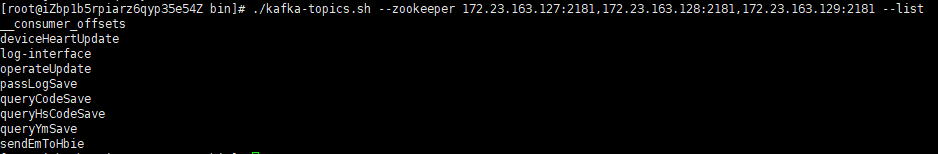

./kafka-topics.sh --zookeeper 172.23.163.127:2181,172.23.163.128:2181,172.23.163.129:2181 --list


2.5 Describing a topic

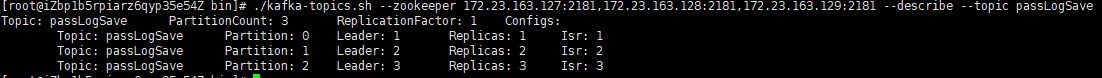

./kafka-topics.sh --zookeeper 172.23.163.127:2181,172.23.163.128:2181,172.23.163.129:2181 --describe --topic passLogSave


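3. Consuming and producing

kafka-console-consumer.sh and kafka-console-producer.sh are probably the two Kafka scripts that users run most often. They make it easy to test a consumer and a producer against a cluster.

3.1 Console producer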

./kafka-console-producer.sh --broker-list cluster101:9092 --topic test


3.2 Console consumer

./kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic test --from-beginning


--consumer-property passes a consumer setting in key=value form, for example group.id=test_group. To pass several settings, repeat the option once for each one.

./kafka-console-consumer.sh --bootstrap-server cluster101:9092 --topic test --consumer-property group.id=test_group


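4. Partition management

4.1 Adding partitions

Adding partitions increases a topic's capacity and reduces the load on each individual partition.

./kafka-topics.sh --zookeeper cluster101:2181 --alter --topic incr_part --partitions 10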


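4.2 Preferred leader election

The preferred leader of a partition is the leader chosen when the topic was created; at creation time Kafka balances leaders across the brokers.

Brokers in a Kafka cluster will inevitably crash or go down from time to time. When that happens, the leader replicas on that broker become unavailable, and Kafka moves leadership of those partitions to other brokers. Even after the broker restarts, its replicas rejoin only as followers and do not serve client requests. Over time this leaves leadership unbalanced, concentrated on a small subset of brokers.

The kafka-preferred-replica-election.sh tool triggers a preferred replica election manually.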

./kafka-preferred-replica-election.sh --zookeeper cluster101:2181
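To judge whether leadership has drifted before or after an election, the Leader: field printed by kafka-topics.sh --describe can be tallied per broker. The sketch below is a hypothetical helper (leaders_per_broker is not part of Kafka) and assumes each partition line contains a "Leader:" token followed by the broker id.

```shell
# Hypothetical helper, not part of Kafka.
# Reads "kafka-topics.sh --describe" output on standard input and prints one
# line per broker: "<broker id> <number of partitions it leads>".
leaders_per_broker() {
  awk '
    # On every line, look for the token "Leader:" and count the field after it.
    { for (i = 1; i < NF; i++) if ($i == "Leader:") count[$(i + 1)]++ }
    END { for (broker in count) print broker, count[broker] }
  ' | sort -n
}

# Example against a live cluster (left commented out; host name as in the text):
# ./kafka-topics.sh --zookeeper cluster101:2181 --describe | leaders_per_broker
```

A heavily skewed count suggests that a preferred replica election is worth running.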


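4.3 Partition reassignment

Sometimes you need to change how partitions are distributed:

- A topic's partitions are spread unevenly across the cluster, causing unbalanced load

- A broker went offline and left partitions out of sync

- A newly added broker needs to take on load from the cluster

The kafka-reassign-partitions.sh tool adjusts the partition distribution in three steps:

- Generate a migration plan from a broker list and a topic list

- Execute the migration plan

- Verify the progress and completion of the reassignment (optional)

Generating a migration plan requires a JSON file that lists the topics (topics.json in the command below):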

{
  "topics": [
    { "topic": "reassign" }
  ],
  "version": 1
}


Generate the migration plan:

./kafka-reassign-partitions.sh --zookeeper cluster101:2181 --topics-to-move-json-file topics.json --broker-list 0,1,2 --generate

Current partition replica assignment

{"version":1,"partitions":[{"topic":"reassign","partition":1,"replicas":[1,0]},{"topic":"reassign","partition":3,"replicas":[1,0]},{"topic":"reassign","partition":6,"replicas":[0,1]},{"topic":"reassign","partition":4,"replicas":[0,1]},{"topic":"reassign","partition":0,"replicas":[0,1]},{"topic":"reassign","partition":7,"replicas":[1,0]},{"topic":"reassign","partition":2,"replicas":[0,1]},{"topic":"reassign","partition":5,"replicas":[1,0]}]}

Proposed partition reassignment configuration

{"version":1,"partitions":[{"topic":"reassign","partition":1,"replicas":[0,2]},{"topic":"reassign","partition":4,"replicas":[0,1]},{"topic":"reassign","partition":6,"replicas":[2,1]},{"topic":"reassign","partition":3,"replicas":[2,0]},{"topic":"reassign","partition":0,"replicas":[2,1]},{"topic":"reassign","partition":7,"replicas":[0,2]},{"topic":"reassign","partition":2,"replicas":[1,0]},{"topic":"reassign","partition":5,"replicas":[1,2]}]}


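The terminal prints two JSON objects: the current partition assignment and the proposed one. Save the first so it can be used for a rollback, and save the second as the reassignment plan; here it is saved as reassign.json.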
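Copying the two objects by hand is error-prone, so the split can be scripted. The sketch below is a hypothetical helper (split_reassignment_plan is not part of Kafka, and rollback.json is a file name chosen for illustration). It assumes the --generate output has the shape shown above: each heading line is followed by one line of JSON.

```shell
# Hypothetical helper, not part of Kafka.
# Reads "kafka-reassign-partitions.sh --generate" output on standard input.
#   $1 = file that receives the current assignment (kept for rollback)
#   $2 = file that receives the proposed assignment (the plan to execute)
split_reassignment_plan() {
  awk -v current="$1" -v proposed="$2" '
    /^Current partition replica assignment/          { out = current;  next }
    /^Proposed partition reassignment configuration/ { out = proposed; next }
    # The first line starting with "{" after a heading is that JSON object.
    out != "" && substr($0, 1, 1) == "{" { print $0 > out; out = "" }
  '
}

# Example against a live cluster (left commented out; names as in the text):
# ./kafka-reassign-partitions.sh --zookeeper cluster101:2181 \
#   --topics-to-move-json-file topics.json --broker-list 0,1,2 --generate \
#   | split_reassignment_plan rollback.json reassign.json
```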

Execute the plan:

./kafka-reassign-partitions.sh --zookeeper cluster101:2181 --execute --reassignment-json-file reassign.json

Current partition replica assignment

{"version":1,"partitions":[{"topic":"reassign","partition":1,"replicas":[1,0]},{"topic":"reassign","partition":3,"replicas":[1,0]},{"topic":"reassign","partition":6,"replicas":[0,1]},{"topic":"reassign","partition":4,"replicas":[0,1]},{"topic":"reassign","partition":0,"replicas":[0,1]},{"topic":"reassign","partition":7,"replicas":[1,0]},{"topic":"reassign","partition":2,"replicas":[0,1]},{"topic":"reassign","partition":5,"replicas":[1,0]}]}

Save this to use as the --reassignment-json-file option during rollback

Successfully started reassignment of partitions.


Verify the result:

./kafka-reassign-partitions.sh --zookeeper cluster101:2181 --verify --reassignment-json-file reassign.json

Status of partition reassignment:

Reassignment of partition [reassign,0] completed successfully

Reassignment of partition [reassign,1] completed successfully

Reassignment of partition [reassign,3] completed successfully

Reassignment of partition [reassign,7] completed successfully

Reassignment of partition [reassign,5] completed successfully

Reassignment of partition [reassign,6] completed successfully

Reassignment of partition [reassign,2] completed successfully

Reassignment of partition [reassign,4] completed successfully
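On a large topic the verification output is long, so it can help to reduce it to a single number. The sketch below is a hypothetical helper (pending_reassignments is not part of Kafka). It assumes that every partition is reported on a line starting with "Reassignment of partition" and that finished partitions end with "completed successfully", as in the output above; any other ending is counted as pending.

```shell
# Hypothetical helper, not part of Kafka.
# Reads "kafka-reassign-partitions.sh --verify" output on standard input and
# prints how many partition lines do not end with "completed successfully".
pending_reassignments() {
  awk '
    /^Reassignment of partition/ && !/completed successfully$/ { pending++ }
    END { print pending + 0 }
  '
}

# Example against a live cluster (left commented out; names as in the text):
# ./kafka-reassign-partitions.sh --zookeeper cluster101:2181 --verify \
#   --reassignment-json-file reassign.json | pending_reassignments
```

A result of 0 means every listed partition has finished moving.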


5. Consumer groups

5.1 Listing consumer groups

./kafka-consumer-groups.sh --bootstrap-server 172.23.163.127:9092,172.23.163.128:9092,172.23.163.129:9092 --list


To get the details of a specific consumer group, use --describe instead of --list and name the group with --group.

./kafka-consumer-groups.sh --bootstrap-server cluster101:9092 --describe --group test


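5.2 Deleting a consumer group

Consumer groups that use the new consumer API do not need to be deleted by hand: Kafka removes the group automatically after its last member has left and its committed offsets have expired. The command below applies to old consumer groups whose metadata is stored in ZooKeeper.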

./kafka-consumer-groups.sh --zookeeper cluster101:2181 --delete --group t_group


6. Test scripts

6.1 Producer throughput test

./kafka-producer-perf-test.sh --topic demo --num-records 100000 --record-size 200 --throughput 20000 --producer-props bootstrap.servers=192.168.180.64:9092 acks=-1

99962 records sent, 19992.4 records/sec (3.81 MB/sec), 2.8 ms avg latency, 387.0 ms max latency.

100000 records sent, 19968.051118 records/sec (3.81 MB/sec), 2.78 ms avg latency, 387.00 ms max latency, 1 ms 50th, 25 ms 95th, 37 ms 99th, 39 ms 99.9th.


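- --topic: the topic name

- --num-records: the total number of messages to send, 100000 in this example

- --record-size: the size of each record in bytes, 200 in this example

- --throughput: the target send rate in records per second (the tool throttles to roughly this value), 20000 in this example

- --producer-props: producer configuration, here bootstrap.servers=192.168.180.64:9092 and acks=-1, where acks is the message acknowledgement setting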
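As a sanity check on the sample output, the configured rate and record size can be converted to an expected data rate. The sketch below uses a hypothetical helper (expected_mb_per_sec) and assumes the tool counts 1 MB as 1024 × 1024 bytes, which is consistent with the 3.81 MB/sec it printed above.

```shell
# Hypothetical helper: expected data rate in MB/sec for a given record rate
# ($1, records per second) and record size ($2, bytes),
# assuming 1 MB = 1024 * 1024 bytes.
expected_mb_per_sec() {
  awk -v rate="$1" -v size="$2" \
    'BEGIN { printf "%.2f\n", rate * size / (1024 * 1024) }'
}

expected_mb_per_sec 20000 200   # prints 3.81
```

If the measured MB/sec falls well below this figure, the producer did not reach the target rate.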

6.2 Consumer throughput test

./kafka-consumer-perf-test.sh --topic demo --broker-list 192.168.180.64:9092 --messages 50000

start.time, end.time, data.consumed.in.MB, MB.sec, data.consumed.in.nMsg, nMsg.sec, rebalance.time.ms, fetch.time.ms, fetch.MB.sec, fetch.nMsg.sec

2022-09-13 11:06:40:149, 2022-09-13 11:06:40:739, 9.6285, 16.3195, 50481, 85561.0169, 1663038400528, -1663038399938, -0.0000, -0.0000


7. Other script tools

7.1 Checking a topic's current message count

./kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list 192.168.180.64:9092 --topic demo --time -1

demo:0:100000


--time -1 returns the latest (maximum) offset of each partition.

./kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list 192.168.180.64:9092 --topic demo --time -2

demo:0:0


--time -2 returns the earliest offset of each partition.
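The number of messages currently held in a topic is approximately the latest offset minus the earliest offset, summed over all partitions. The sketch below is a hypothetical helper (retained_messages is not part of Kafka). It assumes both outputs were saved to files and use the topic:partition:offset format shown above; the result is only an estimate for compacted or transactional topics.

```shell
# Hypothetical helper, not part of Kafka.
#   $1 = file holding the "--time -2" (earliest offsets) output
#   $2 = file holding the "--time -1" (latest offsets) output
# Prints the sum over all partitions of (latest offset - earliest offset).
retained_messages() {
  awk -F: '
    NF != 3 { next }                                  # skip blank or odd lines
    FILENAME == ARGV[1] { earliest[$1 ":" $2] = $3; next }
    { total += $3 - earliest[$1 ":" $2] }
    END { printf "%.0f\n", total }
  ' "$1" "$2"
}

# Example against a live cluster (left commented out; values as in the text):
# ./kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list 192.168.180.64:9092 --topic demo --time -2 > earliest.txt
# ./kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list 192.168.180.64:9092 --topic demo --time -1 > latest.txt
# retained_messages earliest.txt latest.txt
```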

8. Troubleshooting

8.1 Checking for a message backlog

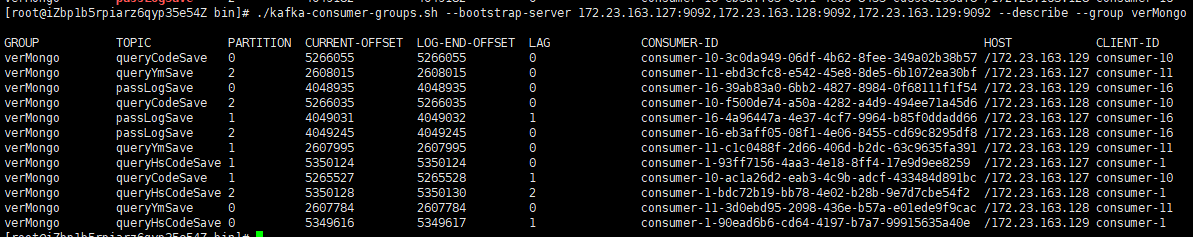

./kafka-consumer-groups.sh --bootstrap-server 172.23.163.127:9092,172.23.163.128:9092,172.23.163.129:9092 --describe --group verMongo


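- PARTITION: the partition number

- CURRENT-OFFSET: the offset the consumer group has consumed up to

- LOG-END-OFFSET: the offset of the latest message produced to the partition

- LAG: the difference between LOG-END-OFFSET and CURRENT-OFFSET; the larger the value, the worse the backlog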
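For a group that reads many partitions, the per-partition LAG values can be added up into one backlog figure. The sketch below is a hypothetical helper (total_lag is not part of Kafka). Because the column order of the --describe output differs between Kafka versions, it locates the LAG column from the header row instead of assuming a fixed position, and it skips rows whose LAG is not a number (for example "-").

```shell
# Hypothetical helper, not part of Kafka.
# Reads "kafka-consumer-groups.sh --describe" output on standard input and
# prints the sum of the LAG column.
total_lag() {
  awk '
    col == 0 {                                # header row not found yet
      for (i = 1; i <= NF; i++) if ($i == "LAG") col = i
      next
    }
    $col ~ /^[0-9]+$/ { total += $col }       # ignore "-" and blank lines
    END { printf "%.0f\n", total }
  '
}

# Example against a live cluster (left commented out; values as in the text):
# ./kafka-consumer-groups.sh --bootstrap-server 172.23.163.127:9092 \
#   --describe --group verMongo | total_lag
```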

Reference blog: https://zhuanlan.zhihu.com/p/91811634