3D Hand Tracking with MediaPipeUnityPlugin

VID_20230421_005228

Overview

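Many of the more usable hand-tracking solutions available today are AR-based and need hardware support.

Google's MediaPipe, on the other hand, has a low barrier to entry: it runs on an ordinary computer webcam.

On top of that, MediaPipeUnityPlugin has already ported it to Unity for us.

However, MediaPipe works from image recognition alone. When I move my hand toward or away from the camera, perspective simply makes it look larger or smaller, so to MediaPipe it is just scaling up and down. All the data it produces lies in a single plane; without hardware support there is no depth information.

Fortunately, even within that plane, Google at least gives us the relative depth of the hand joints.

So the goal of this article, as shown in the video at the top, is to simulate the hand's depth so that the hand really moves in 3D space.

If you are not yet familiar with MediaPipeUnityPlugin, read my previous article, "Basic Usage of MediaPipe-UnityPlugin", first.

If you already know MediaPipeUnityPlugin's HandTracking, you can skip straight to [5. Getting the Coordinates].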

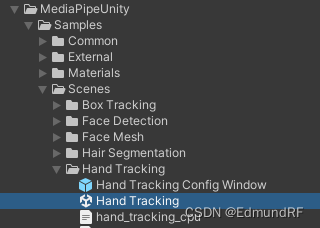

Reference sample

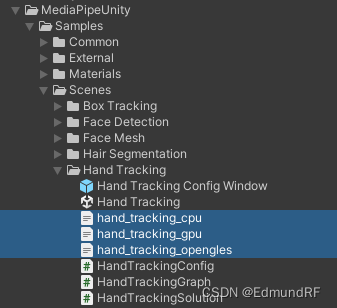

Assets\MediaPipeUnity\Samples\Scenes\Hand Tracking\Hand Tracking.unity

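Although the plugin already ships a complete sample, we want to add extra features on top of it, so we will build our own Graph and Solution modeled on the sample, leaving out what we don't need to keep the code simple.

1. Declaring MyGraph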

public class MyHandTrackingGraph : GraphRunner
{
    public override void StartRun(ImageSource imageSource)
    {
        // Start the output streams and call CalculatorGraph.StartRun here
    }

    protected override IList<WaitForResult> RequestDependentAssets()
    {
        // Load the model/data files we need here
    }
}

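- First, declare our output streams and their names.

For the output streams we use the plugin's OutputStream<TPacket, TValue>.

Hand tracking has six output streams:

palm detections, hand rects computed from the palm detections, hand landmarks, hand world landmarks, hand rects computed from the landmarks, and handedness (left/right hand).

They are declared as follows: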

// Palm detections
OutputStream<DetectionVectorPacket, List<Detection>> _palmDetectionsStream;
const string _PalmDetectionsStreamName = "palm_detections";
// Hand rects from palm detections
OutputStream<NormalizedRectVectorPacket, List<NormalizedRect>> _handRectsFromPalmDetectionsStream;
const string _HandRectsFromPalmDetectionsStreamName = "hand_rects_from_palm_detections";
// Hand landmarks
OutputStream<NormalizedLandmarkListVectorPacket, List<NormalizedLandmarkList>> _handLandmarksStream;
const string _HandLandmarksStreamName = "hand_landmarks";
// Hand world landmarks
OutputStream<LandmarkListVectorPacket, List<LandmarkList>> _handWorldLandmarksStream;
const string _HandWorldLandmarksStreamName = "hand_world_landmarks";
// Hand rects from landmarks
OutputStream<NormalizedRectVectorPacket, List<NormalizedRect>> _handRectsFromLandmarksStream;
const string _HandRectsFromLandmarksStreamName = "hand_rects_from_landmarks";
// Handedness (left/right)
OutputStream<ClassificationListVectorPacket, List<ClassificationList>> _handednessStream;
const string _HandednessStreamName = "handedness";

Plus the name of our input stream:

const string _InputStreamName = "input_video";

This time we only need the hand landmarks, so all we need is:

const string _InputStreamName = "input_video";
OutputStream<NormalizedLandmarkListVectorPacket, List<NormalizedLandmarkList>> _handLandmarksStream;
const string _HandLandmarksStreamName = "hand_landmarks";

- Next, initialize and configure it in ConfigureCalculatorGraph():

protected override Status ConfigureCalculatorGraph(CalculatorGraphConfig config)
{
    _handLandmarksStream = new OutputStream<NormalizedLandmarkListVectorPacket, List<NormalizedLandmarkList>>(
        calculatorGraph, _HandLandmarksStreamName, config.AddPacketPresenceCalculator(_HandLandmarksStreamName), timeoutMicrosec);
    return base.ConfigureCalculatorGraph(config);
}

The OutputStream constructor looks like this:

/// <summary>
/// Instantiates the OutputStream class.
/// The graph must have a PacketPresenceCalculator node, which reports whether the stream has output.
/// </summary>
/// <remarks>
/// Useful when you want to get outputs synchronously but don't want to block the thread while waiting for them.
/// </remarks>
/// <param name="calculatorGraph"> The owner of the stream </param>
/// <param name="streamName"> The name of the output stream </param>
/// <param name="presenceStreamName"> The name of the stream that outputs true when the output exists </param>
/// <param name="timeoutMicrosec"> If the output packet is empty, the OutputStream instance drops packets until the period specified here elapses </param>
public OutputStream(CalculatorGraph calculatorGraph, string streamName, string presenceStreamName, long timeoutMicrosec = 0) : this(calculatorGraph, streamName, false, timeoutMicrosec)
{
    this.presenceStreamName = presenceStreamName;
}

- Then define an event for listening to asynchronous output and a method for fetching output synchronously:

public event EventHandler<OutputEventArgs<List<NormalizedLandmarkList>>> OnHandLandmarksOutput
{
    add => _handLandmarksStream.AddListener(value);
    remove => _handLandmarksStream.RemoveListener(value);
}

public bool TryGetNext(out List<NormalizedLandmarkList> handLandmarks, bool allowBlock = true)
{
    var currentTimestampMicrosec = GetCurrentTimestampMicrosec();
    return TryGetNext(_handLandmarksStream, out handLandmarks, allowBlock, currentTimestampMicrosec);
}

- Start the stream in StartRun() and release it in Stop():

public override void StartRun(ImageSource imageSource)
{
    _handLandmarksStream.StartPolling().AssertOk();
    calculatorGraph.StartRun().AssertOk();
}

public override void Stop()
{
    _handLandmarksStream?.Close();
    _handLandmarksStream = null;
    base.Stop();
}

- Then define an input method that feeds the input source into the input stream:

public void AddTextureFrameToInputStream(TextureFrame textureFrame)
{
    AddTextureFrameToInputStream(_InputStreamName, textureFrame);
}

- Input and output are now in place; our Graph should currently look like this:

public class MyHandTrackingGraph : GraphRunner
{
    const string _InputStreamName = "input_video";

    OutputStream<NormalizedLandmarkListVectorPacket, List<NormalizedLandmarkList>> _handLandmarksStream;
    const string _HandLandmarksStreamName = "hand_landmarks";

    public event EventHandler<OutputEventArgs<List<NormalizedLandmarkList>>> OnHandLandmarksOutput
    {
        add => _handLandmarksStream.AddListener(value);
        remove => _handLandmarksStream.RemoveListener(value);
    }

    public bool TryGetNext(out List<NormalizedLandmarkList> handLandmarks, bool allowBlock = true)
    {
        var currentTimestampMicrosec = GetCurrentTimestampMicrosec();
        return TryGetNext(_handLandmarksStream, out handLandmarks, allowBlock, currentTimestampMicrosec);
    }

    public override void StartRun(ImageSource imageSource)
    {
        _handLandmarksStream.StartPolling().AssertOk();
        calculatorGraph.StartRun().AssertOk();
    }

    public override void Stop()
    {
        _handLandmarksStream?.Close();
        _handLandmarksStream = null;
        base.Stop();
    }

    public void AddTextureFrameToInputStream(TextureFrame textureFrame)
    {
        AddTextureFrameToInputStream(_InputStreamName, textureFrame);
    }

    protected override Status ConfigureCalculatorGraph(CalculatorGraphConfig config)
    {
        _handLandmarksStream = new OutputStream<NormalizedLandmarkListVectorPacket, List<NormalizedLandmarkList>>(
            calculatorGraph, _HandLandmarksStreamName, config.AddPacketPresenceCalculator(_HandLandmarksStreamName), timeoutMicrosec);
        return base.ConfigureCalculatorGraph(config);
    }

    protected override IList<WaitForResult> RequestDependentAssets()
    {
    }
}

One piece is still missing: RequestDependentAssets().

Here we load the hand landmark model file, "hand_landmark_full.bytes". It is located using the asset-loading method selected in Bootstrap.

protected override IList<WaitForResult> RequestDependentAssets()
{
    return new List<WaitForResult>()
    {
        WaitForAsset("hand_landmark_full.bytes"),
    };
}

2. Declaring MySolution

public class MyHandTrackingSolution : ImageSourceSolution<MyHandTrackingGraph>
{
    protected override void OnStartRun()
    {
        // Bind listeners to the graph's outputs here
    }

    protected override void AddTextureFrameToInputStream(TextureFrame textureFrame)
    {
        // Forward the frame to the graph's input method here
    }

    protected override IEnumerator WaitForNextValue()
    {
        // Fetch the graph's synchronous output here
    }
}

- First, declare a callback and bind it to the graph's OnHandLandmarksOutput

(graphRunner here is our MyHandTrackingGraph):

protected override void OnStartRun()
{
    graphRunner.OnHandLandmarksOutput += OnHandLandmarksOutput;
}

void OnHandLandmarksOutput(object stream, OutputEventArgs<List<NormalizedLandmarkList>> eventArgs)
{
    var handLandmarks = eventArgs.value;
    // Process the received data here
}

- Then pass the image input to the graph:

protected override void AddTextureFrameToInputStream(TextureFrame textureFrame)
{
    graphRunner.AddTextureFrameToInputStream(textureFrame);
}

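- Next, fetch the graph's synchronous output.

Note that there are two synchronous modes, blocking sync and non-blocking sync, selected by the runningMode field (defined in ImageSourceSolution).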
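The difference between the two modes is the same as between a blocking and a non-blocking take from a queue. The sketch below is only an analogy built on .NET's `BlockingCollection<T>`, not how MediaPipeUnityPlugin implements `TryGetNext`; `PollingModes` is a hypothetical name:

```csharp
using System.Collections.Concurrent;

public static class PollingModes
{
    // Blocking: waits (up to timeoutMs) for the next item,
    // analogous to TryGetNext(out ..., allowBlock: true).
    public static bool GetBlocking(BlockingCollection<int> queue, int timeoutMs, out int item) =>
        queue.TryTake(out item, timeoutMs);

    // Non-blocking: returns immediately, analogous to TryGetNext(out ..., allowBlock: false);
    // the caller retries later (in the Solution, once per frame via WaitUntil).
    public static bool GetNonBlocking(BlockingCollection<int> queue, out int item) =>
        queue.TryTake(out item);
}
```

Because the Solution's coroutine runs on Unity's main thread, the blocking variant can hold up the frame until output arrives, while the non-blocking variant lets frames keep rendering while it waits.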

protected override IEnumerator WaitForNextValue()
{
    List<NormalizedLandmarkList> handLandmarks = null;

    if (runningMode == RunningMode.Sync)
    {
        // Blocking: wait here until the graph produces output
        graphRunner.TryGetNext(out handLandmarks, true);
    }
    else if (runningMode == RunningMode.NonBlockingSync)
    {
        // Non-blocking: poll once per frame until output is available
        yield return new WaitUntil(() => graphRunner.TryGetNext(out handLandmarks, false));
    }

    // Process the received data here
}

That completes our Solution. How to use the data it returns is covered later.

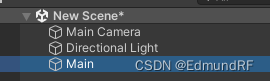

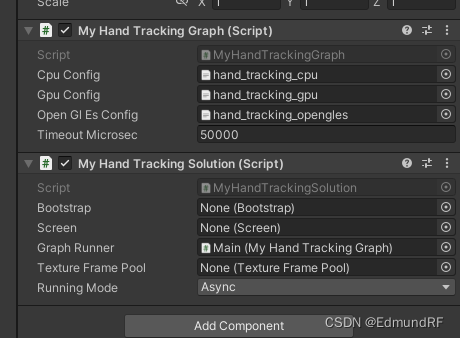

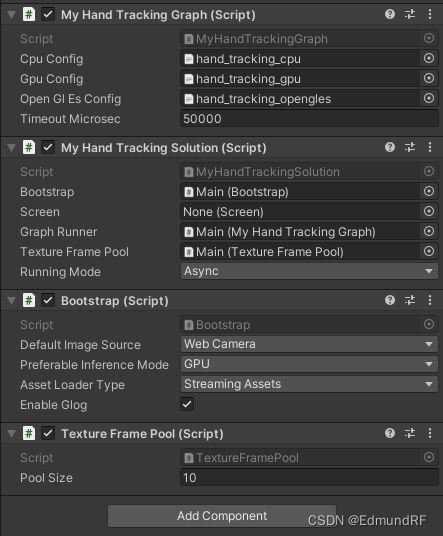

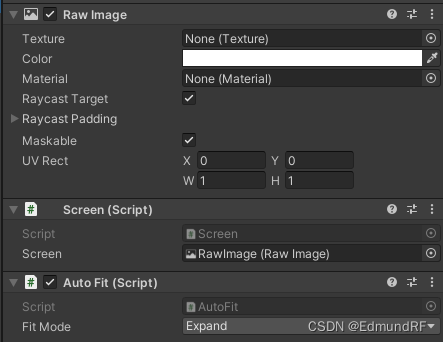

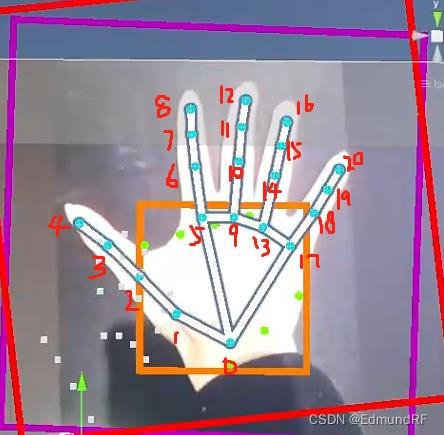

3. Setting Up the Scene

Create a new scene and add an empty GameObject to hold the scripts; let's call it Main.

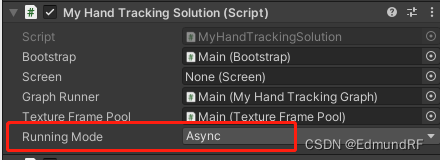

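Attach our Graph and Solution to it and configure them.

The three Config files can be taken straight from the presets in Assets\MediaPipeUnity\Samples\Scenes\Hand Tracking.

As you can see, we are still missing Bootstrap, Screen, and TextureFramePool.

Bootstrap and TextureFramePool can simply be attached to Main as well.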

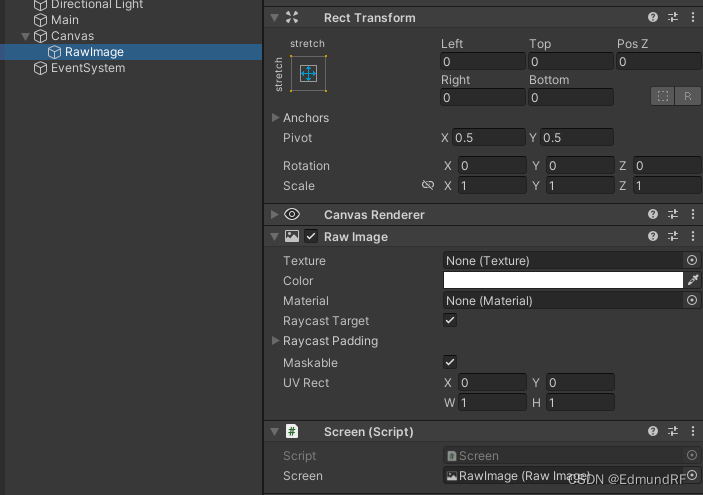

For Screen, create a UI/RawImage, attach the Screen component to it, and set up its Rect.

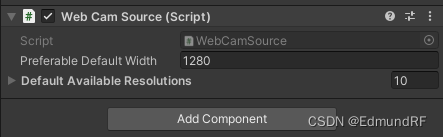

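Because we are using the webcam as the input source, we also need a WebCamSource, which likewise goes on Main.

Default Width is the capture resolution. Raising it makes things stutter, so keep the default of 1280.

Because of this, our Screen gets resized to 1280 wide, so add an AutoFit to Screen to make it fill the screen.

- At this point we can try running it.

4. MyGraph, Part 2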

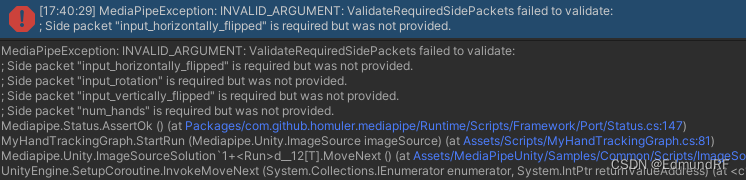

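The camera image shows up, but, as expected, an error is thrown.

Tracing it, the error comes from calculatorGraph.StartRun().AssertOk() in the Graph's StartRun.

Reading the error message, the config we use takes side packets, so we have to provide them

(I mentioned this briefly when covering CalculatorGraph in the previous article):

var sidePacket = new SidePacket();
calculatorGraph.StartRun(sidePacket).AssertOk();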

How do we fill it? There is a method for that:

sidePacket.Emplace("packetName", new xxxPacket());

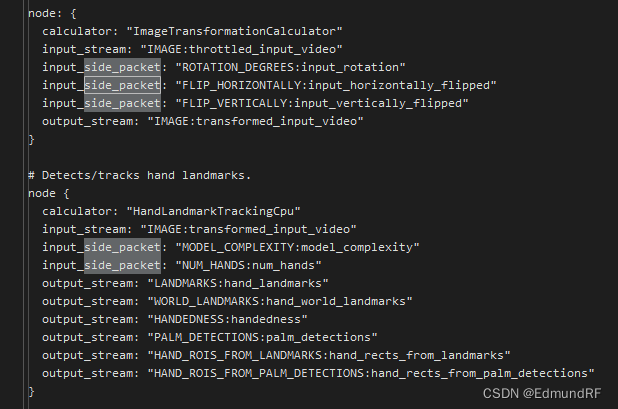

Where do the names come from? Open hand_tracking_cpu.txt and have a look.

Near the bottom we find our side packets; of course, the error message also tells you their names.

We can copy the setup straight from the sample. SetImageTransformationOptions() is a GraphRunner method that wraps the configuration of the first three side packets, and GraphRunner also provides a StartRun overload that takes the SidePacket:

var sidePacket = new SidePacket();
SetImageTransformationOptions(sidePacket, imageSource, true); // provided by GraphRunner
sidePacket.Emplace("model_complexity", new IntPacket(1));
sidePacket.Emplace("num_hands", new IntPacket(2));
//calculatorGraph.StartRun(sidePacket).AssertOk();
StartRun(sidePacket);

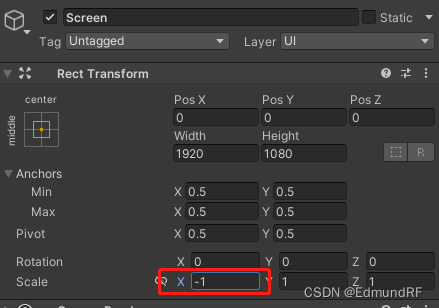

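- Now the error is gone, but the image appears mirrored, so flip the Screen.

- Hold up a hand and try reading the output in the asynchronous callback.

For asynchronous retrieval, set runningMode on the Solution to Async.

void OnHandLandmarksOutput(object stream, OutputEventArgs<List<NormalizedLandmarkList>> eventArgs)
{
    if (eventArgs.value != null) Debug.Log("--- Recv ---");
}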

Synchronous retrieval works too:

protected override IEnumerator WaitForNextValue()
{
    List<NormalizedLandmarkList> handLandmarks = null;

    if (runningMode == RunningMode.Sync)
    {
        graphRunner.TryGetNext(out handLandmarks, true);
    }
    else if (runningMode == RunningMode.NonBlockingSync)
    {
        yield return new WaitUntil(() => graphRunner.TryGetNext(out handLandmarks, false));
    }

    if (handLandmarks != null) Debug.Log("--- Recv ---");
}

Great, we can move on to the next step.

5. Getting the Coordinates

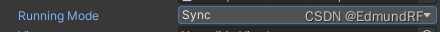

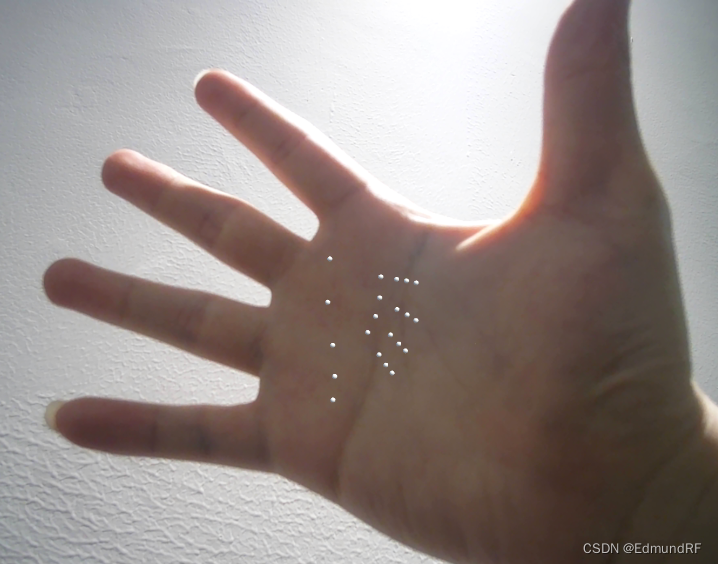

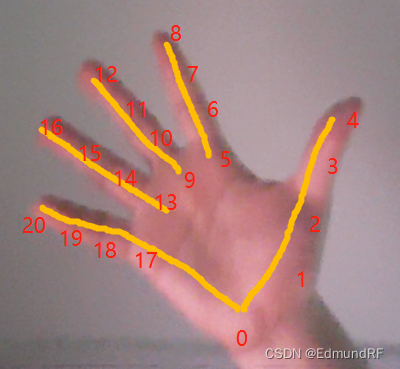

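MediaPipe hand tracking reports 21 joints (landmarks) per hand, as shown in the figure.

What we receive first is a List<NormalizedLandmarkList>.

It contains 0 to 2 items (we set num_hands to 2), one NormalizedLandmarkList per detected hand.

Each NormalizedLandmarkList has a Landmark property, which is also a collection.

Its elements are NormalizedLandmark objects, 21 of them, one per hand joint. Each has three properties, X, Y, and Z, which are the coordinates. X and Y are normalized to [0, 1] by the image width and height, and Z is the depth relative to the wrist (smaller values are closer to the camera), on roughly the same scale as X.

So we get:

if (eventArgs.value != null)
{
    var handLandmarks = eventArgs.value;
    foreach (var normalizedLandmarkList in handLandmarks)   // one entry per detected hand
    {
        var landmarks = normalizedLandmarkList.Landmark;     // 21 joints
        foreach (var landmark in landmarks)
        {
            var pos = new Vector3(landmark.X, landmark.Y, landmark.Z);
        }
    }
}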
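Bare indices such as [0] and [17] are hard to read later on, so it can help to name them. The sketch below is a hypothetical helper (the `HandLandmark` enum and `HandLandmarks` class are not part of MediaPipeUnityPlugin); the index order follows MediaPipe's hand landmark model, where 0 is the wrist and each finger contributes four joints from its base to its tip:

```csharp
// Hypothetical names for the 21 indices of NormalizedLandmarkList.Landmark.
public enum HandLandmark
{
    Wrist = 0,
    ThumbCmc = 1, ThumbMcp = 2, ThumbIp = 3, ThumbTip = 4,
    IndexMcp = 5, IndexPip = 6, IndexDip = 7, IndexTip = 8,
    MiddleMcp = 9, MiddlePip = 10, MiddleDip = 11, MiddleTip = 12,
    RingMcp = 13, RingPip = 14, RingDip = 15, RingTip = 16,
    PinkyMcp = 17, PinkyPip = 18, PinkyDip = 19, PinkyTip = 20,
}

public static class HandLandmarks
{
    // Number of joints reported per hand.
    public const int Count = 21;

    // Fingertip indices, e.g. for touch or pinch checks.
    public static readonly HandLandmark[] FingerTips =
    {
        HandLandmark.ThumbTip, HandLandmark.IndexTip, HandLandmark.MiddleTip,
        HandLandmark.RingTip, HandLandmark.PinkyTip,
    };
}
```

Usage would then read `landmarks[(int)HandLandmark.IndexTip]` instead of `landmarks[8]`.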

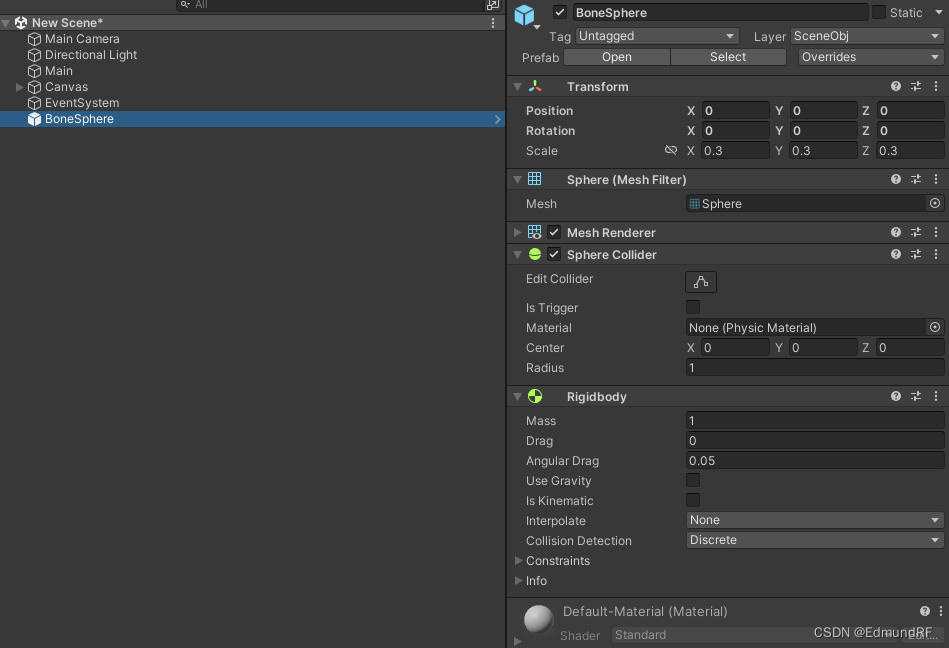

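6. Visualization

We make a small sphere prefab and, at runtime, spawn 21 of them, one aligned to each of these coordinates.

Also give it a Collider and a Rigidbody so it can interact with objects in the scene (remember to disable gravity on the Rigidbody).

Create a new script to drive them; let's call it MyView.

Assign the prefab and spawn the spheres up front in Start:

public Transform objRoot; // parent of the spheres
public GameObject boneObj;
List<GameObject> m_boneObjList;

void Start()
{
    m_boneObjList = new List<GameObject>();
    while (m_boneObjList.Count < 21)
    {
        m_boneObjList.Add(Instantiate(boneObj, objRoot));
    }
}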

Then assign the coordinates in Update:

void Update()
{
    var landmarks = ... // the landmarks obtained above

    for (int i = 0; i < landmarks.Count; i++)
    {
        var mark = landmarks[i];
        var pos = new Vector3(mark.X, mark.Y, mark.Z);
        m_boneObjList[i].transform.localPosition = pos;
    }
}

Attach MyView in the scene and run it.

The hand appears flipped, so negate x and y:

var pos = new Vector3(-mark.X, -mark.Y, mark.Z);

That looks right. It may look a little small, though; just multiply pos by a factor to enlarge it.
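The flip-and-enlarge step can be pulled into one small function. This is a sketch under assumptions: it uses `System.Numerics.Vector3` so it runs outside Unity (in the project it would be `UnityEngine.Vector3`), the name `LandmarkMapper.ToLocal` is hypothetical, and it additionally subtracts 0.5 from X and Y so the image center lands on the parent's origin, whereas the text instead compensates by moving objRoot. Negating Y turns image-down into Unity-up; whether X also needs negating depends on how your Screen and camera are flipped.

```csharp
using System.Numerics;

public static class LandmarkMapper
{
    // Converts one normalized landmark (x, y in [0, 1], z relative to the wrist)
    // into a local position under the sphere root.
    // - Subtracting 0.5 centers the image on the root's origin (an added assumption).
    // - Negating X mirrors horizontally; negating Y flips image-down to Unity-up.
    // - `scale` enlarges the hand (the article ends up using 14).
    public static Vector3 ToLocal(float x, float y, float z, float scale)
    {
        return new Vector3(-(x - 0.5f), -(y - 0.5f), z) * scale;
    }
}
```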

Now move the coordinate handling from MySolution into MyView and wrap it up a little:

public Transform objRoot; // parent of the spheres
public GameObject boneObj;
List<GameObject> m_boneObjList;

List<NormalizedLandmarkList> m_currList;
bool m_newLandMark = false;

void Start()
{
    m_boneObjList = new List<GameObject>();
    while (m_boneObjList.Count < 21)
    {
        m_boneObjList.Add(Instantiate(boneObj, objRoot));
    }
}

public void DrawLater(List<NormalizedLandmarkList> list)
{
    m_currList = list;
    m_newLandMark = true;
}

public void DrawNow(List<NormalizedLandmarkList> list)
{
    if (list == null || list.Count == 0) return; // no hand detected this frame
    var landmarks = list[0].Landmark; // ignore multiple hands for now; only handle the first hand
    if (landmarks.Count <= 0) return;

    for (int i = 0; i < landmarks.Count; i++)
    {
        var mark = landmarks[i];
        var pos = new Vector3(mark.X, mark.Y, mark.Z);
        m_boneObjList[i].transform.localPosition = pos;
    }
}

void LateUpdate()
{
    if (m_newLandMark) UpdateDraw();
}

void UpdateDraw()
{
    m_newLandMark = false;
    DrawNow(m_currList);
}

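Sync and async data are handled differently here: synchronous data goes straight into DrawNow(), while asynchronous data goes into DrawLater() and is drawn later from LateUpdate, because Unity does not allow scene objects to be touched from a background thread.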
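A plain `bool` flag next to a shared list usually works, but nothing prevents the callback thread from replacing the list while `LateUpdate` is reading the flag. One common alternative is to hand off the latest value under a lock. The `LatestValue<T>` class below is a hypothetical sketch, not part of MediaPipeUnityPlugin: the MediaPipe callback would call `Set`, and `LateUpdate` would call `TryTake`, which returns each new value at most once and silently drops stale intermediate frames.

```csharp
// Hypothetical single-slot "mailbox": a worker thread publishes, the main thread consumes.
public sealed class LatestValue<T> where T : class
{
    private readonly object _gate = new object();
    private T _value;      // most recent value not yet consumed
    private bool _hasNew;  // true while _value has not been taken

    // Called from the callback thread; overwrites any value not yet consumed.
    public void Set(T value)
    {
        lock (_gate)
        {
            _value = value;
            _hasNew = true;
        }
    }

    // Called from LateUpdate on the main thread; returns true at most once per Set.
    public bool TryTake(out T value)
    {
        lock (_gate)
        {
            value = _value;
            bool had = _hasNew;
            _value = null;
            _hasNew = false;
            return had;
        }
    }
}
```

In MyView this would replace `m_currList`/`m_newLandMark`: `DrawLater` calls `Set(list)`, and `LateUpdate` does `if (m_latest.TryTake(out var list)) DrawNow(list);` (where `m_latest` is a hypothetical field).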

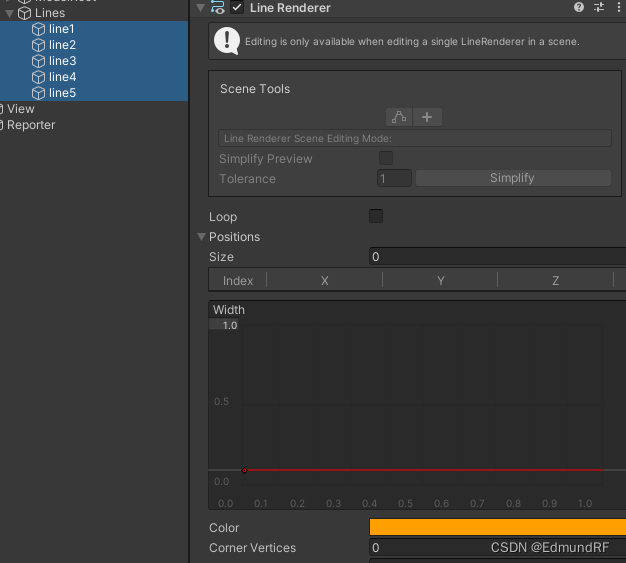

With only the joint spheres the hand is hard to read, so let's add a few lines with LineRenderer.

After some thought, five lines are enough; the joints each one connects are listed below.

We record these joint chains and draw them:

public LineRenderer[] lines;

readonly int[][] m_connections = {
    new []{0, 1, 2, 3, 4},
    new []{0, 5, 6, 7, 8},
    new []{9, 10, 11, 12},
    new []{13, 14, 15, 16},
    new []{0, 17, 18, 19, 20},
};

void DrawLine()
{
    for (int i = 0; i < m_connections.Length; i++)
    {
        var connections = m_connections[i];
        var pos = new Vector3[connections.Length];
        for (int j = 0; j < connections.Length; j++)
        {
            pos[j] = m_boneObjList[connections[j]].transform.position;
        }
        lines[i].positionCount = pos.Length;
        lines[i].SetPositions(pos);
    }
}
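The step from `m_connections` to per-line positions is plain index lookup, so it can be checked outside Unity. In this sketch `PolylineBuilder` is a hypothetical name, `Connections` copies the chains above, and `System.Numerics.Vector3` stands in for `UnityEngine.Vector3`; each returned array is what `SetPositions` would receive for one LineRenderer.

```csharp
using System;
using System.Numerics;

public static class PolylineBuilder
{
    // Same chains as m_connections: thumb, index, middle, ring, pinky.
    // Middle and ring start at their base joints (9 and 13), not at the wrist (0).
    public static readonly int[][] Connections =
    {
        new[] { 0, 1, 2, 3, 4 },
        new[] { 0, 5, 6, 7, 8 },
        new[] { 9, 10, 11, 12 },
        new[] { 13, 14, 15, 16 },
        new[] { 0, 17, 18, 19, 20 },
    };

    // Returns one position array per chain, looked up from the 21 joint positions.
    public static Vector3[][] Build(Vector3[] joints)
    {
        if (joints.Length < 21)
            throw new ArgumentException("Expected 21 joint positions.", nameof(joints));

        var result = new Vector3[Connections.Length][];
        for (int i = 0; i < Connections.Length; i++)
        {
            var chain = Connections[i];
            var points = new Vector3[chain.Length];
            for (int j = 0; j < chain.Length; j++)
                points[j] = joints[chain[j]];
            result[i] = points;
        }
        return result;
    }
}
```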

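Remember to assign the references in the Inspector. Let's look at the result.

7. Simulating Depth

After all that groundwork, we have finally reached the core part.

First, the idea. We need to do two things:

– ① Find the hand's depth, i.e. how far it has moved toward or away from the camera

– ② Restore the "enlarged/shrunk" hand to its original size

The implementation is quick and dirty. We are not after exact values, only a rough approximation:

1. Pick two adjacent joints near the palm, such as [0] and [1], because their relative position is fairly stable.

2. Take the distance between them: its value at some reference moment is the initial value [d0], and its value at a later position is [d1].

3. This distance grows and shrinks as the hand moves closer or farther, which gives us the scale ratio [d0] / [d1].

4. That takes care of ②: multiplying the later coordinates by this scale naturally restores the original size.

5. With the scale ratio, ① is easy too: pick a coefficient and multiply the scale ratio by it to get the depth value.

Sounds too crude? Ha, there's no way around it: this is as much as the data at hand allows.

Even so, after gradually tuning the initial value and the coefficient, the result looks quite realistic.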
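Why should d0/d1 track depth at all? Under an idealized pinhole camera (an assumption: real webcams add lens distortion, and MediaPipe normalizes X and Y by the image width and height separately), a segment of physical length L at distance Z from a camera with focal length f appears with image length fL/Z. Comparing the reference frame (distance Z_0) with the current frame (distance Z_1):

$$
d_0 = \frac{fL}{Z_0},\qquad d_1 = \frac{fL}{Z_1}
\quad\Longrightarrow\quad
\text{scale} = \frac{d_0}{d_1} = \frac{Z_1}{Z_0},
\qquad
\text{depth} = k\cdot\text{scale} = \frac{k}{Z_0}\,Z_1
$$

$$
\text{scale}\cdot\frac{fX}{Z_1} = \frac{fX}{Z_0}
$$

So the scale ratio grows linearly with the current distance Z_1, which is why multiplying it by a coefficient k gives a usable depth (①), and multiplying an image coordinate fX/Z_1 by the scale returns it to its size at the reference distance (②). The coefficient k sets the depth units, and the choice of d0 fixes the reference distance Z_0.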

First, define two tuning values: the initial distance and the depth coefficient.

static float m_baseDist = 0.5f;
static float m_depthRatio = 30f;

Then, as described above, compute the scale ratio scale and the depth value depth:

void GetDepthByLandmark(NormalizedLandmark mark1, NormalizedLandmark mark2, out float depth, out float scale)
{
    var pos1 = new Vector3(mark1.X, mark1.Y, mark1.Z);
    var pos2 = new Vector3(mark2.X, mark2.Y, mark2.Z);
    var length = Vector3.Distance(pos1, pos2);  // current distance [d1]
    scale = m_baseDist / length;                 // [d0] / [d1]
    depth = scale * m_depthRatio;
}

Then apply the resulting values directly to objRoot:

GetDepthByLandmark(landmarks[0], landmarks[1], out var depth, out var scale);
var rootPos = objRoot.localPosition;
objRoot.localPosition = new Vector3(rootPos.x, rootPos.y, depth);
objRoot.localScale = new Vector3(scale, scale, scale);
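Step 2 of the idea takes d0 from some reference moment, while the code above hard-codes m_baseDist = 0.5f. Below is a minimal sketch of a calibrated variant, assuming you call `Calibrate` once while holding your hand at a comfortable distance. The `DepthEstimator` class, its method names, and the `MinDistance` guard are hypothetical, and `System.Numerics.Vector3` replaces `UnityEngine.Vector3` so it runs outside Unity.

```csharp
using System;
using System.Numerics;

public sealed class DepthEstimator
{
    private const float MinDistance = 1e-5f; // guards against division by ~0
    private readonly float _depthRatio;      // same role as m_depthRatio
    private float _baseDist = -1f;           // d0; negative until calibrated

    public DepthEstimator(float depthRatio) => _depthRatio = depthRatio;

    public bool IsCalibrated => _baseDist > 0f;

    // Records d0 from the current distance between two stable palm joints (e.g. [0] and [1]).
    public void Calibrate(Vector3 a, Vector3 b) =>
        _baseDist = Math.Max(Vector3.Distance(a, b), MinDistance);

    // scale = d0 / d1 and depth = scale * depthRatio, as in GetDepthByLandmark.
    public (float depth, float scale) Estimate(Vector3 a, Vector3 b)
    {
        if (!IsCalibrated)
            throw new InvalidOperationException("Call Calibrate first.");
        float d1 = Math.Max(Vector3.Distance(a, b), MinDistance);
        float scale = _baseDist / d1;
        return (scale * _depthRatio, scale);
    }
}
```

If the joint distance halves (the hand image shrinks to half size), the scale doubles, and so does the depth.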

At this point, MyView should look like this:

using System.Collections.Generic;
using Mediapipe;
using UnityEngine;

public class MyView : MonoBehaviour
{
    public Transform objRoot; // parent of the spheres
    public GameObject boneObj;
    List<GameObject> m_boneObjList;

    public LineRenderer[] lines;

    List<NormalizedLandmarkList> m_currList;
    bool m_newLandMark = false;

    void Start()
    {
        m_boneObjList = new List<GameObject>();
        while (m_boneObjList.Count < 21)
        {
            m_boneObjList.Add(Instantiate(boneObj, objRoot));
        }
    }

    public void DrawLater(List<NormalizedLandmarkList> list)
    {
        m_currList = list;
        m_newLandMark = true;
    }

    public void DrawNow(List<NormalizedLandmarkList> list)
    {
        if (list == null || list.Count == 0) return; // no hand detected this frame
        var landmarks = list[0].Landmark; // ignore multiple hands for now; only handle the first hand
        if (landmarks.Count < 2) return;   // the depth estimate needs joints [0] and [1]

        GetDepthByLandmark(landmarks[0], landmarks[1], out var depth, out var scale);
        var rootPos = objRoot.localPosition;
        objRoot.localPosition = new Vector3(rootPos.x, rootPos.y, depth);
        objRoot.localScale = new Vector3(scale, scale, scale);

        for (int i = 0; i < landmarks.Count; i++)
        {
            var mark = landmarks[i];
            var pos = new Vector3(mark.X, mark.Y, mark.Z);
            m_boneObjList[i].transform.localPosition = pos * 14; // in my setup it takes 14x before it stops looking tiny
        }

        DrawLine(); // refresh the finger lines after the spheres have moved
    }

    void LateUpdate()
    {
        if (m_newLandMark) UpdateDraw();
    }

    void UpdateDraw()
    {
        m_newLandMark = false;
        DrawNow(m_currList);
    }

    readonly int[][] m_connections = {
        new []{0, 1, 2, 3, 4},
        new []{0, 5, 6, 7, 8},
        new []{9, 10, 11, 12},
        new []{13, 14, 15, 16},
        new []{0, 17, 18, 19, 20},
    };

    void DrawLine()
    {
        for (int i = 0; i < m_connections.Length; i++)
        {
            var connections = m_connections[i];
            var pos = new Vector3[connections.Length];
            for (int j = 0; j < connections.Length; j++)
            {
                pos[j] = m_boneObjList[connections[j]].transform.position;
            }
            lines[i].positionCount = pos.Length;
            lines[i].SetPositions(pos);
        }
    }

    static float m_baseDist = 0.5f;
    static float m_depthRatio = 30f;

    void GetDepthByLandmark(NormalizedLandmark mark1, NormalizedLandmark mark2, out float depth, out float scale)
    {
        var pos1 = new Vector3(mark1.X, mark1.Y, mark1.Z);
        var pos2 = new Vector3(mark2.X, mark2.Y, mark2.Z);
        var length = Vector3.Distance(pos1, pos2);
        scale = m_baseDist / length;
        depth = scale * m_depthRatio;
    }
}

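Run it.

That's more like it. If the hand looks slightly off, you can also adjust objRoot's position.

8. Adding Some Interaction

Add some objects to the scene, and give the ones that should interact a Collider and a Rigidbody.

With that, we have reproduced the effect shown in the video.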

VID_20230421_005228

So far we only handle one hand. For two hands, use a second set of spheres and lines and do the same thing.
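Extending to two hands mostly means keeping one set of visuals per detected hand and hiding the sets that have no hand this frame. Below is a sketch with hypothetical names (`HandSlot`, `HandSlots.Assign`) and `System.Numerics.Vector3` standing in for Unity types; in the project each slot would own its 21 spheres and 5 LineRenderers, and `Active` would map to `SetActive`. Note that the list index alone does not say which hand is left or right; that information comes from the handedness stream.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

public sealed class HandSlot
{
    public bool Active;                                  // whether this hand's visuals are shown
    public readonly Vector3[] Joints = new Vector3[21];  // latest joint positions
}

public static class HandSlots
{
    // Copies hand i into slot i and deactivates slots that have no detected hand.
    public static void Assign(IReadOnlyList<Vector3[]> hands, HandSlot[] slots)
    {
        for (int i = 0; i < slots.Length; i++)
        {
            bool hasHand = i < hands.Count && hands[i] != null && hands[i].Length >= 21;
            slots[i].Active = hasHand;
            if (hasHand)
                Array.Copy(hands[i], slots[i].Joints, 21);
        }
    }
}
```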

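Ideas for improvement:

If possible, add some hardware support, such as ARCore's Depth API, which gives the depth at a given point in the camera image; it is available in Unity's AR packages.

I'm experimenting with it now and will write a follow-up if it works out…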