PG video llava

Author: 盐析白兔 | 2024-04-21 11:40:07

git clone https://github.com/mbzuai-oryx/Video-LLaVA.git

conda create --name=pg_video_llava python=3.10

conda activate pg_video_llava

pip install "triton>=2.0.0"

nvcc -V

conda install pytorch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2 pytorch-cuda=11.7 -c pytorch -c nvidia
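
The `nvcc -V` step above is there to compare the local CUDA toolkit with the `pytorch-cuda=11.7` pin. A minimal sketch of that comparison in Python follows. The helper names are hypothetical, and it assumes the usual `release X.Y` token in nvcc's output. A mismatch is not necessarily an error, since the conda package ships its own CUDA runtime, but it may matter when compiling CUDA extensions later.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple


def parse_nvcc_release(output: str) -> Optional[Tuple[int, int]]:
    """Extract (major, minor) from the 'release X.Y' token in `nvcc -V` output."""
    match = re.search(r"release\s+(\d+)\.(\d+)", output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def local_cuda_release() -> Optional[Tuple[int, int]]:
    """Run `nvcc -V` if nvcc is on PATH; return None when it is unavailable."""
    if shutil.which("nvcc") is None:
        return None
    result = subprocess.run(
        ["nvcc", "-V"], capture_output=True, text=True, check=False
    )
    return parse_nvcc_release(result.stdout)


if __name__ == "__main__":
    expected = (11, 7)  # taken from pytorch-cuda=11.7 in the conda command
    found = local_cuda_release()
    if found is None:
        print("nvcc not found on PATH (or its output could not be parsed)")
    elif found != expected:
        print(f"nvcc reports CUDA {found[0]}.{found[1]}; pytorch-cuda is pinned to 11.7")
    else:
        print("nvcc matches the pinned CUDA 11.7")
```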

Contents of requirements.txt (the torch, torchaudio, and torchvision pins are commented out):

#torch==2.1.0

#torchaudio==2.1.0

#torchvision==0.16.0

tqdm==4.65.0

git+https://github.com/openai/CLIP.git

numpy==1.24.3

Pillow==9.5.0

decord==0.6.0

gradio==3.23.0

markdown2==2.4.8

einops==0.6.1

requests==2.30.0

sentencepiece==0.1.99

protobuf==4.23.2

accelerate==0.20.3

tokenizers>=0.13.3

pydantic==1.10.7

git+https://github.com/m-bain/whisperx.git

git+https://github.com/shehanmunasinghe/whisper-at.git@patch-1#subdirectory=package/whisper-at

git+https://github.com/xinyu1205/recognize-anything.git

#transformers@git+https://github.com/huggingface/transformers.git@cae78c46

openai==0.28.0

scenedetect[opencv-headless]
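
Because most of the entries above are exact `==` pins, it can help to confirm after installation that the environment actually matches them. The following is a minimal sketch using only the standard library. The function names are hypothetical, and it deliberately skips comment lines, `git+` URLs, and non-exact specifiers such as `tokenizers>=0.13.3`.

```python
from importlib import metadata
from pathlib import Path
from typing import Dict, List


def parse_exact_pins(lines: List[str]) -> Dict[str, str]:
    """Return {package: version} for lines of the form `name==version`.

    Comment lines, git+ URLs, and non-exact specifiers are skipped.
    """
    pins: Dict[str, str] = {}
    for raw in lines:
        line = raw.strip()
        if not line or line.startswith("#") or line.startswith("git+"):
            continue
        if "==" not in line:
            continue
        name, version = line.split("==", 1)
        pins[name.strip()] = version.strip()
    return pins


def find_mismatches(pins: Dict[str, str]) -> Dict[str, str]:
    """Map each pinned package to a short description of any mismatch."""
    problems: Dict[str, str] = {}
    for name, wanted in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems[name] = f"not installed (wanted {wanted})"
            continue
        if installed != wanted:
            problems[name] = f"installed {installed}, wanted {wanted}"
    return problems


if __name__ == "__main__":
    req_file = Path("requirements.txt")  # assumed to be in the current directory
    if req_file.exists():
        pins = parse_exact_pins(req_file.read_text().splitlines())
        for name, problem in find_mismatches(pins).items():
            print(f"{name}: {problem}")
    else:
        print("requirements.txt not found in the current directory")
```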

pip install transformers -U

pip install "transformers[torch]"

pip install -r requirements.txt

Installing transformers[torch] also resolves the error raised by accelerate.

Download PG-Video-LLaVA Weights

Set up DEVA (Tracking-Anything-with-DEVA):

git clone https://github.com/hkchengrex/Tracking-Anything-with-DEVA.git

cd Tracking-Anything-with-DEVA

pip install -e .

Set up Grounded-Segment-Anything:

cd ../

git clone https://github.com/hkchengrex/Grounded-Segment-Anything.git

cd Grounded-Segment-Anything

python -m pip install -e segment_anything

python -m pip install -e GroundingDINO
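
After the editable installs above, a quick way to confirm they registered is to ask Python whether the packages can be located. This is a small sketch. The import names `deva`, `segment_anything`, and `groundingdino` are assumptions about what those repositories install, and the helper name is hypothetical.

```python
from importlib import util
from typing import Dict, Iterable


def check_importable(module_names: Iterable[str]) -> Dict[str, bool]:
    """Report, without importing them, whether top-level modules can be found."""
    return {name: util.find_spec(name) is not None for name in module_names}


if __name__ == "__main__":
    # Assumed import names for the three editable installs.
    wanted = ["deva", "segment_anything", "groundingdino"]
    for name, found in check_importable(wanted).items():
        print(f"{name}: {'found' if found else 'MISSING'}")
```

`find_spec` only locates a module, so this does not catch a package that is present but fails at import time, for example because a compiled extension did not build.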

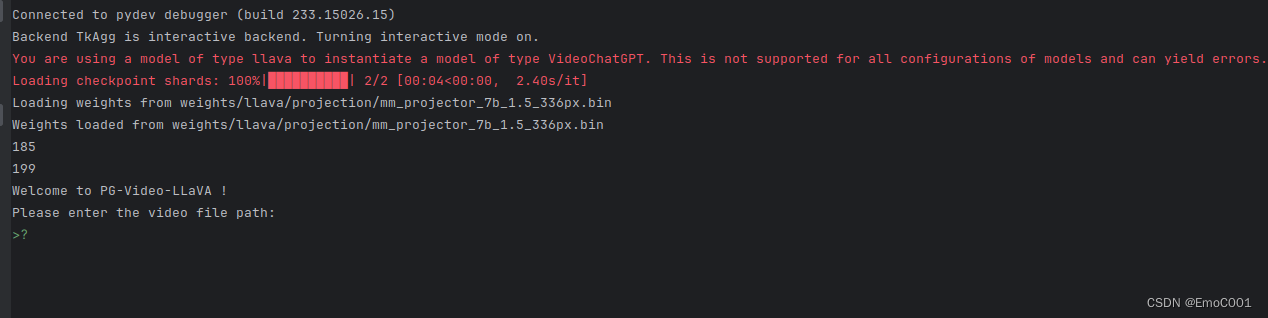

Arguments accepted by video_chatgpt/chat.py (the defaults here point at local weight paths):

parser.add_argument("--model-name", type=str, default='weights/llava/llava-v1.5-7b')

parser.add_argument("--projection_path", type=str, default='weights/llava/projection/mm_projector_7b_1.5_336px.bin')

parser.add_argument("--use_asr", action='store_true', default=False, help='Whether to use audio transcripts or not')

parser.add_argument("--conv_mode", type=str, required=False, default='pg-video-llava')

parser.add_argument("--with_grounding", action='store_true', required=False, help='Run with grounding module')
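
To see how these argument definitions behave, they can be wrapped in a standalone parser. This is only a sketch: `build_parser` is a hypothetical helper, and the real script defines more than what is shown here. Note that argparse exposes `--model-name` as `args.model_name`.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Recreate only the five arguments listed above, with the same defaults."""
    parser = argparse.ArgumentParser(description="Sketch of the chat arguments")
    parser.add_argument("--model-name", type=str,
                        default="weights/llava/llava-v1.5-7b")
    parser.add_argument("--projection_path", type=str,
                        default="weights/llava/projection/mm_projector_7b_1.5_336px.bin")
    parser.add_argument("--use_asr", action="store_true", default=False,
                        help="Whether to use audio transcripts or not")
    parser.add_argument("--conv_mode", type=str, required=False,
                        default="pg-video-llava")
    parser.add_argument("--with_grounding", action="store_true", required=False,
                        help="Run with grounding module")
    return parser


if __name__ == "__main__":
    # With no flags, the store_true options stay False and defaults apply.
    args = build_parser().parse_args([])
    print(args.model_name, args.use_asr, args.with_grounding)
```

With defaults set this way, the script can be started without passing `--model-name` or `--projection_path`, provided the weights sit at those relative paths.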

python video_chatgpt/chat.py \
--model-name <path_to_LLaVA-7B-1.5_weights> \
--projection_path <path_to_projector_weights_for_LLaVA-7B-1.5> \
--use_asr \
--conv_mode pg-video-llava
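
The launch command fails late if a weight path is wrong, so a small pre-flight check can save a model-loading attempt. The sketch below assembles the same command from Python and refuses to launch when a path is missing. The helper names are hypothetical, and the two paths in the usage section are the local defaults from the argument list, not required locations.

```python
import subprocess
import sys
from pathlib import Path
from typing import List


def build_chat_command(model_path: str, projection_path: str,
                       use_asr: bool = True,
                       conv_mode: str = "pg-video-llava") -> List[str]:
    """Assemble the chat.py invocation as an argv list (no shell quoting needed)."""
    cmd = [sys.executable, "video_chatgpt/chat.py",
           "--model-name", model_path,
           "--projection_path", projection_path]
    if use_asr:
        cmd.append("--use_asr")
    cmd += ["--conv_mode", conv_mode]
    return cmd


def missing_paths(paths: List[str]) -> List[str]:
    """Return the subset of paths that do not exist on disk."""
    return [p for p in paths if not Path(p).exists()]


if __name__ == "__main__":
    model = "weights/llava/llava-v1.5-7b"
    projector = "weights/llava/projection/mm_projector_7b_1.5_336px.bin"
    missing = missing_paths([model, projector, "video_chatgpt/chat.py"])
    if missing:
        print("Not launching; missing:", ", ".join(missing))
    else:
        subprocess.run(build_chat_command(model, projector), check=True)
```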
