Test notes: running the Qwen-7B model on Kylin OS SP2 with the Ascend 300I chip

Author: 盐析白兔 | 2024-06-16 19:32:26

1. Check the system version

uname -a

Linux localhost.localdomain 4.19.90-24.4.v2101.ky10.aarch64 #1 SMP Mon May 24 14:45:37 CST 2021 aarch64 aarch64 aarch64 GNU/Linux

2. Check the accelerator card (NPU)

npu-smi info

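Background:

The official documentation lists support for the Ascend 910 architecture. I happened to have a 300I available, so I ran a test on it and am sharing the record for reference. I am still a beginner and have a lot to learn from the experts here.

As far as I understand so far, this system can run simple models. The main obstacle is that the architecture is different, so algorithms have to be rewritten. PyTorch, TensorFlow, MindFormers and similar frameworks can be installed.

Check the detailed parameters: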

uname -m && cat /etc/*release

- aarch64

- Kylin Linux Advanced Server release V10 (Sword)

- NAME="Kylin Linux Advanced Server"

- VERSION="V10 (Sword)"

- ID="kylin"

- VERSION_ID="V10"

- PRETTY_NAME="Kylin Linux Advanced Server V10 (Sword)"

- ANSI_COLOR="0;31"

-

- Kylin Linux Advanced Server release V10 (Sword)
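The same check can be scripted. The sketch below parses `KEY=VALUE` lines in the style of the output above and prints them next to the machine type. The helper name `parse_os_release` and the embedded sample string are illustrative assumptions, not part of the original setup.

```python
import platform


def parse_os_release(text):
    """Parse KEY=VALUE lines (os-release style) into a dict; skip other lines."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        info[key.strip()] = value.strip().strip('"')
    return info


# Sample copied from the output shown above (hypothetical stand-in for the real files).
SAMPLE = '''Kylin Linux Advanced Server release V10 (Sword)
NAME="Kylin Linux Advanced Server"
VERSION="V10 (Sword)"
ID="kylin"
VERSION_ID="V10"
PRETTY_NAME="Kylin Linux Advanced Server V10 (Sword)"
ANSI_COLOR="0;31"
'''

info = parse_os_release(SAMPLE)
# platform.machine() reports the architecture of the machine running this script.
print(platform.machine(), info["ID"], info["VERSION_ID"])
```

On the machine described here, `platform.machine()` would be expected to report `aarch64`, matching `uname -m`.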

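3. Set up Docker. There are two ways to do this: download it from the official site, or simply install it with yum.

4. Install Miniconda. Make sure to install the aarch64 build.

5. Configure everything by following the tutorial. I will not go into detail here and only give the record.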
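Picking the wrong architecture is an easy mistake at this step, so a small guard can help. The sketch below maps a machine type to an installer file name. The helper `miniconda_installer` is hypothetical, and the `Miniconda3-latest-Linux-<arch>.sh` naming scheme is an assumption that should be checked against the download page.

```python
import platform


def miniconda_installer(machine=None):
    """Return the installer file name for a Linux machine type (hypothetical helper)."""
    machine = machine or platform.machine()
    # Assumed naming scheme: Miniconda3-latest-Linux-<arch>.sh
    supported = {"aarch64", "x86_64"}
    if machine not in supported:
        raise ValueError(f"no known Miniconda installer for {machine!r}")
    return f"Miniconda3-latest-Linux-{machine}.sh"


print(miniconda_installer("aarch64"))
```

Raising on an unknown machine string means a misspelled architecture name fails immediately instead of producing a file name that does not exist.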

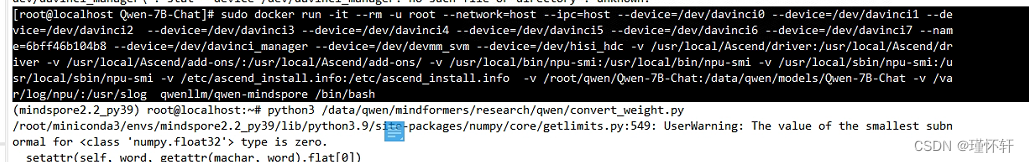

6. I did not start Docker with the command from the tutorial; I used the following command instead.

sudo docker run -it --rm -u root --network=host --ipc=host --device=/dev/davinci0 --device=/dev/davinci1 --device=/dev/davinci2 --device=/dev/davinci3 --device=/dev/davinci4 --device=/dev/davinci5 --device=/dev/davinci6 --device=/dev/davinci7 --name=6bff46b104b8 --device=/dev/davinci_manager --device=/dev/devmm_svm --device=/dev/hisi_hdc -v /usr/local/Ascend/driver:/usr/local/Ascend/driver -v /usr/local/Ascend/add-ons/:/usr/local/Ascend/add-ons/ -v /usr/local/bin/npu-smi:/usr/local/bin/npu-smi -v /usr/local/sbin/npu-smi:/usr/local/sbin/npu-smi -v /etc/ascend_install.info:/etc/ascend_install.info -v /root/qwen/Qwen-7B-Chat:/data/qwen/models/Qwen-7B-Chat -v /var/log/npu/:/usr/slog qwenllm/qwen-mindspore /bin/bash

Docker started successfully.

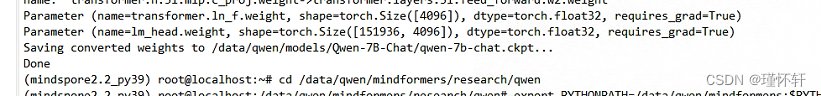
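The command in step 6 is long because it passes one `--device` flag per NPU and several bind mounts. A minimal sketch of assembling the same arguments programmatically is shown below. The function name `build_docker_cmd` and its parameters are illustrative assumptions; the flags, paths and image name are copied from the command in step 6, except that the `--name` option is left out.

```python
import shlex


def build_docker_cmd(num_npus, model_dir, image="qwenllm/qwen-mindspore"):
    """Assemble a `docker run` argument list that exposes NPUs 0..num_npus-1."""
    cmd = ["sudo", "docker", "run", "-it", "--rm", "-u", "root",
           "--network=host", "--ipc=host"]
    # One --device flag per NPU, then the shared management devices.
    cmd += [f"--device=/dev/davinci{i}" for i in range(num_npus)]
    cmd += ["--device=/dev/davinci_manager", "--device=/dev/devmm_svm",
            "--device=/dev/hisi_hdc"]
    # Host paths mounted at the same location inside the container.
    for path in ["/usr/local/Ascend/driver", "/usr/local/Ascend/add-ons/",
                 "/usr/local/bin/npu-smi", "/usr/local/sbin/npu-smi",
                 "/etc/ascend_install.info"]:
        cmd += ["-v", f"{path}:{path}"]
    # Model weights and NPU logs are mounted at different container paths.
    cmd += ["-v", f"{model_dir}:/data/qwen/models/Qwen-7B-Chat",
            "-v", "/var/log/npu/:/usr/slog",
            image, "/bin/bash"]
    return cmd


cmd = build_docker_cmd(8, "/root/qwen/Qwen-7B-Chat")
print(shlex.join(cmd))
```

Building the list this way makes it easier to change the number of exposed NPUs or the model directory without editing a single long line by hand.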

7. Convert the model

python3 /data/qwen/mindformers/research/qwen/convert_weight.py
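The log printed by this step pairs every original parameter name with the name it is converted to (for example, `mlp.w2` becomes `feed_forward.w3`). The sketch below reconstructs that renaming purely from the pairs visible in the log. It is not the code of `convert_weight.py`; the names `SUFFIX_MAP`, `LAYER_RE` and `rename` are hypothetical, and passing unknown names through unchanged is an assumption.

```python
import re

# Per-layer suffix renames observed in the conversion log.
SUFFIX_MAP = {
    "ln_1.weight": "attention_norm.weight",
    "attn.c_attn.weight": "attn.c_attn.weight",
    "attn.c_attn.bias": "attn.c_attn.bias",
    "attn.c_proj.weight": "attention.wo.weight",
    "ln_2.weight": "ffn_norm.weight",
    "mlp.w1.weight": "feed_forward.w1.weight",
    "mlp.w2.weight": "feed_forward.w3.weight",
    "mlp.c_proj.weight": "feed_forward.w2.weight",
}

# Matches names such as transformer.h.12.mlp.w1.weight.
LAYER_RE = re.compile(r"^transformer\.h\.(\d+)\.(.+)$")


def rename(name):
    """Map a source parameter name to the converted name seen in the log."""
    if name == "transformer.wte.weight":
        return "transformer.wte.embedding_weight"
    match = LAYER_RE.match(name)
    if match and match.group(2) in SUFFIX_MAP:
        layer, suffix = match.group(1), match.group(2)
        return f"transformer.layers.{layer}.{SUFFIX_MAP[suffix]}"
    # Assumption: names that do not appear in the log are left unchanged.
    return name


print(rename("transformer.h.0.mlp.w2.weight"))
```

Note that `mlp.w2` and `mlp.c_proj` do not keep their indices: the log shows them mapped to `feed_forward.w3` and `feed_forward.w2` respectively.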

- /root/miniconda3/envs/mindspore2.2_py39/lib/python3.9/site-packages/numpy/core/getlimits.py:549: UserWarning: The value of the smallest subnormal for <class 'numpy.float32'> type is zero.

- setattr(self, word, getattr(machar, word).flat[0])

- /root/miniconda3/envs/mindspore2.2_py39/lib/python3.9/site-packages/numpy/core/getlimits.py:89: UserWarning: The value of the smallest subnormal for <class 'numpy.float32'> type is zero.

- return self._float_to_str(self.smallest_subnormal)

- /root/miniconda3/envs/mindspore2.2_py39/lib/python3.9/site-packages/numpy/core/getlimits.py:549: UserWarning: The value of the smallest subnormal for <class 'numpy.float64'> type is zero.

- setattr(self, word, getattr(machar, word).flat[0])

- /root/miniconda3/envs/mindspore2.2_py39/lib/python3.9/site-packages/numpy/core/getlimits.py:89: UserWarning: The value of the smallest subnormal for <class 'numpy.float64'> type is zero.

- return self._float_to_str(self.smallest_subnormal)

- Warning: please make sure that you are using the latest codes and checkpoints, especially if you used Qwen-7B before 09.25.2023. Please use the latest model and code; if you started using Qwen-7B before September 25, take particular care not to use the wrong code and model.

- Flash attention will be disabled because it does NOT support fp32.

- Warning: import flash_attn rotary fail, please install FlashAttention rotary to get higher efficiency https://github.com/Dao-AILab/flash-attention/tree/main/csrc/rotary

- Warning: import flash_attn rms_norm fail, please install FlashAttention layer_norm to get higher efficiency https://github.com/Dao-AILab/flash-attention/tree/main/csrc/layer_norm

- Warning: import flash_attn fail, please install FlashAttention to get higher efficiency https://github.com/Dao-AILab/flash-attention

- Loading checkpoint shards: 100%|██████████| 8/8 [00:03<00:00, 2.35it/s]

- Parameter (name=transformer.wte.weight, shape=torch.Size([151936, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.wte.weight->transformer.wte.embedding_weight

- Parameter (name=transformer.h.0.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.0.ln_1.weight->transformer.layers.0.attention_norm.weight

- Parameter (name=transformer.h.0.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.0.attn.c_attn.weight->transformer.layers.0.attn.c_attn.weight

- Parameter (name=transformer.h.0.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.0.attn.c_attn.bias->transformer.layers.0.attn.c_attn.bias

- Parameter (name=transformer.h.0.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.0.attn.c_proj.weight->transformer.layers.0.attention.wo.weight

- Parameter (name=transformer.h.0.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.0.ln_2.weight->transformer.layers.0.ffn_norm.weight

- Parameter (name=transformer.h.0.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.0.mlp.w1.weight->transformer.layers.0.feed_forward.w1.weight

- Parameter (name=transformer.h.0.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.0.mlp.w2.weight->transformer.layers.0.feed_forward.w3.weight

- Parameter (name=transformer.h.0.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.0.mlp.c_proj.weight->transformer.layers.0.feed_forward.w2.weight

- Parameter (name=transformer.h.1.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.1.ln_1.weight->transformer.layers.1.attention_norm.weight

- Parameter (name=transformer.h.1.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.1.attn.c_attn.weight->transformer.layers.1.attn.c_attn.weight

- Parameter (name=transformer.h.1.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.1.attn.c_attn.bias->transformer.layers.1.attn.c_attn.bias

- Parameter (name=transformer.h.1.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.1.attn.c_proj.weight->transformer.layers.1.attention.wo.weight

- Parameter (name=transformer.h.1.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.1.ln_2.weight->transformer.layers.1.ffn_norm.weight

- Parameter (name=transformer.h.1.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.1.mlp.w1.weight->transformer.layers.1.feed_forward.w1.weight

- Parameter (name=transformer.h.1.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.1.mlp.w2.weight->transformer.layers.1.feed_forward.w3.weight

- Parameter (name=transformer.h.1.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.1.mlp.c_proj.weight->transformer.layers.1.feed_forward.w2.weight

- Parameter (name=transformer.h.2.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.2.ln_1.weight->transformer.layers.2.attention_norm.weight

- Parameter (name=transformer.h.2.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.2.attn.c_attn.weight->transformer.layers.2.attn.c_attn.weight

- Parameter (name=transformer.h.2.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.2.attn.c_attn.bias->transformer.layers.2.attn.c_attn.bias

- Parameter (name=transformer.h.2.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.2.attn.c_proj.weight->transformer.layers.2.attention.wo.weight

- Parameter (name=transformer.h.2.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.2.ln_2.weight->transformer.layers.2.ffn_norm.weight

- Parameter (name=transformer.h.2.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.2.mlp.w1.weight->transformer.layers.2.feed_forward.w1.weight

- Parameter (name=transformer.h.2.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.2.mlp.w2.weight->transformer.layers.2.feed_forward.w3.weight

- Parameter (name=transformer.h.2.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.2.mlp.c_proj.weight->transformer.layers.2.feed_forward.w2.weight

- Parameter (name=transformer.h.3.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.3.ln_1.weight->transformer.layers.3.attention_norm.weight

- Parameter (name=transformer.h.3.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.3.attn.c_attn.weight->transformer.layers.3.attn.c_attn.weight

- Parameter (name=transformer.h.3.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.3.attn.c_attn.bias->transformer.layers.3.attn.c_attn.bias

- Parameter (name=transformer.h.3.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.3.attn.c_proj.weight->transformer.layers.3.attention.wo.weight

- Parameter (name=transformer.h.3.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.3.ln_2.weight->transformer.layers.3.ffn_norm.weight

- Parameter (name=transformer.h.3.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.3.mlp.w1.weight->transformer.layers.3.feed_forward.w1.weight

- Parameter (name=transformer.h.3.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.3.mlp.w2.weight->transformer.layers.3.feed_forward.w3.weight

- Parameter (name=transformer.h.3.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.3.mlp.c_proj.weight->transformer.layers.3.feed_forward.w2.weight

- Parameter (name=transformer.h.4.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.4.ln_1.weight->transformer.layers.4.attention_norm.weight

- Parameter (name=transformer.h.4.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.4.attn.c_attn.weight->transformer.layers.4.attn.c_attn.weight

- Parameter (name=transformer.h.4.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.4.attn.c_attn.bias->transformer.layers.4.attn.c_attn.bias

- Parameter (name=transformer.h.4.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.4.attn.c_proj.weight->transformer.layers.4.attention.wo.weight

- Parameter (name=transformer.h.4.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.4.ln_2.weight->transformer.layers.4.ffn_norm.weight

- Parameter (name=transformer.h.4.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.4.mlp.w1.weight->transformer.layers.4.feed_forward.w1.weight

- Parameter (name=transformer.h.4.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.4.mlp.w2.weight->transformer.layers.4.feed_forward.w3.weight

- Parameter (name=transformer.h.4.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.4.mlp.c_proj.weight->transformer.layers.4.feed_forward.w2.weight

- Parameter (name=transformer.h.5.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.5.ln_1.weight->transformer.layers.5.attention_norm.weight

- Parameter (name=transformer.h.5.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.5.attn.c_attn.weight->transformer.layers.5.attn.c_attn.weight

- Parameter (name=transformer.h.5.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.5.attn.c_attn.bias->transformer.layers.5.attn.c_attn.bias

- Parameter (name=transformer.h.5.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.5.attn.c_proj.weight->transformer.layers.5.attention.wo.weight

- Parameter (name=transformer.h.5.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.5.ln_2.weight->transformer.layers.5.ffn_norm.weight

- Parameter (name=transformer.h.5.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.5.mlp.w1.weight->transformer.layers.5.feed_forward.w1.weight

- Parameter (name=transformer.h.5.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.5.mlp.w2.weight->transformer.layers.5.feed_forward.w3.weight

- Parameter (name=transformer.h.5.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.5.mlp.c_proj.weight->transformer.layers.5.feed_forward.w2.weight

- Parameter (name=transformer.h.6.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.6.ln_1.weight->transformer.layers.6.attention_norm.weight

- Parameter (name=transformer.h.6.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.6.attn.c_attn.weight->transformer.layers.6.attn.c_attn.weight

- Parameter (name=transformer.h.6.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.6.attn.c_attn.bias->transformer.layers.6.attn.c_attn.bias

- Parameter (name=transformer.h.6.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.6.attn.c_proj.weight->transformer.layers.6.attention.wo.weight

- Parameter (name=transformer.h.6.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.6.ln_2.weight->transformer.layers.6.ffn_norm.weight

- Parameter (name=transformer.h.6.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.6.mlp.w1.weight->transformer.layers.6.feed_forward.w1.weight

- Parameter (name=transformer.h.6.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.6.mlp.w2.weight->transformer.layers.6.feed_forward.w3.weight

- Parameter (name=transformer.h.6.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.6.mlp.c_proj.weight->transformer.layers.6.feed_forward.w2.weight

- Parameter (name=transformer.h.7.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.7.ln_1.weight->transformer.layers.7.attention_norm.weight

- Parameter (name=transformer.h.7.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.7.attn.c_attn.weight->transformer.layers.7.attn.c_attn.weight

- Parameter (name=transformer.h.7.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.7.attn.c_attn.bias->transformer.layers.7.attn.c_attn.bias

- Parameter (name=transformer.h.7.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.7.attn.c_proj.weight->transformer.layers.7.attention.wo.weight

- Parameter (name=transformer.h.7.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.7.ln_2.weight->transformer.layers.7.ffn_norm.weight

- Parameter (name=transformer.h.7.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.7.mlp.w1.weight->transformer.layers.7.feed_forward.w1.weight

- Parameter (name=transformer.h.7.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.7.mlp.w2.weight->transformer.layers.7.feed_forward.w3.weight

- Parameter (name=transformer.h.7.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.7.mlp.c_proj.weight->transformer.layers.7.feed_forward.w2.weight

- Parameter (name=transformer.h.8.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.8.ln_1.weight->transformer.layers.8.attention_norm.weight

- Parameter (name=transformer.h.8.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.8.attn.c_attn.weight->transformer.layers.8.attn.c_attn.weight

- Parameter (name=transformer.h.8.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.8.attn.c_attn.bias->transformer.layers.8.attn.c_attn.bias

- Parameter (name=transformer.h.8.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.8.attn.c_proj.weight->transformer.layers.8.attention.wo.weight

- Parameter (name=transformer.h.8.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.8.ln_2.weight->transformer.layers.8.ffn_norm.weight

- Parameter (name=transformer.h.8.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.8.mlp.w1.weight->transformer.layers.8.feed_forward.w1.weight

- Parameter (name=transformer.h.8.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.8.mlp.w2.weight->transformer.layers.8.feed_forward.w3.weight

- Parameter (name=transformer.h.8.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.8.mlp.c_proj.weight->transformer.layers.8.feed_forward.w2.weight

- Parameter (name=transformer.h.9.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.9.ln_1.weight->transformer.layers.9.attention_norm.weight

- Parameter (name=transformer.h.9.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.9.attn.c_attn.weight->transformer.layers.9.attn.c_attn.weight

- Parameter (name=transformer.h.9.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.9.attn.c_attn.bias->transformer.layers.9.attn.c_attn.bias

- Parameter (name=transformer.h.9.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.9.attn.c_proj.weight->transformer.layers.9.attention.wo.weight

- Parameter (name=transformer.h.9.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.9.ln_2.weight->transformer.layers.9.ffn_norm.weight

- Parameter (name=transformer.h.9.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.9.mlp.w1.weight->transformer.layers.9.feed_forward.w1.weight

- Parameter (name=transformer.h.9.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.9.mlp.w2.weight->transformer.layers.9.feed_forward.w3.weight

- Parameter (name=transformer.h.9.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.9.mlp.c_proj.weight->transformer.layers.9.feed_forward.w2.weight

- Parameter (name=transformer.h.10.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.10.ln_1.weight->transformer.layers.10.attention_norm.weight

- Parameter (name=transformer.h.10.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.10.attn.c_attn.weight->transformer.layers.10.attn.c_attn.weight

- Parameter (name=transformer.h.10.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.10.attn.c_attn.bias->transformer.layers.10.attn.c_attn.bias

- Parameter (name=transformer.h.10.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.10.attn.c_proj.weight->transformer.layers.10.attention.wo.weight

- Parameter (name=transformer.h.10.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.10.ln_2.weight->transformer.layers.10.ffn_norm.weight

- Parameter (name=transformer.h.10.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.10.mlp.w1.weight->transformer.layers.10.feed_forward.w1.weight

- Parameter (name=transformer.h.10.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.10.mlp.w2.weight->transformer.layers.10.feed_forward.w3.weight

- Parameter (name=transformer.h.10.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.10.mlp.c_proj.weight->transformer.layers.10.feed_forward.w2.weight

- Parameter (name=transformer.h.11.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.11.ln_1.weight->transformer.layers.11.attention_norm.weight

- Parameter (name=transformer.h.11.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.11.attn.c_attn.weight->transformer.layers.11.attn.c_attn.weight

- Parameter (name=transformer.h.11.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.11.attn.c_attn.bias->transformer.layers.11.attn.c_attn.bias

- Parameter (name=transformer.h.11.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.11.attn.c_proj.weight->transformer.layers.11.attention.wo.weight

- Parameter (name=transformer.h.11.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.11.ln_2.weight->transformer.layers.11.ffn_norm.weight

- Parameter (name=transformer.h.11.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.11.mlp.w1.weight->transformer.layers.11.feed_forward.w1.weight

- Parameter (name=transformer.h.11.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.11.mlp.w2.weight->transformer.layers.11.feed_forward.w3.weight

- Parameter (name=transformer.h.11.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.11.mlp.c_proj.weight->transformer.layers.11.feed_forward.w2.weight

- Parameter (name=transformer.h.12.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.12.ln_1.weight->transformer.layers.12.attention_norm.weight

- Parameter (name=transformer.h.12.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.12.attn.c_attn.weight->transformer.layers.12.attn.c_attn.weight

- Parameter (name=transformer.h.12.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.12.attn.c_attn.bias->transformer.layers.12.attn.c_attn.bias

- Parameter (name=transformer.h.12.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.12.attn.c_proj.weight->transformer.layers.12.attention.wo.weight

- Parameter (name=transformer.h.12.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.12.ln_2.weight->transformer.layers.12.ffn_norm.weight

- Parameter (name=transformer.h.12.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.12.mlp.w1.weight->transformer.layers.12.feed_forward.w1.weight

- Parameter (name=transformer.h.12.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.12.mlp.w2.weight->transformer.layers.12.feed_forward.w3.weight

- Parameter (name=transformer.h.12.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.12.mlp.c_proj.weight->transformer.layers.12.feed_forward.w2.weight

- Parameter (name=transformer.h.13.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.13.ln_1.weight->transformer.layers.13.attention_norm.weight

- Parameter (name=transformer.h.13.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.13.attn.c_attn.weight->transformer.layers.13.attn.c_attn.weight

- Parameter (name=transformer.h.13.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.13.attn.c_attn.bias->transformer.layers.13.attn.c_attn.bias

- Parameter (name=transformer.h.13.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.13.attn.c_proj.weight->transformer.layers.13.attention.wo.weight

- Parameter (name=transformer.h.13.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.13.ln_2.weight->transformer.layers.13.ffn_norm.weight

- Parameter (name=transformer.h.13.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.13.mlp.w1.weight->transformer.layers.13.feed_forward.w1.weight

- Parameter (name=transformer.h.13.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.13.mlp.w2.weight->transformer.layers.13.feed_forward.w3.weight

- Parameter (name=transformer.h.13.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.13.mlp.c_proj.weight->transformer.layers.13.feed_forward.w2.weight

- Parameter (name=transformer.h.14.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.14.ln_1.weight->transformer.layers.14.attention_norm.weight

- Parameter (name=transformer.h.14.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.14.attn.c_attn.weight->transformer.layers.14.attn.c_attn.weight

- Parameter (name=transformer.h.14.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.14.attn.c_attn.bias->transformer.layers.14.attn.c_attn.bias

- Parameter (name=transformer.h.14.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.14.attn.c_proj.weight->transformer.layers.14.attention.wo.weight

- Parameter (name=transformer.h.14.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.14.ln_2.weight->transformer.layers.14.ffn_norm.weight

- Parameter (name=transformer.h.14.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.14.mlp.w1.weight->transformer.layers.14.feed_forward.w1.weight

- Parameter (name=transformer.h.14.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.14.mlp.w2.weight->transformer.layers.14.feed_forward.w3.weight

- Parameter (name=transformer.h.14.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.14.mlp.c_proj.weight->transformer.layers.14.feed_forward.w2.weight

- Parameter (name=transformer.h.15.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.15.ln_1.weight->transformer.layers.15.attention_norm.weight

- Parameter (name=transformer.h.15.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.15.attn.c_attn.weight->transformer.layers.15.attn.c_attn.weight

- Parameter (name=transformer.h.15.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.15.attn.c_attn.bias->transformer.layers.15.attn.c_attn.bias

- Parameter (name=transformer.h.15.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.15.attn.c_proj.weight->transformer.layers.15.attention.wo.weight

- Parameter (name=transformer.h.15.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.15.ln_2.weight->transformer.layers.15.ffn_norm.weight

- Parameter (name=transformer.h.15.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.15.mlp.w1.weight->transformer.layers.15.feed_forward.w1.weight

- Parameter (name=transformer.h.15.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.15.mlp.w2.weight->transformer.layers.15.feed_forward.w3.weight

- Parameter (name=transformer.h.15.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.15.mlp.c_proj.weight->transformer.layers.15.feed_forward.w2.weight

- Parameter (name=transformer.h.16.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.16.ln_1.weight->transformer.layers.16.attention_norm.weight

- Parameter (name=transformer.h.16.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.16.attn.c_attn.weight->transformer.layers.16.attn.c_attn.weight

- Parameter (name=transformer.h.16.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.16.attn.c_attn.bias->transformer.layers.16.attn.c_attn.bias

- Parameter (name=transformer.h.16.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.16.attn.c_proj.weight->transformer.layers.16.attention.wo.weight

- Parameter (name=transformer.h.16.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.16.ln_2.weight->transformer.layers.16.ffn_norm.weight

- Parameter (name=transformer.h.16.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.16.mlp.w1.weight->transformer.layers.16.feed_forward.w1.weight

- Parameter (name=transformer.h.16.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.16.mlp.w2.weight->transformer.layers.16.feed_forward.w3.weight

- Parameter (name=transformer.h.16.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.16.mlp.c_proj.weight->transformer.layers.16.feed_forward.w2.weight

- Parameter (name=transformer.h.17.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.17.ln_1.weight->transformer.layers.17.attention_norm.weight

- Parameter (name=transformer.h.17.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.17.attn.c_attn.weight->transformer.layers.17.attn.c_attn.weight

- Parameter (name=transformer.h.17.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.17.attn.c_attn.bias->transformer.layers.17.attn.c_attn.bias

- Parameter (name=transformer.h.17.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.17.attn.c_proj.weight->transformer.layers.17.attention.wo.weight

- Parameter (name=transformer.h.17.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.17.ln_2.weight->transformer.layers.17.ffn_norm.weight

- Parameter (name=transformer.h.17.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.17.mlp.w1.weight->transformer.layers.17.feed_forward.w1.weight

- Parameter (name=transformer.h.17.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.17.mlp.w2.weight->transformer.layers.17.feed_forward.w3.weight

- Parameter (name=transformer.h.17.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.17.mlp.c_proj.weight->transformer.layers.17.feed_forward.w2.weight

- Parameter (name=transformer.h.18.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.18.ln_1.weight->transformer.layers.18.attention_norm.weight

- Parameter (name=transformer.h.18.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.18.attn.c_attn.weight->transformer.layers.18.attn.c_attn.weight

- Parameter (name=transformer.h.18.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.18.attn.c_attn.bias->transformer.layers.18.attn.c_attn.bias

- Parameter (name=transformer.h.18.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.18.attn.c_proj.weight->transformer.layers.18.attention.wo.weight

- Parameter (name=transformer.h.18.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.18.ln_2.weight->transformer.layers.18.ffn_norm.weight

- Parameter (name=transformer.h.18.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.18.mlp.w1.weight->transformer.layers.18.feed_forward.w1.weight

- Parameter (name=transformer.h.18.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.18.mlp.w2.weight->transformer.layers.18.feed_forward.w3.weight

- Parameter (name=transformer.h.18.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.18.mlp.c_proj.weight->transformer.layers.18.feed_forward.w2.weight

- Parameter (name=transformer.h.19.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.19.ln_1.weight->transformer.layers.19.attention_norm.weight

- Parameter (name=transformer.h.19.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.19.attn.c_attn.weight->transformer.layers.19.attn.c_attn.weight

- Parameter (name=transformer.h.19.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.19.attn.c_attn.bias->transformer.layers.19.attn.c_attn.bias

- Parameter (name=transformer.h.19.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.19.attn.c_proj.weight->transformer.layers.19.attention.wo.weight

- Parameter (name=transformer.h.19.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.19.ln_2.weight->transformer.layers.19.ffn_norm.weight

- Parameter (name=transformer.h.19.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.19.mlp.w1.weight->transformer.layers.19.feed_forward.w1.weight

- Parameter (name=transformer.h.19.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.19.mlp.w2.weight->transformer.layers.19.feed_forward.w3.weight

- Parameter (name=transformer.h.19.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.19.mlp.c_proj.weight->transformer.layers.19.feed_forward.w2.weight

- Parameter (name=transformer.h.20.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.20.ln_1.weight->transformer.layers.20.attention_norm.weight

- Parameter (name=transformer.h.20.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.20.attn.c_attn.weight->transformer.layers.20.attn.c_attn.weight

- Parameter (name=transformer.h.20.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.20.attn.c_attn.bias->transformer.layers.20.attn.c_attn.bias

- Parameter (name=transformer.h.20.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.20.attn.c_proj.weight->transformer.layers.20.attention.wo.weight

- Parameter (name=transformer.h.20.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.20.ln_2.weight->transformer.layers.20.ffn_norm.weight

- Parameter (name=transformer.h.20.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.20.mlp.w1.weight->transformer.layers.20.feed_forward.w1.weight

- Parameter (name=transformer.h.20.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.20.mlp.w2.weight->transformer.layers.20.feed_forward.w3.weight

- Parameter (name=transformer.h.20.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.20.mlp.c_proj.weight->transformer.layers.20.feed_forward.w2.weight

- Parameter (name=transformer.h.21.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.21.ln_1.weight->transformer.layers.21.attention_norm.weight

- Parameter (name=transformer.h.21.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.21.attn.c_attn.weight->transformer.layers.21.attn.c_attn.weight

- Parameter (name=transformer.h.21.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.21.attn.c_attn.bias->transformer.layers.21.attn.c_attn.bias

- Parameter (name=transformer.h.21.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.21.attn.c_proj.weight->transformer.layers.21.attention.wo.weight

- Parameter (name=transformer.h.21.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.21.ln_2.weight->transformer.layers.21.ffn_norm.weight

- Parameter (name=transformer.h.21.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.21.mlp.w1.weight->transformer.layers.21.feed_forward.w1.weight

- Parameter (name=transformer.h.21.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.21.mlp.w2.weight->transformer.layers.21.feed_forward.w3.weight

- Parameter (name=transformer.h.21.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.21.mlp.c_proj.weight->transformer.layers.21.feed_forward.w2.weight

- Parameter (name=transformer.h.22.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.22.ln_1.weight->transformer.layers.22.attention_norm.weight

- Parameter (name=transformer.h.22.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.22.attn.c_attn.weight->transformer.layers.22.attn.c_attn.weight

- Parameter (name=transformer.h.22.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.22.attn.c_attn.bias->transformer.layers.22.attn.c_attn.bias

- Parameter (name=transformer.h.22.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.22.attn.c_proj.weight->transformer.layers.22.attention.wo.weight

- Parameter (name=transformer.h.22.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.22.ln_2.weight->transformer.layers.22.ffn_norm.weight

- Parameter (name=transformer.h.22.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.22.mlp.w1.weight->transformer.layers.22.feed_forward.w1.weight

- Parameter (name=transformer.h.22.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.22.mlp.w2.weight->transformer.layers.22.feed_forward.w3.weight

- Parameter (name=transformer.h.22.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.22.mlp.c_proj.weight->transformer.layers.22.feed_forward.w2.weight

- Parameter (name=transformer.h.23.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.23.ln_1.weight->transformer.layers.23.attention_norm.weight

- Parameter (name=transformer.h.23.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.23.attn.c_attn.weight->transformer.layers.23.attn.c_attn.weight

- Parameter (name=transformer.h.23.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.23.attn.c_attn.bias->transformer.layers.23.attn.c_attn.bias

- Parameter (name=transformer.h.23.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.23.attn.c_proj.weight->transformer.layers.23.attention.wo.weight

- Parameter (name=transformer.h.23.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.23.ln_2.weight->transformer.layers.23.ffn_norm.weight

- Parameter (name=transformer.h.23.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.23.mlp.w1.weight->transformer.layers.23.feed_forward.w1.weight

- Parameter (name=transformer.h.23.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.23.mlp.w2.weight->transformer.layers.23.feed_forward.w3.weight

- Parameter (name=transformer.h.23.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.23.mlp.c_proj.weight->transformer.layers.23.feed_forward.w2.weight

- Parameter (name=transformer.h.24.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.24.ln_1.weight->transformer.layers.24.attention_norm.weight

- Parameter (name=transformer.h.24.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.24.attn.c_attn.weight->transformer.layers.24.attn.c_attn.weight

- Parameter (name=transformer.h.24.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.24.attn.c_attn.bias->transformer.layers.24.attn.c_attn.bias

- Parameter (name=transformer.h.24.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.24.attn.c_proj.weight->transformer.layers.24.attention.wo.weight

- Parameter (name=transformer.h.24.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.24.ln_2.weight->transformer.layers.24.ffn_norm.weight

- Parameter (name=transformer.h.24.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.24.mlp.w1.weight->transformer.layers.24.feed_forward.w1.weight

- Parameter (name=transformer.h.24.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.24.mlp.w2.weight->transformer.layers.24.feed_forward.w3.weight

- Parameter (name=transformer.h.24.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.24.mlp.c_proj.weight->transformer.layers.24.feed_forward.w2.weight

- Parameter (name=transformer.h.25.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.25.ln_1.weight->transformer.layers.25.attention_norm.weight

- Parameter (name=transformer.h.25.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.25.attn.c_attn.weight->transformer.layers.25.attn.c_attn.weight

- Parameter (name=transformer.h.25.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.25.attn.c_attn.bias->transformer.layers.25.attn.c_attn.bias

- Parameter (name=transformer.h.25.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.25.attn.c_proj.weight->transformer.layers.25.attention.wo.weight

- Parameter (name=transformer.h.25.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.25.ln_2.weight->transformer.layers.25.ffn_norm.weight

- Parameter (name=transformer.h.25.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.25.mlp.w1.weight->transformer.layers.25.feed_forward.w1.weight

- Parameter (name=transformer.h.25.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.25.mlp.w2.weight->transformer.layers.25.feed_forward.w3.weight

- Parameter (name=transformer.h.25.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.25.mlp.c_proj.weight->transformer.layers.25.feed_forward.w2.weight

- Parameter (name=transformer.h.26.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.26.ln_1.weight->transformer.layers.26.attention_norm.weight

- Parameter (name=transformer.h.26.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.26.attn.c_attn.weight->transformer.layers.26.attn.c_attn.weight

- Parameter (name=transformer.h.26.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.26.attn.c_attn.bias->transformer.layers.26.attn.c_attn.bias

- Parameter (name=transformer.h.26.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.26.attn.c_proj.weight->transformer.layers.26.attention.wo.weight

- Parameter (name=transformer.h.26.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.26.ln_2.weight->transformer.layers.26.ffn_norm.weight

- Parameter (name=transformer.h.26.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.26.mlp.w1.weight->transformer.layers.26.feed_forward.w1.weight

- Parameter (name=transformer.h.26.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.26.mlp.w2.weight->transformer.layers.26.feed_forward.w3.weight

- Parameter (name=transformer.h.26.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.26.mlp.c_proj.weight->transformer.layers.26.feed_forward.w2.weight

- Parameter (name=transformer.h.27.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.27.ln_1.weight->transformer.layers.27.attention_norm.weight

- Parameter (name=transformer.h.27.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.27.attn.c_attn.weight->transformer.layers.27.attn.c_attn.weight

- Parameter (name=transformer.h.27.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.27.attn.c_attn.bias->transformer.layers.27.attn.c_attn.bias

- Parameter (name=transformer.h.27.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.27.attn.c_proj.weight->transformer.layers.27.attention.wo.weight

- Parameter (name=transformer.h.27.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.27.ln_2.weight->transformer.layers.27.ffn_norm.weight

- Parameter (name=transformer.h.27.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.27.mlp.w1.weight->transformer.layers.27.feed_forward.w1.weight

- Parameter (name=transformer.h.27.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.27.mlp.w2.weight->transformer.layers.27.feed_forward.w3.weight

- Parameter (name=transformer.h.27.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.27.mlp.c_proj.weight->transformer.layers.27.feed_forward.w2.weight

- Parameter (name=transformer.h.28.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.28.ln_1.weight->transformer.layers.28.attention_norm.weight

- Parameter (name=transformer.h.28.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.28.attn.c_attn.weight->transformer.layers.28.attn.c_attn.weight

- Parameter (name=transformer.h.28.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.28.attn.c_attn.bias->transformer.layers.28.attn.c_attn.bias

- Parameter (name=transformer.h.28.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.28.attn.c_proj.weight->transformer.layers.28.attention.wo.weight

- Parameter (name=transformer.h.28.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.28.ln_2.weight->transformer.layers.28.ffn_norm.weight

- Parameter (name=transformer.h.28.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.28.mlp.w1.weight->transformer.layers.28.feed_forward.w1.weight

- Parameter (name=transformer.h.28.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.28.mlp.w2.weight->transformer.layers.28.feed_forward.w3.weight

- Parameter (name=transformer.h.28.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.28.mlp.c_proj.weight->transformer.layers.28.feed_forward.w2.weight

- Parameter (name=transformer.h.29.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.29.ln_1.weight->transformer.layers.29.attention_norm.weight

- Parameter (name=transformer.h.29.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.29.attn.c_attn.weight->transformer.layers.29.attn.c_attn.weight

- Parameter (name=transformer.h.29.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.29.attn.c_attn.bias->transformer.layers.29.attn.c_attn.bias

- Parameter (name=transformer.h.29.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.29.attn.c_proj.weight->transformer.layers.29.attention.wo.weight

- Parameter (name=transformer.h.29.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.29.ln_2.weight->transformer.layers.29.ffn_norm.weight

- Parameter (name=transformer.h.29.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.29.mlp.w1.weight->transformer.layers.29.feed_forward.w1.weight

- Parameter (name=transformer.h.29.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.29.mlp.w2.weight->transformer.layers.29.feed_forward.w3.weight

- Parameter (name=transformer.h.29.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.29.mlp.c_proj.weight->transformer.layers.29.feed_forward.w2.weight

- Parameter (name=transformer.h.30.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.30.ln_1.weight->transformer.layers.30.attention_norm.weight

- Parameter (name=transformer.h.30.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.30.attn.c_attn.weight->transformer.layers.30.attn.c_attn.weight

- Parameter (name=transformer.h.30.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.30.attn.c_attn.bias->transformer.layers.30.attn.c_attn.bias

- Parameter (name=transformer.h.30.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.30.attn.c_proj.weight->transformer.layers.30.attention.wo.weight

- Parameter (name=transformer.h.30.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.30.ln_2.weight->transformer.layers.30.ffn_norm.weight

- Parameter (name=transformer.h.30.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.30.mlp.w1.weight->transformer.layers.30.feed_forward.w1.weight

- Parameter (name=transformer.h.30.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.30.mlp.w2.weight->transformer.layers.30.feed_forward.w3.weight

- Parameter (name=transformer.h.30.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.30.mlp.c_proj.weight->transformer.layers.30.feed_forward.w2.weight

- Parameter (name=transformer.h.31.ln_1.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.31.ln_1.weight->transformer.layers.31.attention_norm.weight

- Parameter (name=transformer.h.31.attn.c_attn.weight, shape=torch.Size([12288, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.31.attn.c_attn.weight->transformer.layers.31.attn.c_attn.weight

- Parameter (name=transformer.h.31.attn.c_attn.bias, shape=torch.Size([12288]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.31.attn.c_attn.bias->transformer.layers.31.attn.c_attn.bias

- Parameter (name=transformer.h.31.attn.c_proj.weight, shape=torch.Size([4096, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.31.attn.c_proj.weight->transformer.layers.31.attention.wo.weight

- Parameter (name=transformer.h.31.ln_2.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.31.ln_2.weight->transformer.layers.31.ffn_norm.weight

- Parameter (name=transformer.h.31.mlp.w1.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.31.mlp.w1.weight->transformer.layers.31.feed_forward.w1.weight

- Parameter (name=transformer.h.31.mlp.w2.weight, shape=torch.Size([11008, 4096]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.31.mlp.w2.weight->transformer.layers.31.feed_forward.w3.weight

- Parameter (name=transformer.h.31.mlp.c_proj.weight, shape=torch.Size([4096, 11008]), dtype=torch.float32, requires_grad=True)

- name: transformer.h.31.mlp.c_proj.weight->transformer.layers.31.feed_forward.w2.weight

- Parameter (name=transformer.ln_f.weight, shape=torch.Size([4096]), dtype=torch.float32, requires_grad=True)

- Parameter (name=lm_head.weight, shape=torch.Size([151936, 4096]), dtype=torch.float32, requires_grad=True)

- Saving converted weights to /data/qwen/models/Qwen-7B-Chat/qwen-7b-chat.ckpt...

- Done
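The renaming pattern visible in the conversion log can be summarised in a few lines of Python. This is only a sketch inferred from the `name: old->new` lines printed above, not the conversion script itself; `RENAME_RULES` and `rename_param` are hypothetical names introduced for illustration. Parameters with no rename line in this part of the log (for example `transformer.ln_f.weight` and `lm_head.weight`) are left unchanged here.

```python
# Substring rules inferred from the "name: old->new" lines in the log.
# Illustrative only; not copied from the real conversion script.
RENAME_RULES = [
    (".h.", ".layers."),
    ("attn.c_proj.", "attention.wo."),
    ("ln_1.", "attention_norm."),
    ("ln_2.", "ffn_norm."),
    ("mlp.w1.", "feed_forward.w1."),
    ("mlp.w2.", "feed_forward.w3."),      # note: w2 becomes w3
    ("mlp.c_proj.", "feed_forward.w2."),  # and c_proj becomes w2
]


def rename_param(name: str) -> str:
    """Apply every substring rule in order and return the resulting name."""
    for old, new in RENAME_RULES:
        name = name.replace(old, new)
    return name


if __name__ == "__main__":
    for src in (
        "transformer.h.15.mlp.w2.weight",
        "transformer.h.16.attn.c_proj.weight",
        "transformer.h.16.attn.c_attn.bias",
    ):
        print(f"{src} -> {rename_param(src)}")
```

Note that, in the log, `attn.c_attn` keeps its `attn.c_attn` suffix and only the `.h.` segment changes, while `mlp.w2` and `mlp.c_proj` effectively swap positions as `feed_forward.w3` and `feed_forward.w2`.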

Set the path and launch the inference script.

cd /data/qwen/mindformers/research/qwen

export PYTHONPATH=/data/qwen/mindformers:$PYTHONPATH

python3 infer_qwen.py

- /root/miniconda3/envs/mindspore2.2_py39/lib/python3.9/site-packages/numpy/core/getlimits.py:549: UserWarning: The value of the smallest subnormal for <class 'numpy.float32'> type is zero.

- setattr(self, word, getattr(machar, word).flat[0])

- /root/miniconda3/envs/mindspore2.2_py39/lib/python3.9/site-packages/numpy/core/getlimits.py:89: UserWarning: The value of the smallest subnormal for <class 'numpy.float32'> type is zero.

- return self._float_to_str(self.smallest_subnormal)

- /root/miniconda3/envs/mindspore2.2_py39/lib/python3.9/site-packages/numpy/core/getlimits.py:549: UserWarning: The value of the smallest subnormal for <class 'numpy.float64'> type is zero.

- setattr(self, word, getattr(machar, word).flat[0])

- /root/miniconda3/envs/mindspore2.2_py39/lib/python3.9/site-packages/numpy/core/getlimits.py:89: UserWarning: The value of the smallest subnormal for <class 'numpy.float64'> type is zero.

- return self._float_to_str(self.smallest_subnormal)

- [Warning]Can not find libascendalog.so

- [Warning]Can not find libascendalog.so

- Traceback (most recent call last):

- File "/data/qwen/mindformers/research/qwen/infer_qwen.py", line 4, in <module>

- from mindformers.trainer import Trainer

- File "/data/qwen/mindformers/mindformers/__init__.py", line 17, in <module>

- from mindformers import core, auto_class, dataset, \

- File "/data/qwen/mindformers/mindformers/core/__init__.py", line 19, in <module>

- from .metric import build_metric

- File "/data/qwen/mindformers/mindformers/core/metric/__init__.py", line 17, in <module>

- from .metric import *

- File "/data/qwen/mindformers/mindformers/core/metric/metric.py", line 37, in <module>

- from mindformers.models import BasicTokenizer

- File "/data/qwen/mindformers/mindformers/models/__init__.py", line 21, in <module>

- from .blip2 import *

- File "/data/qwen/mindformers/mindformers/models/blip2/__init__.py", line 17, in <module>

- from .blip2_config import Blip2Config

- File "/data/qwen/mindformers/mindformers/models/blip2/blip2_config.py", line 23, in <module>

- from mindformers.models.llama import LlamaConfig

- File "/data/qwen/mindformers/mindformers/models/llama/__init__.py", line 18, in <module>

- from .llama import LlamaForCausalLM, LlamaForCausalLMWithLora, LlamaModel

- File "/data/qwen/mindformers/mindformers/models/llama/llama.py", line 30, in <module>

- from mindspore.nn.layer.flash_attention import FlashAttention

- File "/root/miniconda3/envs/mindspore2.2_py39/lib/python3.9/site-packages/mindspore/nn/layer/flash_attention.py", line 24, in <module>

- from mindspore.ops._op_impl._custom_op.flash_attention.flash_attention_impl import get_flash_attention

- File "/root/miniconda3/envs/mindspore2.2_py39/lib/python3.9/site-packages/mindspore/ops/_op_impl/_custom_op/__init__.py", line 17, in <module>

- from mindspore.ops._op_impl._custom_op.dsd_impl import dsd_matmul

- File "/root/miniconda3/envs/mindspore2.2_py39/lib/python3.9/site-packages/mindspore/ops/_op_impl/_custom_op/dsd_impl.py", line 17, in <module>

- from te import tik

- File "/usr/local/Ascend/ascend-toolkit/latest/python/site-packages/te/__init__.py", line 128, in <module>

- from tbe import tvm

- File "/usr/local/Ascend/ascend-toolkit/latest/python/site-packages/tbe/__init__.py", line 44, in <module>

- import tvm

- File "/usr/local/Ascend/ascend-toolkit/7.0.RC1/python/site-packages/tbe/tvm/__init__.py", line 26, in <module>

- from ._ffi.base import TVMError, __version__, _RUNTIME_ONLY

- File "/usr/local/Ascend/ascend-toolkit/7.0.RC1/python/site-packages/tbe/tvm/_ffi/__init__.py", line 28, in <module>

- from .base import register_error

- File "/usr/local/Ascend/ascend-toolkit/7.0.RC1/python/site-packages/tbe/tvm/_ffi/base.py", line 72, in <module>

- _LIB, _LIB_NAME = _load_lib()

- File "/usr/local/Ascend/ascend-toolkit/7.0.RC1/python/site-packages/tbe/tvm/_ffi/base.py", line 52, in _load_lib

- lib_path = libinfo.find_lib_path()

- File "/usr/local/Ascend/ascend-toolkit/7.0.RC1/python/site-packages/tbe/tvm/_ffi/libinfo.py", line 147, in find_lib_path

- raise RuntimeError(message)

- RuntimeError: Cannot find the files.

- List of candidates:

- /root/miniconda3/envs/mindspore2.2_py39/lib/python3.9/site-packages/mindspore/lib/plugin/cpu/libtvm.so

- /usr/local/Ascend/driver/libtvm.so

- /data/qwen/mindformers/research/qwen/libtvm.so

- /usr/local/Ascend/ascend-toolkit/latest/aarch64-linux/bin/libtvm.so

- /usr/local/Ascend/ascend-toolkit/7.0.RC1/aarch64-linux/ccec_compiler/bin/libtvm.so

- /root/miniconda3/envs/mindspore2.2_py39/bin/libtvm.so

- /root/miniconda3/condabin/libtvm.so

- /usr/local/sbin/libtvm.so

- /usr/local/bin/libtvm.so

- /usr/sbin/libtvm.so

- /usr/bin/libtvm.so

- /usr/sbin/libtvm.so

- /usr/bin/libtvm.so

- /usr/local/Ascend/ascend-toolkit/7.0.RC1/python/site-packages/tbe/libtvm.so

- /usr/local/Ascend/ascend-toolkit/7.0.RC1/libtvm.so

- /root/miniconda3/envs/mindspore2.2_py39/lib/python3.9/site-packages/mindspore/lib/plugin/cpu/libtvm_runtime.so

- /usr/local/Ascend/driver/libtvm_runtime.so

- /data/qwen/mindformers/research/qwen/libtvm_runtime.so

- /usr/local/Ascend/ascend-toolkit/latest/aarch64-linux/bin/libtvm_runtime.so

- /usr/local/Ascend/ascend-toolkit/7.0.RC1/aarch64-linux/ccec_compiler/bin/libtvm_runtime.so

- /root/miniconda3/envs/mindspore2.2_py39/bin/libtvm_runtime.so

- /root/miniconda3/condabin/libtvm_runtime.so

- /usr/local/sbin/libtvm_runtime.so

- /usr/local/bin/libtvm_runtime.so

- /usr/sbin/libtvm_runtime.so

- /usr/bin/libtvm_runtime.so

- /usr/sbin/libtvm_runtime.so

- /usr/bin/libtvm_runtime.so

- /usr/local/Ascend/ascend-toolkit/7.0.RC1/python/site-packages/tbe/libtvm_runtime.so

- /usr/local/Ascend/ascend-toolkit/7.0.RC1/libtvm_runtime.so

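Judging by the error message, files required for this chip architecture appear to be missing from the installation: the TBE loader could not find libtvm.so or libtvm_runtime.so in any of the candidate paths. I have not investigated this further for now.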
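To check whether the two libraries named in the traceback exist anywhere on the machine, and not only in the candidate paths the loader tried, a small search script could help. This is a minimal sketch: `find_libs` is a hypothetical helper, and the search root `/usr/local/Ascend/ascend-toolkit` is an assumption taken from the paths in the traceback, so it may need adjusting on other installs.

```python
import os
from typing import Dict, Iterable, List

# Library names taken from the candidate list in the traceback.
TVM_LIBS = ("libtvm.so", "libtvm_runtime.so")


def find_libs(roots: Iterable[str],
              names: Iterable[str] = TVM_LIBS) -> Dict[str, List[str]]:
    """Walk each existing root and collect full paths of files whose name matches."""
    wanted = set(names)
    found: Dict[str, List[str]] = {name: [] for name in wanted}
    for root in roots:
        if not os.path.isdir(root):
            continue  # skip roots that do not exist on this machine
        # os.walk does not follow symlinked directories by default.
        for dirpath, _dirnames, filenames in os.walk(root):
            for filename in filenames:
                if filename in wanted:
                    found[filename].append(os.path.join(dirpath, filename))
    return found


if __name__ == "__main__":
    # Assumed search root: the toolkit prefix that appears in the traceback.
    hits = find_libs(["/usr/local/Ascend/ascend-toolkit"])
    for name, paths in sorted(hits.items()):
        print(name, "->", paths if paths else "not found")
```

If the script reports a hit outside the candidate list, the problem is more likely a search-path issue than a missing file; if it finds nothing, the toolkit install for this architecture is probably incomplete.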

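Disclaimer: this article was contributed voluntarily by a user and does not represent the position of 【wpsshop博客】. Copyright belongs to the original author, and this site assumes no legal liability. If you find infringing content, please contact us. When reposting, please cite the source: https://www.wpsshop.cn/w/盐析白兔/article/detail/727922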
