
AdaSpeech 1/2/3/4

Author: 码创造者 | 2024-07-10 11:23:15


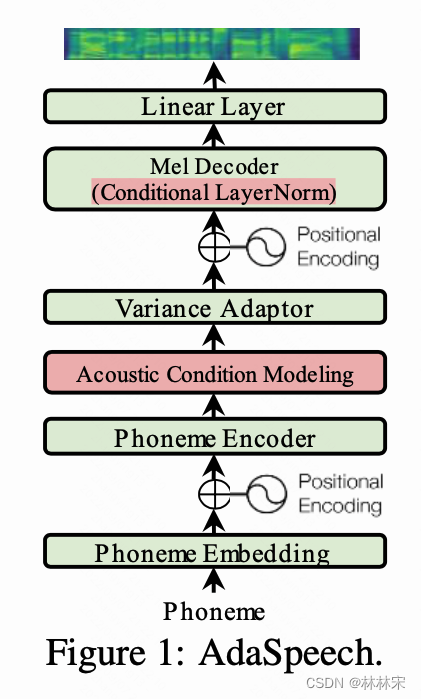

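AdaSpeech 1: Adaptive Text to Speech for Custom Voice

Venue: ICLR 2021

Authors: Mingjian Chen, Xu Tan

Affiliation: Microsoft

abstract

- Addresses TTS fine-tuning when only a small amount of data is available.

- Challenges: (1) the conditions of the fine-tuning speaker (pronunciation habits, recording environment) may differ greatly from the base training data; (2) as few model parameters as possible should be updated per speaker, so that storage cost does not explode as the number of fine-tuned speakers grows.

- Methods: (1) acoustic condition modeling in the encoder: utterance-level (speaker embedding) and phoneme-level features are supplied as extra inputs, so that the model relies on its input features instead of overfitting the training data, which would hurt generalization during fine-tuning; (2) conditional layer normalization in the decoder.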

Methods

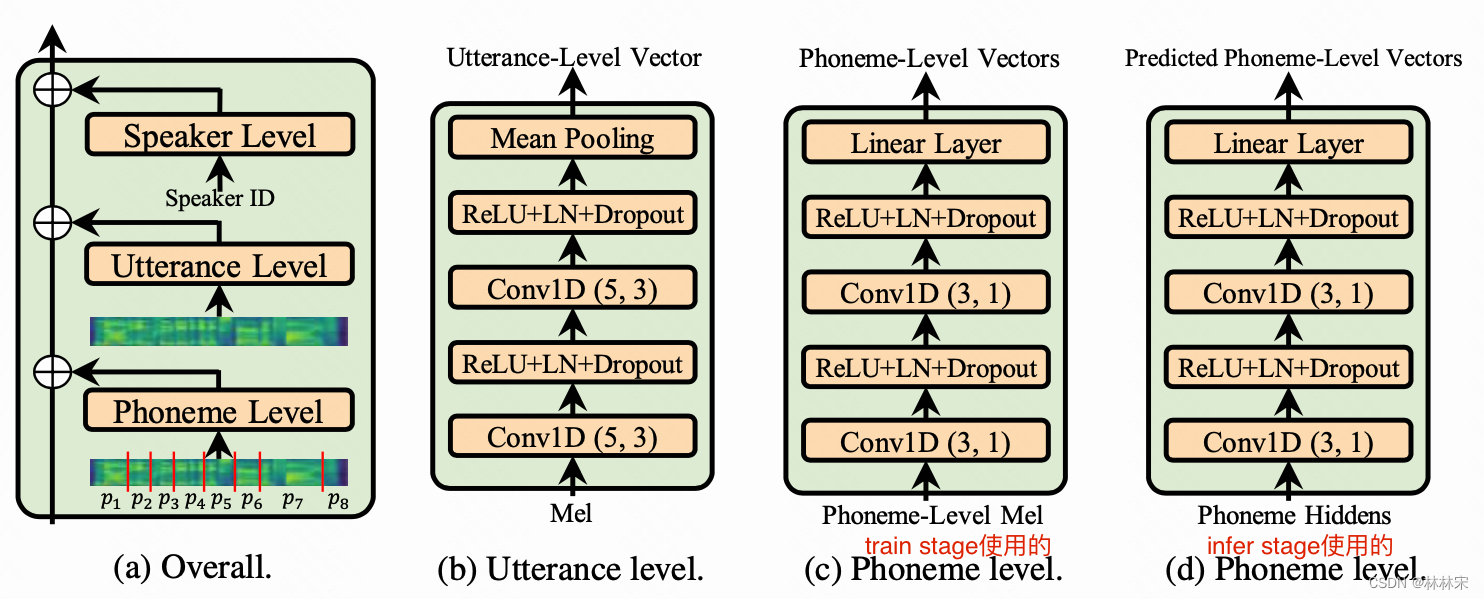

acoustic condition modeling

- fastspeech本身倾向于memorize and overfit 训练数据,在adaptation的时候泛化不好,对应的解决办法就是提供更多的声学输入信息,让模型更好的泛化而不是记住训练数据的特征。

- 将特征分为三种

- speaker lelvel:使用spk id得到spk_emb表征;

- utterance lelvel:和这个人这句话有关的global信息,训练的时候用target speech,infer的时候随机挑选ref speech;(有一个疑问,是否必要,而且infer的时候ref speech和syn txt也不一致?)

- phn level:特殊的发音细节,训练的时候target speech按照align将单个phn的mel平均,预测phn level vector;同时另外训练一个phn level encoder,输入phn,预测phn level vector, infer阶段使用;

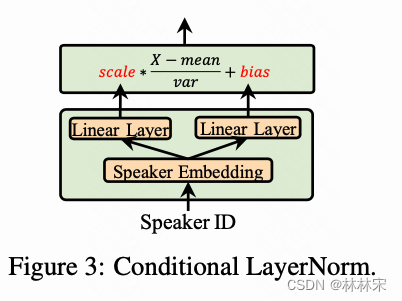

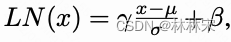
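The phoneme-level averaging described above can be sketched in a few lines. This is a minimal illustration and not the authors' code: the function name `average_mel_per_phoneme`, the list-of-lists mel layout, and the zero-vector fallback for zero-length phonemes are all assumptions made for the example.

```python
from typing import List


def average_mel_per_phoneme(mel: List[List[float]],
                            durations: List[int]) -> List[List[float]]:
    """Average the mel frames aligned to each phoneme.

    mel: T frames, each a list of n_mels values.
    durations: frames per phoneme from a (hypothetical) aligner; must sum to T.
    """
    if sum(durations) != len(mel):
        raise ValueError("durations must sum to the number of mel frames")
    n_mels = len(mel[0]) if mel else 0
    averaged = []
    start = 0
    for d in durations:
        frames = mel[start:start + d]
        start += d
        if not frames:
            # Zero-length phoneme: fall back to a zero vector (an assumption).
            averaged.append([0.0] * n_mels)
            continue
        averaged.append(
            [sum(f[k] for f in frames) / len(frames) for k in range(n_mels)]
        )
    return averaged


# Three frames, two phonemes: the first phoneme spans two frames.
mel = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(average_mel_per_phoneme(mel, [2, 1]))  # [[2.0, 3.0], [5.0, 6.0]]
```

The result has one vector per phoneme, which is the shape the phoneme-level encoder needs as its training target.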

conditional layer normalization

- Decoder的基础block包括slf-attn和Feed-forward net,直接对参数finetune不好操作,但是每一个slf-attn和FFN之后都有layer norm。

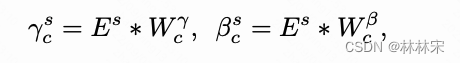

- LN的原理如上,其中 μ \mu μ和 σ \sigma σ是层参数对应的均值方差, γ , β \gamma,\beta γ,β是网络中可以学习的参数,作者提出让 γ , β \gamma,\beta γ,β和说话人有关,在遇到新的说话人时,只需要更新对应的 γ , β \gamma,\beta γ,β,产生很小的参数量存储。

- 说话人相关的均值方差来自于speaker embedding,

E

s

E^s

Es是说话人s对应的向量矩阵,每一层LN都会两个可学习的矩阵

W

γ

,

W

β

W^{\gamma}, W^{\beta}

Wγ,Wβ

- conditional_layernorm实现 这个代码adaLN 写的没问题,但是加的位置不对;论文里写的加入decoder的每一层,git代码只在decoder的输出作用一次,然后送给postnet。

```python
import torch
import torch.nn as nn


class Condional_LayerNorm(nn.Module):
    """Layer norm whose scale and bias are predicted from a speaker embedding."""

    def __init__(self, normal_shape, epsilon=1e-5):
        super(Condional_LayerNorm, self).__init__()
        # The original only set normal_shape inside an isinstance check,
        # so a non-int argument failed later with an AttributeError.
        if not isinstance(normal_shape, int):
            raise TypeError("normal_shape must be an int (the hidden size)")
        self.normal_shape = normal_shape
        self.speaker_embedding_dim = 256
        self.epsilon = epsilon
        # W^gamma and W^beta: project the speaker embedding to scale and bias.
        self.W_scale = nn.Linear(self.speaker_embedding_dim, self.normal_shape)
        self.W_bias = nn.Linear(self.speaker_embedding_dim, self.normal_shape)
        self.reset_parameters()

    def reset_parameters(self):
        # Start as a plain layer norm: scale = 1 and bias = 0 for every speaker.
        torch.nn.init.constant_(self.W_scale.weight, 0.0)
        torch.nn.init.constant_(self.W_scale.bias, 1.0)
        torch.nn.init.constant_(self.W_bias.weight, 0.0)
        torch.nn.init.constant_(self.W_bias.bias, 0.0)

    def forward(self, x, speaker_embedding):
        # x: (batch, time, hidden); speaker_embedding: (batch, 256)
        mean = x.mean(dim=-1, keepdim=True)
        var = ((x - mean) ** 2).mean(dim=-1, keepdim=True)
        std = (var + self.epsilon).sqrt()
        y = (x - mean) / std
        scale = self.W_scale(speaker_embedding)
        bias = self.W_bias(speaker_embedding)
        # Broadcast the per-speaker scale and bias over the time axis.
        return y * scale.unsqueeze(1) + bias.unsqueeze(1)
```

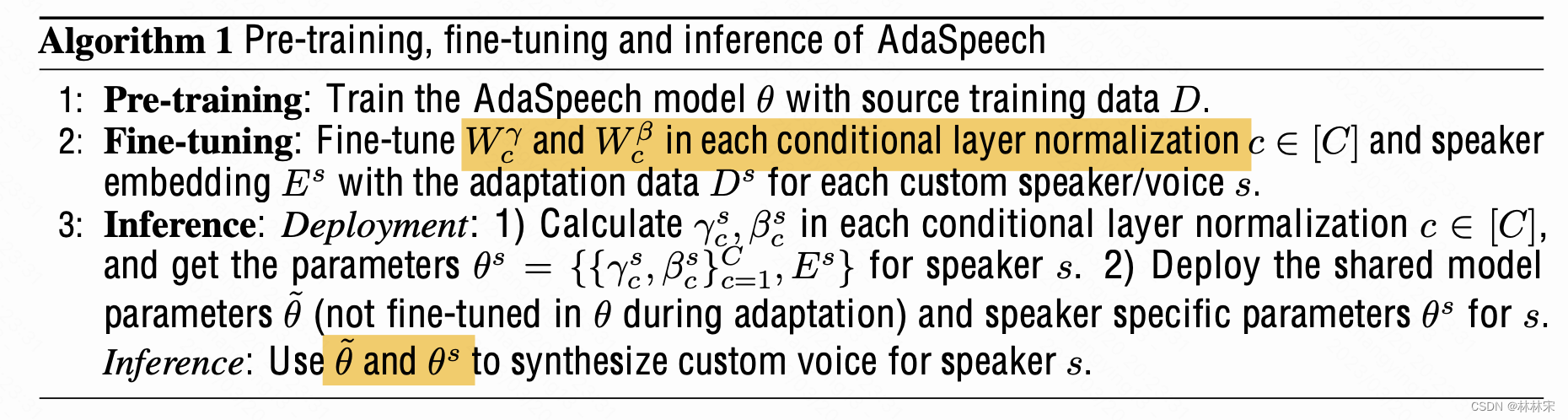

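pipeline of adaspeech

- Fine-tuning updates the $W$ matrices of all conditional layer norm (CLN) layers.

- Inference combines the pretrained model with the fine-tuned, speaker-specific parameters.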

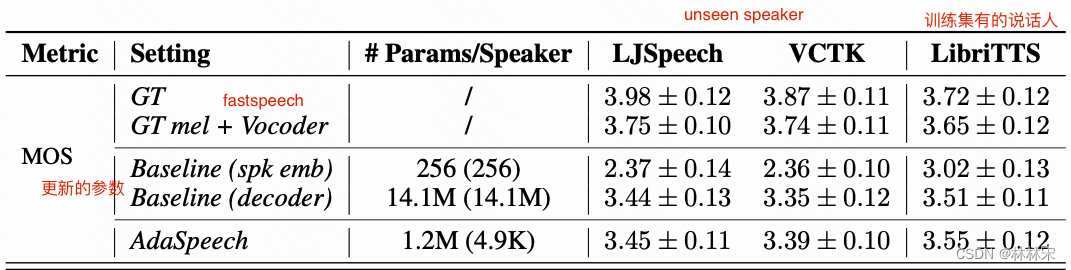
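One way to picture "pretrained model plus fine-tuned parameters" is as an overlay of a small per-speaker dictionary on the shared weights. The sketch below is only an illustration under that assumption: the parameter names, the flat-list "tensors", and the helper `build_speaker_model` are hypothetical and do not come from the paper or its code.

```python
from typing import Dict, List

Params = Dict[str, List[float]]


def build_speaker_model(pretrained: Params, speaker_specific: Params) -> Params:
    """Overlay the fine-tuned speaker-specific entries on the shared weights."""
    unknown = set(speaker_specific) - set(pretrained)
    if unknown:
        raise KeyError(f"unexpected speaker-specific parameters: {sorted(unknown)}")
    merged = dict(pretrained)        # shared entries are referenced, not duplicated
    merged.update(speaker_specific)  # only the CLN-related entries are replaced
    return merged


# Hypothetical parameter names; real checkpoints will use different keys.
pretrained = {
    "decoder.0.ffn.weight": [0.1, 0.2],
    "decoder.0.cln.W_scale": [1.0, 1.0],
}
speaker_a = {"decoder.0.cln.W_scale": [1.2, 0.9]}

model_a = build_speaker_model(pretrained, speaker_a)
print(model_a["decoder.0.cln.W_scale"])  # [1.2, 0.9]
```

Only `speaker_a` has to be stored for each new speaker, which is where the storage saving comes from.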

experiment

dataset

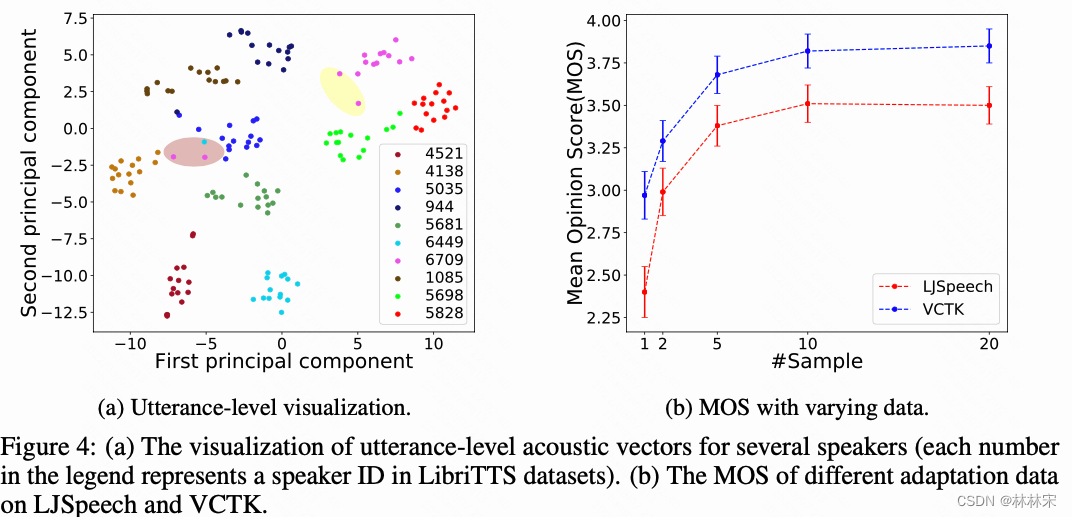

- 训练集:LibriTTS, 2456 spaekers,586h,基于16k数据进行的

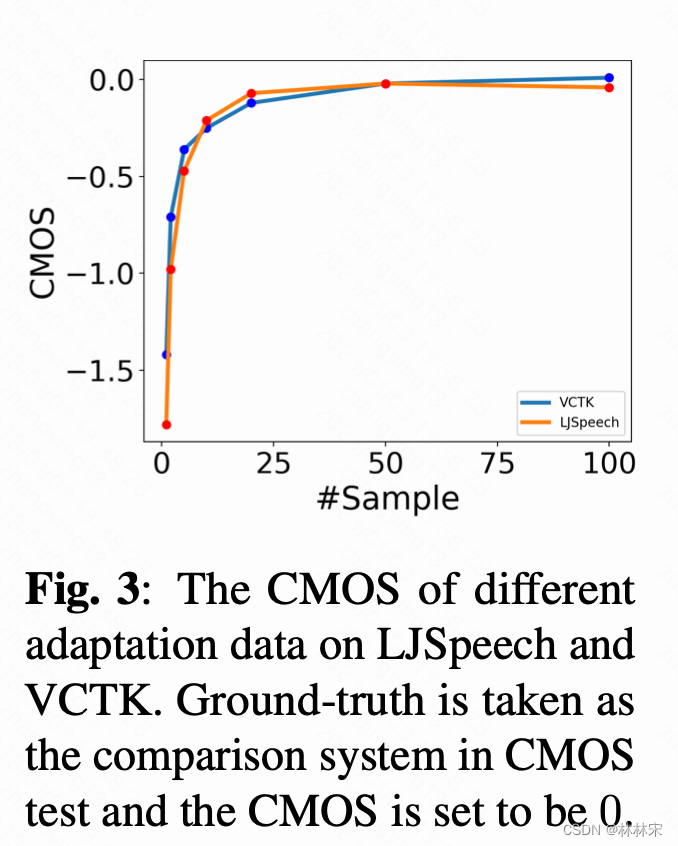

- 测试集:VCTK, LJspech,说话人挑选20句,但是结果表明在10句时已经取得比较好的结果。

adaspeech2: ADAPTIVE TEXT TO SPEECH WITH UNTRANSCRIBED DATA

- ICASSP 2021

- Yuzi Yan, tan xu

abstract

- 针对只有speech,没有对应的转录文本的情况,如何更好的利用数据进行finetune

method

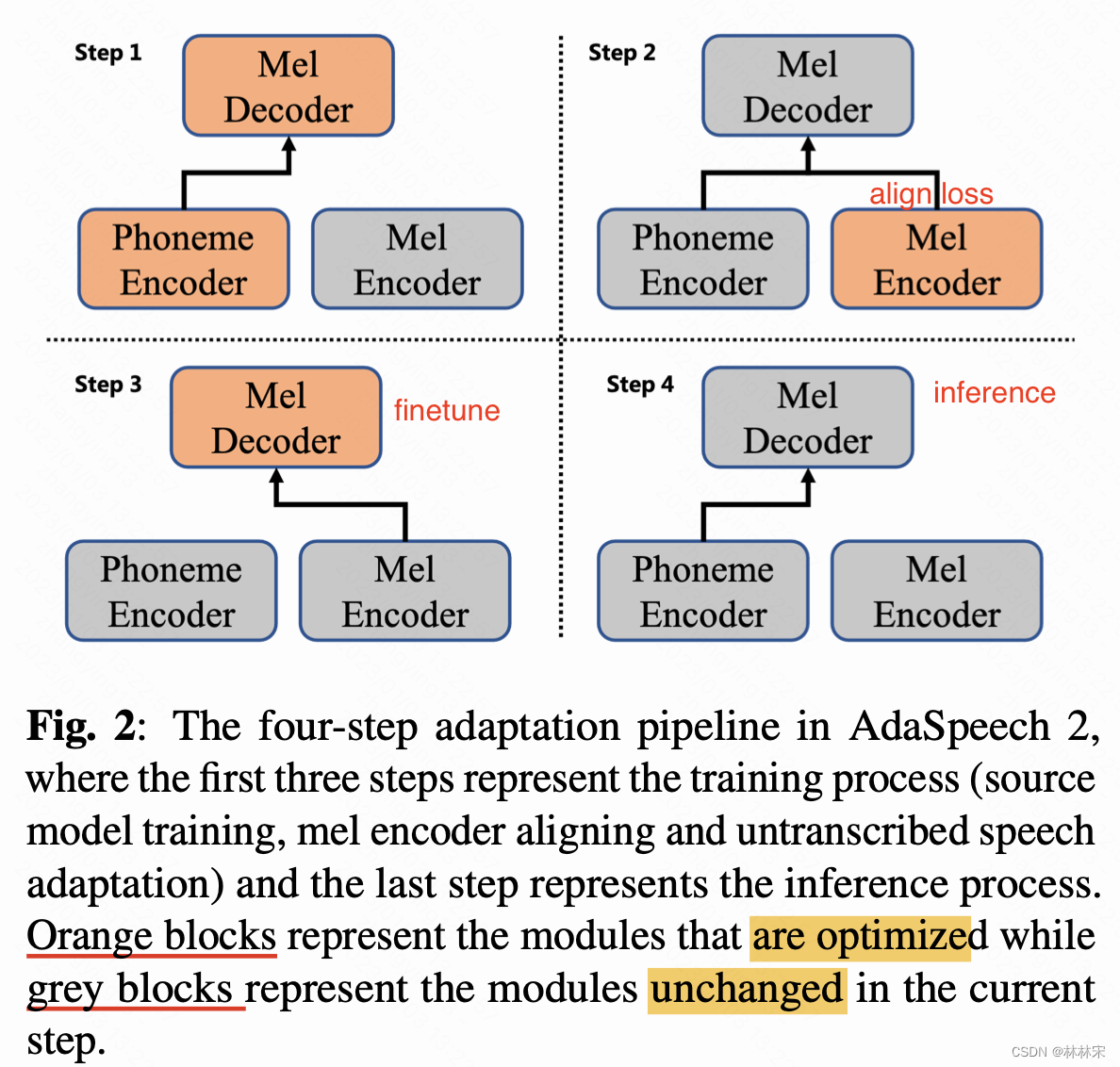

- 训练过程分4步:(1)多说话人数据训练一个TTS非自回归模型;(2)mel encoder aligning:使用align loss,使得mel encoder的输出和phn encoder的输出尽可能一致;(3)无转录文本自适应:无转录文本的语音输入mel encoder,finetune decoder,得到目标说话人相关的模型。(4)inference:phn encoder+FT mel decoder。

- align loss是L2 Loss, mel encoder编码的hidden emb和扩帧后的phn-encoder-hidden emb计算,不涉及到时长变换的部分。

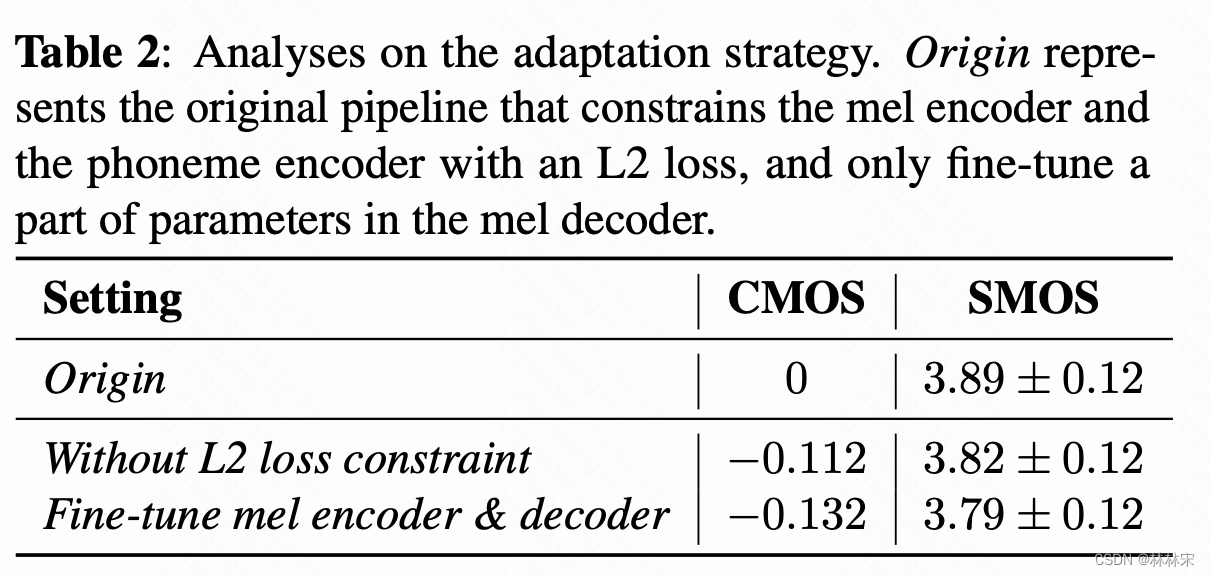

- finetune时候mel decoder涉及的参数更新,和adaspeech1中一样,LN中相关参数的更新。

- 这种方法训练的模型,可组合性更强。

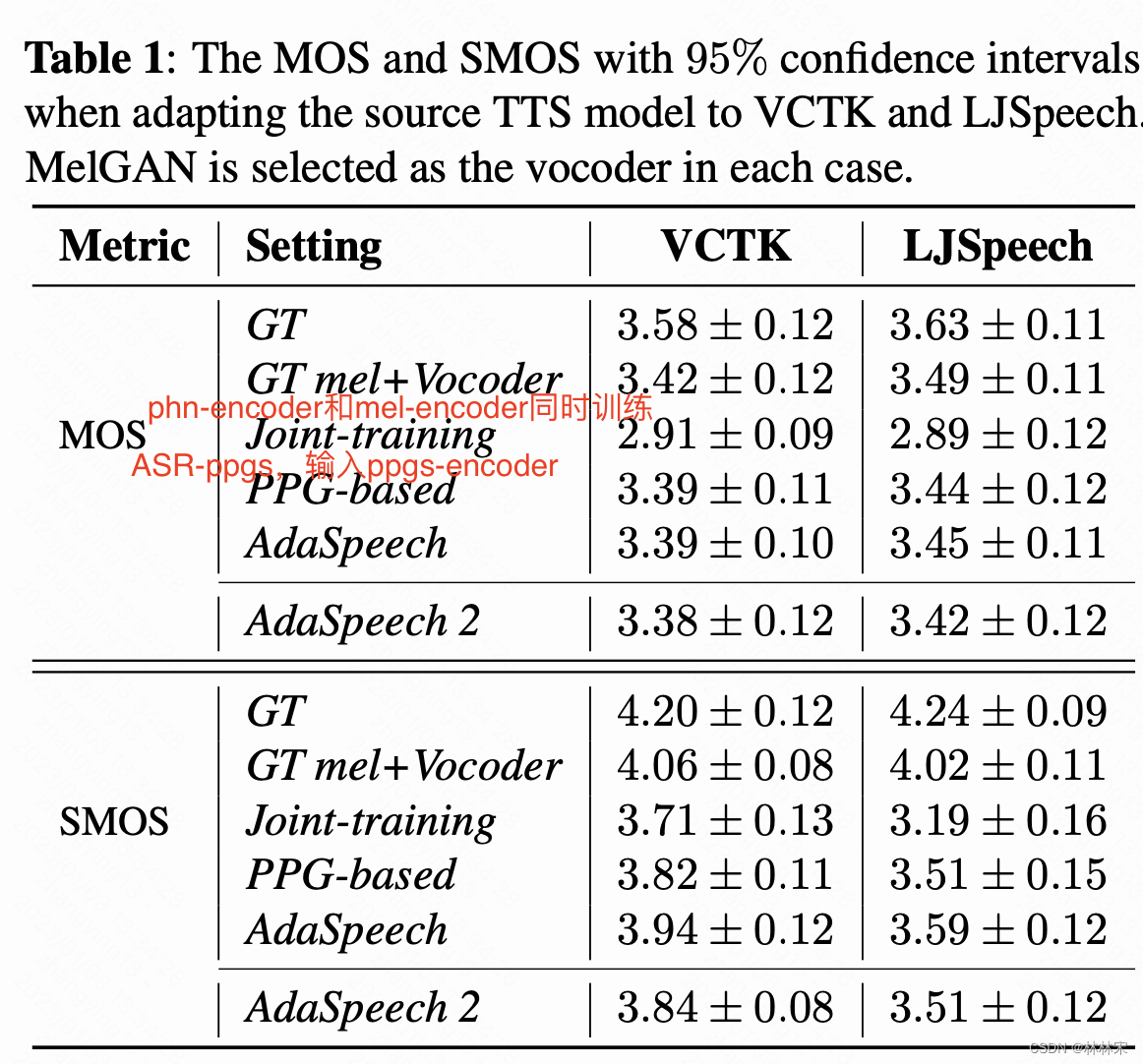
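The alignment loss in step (2) can be sketched as a mean squared error between the mel encoder output and the length-expanded phoneme encoder output. This is a toy version on plain lists, assuming per-phoneme frame counts are already available; the helper names `expand_by_duration` and `l2_align_loss` are hypothetical.

```python
from typing import List

Vector = List[float]


def expand_by_duration(phoneme_hidden: List[Vector],
                       durations: List[int]) -> List[Vector]:
    """Repeat each phoneme hidden vector for as many frames as it lasts."""
    expanded = []
    for vec, d in zip(phoneme_hidden, durations):
        expanded.extend([vec] * d)
    return expanded


def l2_align_loss(mel_hidden: List[Vector],
                  phoneme_hidden: List[Vector],
                  durations: List[int]) -> float:
    """Mean squared error between mel-encoder and expanded phoneme-encoder outputs."""
    target = expand_by_duration(phoneme_hidden, durations)
    if len(target) != len(mel_hidden) or not target:
        raise ValueError("expanded phoneme sequence must match the mel frame count")
    total, count = 0.0, 0
    for m, t in zip(mel_hidden, target):
        for a, b in zip(m, t):
            total += (a - b) ** 2
            count += 1
    return total / count


mel_hidden = [[1.0, 1.0], [1.0, 1.0], [0.0, 2.0]]
phoneme_hidden = [[1.0, 1.0], [0.0, 0.0]]
print(l2_align_loss(mel_hidden, phoneme_hidden, [2, 1]))
```

In this step only the mel encoder would receive gradients; the phoneme encoder output acts as a fixed target, which is why the two encoders become interchangeable in front of the decoder.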

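experiment

- Data: VCTK + LibriTTS + LJSpeech + internal data

- Training steps: base model (100k steps), mel encoder (10k steps), fine-tuning (2k steps)

- PPG-based baseline: an ASR model extracts PPGs, which are fed to an extra PPG encoder. Since PPGs carry purely textual content, this is essentially an upper bound for the mel encoder.

- joint-training baseline: the mel encoder and the phoneme encoder are trained together; the comparison is meant to show that the staged training proposed in the paper gives smaller deviation.

- Comparison of adaptation strategies

- Comparison of adaptation data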

adaspeech3:Adaptive Text to Speech for Spontaneous Style

- INTERSPEECH 2021

- Yuzi Yan, tanxu

abstract

- 针对将audio-book的朗读风格,自适应到podcast(播客)或者对话风格,也可以把非口播的音色迁移到口播风格上。

- 难点在于:(1)base model很少见到这种风格的数据;(2)对话风格中有停顿(filled pause, um and uh),韵律也不一样。

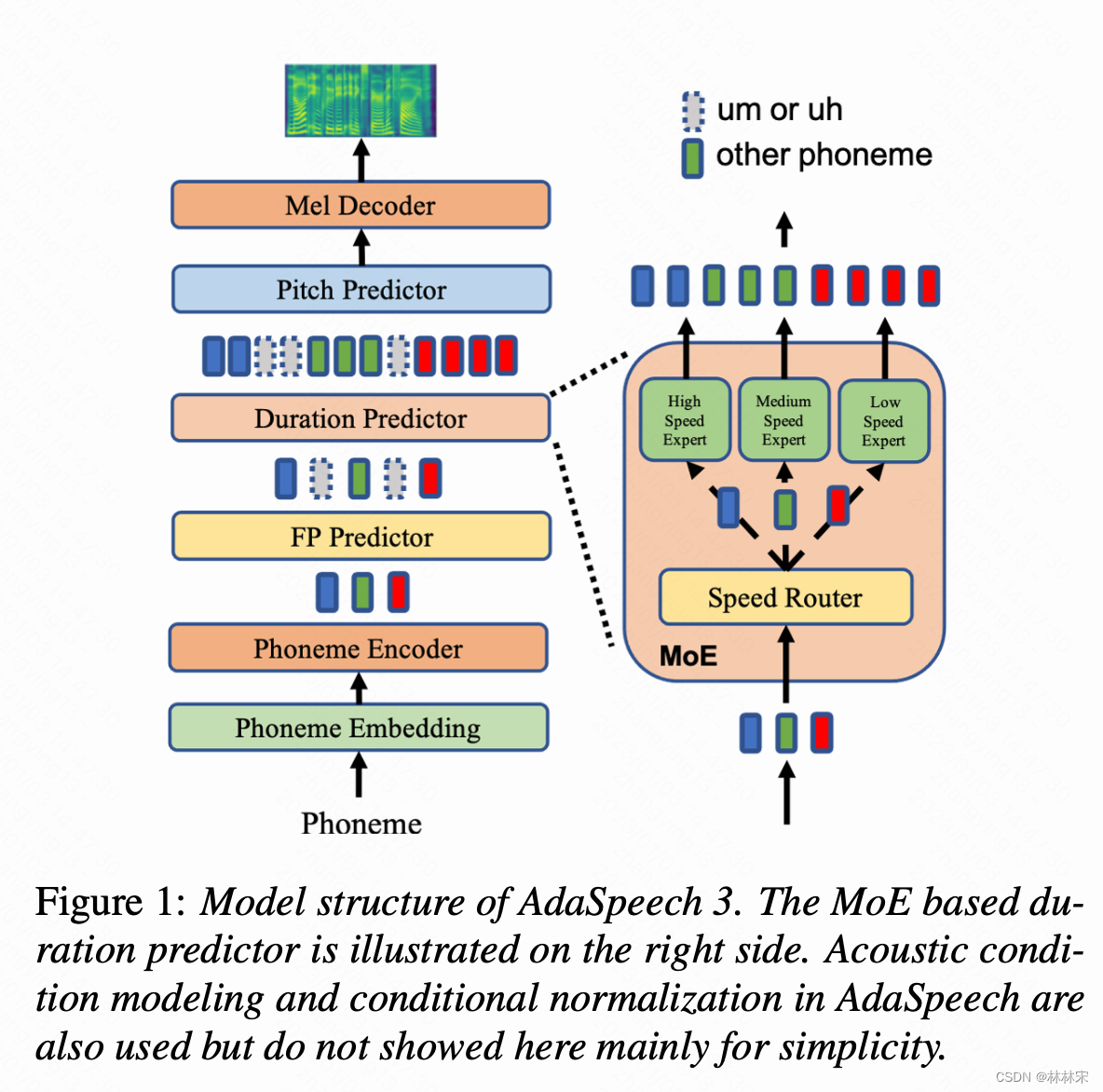

- (1)FP predictor,预测停顿;(2)基于mixture of experts (MoE)的duration predictor,预测生成快/中/慢三种不同的语速,会和pitch predictor一起,在finetune过程中用于建模新的韵律。(3)finetune decoder以建模音色。

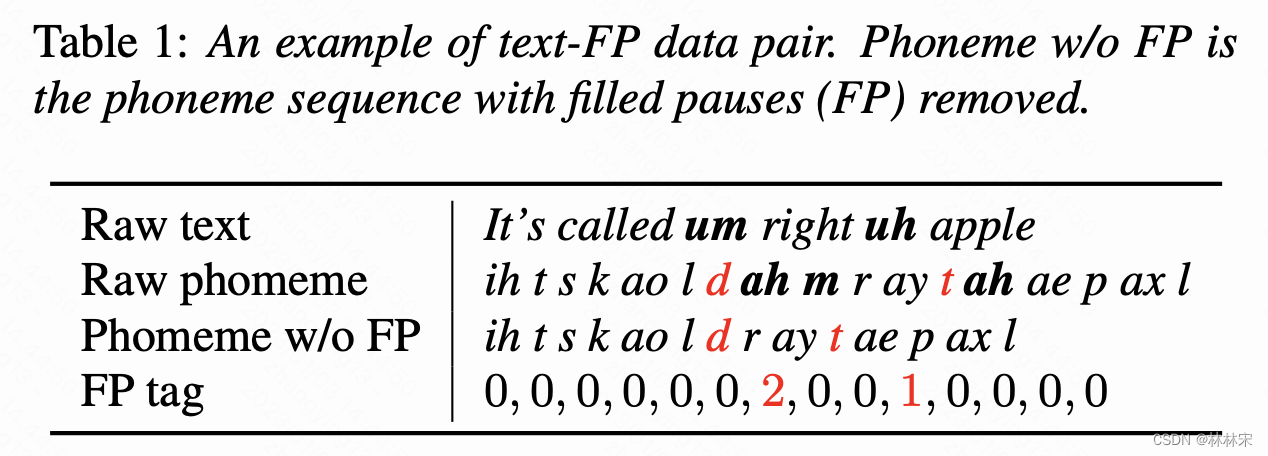

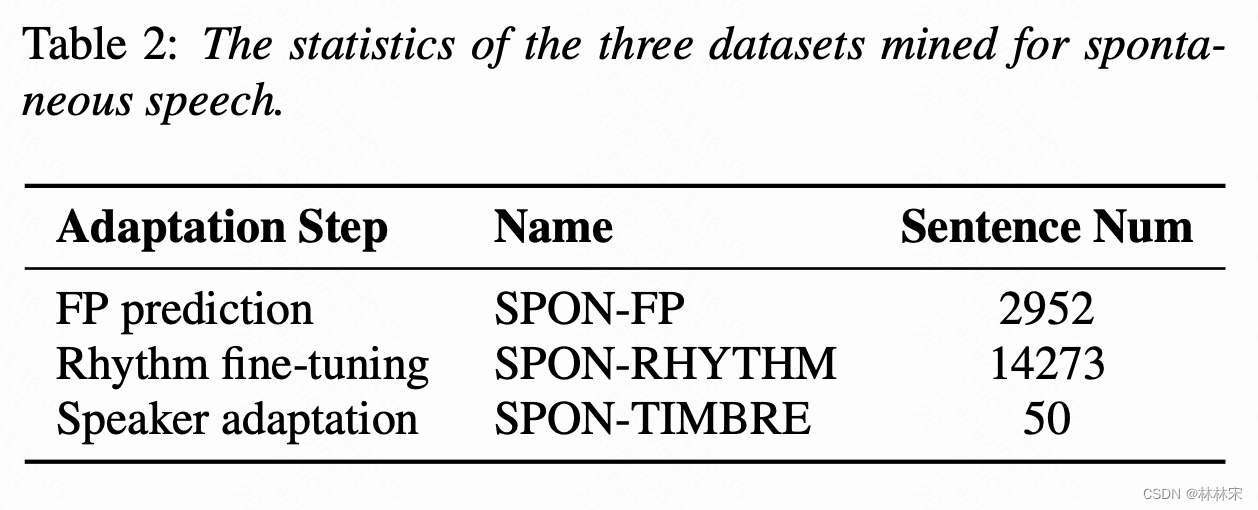

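SpontaneousDataset

- How the dataset is built:

- Crawling: 28 hours of audio from the first 30 episodes of a podcast.

- ASR transcription: an ASR system transcribes the audio with fine-grained timestamps, and the audio is cut into 7-10 s segments.

- SPON-FP dataset construction: the filled pauses (um/uh) recognized by ASR are removed from the text and an FP tag is added instead: tag = 0 for no pause, tag = 1 for "uh", tag = 2 for "um". This data trains the FP predictor to insert a pause tag after the appropriate phoneme.

- SPON-RHYTHM dataset construction: aligned pitch and duration are extracted from the spontaneous speech data for use in the fine-tuning stage.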
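The SPON-FP tagging step can be sketched as follows. This is a simplified illustration that works on word tokens, not phonemes, and it makes two assumptions that are not stated in the notes: a filled pause is recorded on the token that precedes it, and a filled pause at the very start of an utterance is dropped. The function name `build_fp_tags` is hypothetical.

```python
from typing import List, Tuple

# Tag values follow the notes: 0 = no FP, 1 = "uh", 2 = "um".
FP_TAGS = {"uh": 1, "um": 2}


def build_fp_tags(tokens: List[str]) -> Tuple[List[str], List[int]]:
    """Remove filled pauses and record them as tags on the preceding token."""
    kept: List[str] = []
    tags: List[int] = []
    for tok in tokens:
        tag = FP_TAGS.get(tok.lower())
        if tag is None:
            kept.append(tok)
            tags.append(0)
        elif kept:
            # Mark the token before the filled pause; a second consecutive
            # filled pause overwrites the first (a simplification).
            tags[-1] = tag
        # A filled pause with no preceding token is dropped (an assumption).
    return kept, tags


print(build_fp_tags(["so", "um", "i", "think", "uh", "yes"]))
# (['so', 'i', 'think', 'yes'], [2, 0, 1, 0])
```

Each training example for the FP predictor is then the cleaned token sequence paired with one tag per token.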

method

FP predictor

- 语料中FP的比例很少,三分类问题(no fp=0, uh=1, um=2),为了防止正例过少,预测全是负例,删除没有FP的语料,

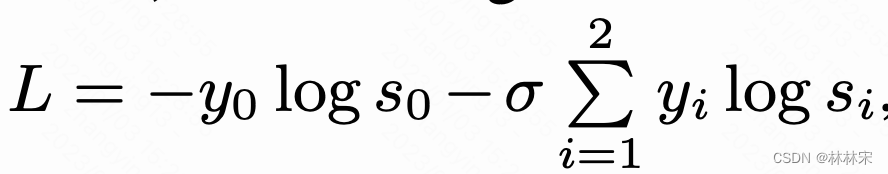

- FP Loss

[ s 0 , s 1 , s 2 ] [s_0, s_1, s_2] [s0,s1,s2]分别是no fp,uh,um的预测概率;

[ y 0 , y 1 , y 2 ] [y_0, y_1,y_2] [y0,y1,y2]分别是三者的gt的one-hot编码,实际上是一个指定维度的emb。 - (1)可以通过调节 σ \sigma σ保证训练时候的数据均衡;(2)调节FP Loss的阈值,控制预测的FP的密度。——如果 s 0 s_0 s0大于阈值T,认为是no FP,反之,归类为FP;T从0.1增加到0.99,召回率从0.8增加到0.95,代价是精确度(precision)的一些些损失。

- precision 精确率:预测结果的准确性,正例预测为正例,负例预测为负例

- accuracy:准确率,对于所有样本,多少被正确预测了。

- recall:对于正例,多少样本被预测正确了(单独一类预测的精确度)

- 在预测为FP的phn之后添加对应的FP-phn编码

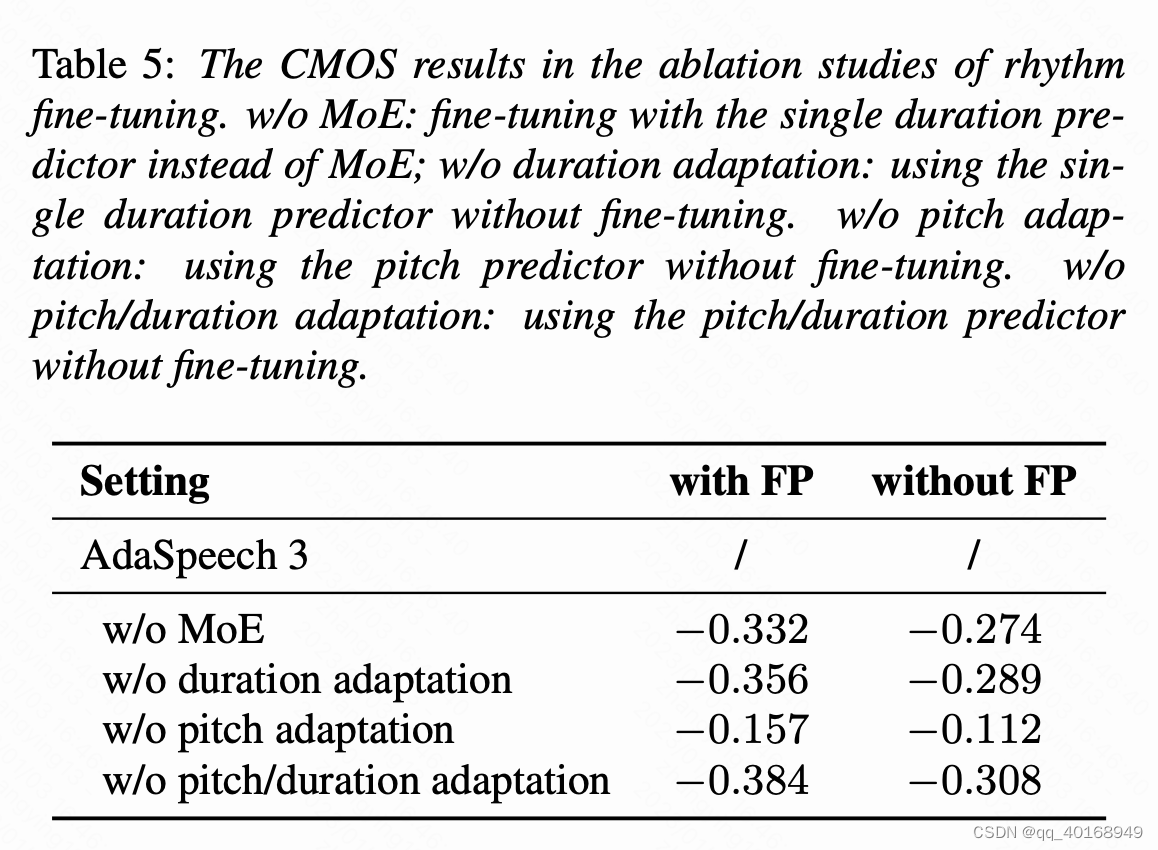
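The threshold rule and the insertion step can be sketched together. The rule "no FP if $s_0 > T$" is from the notes; choosing between "uh" and "um" by the larger probability, and the symbols `<uh>` and `<um>`, are assumptions made for this example.

```python
from typing import List, Sequence

# Hypothetical symbols for the inserted filled-pause phonemes.
FP_SYMBOLS = {1: "<uh>", 2: "<um>"}


def predict_fp_tag(probs: Sequence[float], threshold: float) -> int:
    """Return 0 (no FP), 1 (uh) or 2 (um) for one phoneme."""
    s0, s_uh, s_um = probs
    if s0 > threshold:
        return 0
    # Below the threshold: pick the more likely filled pause (an assumption).
    return 1 if s_uh >= s_um else 2


def insert_filled_pauses(phonemes: List[str],
                         probs_per_phoneme: List[Sequence[float]],
                         threshold: float) -> List[str]:
    """Append the FP symbol after every phoneme that is predicted as FP."""
    out: List[str] = []
    for ph, probs in zip(phonemes, probs_per_phoneme):
        out.append(ph)
        tag = predict_fp_tag(probs, threshold)
        if tag:
            out.append(FP_SYMBOLS[tag])
    return out


phonemes = ["AY", "TH", "IH"]
probs = [[0.9, 0.05, 0.05], [0.5, 0.1, 0.4], [0.2, 0.7, 0.1]]
print(insert_filled_pauses(phonemes, probs, threshold=0.6))
# ['AY', 'TH', '<um>', 'IH', '<uh>']
```

Raising `threshold` makes the "no FP" decision harder to reach, so more phonemes are followed by a filled pause; this matches the recall-for-precision trade-off described in the notes.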

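Rhythm Adaptation

- Rhythm mainly depends on duration and pitch. Inspecting the data shows that the pitch distributions of spontaneous-style and reading-style speech do not differ much, but the duration range does. Spontaneous speech expresses personal style through lengthened sounds, fast reading, and so on, so single-phoneme durations fall in (0, 40], whereas reading-style durations are more stable, within (0, 25]. Duration prediction therefore gets dedicated control.

- Approach: three speed tags (fast, medium, slow) are defined, and a speed router (same structure as the FP predictor, conv + FC) is trained on phoneme embedding + speed tag. In the fine-tuning stage, all duration experts are initialized from the base model's parameters and then updated with the new data. Training uses the ground-truth durations for length expansion; inference uses a router-weighted average as the duration: the router predicts a probability for each speed tag, the current phoneme is sent to each duration predictor to get a predicted duration, and the predicted durations are averaged with the speed-tag probabilities as weights to give the phoneme duration actually used at inference.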

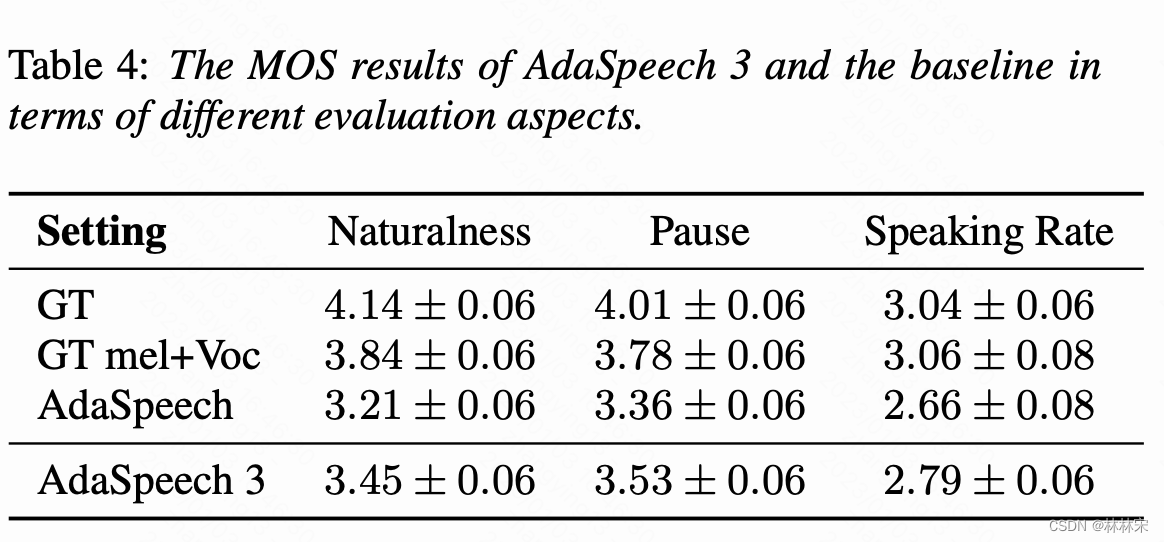
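The inference-time rule, a probability-weighted average of the experts' predictions, is simple enough to show directly. This is a minimal sketch: the function name `moe_duration` and the example numbers are made up, and the renormalization by the probability sum is a defensive assumption.

```python
from typing import Sequence


def moe_duration(router_probs: Sequence[float],
                 expert_durations: Sequence[float]) -> float:
    """Weight each expert's predicted duration by the router probability."""
    if len(router_probs) != len(expert_durations):
        raise ValueError("one probability is needed per duration expert")
    total = sum(router_probs)
    if total <= 0:
        raise ValueError("router probabilities must sum to a positive value")
    weighted = sum(p * d for p, d in zip(router_probs, expert_durations))
    return weighted / total  # renormalize in case the probabilities drift from 1


# Router output for (fast, medium, slow) and each expert's prediction in frames.
duration = moe_duration([0.2, 0.5, 0.3], [4.0, 8.0, 16.0])
print(round(duration, 3))  # 9.6
```

Using the soft average, instead of picking only the most likely expert, lets the predicted duration fall anywhere between the fast and slow estimates.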

experiment

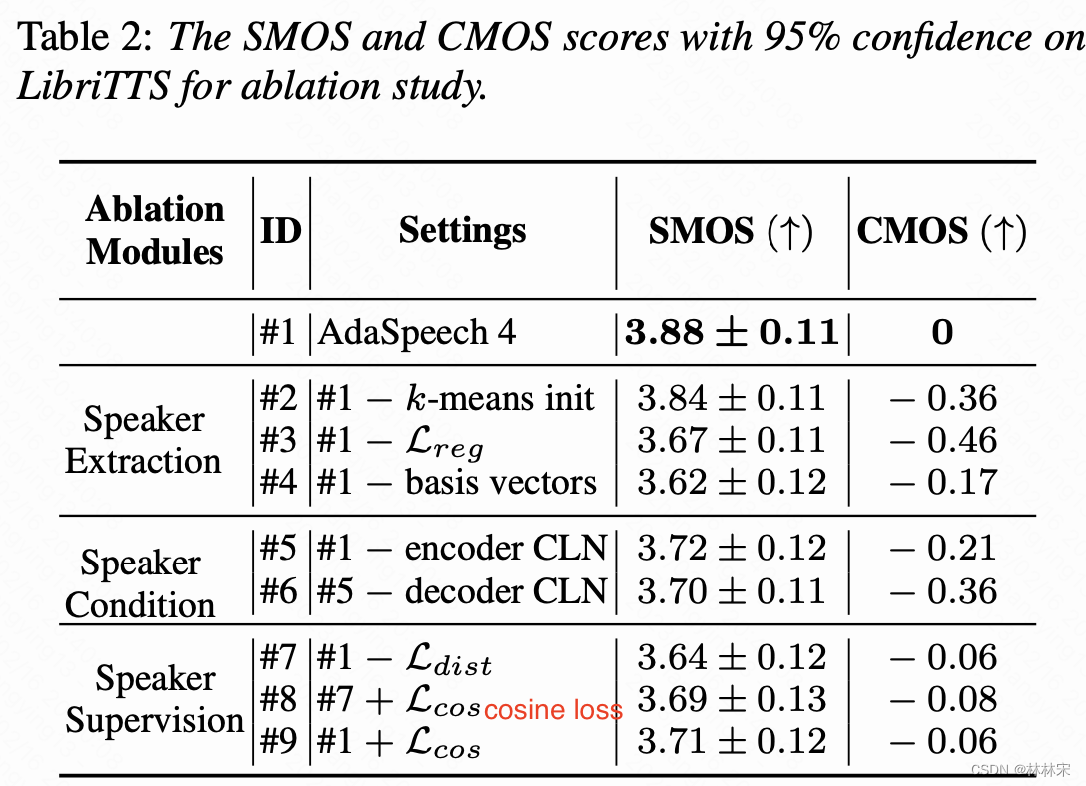

AdaSpeech 4: Adaptive Text to Speech in Zero-Shot Scenarios

- interspeech2022

- Yihan Wu(人大), tanxu

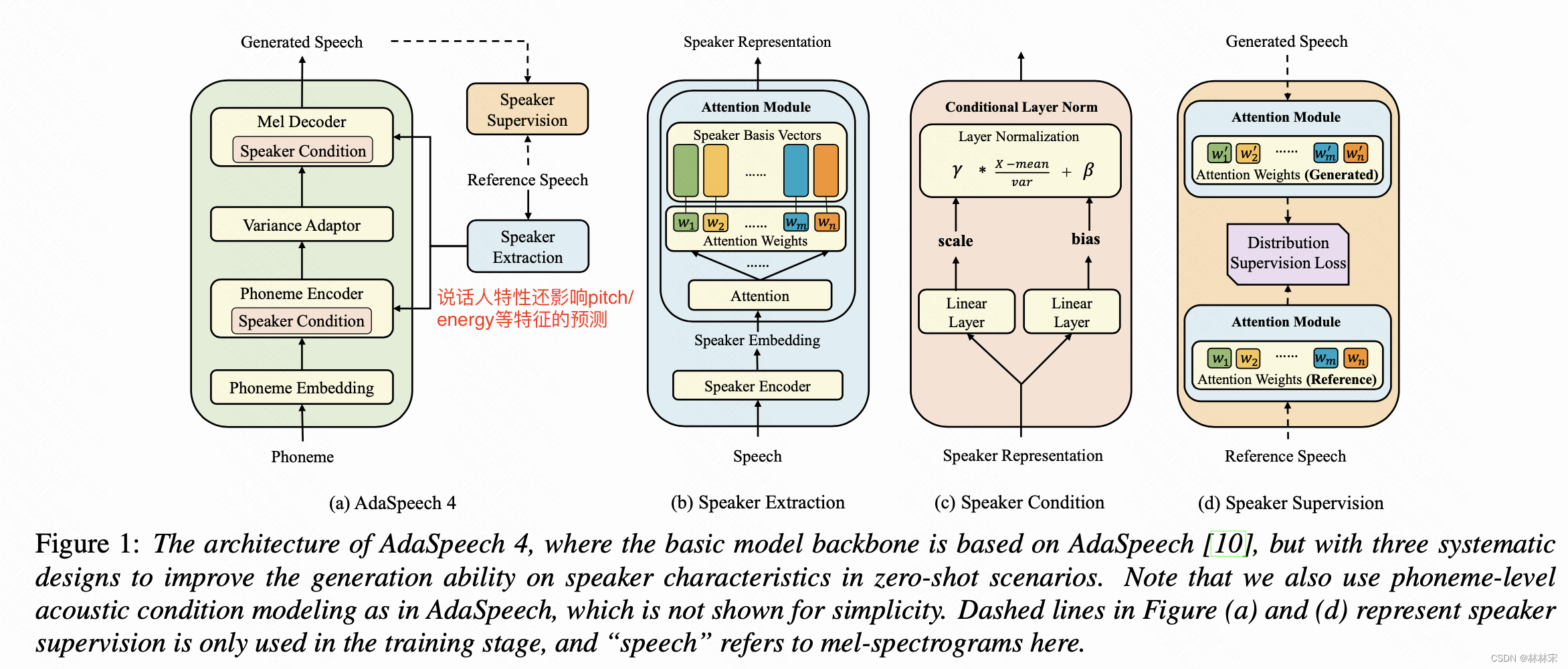

abstract

- zero-shot TTS without adaptation

- 本文的工作:(1)讲speaker represenation组成基础块,用attention量化求和的方式提升表达的泛化性;(2)使用conditional layer norm将提取的speaker represenation集成到TTS模型中。(3)基于basis vector的分布,提出一个新的监督loss,以确保生成mel-spec和说话人音色相关。

intro

zero-shot TTS的困难:(1)之前的工作致力于让speaker encoder提取更加可靠的speaker embedding,但实际上在one-shot的场景并不是很靠谱,而且说话人特性不仅和音色有关,还和韵律、风格等强相关;(2)之前的工作将speaker embedding直接和phn-emb拼接送入decoder,但是unseen speaker embedding会影响decoder的泛化性;(3)根据ref生成音色一致的mel-spec,但实际上很难,也有用说话人分类loss作为辅助,但是改进效果不明显。

method

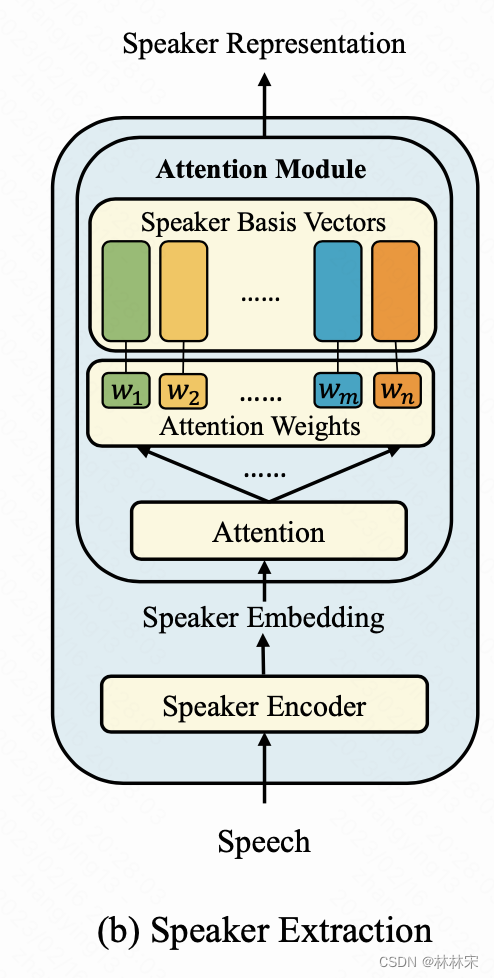

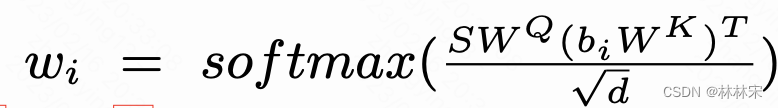

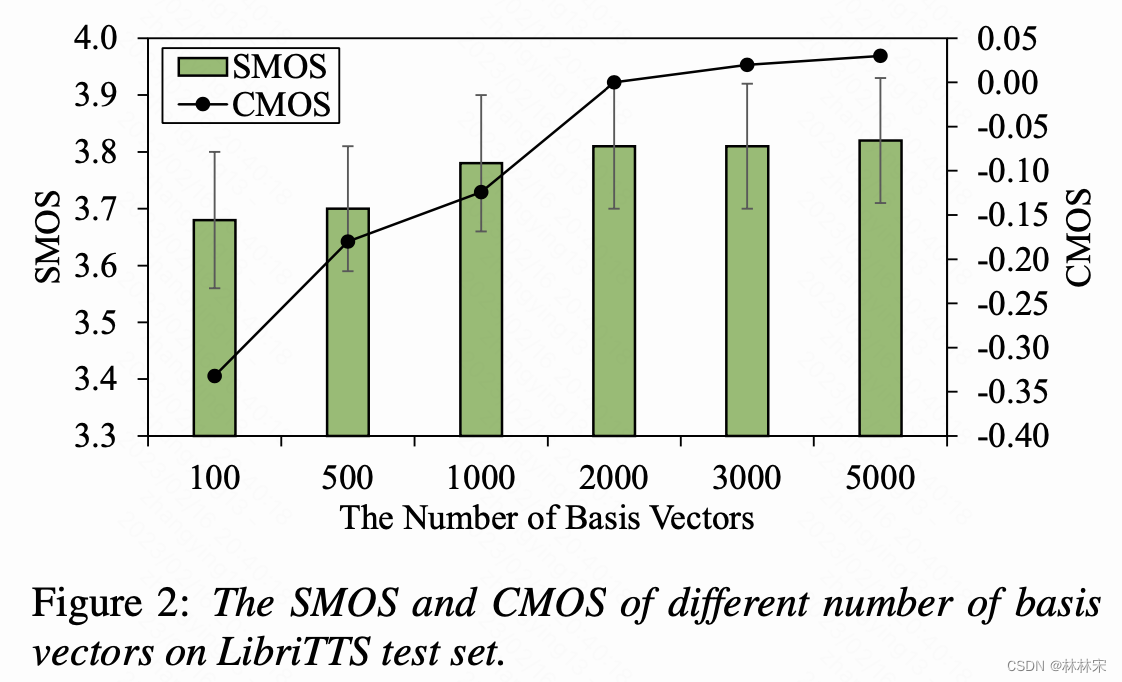

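Extracting Speaker Representation by Basis Vectors

- The speaker representation is an attention-weighted sum of basis vectors. To generalize to unseen speakers, the basis vectors need to be spread out enough to cover the speaker embedding space.

- Two measures are therefore added: (1) the basis vectors are initialized with the cluster centers (from k-means) of extracted speaker embeddings, so that they are sufficiently dissimilar; ideally they form an orthogonal matrix that spans the speaker space; (2) a regularization loss keeps the speaker embeddings of the training set dissimilar by classifying the extracted embeddings into N classes (N = number of speakers).

- $b_i, b_j$ are the $i$-th and $j$-th basis vectors.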

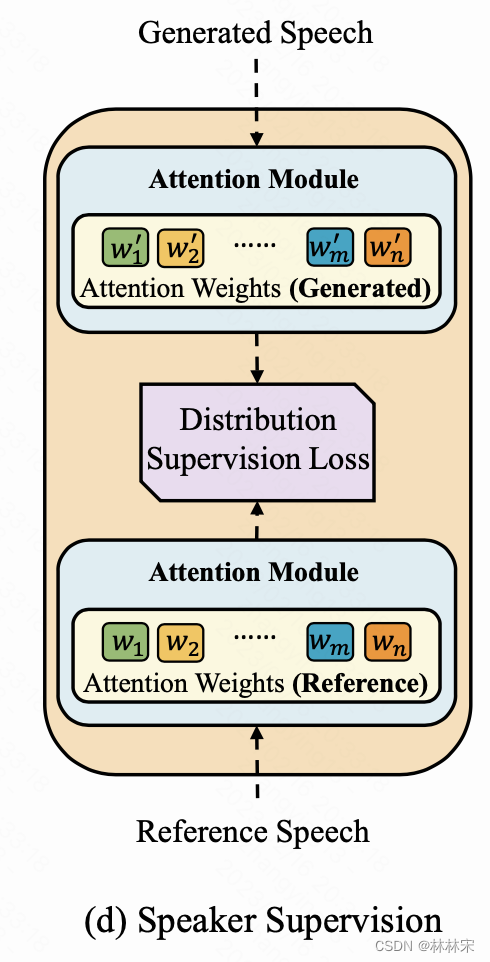

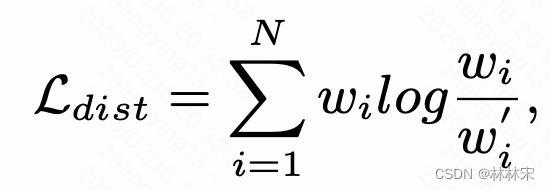
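The attention-weighted sum over basis vectors can be sketched with plain dot-product attention. This is an illustration only: the notes do not say which attention form the paper uses, so the dot-product scoring, the function names, and the toy two-dimensional basis are all assumptions.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def speaker_representation(query: Vector,
                           basis: List[Vector]) -> Tuple[Vector, List[float]]:
    """Attend over the basis vectors and return (weighted sum, attention weights)."""
    scores = [sum(q * b for q, b in zip(query, vec)) for vec in basis]
    weights = softmax(scores)
    dim = len(basis[0])
    rep = [sum(w * vec[k] for w, vec in zip(weights, basis)) for k in range(dim)]
    return rep, weights


# Two orthogonal basis vectors; the query comes from a reference utterance.
basis = [[1.0, 0.0], [0.0, 1.0]]
rep, weights = speaker_representation([2.0, 0.0], basis)
print(weights, rep)
```

Because an unseen speaker is always expressed as a mixture of the same basis vectors, the decoder never receives a representation outside the span it was trained on, which is the generalization argument made above.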

Supervising Speaker Representation in Synthesized Speech with Distribution Loss

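- The distance between the weighted sums over the speaker basis vectors for the reference speech and for the synthesized speech is computed and minimized, to control the speaker characteristics of the generated speech.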

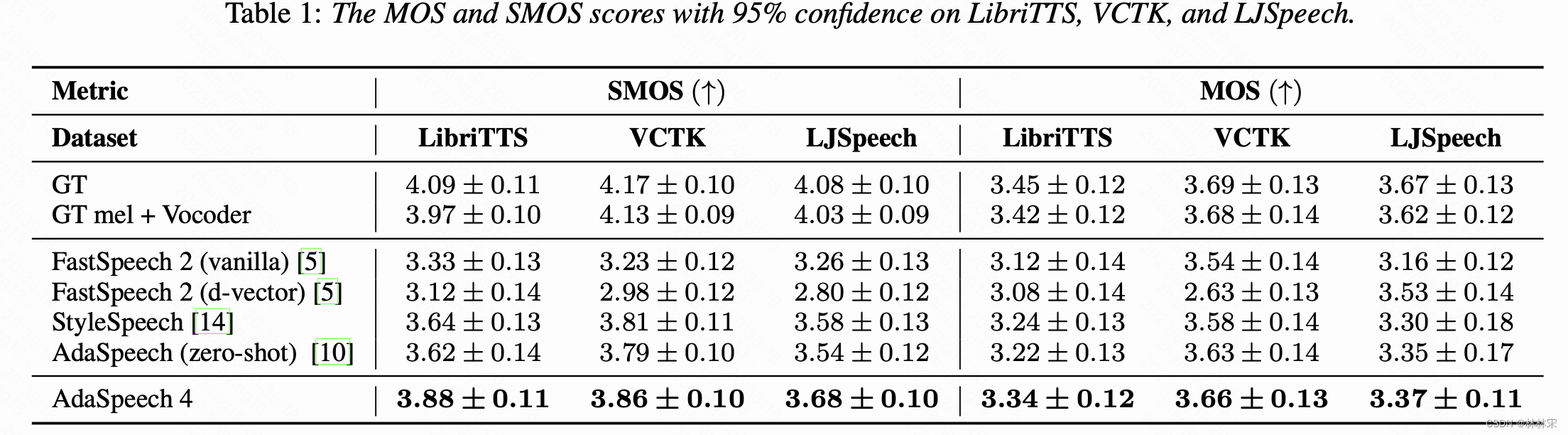
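As a rough sketch of such a loss, the attention weights over the basis vectors can be compared for the reference and the synthesized speech. The distance actually used in the paper is not given in these notes; the mean squared difference below and the function name `distribution_loss` are assumptions chosen for illustration.

```python
from typing import Sequence


def distribution_loss(ref_weights: Sequence[float],
                      syn_weights: Sequence[float]) -> float:
    """Mean squared difference between two attention-weight distributions."""
    if len(ref_weights) != len(syn_weights) or not ref_weights:
        raise ValueError("both distributions must cover the same basis vectors")
    squared = [(r - s) ** 2 for r, s in zip(ref_weights, syn_weights)]
    return sum(squared) / len(squared)


# Identical distributions give zero loss; disjoint ones give the maximum here.
print(distribution_loss([0.5, 0.5], [0.5, 0.5]))  # 0.0
print(distribution_loss([1.0, 0.0], [0.0, 1.0]))  # 1.0
```

Minimizing this value pushes the synthesized speech to activate the same basis vectors as the reference, which is the supervision signal described above.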

experiment

- base model: AdaSpeech 1

- dataset: LibriTTS is split into training and test sets; VCTK and LJSpeech are used for testing on unseen speakers.
