
An End-to-End Solution for Live Streaming over RTMP


Preface

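Short videos and livestream selling on self-media platforms have taken off. Add the pandemic of the past few years, and brick-and-mortar stores have been knocked flat. Their owners complain bitterly that they got fleeced because they didn't understand any of this. Just kidding, of course: they have no need to understand the technology. Today I'll break down how live streaming is implemented on the client side and the server side. The article is packed with practical content, so a like before you start is appreciated. Let's get into it.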

Android side

On Android, pushing a stream requires the NDK. Pulling and playing a stream is simple: just use Google's official ExoPlayer. ExoPlayer official documentation (Chinese): [developer.android.com/media/media…].

Java layer

In the Java layer (the code here is written in Kotlin), we handle the control flow for encoding and pushing.

Implementation

CameraHelper.kt

    package site.doramusic.app.live

    import android.app.Activity
    import android.graphics.ImageFormat
    import android.hardware.Camera
    import android.hardware.Camera.CameraInfo
    import android.hardware.Camera.PreviewCallback
    import android.util.Log
    import android.view.Surface
    import android.view.SurfaceHolder

    class CameraHelper(
        private val activity: Activity,
        private var cameraId: Int,
        private var width: Int,
        private var height: Int
    ) : SurfaceHolder.Callback, PreviewCallback {

        private var camera: Camera? = null
        private var buffer: ByteArray? = null
        private var surfaceHolder: SurfaceHolder? = null
        private var previewCallback: PreviewCallback? = null
        private var rotation = 0
        private var onChangedSizeListener: OnChangedSizeListener? = null
        var bytes: ByteArray? = null

        fun switchCamera() {
            cameraId = if (cameraId == CameraInfo.CAMERA_FACING_BACK) {
                CameraInfo.CAMERA_FACING_FRONT
            } else {
                CameraInfo.CAMERA_FACING_BACK
            }
            stopPreview()
            startPreview()
        }

        private fun stopPreview() {
            // Detach the preview-data callback
            camera?.setPreviewCallback(null)
            // Stop the preview
            camera?.stopPreview()
            // Release the camera
            camera?.release()
            camera = null
        }

        private fun startPreview() {
            try {
                // Open the camera
                camera = Camera.open(cameraId)
                // Configure the camera parameters
                val parameters = camera!!.getParameters()
                // Preview data format: NV21
                parameters.previewFormat = ImageFormat.NV21
                // Camera width and height
                setPreviewSize(parameters)
                // Angle and orientation of the image sensor
                setPreviewOrientation(parameters)
                camera!!.setParameters(parameters)
                buffer = ByteArray(width * height * 3 / 2)
                bytes = ByteArray(buffer!!.size)
                // Data buffer
                camera!!.addCallbackBuffer(buffer)
                camera!!.setPreviewCallbackWithBuffer(this)
                // Set the preview surface
                camera!!.setPreviewDisplay(surfaceHolder)
                camera!!.startPreview()
            } catch (ex: Exception) {
                ex.printStackTrace()
            }
        }

        private fun setPreviewOrientation(parameters: Camera.Parameters) {
            val info = CameraInfo()
            Camera.getCameraInfo(cameraId, info)
            rotation = activity.windowManager.defaultDisplay.rotation
            var degrees = 0
            when (rotation) {
                Surface.ROTATION_0 -> {
                    degrees = 0
                    onChangedSizeListener!!.onChanged(height, width)
                }
                Surface.ROTATION_90 -> {
                    degrees = 90
                    onChangedSizeListener!!.onChanged(width, height)
                }
                Surface.ROTATION_270 -> {
                    degrees = 270
                    onChangedSizeListener!!.onChanged(width, height)
                }
            }
            var result: Int
            if (info.facing == CameraInfo.CAMERA_FACING_FRONT) {
                result = (info.orientation + degrees) % 360
                result = (360 - result) % 360 // compensate the mirror
            } else { // back-facing
                result = (info.orientation - degrees + 360) % 360
            }
            // Set the display angle
            camera!!.setDisplayOrientation(result)
        }

        private fun setPreviewSize(parameters: Camera.Parameters) {
            // Preview sizes supported by the camera
            val supportedPreviewSizes = parameters.supportedPreviewSizes
            var size = supportedPreviewSizes[0]
            Log.d(TAG, "Supported " + size.width + "x" + size.height)
            // Pick the supported size whose pixel count is closest to the requested one,
            // e.g. requesting 12x12 when 10x10, 20x20 and 30x30 are supported picks 10x10
            var m = Math.abs(size.height * size.width - width * height)
            supportedPreviewSizes.removeAt(0)
            val iterator: Iterator<Camera.Size> = supportedPreviewSizes.iterator()
            // Iterate over the remaining sizes
            while (iterator.hasNext()) {
                val next = iterator.next()
                Log.d(TAG, "Supported " + next.width + "x" + next.height)
                val n = Math.abs(next.height * next.width - width * height)
                if (n < m) {
                    m = n
                    size = next
                }
            }
            width = size.width
            height = size.height
            parameters.setPreviewSize(width, height)
            Log.d(TAG, "Preview resolution set to width:" + size.width + " height:" + size.height)
        }

        fun setPreviewDisplay(surfaceHolder: SurfaceHolder) {
            this.surfaceHolder = surfaceHolder
            this.surfaceHolder!!.addCallback(this)
        }

        fun setPreviewCallback(previewCallback: PreviewCallback) {
            this.previewCallback = previewCallback
        }

        override fun surfaceCreated(holder: SurfaceHolder) {}

        override fun surfaceChanged(holder: SurfaceHolder, format: Int, width: Int, height: Int) {
            // Release the camera
            stopPreview()
            // Reopen the camera
            startPreview()
        }

        override fun surfaceDestroyed(holder: SurfaceHolder) {
            stopPreview()
        }

        override fun onPreviewFrame(data: ByteArray, camera: Camera) {
            when (rotation) {
                // Portrait: rotate the frame 90 degrees into `bytes`
                Surface.ROTATION_0 -> rotation90(data)
                // Landscape: pass the frame through unrotated (ROTATION_270 may appear upside down)
                else -> System.arraycopy(data, 0, bytes!!, 0, data.size)
            }
            previewCallback!!.onPreviewFrame(bytes, camera)
            camera.addCallbackBuffer(buffer)
        }

        private fun rotation90(data: ByteArray) {
            var index = 0
            val ySize = width * height
            // Number of rows in the interleaved VU plane
            val uvHeight = height / 2
            if (cameraId == CameraInfo.CAMERA_FACING_BACK) {
                // Back camera: rotate 90 degrees clockwise.
                // Rotate the Y plane into the new byte array
                for (i in 0 until width) {
                    for (j in height - 1 downTo 0) {
                        bytes!![index++] = data[width * j + i]
                    }
                }
                // Process the VU plane two bytes at a time
                var i = 0
                while (i < width) {
                    for (j in uvHeight - 1 downTo 0) {
                        // v
                        bytes!![index++] = data[ySize + width * j + i]
                        // u
                        bytes!![index++] = data[ySize + width * j + i + 1]
                    }
                    i += 2
                }
            } else {
                // Front camera: rotate 90 degrees counterclockwise
                for (i in 0 until width) {
                    var nPos = width - 1
                    for (j in 0 until height) {
                        bytes!![index++] = data[nPos - i]
                        nPos += width
                    }
                }
                // v, u
                var i = 0
                while (i < width) {
                    var nPos = ySize + width - 1
                    for (j in 0 until uvHeight) {
                        bytes!![index++] = data[nPos - i - 1]
                        bytes!![index++] = data[nPos - i]
                        nPos += width
                    }
                    i += 2
                }
            }
        }

        fun setOnChangedSizeListener(listener: OnChangedSizeListener) {
            onChangedSizeListener = listener
        }

        fun release() {
            surfaceHolder!!.removeCallback(this)
            stopPreview()
        }

        interface OnChangedSizeListener {
            fun onChanged(w: Int, h: Int)
        }

        companion object {
            private const val TAG = "CameraHelper"
        }
    }


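This class implements the camera preview using the Android system camera. That is the `android.hardware.Camera` API, which has been deprecated since API level 21 in favor of camera2. The class supports switching between the front and back cameras and rotating the image. Preview frames arrive as NV21. In portrait (`ROTATION_0`) they are rotated 90 degrees before being handed to the preview callback.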
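The back-camera branch of `rotation90()` is easier to follow when its index mapping is isolated. Below is a minimal C++ sketch of that same mapping. It assumes a tightly packed NV21 buffer: a Y plane of width×height bytes followed by an interleaved VU plane of height/2 rows. It also assumes even dimensions. The function name `rotateNv21Clockwise` is hypothetical. It mirrors the Kotlin loops and is not a library API.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Rotate an NV21 frame 90 degrees clockwise using the same index mapping as the
// back-camera branch of CameraHelper.rotation90(). The result is height x width.
std::vector<uint8_t> rotateNv21Clockwise(const std::vector<uint8_t>& src, int width, int height) {
    assert(width % 2 == 0 && height % 2 == 0);
    const int ySize = width * height;
    assert(static_cast<int>(src.size()) == ySize * 3 / 2);
    std::vector<uint8_t> dst(src.size());
    int index = 0;
    // Y plane: each source column, read bottom-up, becomes one destination row.
    for (int i = 0; i < width; ++i) {
        for (int j = height - 1; j >= 0; --j) {
            dst[index++] = src[width * j + i];
        }
    }
    // VU plane: move each (V, U) pair together so the chroma stays interleaved.
    const int uvHeight = height / 2;
    for (int i = 0; i < width; i += 2) {
        for (int j = uvHeight - 1; j >= 0; --j) {
            dst[index++] = src[ySize + width * j + i];      // V
            dst[index++] = src[ySize + width * j + i + 1];  // U
        }
    }
    return dst;
}
```

For example, a 4x2 frame whose Y rows are `1 2 3 4` and `5 6 7 8` comes out 2 pixels wide with Y rows `5 1`, `6 2`, `7 3`, `8 4`. The front-camera branch rotates counterclockwise instead, so it would need a separate variant.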

AudioChannel.kt

    package site.doramusic.app.live

    import android.media.AudioFormat
    import android.media.AudioRecord
    import android.media.MediaRecorder
    import java.util.concurrent.ExecutorService
    import java.util.concurrent.Executors

    class AudioChannel(private val livePusher: LivePusher) {

        private val inputSamples: Int
        private val executor: ExecutorService
        private val audioRecord: AudioRecord
        private val channels = 1
        // Written on the caller's thread and read on the recording thread
        @Volatile
        private var isLiving = false

        fun startLive() {
            isLiving = true
            executor.submit(AudioTask())
        }

        fun stopLive() {
            isLiving = false
        }

        fun release() {
            audioRecord.release()
        }

        internal inner class AudioTask : Runnable {
            override fun run() {
                // Start the recorder
                audioRecord.startRecording()
                val bytes = ByteArray(inputSamples)
                while (isLiving) {
                    val len = audioRecord.read(bytes, 0, bytes.size)
                    if (len > 0) {
                        // Send to the encoder
                        livePusher.native_pushAudio(bytes)
                    }
                }
                // Stop the recorder
                audioRecord.stop()
            }
        }

        init {
            executor = Executors.newSingleThreadExecutor()
            // Prepare the recorder to capture PCM data
            val channelConfig = if (channels == 2) {
                AudioFormat.CHANNEL_IN_STEREO
            } else {
                AudioFormat.CHANNEL_IN_MONO
            }
            livePusher.native_setAudioEncodeInfo(44100, channels)
            // 16-bit samples: 2 bytes each
            inputSamples = livePusher.inputSamples * 2
            // Minimum required buffer size, doubled
            val minBufferSize = AudioRecord.getMinBufferSize(
                44100, channelConfig, AudioFormat.ENCODING_PCM_16BIT
            ) * 2
            // Args: 1) microphone 2) sample rate 3) channel config 4) sample format 5) buffer size
            audioRecord = AudioRecord(
                MediaRecorder.AudioSource.MIC, 44100, channelConfig,
                AudioFormat.ENCODING_PCM_16BIT,
                if (minBufferSize > inputSamples) minBufferSize else inputSamples
            )
        }
    }


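In this class we implement audio sampling, i.e. recording. `AudioRecord` captures 16-bit PCM at 44.1 kHz on a background thread, and each buffer is passed to `native_pushAudio()` for encoding.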
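The buffer sizes in `AudioChannel` come down to simple PCM arithmetic. The sketch below reproduces it under the class's own assumption of 16-bit PCM, and the helper names are hypothetical. The real value of `inputSamples` is whatever `faacEncOpen()` reports for your faac build. The figure of 1024 samples used below is only an illustrative value.

```cpp
#include <algorithm>
#include <cassert>

// Bytes needed to hold `samples` 16-bit PCM samples (2 bytes each),
// mirroring `inputSamples = livePusher.inputSamples * 2` in AudioChannel.kt.
int pcmBytesForSamples(int samples) {
    assert(samples > 0);
    return samples * 2;
}

// AudioRecord buffer size chosen by AudioChannel.kt: twice the platform minimum,
// but never smaller than one encoder input frame.
int audioRecordBufferSize(int platformMinBufferSize, int inputBytes) {
    return std::max(platformMinBufferSize * 2, inputBytes);
}

// Milliseconds of audio covered by one encoder input frame.
double frameDurationMs(int samplesPerChannel, int sampleRateHz) {
    return samplesPerChannel * 1000.0 / sampleRateHz;
}
```

Take a mono 44.1 kHz stream with 1024 samples per read. Each `native_pushAudio()` call then carries 2048 bytes, which is roughly 23.2 ms of audio.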

VideoChannel.kt

    package site.doramusic.app.live

    import android.app.Activity
    import android.hardware.Camera
    import android.hardware.Camera.PreviewCallback
    import android.view.SurfaceHolder
    import site.doramusic.app.live.CameraHelper.OnChangedSizeListener

    class VideoChannel(
        private val livePusher: LivePusher,
        activity: Activity,
        width: Int,
        height: Int,
        private val bitrate: Int,
        private val fps: Int,
        cameraId: Int
    ) : PreviewCallback, OnChangedSizeListener {

        private val cameraHelper: CameraHelper
        private var isLiving = false

        fun setPreviewDisplay(surfaceHolder: SurfaceHolder) {
            cameraHelper.setPreviewDisplay(surfaceHolder)
        }

        init {
            cameraHelper = CameraHelper(activity, cameraId, width, height)
            cameraHelper.setPreviewCallback(this)
            cameraHelper.setOnChangedSizeListener(this)
        }

        /**
         * Receives NV21 data that has already been rotated.
         *
         * @param data
         * @param camera
         */
        override fun onPreviewFrame(data: ByteArray, camera: Camera) {
            if (isLiving) {
                livePusher.native_pushVideo(data)
            }
        }

        fun switchCamera() {
            cameraHelper.switchCamera()
        }

        /**
         * Actual width and height of the camera data.
         *
         * @param w
         * @param h
         */
        override fun onChanged(w: Int, h: Int) {
            // Initialize the encoder
            livePusher.native_setVideoEncInfo(w, h, fps, bitrate)
        }

        fun startLive() {
            isLiving = true
        }

        fun stopLive() {
            isLiving = false
        }

        fun release() {
            cameraHelper.release()
        }
    }


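This class handles the control flow for video encoding. It forwards the rotated NV21 preview frames to the native encoder only while the stream is live. It also (re)initializes the encoder whenever the camera reports its actual frame size.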
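`VideoChannel` passes the camera's actual dimensions to `native_setVideoEncInfo()`, together with the `fps` and `bitrate` it was constructed with. The native side turns those values into x264 settings. The sketch below reproduces that arithmetic in plain C++ so the numbers are easy to check. The `EncoderSettings` struct and `deriveEncoderSettings()` are hypothetical and do not call x264.

```cpp
#include <cassert>

// Hypothetical container for the values VideoChannel::setVideoEncInfo() derives.
struct EncoderSettings {
    int ySize;          // bytes in the Y plane
    int uvSize;         // bytes in each of the U and V planes (4:2:0)
    int bitrateKbps;    // param.rc.i_bitrate
    int vbvMaxKbps;     // param.rc.i_vbv_max_bitrate
    int vbvBufferKbit;  // param.rc.i_vbv_buffer_size
    int keyintMax;      // param.i_keyint_max (one keyframe every 2 s)
};

EncoderSettings deriveEncoderSettings(int width, int height, int fps, int bitrateBps) {
    assert(width > 0 && height > 0 && fps > 0 && bitrateBps >= 1000);
    EncoderSettings s{};
    s.ySize = width * height;
    s.uvSize = s.ySize / 4;
    s.bitrateKbps = bitrateBps / 1000;
    // Same expression as the native code: integer kbps times 1.2, truncated to int
    s.vbvMaxKbps = static_cast<int>(bitrateBps / 1000 * 1.2);
    s.vbvBufferKbit = bitrateBps / 1000;
    s.keyintMax = fps * 2;
    return s;
}
```

In portrait (`ROTATION_0`), `CameraHelper` reports `(height, width)`. A 640x480 preview therefore reaches the encoder as 480x640. At 25 fps and 800000 bps this gives an 800 kbps target, a 960 kbps VBV ceiling and a keyframe at least every 50 frames.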

LivePusher.kt

    package site.doramusic.app.live

    import android.app.Activity
    import android.view.SurfaceHolder

    /**
     * Live stream pusher.
     */
    class LivePusher(
        activity: Activity,
        width: Int,
        height: Int,
        bitrate: Int,
        fps: Int,
        cameraId: Int
    ) {
        /**
         * Audio encoding channel.
         */
        private val audioChannel: AudioChannel

        /**
         * Video encoding channel.
         */
        private val videoChannel: VideoChannel

        val inputSamples: Int
            external get

        companion object {
            init {
                System.loadLibrary("doralive")
            }
        }

        init {
            native_init()
            videoChannel = VideoChannel(this, activity, width, height, bitrate, fps, cameraId)
            audioChannel = AudioChannel(this)
        }

        // Non-null, because VideoChannel.setPreviewDisplay() does not accept null
        fun setPreviewDisplay(surfaceHolder: SurfaceHolder) {
            videoChannel.setPreviewDisplay(surfaceHolder)
        }

        /**
         * Switch between the front and back cameras.
         */
        fun switchCamera() {
            videoChannel.switchCamera()
        }

        fun startLive(path: String?) {
            native_start(path)
            videoChannel.startLive()
            audioChannel.startLive()
        }

        fun stopLive() {
            videoChannel.stopLive()
            audioChannel.stopLive()
            native_stop()
        }

        fun release() {
            videoChannel.release()
            audioChannel.release()
            native_release()
        }

        external fun native_init()
        external fun native_start(path: String?)
        external fun native_setVideoEncInfo(width: Int, height: Int, fps: Int, bitrate: Int)
        external fun native_setAudioEncodeInfo(sampleRateInHz: Int, channels: Int)
        external fun native_pushVideo(data: ByteArray?)
        external fun native_stop()
        external fun native_release()
        external fun native_pushAudio(data: ByteArray?)
    }


Finally, the `LivePusher` class ties the audio and video tracks together.

Native layer

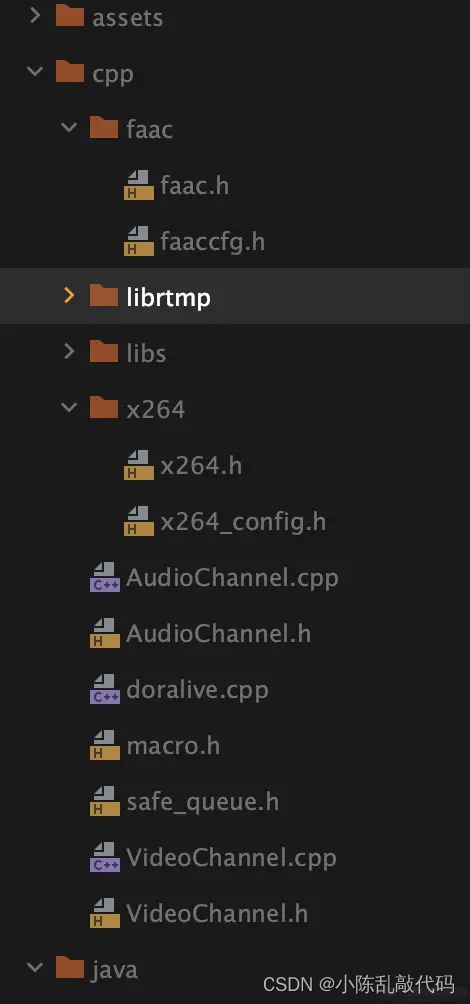

First, a look at the rough directory structure. The complete native-layer code follows.

NDK directory structure

Main code

AudioChannel.h

    #ifndef PUSHER_AUDIO_CHANNEL_H
    #define PUSHER_AUDIO_CHANNEL_H

    #include "librtmp/rtmp.h"
    #include "faac/faac.h"
    #include <sys/types.h>

    class AudioChannel {
        typedef void (*AudioCallback)(RTMPPacket *packet);

    public:
        AudioChannel();

        ~AudioChannel();

        void setAudioEncInfo(int samplesInHZ, int channels);

        void setAudioCallback(AudioCallback audioCallback);

        int getInputSamples();

        void encodeData(int8_t *data);

        RTMPPacket* getAudioTag();

    private:
        AudioCallback m_audioCallback;
        int m_channels;
        faacEncHandle m_audioCodec = 0;
        u_long m_inputSamples;
        u_long m_maxOutputBytes;
        u_char *m_buffer = 0;
    };

    #endif //PUSHER_AUDIO_CHANNEL_H


AudioChannel.cpp

    #include <cstdlib>
    #include <cstring>
    #include "AudioChannel.h"
    #include "macro.h"

    AudioChannel::AudioChannel() {
    }

    AudioChannel::~AudioChannel() {
        // m_buffer was allocated with new[], so it must be freed with delete[]
        delete[] m_buffer;
        m_buffer = 0;
        // Close the encoder
        if (m_audioCodec) {
            faacEncClose(m_audioCodec);
            m_audioCodec = 0;
        }
    }

    void AudioChannel::setAudioCallback(AudioCallback audioCallback) {
        this->m_audioCallback = audioCallback;
    }

    void AudioChannel::setAudioEncInfo(int samplesInHZ, int channels) {
        // Open the encoder
        m_channels = channels;
        // Arg 3 (out): max number of samples fed to the encoder per call (one sample = 16 bits = 2 bytes)
        // Arg 4 (out): max possible output size, in bytes, after encoding
        m_audioCodec = faacEncOpen(samplesInHZ, channels, &m_inputSamples, &m_maxOutputBytes);
        // Configure the encoder
        faacEncConfigurationPtr config = faacEncGetCurrentConfiguration(m_audioCodec);
        // MPEG-4 standard
        config->mpegVersion = MPEG4;
        // AAC-LC (Low Complexity) profile
        config->aacObjectType = LOW;
        // 16-bit input
        config->inputFormat = FAAC_INPUT_16BIT;
        // Output raw AAC: neither ADTS nor ADIF
        config->outputFormat = 0;
        faacEncSetConfiguration(m_audioCodec, config);
        // Output buffer that holds the encoded data
        m_buffer = new u_char[m_maxOutputBytes];
    }

    int AudioChannel::getInputSamples() {
        return m_inputSamples;
    }

    RTMPPacket *AudioChannel::getAudioTag() {
        u_char *buf;
        u_long len;
        // AudioSpecificConfig, sent once as the AAC sequence header
        faacEncGetDecoderSpecificInfo(m_audioCodec, &buf, &len);
        int bodySize = 2 + len;
        RTMPPacket *packet = new RTMPPacket;
        RTMPPacket_Alloc(packet, bodySize);
        // 0xAF: AAC, 44 kHz, 16-bit, stereo; 0xAE: the same but mono
        packet->m_body[0] = 0xAF;
        if (m_channels == 1) {
            packet->m_body[0] = 0xAE;
        }
        // 0x00: AAC sequence header
        packet->m_body[1] = 0x00;
        // Decoder-specific info (AudioSpecificConfig)
        memcpy(&packet->m_body[2], buf, len);
        // faac allocates this buffer with malloc(); the caller frees it
        free(buf);
        packet->m_hasAbsTimestamp = 0;
        packet->m_nBodySize = bodySize;
        packet->m_packetType = RTMP_PACKET_TYPE_AUDIO;
        packet->m_nChannel = 0x11;
        packet->m_headerType = RTMP_PACKET_SIZE_LARGE;
        return packet;
    }

    void AudioChannel::encodeData(int8_t *data) {
        // Returns the length in bytes of the encoded data
        int bytelen = faacEncEncode(m_audioCodec, reinterpret_cast<int32_t *>(data), m_inputSamples,
                                    m_buffer, m_maxOutputBytes);
        if (bytelen > 0) {
            int bodySize = 2 + bytelen;
            RTMPPacket *packet = new RTMPPacket;
            RTMPPacket_Alloc(packet, bodySize);
            // 0xAF: stereo; 0xAE: mono
            packet->m_body[0] = 0xAF;
            if (m_channels == 1) {
                packet->m_body[0] = 0xAE;
            }
            // Encoded audio frames always use 0x01 (raw AAC)
            packet->m_body[1] = 0x01;
            // Encoded AAC data
            memcpy(&packet->m_body[2], m_buffer, bytelen);
            packet->m_hasAbsTimestamp = 0;
            packet->m_nBodySize = bodySize;
            packet->m_packetType = RTMP_PACKET_TYPE_AUDIO;
            packet->m_nChannel = 0x11;
            packet->m_headerType = RTMP_PACKET_SIZE_LARGE;
            m_audioCallback(packet);
        }
    }


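That is the audio encoding implementation. Both audio and video encoding ultimately store their data in the `RTMPPacket` struct. For audio encoding we use the faac library.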
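The two magic bytes at the start of each audio packet follow the FLV `AUDIODATA` layout:

- SoundFormat: 4 bits, 10 = AAC.
- SoundRate: 2 bits, 3 = 44 kHz.
- SoundSize: 1 bit, 1 = 16-bit.
- SoundType: 1 bit, 0 = mono, 1 = stereo.
- AACPacketType: the second byte, 0 = sequence header, 1 = raw.

The sketch below derives 0xAF/0xAE from these fields. It also packs a 2-byte AAC AudioSpecificConfig, which is the kind of data `faacEncGetDecoderSpecificInfo()` returns: object type (5 bits), sampling-frequency index (4 bits, 4 = 44100 Hz) and channel configuration (4 bits). The helper names are hypothetical; in the app, the real config bytes come from faac. Note also that for AAC the FLV specification expects decoders to take the real rate and channel count from the AudioSpecificConfig rather than from the header byte.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// First byte of an FLV AUDIODATA body:
// SoundFormat(4) | SoundRate(2) | SoundSize(1) | SoundType(1)
uint8_t flvAudioHeaderByte(int channels) {
    const int soundFormatAac = 10;               // AAC
    const int soundRate44k = 3;                  // 44 kHz
    const int soundSize16 = 1;                   // 16-bit samples
    const int soundType = channels == 1 ? 0 : 1; // 0 = mono, 1 = stereo
    return static_cast<uint8_t>((soundFormatAac << 4) | (soundRate44k << 2) |
                                (soundSize16 << 1) | soundType);
}

// 2-byte AudioSpecificConfig: objectType(5) | freqIndex(4) | channelConfig(4) | three 0 bits
std::vector<uint8_t> aacAudioSpecificConfig(int objectType, int freqIndex, int channels) {
    assert(objectType < 32 && freqIndex < 16 && channels < 16);
    const uint16_t bits =
        static_cast<uint16_t>((objectType << 11) | (freqIndex << 7) | (channels << 3));
    return {static_cast<uint8_t>(bits >> 8), static_cast<uint8_t>(bits & 0xFF)};
}

// Body of the AAC sequence header packet: header byte, AACPacketType 0x00, config
std::vector<uint8_t> aacSequenceHeaderBody(int channels) {
    std::vector<uint8_t> body;
    body.push_back(flvAudioHeaderByte(channels));
    body.push_back(0x00);
    // objectType 2 = AAC-LC, freqIndex 4 = 44100 Hz
    const std::vector<uint8_t> asc = aacAudioSpecificConfig(2, 4, channels);
    body.insert(body.end(), asc.begin(), asc.end());
    return body;
}
```

For AAC-LC at 44.1 kHz, this yields the config bytes `12 10` for stereo and `12 08` for mono.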

VideoChannel.h

    #ifndef PUSHER_VIDEO_CHANNEL_H
    #define PUSHER_VIDEO_CHANNEL_H

    #include <inttypes.h>
    #include "x264/x264.h"
    #include <pthread.h>
    #include "librtmp/rtmp.h"

    class VideoChannel {
        typedef void (*VideoCallback)(RTMPPacket* packet);

    public:
        VideoChannel();

        ~VideoChannel();

        // Create the x264 encoder
        void setVideoEncInfo(int width, int height, int fps, int bitrate);

        void encodeData(int8_t *data);

        void setVideoCallback(VideoCallback videoCallback);

    private:
        pthread_mutex_t m_mutex;
        int m_width;
        int m_height;
        int m_fps;
        int m_bitrate;
        x264_t *m_videoCodec = 0;
        x264_picture_t *m_picIn = 0;
        int m_ySize;
        int m_uvSize;
        VideoCallback m_videoCallback;

        void sendSpsPps(uint8_t *sps, uint8_t *pps, int sps_len, int pps_len);

        void sendFrame(int type, uint8_t *payload, int i_payload);
    };

    #endif //PUSHER_VIDEO_CHANNEL_H


VideoChannel.cpp

    #include "VideoChannel.h"
    #include "librtmp/rtmp.h"
    #include "macro.h"
    #include "string.h"

    VideoChannel::VideoChannel() {
        pthread_mutex_init(&m_mutex, 0);
    }

    VideoChannel::~VideoChannel() {
        pthread_mutex_destroy(&m_mutex);
        if (m_videoCodec) {
            x264_encoder_close(m_videoCodec);
            m_videoCodec = 0;
        }
        if (m_picIn) {
            x264_picture_clean(m_picIn);
            DELETE(m_picIn);
        }
    }

    void VideoChannel::setVideoEncInfo(int width, int height, int fps, int bitrate) {
        pthread_mutex_lock(&m_mutex);
        m_width = width;
        m_height = height;
        m_fps = fps;
        m_bitrate = bitrate;
        m_ySize = width * height;
        m_uvSize = m_ySize / 4;
        if (m_videoCodec) {
            x264_encoder_close(m_videoCodec);
            m_videoCodec = 0;
        }
        if (m_picIn) {
            x264_picture_clean(m_picIn);
            DELETE(m_picIn);
        }
        // Open the x264 encoder
        // x264 encoder parameters
        x264_param_t param;
        // Arg 2: fastest preset; Arg 3: zero-latency tuning
        x264_param_default_preset(&param, "ultrafast", "zerolatency");
        // Level 3.2 (the baseline profile is applied below)
        param.i_level_idc = 32;
        // Input data format
        param.i_csp = X264_CSP_I420;
        param.i_width = width;
        param.i_height = height;
        // No B-frames
        param.i_bframe = 0;
        // Rate control: CQP (constant QP), CRF (constant rate factor, i.e. constant quality), ABR (average bitrate)
        param.rc.i_rc_method = X264_RC_ABR;
        // Bitrate in kbps
        param.rc.i_bitrate = bitrate / 1000;
        // Maximum instantaneous bitrate
        param.rc.i_vbv_max_bitrate = bitrate / 1000 * 1.2;
        // Required once i_vbv_max_bitrate is set: VBV buffer size, in kbit
        param.rc.i_vbv_buffer_size = bitrate / 1000;
        // Frame rate
        param.i_fps_num = fps;
        param.i_fps_den = 1;
        param.i_timebase_den = param.i_fps_num;
        param.i_timebase_num = param.i_fps_den;
        // param.pf_log = x264_log_default2;
        // Use fps rather than timestamps to compute the distance between frames
        param.b_vfr_input = 0;
        // Keyframe interval: one keyframe every 2 s
        param.i_keyint_max = fps * 2;
        // Repeat SPS/PPS in front of every keyframe (I-frame)
        param.b_repeat_headers = 1;
        // Number of encoder threads
        param.i_threads = 1;
        x264_param_apply_profile(&param, "baseline");
        // Open the encoder
        m_videoCodec = x264_encoder_open(&param);
        m_picIn = new x264_picture_t;
        x264_picture_alloc(m_picIn, X264_CSP_I420, width, height);
        pthread_mutex_unlock(&m_mutex);
    }

    void VideoChannel::setVideoCallback(VideoCallback videoCallback) {
        this->m_videoCallback = videoCallback;
    }

    void VideoChannel::encodeData(int8_t *data) {
        pthread_mutex_lock(&m_mutex);
        // Y plane
        memcpy(m_picIn->img.plane[0], data, m_ySize);
        // NV21 interleaves chroma as VU; split it into separate U and V planes for I420
        for (int i = 0; i < m_uvSize; ++i) {
            // U
            *(m_picIn->img.plane[1] + i) = *(data + m_ySize + i * 2 + 1);
            // V
            *(m_picIn->img.plane[2] + i) = *(data + m_ySize + i * 2);
        }
        // Encoded NAL units
        x264_nal_t *pp_nal;
        // Number of NAL units produced
        int pi_nal;
        x264_picture_t pic_out;
        x264_encoder_encode(m_videoCodec, &pp_nal, &pi_nal, m_picIn, &pic_out);
        // A keyframe typically yields 3 NAL units: SPS, PPS and the IDR slice
        int sps_len;
        int pps_len;
        uint8_t sps[100];
        uint8_t pps[100];
        for (int i = 0; i < pi_nal; ++i) {
            if (pp_nal[i].i_type == NAL_SPS) {
                // Strip the H.264 start code 00 00 00 01
                sps_len = pp_nal[i].i_payload - 4;
                memcpy(sps, pp_nal[i].p_payload + 4, sps_len);
            } else if (pp_nal[i].i_type == NAL_PPS) {
                pps_len = pp_nal[i].i_payload - 4;
                memcpy(pps, pp_nal[i].p_payload + 4, pps_len);
                // The PPS always follows the SPS
                sendSpsPps(sps, pps, sps_len, pps_len);
            } else {
                sendFrame(pp_nal[i].i_type, pp_nal[i].p_payload, pp_nal[i].i_payload);
            }
        }
        pthread_mutex_unlock(&m_mutex);
    }

    void VideoChannel::sendSpsPps(uint8_t *sps, uint8_t *pps, int sps_len, int pps_len) {
        int bodySize = 13 + sps_len + 3 + pps_len;
        RTMPPacket *packet = new RTMPPacket;
        RTMPPacket_Alloc(packet, bodySize);
        int i = 0;
        // Fixed header: keyframe (1) + AVC (7)
        packet->m_body[i++] = 0x17;
        // AVCPacketType 0: sequence header
        packet->m_body[i++] = 0x00;
        // composition time 0x000000
        packet->m_body[i++] = 0x00;
        packet->m_body[i++] = 0x00;
        packet->m_body[i++] = 0x00;
        // configurationVersion
        packet->m_body[i++] = 0x01;
        // Profile, profile compatibility and level, copied from the SPS
        packet->m_body[i++] = sps[1];
        packet->m_body[i++] = sps[2];
        packet->m_body[i++] = sps[3];
        // 0xFF: NALU length fields are 4 bytes (lengthSizeMinusOne = 3)
        packet->m_body[i++] = 0xFF;
        // 0xE1: one SPS follows
        packet->m_body[i++] = 0xE1;
        // SPS length
        packet->m_body[i++] = (sps_len >> 8) & 0xff;
        packet->m_body[i++] = sps_len & 0xff;
        memcpy(&packet->m_body[i], sps, sps_len);
        i += sps_len;
        // PPS: count (1), length, data
        packet->m_body[i++] = 0x01;
        packet->m_body[i++] = (pps_len >> 8) & 0xff;
        packet->m_body[i++] = (pps_len) & 0xff;
        memcpy(&packet->m_body[i], pps, pps_len);
        // Video
        packet->m_packetType = RTMP_PACKET_TYPE_VIDEO;
        packet->m_nBodySize = bodySize;
        // Pick an arbitrary channel (avoid the ones used in rtmp.c)
        packet->m_nChannel = 10;
        // SPS/PPS carry no timestamp
        packet->m_nTimeStamp = 0;
        // Relative rather than absolute timestamp
        packet->m_hasAbsTimestamp = 0;
        packet->m_headerType = RTMP_PACKET_SIZE_MEDIUM;
        m_videoCallback(packet);
    }

    void VideoChannel::sendFrame(int type, uint8_t *payload, int i_payload) {
        // Strip the start code: 00 00 00 01 (4 bytes) or 00 00 01 (3 bytes)
        if (payload[2] == 0x00) {
            i_payload -= 4;
            payload += 4;
        } else {
            i_payload -= 3;
            payload += 3;
        }
        int bodySize = 9 + i_payload;
        RTMPPacket *packet = new RTMPPacket;
        RTMPPacket_Alloc(packet, bodySize);
        // 0x27: inter frame + AVC; 0x17: keyframe + AVC
        packet->m_body[0] = 0x27;
        if (type == NAL_SLICE_IDR) {
            packet->m_body[0] = 0x17;
            LOGE("keyframe");
        }
        // AVCPacketType 1: NALU
        packet->m_body[1] = 0x01;
        // Composition time offset (0: no B-frames)
        packet->m_body[2] = 0x00;
        packet->m_body[3] = 0x00;
        packet->m_body[4] = 0x00;
        // NALU length as a 4-byte int
        packet->m_body[5] = (i_payload >> 24) & 0xff;
        packet->m_body[6] = (i_payload >> 16) & 0xff;
        packet->m_body[7] = (i_payload >> 8) & 0xff;
        packet->m_body[8] = (i_payload) & 0xff;
        // NALU data
        memcpy(&packet->m_body[9], payload, i_payload);
        packet->m_hasAbsTimestamp = 0;
        packet->m_nBodySize = bodySize;
        packet->m_packetType = RTMP_PACKET_TYPE_VIDEO;
        packet->m_nChannel = 0x10;
        packet->m_headerType = RTMP_PACKET_SIZE_LARGE;
        m_videoCallback(packet);
    }


That is the video encoding implementation, which uses the x264 library. It relies on the concept of I, P and B frames, which I'll briefly explain.

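"IPB frames" is a video coding concept describing the frame types used in video compression. I stands for "Intra-frame", P for "Predictive frame" and B for "Bidirectional frame".

- Intra-frame (I-frame): I-frames are the key frames of a video sequence, also called intra-coded frames. Each I-frame is coded independently, without reference to any other frame, and contains the complete picture. I-frames typically appear at the start of a video or where the picture changes significantly. In H.264, a keyframe from which decoding can start is an IDR frame, which is what `sendFrame()` checks for with `NAL_SLICE_IDR`.

- Predictive frame (P-frame): P-frames are coded with reference to earlier frames and are also called inter-predicted frames. A P-frame stores only the differences relative to a preceding I- or P-frame, and the decoder uses that earlier frame to reconstruct it.

- Bidirectional frame (B-frame): B-frames are coded with reference to both earlier and later frames and are also called bi-predictive frames. Because they can predict from both sides, B-frames usually compress best. However, the encoder must wait for the later reference frame, which adds latency. This is why the x264 setup above sets `i_bframe = 0` for live streaming.

Combining I, P and B frames greatly reduces the amount of video data, which makes compression and transmission much more efficient.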
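In the FLV video tag that `sendFrame()` builds, the frame type from the I/P/B discussion shows up in the first byte. That byte holds FrameType (4 bits: 1 = keyframe, 2 = inter frame) followed by CodecID (4 bits: 7 = AVC), giving 0x17 for IDR frames and 0x27 otherwise. With B-frames, each tag would also need a non-zero composition time offset, the gap between presentation and decoding time. Without B-frames, the three CTS bytes can stay 0. Below is a library-free sketch of the same packing. `stripStartCode()` and `flvVideoNaluBody()` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Drop an Annex B start code (00 00 00 01 or 00 00 01), as sendFrame() does.
std::vector<uint8_t> stripStartCode(const std::vector<uint8_t>& nal) {
    if (nal.size() >= 4 && nal[0] == 0 && nal[1] == 0 && nal[2] == 0 && nal[3] == 1) {
        return std::vector<uint8_t>(nal.begin() + 4, nal.end());
    }
    if (nal.size() >= 3 && nal[0] == 0 && nal[1] == 0 && nal[2] == 1) {
        return std::vector<uint8_t>(nal.begin() + 3, nal.end());
    }
    return nal;  // no start code found
}

// FLV VIDEODATA body for one NAL unit:
// [FrameType|CodecID] [AVCPacketType = 1] [CTS: 3 bytes] [NALU length: 4 bytes] [NALU]
std::vector<uint8_t> flvVideoNaluBody(const std::vector<uint8_t>& annexBNal, bool keyframe) {
    const std::vector<uint8_t> nalu = stripStartCode(annexBNal);
    const uint32_t len = static_cast<uint32_t>(nalu.size());
    std::vector<uint8_t> body;
    body.reserve(9 + nalu.size());
    body.push_back(keyframe ? 0x17 : 0x27);  // 1 = keyframe / 2 = inter frame, 7 = AVC
    body.push_back(0x01);                    // AVCPacketType 1: NALU
    for (int k = 0; k < 3; ++k) {
        body.push_back(0x00);                // composition time 0 (no B-frames)
    }
    for (int shift = 24; shift >= 0; shift -= 8) {
        body.push_back(static_cast<uint8_t>((len >> shift) & 0xFF));  // big-endian length
    }
    body.insert(body.end(), nalu.begin(), nalu.end());
    return body;
}
```

Unlike `sendFrame()`, which looks only at `payload[2]`, this sketch checks the full start-code pattern. That makes it safe to call on data that has no start code at all.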

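Another concept here is SPS and PPS.

SPS (Sequence Parameter Set) and PPS (Picture Parameter Set) are two important structures in the H.264/AVC (Advanced Video Coding) standard. They describe the parameters of a video sequence and of its pictures.

- SPS (Sequence Parameter Set):
    - The SPS carries parameters describing the video sequence, such as picture size, frame rate and color space. The latter two live in the optional VUI section.
    - The SPS describes parameters shared by the whole sequence; a sequence usually has just one SPS.
    - The SPS is sent when encoding starts and stays in effect for the whole sequence until the encoder sends a new one.
- PPS (Picture Parameter Set):
    - The PPS carries picture-level parameters, such as the entropy coding mode and the initial quantization parameter.
    - The slices of each picture refer to a PPS by its ID. Many pictures usually share the same PPS.
    - The PPS provides picture-specific parameters, letting the encoder configure different pictures flexibly.

Introducing these two parameter sets in H.264/AVC makes encoding and decoding more flexible and efficient. SPS and PPS are usually sent along with the stream so that the decoder can parse and decode the video data correctly. In this project, `b_repeat_headers = 1` makes x264 emit them in front of every keyframe, and `sendSpsPps()` forwards them as an AVC sequence header.

All of this falls within the realm of audio/video encoding and decoding.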
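`sendSpsPps()` wraps the SPS and PPS in an FLV AVC sequence header whose payload is an AVCDecoderConfigurationRecord. The sketch below builds the same 13 + sps_len + 3 + pps_len bytes from raw SPS/PPS NAL units with their start codes already stripped. It reads the NAL unit type from the low 5 bits of the first byte (5 = IDR slice, 7 = SPS, 8 = PPS). The function names are hypothetical, and nothing here calls librtmp.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// nal_unit_type is the low 5 bits of the first NAL byte: 5 = IDR slice, 7 = SPS, 8 = PPS.
int nalUnitType(uint8_t firstByte) {
    return firstByte & 0x1F;
}

// Append a 16-bit big-endian length.
void appendLength16(std::vector<uint8_t>& out, size_t len) {
    out.push_back(static_cast<uint8_t>((len >> 8) & 0xFF));
    out.push_back(static_cast<uint8_t>(len & 0xFF));
}

// Same byte layout as VideoChannel::sendSpsPps().
std::vector<uint8_t> avcSequenceHeaderBody(const std::vector<uint8_t>& sps,
                                           const std::vector<uint8_t>& pps) {
    assert(sps.size() >= 4 && nalUnitType(sps[0]) == 7);
    assert(!pps.empty() && nalUnitType(pps[0]) == 8);
    std::vector<uint8_t> body = {
        0x17,                    // keyframe + AVC
        0x00,                    // AVCPacketType 0: sequence header
        0x00, 0x00, 0x00,        // composition time
        0x01,                    // configurationVersion
        sps[1], sps[2], sps[3],  // profile, profile compatibility, level
        0xFF,                    // reserved bits + lengthSizeMinusOne = 3 (4-byte lengths)
        0xE1,                    // reserved bits + numOfSequenceParameterSets = 1
    };
    appendLength16(body, sps.size());
    body.insert(body.end(), sps.begin(), sps.end());
    body.push_back(0x01);        // numOfPictureParameterSets = 1
    appendLength16(body, pps.size());
    body.insert(body.end(), pps.begin(), pps.end());
    return body;
}
```

For example, a 5-byte SPS and a 4-byte PPS produce a 25-byte body, matching `bodySize = 13 + sps_len + 3 + pps_len` in the native code.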

doralive.cpp

    #include <jni.h>
    #include <string>
    #include <cstring>
    #include "librtmp/rtmp.h"
    #include "safe_queue.h"
    #include "macro.h"
    #include "VideoChannel.h"
    #include "AudioChannel.h"

    SafeQueue<RTMPPacket *> packets;
    VideoChannel *videoChannel = 0;
    int isStart = 0;
    pthread_t pid;
    int readyPushing = 0;
    uint32_t start_time;
    AudioChannel *audioChannel = 0;

    void releasePackets(RTMPPacket *&packet) {
        if (packet) {
            RTMPPacket_Free(packet);
            delete packet;
            packet = 0;
        }
    }

    void callback(RTMPPacket *packet) {
        if (packet) {
            // Timestamp relative to the start of the stream
            packet->m_nTimeStamp = RTMP_GetTime() - start_time;
            packets.push(packet);
        }
    }

    extern "C"
    JNIEXPORT void JNICALL
    Java_site_doramusic_app_live_LivePusher_native_1init(JNIEnv *env, jobject instance) {
        // Helper classes that drive the video and audio encoders
        videoChannel = new VideoChannel;
        videoChannel->setVideoCallback(callback);
        audioChannel = new AudioChannel;
        audioChannel->setAudioCallback(callback);
        // Packaged packets go into a queue; a worker thread takes them out and sends them to the server
        packets.setReleaseCallback(releasePackets);
    }

    extern "C"
    JNIEXPORT void JNICALL
    Java_site_doramusic_app_live_LivePusher_native_1setVideoEncInfo(JNIEnv *env, jobject instance,
                                                                     jint width, jint height,
                                                                     jint fps, jint bitrate) {
        if (videoChannel) {
            videoChannel->setVideoEncInfo(width, height, fps, bitrate);
        }
    }

    void *start(void *args) {
        char *url = static_cast<char *>(args);
        RTMP *rtmp = 0;
        do {
            rtmp = RTMP_Alloc();
            if (!rtmp) {
                LOGE("Failed to allocate RTMP");
                break;
            }
            RTMP_Init(rtmp);
            int ret = RTMP_SetupURL(rtmp, url);
            if (!ret) {
                LOGE("Failed to set URL: %s", url);
                break;
            }
            // 5 s timeout
            rtmp->Link.timeout = 5;
            RTMP_EnableWrite(rtmp);
            ret = RTMP_Connect(rtmp, 0);
            if (!ret) {
                LOGE("Failed to connect to server: %s", url);
                break;
            }
            ret = RTMP_ConnectStream(rtmp, 0);
            if (!ret) {
                LOGE("Failed to connect stream: %s", url);
                break;
            }
            // Record the start time
            start_time = RTMP_GetTime();
            // Ready to push
            readyPushing = 1;
            packets.setWork(1);
            // Make sure the first packet is the AAC sequence header
            callback(audioChannel->getAudioTag());
            RTMPPacket *packet = 0;
            while (readyPushing) {
                packets.pop(packet);
                if (!readyPushing) {
                    break;
                }
                if (!packet) {
                    continue;
                }
                packet->m_nInfoField2 = rtmp->m_stream_id;
                // Send the RTMP packet (third argument 1 = queue)
                // If the network drops and sending fails, rtmpdump calls RTMP_Close internally;
                // RTMP_Close calls RTMP_SendPacket, which in turn calls RTMP_Close again.
                // Workaround: comment out the RTMP_Close call in WriteN() in rtmp.c
                ret = RTMP_SendPacket(rtmp, packet, 1);
                releasePackets(packet);
                if (!ret) {
                    LOGE("Send failed");
                    break;
                }
            }
            releasePackets(packet);
        } while (0);
        isStart = 0;
        readyPushing = 0;
        packets.setWork(0);
        packets.clear();
        if (rtmp) {
            RTMP_Close(rtmp);
            RTMP_Free(rtmp);
        }
        // url was allocated with new[] in native_start
        delete[] url;
        return 0;
    }

    extern "C"
    JNIEXPORT void JNICALL
    Java_site_doramusic_app_live_LivePusher_native_1start(JNIEnv *env, jobject instance,
                                                          jstring path_) {
        if (isStart) {
            return;
        }
        isStart = 1;
        const char *path = env->GetStringUTFChars(path_, 0);
        char *url = new char[strlen(path) + 1];
        strcpy(url, path);
        pthread_create(&pid, 0, start, url);
        env->ReleaseStringUTFChars(path_, path);
    }

    extern "C"
    JNIEXPORT void JNICALL
    Java_site_doramusic_app_live_LivePusher_native_1pushVideo(JNIEnv *env, jobject instance,
                                                              jbyteArray data_) {
        if (!videoChannel || !readyPushing) {
            return;
        }
        jbyte *data = env->GetByteArrayElements(data_, NULL);
        videoChannel->encodeData(data);
        env->ReleaseByteArrayElements(data_, data, 0);
    }

    extern "C"
    JNIEXPORT void JNICALL
    Java_site_doramusic_app_live_LivePusher_native_1stop(JNIEnv *env, jobject instance) {
        readyPushing = 0;
        // Stop the queue
        packets.setWork(0);
        pthread_join(pid, 0);
    }

    extern "C"
    JNIEXPORT void JNICALL
    Java_site_doramusic_app_live_LivePusher_native_1release(JNIEnv *env, jobject instance) {
        DELETE(videoChannel);
        DELETE(audioChannel);
    }

    extern "C"
    JNIEXPORT void JNICALL
    Java_site_doramusic_app_live_LivePusher_native_1setAudioEncodeInfo(JNIEnv *env, jobject instance,
                                                                       jint sampleRateInHz,
                                                                       jint channels) {
        if (audioChannel) {
            audioChannel->setAudioEncInfo(sampleRateInHz, channels);
        }
    }

    extern "C"
    JNIEXPORT jint JNICALL
    Java_site_doramusic_app_live_LivePusher_getInputSamples(JNIEnv *env, jobject instance) {
        if (audioChannel) {
            return audioChannel->getInputSamples();
        }
        return -1;
    }

    extern "C"
    JNIEXPORT void JNICALL
    Java_site_doramusic_app_live_LivePusher_native_1pushAudio(JNIEnv *env, jobject instance,
                                                              jbyteArray data_) {
        if (!audioChannel || !readyPushing) {
            return;
        }
        jbyte *data = env->GetByteArrayElements(data_, NULL);
        audioChannel->encodeData(data);
        env->ReleaseByteArrayElements(data_, data, 0);
    }


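The `_1` in the function names is the JNI escape for the `_` in the Java method name. Finally, a POSIX thread (pthread) takes packets off the queue and sends them to the server over RTMP, which completes the push.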
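The `_1` comes from the JNI rules for mapping Java names to native symbols. The short name is `Java_` + the fully qualified class name + `_` + the method name, with the escapes below applied. The hypothetical helper that follows applies these rules to ASCII names of non-overloaded methods, so you can check a symbol before typing it by hand.

- The package separators `.` and `/` become `_`.
- A literal `_` becomes `_1`.
- `;` becomes `_2` and `[` becomes `_3`.
- Non-ASCII characters become `_0xxxx`; this sketch deliberately does not handle that case.

```cpp
#include <cassert>
#include <string>

// Escape a class path or method name per the JNI short-name rules (ASCII only).
std::string jniEscape(const std::string& name) {
    std::string out;
    for (unsigned char c : name) {
        assert(c < 0x80 && "non-ASCII names need the _0xxxx escape, not handled here");
        if (c == '.' || c == '/') {
            out += '_';  // package separator
        } else if (c == '_') {
            out += "_1";
        } else if (c == ';') {
            out += "_2";
        } else if (c == '[') {
            out += "_3";
        } else {
            out += static_cast<char>(c);
        }
    }
    return out;
}

// Short native symbol for a non-overloaded method.
std::string jniShortName(const std::string& className, const std::string& methodName) {
    return "Java_" + jniEscape(className) + "_" + jniEscape(methodName);
}
```

The Kotlin property `inputSamples` is declared with `external get`, so it compiles to a getter named `getInputSamples`. That name contains no underscore, which is why its native symbol has no `_1`.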

CMakeLists.txt

    cmake_minimum_required(VERSION 3.4.1)

    # Pull in the CMakeLists.txt from the given directory
    add_subdirectory(src/main/cpp/librtmp)

    add_library(
            doralive
            SHARED
            src/main/cpp/doralive.cpp
            src/main/cpp/VideoChannel.cpp
            src/main/cpp/AudioChannel.cpp)

    include_directories(src/main/cpp/faac src/main/cpp/x264)

    set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -L${CMAKE_SOURCE_DIR}/src/main/cpp/libs/${ANDROID_ABI}")

    target_link_libraries(
            doralive
            rtmp
            x264
            faac
            log)


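Summary

That concludes a complete solution for pushing and pulling a live stream. If things still feel hazy, it is worth studying how to use Linux systematically. If the native-layer code makes your head spin, start by learning C++, JNI and audio/video encoding and decoding.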

If you've read this far and found the article useful, a like would be appreciated.

Writing code isn't easy, so please consider following me. ღ( ´・ᴗ・` )