- 1FPGA的基础知识_fpga基础

- 2【Redis】什么是Redis缓存 雪崩、穿透、击穿?(一篇文章就够了)_redis雪崩

- 3Linux虚拟机设置静态ip之后不能ping通外网_linux设置静态ip后无法ping

- 4Java毕业设计作品(22):基于thymeleaf前后端分离 医院预约挂号系统设计与实现

- 5mysql 双主复制_配置步骤、遇到的问题及解决办法_mysql双主复制

- 6Spring MVC框架详解_springmvc框架搭建及详解

- 7整理*软件测试的方法一共有几种_测试方法有哪几种

- 8Python Selenium常见的报错以及措施_selenium报错

- 9python串口通信的接收与发送_python串口编程整理(更新完)

- 10【前端笔记】js简单代码实现贪吃蛇~~~_js贪吃蛇代码截图

MySQL集群高可用架构之MySQL InnoDB Cluste_mysql innodb cluster

赞

踩

今天我将详细的为大家介绍Centos 7.5 基于 MySQL 5.7的 InnoDB Cluster 多节点高可用集群环境部署的相关知识,希望大家能够从中收获多多!如有帮助,请点在看、转发支持一波!!!

一、MySQL InnoDB Cluster 介绍

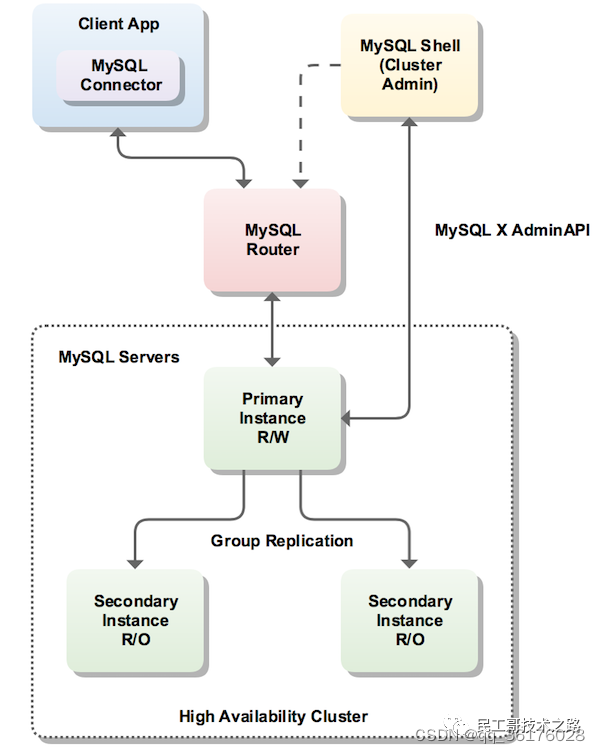

MySQL的高可用架构无论是社区还是官方,一直在技术上进行探索,这么多年提出了多种解决方案,比如 MMM, MHA, NDB Cluster, Galera Cluster, InnoDB Cluster, 腾讯的PhxSQL, MySQL Fabric., aliSQL。MySQL官方在2017年4月推出了一套完整的、高可用的Mysql解决方案 - MySQL InnoDB Cluster, 即一组MySQL服务器可以配置为一个MySQL集群。在默认的单主节点模式下,集群服务器具有一个读写主节点和多个只读辅节点。辅助服务器是主服务器的副本。客户端应用程序通过MySQL Router连接到主服务程序。如果主服务连接失败,则次要的节点自动提升为主节点,MySQL Router请求到新的主节点。InnoDB Cluster不提供NDB Cluster支持。

1、分布式MySQL之InnoDB和NDB

分布式MySQL主要有InnoDB和NDB模式, NDB是基于集群的引擎-数据被自动切分并复制到数个机器上(数据节点), 适合于那些需要极高查询性能和高可用性的应用, 原来是为爱立信的电信应用设计的。 NDB提供了高达99.999%的可靠性,在读操作多的应用中表现优异。 对于有很多并发写操作的应用, 还是推荐用InnoDB。

========== NDB和InnoDB存储引擎之间的特性差异 ==========

1.1 InnoDB(MySQL 5.7)特性:

-

InnoDB版本:InnoDB 5.7.20;

-

NDB Cluster版本:不支持;

-

大存储长度:64TB;

-

事物:所有标准事物类型;

-

多版本并发控制:支持;

-

数据压缩:支持;

-

大行支:VARBINARY、VARCHAR、BLOB;

-

同步支持:半同步、异步;

-

块读取:支持;

-

块写入:需要使用水平分区;

-

高可用性:高;

1.2 NDB 7.5/7.6特性:

-

InnoDB版本:InnoDB 5.7.20;

-

NDB Cluster版本: NDB 7.5.8/7.6.4;

-

大存储长度:128TB;

-

事物:读提交;

-

多版本并发控制:不支持;

-

数据压缩支持:不支持;

-

大行支持:BLOB、 TEXT;

-

同步支持半:自动同步;

-

块读取:支持;

-

块写入:支持;

-

高可用性:非常高;

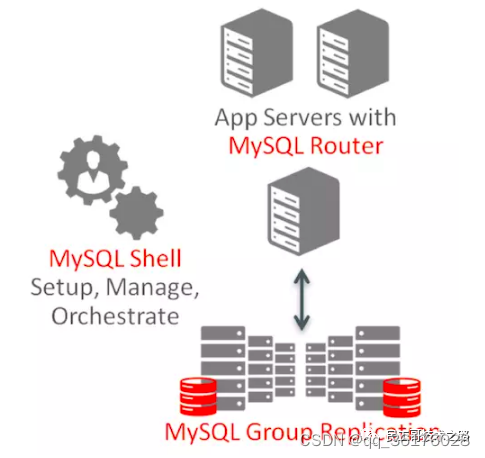

二、Mysql InnoDB Cluster 工作原理和流程

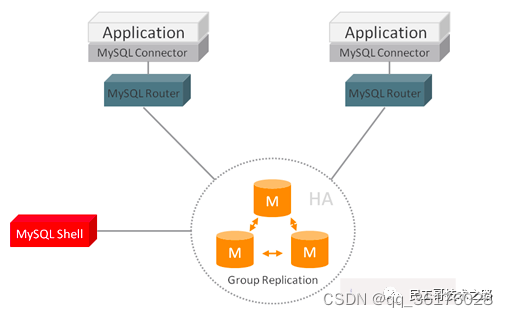

MySQL InnoDB集群提供了一个集成的,本地的,HA解决方案。Mysq Innodb Cluster是利用组复制的 pxos 协议,保障数据一致性,组复制支持单主模式和多主模式。

MySQL InnoDB 集群由以下几部分组成:

MySQL Servers with Group Replication:向集群的所有成员复制数据,同时提供容错、自动故障转移和弹性。MySQL Server 5.7.17或更高的版本。

MySQL Router:确保客户端请求是负载平衡的,并在任何数据库故障时路由到正确的服务器。MySQL Router 2.1.3或更高的版本。

MySQL Shell:通过内置的管理API创建及管理Innodb集群。MySQL Shell 1.0.9或更高的版本。

各个组件的关系和工作流程如下:

三、MySQL InnoDB Cluster 集群特性

1、集成易用

MySQL InnoDB集群紧密集成了MySQL Servers with Group Replication,MySQL Router,和MySQL Shell,所以不必依赖于外部工具,脚本或其他部件。 另外它利用了现有的MySQL特性,如:InnoDB, GTIDs, binary logs, multi-threaded slave execution, multi-source replication and Performance Schema。可以在五分钟内利用MySQL Shell中的脚本化的管理API来创建及管理MySQL集群。

2、使用组复制的 mysql server HA

组复制提供了内置的组成员管理、数据一致性保证、冲突检测和处理、节点故障检测和数据库故障转移相关操作的本地高可用性,无需人工干预或自定义工具。组复制同时实现了带自动选主的单主模式及任意更新的多主模式。通过使用一个强大的新的组通信系统,它提供了流行的Paxos算法的内部实现,来自动协调数据复制、一致性、membership。这提供了使MySQL数据库高度可用所需的所有内置机制。

3、弹性

通过组复制,一组服务器协调在一起形成一个组。组成员是动态的,服务器可以自愿或强制的地离开及随时加入。组将根据需要自动重新配置自己,并确保任何加入成员与组同步。这样就可以方便地在需要时快速地调整数据库的总容量。

4、故障检测

组复制实现了一个分布式故障检测器来查找并报告failed或不再参与组的服务器,组中剩余成员将重新配置。

5、容错

组复制基于流行的Paxos分布式算法来提供服务器之间的分布式协调。为了使一个小组继续发挥作用,它要求大多数成员在线,并就每一个变化达成协议。这允许MySQL数据库在发生故障时安全地继续操作,而无需人工干预,不存在数据丢失或数据损坏的风险。

6、自愈

如果一个服务器加入该组,它将自动将其状态与现有成员同步。如果服务器离开该组,例如它被取下来进行维护,剩下的服务器将看到它已离开,并将自动重新配置组。当服务器后重新加入组,它会自动重新与组同步。

7、监测

MySQL Enterprise Monitor 3.4及以后的版本全面支持组复制;监控每个节点的配置,健康,和性能。并且提供佳实践建议和提醒,以及易于理解的可视化工具,允许您轻松地监控和管理您的组复制和InnoDB集群。

8、通过MySQL Router为mysql客户机应用程序实现HA

MySQL的路由器允许您轻松迁移您的独立的MySQL实例到本地分布式高可用集群而不影响现有的应用程序。新metadata_cache插件为Innodb 集群提供了透明的客户端连接路由、负载平衡和故障转移的能力。

9、简单易用的 MySQL shell

MySQL Shell为所有MySQL相关的任务提供了一个直观、灵活、功能强大的接口。 新的adminapi使得它很容易用一种自我描述的自然语言来创建,监控和管理包括MySQL Router在内的MySQL InnoDB集群,而不需要了解低层次的概念,配置选项,或其他复杂的方面。

四、基于 MySQL5.7 的 InnoDB Cluster 高可用部署

下面部署采用InnoDB Cluster, 每台服务器实例都运行MySQL Group Replication (即冗余复制机制,内置failover), MGR有两种模式,一种是Single-Primary,一种是Multi-Primary,即单主或者多主。

需求注意:模式Multi-Primary中,所有的节点都是主节点,都可以同时被读写,看上去这似乎更好,但是因为多主的复杂性,在功能上如果设置了多主模式,则会有一些使用的限制,比如不支持Foreign Keys with Cascading Constraints。

1、环境准备

这里准备了4台centos7版本的服务器用来部署innodb cluster多节点集群环境 (至少也要需要3台服务器), 其中:

db-node01、db-node02、db-node03 作为 cluster 节点服务器, 三个节点都要安装 mysql8.0.x 与 mysql-shell

db-route01 作为管理节点服务器,用来负责创建 cluster,并作为 cluster 的路由, 该节点需要安装mysql-shell、mysql-router

所有节点的python版本要在2.7以上

ip地址 主机名 角色 安装软件

172.16.60.211 db-node01 cluster节点1 Mysql5.7, mysql-shell

172.16.60.212 db-node02 cluster节点2 Mysql5.7, mysql-shell

172.16.60.213 db-node03 cluster节点3 Mysql5.7, mysql-shell

172.16.60.214 db-route01 管理节点1 mysql-shell, mysql-route

- [root@db-node01 ~]# cat /etc/redhat-release

- CentOS Linux release 7.5.1804 (Core)

-

- [root@db-node01 ~]# python -V

- Python 2.7.5

为了方便实验,关闭所有节点的防火墙

- [root@MGR-node1 ~]# systemctl stop firewalld

- [root@MGR-node1 ~]# firewall-cmd --state

- not running

关闭每个节点的selinux

- [root@MGR-node1 ~]# cat /etc/sysconfig/selinux |grep "SELINUX=disabled"

- SELINUX=disabled

- [root@MGR-node1 ~]# setenforce 0

- setenforce: SELinux is disabled

- [root@MGR-node1 ~]# getenforce

- Disabled

配置每个节点的/etc/hosts主机映射, 方便通过节点的hostname进行连接。这一步很重要,否则可能会出现无法同步的情况,因为数据库需要根据member_host同步,如果不配置,默认就是localhost,这样时无法通信的!!!

- [root@db-node01 ~]# vim /etc/hosts

- ...........

- 172.16.60.211 db-node01

- 172.16.60.212 db-node02

- 172.16.60.213 db-node03

- 172.16.60.214 db-route01

所有节点进行如下的相关优化配置

- [root@db-node01 ~]# cat>>/etc/sysctl.conf <<EOF

- fs.aio-max-nr = 1048576

- fs.file-max = 681574400

- kernel.shmmax = 137438953472

- kernel.shmmni = 4096

- kernel.sem = 250 32000 100 200

- net.ipv4.ip_local_port_range = 9000 65000

- net.core.rmem_default = 262144

- net.core.rmem_max = 4194304

- net.core.wmem_default = 262144

- net.core.wmem_max = 1048586

- EOF

-

- [root@db-node01 ~]# sysctl -p

-

- [root@db-node01 ~]# cat>>/etc/security/limits.conf <<EOF

- mysql soft nproc 65536

- mysql hard nproc 65536

- mysql soft nofile 65536

- mysql hard nofile 65536

- EOF

-

- [root@db-node01 ~]# cat>>/etc/pam.d/login <<EOF

- session required /lib/security/pam_limits.so

- session required pam_limits.so

- EOF

-

- [root@db-node01 ~]# cat>>/etc/profile<<EOF

- if [ $USER = "mysql" ]; then

- ulimit -u 16384 -n 65536

- fi

- EOF

-

- [root@db-node01 ~]# source /etc/profile

mysql-shell下载地址:https://downloads.mysql.com/archives/get/p/43/file/mysql-shell-1.0.9-linux-glibc2.12-x86-64bit.tar.gz

mysql-route下载地址: https://downloads.mysql.com/archives/get/p/41/file/mysql-router-2.1.4-linux-glibc2.12-x86-64bit.tar.gz

2、在管理节点安装mysql shell 和 mysql-route

- [root@db-route01 ~]# cd /usr/local/src/

- [root@db-route01 src]# ll

- total 21648

- -rw-rw-r-- 1 root root 15526654 Mar 8 16:08 mysql-router-2.1.4-linux-glibc2.12-x86-64bit.tar.gz

- -rw-rw-r-- 1 root root 6635831 Mar 22 2017 mysql-shell-1.0.9-linux-glibc2.12-x86-64bit.tar.gz

-

- [root@db-route01 src]# tar -zvxf mysql-shell-1.0.9-linux-glibc2.12-x86-64bit.tar.gz

- [root@db-route01 src]# tar -zvxf mysql-router-2.1.4-linux-glibc2.12-x86-64bit.tar.gz

-

- [root@db-route01 src]# mv mysql-router-2.1.4-linux-glibc2.12-x86-64bit mysql-route

- [root@db-route01 src]# mv mysql-shell-1.0.9-linux-glibc2.12-x86-64bit mysql-shell

- [root@db-route01 src]# mv mysql-route /usr/local/

- [root@db-route01 src]# mv mysql-shell /usr/local/

-

- [root@db-route01 src]# vim /etc/profile

- ..............

- export PATH=$PATH:/usr/local/mysql-shell/bin/:/usr/local/mysql-route/bin/

-

- [root@db-route01 src]# source /etc/profile

-

- [root@db-route01 ~]# mysqlprovision --version

- mysqlprovision version 2.0.0

-

- [root@db-route01 ~]# mysqlsh --version

- MySQL Shell Version 1.0.9

-

- [root@db-route01 ~]# mysqlrouter --version

- MySQL Router v2.1.4 on Linux (64-bit) (GPL community edition)

3、在三个cluster节点安装和部署Mysql5.7及 mysql-shell

3.1、安装mysql-shell (三个节点同样操作)

- [root@db-node01 ~]# cd /usr/local/src/

- [root@db-node01 src]# ll mysql-shell-1.0.9-linux-glibc2.12-x86-64bit.tar.gz

- -rw-r--r-- 1 root root 6635831 Mar 22 2017 mysql-shell-1.0.9-linux-glibc2.12-x86-64bit.tar.gz

-

- [root@db-node01 src]# tar -zvxf mysql-shell-1.0.9-linux-glibc2.12-x86-64bit.tar.gz

- [root@db-node01 src]# mv mysql-shell-1.0.9-linux-glibc2.12-x86-64bit mysql-shell

- [root@db-node01 src]# mv mysql-shell /usr/local/

-

- [root@db-node01 src]# echo "export PATH=$PATH:/usr/local/mysql-shell/bin/" >> /etc/profile

- [root@db-node01 src]# source /etc/profile

-

- [root@db-node01 ~]# mysqlprovision --version

- mysqlprovision version 2.0.

-

- [root@db-node01 ~]# mysqlsh --version

- MySQL Shell Version 1.0.9

3.2 安装mysql5.7 (三个节点同样操作)

安装MySQL yum资源库

[root@db-node01 ~]# yum localinstall https://dev.mysql.com/get/mysql57-community-release-el7-8.noarch.rpm安装MySQL 5.7

[root@db-node01 ~]# yum install -y mysql-community-server启动MySQL服务器和MySQL的自动启动

- [root@db-node01 ~]# systemctl start mysqld.service

- [root@db-node01 ~]# systemctl enable mysqld.service

设置登录密码

由于MySQL从5.7开始不允许安装后使用空密码进行登录!为了加强安全性,系统会随机生成一个密码以供管理员登录使用,这个密码记录在/var/log/mysqld.log文件中,使用下面的命令可以查看此密码:

- [root@db-node01 ~]# cat /var/log/mysqld.log|grep 'A temporary password'

- 2019-01-11T05:53:17.824073Z 1 [Note] A temporary password is generated for root@localhost: TaN.k:*Qw2xs

使用上面查看的密码TaN.k:*Qw2xs 登录mysql,并重置密码为123456

- [root@db-node01 ~]# mysql -p #输入默认的密码:TaN.k:*Qw2xs

- .............

- mysql> set global validate_password_policy=;

- Query OK, rows affected (0.00 sec)

-

- mysql> set global validate_password_length=1;

- Query OK, rows affected (0.00 sec)

-

- mysql> set password=password("123456");

- Query OK, rows affected, 1 warning (0.00 sec)

-

- mysql> flush privileges;

- Query OK, rows affected (0.00 sec)

查看mysql版本

- [root@db-node01 ~]# mysql -p123456

- ........

- mysql> select version();

- +-----------+

- | version() |

- +-----------+

- | 5.7.24 |

- +-----------+

- 1 row in set (0.00 sec)

温馨提示:mysql5.7通过上面默认安装后,执行语句可能会报错:

ERROR 1819 (HY00): Your password does not satisfy the current policy requirements这个报错与Mysql 密码安全策略validate_password_policy的值有关,validate_password_policy可以取0、1、2三个值: 解决办法:

- set global validate_password_policy=;

- set global validate_password_length=1;

4、配置my.cnf

先配置db-node01节点的my.cnf

- [root@db-node01 ~]# cp /etc/my.cnf /etc/my.cnf.bak

- [root@db-node01 ~]# >/etc/my.cnf

- [root@db-node01 ~]# vim /etc/my.cnf

- [mysqld]

- datadir = /var/lib/mysql

- socket = /var/lib/mysql/mysql.sock

-

- symbolic-links =

-

- log-error = /var/log/mysqld.log

- pid-file = /var/run/mysqld/mysqld.pid

-

- #复制框架

- server_id=1

- gtid_mode=ON

- enforce_gtid_consistency=ON

- binlog_checksum=NONE

-

- log_bin=binlog

- log_slave_updates=ON

- binlog_format=ROW

- master_info_repository=TABLE

- relay_log_info_repository=TABLE

-

- #组复制设置

- #server必须为每个事务收集写集合,并使用XXHASH64哈希算法将其编码为散列

- transaction_write_set_extraction=XXHASH64

- #告知插件加入或创建组命名,UUID

- loose-group_replication_group_name="aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa"

- #server启动时不自启组复制,为了避免每次启动自动引导具有相同名称的第二个组,所以设置为OFF。

- loose-group_replication_start_on_boot=off

- #告诉插件使用IP地址,端口24901用于接收组中其他成员转入连接

- loose-group_replication_local_address="172.16.60.211:24901"

- #启动组server,种子server,加入组应该连接这些的ip和端口;其他server要加入组得由组成员同意

- loose-group_replication_group_seeds="172.16.60.211:24901,172.16.60.212:24901,172.16.60.213:24901"

- loose-group_replication_bootstrap_group=off

- # 使用MGR的单主模式

- loose-group_replication_single_primary_mode = on

- disabled_storage_engines = MyISAM,BLACKHOLE,FEDERATED,CSV,ARCHIVE

- report_port=3306

如上配置完成后, 将db-node01节点的/etc/my.cnf文件拷贝到其他两个节点

- [root@db-node01 ~]# rsync -e "ssh -p22" -avpgolr /etc/my.cnf root@172.16.60.212:/etc/

- [root@db-node01 ~]# rsync -e "ssh -p22" -avpgolr /etc/my.cnf root@172.16.60.213:/etc/

3个cluster节点除了server_id、loose-group_replication_local_address 两个参数不一样外,其他保持一致。

所以待拷贝完成后, 分别修改db-node02和db-node03节点/etc/my.cnf文件的server_id、loose-group_replication_local_address两个参数

配置完成后, 要依次重启三个节点的数据库,安装MGR插件,设置复制账号(所有MGR节点都要执行)

[root@db-node01 ~]# systemctl restart mysqld5、创建Innodb Cluster集群

在 db-node01 上创建集群,通过 db-node01 上的 shell 连接db-node01 的 mysql

- [root@db-node01 ~]# mysqlsh

- ...................

- # 执行配置命令,也需要密码

- # 然后需要输入MySQL配置文件路径,本示例中的路径是 /usr/local/data/s1/s1.cnf

- # 接下来需要创建供其他主机访问的用户,这里选择第1项,为root用户授权

-

- mysql-js> shell.connect('root@localhost:3306');

- Please provide the password for 'root@localhost:3306': #输入密码123456

- Creating a Session to 'root@localhost:3306'

- Classic Session successfully established. No default schema selected.

-

- mysql-js> dba.configureLocalInstance();

- Please provide the password for 'root@localhost:3306': #输入密码123456

-

- Detecting the configuration file...

- Found configuration file at standard location: /etc/my.cnf

- Do you want to modify this file? [Y|n]: #直接回车, 使用默认的/etc/my.cnf配置文件

- MySQL user 'root' cannot be verified to have access to other hosts in the network.

-

- 1) Create root@% with necessary grants

- 2) Create account with different name

- 3) Continue without creating account

- 4) Cancel

- Please select an option [1]: 1 #选择第1项,为root用户授权, 创建供其他主机访问的用户

- Password for new account: #输入供其他主机访问的用户root用户授权的密码. 这里依然设置123456

- Confirm password:

- Validating instance...

-

- Dba.configureLocalInstance: Your password does not satisfy the current policy requirements (MySQL Error 1819)

出现上面报错的解决办法:打开另一个终端窗口, 登录db-node01节点的mysql,执行下面命令:

- [root@db-node01 ~]# mysql -p123456

- .................

- mysql> set global validate_password_policy=;

- Query OK, rows affected (0.00 sec)

-

- mysql> set global validate_password_length=1;

- Query OK, rows affected (0.00 sec)

然后接着上面db-node01终端窗口的mysql-shell继续执行:

- mysql-js> shell.connect('root@localhost:3306');

- Please provide the password for 'root@localhost:3306': #输入密码123456

- Creating a Session to 'root@localhost:3306'

- Classic Session successfully established. No default schema selected.

-

- mysql-js> dba.configureLocalInstance();

- Please provide the password for 'root@localhost:3306': #输入密码123456

-

- Detecting the configuration file...

- Found configuration file at standard location: /etc/my.cnf

- Do you want to modify this file? [Y|n]: #直接回车, 使用默认的/etc/my.cnf配置文件

- MySQL user 'root' cannot be verified to have access to other hosts in the network.

-

- 1) Create root@% with necessary grants

- 2) Create account with different name

- 3) Continue without creating account

- 4) Cancel

- Please select an option [1]: 1 # 选择第1项,为root用户授权, 即供其他主机访问的用户root用户授权的密码, 否则其他机器使用root用户连接不上不本机的mysql

- Password for new account: #输入. 这里依然设置123456. 这里授权之后, 登录db-node01节点的mysql, 执行"select host, user from mysql.user" 命令就能发现

- Confirm password:

- Validating instance...

-

- The instance 'localhost:3306' is valid for Cluster usage

- You can now use it in an InnoDB Cluster.

-

- {

- "status": "ok"

- }

- mysql-js>

由上面的信息看出, status 为 ok 说明配置没问题了,可以用来创建cluster。

通过 db-route01 的 mysql-shell 连接 node01 创建 cluster

- [root@db-route01 ~]# mysqlsh

- ................

- # 连接db-node01

- mysql-js> shell.connect('root@db-node01:3306');

- Please provide the password for 'root@db-node01:3306': #输入密码123456

- Creating a Session to 'root@db-node01:3306'

- Classic Session successfully established. No default schema selected.

-

- # 创建一个 cluster,命名为 'myCluster'

- mysql-js> var cluster = dba.createCluster('myCluster');

- A new InnoDB cluster will be created on instance 'root@db-node01:3306'.

-

- Creating InnoDB cluster 'myCluster' on 'root@db-node01:3306'...

- Adding Seed Instance...

-

- Cluster successfully created. Use Cluster.addInstance() to add MySQL instances.

- At least 3 instances are needed for the cluster to be able to withstand up to

- one server failure.

如上的信息, 如果创建成功, 则会输出的信息中会有类似“Cluster successfully created.”的语句。

- #创建成功后,查看cluster状态

- mysql-js> cluster.status();

- {

- "clusterName": "myCluster",

- "defaultReplicaSet": {

- "name": "default",

- "primary": "db-node01:3306",

- "status": "OK_NO_TOLERANCE",

- "statusText": "Cluster is NOT tolerant to any failures.",

- "topology": {

- "db-node01:3306": {

- "address": "db-node01:3306",

- "mode": "R/W",

- "readReplicas": {},

- "role": "HA",

- "status": "ONLINE"

- }

- }

- }

- }

-

- mysql-js> dba.getCluster();

- <Cluster:myCluster>

注意上面这个db-route01的mysql-shell终端窗口就不要关闭了, 一直保持连接中,也就是一直在当前集群状态中, 后面添加其他节点到cluster集群中会用到! (后面说到常用命令时会解释)。

添加节点 db-node02到上面创建的"myCluster"集群中

通过db-node02本机 mysql-shell 对 mysql 进行配置

- [root@db-node02 ~]# mysqlsh

- ................

- MySQL JS > shell.connect('root@localhost:3306');

- Creating a session to 'root@localhost:3306'

- Please provide the password for 'root@localhost:3306': ****** #输入密码123456

- Save password for 'root@localhost:3306'? [Y]es/[N]o/Ne[v]er (default No): Y

- Fetching schema names for autocompletion... Press ^C to stop.

- Your MySQL connection id is 251

- Server version: 5.7.25-log MySQL Community Server (GPL)

- No default schema selected; type \use <schema> to set one.

- <ClassicSession:root@localhost:3306>

-

- MySQL localhost:3306 ssl JS > dba.configureLocalInstance();

- Configuring local MySQL instance listening at port 3306 for use in an InnoDB cluster...

-

- This instance reports its own address as 172.16.60.212

-

- WARNING: User 'root' can only connect from localhost.

- If you need to manage this instance while connected from other hosts, new account(s) with the proper source address specification must be created.

-

- 1) Create remotely usable account for 'root' with same grants and password

- 2) Create a new admin account for InnoDB cluster with minimal required grants

- 3) Ignore and continue

- 4) Cancel

-

- Please select an option [1]: 1

- Please provide a source address filter for the account (e.g: 192.168.% or % etc) or leave empty and press Enter to cancel.

- Account Host: % #选择%, 表示允许任何机器远程使用root用户连接本机的mysql

-

- The instance 'localhost:3306' is valid for InnoDB cluster usage.

-

- Your password does not satisfy the current policy requirements (MySQL Error 1819)

解决办法:登录db-node02节点的mysql

- [root@db-node02 ~]# mysql -p123456

- ...............

-

- mysql> set global validate_password_policy=;

- Query OK, rows affected (0.00 sec)

-

- mysql> set global validate_password_length=1;

- Query OK, rows affected (0.00 sec)

接着继续登录db-node02本机的mysql-shell 进行配置

- [root@db-node02 ~]# mysqlsh

- .............

- MySQL JS > shell.connect('root@localhost:3306');

- Creating a session to 'root@localhost:3306'

- Fetching schema names for autocompletion... Press ^C to stop.

- Your MySQL connection id is 180

- Server version: 5.7.25-log MySQL Community Server (GPL)

- No default schema selected; type \use <schema> to set one.

- <ClassicSession:root@localhost:3306>

-

- MySQL localhost:3306 ssl JS > dba.configureLocalInstance();

- Configuring local MySQL instance listening at port 3306 for use in an InnoDB cluster...

-

- This instance reports its own address as db-node02

- Clients and other cluster members will communicate with it through this address by default. If this is not correct, the report_host MySQL system variable should be changed.

-

- WARNING: User 'root' can only connect from localhost.

- If you need to manage this instance while connected from other hosts, new account(s) with the proper source address specification must be created.

-

- 1) Create remotely usable account for 'root' with same grants and password

- 2) Create a new admin account for InnoDB cluster with minimal required grants

- 3) Ignore and continue

- 4) Cancel

-

- Please select an option [1]: 1

- Please provide a source address filter for the account (e.g: 192.168.% or % etc) or leave empty and press Enter to cancel.

- Account Host: %

-

- The instance 'localhost:3306' is valid for InnoDB cluster usage.

-

- Cluster admin user 'root'@'%' created.

-

- MySQL localhost:3306 ssl JS >

然后登录db-node02节点的mysql, 发现上面使用root用户远程连接的授权已经有了

- [root@db-node02 ~]# mysql -p123456

- ..............

- mysql> select host,user from mysql.user;

- +-----------+---------------+

- | host | user |

- +-----------+---------------+

- | % | root |

- | localhost | mysql.session |

- | localhost | mysql.sys |

- | localhost | root |

- +-----------+---------------+

- 4 rows in set (0.00 sec)

接着修改 my.cnf,添加配置项:

- [root@db-node02 ~]# vim /etc/my.cnf

- ............

- loose-group_replication_allow_local_disjoint_gtids_join=on

重启mysql服务

[root@db-node02 ~]# systemctl restart mysqld然后通过 db-route01节点 的 mysql-shell 添加 node02 到 "myCluster"集群中 接着上面的db-route01的mysql-shell终端窗口 (注意这个终端窗口是上面执行后, 没有关闭一直开着的)

- mysql-js> cluster.addInstance('root@db-node02:3306');

- A new instance will be added to the InnoDB cluster. Depending on the amount of

- data on the cluster this might take from a few seconds to several hours.

-

- Please provide the password for 'root@db-node02:3306':

- Adding instance to the cluster ...

-

- The instance 'root@db-node02:3306' was successfully added to the cluster.

上面信息表示db-node02节点已经成功添加到"myCluster"集群中了. 如下查看集群状态

- mysql-js> cluster.status();

- {

- "clusterName": "myCluster",

- "defaultReplicaSet": {

- "name": "default",

- "primary": "db-node01:3306",

- "status": "OK_NO_TOLERANCE",

- "statusText": "Cluster is NOT tolerant to any failures.",

- "topology": {

- "db-node01:3306": {

- "address": "db-node01:3306",

- "mode": "R/W",

- "readReplicas": {},

- "role": "HA",

- "status": "ONLINE"

- },

- "db-node02:3306": {

- "address": "db-node02:3306",

- "mode": "R/O",

- "readReplicas": {},

- "role": "HA",

- "status": "ONLINE"

- }

- }

- }

- }

同样, 上面操作后, 这个db-route01节点的mysql-shell当前终端窗口不要关闭,继续保持在集群状态中, 下面添加db-node03节点到集群中会用到这里.(后面常用命令中会提到)

添加节点 db-node03到上面创建的"myCluster"集群中

首先登录db-node03节点的mysql

- [root@db-node03 ~]# mysql -p123456

- ............

- mysql> set global validate_password_length=1;

- Query OK, rows affected (0.00 sec)

-

- mysql> set global validate_password_length=1;

- Query OK, rows affected (0.00 sec)

登录db-node03节点的mysql-shell, 进行配置

- [root@db-node03 ~]# mysqlsh

- .............

- MySQL JS > shell.connect('root@localhost:3306');

- Creating a session to 'root@localhost:3306'

- Please provide the password for 'root@localhost:3306': ******

- Save password for 'root@localhost:3306'? [Y]es/[N]o/Ne[v]er (default No): Y

- Fetching schema names for autocompletion... Press ^C to stop.

- Your MySQL connection id is 393

- Server version: 5.7.25-log MySQL Community Server (GPL)

- No default schema selected; type \use <schema> to set one.

- <ClassicSession:root@localhost:3306>

-

- MySQL localhost:3306 ssl JS > dba.configureLocalInstance();

- Configuring local MySQL instance listening at port 3306 for use in an InnoDB cluster...

-

- This instance reports its own address as db-node03

- Clients and other cluster members will communicate with it through this address by default. If this is not correct, the report_host MySQL system variable should be changed.

-

- WARNING: User 'root' can only connect from localhost.

- If you need to manage this instance while connected from other hosts, new account(s) with the proper source address specification must be created.

-

- 1) Create remotely usable account for 'root' with same grants and password

- 2) Create a new admin account for InnoDB cluster with minimal required grants

- 3) Ignore and continue

- 4) Cancel

-

- Please select an option [1]: 1

- Please provide a source address filter for the account (e.g: 192.168.% or % etc) or leave empty and press Enter to cancel.

- Account Host: %

-

- The instance 'localhost:3306' is valid for InnoDB cluster usage.

-

- Cluster admin user 'root'@'%' created.

接着修改 my.cnf,添加配置项:

- [root@db-node03 ~]# vim /etc/my.cnf

- ............

- loose-group_replication_allow_local_disjoint_gtids_join=on

重启mysql服务

[root@db-node03 ~]# systemctl restart mysqld然后通过 db-route01节点 的 mysql-shell 添加 node03 到 "myCluster"集群中 接着上面的db-route01的mysql-shell终端窗口 (注意这个终端窗口是上面执行后, 没有关闭一直开着的)

- mysql-js> cluster.addInstance('root@db-node03:3306');

- A new instance will be added to the InnoDB cluster. Depending on the amount of

- data on the cluster this might take from a few seconds to several hours.

-

- Please provide the password for 'root@db-node03:3306':

- Adding instance to the cluster ...

-

- The instance 'root@db-node03:3306' was successfully added to the cluster.

上面信息表示db-node02节点已经成功添加到"myCluster"集群中了. 如下查看集群状态

- mysql-js> cluster.status();

- {

- "clusterName": "myCluster",

- "defaultReplicaSet": {

- "name": "default",

- "primary": "db-node01:3306",

- "status": "OK",

- "statusText": "Cluster is ONLINE and can tolerate up to ONE failure.",

- "topology": {

- "db-node01:3306": {

- "address": "db-node01:3306",

- "mode": "R/W",

- "readReplicas": {},

- "role": "HA",

- "status": "ONLINE"

- },

- "db-node02:3306": {

- "address": "db-node02:3306",

- "mode": "R/O",

- "readReplicas": {},

- "role": "HA",

- "status": "ONLINE"

- },

- "db-node03:3306": {

- "address": "db-node03:3306",

- "mode": "R/O",

- "readReplicas": {},

- "role": "HA",

- "status": "ONLINE"

- }

- }

- }

- }

通过上面cluster集群信息可知, db-node01节点是主节点, 具有R/W读写权限, 其他两个节点是从节点, 具有R/O 只读权限

6、启动管理节点的route

进入 db-route01管理节点中mysql-router 安装目录,配置并启动 router

- [root@db-route01 ~]# /usr/local/mysql-route/bin/mysqlrouter --bootstrap root@db-node01:3306 -d myrouter --user=root

- Please enter MySQL password for root:

-

- Bootstrapping MySQL Router instance at /root/myrouter...

- MySQL Router has now been configured for the InnoDB cluster 'myCluster'.

-

- The following connection information can be used to connect to the cluster.

-

- Classic MySQL protocol connections to cluster 'myCluster':

- - Read/Write Connections: localhost:6446 #读写端口

- - Read/Only Connections: localhost:6447 #只读端口

-

- X protocol connections to cluster 'myCluster':

- - Read/Write Connections: localhost:64460

- - Read/Only Connections: localhost:64470

这里会在当前目录下产生mysql-router 目录, 并生成router配置文件,接着把配置文件修改一下:

- [root@db-route01 ~]# ls /root/myrouter/

- data log mysqlrouter.conf mysqlrouter.key run start.sh stop.sh

- [root@db-route01 ~]# cat /root/myrouter/mysqlrouter.conf #可以修改配置文件, 也可以默认不修改

默认通过route连接mysql后, 6446端口连接后可以进行读写操作. 6447端口连接后只能进行只读操作.

然后启动mysqlroute

- [root@db-route01 ~]# /root/myrouter/start.sh

- PID 16484 written to /root/myrouter/mysqlrouter.pid

-

- [root@db-route01 ~]# ps -ef|grep myroute

- root 18473 1 22:26 pts/1 00:00:00 sudo ROUTER_PID=/root/myrouter/mysqlrouter.pid /usr/local/mysql-route/bin/mysqlrouter -c /root/myrouter/mysqlrouter.conf --user=root

- root 18486 18473 22:26 pts/1 00:00:00 /usr/local/mysql-route/bin/mysqlrouter -c /root/myrouter/mysqlrouter.conf --user=root

- root 18612 5091 22:26 pts/1 00:00:00 grep --color=auto myroute

-

- [root@db-route01 ~]# netstat -tunlp|grep 18486

- tcp 0.0.0.0:64460 0.0.0.0:* LISTEN 18486/mysqlrouter

- tcp 0.0.0.0:6446 0.0.0.0:* LISTEN 18486/mysqlrouter

- tcp 0.0.0.0:6447 0.0.0.0:* LISTEN 18486/mysqlrouter

- tcp 0.0.0.0:64470 0.0.0.0:* LISTEN 18486/mysqlroute

这样就可以使用MySQL客户端连接router了. 下面验证下连接router:

a) 管理节点本机mysql-shell连接:

[root@db-route01 ~]# mysqlsh --uri root@localhost:6446b) 管理节点本机mysql连接:

[root@db-route01 ~]# mysql -u root -h 127.0.0.1 -P 6446 -pc) 远程客户机通过route连接mysql

[root@db-node01 ~]# mysql -u root -h 172.16.60.214 -P 6446 -p测试cluster节点数据同步. 这里选择db-node03节点作为远程客户端连接router

- [root@db-node03 ~]# mysql -u root -h 172.16.60.214 -P 6446 -p

- Enter password:

- Welcome to the MySQL monitor. Commands end with ; or \g.

- Your MySQL connection id is 1054

- Server version: 5.7.25-log MySQL Community Server (GPL)

-

- Copyright (c) 2000, 2019, Oracle and/or its affiliates. All rights reserved.

-

- Oracle is a registered trademark of Oracle Corporation and/or its

- affiliates. Other names may be trademarks of their respective

- owners.

-

- Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

-

- mysql> show databases;

- +-------------------------------+

- | Database |

- +-------------------------------+

- | information_schema |

- | mysql |

- | mysql_innodb_cluster_metadata |

- | performance_schema |

- | sys |

- +-------------------------------+

- 5 rows in set (0.00 sec)

测试测试库kevin

- mysql> CREATE DATABASE kevin CHARACTER SET utf8 COLLATE utf8_general_ci;

- ERROR 1044 (42000): Access denied for user 'root'@'%' to database 'kevin'

这是因为'root@%'没有创建库的权限

- mysql> select host,user from mysql.user;

- +-----------+----------------------------------+

- | host | user |

- +-----------+----------------------------------+

- | % | mysql_innodb_cluster_rp496261783 |

- | % | mysql_innodb_cluster_rp496457975 |

- | % | mysql_innodb_cluster_rp496569258 |

- | % | mysql_innodb_cluster_rp496629685 |

- | % | mysql_router1_olzau3ltjqzx |

- | % | root |

- | localhost | mysql.session |

- | localhost | mysql.sys |

- | localhost | root |

- +-----------+----------------------------------+

- 9 rows in set (.00 sec)

-

- mysql> show grants for root@'%';

- +-----------------------------------------------------------------------------------------------------------------------------------------+

- | Grants for root@% |

- +-----------------------------------------------------------------------------------------------------------------------------------------+

- | GRANT RELOAD, SHUTDOWN, PROCESS, FILE, SUPER, REPLICATION SLAVE, REPLICATION CLIENT, CREATE USER ON *.* TO 'root'@'%' WITH GRANT OPTION |

- | GRANT SELECT, INSERT, UPDATE, DELETE ON `mysql`.* TO 'root'@'%' WITH GRANT OPTION |

- | GRANT SELECT ON `performance_schema`.* TO 'root'@'%' WITH GRANT OPTION |

- | GRANT ALL PRIVILEGES ON `mysql_innodb_cluster_metadata`.* TO 'root'@'%' WITH GRANT OPTION |

- +-----------------------------------------------------------------------------------------------------------------------------------------+

- 4 rows in set (.00 sec)

登录主库, 创建一个具有管理权权限的用户

- [root@db-node01 ~]# mysql -p123456

- .............

- mysql> set global validate_password_policy=;

- Query OK, rows affected (0.00 sec)

-

- mysql> set global validate_password_length=1;

- Query OK, rows affected (0.00 sec)

-

- mysql> grant all on *.* to bobo@'%' identified by "bo@123" with grant option;

- Query OK, rows affected, 1 warning (0.05 sec)

接着远程使用上面创建的新账号登录router操作

- [root@db-node03 ~]# mysql -u bobo -h 172.16.60.214 -P 6446 -p

- ........

- mysql> show grants for bobo@'%';

- +-------------------------------------------------------------+

- | Grants for bobo@% |

- +-------------------------------------------------------------+

- | GRANT ALL PRIVILEGES ON *.* TO 'bobo'@'%' WITH GRANT OPTION |

- +-------------------------------------------------------------+

- 1 row in set (0.00 sec)

测试测试库kevin

- mysql> CREATE DATABASE kevin CHARACTER SET utf8 COLLATE utf8_general_ci;

- Query OK, 1 row affected (0.06 sec)

-

- mysql> use kevin;

- Database changed

- mysql> create table if not exists haha (id int(10) PRIMARY KEY AUTO_INCREMENT,name varchar(50) NOT NULL);

- Query OK, rows affected (0.22 sec)

-

- mysql> insert into kevin.haha values(1,"wangshibo"),(2,"guohuihui"),(3,"yangyang"),(4,"shikui");

- Query OK, 4 rows affected (0.13 sec)

- Records: 4 Duplicates: 0 Warnings: 0

-

- mysql> select * from kevin.haha;

- +----+-----------+

- | id | name |

- +----+-----------+

- | 1 | wangshibo |

- | 2 | guohuihui |

- | 3 | yangyang |

- | 4 | shikui |

- +----+-----------+

- 4 rows in set (0.00 sec)

分别登录三个cluster节点的mysql, 发现测试库kevin已经完成同步了, 其中:写操作的数据会先写到db-node01节点, 然后同步到db-node02和db-node03只读节点上.

注意: 上面使用6446端口连接的route, 可以进行读写操作. 但是使用6447端口连接后, 就只能进行只读操作了. 登录后可以执行" select @@hostname" 查看登录到哪个节点上.

- [root@db-node03 ~]# mysql -u bobo -h 172.16.60.214 -P 6447 -p

- .............

- mysql> select * from kevin.haha;

- +----+-----------+

- | id | name |

- +----+-----------+

- | 1 | wangshibo |

- | 2 | guohuihui |

- | 3 | yangyang |

- | 4 | shikui |

- +----+-----------+

- 4 rows in set (0.00 sec)

-

- mysql> delete from kevin.haha where id>2;

- ERROR 1290 (HY000): The MySQL server is running with the --super-read-only option so it cannot execute this statement

此外, 还可以利用keepalived实现InnoDB Cluster的高可用, 即两台db-route管理节点, 通过VIP资源实现故障无感知切换. 这样需要准备5台节点, 其中3个cluster节点(安装mysql, mysql-shell), 2个route管理节点(安装keepalived, mysql-shell, mysql-route, mysql-client)

五、InnoDB Cluster 集群日常维护命令

在各节点配置之后, 创建cluster集群之前, 可以依次检查下cluster各个节点是否可用

- [root@db-node01 ~]# mysqls

- .................

- mysql-js> dba.checkInstanceConfiguration("root@localhost:3306")

- Please provide the password for 'root@localhost:3306':

- Validating instance...

-

- The instance 'localhost:3306' is valid for Cluster usage

- {

- "status": "ok"

- }

-

- mysql-js> dba.checkInstanceConfiguration("root@db-node02:3306")

- Please provide the password for 'root@db-node02:3306':

- Validating instance...

-

- The instance 'db-node02:3306' is valid for Cluster usage

- {

- "status": "ok"

- }

-

- mysql-js> dba.checkInstanceConfiguration("root@db-node03:3306")

- Please provide the password for 'root@db-node03:3306':

- Validating instance...

-

- The instance 'db-node03:3306' is valid for Cluster usage

- {

- "status": "ok"

- }

比如在上面创建Innodb cluster集群过程中, 再次登录mysqlsh (从客户端远程登录, 或任意节点本地登录), 怎么获得并查看集群状态

- [root@db-node01 ~]# mysqlsh

- .................

- mysql-js> shell.connect("root@db-node01:3306");

- Please provide the password for 'root@db-node01:3306':

- Creating a Session to 'root@db-node01:3306'

- Classic Session successfully established. No default schema selected.

查看集群状态

- mysql-js> cluster.status();

- ReferenceError: cluster is not defined

上面方式查看, 会报错说集群没有定义, 这时需要先执行下面这条语句之后,才看查看到集群状态!!!!!

- mysql-js> cluster.status();

- ReferenceError: cluster is not defined

然后就可以查看集群状态了

- mysql-js> cluster=dba.getCluster();

- <Cluster:myCluster>

- mysql-js> cluster.status();

- {

- "clusterName": "myCluster",

- "defaultReplicaSet": {

- "name": "default",

- "primary": "db-node01:3306",

- "status": "OK",

- "statusText": "Cluster is ONLINE and can tolerate up to ONE failure.",

- "topology": {

- "db-node01:3306": {

- "address": "db-node01:3306",

- "mode": "R/W",

- "readReplicas": {},

- "role": "HA",

- "status": "ONLINE"

- },

- "db-node02:3306": {

- "address": "db-node02:3306",

- "mode": "R/O",

- "readReplicas": {},

- "role": "HA",

- "status": "ONLINE"

- },

- "db-node03:3306": {

- "address": "db-node03:3306",

- "mode": "R/O",

- "readReplicas": {},

- "role": "HA",

- "status": "ONLINE"

- }

- }

- }

- }

从远程客户端登录

- [root@db-node03 ~]# mysqlsh --uri root@172.16.60.214:6446

- ......................

- MySQL 172.16.60.214:6446 ssl JS > cluster=dba.getCluster();

- <Cluster:myCluster>

-

- MySQL 172.16.60.214:6446 ssl JS > cluster.status();

- {

- "clusterName": "myCluster",

- "defaultReplicaSet": {

- "name": "default",

- "primary": "db-node01:3306",

- "ssl": "REQUIRED",

- "status": "OK",

- "statusText": "Cluster is ONLINE and can tolerate up to ONE failure.",

- "topology": {

- "db-node01:3306": {

- "address": "db-node01:3306",

- "mode": "R/W",

- "readReplicas": {},

- "role": "HA",

- "status": "ONLINE"

- },

- "db-node02:3306": {

- "address": "db-node02:3306",

- "mode": "R/O",

- "readReplicas": {},

- "role": "HA",

- "status": "ONLINE"

- },

- "db-node03:3306": {

- "address": "db-node03:3306",

- "mode": "R/O",

- "readReplicas": {},

- "role": "HA",

- "status": "ONLINE"

- }

- }

- },

- "groupInformationSourceMember": "mysql://root@172.16.60.214:6446"

- }

查看已创建的集群名称

- MySQL 172.16.60.214:6446 ssl JS > dba.getCluster();

- <Cluster:myCluster>

六、总结

- dba.getCluster(); #查看创建的集群

- cluster=dba.getCluster(); #获取当前集群

- cluster.status(); #查看集群状态

6.1 InnoDB Cluster集群维护的命令帮助

- mysql-js> dba.help();

-

- The global variable 'dba' is used to access the MySQL AdminAPI functionality

- and perform DBA operations. It is used for managing MySQL InnoDB clusters.

-

- The following properties are currently supported.

-

- - verbose Enables verbose mode on the Dba operations.

-

-

- The following functions are currently supported.

-

- - checkInstanceConfiguration Validates an instance for usage in Group

- Replication.

- - configureLocalInstance Validates and configures an instance for

- cluster usage.

- - createCluster Creates a MySQL InnoDB cluster.

- - deleteSandboxInstance Deletes an existing MySQL Server instance on

- localhost.

- - deploySandboxInstance Creates a new MySQL Server instance on

- localhost.

- - dropMetadataSchema Drops the Metadata Schema.

- - getCluster Retrieves a cluster from the Metadata Store.

- - help Provides help about this class and it's

- members

- - killSandboxInstance Kills a running MySQL Server instance on

- localhost.

- - rebootClusterFromCompleteOutage Brings a cluster back ONLINE when all

- members are OFFLINE.

- - resetSession Sets the session object to be used on the

- Dba operations.

- - startSandboxInstance Starts an existing MySQL Server instance on

- localhost.

- - stopSandboxInstance Stops a running MySQL Server instance on

- localhost.

-

- For more help on a specific function use: dba.help('<functionName>')

-

- e.g. dba.help('deploySandboxInstance')

比如获取当前集群名称

- mysql-js> dba.getCluster();

- <Cluster:myCluster>

6.2 日常使用的几个重要命令 (mysqlsh的JS语法)

- dba.checkInstanceConfiguration("root@hostname:3306") #检查节点配置实例,用于加入cluster之前

-

- dba.rebootClusterFromCompleteOutage('myCluster'); #重启

-

- dba.dropMetadataSchema(); #删除schema

-

- var cluster = dba.getCluster('myCluster') #获取当前集群

-

- cluster.checkInstanceState("root@hostname:3306") #检查cluster里节点状态

-

- cluster.rejoinInstance("root@hostname:3306") #重新加入节点,我本地测试的时候发现rejoin一直,每次是delete后

-

- addcluster.dissolve({force:true}) #删除集群

-

- cluster.addInstance("root@hostname:3306") #增加节点

-

- cluster.removeInstance("root@hostname:3306") #删除节点

-

- cluster.removeInstance('root@host:3306',{force:true}) #强制删除节点

-

- cluster.dissolve({force:true}) #解散集群

-

- cluster.describe(); #集群描述

集群节点状态

- - ONLINE: The instance is online and participating in the cluster.

- - OFFLINE: The instance has lost connection to the other instances.

- - RECOVERING: The instance is attempting to synchronize with the cluster by retrieving transactions it needs before it can become an ONLINE member.

- - UNREACHABLE: The instance has lost communication with the cluster.

- - ERROR: The instance has encountered an error during the recovery phase or while applying a transaction

七、备注:InnoDB Cluster 集群部署中的注意事项

请保证所有的集群机器在一个子网内,网络必须要通, 不然会失败;考虑到可以用桥接的方式实现不同网络之间集群的搭建, 这个并没有亲测;

统一使用hostname进行配置;请更改每台机器的hosts文件;

报错1

ERROR:

Group Replication join failed.

ERROR: Error joining instance to cluster: 'host-192-168-1-101:3306' - Query failed. MySQL Error (3092): The server is not configureroperly to be an active member of the group. Please see more details on error log.. Query: START group_replication

通过如下方式进行处理

- mysql> install plugin group_replication soname 'group_replication.so'; ##安装插件

- mysql> set global group_replication_allow_local_disjoint_gtids_join=ON;

- mysql> START GROUP_REPLICATION;

- mysql> select * from performance_schema.replication_group_members;

报错2

Dba.getCluster: This function is not available through a session to a standalone instance (RuntimeError)

说明集群中的主节点已经不在该机器上,查询后更改机器重试一下即可;

报错3

Dba.getCluster: Dba.getCluster: Unable to get cluster. The instance 'host-192-168-1-101:3306'

may belong to a different ReplicaSet as the one registered in the Metadata since the value of 'group_replication_group_name'

does not match the one registered in the ReplicaSet's Metadata: possible split-brain scenario. Please connect to another member of the ReplicaSet to get the Cluster. (RuntimeError)

致命的错误,master/slave的数据不一致所致,没办法,只能重新来

mysql-js>dba.dropMetadataSchema();请保证集群中的数据库表都存在主键,不然会挂掉;

安装集群监控,保证集群中机器挂掉的时候及时启动,不然所有节点宕机的时候就是灾难到来之时!!! 到时哭都来不及;

如何重置Innodb cluster集群环境?

主节点:

- mysql-js>dba.dropMetadataSchema(); 登录mysql-shell清空集群

-

- mysql> stop group_replication;

- mysql> reset master; (清空日志,确保和从库的表没有冲突奥,)

- mysql> reset slave;

其他节点(主要清理和主库的主从信息, 确保主库和从库的表没有冲突)

- mysql> stop group_replication;

- mysql> reset master;

- mysql> reset slave

主机名和 /etc/hosts中名字不一致,出现报错:

- [Repl] Slave I/O for channel 'group_replication_recovery': error connecting to master 'mysql_innodb_cluster_r0430970923@mysql3:3306' - retry-time: 60 retries: 1, Error_code: MY-002005

- [ERROR] [MY-011582] [Repl] Plugin group_replication reported: 'There was an error when connecting to the donor server. Please check that group_replication_recovery channel credentials and all MEMBER_HOST column values of performance_schema.replication_group_members table are correct and DNS resolvable.'

- [ERROR] [MY-011583] [Repl] Plugin group_replication reported: 'For details please check performance_schema.replication_connection_status table and error log messages of Slave I/O for channel group_replication_recovery.'

主库的日志应用卡在某个位置无法应用到从库,出现报错:

- [ERROR] [MY-010586] [Repl] Error running query, slave SQL thread aborted. Fix the problem, and restart the slave SQL thread with "SLAVE START". We stopped at log 'binlog.000007' position 151

- [ERROR] [MY-010584] [Repl] Slave SQL for channel 'group_replication_applier': Error executing row event: 'Unknown database 'mysql_innodb_cluster_metadata'', Error_code: MY-001049

重建master:

- mysql> stop group_replication;

- mysql> reset master;

报错 4

- [ERROR] Slave SQL for channel 'group_replication_recovery': Could not execute Write_rows event on table mysql_innodb_cluster_metadata.instances;

- Cannot add or update a child row: a foreign key constraint fails (mysql_innodb_cluster_metadata.instances, CONSTRAINT instances_ibfk_1 FOREIGN KEY (host_id) REFERENCES hosts (host_id)),

- Error_code: 1452; handler error HA_ERR_NO_REFERENCED_ROW; the event's master log binlog.000001, end_log_pos 3059, Error_code: 1452

解决方式:清空表mysql_innodb_cluster_metadata.hosts; 重新建立集群

报错5

This member has more executed transactions than those present in the group解决方式:

- mysql> stop group_replication;

- mysql> reset master;

用户操作系统资源的限制

- [Warning] Buffered warning: Changed limits: max_open_files: 1024 (requested 5000)

- [Warning] Buffered warning: Changed limits: table_open_cache: 431 (requested 2000)

解决方式:

- # vim /etc/security/limits.conf # 添加下面内容

- mysql soft nproc 2047

- mysql hard nproc 16384

- mysql soft nofile 1024

- mysql hard nofile 65535

报错 6

- dba.rebootClusterFromCompleteOutage: The active session instance isn't the most updated in comparison with the ONLINE instances of the

- Cluster's metadata.

在集群没有起来时某些机器的数据表发生变动,导致数据不一致;

解决方式:所有MySQL机器通过reset master命令清空binlogs

- mysql> reset master;

- mysql> show master logs;

然后再运行Dba.rebootClusterFromCompleteOutage重启集群。

service mysql restart 无法重启mysql,mysql stuck,并一直输出日志'

[Note] Plugin group_replication reported: '[GCS] cli_err 2''解决方式:停止MySQL的命令为:

#pkill -9 mysqld如何将Multi-Primary改为Single-Primary?

a) 解散原来的集群:mysql-js> cluster.dissolve({force: true})

b) 每台主机MySQL修改如下配置:

- mysql> set global group_replication_enforce_update_everywhere_checks=OFF;

- mysql> set global group_replication_single_primary_mode=ON;

c) 重新创建集群:

- mysql-js> var cluster = dba.createCluster('mysqlCluster');

- mysql-js> cluster.addInstance('chianyu@svr2:3306');

- mysql-js> cluster.addInstance('chianyu@svr3:3306');

组复制的限制

事物锁缺失问题:

组复制建议,事物隔离级别,read commit

序列化隔离级别:多主模式不支持

并发DDL和DML: 多主模式下,不支持 一边对一个表进行DDL,另一边进行更新,这样对于DDL在其他实例上操作有未检出的风险

外键级联约束:多主模式下,多级外键依赖对引起多级操作, 因此可能导致未知冲突,建议打开 group_replication_enforce_update_everywhere_checks=ON

大事物,超过5秒未提交,会导致组通信失败,

多主模式下:select * for update 会导致 死锁。因为这个锁并非全组共享。

部分复制不支持:组复制下,设置部分复制,会过滤事物,导致组事物不一致。

Mysql 8.0.11 group_replication_enforce_update_everywhere_checks=ON 多主模式下不支持。

停止复制的情况下,某个节点执行命令后再启动,会因为本地有私有事物,无法加入集群。需要全局 reset master 重新开始集群复制。

多实例环境不要用 3306端口

多实例环境下,某个实例采用了默认的3306端口,会导致经常性的误操作。一台主机多部署10个实例。比如:

cluster节点A服务器启用三个端口实例: 3310, 3320, 3330,

cluster节点B服务器启用三个端口实例: 3310, 3320, 3330

cluster节点C服务器启用三个端口实例: 3310, 3320, 3330

实例数据目录分别为: /data/mysql3310, /data/mysql3320, /data/mysql3330

管理节点D服务启动三个端口route端口实例: 3310, 3320, 3330

管理节点E服务启动三个端口route端口实例: 3310, 3320, 3330

实例数据目录分别为: /data/router3310, /data/router3320, /data/router3330