flash attention

Author: 笔触狂放9 | 2024-03-20 03:13:30

1. Contents

- flash attention

- Time/memory comparison: flash attention vs. standard attention

- Implementing the flash attention algorithm

- Comparing the runtime of flash attention, memory-efficient attention and other kernels

2. Implementation

- flash attention

Goal: make attention run faster and use less memory.

- Time/memory comparison: flash attention vs. standard attention

Reference: https://zhuanlan.zhihu.com/p/638468472

Taking batch=32, seq_len=512, n_head=16, head_dim=64 as an example, we record the runtime and GPU memory of flash attention and standard attention.
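A back-of-the-envelope calculation shows why standard attention needs so much more memory at this size: it materializes a (seq_len x seq_len) score matrix for every batch element and head, while q, k and v are comparatively small. This is a minimal sketch assuming 2 bytes per fp16 element and 4 bytes per fp32 element; the helper name `tensor_mib` is hypothetical.

```python
from math import prod


def tensor_mib(shape, bytes_per_elem):
    """Size of a dense tensor with the given shape, in MiB."""
    return prod(shape) * bytes_per_elem / 1024**2


bs, seq_len, n_head, head_dim = 32, 512, 16, 64

qkv_each = tensor_mib((bs, n_head, seq_len, head_dim), 2)    # one of q, k, v in fp16
scores_fp16 = tensor_mib((bs, n_head, seq_len, seq_len), 2)  # Q @ K^T in fp16
scores_fp32 = tensor_mib((bs, n_head, seq_len, seq_len), 4)  # the softmax upcast to fp32

print(qkv_each, scores_fp16, scores_fp32)  # 32.0 256.0 512.0
```

So q, k and v together take 96 MiB, while a single fp16 copy of the scores is already 256 MiB. Several such intermediates alive at the same time (scores, masked scores, the fp32 softmax output and its fp16 copy) plausibly account for most of the ~1128 MiB peak reported for standard attention below; flash attention never writes the full score matrix to HBM.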

Flash attention implementation (via xformers):

```python
import time

import torch
from xformers import ops as xops

bs, seq_len, n_head, head_dim = 32, 512, 16, 64
device = "cuda:0"

# q/k/v in the usual (batch, heads, seq_len, head_dim) layout
query_states = torch.randn((bs, n_head, seq_len, head_dim), dtype=torch.float16, device=device)
key_states = torch.randn((bs, n_head, seq_len, head_dim), dtype=torch.float16, device=device)
value_states = torch.randn((bs, n_head, seq_len, head_dim), dtype=torch.float16, device=device)

# xformers expects (batch, seq_len, heads, head_dim): swap dims 1 and 2 (views, no copy)
flash_query_states = query_states.transpose(1, 2)
flash_key_states = key_states.transpose(1, 2)
flash_value_states = value_states.transpose(1, 2)

torch.cuda.synchronize()  # CUDA kernels run asynchronously: wait for pending work before starting the clock
start_time = time.time()
# xformers memory-efficient attention (it may dispatch to a FlashAttention kernel), with a causal mask
flash_attn_output = xops.memory_efficient_attention(
    flash_query_states, flash_key_states, flash_value_states,
    attn_bias=xops.LowerTriangularMask(),
)
torch.cuda.synchronize()  # wait for the attention kernel to finish before stopping the clock
print(f"flash attention time: {(time.time() - start_time) * 1000} ms")

print(torch.cuda.max_memory_allocated(device) / 1024**2)  # 192M
print("=============================")
print(torch.cuda.memory_allocated(device) / 1024**2)  # 128M
```

Standard attention implementation:

```python
import math
import time

import torch
import torch.nn as nn

bs, seq_len, n_head, head_dim = 32, 512, 16, 64
device = "cuda:0"

query_states = torch.randn((bs, n_head, seq_len, head_dim), dtype=torch.float16, device=device)
key_states = torch.randn((bs, n_head, seq_len, head_dim), dtype=torch.float16, device=device)
value_states = torch.randn((bs, n_head, seq_len, head_dim), dtype=torch.float16, device=device)

# Causal mask: 0 where attention is allowed (j <= i), the most negative finite fp16 value elsewhere
attention_mask = torch.tril(torch.ones((seq_len, seq_len), dtype=torch.bool)).view(1, 1, seq_len, seq_len)
attention_mask = attention_mask.to(dtype=torch.float16, device=device)  # fp16 compatibility
attention_mask = (1.0 - attention_mask) * torch.finfo(torch.float16).min


def standard_attention(query_states, key_states, value_states, attention_mask):
    # Full (seq_len x seq_len) score matrix per batch element and head: this dominates memory
    attn_weights = torch.matmul(query_states, key_states.transpose(2, 3)) / math.sqrt(head_dim)
    attn_weights = attn_weights + attention_mask
    # upcast attention to fp32 for a stable softmax, then cast back to fp16
    attn_weights = nn.functional.softmax(attn_weights, dim=-1, dtype=torch.float32).to(query_states.dtype)
    attn_output = torch.matmul(attn_weights, value_states)
    # (bs, heads, seq, dim) -> (bs, seq, heads, dim), the same layout as the xformers output
    attn_output = attn_output.transpose(1, 2)
    return attn_output


torch.cuda.synchronize()
start_time = time.time()
attn_output = standard_attention(query_states, key_states, value_states, attention_mask)
torch.cuda.synchronize()
print(f"standard attention time: {(time.time() - start_time) * 1000} ms")
# With flash_attn_output from the previous script in the same session, compare within a tolerance
# (floating-point results from different kernels are rarely bit-identical):
# print(torch.allclose(attn_output, flash_attn_output, rtol=2e-3, atol=2e-3))

print(torch.cuda.max_memory_allocated(device) / 1024**2)  # 1128M
print("=============================")
print(torch.cuda.memory_allocated(device) / 1024**2)  # 136M
```
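To see why the additive mask uses `torch.finfo(torch.float16).min` (-65504, the most negative finite fp16 value), here is a minimal pure-Python sketch of one masked softmax row, computed in float64 rather than fp16; `FP16_MIN` and `masked_softmax_row` are hypothetical names for illustration. Adding such a large negative number to the masked scores makes their exponentials underflow to exactly 0, so those positions get no attention weight.

```python
import math

FP16_MIN = -65504.0  # the value of torch.finfo(torch.float16).min


def masked_softmax_row(scores, allowed):
    """Softmax of one row of scores; positions with allowed=False get the additive mask FP16_MIN."""
    masked = [s + (0.0 if ok else FP16_MIN) for s, ok in zip(scores, allowed)]
    row_max = max(masked)  # subtract the row max for numerical stability
    exps = [math.exp(v - row_max) for v in masked]
    total = sum(exps)
    return [e / total for e in exps]


# Row i = 1 of a causal mask over 4 positions: only positions j <= 1 are visible
probs = masked_softmax_row([0.5, 1.0, 2.0, 3.0], [True, True, False, False])
print(probs)
```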

- The flash attention algorithm

Reference: https://blog.csdn.net/qinduohao333/article/details/131449876

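FlashAttention rests on three key ideas:

- Tiling the softmax, so that it can be split into blocks and computed in parallel instead of over a full row at once, which improves efficiency.
- Recomputation in the backward pass: intermediate results are recomputed instead of stored, which saves a large amount of GPU memory.
- Implementing the computation as a fused kernel written in CUDA.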
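Softmax tiling relies on the online safe softmax trick: a row can be processed block by block while keeping only a running maximum `m` and a running sum `l`, rescaling `l` whenever the maximum grows. A minimal pure-Python sketch; the function names and the block size are illustrative assumptions, not taken from the referenced posts.

```python
import math


def softmax_reference(x):
    """Standard safe softmax: one pass for the max, one for the sum."""
    m = max(x)
    exps = [math.exp(v - m) for v in x]
    s = sum(exps)
    return [e / s for e in exps]


def softmax_tiled(x, block_size):
    """Build the max and the normalizer block by block, rescaling the running sum as the max grows."""
    m = -math.inf  # running max
    l = 0.0        # running sum of exp(x - m)
    for start in range(0, len(x), block_size):
        block = x[start:start + block_size]
        m_block = max(block)
        l_block = sum(math.exp(v - m_block) for v in block)
        m_new = max(m, m_block)
        # rescale both the old sum and the block sum to the new max before adding them
        l = math.exp(m - m_new) * l + math.exp(m_block - m_new) * l_block
        m = m_new
    return [math.exp(v - m) / l for v in x]


x = [0.3, 2.1, -1.0, 4.2, 0.0, 3.3, 1.7]
print(max(abs(a - b) for a, b in zip(softmax_tiled(x, 3), softmax_reference(x))))
```

This sketch still makes a final pass over `x` to normalize; in FlashAttention the same rescaling factors are applied to the partial output block instead, so no separate pass over the scores is needed.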

Key terms:

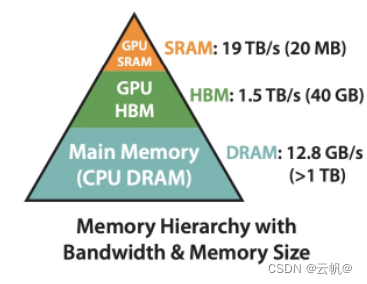

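SRAM: static RAM, the small on-chip memory of the GPU (e.g. shared memory / L1 cache). It is the fastest level but also the smallest.

HBM: High Bandwidth Memory, the GPU's main memory (what is usually called "GPU memory"). It is much larger than SRAM but slower.

DRAM: dynamic RAM, here the CPU's main memory (system RAM), which is not on the GPU at all.

Hence read/write speed: SRAM > HBM > DRAM, while capacity grows in the opposite order.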
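How the SRAM size turns into tile sizes: line 1 of Algorithm 1 in the FlashAttention paper sets $B_c = \lceil M / 4d \rceil$ and $B_r = \min(\lceil M / 4d \rceil, d)$, where $M$ is the SRAM size (in elements) and $d$ the head dimension. A small sketch of that rule; the SRAM size below (100 KiB of fp16 values) is an assumption chosen only for illustration, not the spec of any particular GPU, and `flash_block_sizes` is a hypothetical name.

```python
import math


def flash_block_sizes(sram_elems, head_dim):
    """Block sizes per line 1 of FlashAttention Algorithm 1: Bc = ceil(M/4d), Br = min(ceil(M/4d), d)."""
    bc = math.ceil(sram_elems / (4 * head_dim))
    br = min(bc, head_dim)
    return br, bc


# Assumption for illustration: 100 KiB of SRAM holding 2-byte fp16 values
sram_elems = 100 * 1024 // 2
print(flash_block_sizes(sram_elems, 64))  # (64, 200)
```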

Code reference: https://blog.csdn.net/bornfree5511/article/details/133657656?utm_medium=distribute.pc_relevant.none-task-blog-2defaultbaidujs_baidulandingword~default-1-133657656-blog-131927436.235v40pc_relevant_3m_sort_dl_base2&spm=1001.2101.3001.4242.2&utm_relevant_index=4

FlashAttention-1 implementation:

```python
import torch

torch.manual_seed(456)

N, d = 16, 8  # sequence length, head dimension
Q_mat = torch.rand((N, d))
K_mat = torch.rand((N, d))
V_mat = torch.rand((N, d))

# Reference: standard PyTorch softmax and attention (no 1/sqrt(d) scaling, to keep the toy simple)
expected_softmax = torch.softmax(Q_mat @ K_mat.T, dim=1)
expected_attention = expected_softmax @ V_mat

# Tile sizes: the real algorithm derives them from the SRAM size; here they are toy values
Br = 4
Bc = d

# Step 2: allocate the output O in HBM, initialized to 0
O = torch.zeros((N, d))
# Step 2: running softmax denominators (row sums), in HBM
l = torch.zeros((N, 1))
# Step 2: running row maxima, in HBM
m = torch.full((N, 1), -torch.inf)

# Step 5: outer loop over K/V blocks
for block_start_Bc in range(0, N, Bc):
    block_end_Bc = block_start_Bc + Bc
    # Step 6: load one block of Kj, Vj from HBM into SRAM
    Kj = K_mat[block_start_Bc:block_end_Bc, :]  # shape Bc x d
    Vj = V_mat[block_start_Bc:block_end_Bc, :]  # shape Bc x d

    # Step 7: inner loop over Q blocks
    for block_start_Br in range(0, N, Br):
        block_end_Br = block_start_Br + Br
        # Step 8: load the following from HBM into SRAM
        mi = m[block_start_Br:block_end_Br, :]  # shape Br x 1
        li = l[block_start_Br:block_end_Br, :]  # shape Br x 1
        Oi = O[block_start_Br:block_end_Br, :]  # shape Br x d
        Qi = Q_mat[block_start_Br:block_end_Br, :]  # shape Br x d

        # Step 9: block of attention scores
        Sij = Qi @ Kj.T  # shape Br x Bc

        # Step 10: row-wise max of the current block
        mij_hat = torch.max(Sij, dim=1).values[:, None]
        # Step 10: unnormalized block probabilities and their row sums (block softmax denominator)
        pij_hat = torch.exp(Sij - mij_hat)
        lij_hat = torch.sum(pij_hat, dim=1)[:, None]

        # Step 11: new running max = max(previous max, current block max)
        mi_new = torch.max(torch.column_stack([mi, mij_hat]), dim=1).values[:, None]
        # Step 11: new running denominator with the online rescaling correction; this is the same
        # math as the scalar online safe softmax, applied to whole row vectors at once
        li_new = torch.exp(mi - mi_new) * li + torch.exp(mij_hat - mi_new) * lij_hat

        # Step 12: compute the updated output block
        Oi = (li * torch.exp(mi - mi_new) * Oi / li_new) + (torch.exp(mij_hat - mi_new) * pij_hat / li_new) @ Vj

        # Step 13: write the running statistics back to HBM
        m[block_start_Br:block_end_Br, :] = mi_new  # row max
        l[block_start_Br:block_end_Br, :] = li_new  # softmax denominator
        # Step 12: write Oi back to HBM
        O[block_start_Br:block_end_Br, :] = Oi

print(torch.allclose(O, expected_attention))
```
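Written as equations, steps 9 to 12 of the loop above compute the following for query block $i$ and key/value block $j$ (the names follow the code: $\tilde m_{ij}$ is `mij_hat`, $\tilde P_{ij}$ is `pij_hat`, $\tilde \ell_{ij}$ is `lij_hat`, $\ell_i$ is `li`):

$$
\begin{aligned}
S_{ij} &= Q_i K_j^\top, \\
\tilde m_{ij} &= \operatorname{rowmax}(S_{ij}), \quad
\tilde P_{ij} = \exp(S_{ij} - \tilde m_{ij}), \quad
\tilde \ell_{ij} = \operatorname{rowsum}(\tilde P_{ij}), \\
m_i^{\text{new}} &= \max(m_i, \tilde m_{ij}), \quad
\ell_i^{\text{new}} = e^{m_i - m_i^{\text{new}}} \ell_i + e^{\tilde m_{ij} - m_i^{\text{new}}} \tilde \ell_{ij}, \\
O_i &\leftarrow \operatorname{diag}(\ell_i^{\text{new}})^{-1}
\left( \operatorname{diag}(\ell_i)\, e^{m_i - m_i^{\text{new}}} O_i + e^{\tilde m_{ij} - m_i^{\text{new}}} \tilde P_{ij} V_j \right).
\end{aligned}
$$

Because $O_i$ is kept normalized after every step here, it must be rescaled by $\ell_i / \ell_i^{\text{new}}$ each time; the FlashAttention-2 version keeps $O_i$ unnormalized and divides by $\ell_i$ once at the end.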

FlashAttention-2 implementation:

```python
import torch

torch.manual_seed(456)

N, d = 16, 8  # sequence length, head dimension
Q_mat = torch.rand((N, d))
K_mat = torch.rand((N, d))
V_mat = torch.rand((N, d))

# Reference: standard PyTorch softmax and attention
expected_softmax = torch.softmax(Q_mat @ K_mat.T, dim=1)
expected_attention = expected_softmax @ V_mat

# Tile sizes: the real algorithm derives them from the SRAM size; here they are toy values
Br = 4
Bc = d

O = torch.zeros((N, d))

# Step 3: outer loop, now over Q blocks
for block_start_Br in range(0, N, Br):
    block_end_Br = block_start_Br + Br
    # Step 4: load one block Qi from HBM into SRAM
    Qi = Q_mat[block_start_Br:block_end_Br, :]
    # Step 5: initialize this block's running state
    Oi = torch.zeros((Br, d))  # shape Br x d
    li = torch.zeros((Br, 1))  # shape Br x 1
    mi = torch.full((Br, 1), -torch.inf)  # shape Br x 1

    # Step 6: inner loop over K/V blocks
    for block_start_Bc in range(0, N, Bc):
        block_end_Bc = block_start_Bc + Bc
        # Step 7: load Kj, Vj into SRAM
        Kj = K_mat[block_start_Bc:block_end_Bc, :]
        Vj = V_mat[block_start_Bc:block_end_Bc, :]

        # Step 8: block of attention scores
        Sij = Qi @ Kj.T
        # Step 9: new running max, unnormalized probabilities, rescaled running row sum
        mi_new = torch.max(torch.column_stack([mi, torch.max(Sij, dim=1).values[:, None]]), dim=1).values[:, None]
        Pij_hat = torch.exp(Sij - mi_new)
        li = torch.exp(mi - mi_new) * li + torch.sum(Pij_hat, dim=1)[:, None]
        # Step 10: rescale the unnormalized output and accumulate (no division inside the loop)
        Oi = Oi * torch.exp(mi - mi_new) + Pij_hat @ Vj
        mi = mi_new

    # Step 12: normalize once, after the inner loop
    Oi = Oi / li
    # Step 14: write Oi back to HBM
    O[block_start_Br:block_end_Br, :] = Oi

print(torch.allclose(O, expected_attention))
```
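The main structural difference from the FlashAttention-1 code is the loop order. A small counting sketch (the function name is hypothetical, and it counts tile transfers only, not bytes or FLOPs) shows what the reordering saves: with K/V in the outer loop, each output block O_i (together with m_i and l_i) is read from and written back to HBM once per K/V block, whereas with Q in the outer loop each O_i stays on chip and is written exactly once.

```python
import math


def output_tile_traffic(n, br, bc, q_outer):
    """HBM reads and writes of output tiles O_i for sequence length n and tile sizes br, bc.

    q_outer=False: FlashAttention-1 loop order (K/V blocks outside, Q blocks inside).
    q_outer=True:  FlashAttention-2 loop order (Q blocks outside, K/V blocks inside).
    """
    tr = math.ceil(n / br)  # number of Q / O row blocks
    tc = math.ceil(n / bc)  # number of K / V blocks
    if q_outer:
        # O_i is accumulated in SRAM over the whole inner loop and written back once
        return 0, tr
    # every (K/V block, Q block) pair loads O_i (with m_i, l_i) and writes it back
    return tc * tr, tc * tr


# The toy sizes used in both implementations: N = 16, Br = 4, Bc = d = 8
print(output_tile_traffic(16, 4, 8, q_outer=False))  # (8, 8)
print(output_tile_traffic(16, 4, 8, q_outer=True))   # (0, 4)
```

Keeping O_i on chip for the whole inner loop is also what allows the FlashAttention-2 code to defer the division by l_i to a single step at the end.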

- Comparing the runtime of flash attention, memory-efficient attention and other kernels

Reference: https://blog.51cto.com/u_15293476/6131364

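Runtime, slowest to fastest on the author's setup: math backend (plain PyTorch) > default (no backend specified) > flash attention backend > memory-efficient attention backend. The default and flash attention timings are nearly identical (558.8 vs. 557.3 microseconds), which suggests the default dispatcher picked the flash kernel here.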

```python
from enum import IntEnum

import torch
import torch.nn.functional as F
import torch.utils.benchmark as benchmark
# Context manager that restricts which SDPA kernels may run
# (deprecated in newer PyTorch releases in favor of torch.nn.attention.sdpa_kernel)
from torch.backends.cuda import sdp_kernel

# The flash and memory-efficient kernels require a CUDA GPU
device = "cuda" if torch.cuda.is_available() else "cpu"

# Hyperparameters
batch_size = 64
max_sequence_len = 256
num_heads = 32
embed_dimension = 32
dtype = torch.float16

# Random q, k, v of shape (batch, heads, seq_len, head_dim)
query = torch.rand(batch_size, num_heads, max_sequence_len, embed_dimension, device=device, dtype=dtype)
key = torch.rand(batch_size, num_heads, max_sequence_len, embed_dimension, device=device, dtype=dtype)
value = torch.rand(batch_size, num_heads, max_sequence_len, embed_dimension, device=device, dtype=dtype)


# Timer: mean runtime of f(*args, **kwargs) in microseconds
def torch_timer(f, *args, **kwargs):
    t0 = benchmark.Timer(
        stmt="f(*args, **kwargs)", globals={"args": args, "kwargs": kwargs, "f": f}
    )
    return t0.blocked_autorange().mean * 1e6


# torch.backends.cuda already defines this enum; it is repeated here only to show what backend_map keys mean
class SDPBackend(IntEnum):
    r"""Enum class for the scaled dot product attention backends."""
    ERROR = -1
    MATH = 0
    FLASH_ATTENTION = 1
    EFFICIENT_ATTENTION = 2


# Keyword arguments for sdp_kernel that enable exactly one backend each
backend_map = {
    SDPBackend.MATH: {  # plain PyTorch (math) implementation
        "enable_math": True, "enable_flash": False, "enable_mem_efficient": False},
    SDPBackend.FLASH_ATTENTION: {  # FlashAttention kernel
        "enable_math": False, "enable_flash": True, "enable_mem_efficient": False},
    SDPBackend.EFFICIENT_ATTENTION: {  # memory-efficient attention kernel
        "enable_math": False, "enable_flash": False, "enable_mem_efficient": True},
}

# Baseline: let PyTorch choose the backend
print(f"Default (no backend specified) runtime: {torch_timer(F.scaled_dot_product_attention, query, key, value):.3f} microseconds")
# Default (no backend specified) runtime: 558.831 microseconds

with sdp_kernel(**backend_map[SDPBackend.MATH]):
    print(f"math runtime: {torch_timer(F.scaled_dot_product_attention, query, key, value):.3f} microseconds")
# math runtime: 1013.422 microseconds

with sdp_kernel(**backend_map[SDPBackend.FLASH_ATTENTION]):
    try:
        print(f"flash attention runtime: {torch_timer(F.scaled_dot_product_attention, query, key, value):.3f} microseconds")
    except RuntimeError:
        print("FlashAttention is not supported")
# flash attention runtime: 557.343 microseconds

with sdp_kernel(**backend_map[SDPBackend.EFFICIENT_ATTENTION]):
    try:
        print(f"Memory efficient runtime: {torch_timer(F.scaled_dot_product_attention, query, key, value):.3f} microseconds")
    except RuntimeError:
        print("EfficientAttention is not supported")
# Memory efficient runtime: 428.007 microseconds
```
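Turning the author's timings from the comments above into speedups relative to the math backend; this is plain arithmetic on the reported numbers, which will differ on other GPUs and PyTorch versions.

```python
# Mean runtimes in microseconds, copied from the comments in the benchmark above
timings_us = {
    "default": 558.831,
    "math": 1013.422,
    "flash": 557.343,
    "mem_efficient": 428.007,
}

baseline = timings_us["math"]
speedups = {name: baseline / t for name, t in timings_us.items()}
for name, s in sorted(speedups.items(), key=lambda kv: kv[1]):
    print(f"{name:>14}: {s:.2f}x")
```

On this configuration the memory-efficient kernel comes out about 2.37x faster than the math backend and roughly 1.3x faster than the flash kernel.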

Disclaimer: this article was contributed by a user and does not represent the position of the wpsshop blog; copyright belongs to the original author. Source: https://www.wpsshop.cn/w/笔触狂放9/article/detail/270257
