Installing a k8s cluster offline (with a fix for "ERROR: No containers to start")

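Setup process

All the installation packages used in this article are collected on a network drive, so you do not have to gather them again. Note that every host must have a unique hostname; of several hosts sharing a hostname, only one can register.

Setting up the yum repository

Installing docker from rpm files runs into all sorts of dependency problems, so the most convenient approach is to set up an offline yum repository. The procedure is described in another article: Setting up an offline yum repository.

Add the repo: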

[c7-media]

name=CentOS-$releasever - Media

baseurl=http://192.168.211.157/yum-iso/

gpgcheck=0

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7
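
The repo definition above can also be written from a script, which helps when several nodes need the same file. The following is a minimal sketch: the helper name write_media_repo and the file name c7-media.repo are assumptions made for illustration (the article does not name the file), and the target directory is passed in as a parameter.

```shell
# Hypothetical helper: write the c7-media repo definition into a directory.
# $1 = target directory (on CentOS normally /etc/yum.repos.d)
# $2 = baseurl of the offline yum repository
write_media_repo() {
  # The format string is single-quoted so that $releasever reaches yum unexpanded.
  printf '[c7-media]\nname=CentOS-$releasever - Media\nbaseurl=%s\ngpgcheck=0\nenabled=1\ngpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7\n' "$2" \
    > "$1/c7-media.repo"
}

# Example call (assumed paths):
# write_media_repo /etc/yum.repos.d http://192.168.211.157/yum-iso/
```

After the file is in place, yum clean all followed by yum makecache refreshes the repository metadata.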

Installing docker

Make sure to disable the firewall and SELinux first:

setenforce 0

systemctl stop firewalld

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

rpm -ivh libtool-ltdl-2.4.2-22.el7_3.x86_64.rpm container-selinux-2.119.2-1.911c772.el7_8.noarch.rpm docker-ce-18.06.1.ce-3.el7.x86_64.rpm

systemctl start docker

systemctl enable docker

Add daemon.json under /etc/docker:

# registry-mirrors is the address of the harbor registry
# insecure-registries must also list the registry address, otherwise pulling images fails with a 443 error
[root@k8s-master docker]# vi /etc/docker/daemon.json
{
  "registry-mirrors": ["http://192.168.211.166"],
  "max-concurrent-downloads": 10,
  "insecure-registries": ["192.168.211.165","192.168.211.166","192.168.211.167","134.108.20.13"],
  "log-driver": "json-file",
  "log-level": "warn",
  "experimental": false,
  "log-opts": {
    "max-size": "10m",
    "max-file": "3"
  },
  "graph": "/data/dockerlib/docker"
}

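After adding the file, restart docker (systemctl restart docker).

Set the hostname on each node (run the matching command on each host), then add all nodes to /etc/hosts: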

hostnamectl set-hostname kube-master-1

hostnamectl set-hostname kube-node-1

hostnamectl set-hostname kube-node-2

cat <<EOF >>/etc/hosts

172.16.140.100 kube-master-1

172.16.140.101 kube-node-1

172.16.140.102 kube-node-2

EOF
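
Because the heredoc above appends unconditionally, running it twice leaves duplicate lines in /etc/hosts. A minimal sketch of an idempotent alternative follows; the helper name add_host_entry is hypothetical, and the hosts file is a parameter so the function can be tried on a scratch file first.

```shell
# Hypothetical helper: append "ip name" to a hosts file only if the name is not mapped yet.
# $1 = hosts file (normally /etc/hosts), $2 = IP address, $3 = hostname
add_host_entry() {
  # awk exits 0 only when some line already carries the hostname as an exact field
  # after the address column; a missing file counts as "not found".
  if ! awk -v h="$3" '{ for (i = 2; i <= NF; i++) if ($i == h) found = 1 } END { exit !found }' "$1" 2>/dev/null; then
    printf '%s %s\n' "$2" "$3" >> "$1"
  fi
}

# Example calls (addresses taken from the text above):
# add_host_entry /etc/hosts 172.16.140.100 kube-master-1
# add_host_entry /etc/hosts 172.16.140.101 kube-node-1
# add_host_entry /etc/hosts 172.16.140.102 kube-node-2
```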

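Installing harbor offline

The installation below mainly follows the article: Building a k8s environment offline.

With docker installed, move on to installing harbor.

Download the offline installer: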

# -L follows the redirect that GitHub release downloads return
curl -LO https://github.com/goharbor/harbor/releases/download/v1.9.3/harbor-offline-installer-v1.9.3.tgz

yumdownloader docker-compose --resolve --destdir=/root/download/docker-compose/

# install docker-compose

rpm -Uvh /root/download/docker-compose/*.rpm

# install harbor

tar xf /root/harbor-offline-installer-v1.9.3.tgz

cd harbor/

# 172.16.140.103 here is the IP of the server that hosts harbor

sed -i 's/reg.mydomain.com/172.16.140.103/g' harbor.yml

./install.sh

Log in to harbor: http://172.16.140.103/harbor/

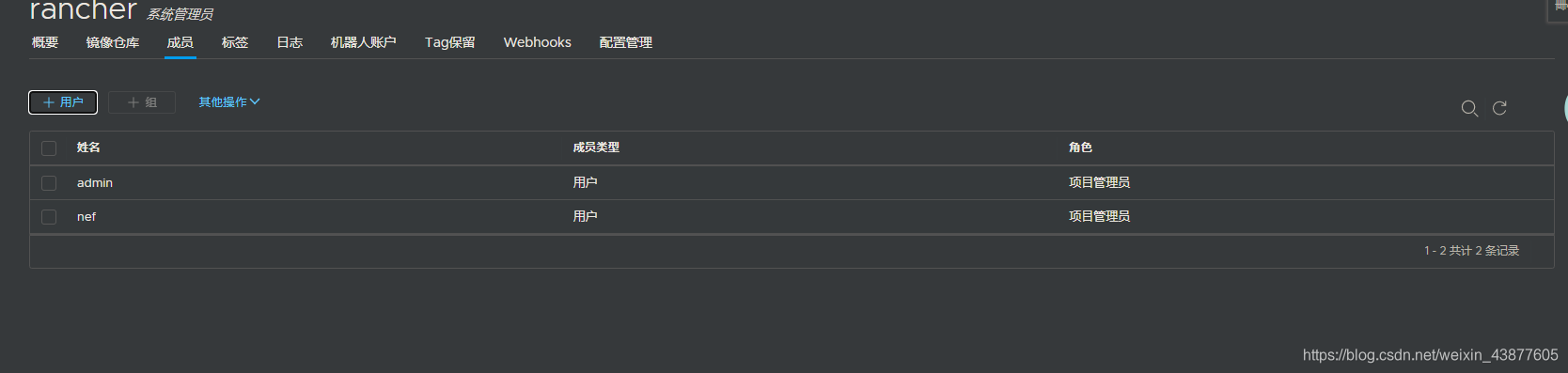

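Log in with username admin and password Harbor12345. Create a project named rancher, then create a user named installer and add it to the rancher project with the project administrator role, in preparation for pushing images later.

Starting and stopping the harbor server:

# run these from the harbor directory extracted from harbor-offline-installer-v1.9.3.tgz; it must contain a docker-compose.yml file

docker-compose start

docker-compose stop

# if start fails, run the following first and then run start again (not guaranteed to work)

docker-compose up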

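If you hit ERROR: No containers to start, run docker-compose create first and then docker-compose start. (docker-compose marks create as deprecated in favour of docker-compose up --no-start, which has the same effect.)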
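
The create-then-start workaround can be wrapped in a small function. This is only a sketch: the function name start_harbor and the DC override variable are assumptions, and the fallback triggers on any failure of start, not only on the "No containers to start" message. It has to be run from the harbor directory that contains docker-compose.yml.

```shell
# DC names the compose command; it can be overridden, e.g. for a dry run without docker.
start_harbor() {
  dc="${DC:-docker-compose}"
  # First attempt: start the containers that already exist.
  if $dc start; then
    return 0
  fi
  # Fallback described in the text: create the containers, then start them.
  $dc create && $dc start
}

# Example (assumed location of the extracted installer):
# cd /root/harbor && start_harbor
```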

Pushing images

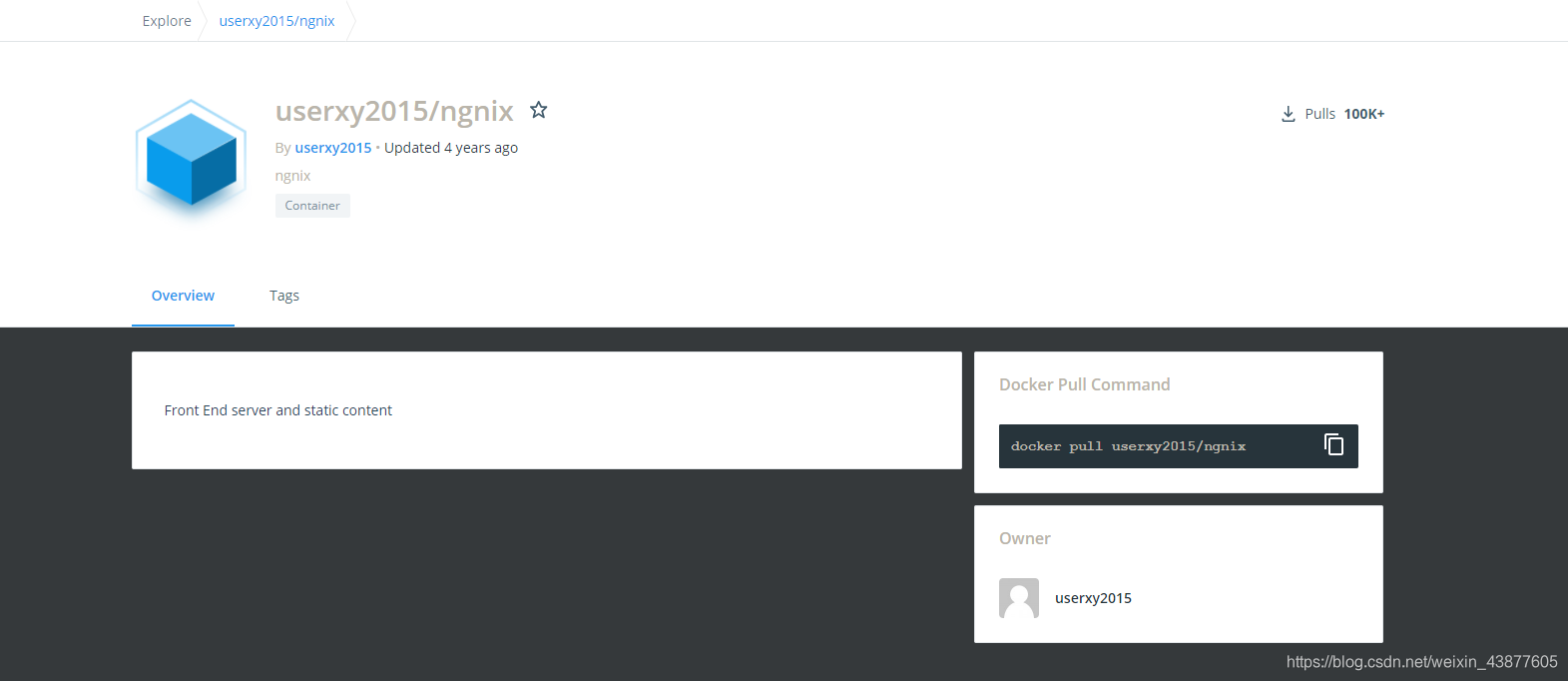

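If installing k8s or other components requires images to be available in harbor, first find the image on Docker Hub, download it on a server with internet access, transfer it to a host in the internal network, and then push it to harbor. Taking nginx as an example:

Go to Docker Hub and search for nginx,

then copy the pull command and run it on the server: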

docker pull userxy2015/ngnix

[root@beta-5gcc-1 chart]# docker images|grep ngnix

userxy2015/ngnix latest 17a92fa0c614 4 years ago 182MB

docker save userxy2015/ngnix:latest -o ~/nginx.tar

# copy the saved tar image to a host in the internal network, then run

docker load -i nginx.tar

# re-tag the image; the 192.168.2.1 address is the harbor address

docker tag userxy2015/ngnix:latest 192.168.2.1/rancher/nginx:latest

docker login 192.168.2.1

docker push 192.168.2.1/rancher/nginx:latest
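
When many images have to be moved, the target name can be derived instead of typed by hand. The sketch below assumes a hypothetical helper harbor_ref that keeps the image name and tag and replaces the registry and namespace with the harbor address and project. Unlike the manual example above, it does not rename the image (ngnix stays ngnix).

```shell
# Hypothetical helper: map a source image reference to its name inside a harbor project.
# $1 = harbor address, $2 = harbor project, $3 = source image (e.g. userxy2015/ngnix:latest)
harbor_ref() {
  # ${3##*/} drops everything up to the last "/", i.e. the source registry and namespace.
  printf '%s/%s/%s\n' "$1" "$2" "${3##*/}"
}

# Example use on the internal host, after docker load:
# src=userxy2015/ngnix:latest
# dst=$(harbor_ref 192.168.2.1 rancher "$src")
# docker tag "$src" "$dst" && docker push "$dst"
```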

Log in to harbor and open the rancher project to see the newly pushed image.

Packaging a jar into an image

Create a Dockerfile:

FROM 192.168.211.166/nef/jdk:1.8

MAINTAINER holytan

RUN apt-get update

RUN apt-get install zip -y

RUN apt-get install unzip -y

ADD nef-server.jar nef-server.jar

EXPOSE 8085

ENTRYPOINT ["java","-jar","nef-server.jar"]

Run:

docker build -t nef-server:version .

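Here version is a version number you can choose freely. The Dockerfile above installs zip and unzip, which requires network access during the build. If that is not available, consider building this image on a server with internet access and using it as the base image, then copying the jar into the docker container.

To update the jar, run docker cp demo-0.0.1-SNAPSHOT.jar <container ID>:/demo.jar, which copies demo-0.0.1-SNAPSHOT.jar into the container under a new name, then restart the container with docker restart <container ID>. Another approach is to bake the rpm packages together with the service jar into the image and install the offline packages when the image runs. This is the better option, although the offline packages have to be collected first.

Installing rancher

Official rancher offline installation guide

rancher and harbor each need a dedicated server; these servers cannot join the k8s cluster.

Using rancher to install k8s is a relatively simple approach: once rancher is up, a single command is enough to create a cluster. The hard part is collecting the images of the k8s components, but the official project has already done that for you. On a host with internet access, run the image-saving script rancher-save-images.sh: ./rancher-save-images.sh --image-list ./rancher-images.txt. The scripts can all be found on the rancher Chinese website. This article uses v2.5.0, whose corresponding k8s version is v1.19.2.

After the script finishes and before pushing, create a public project named rancher in harbor. The server on which rancher is set up should have about 80G of disk space. Then run docker login harbor_address, followed by rancher-load-images.sh to push the images to your own registry: ./rancher-load-images.sh --image-list ./rancher-images.txt --registry <harbor address>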

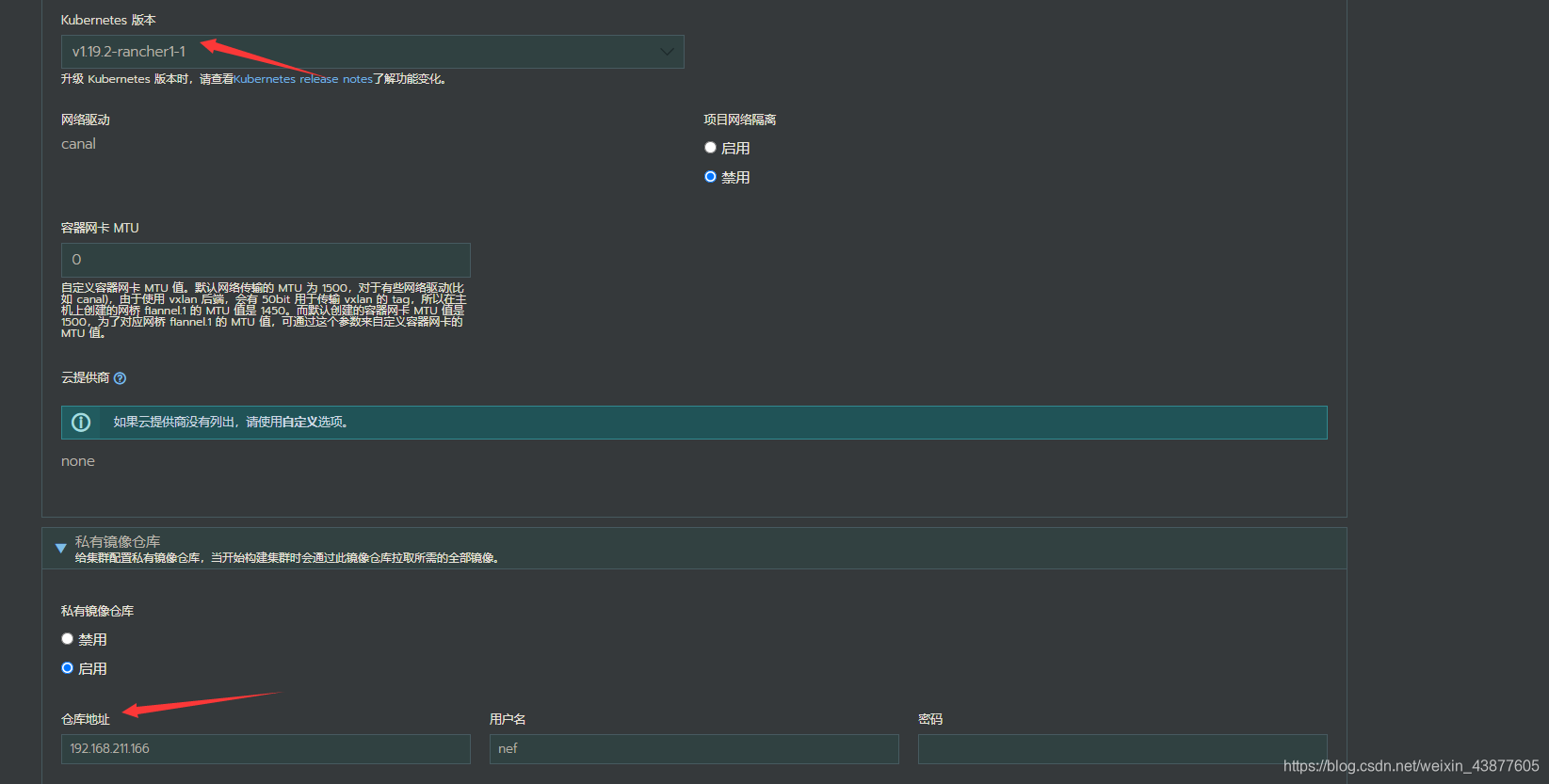

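rancher-images.txt: the image list here matches k8s v1.19.2. If the version chosen when creating the cluster in rancher does not match the images, the setup fails.

Once the images are ready, move to the internal network and set up the rancher server by running either of the following commands: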

docker run -d --privileged --restart=unless-stopped -p 80:80 -p 443:443 192.168.211.166/rancher/rancher:v2.5.0

docker run -d --privileged --restart=unless-stopped -p 80:80 -p 443:443 -e CATTLE_SYSTEM_CATALOG=bundled -e CATTLE_SYSTEM_DEFAULT_REGISTRY=192.168.211.166 192.168.211.166/rancher/rancher:v2.5.0

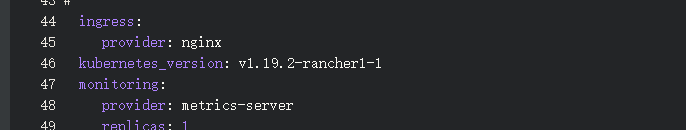

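After waiting about a minute, open the IP of the rancher host in a browser, log in to rancher and create a cluster. Two points need attention: enable the private registry, and make sure the k8s version matches the version of the k8s component images. If the k8s version cannot be selected, switch cluster creation to YAML input and enter v1.19.2-rancher1-1 directly in kubernetes_version.

Next, generate the command for each host according to its role, copy it to the target server and run it. If problems occur, inspect the logs with docker logs -f. The core component is the rancher agent, which is responsible for pulling the k8s components and registering the node with the cluster.

Installing the k8s master node

The master node needs kubectl and helm. Download the required version of kubectl by replacing the version number in the command: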

curl -LO https://dl.k8s.io/release/v1.18.17/bin/linux/amd64/kubectl

chmod +x kubectl

sudo mv kubectl /usr/local/bin/kubectl
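
Since only the version segment of the download URL changes, it can be factored out. The helper name kubectl_url below is an assumption for illustration; the URL pattern is the one used in the command above. Kubernetes documents kubectl as supported within one minor version of the cluster, so v1.18.x and v1.19.x clients both work against the v1.19.2 cluster used here.

```shell
# Hypothetical helper: build the kubectl download URL for a given release.
# $1 = release tag including the leading "v", e.g. v1.19.2
kubectl_url() {
  printf 'https://dl.k8s.io/release/%s/bin/linux/amd64/kubectl\n' "$1"
}

# Example, run on a host with internet access:
# curl -LO "$(kubectl_url v1.19.2)"
```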

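As of helm 3, helm no longer depends on tiller, so it only needs to be downloaded from the helm releases page on GitHub. In addition, copy the content of the kubeconfig file from rancher into ~/.kube/config; create the directory if it does not exist.

Automatic PV creation for PVCs with NFS

Set up the NFS server: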

yum install nfs-utils -y

yum install rpcbind -y

# set up the shared directory

mkdir -p /nfs/data/

chmod 755 /nfs/data

cat <<EOF >>/etc/exports

/nfs/ *(async,insecure,no_root_squash,no_subtree_check,rw)

/nfs/data/ *(async,insecure,no_root_squash,no_subtree_check,rw)

EOF

exportfs -r

systemctl start rpcbind

systemctl start nfs

systemctl enable rpcbind

systemctl enable nfs-server.service

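Setting up nfs-client-provisioner:

This requires the nfs-client-provisioner image, which can be obtained and pushed to the private registry in the same way as described earlier.

Create the permissions with kubectl create -f cluster-admin.rbac.yaml (content of cluster-admin.rbac.yaml below). On Kubernetes v1.22 and later, rbac.authorization.k8s.io/v1beta1 is no longer served and rbac.authorization.k8s.io/v1 has to be used instead.

---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: dcm-rbac
  name: k8s-admin
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: k8s-admin-crb
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: k8s-admin
    namespace: kube-system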

Create the nfs-client-provisioner with kubectl create -f nfs-client-provisioner.yaml (content of nfs-client-provisioner.yaml below):

kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nfs-client-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: k8s-admin
      containers:
        - name: nfs-client-provisioner
          image: alpha-harbor.domain.com/k8s-depend/quay.io/external_storage/nfs-client-provisioner:latest
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: 172.20.131.251
            - name: NFS_PATH
              value: /nfs/data
      volumes:
        - name: nfs-client-root
          nfs:
            server: 172.20.131.251
            path: /nfs/data

Create the StorageClass with kubectl create -f nfs-client-class.yaml (content of nfs-client-class.yaml below). The provisioner value must match PROVISIONER_NAME in the deployment.

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs

Create a PVC that uses the nfs-client-provisioner to verify that NFS is configured correctly (content of nfs-client-pvc.yaml below):

kubectl create -f nfs-client-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-client-pvc
  namespace: kube-system
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: managed-nfs-storage
  resources:
    requests:
      storage: 100Mi

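Run kubectl get pvc nfs-client-pvc -n kube-system to check whether the PVC was bound to a PV automatically.

Run kubectl delete pvc nfs-client-pvc -n kube-system to delete the PVC once verification is done.

Script-based installation

Building an offline k8s cluster from scratch (harbor, recommended)

kubesphere offline installation

Installing and configuring ingress-nginx

ingress has two core components. If the k8s cluster was installed with rancher as described above, these components are installed automatically.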

[root@k8s-master chart]# kubectl get pods -n ingress-nginx

NAME READY STATUS RESTARTS AGE

default-http-backend-8455484774-jl96f 1/1 Running 3 13d

nginx-ingress-controller-bvsp2 1/1 Running 3 8d

[root@k8s-master chart]# kubectl get service|grep opf

opf-message-push-history-kafka-listener NodePort 10.43.198.52 <none> 8085:30711/TCP 13d

The service used for this test is opf-message. Listing the services shows that its externally exposed NodePort is 30711, while the service itself listens on 8085 internally.

Create an ingress:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
    - host: nefserver.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: opf-message-push-history-kafka-listener
                port:
                  number: 8085
          - path: /v2
            pathType: Prefix
            backend:
              service:
                name: web2
                port:
                  number: 8080

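host can be defined freely, but it has to match the external nginx configuration used later for access. The name under service is the one shown by kubectl get service above, and port is the port the service actually runs on. path can forward different sub-paths to different services. Do not change the rewrite-target annotation to /$1 here: doing so drops the entire sub-path forwarded by nginx. This is a pitfall left by the official k8s tutorial on using nginx-ingress in a Minikube environment.

Once the file is written, run: kubectl apply -f example-ingress.yaml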

[root@k8s-master chart]# kubectl get ingress

Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

example-ingress <none> nefserver.com 192.168.225.73,192.168.226.106,192.168.226.220,192.168.227.186 80 7d23h

ADDRESS lists the addresses through which the service can be reached from outside. Since there are several, an external nginx can be used for load balancing:

upstream nef_server_pool {
    server 192.168.211.169:80;
    server 192.168.211.165:80;
    server 192.168.213.179:80;
    server 192.168.211.177:80;
}

server {
    listen 8080;
    server_name 192.168.154.200;
    access_log /service/log/nef.log;

    location /nef {
        proxy_set_header Host nefserver.com;
        proxy_pass http://nef_server_pool/;
        proxy_set_header X-Forwarded-For $remote_addr;
        proxy_set_header X-Forwarded-Port $remote_port;
    }
}

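This configuration has a drawback: server_name is an IP, so services can only be distinguished by sub-path when forwarding. That is inflexible and changes the interface the original service exposes. Normally the service's domain name goes here, nefserver.com in this case. As this is an internal domain, an internal DNS server has to be set up that points all the service domains involved at this nginx. With one port listening for multiple domain names, a single nginx can then act as the proxy for the k8s services.

Distinguishing multiple services by sub-path

Suppose that under one domain you want to distinguish services by sub-path. For example, domain.com/A/hi should be forwarded to A, which receives the request hi, and domain.com/B/hey should be forwarded to B, which receives the request hey.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
  namespace: example-namespace
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  rules:
    - host: myexample.com
      http:
        paths:
          - path: /aaa(/|$)(.*)
            pathType: Prefix
            backend:
              service:
                name: app-a
                port:
                  number: 80
          - path: /bbb(/|$)(.*)
            pathType: Prefix
            backend:
              service:
                name: app-b
                port:
                  number: 80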

Examples:

rewrite.bar.com/something rewrites to rewrite.bar.com/

rewrite.bar.com/something/ rewrites to rewrite.bar.com/

rewrite.bar.com/something/new rewrites to rewrite.bar.com/new
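
The three rewrites above follow from the capture groups: with a path of the form /something(/|$)(.*) and rewrite-target /$2, the request path is replaced by "/" plus whatever the second group captured. The sketch below reproduces that substitution with sed so the effect can be checked locally. The function name rewrite_path is hypothetical, and it only imitates the regular-expression step, not the ingress controller itself.

```shell
# Hypothetical helper: imitate rewrite-target /$2 for a rule path of <prefix>(/|$)(.*)
# $1 = path prefix from the Ingress rule (plain text, no regex metacharacters)
# $2 = incoming request path
rewrite_path() {
  # Group 1 matches "/" or end of string, group 2 is the remainder that is kept.
  printf '%s\n' "$2" | sed -E "s#^$1(/|\$)(.*)#/\2#"
}

# Example calls:
# rewrite_path /something /something
# rewrite_path /something /something/
# rewrite_path /something /something/new
```

A path that does not match the rule, such as /somethingelse, is left unchanged by this sketch.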

ingress-kubernetes-io-rewrite-target-1-mean

Using ingress on Minikube (main reference)

Ingress-kubernetes

Tearing down and rebuilding a node

It is best to follow the official guide: clean-cluster-nodes

docker stop $(docker ps -aq)

docker system prune -f

docker volume rm $(docker volume ls -q)

rm -rf /etc/ceph /etc/cni /etc/kubernetes /opt/cni /opt/rke /run/secrets/kubernetes.io /run/calico /run/flannel /var/lib/calico /var/lib/etcd /var/lib/cni /var/lib/kubelet /var/lib/rancher/rke/log /var/log/containers /var/log/pods /var/run/calico /usr/local/software/rancher-home

Another script:

#!/bin/bash

KUBE_SVC='
kubelet
kube-scheduler
kube-proxy
kube-controller-manager
kube-apiserver
'

for kube_svc in ${KUBE_SVC};
do
  # stop the service
  if [[ `systemctl is-active ${kube_svc}` == 'active' ]]; then
    systemctl stop ${kube_svc}
  fi
  # disable the service at boot
  if [[ `systemctl is-enabled ${kube_svc}` == 'enabled' ]]; then
    systemctl disable ${kube_svc}
  fi
done

# stop all containers
docker stop $(docker ps -aq)

# remove all containers
docker rm -f $(docker ps -qa)

# remove all container volumes
docker volume rm $(docker volume ls -q)

# unmount the mount points
for mount in $(mount | grep tmpfs | grep '/var/lib/kubelet' | awk '{ print $3 }') /var/lib/kubelet /var/lib/rancher;
do
  umount $mount;
done

# back up directories
mv /etc/kubernetes /etc/kubernetes-bak-$(date +"%Y%m%d%H%M")
mv /var/lib/etcd /var/lib/etcd-bak-$(date +"%Y%m%d%H%M")
mv /var/lib/rancher /var/lib/rancher-bak-$(date +"%Y%m%d%H%M")
mv /opt/rke /opt/rke-bak-$(date +"%Y%m%d%H%M")

# remove leftover paths
rm -rf /etc/ceph \
    /etc/cni \
    /opt/cni \
    /run/secrets/kubernetes.io \
    /run/calico \
    /run/flannel \
    /var/lib/calico \
    /var/lib/cni \
    /var/lib/kubelet \
    /var/log/containers \
    /var/log/kube-audit \
    /var/log/pods \
    /var/run/calico \
    /usr/libexec/kubernetes

# clean up network interfaces; names whose first three characters appear in this list are kept
no_del_net_inter='
lo
docker0
eth
ens
bond
'

network_interface=`ls /sys/class/net`

for net_inter in $network_interface;
do
  if ! echo "${no_del_net_inter}" | grep -qE ${net_inter:0:3}; then
    ip link delete $net_inter
  fi
done

# kill leftover processes
port_list='
80
443
6443
2376
2379
2380
8472
9099
10250
10254
'

for port in $port_list;
do
  pid=`netstat -atlnup | grep $port | awk '{print $7}' | awk -F '/' '{print $1}' | grep -v - | sort -rnk2 | uniq`
  if [[ -n $pid ]]; then
    kill -9 $pid
  fi
done

kube_pid=`ps -ef | grep -v grep | grep kube | awk '{print $2}'`

if [[ -n $kube_pid ]]; then
  kill -9 $kube_pid
fi

# flush the iptables tables
## Note: if the node has custom iptables rules, run the following commands with care. They make hbase unavailable, so hbase must be restarted afterwards.
sudo iptables --flush
sudo iptables --flush --table nat
sudo iptables --flush --table filter
sudo iptables --table nat --delete-chain
sudo iptables --table filter --delete-chain

systemctl restart docker
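
The interface clean-up in the script keeps an interface when the first three characters of its name occur anywhere in the keep-list, which is a loose match. A stricter variant can be sketched with case patterns. The function name is_protected_iface is hypothetical, and the patterns simply mirror the keep-list above (lo, docker0, eth, ens, bond); this is an alternative to the original filter, not what the script itself does.

```shell
# Hypothetical helper: succeed (exit 0) for interfaces that must not be deleted.
# $1 = interface name as listed under /sys/class/net
is_protected_iface() {
  case "$1" in
    lo|docker0|eth*|ens*|bond*) return 0 ;;
    *) return 1 ;;
  esac
}

# Possible use in the clean-up loop:
# for net_inter in $(ls /sys/class/net); do
#   is_protected_iface "$net_inter" || ip link delete "$net_inter"
# done
```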

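If problems occur, the following link offers possible solutions:

rancher installation and deployment

Automatic packaging and image push with jenkins

Run a shell step in the jenkins build. The jenkins build node needs docker installed and the harbor registry address configured, as described in the earlier section.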

mvn clean package

version_img=${SVN_REVISION}.${BUILD_NUMBER}

# the file copied here is the artifact built by your own project; the destination is the directory that contains the Dockerfile

cp -f ${WORKSPACE}/nef-core/nef-server/target/nef-server.jar /opt/k8s-jenkins/nef-server

cd /opt/k8s-jenkins/nef-server

docker build -t 192.168.211.166/nef/nef-server:${version_img} .

# docker login to the harbor address is required before pushing

docker push 192.168.211.166/nef/nef-server:${version_img}

docker rmi 192.168.211.166/nef/nef-server:${version_img}

Problems encountered

can not find RKE state file

Fix the hostname with vim /etc/hostname or hostnamectl set-hostname master

failed pulling image "k8s.gcr.io/pause:3.1"

First borrow a server with internet access:

docker pull registry.cn-beijing.aliyuncs.com/zhoujun/pause:3.1

docker save registry.aliyuncs.com/google_containers/pause:3.1 -o /root/download/images/pause.tar

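Upload the downloaded pause.tar to the offline server. Note that the image names in these commands were captured from different runs: the name passed to docker save must be the image that was actually pulled, and the source name for docker tag must be the one printed by docker load.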

[root@k8s-worker2 download]# docker load -i pause.tar

Loaded image: rancher/pause:3.1

## then run

docker tag rancher/pause:3.1 k8s.gcr.io/pause:3.1

tiller fails to start

wget https://get.helm.sh/helm-v2.14.3-linux-amd64.tar.gz

sed -i '$a\export HELM_HOST=localhost:44134' /etc/profile

source /etc/profile

nohup tiller &

The connection to the server localhost:8080 was refused

tiller was not started.

Scaling the number of pods dynamically

kubectl scale deployments/litemall-wx --replicas=2