
Pitfalls I Ran Into While Setting Up a k8s Cluster


1. Error when creating the Kubernetes certificates

Context: self-signing the APIServer SSL certificate for the k8s cluster, plus the master1 configuration.

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes


It failed with:

Failed to load config file: {"code":5200,"message":"failed to unmarshal configuration: invalid character '\"' after array element"}

Failed to parse input: unexpected end of JSON input


The cause was that ca-config.json and ca-csr.json were empty. After filling in their contents, I reran the commands below and they succeeded:

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
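cfssl only reports a raw JSON parse error, so a quick pre-check of the input files can save a round trip. The first message above ("invalid character ... after array element") is what Go's JSON parser reports for a syntax error such as a missing comma inside an array. "unexpected end of JSON input" is what it reports for an empty input.

Below is a minimal Python sketch. It assumes the file names and the kubernetes profile used in the commands above. check_json_inputs, gencert_pipeline, run_pipeline and generate_all are hypothetical helpers, not part of cfssl.

```python
import json
import subprocess
from pathlib import Path


def check_json_inputs(paths, required_profile=None):
    """Return {path: problem} for each file that is missing, empty or not valid JSON.

    When required_profile is given, any file with a "signing" section (i.e. a
    ca-config.json) must also define that profile, since -profile refers to it.
    """
    problems = {}
    for p in map(Path, paths):
        if not p.is_file():
            problems[str(p)] = "missing"
            continue
        text = p.read_text(encoding="utf-8")
        if not text.strip():
            # cfssl reports this case as "unexpected end of JSON input"
            problems[str(p)] = "empty"
            continue
        try:
            data = json.loads(text)
        except json.JSONDecodeError as exc:
            # e.g. a missing comma between array elements
            problems[str(p)] = f"invalid JSON: {exc}"
            continue
        signing = data.get("signing") if isinstance(data, dict) else None
        if required_profile and isinstance(signing, dict):
            if required_profile not in (signing.get("profiles") or {}):
                problems[str(p)] = f"profile {required_profile!r} not defined"
    return problems


def gencert_pipeline(name, csr_file=None):
    """Argument lists for: cfssl gencert ... <name>-csr.json | cfssljson -bare <name>"""
    csr_file = csr_file or f"{name}-csr.json"
    cfssl = ["cfssl", "gencert", "-ca=ca.pem", "-ca-key=ca-key.pem",
             "-config=ca-config.json", "-profile=kubernetes", csr_file]
    return cfssl, ["cfssljson", "-bare", name]


def run_pipeline(producer, consumer):
    """Run producer, then feed its stdout to consumer (a sequential, shell-free pipe).

    subprocess.CalledProcessError is raised if either command exits non-zero;
    stderr is not captured, so cfssl's messages still reach the terminal.
    """
    out = subprocess.run(producer, check=True, stdout=subprocess.PIPE).stdout
    subprocess.run(consumer, input=out, check=True)


def generate_all(components=("server", "kube-proxy")):
    """Hypothetical driver: validate inputs, create the CA, then sign each component."""
    inputs = ["ca-config.json", "ca-csr.json", *(f"{n}-csr.json" for n in components)]
    bad = check_json_inputs(inputs, required_profile="kubernetes")
    if bad:
        raise SystemExit("\n".join(f"{path}: {why}" for path, why in bad.items()))
    run_pipeline(["cfssl", "gencert", "-initca", "ca-csr.json"],
                 ["cfssljson", "-bare", "ca", "-"])
    for name in components:
        run_pipeline(*gencert_pipeline(name))
```

Nothing runs on import. Call generate_all() from the directory that holds the CSR files; it stops with a list of problems before cfssl is ever invoked.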


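I built the cluster by following someone else's guide. Original article: Building a Complete Kubernetes Cluster: Self-Signed APIServer SSL Certificate + master1 Configuration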

However, when I finally got to systemctl start kube-scheduler, it failed:

Failed to start kube-scheduler.service: Unit not found.
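systemctl prints "Unit not found" when it has no unit file under that name. The first thing to confirm is therefore whether the guide's kube-scheduler.service file was ever written.

Below is a rough Python check. It assumes the units live in the usual systemd directories; the kube-apiserver status output below shows /usr/lib/systemd/system. find_unit_file and missing_units are hypothetical helpers, and they do not cover every path systemd searches.

```python
from pathlib import Path

# Common systemd unit directories (admin-installed units first). This is an
# approximation: systemd also searches runtime and generator directories.
UNIT_DIRS = ("/etc/systemd/system", "/usr/lib/systemd/system", "/lib/systemd/system")


def find_unit_file(unit, dirs=UNIT_DIRS):
    """Return the first existing unit file for `unit`, or None if none is found."""
    if not unit.endswith(".service"):
        unit += ".service"
    for d in dirs:
        candidate = Path(d) / unit
        if candidate.is_file():
            return candidate
    return None


def missing_units(units, dirs=UNIT_DIRS):
    """Names from `units` that have no unit file in any of `dirs`."""
    return [u for u in units if find_unit_file(u, dirs) is None]
```

For example, missing_units(["kube-apiserver", "kube-scheduler"]) lists which of the two units was never installed. After adding a unit file, systemctl daemon-reload is needed before systemctl start can see it.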


I then checked the status of kube-apiserver:

[root@localhost kubernetes]# systemctl status kube-apiserver

● kube-apiserver.service - Kubernetes API Server

Loaded: loaded (/usr/lib/systemd/system/kube-apiserver.service; enabled; vendor preset: disabled)

Active: failed (Result: start-limit) since Sat 2020-08-15 15:43:51 CST; 10s ago

Docs: https://github.com/GoogleCloudPlatform/kubernetes

Process: 56292 ExecStart=/usr/bin/kube-apiserver $KUBE_LOGTOSTDERR $KUBE_LOG_LEVEL $KUBE_ETCD_SERVERS $KUBE_API_ADDRESS $KUBE_API_PORT $KUBELET_PORT $KUBE_ALLOW_PRIV $KUBE_SERVICE_ADDRESSES $KUBE_ADMISSION_CONTROL $KUBE_API_ARGS (code=exited, status=255)

Main PID: 56292 (code=exited, status=255)

Aug 15 15:43:50 localhost.localdomain systemd[1]: kube-apiserver.service: main process exited, code=exited, status=255/n/a

Aug 15 15:43:50 localhost.localdomain systemd[1]: Unit kube-apiserver.service entered failed state.

Aug 15 15:43:50 localhost.localdomain systemd[1]: kube-apiserver.service failed.

Aug 15 15:43:51 localhost.localdomain systemd[1]: kube-apiserver.service holdoff time over, scheduling restart.

Aug 15 15:43:51 localhost.localdomain systemd[1]: Stopped Kubernetes API Server.

Aug 15 15:43:51 localhost.localdomain systemd[1]: start request repeated too quickly for kube-apiserver.service

Aug 15 15:43:51 localhost.localdomain systemd[1]: Failed to start Kubernetes API Server.

Aug 15 15:43:51 localhost.localdomain systemd[1]: Unit kube-apiserver.service entered failed state.

Aug 15 15:43:51 localhost.localdomain systemd[1]: kube-apiserver.service failed.


The logs indicated that kube-scheduler failing to start was causing kube-apiserver to fail as well.

At this point, kubectl get pods fails too:

E0919 14:57:21.242964 414467 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

E0919 14:57:21.243436 414467 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

E0919 14:57:21.245295 414467 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

E0919 14:57:21.246960 414467 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

E0919 14:57:21.248726 414467 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused
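"connection refused" on 127.0.0.1:8080 means nothing is accepting TCP connections on that port. kubectl also falls back to localhost:8080 when it cannot find a kubeconfig, whereas a kubeadm-style API server normally listens on 6443. It therefore helps to probe both ports.

This is a minimal sketch. port_is_listening and probe_apiserver are hypothetical names, and the port list is an assumption to adjust for your setup.

```python
import socket


def port_is_listening(host, port, timeout=1.0):
    """True if a TCP connection to host:port succeeds, i.e. something is listening."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # ConnectionRefusedError, timeouts and unreachable hosts all end up here
        return False


def probe_apiserver(host="127.0.0.1", ports=(8080, 6443)):
    """Map each candidate API server port to whether it accepts TCP connections."""
    return {port: port_is_listening(host, port) for port in ports}
```

If 8080 is closed but 6443 is open, the API server itself is probably up and kubectl is just missing its kubeconfig.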


To dig into the kubectl errors, check the kubelet logs with journalctl -f -u kubelet, or filter /var/log/messages as shown here:

[root@localhost rpm]# tail -100f /var/log/messages|grep kube

Jun 24 23:35:39 localhost kubelet: F0624 23:35:39.190803 25600 server.go:190] failed to load Kubelet config file /var/lib/kubelet/config.yaml, error failed to read kubelet config file "/var/lib/kubelet/config.yaml", error: open /var/lib/kubelet/config.yaml: no such file or directory

Jun 24 23:35:39 localhost systemd: kubelet.service: main process exited, code=exited, status=255/n/a

Jun 24 23:35:39 localhost systemd: Unit kubelet.service entered failed state.

Jun 24 23:35:39 localhost systemd: kubelet.service failed.

Jun 24 23:35:49 localhost systemd: kubelet.service holdoff time over, scheduling restart.

Jun 24 23:35:49 localhost systemd: Started kubelet: The Kubernetes Node Agent.

Jun 24 23:35:49 localhost systemd: Starting kubelet: The Kubernetes Node Agent...
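Filtering with grep kube still returns plenty of systemd noise. The line that matters is the one whose klog header starts with F (fatal). klog lines begin with a severity letter (I, W, E or F), followed by MMDD, the time, a thread id and "file:line]".

Below is a small Python sketch that keeps only error and fatal entries. It assumes that header format; klog_entries is a hypothetical helper.

```python
import re

# klog header: <severity><MMDD> <hh:mm:ss.uuuuuu> <thread id> <file:line>] <message>
KLOG_RE = re.compile(
    r"(?P<sev>[IWEF])(?P<mmdd>\d{4}) (?P<time>\d{2}:\d{2}:\d{2}\.\d{6})\s+"
    r"(?P<tid>\d+) (?P<src>[^\]\s]+)\] (?P<msg>.*)"
)
SEVERITY_ORDER = "IWEF"  # info < warning < error < fatal


def klog_entries(lines, min_severity="E"):
    """Yield (severity, source, message) for klog lines at or above min_severity."""
    threshold = SEVERITY_ORDER.index(min_severity)
    for line in lines:
        m = KLOG_RE.search(line)
        if m and SEVERITY_ORDER.index(m["sev"]) >= threshold:
            yield m["sev"], m["src"], m["msg"]
```

Passing an open /var/log/messages file object as lines works, since iterating over a file yields its lines.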


The cause: kubelet could not find its config file /var/lib/kubelet/config.yaml, which had somehow been deleted. The fix is to run:

kubeadm init


Then check the directory:

[root@localhost rpm]# cd /var/lib/kubelet/

[root@localhost kubelet]# ls -l

total 12

-rw-r--r--. 1 root root 1609 Jun 24 23:40 config.yaml

-rw-r--r--. 1 root root 40 Jun 24 23:40 cpu_manager_state

drwxr-xr-x. 2 root root 61 Jun 24 23:41 device-plugins

-rw-r--r--. 1 root root 124 Jun 24 23:40 kubeadm-flags.env

drwxr-xr-x. 2 root root 170 Jun 24 23:41 pki

drwx------. 2 root root 6 Jun 24 23:40 plugin-containers

drwxr-x---. 2 root root 6 Jun 24 23:40 plugins

drwxr-x---. 7 root root 210 Jun 24 23:41 pods

[root@localhost kubelet]#
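To confirm that kubeadm init really restored the kubelet's files, a quick check of the directory is enough. This is a minimal sketch. It assumes the two file names from the listing above are the ones that matter; missing_kubelet_files is a hypothetical helper.

```python
from pathlib import Path

# Two of the files the listing above shows kubeadm writing into /var/lib/kubelet.
EXPECTED = ("config.yaml", "kubeadm-flags.env")


def missing_kubelet_files(base="/var/lib/kubelet", expected=EXPECTED):
    """Return the expected kubelet files that are absent or empty under base."""
    root = Path(base)
    return [name for name in expected
            if not (root / name).is_file() or (root / name).stat().st_size == 0]
```

An empty result means the files are back and kubelet can be restarted.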


After that, kubelet starts again and runs normally:

[root@localhost kubelet]# systemctl status kubelet.service

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since Thu 2021-06-24 23:40:36 PDT; 4min 31s ago

Docs: http://kubernetes.io/docs/

Main PID: 26379 (kubelet)

Memory: 40.1M

CGroup: /system.slice/kubelet.service

└─26379 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubele...

Jun 24 23:44:46 localhost.localdomain kubelet[26379]: W0624 23:44:46.705701 26379 cni.go:172] Unable to update cni config: No networks found in /etc/cni/net.d

Jun 24 23:44:46 localhost.localdomain kubelet[26379]: E0624 23:44:46.705816 26379 kubelet.go:2110] Container runtime network not ready: NetworkReady=fals...itialized

Jun 24 23:44:51 localhost.localdomain kubelet[26379]: W0624 23:44:51.706635 26379 cni.go:172] Unable to update cni config: No networks found in /etc/cni/net.d

Jun 24 23:44:51 localhost.localdomain kubelet[26379]: E0624 23:44:51.706743 26379 kubelet.go:2110] Container runtime network not ready: NetworkReady=fals...itialized

Jun 24 23:44:56 localhost.localdomain kubelet[26379]: W0624 23:44:56.708389 26379 cni.go:172] Unable to update cni config: No networks found in /etc/cni/net.d

Jun 24 23:44:56 localhost.localdomain kubelet[26379]: E0624 23:44:56.708506 26379 kubelet.go:2110] Container runtime network not ready: NetworkReady=fals...itialized

Jun 24 23:45:01 localhost.localdomain kubelet[26379]: W0624 23:45:01.710355 26379 cni.go:172] Unable to update cni config: No networks found in /etc/cni/net.d

Jun 24 23:45:01 localhost.localdomain kubelet[26379]: E0624 23:45:01.710556 26379 kubelet.go:2110] Container runtime network not ready: NetworkReady=fals...itialized

Jun 24 23:45:06 localhost.localdomain kubelet[26379]: W0624 23:45:06.712081 26379 cni.go:172] Unable to update cni config: No networks found in /etc/cni/net.d

Jun 24 23:45:06 localhost.localdomain kubelet[26379]: E0624 23:45:06.712241 26379 kubelet.go:2110] Container runtime network not ready: NetworkReady=fals...itialized

Hint: Some lines were ellipsized, use -l to show in full.

[root@localhost kubelet]#


However, systemctl start kube-scheduler still kept failing, and the apiserver would not come up:

Failed to start kube-scheduler.service: Unit not found.


Since I don't specialize in k8s and was only using it to host services, I simply initialized the cluster again from scratch.

Master initialization:

kubeadm init --node-name=master --image-repository=registry.aliyuncs.com/google_containers --cri-socket=unix:///var/run/cri-dockerd.sock --apiserver-advertise-address=<server-IP> --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12
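The kubeadm init line above bundles several values that are easy to mistype, in particular the two CIDRs, which must not overlap. Below is a minimal Python sketch that rebuilds the same argument list, using the values from that command as defaults. It first checks them with the standard ipaddress module. kubeadm_init_args is a hypothetical helper, and the node name and advertise address must be supplied by you.

```python
import ipaddress


def kubeadm_init_args(node_name, advertise_address, *,
                      image_repository="registry.aliyuncs.com/google_containers",
                      cri_socket="unix:///var/run/cri-dockerd.sock",
                      pod_cidr="10.244.0.0/16", service_cidr="10.96.0.0/12"):
    """Build the kubeadm init argument list used above, after basic sanity checks."""
    ipaddress.ip_address(advertise_address)      # ValueError if not a valid IP
    pods = ipaddress.ip_network(pod_cidr)        # ValueError if host bits are set
    services = ipaddress.ip_network(service_cidr)
    if pods.overlaps(services):
        raise ValueError(f"pod CIDR {pods} overlaps service CIDR {services}")
    return [
        "kubeadm", "init",
        f"--node-name={node_name}",
        f"--image-repository={image_repository}",
        f"--cri-socket={cri_socket}",
        f"--apiserver-advertise-address={advertise_address}",
        f"--pod-network-cidr={pods}",
        f"--service-cidr={services}",
    ]
```

Pass the real server IP and run the resulting list with subprocess.run(args, check=True). No shell quoting is involved.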


After that, everything worked.

A side note:

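When I ran kubeadm init, it kept reporting that port 10259 was already in use. Even after the run hung, re-running the initialization still failed with the same port-in-use error.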

I checked which service was using port 10259 and found that it was held by the kube-apiserver service, so I ran:

systemctl stop kube-apiserver


After that, the initialization succeeded.
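Before rerunning kubeadm init, it can help to check all the control-plane ports at once instead of discovering them one failure at a time. kubeadm's preflight checks expect 6443, 2379, 2380, 10250, 10257 and 10259 to be free on a new control-plane node; 10259 is kube-scheduler's default secure port.

Below is a minimal Python sketch that tries to bind each port. port_in_use and busy_ports are hypothetical helpers. The check only tells you that a port is taken, so use something like ss -lntp to see which process holds it.

```python
import errno
import socket

# Ports kubeadm's preflight checks expect to be free on a new control-plane node.
CONTROL_PLANE_PORTS = (6443, 2379, 2380, 10250, 10257, 10259)


def port_in_use(port, host="0.0.0.0"):
    """True if binding host:port fails with EADDRINUSE (another socket holds it).

    Without SO_REUSEADDR, a port still in TIME_WAIT is also reported as in use.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        try:
            s.bind((host, port))
        except OSError as exc:
            if exc.errno == errno.EADDRINUSE:
                return True
            raise
    return False


def busy_ports(ports=CONTROL_PLANE_PORTS, host="0.0.0.0"):
    """The subset of `ports` that cannot currently be bound on `host`."""
    return [p for p in ports if port_in_use(p, host)]
```

An empty busy_ports() result means none of these ports should block kubeadm's preflight port checks.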

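My personal view: if, like me, you need to run services on k8s without knowing it well, and the cluster carries no production workloads or important services, you can consider simply restarting the cluster. For reference, see: How to Restart a Kubernetes Cluster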

2. kubeadm reset finds multiple CRI endpoints on the host

[root@master kubernetes]# kubeadm reset

Found multiple CRI endpoints on the host. Please define which one do you wish to use by setting the 'criSocket' field in the kubeadm configuration file: unix:///var/run/containerd/containerd.sock, unix:///var/run/cri-dockerd.sock


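Append --cri-socket=unix:///var/run/cri-dockerd.sock to the command, replacing the value with the endpoint you actually use. For example: kubeadm reset --cri-socket=unix:///var/run/cri-dockerd.sock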
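When both the containerd and cri-dockerd sockets exist, kubeadm refuses to guess. Below is a minimal sketch that lists which of the two endpoints from the error actually exist and builds the --cri-socket flag. You still decide which runtime you use. present_endpoints and cri_socket_flag are hypothetical helpers, and the root parameter exists only so the check can be pointed at a test directory.

```python
import os

# The two endpoints kubeadm reported in the error above; extend as needed.
CANDIDATES = ("unix:///var/run/containerd/containerd.sock",
              "unix:///var/run/cri-dockerd.sock")


def present_endpoints(candidates=CANDIDATES, root=""):
    """Return the candidate endpoints whose socket path exists under `root`."""
    found = []
    for ep in candidates:
        path = ep[len("unix://"):] if ep.startswith("unix://") else ep
        if os.path.exists(root + path):
            found.append(ep)
    return found


def cri_socket_flag(found, preferred=None):
    """Return --cri-socket for the single endpoint, or for `preferred` if several exist."""
    if len(found) == 1:
        return f"--cri-socket={found[0]}"
    if preferred in found:
        return f"--cri-socket={preferred}"
    raise ValueError(f"ambiguous or missing CRI endpoints: {found}; pass preferred=")
```

For example, ["kubeadm", "reset", cri_socket_flag(present_endpoints(), preferred="unix:///var/run/cri-dockerd.sock")] reproduces the command above.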

3. Regenerating the admin.conf file with kubeadm

Run:

kubeadm init phase kubeconfig admin


If it also complains about multiple CRI endpoints, append --cri-socket=unix:///var/run/cri-dockerd.sock in the same way, replacing the value with the endpoint you actually use.
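admin.conf is the kubeconfig that kubectl needs. Without one, kubectl falls back to localhost:8080, as in the errors earlier. kubeadm's own post-init instructions copy it to $HOME/.kube/config; for root, export KUBECONFIG=/etc/kubernetes/admin.conf also works.

Below is a Python sketch of the copy step, assuming the default paths. install_kubeconfig is a hypothetical helper.

```python
import os
import shutil
from pathlib import Path


def install_kubeconfig(src="/etc/kubernetes/admin.conf", dest=None):
    """Copy admin.conf to ~/.kube/config (or dest) with owner-only permissions."""
    dest = Path(dest) if dest else Path.home() / ".kube" / "config"
    dest.parent.mkdir(parents=True, exist_ok=True)
    if dest.exists():
        # keep the previous config as config.bak instead of silently overwriting it
        shutil.copy2(dest, dest.with_name(dest.name + ".bak"))
    shutil.copyfile(src, dest)
    os.chmod(dest, 0o600)   # the file contains cluster-admin credentials
    return dest
```

After this, kubectl picks up the config from its default location without extra flags.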

4. The master node stays NotReady

Checking the logs with journalctl -xeu kubelet shows the following:

“Unable to update cni config”

The cause is that no network (CNI) plugin is installed. Download kube-flannel.yml or calico.yaml and apply it with kubectl apply -f kube-flannel.yml or kubectl apply -f calico.yaml.
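The "No networks found in /etc/cni/net.d" message means that directory has no CNI config file yet. Once the flannel or calico manifest is applied and its pods start, the plugin typically writes a *.conflist file there; flannel, for example, writes 10-flannel.conflist.

Below is a minimal Python sketch to check for that. It assumes the file extensions libcni loads (.conf, .conflist, .json); cni_configs is a hypothetical helper.

```python
from pathlib import Path

# File extensions the CNI config loader (libcni) picks up from the config directory.
CNI_EXTS = {".conf", ".conflist", ".json"}


def cni_configs(conf_dir="/etc/cni/net.d"):
    """List CNI config files; an empty list matches 'No networks found in ...'."""
    d = Path(conf_dir)
    if not d.is_dir():
        return []
    return sorted(p.name for p in d.iterdir() if p.is_file() and p.suffix in CNI_EXTS)
```

Once this returns a file name, the kubelet should stop logging the CNI warning, and the node should turn Ready shortly afterwards.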

5. Pods stuck in Pending

Check whether the node has a taint that the Pod does not tolerate.

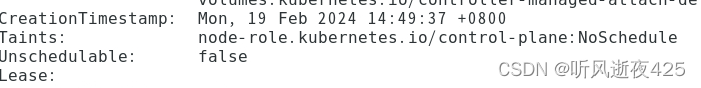

Running kubectl describe node master then revealed the taint.

I then deleted the related deployment with kubectl and added a toleration for that taint, after which the pod reached Running.
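A pod stays Pending when a node carries a NoSchedule or NoExecute taint that none of the pod's tolerations match. The screenshot with the actual taint is not available. The sketch below therefore uses node-role.kubernetes.io/control-plane:NoSchedule purely as an example; this is the taint recent kubeadm versions usually put on control-plane nodes.

The code is a simplified Python model of the documented matching rules:

- An empty effect on the toleration matches every effect.
- Exists ignores the value, and an empty key with Exists matches every taint.
- Equal requires both key and value to match.

Taint, Toleration, tolerates and blocking_taints are hypothetical names.

```python
from dataclasses import dataclass

HARD_EFFECTS = {"NoSchedule", "NoExecute"}  # PreferNoSchedule is only a soft preference


@dataclass(frozen=True)
class Taint:
    key: str
    effect: str
    value: str = ""


@dataclass(frozen=True)
class Toleration:
    key: str = ""
    operator: str = "Equal"   # "Equal" or "Exists"
    value: str = ""
    effect: str = ""          # empty matches every effect


def tolerates(tol, taint):
    """Simplified version of the documented taint/toleration matching rules."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.operator == "Exists":
        return not tol.key or tol.key == taint.key  # empty key + Exists matches all
    return tol.key == taint.key and tol.value == taint.value


def blocking_taints(taints, tolerations):
    """Taints on a node that would keep a pod with these tolerations from scheduling."""
    return [t for t in taints
            if t.effect in HARD_EFFECTS and not any(tolerates(tol, t) for tol in tolerations)]
```

In the Deployment's pod template, each Toleration here corresponds to an entry under spec.tolerations with the same key, operator, value and effect fields.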

Here is another article I recommend on this. Original article: Pod Stuck in Pending State

Finally, here is an article on troubleshooting k8s that I found very good:

Kubernetes Cluster Management: Troubleshooting Guide