热门标签

热门文章

- 1李彦宏回顾大模型重构百度这一年

- 2手把手教你使用PLSQL远程连接Oracle数据库【内网穿透】_plsql连接数据库

- 3基于ssh免密码登陆服务器_服务器脚本 ssh免密登录

- 4常见的jmeter面试题及答案

- 5git分支的合并与提交_git merge后提交

- 6ES+HBase【案例】仿百度搜索04:开发仿百度搜索项目_如何实现类似百度的搜索引擎

- 7restful服务接口访问乱码 和 505错误_aws 文件url有空格 505

- 8注意!Gradle的Android插件不支持Java8!(可能包括Maven等等)_gradle与java8不匹配

- 9vue-cli自定义创建项目-eslint依赖冲突解决方式_conflicting peer dependency: eslint-plugin-vue@7.2

- 10计算机系统常见故障及处理,电脑常见故障以及解决方案都在这里_电脑常见的故障与处理

当前位置: article > 正文

Python BiLSTM_CRF实现代码,电子病历命名实体识别和关系抽取,序列标注_bilstm-crf模型代码

作者:繁依Fanyi0 | 2024-05-30 20:30:42

赞

踩

bilstm-crf模型代码

参考刘焕勇老师的代码,按照自己数据的训练集进行修改,

1.原始数据样式:

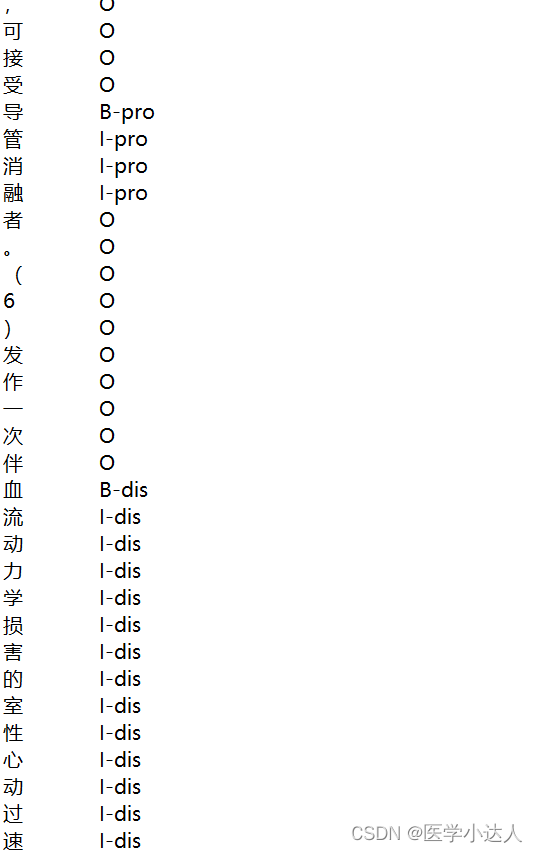

2.清洗后数据样式:

3.训练数据

- import numpy as np

- import matplotlib.pyplot as plt

- import os

-

- from keras import backend as K

- from keras.preprocessing.sequence import pad_sequences

- from keras.models import Sequential

- from keras.layers import Embedding, Bidirectional, LSTM, Dense, TimeDistributed, Dropout

- from keras_contrib.layers.crf import CRF

-

- os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

- '''

- 默认为0,显示所有日志要过滤INFO日志,

- 请将其设置为1WARNINGS另外,2并进一步过滤掉ERROR日志,

- 将其设置为3可以执行以下操作使警告静音

- '''

- class LSTMNER:

- def __init__(self):

- cur = '/'.join(os.path.abspath(__file__).split('/')[:-1])

- self.train_path = os.path.join(cur, 'data/train.txt')

- self.vocab_path = os.path.join(cur, 'model/vocab.txt')

- self.embedding_file = os.path.join(cur, 'model/token_vec_300.bin')

- self.model_path = os.path.join(cur, 'model/tokenvec_bilstm2_crf_model_20.h5')

- self.datas, self.word_dict = self.build_data()

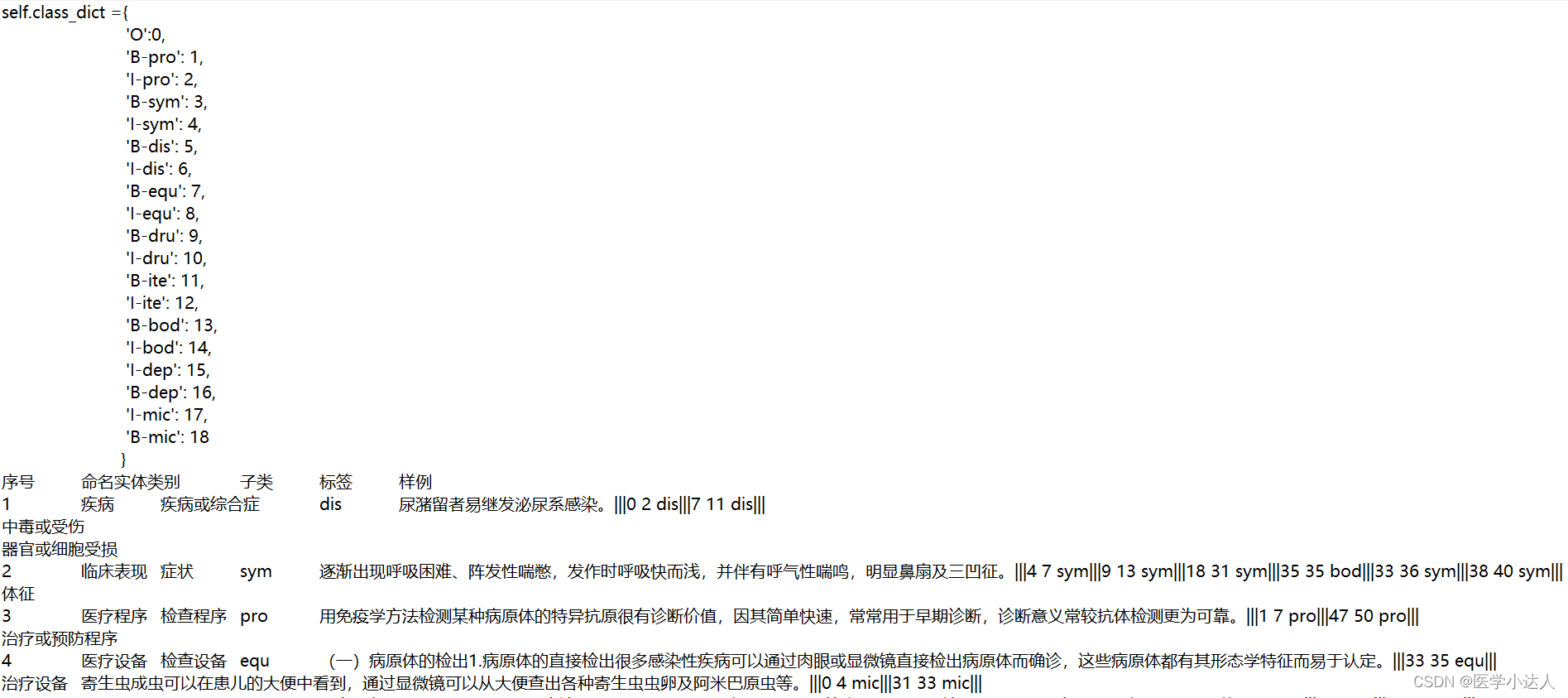

- self.class_dict = {

- 'O': 0,

- 'B-pro': 1,

- 'I-pro': 2,

- 'B-sym': 3,

- 'I-sym': 4,

- 'B-dis': 5,

- 'I-dis': 6,

- 'B-equ': 7,

- 'I-equ': 8,

- 'B-dru': 9,

- 'I-dru': 10,

- 'B-ite': 11,

- 'I-ite': 12,

- 'B-bod': 13,

- 'I-bod': 14,

- 'I-dep': 15,

- 'B-dep': 16,

- 'I-mic': 17,

- 'B-mic': 18

- }

-

- self.EMBEDDING_DIM = 300

- self.EPOCHS = 50

- self.BATCH_SIZE = 64

- self.NUM_CLASSES = len(self.class_dict)

- self.VOCAB_SIZE = len(self.word_dict)

- self.TIME_STAMPS = 150

- self.embedding_matrix = self.build_embedding_matrix()

-

- '''构造数据集'''

- def build_data(self):

- datas = []

- sample_x = []

- sample_y = []

- vocabs = {'UNK'}

- for line in open(self.train_path,encoding = 'utf-8'):

- # if line=='$':

- # continue

- # else:

- line = line.rstrip().strip('$').split('\t')

- if not line:

- continue

- char = line[0]

- if not char:

- continue

- cate = line[-1]

- sample_x.append(char)

- sample_y.append(cate)

- vocabs.add(char)

- if char in ['。','?','!','!','?']:

- datas.append([sample_x, sample_y])

- sample_x = []

- sample_y = []

- word_dict = {wd:index for index, wd in enumerate(list(vocabs))}

- self.write_file(list(vocabs), self.vocab_path)

- return datas, word_dict

-

-

- '''将数据转换成keras所需的格式'''

- def modify_data(self):

- x_train = [[self.word_dict[char] for char in data[0]] for data in self.datas]

- y_train = [[self.class_dict[label] for label in data[1]] for data in self.datas]

- x_train = pad_sequences(x_train, self.TIME_STAMPS)

- y = pad_sequences(y_train, self.TIME_STAMPS)

- y_train = np.expand_dims(y, 2)

- return x_train, y_train

-

- '''保存字典文件'''

- def write_file(self, wordlist, filepath):

- with open(filepath, 'w+',encoding = 'utf-8') as f:

- f.write('\n'.join(wordlist))

-

- '''加载预训练词向量'''

- def load_pretrained_embedding(self):

- embeddings_dict = {}

- with open(self.embedding_file, 'r',encoding = 'utf-8') as f:

- for line in f:

- values = line.strip().split(' ')

- if len(values) < 300:

- continue

- word = values[0]

- coefs = np.asarray(values[1:], dtype='float32')

- embeddings_dict[word] = coefs

- print('Found %s word vectors.' % len(embeddings_dict))

- return embeddings_dict

-

- '''加载词向量矩阵'''

- def build_embedding_matrix(self):

- embedding_dict = self.load_pretrained_embedding()

- embedding_matrix = np.zeros((self.VOCAB_SIZE + 1, self.EMBEDDING_DIM))

- for word, i in self.word_dict.items():

- embedding_vector = embedding_dict.get(word)

- if embedding_vector is not None:

- embedding_matrix[i] = embedding_vector

- return embedding_matrix

-

- '''使用预训练向量进行模型训练'''

- def tokenvec_bilstm2_crf_model(self):

- model = Sequential()

- embedding_layer = Embedding(self.VOCAB_SIZE + 1,

- self.EMBEDDING_DIM,

- weights=[self.embedding_matrix],

- input_length=self.TIME_STAMPS,

- trainable=False,

- mask_zero=True)

- model.add(embedding_layer)

- model.add(Bidirectional(LSTM(128, return_sequences=True)))

- model.add(Dropout(0.5))

- model.add(Bidirectional(LSTM(64, return_sequences=True)))

- model.add(Dropout(0.5))

- model.add(TimeDistributed(Dense(self.NUM_CLASSES)))

- crf_layer = CRF(self.NUM_CLASSES, sparse_target=True)

- model.add(crf_layer)

- model.compile('adam', loss=crf_layer.loss_function, metrics=[crf_layer.accuracy])

- model.summary()

- return model

-

- '''训练模型'''

- def train_model(self):

- x_train, y_train = self.modify_data()

- print(x_train.shape, y_train.shape)

- model = self.tokenvec_bilstm2_crf_model()

- history = model.fit(x_train[:],

- y_train[:],

- validation_split=0.2,

- batch_size=self.BATCH_SIZE,

- epochs=self.EPOCHS)

- # self.draw_train(history)

- model.save(self.model_path)

- return model

-

- '''绘制训练曲线'''

- def draw_train(self, history):

- # Plot training & validation accuracy values

- plt.plot(history.history['acc'])

- plt.title('Model accuracy')

- plt.ylabel('Accuracy')

- plt.xlabel('Epoch')

- plt.legend(['Train'], loc='upper left')

- plt.show()

- # Plot training & validation loss values

- plt.plot(history.history['loss'])

- plt.title('Model loss')

- plt.ylabel('Loss')

- plt.xlabel('Epoch')

- plt.legend(['Train'], loc='upper left')

- plt.show()

- # 7836/7836 [==============================] - 205s 26ms/step - loss: 17.1782 - acc: 0.9624

- '''

- 6268/6268 [==============================] - 145s 23ms/step - loss: 18.5272 - acc: 0.7196 - val_loss: 15.7497 - val_acc: 0.8109

- 6268/6268 [==============================] - 142s 23ms/step - loss: 17.8446 - acc: 0.9099 - val_loss: 15.5915 - val_acc: 0.8378

- 6268/6268 [==============================] - 136s 22ms/step - loss: 17.7280 - acc: 0.9485 - val_loss: 15.5570 - val_acc: 0.8364

- 6268/6268 [==============================] - 133s 21ms/step - loss: 17.6918 - acc: 0.9593 - val_loss: 15.5187 - val_acc: 0.8451

- 6268/6268 [==============================] - 144s 23ms/step - loss: 17.6723 - acc: 0.9649 - val_loss: 15.4944 - val_acc: 0.8451

- '''

-

- if __name__ == '__main__':

- ner = LSTMNER()

- ner.train_model()

4.预测数据:

- import numpy as np

- from keras import backend as K

- from keras.preprocessing.sequence import pad_sequences

- from keras.models import Sequential,load_model

- from keras.layers import Embedding, Bidirectional, LSTM, Dense, TimeDistributed, Dropout

- from keras_contrib.layers.crf import CRF

- import matplotlib.pyplot as plt

- import os

-

- os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

-

- class LSTMNER:

- def __init__(self):

- cur = '/'.join(os.path.abspath(__file__).split('/')[:-1])

- self.train_path = os.path.join(cur, 'data/train.txt')

- self.vocab_path = os.path.join(cur, 'model/vocab.txt')

- self.embedding_file = os.path.join(cur, 'model/token_vec_300.bin')

- self.model_path = os.path.join(cur, 'model/tokenvec_bilstm2_crf_model_20.h5')

- self.word_dict = self.load_worddict()

- self.class_dict = {

- 'O': 0,

- 'B-pro': 1,

- 'I-pro': 2,

- 'B-sym': 3,

- 'I-sym': 4,

- 'B-dis': 5,

- 'I-dis': 6,

- 'B-equ': 7,

- 'I-equ': 8,

- 'B-dru': 9,

- 'I-dru': 10,

- 'B-ite': 11,

- 'I-ite': 12,

- 'B-bod': 13,

- 'I-bod': 14,

- 'I-dep': 15,

- 'B-dep': 16,

- 'I-mic': 17,

- 'B-mic': 18

- }

- self.label_dict = {j:i for i,j in self.class_dict.items()}

- self.EMBEDDING_DIM = 300

- self.EPOCHS = 20

- self.BATCH_SIZE = 64

- self.NUM_CLASSES = len(self.class_dict)

- self.VOCAB_SIZE = len(self.word_dict)

- self.TIME_STAMPS = 150

- self.embedding_matrix = self.build_embedding_matrix()

- self.model = self.tokenvec_bilstm2_crf_model()

- self.model.load_weights(self.model_path)

-

- '加载词表'

- def load_worddict(self):

- vocabs = [line.strip() for line in open(self.vocab_path)]

- word_dict = {wd: index for index, wd in enumerate(vocabs)}

- return word_dict

-

- '''构造输入,转换成所需形式'''

- def build_input(self, text):

- x = []

- for char in text:

- if char not in self.word_dict:

- char = 'UNK'

- x.append(self.word_dict.get(char))

- x = pad_sequences([x], self.TIME_STAMPS)

- return x

-

- def predict(self, text):

- str = self.build_input(text)

- raw = self.model.predict(str)[0][-self.TIME_STAMPS:]

- result = [np.argmax(row) for row in raw]

- chars = [i for i in text]

- tags = [self.label_dict[i] for i in result][len(result)-len(text):]

- res = list(zip(chars, tags))

- print(res)

- return res

-

- '''加载预训练词向量'''

- def load_pretrained_embedding(self):

- embeddings_dict = {}

- with open(self.embedding_file, 'r') as f:

- for line in f:

- values = line.strip().split(' ')

- if len(values) < 300:

- continue

- word = values[0]

- coefs = np.asarray(values[1:], dtype='float32')

- embeddings_dict[word] = coefs

- print('Found %s word vectors.' % len(embeddings_dict))

- return embeddings_dict

-

- '''加载词向量矩阵'''

- def build_embedding_matrix(self):

- embedding_dict = self.load_pretrained_embedding()

- embedding_matrix = np.zeros((self.VOCAB_SIZE + 1, self.EMBEDDING_DIM))

- for word, i in self.word_dict.items():

- embedding_vector = embedding_dict.get(word)

- if embedding_vector is not None:

- embedding_matrix[i] = embedding_vector

-

- return embedding_matrix

-

- '''使用预训练向量进行模型训练'''

- def tokenvec_bilstm2_crf_model(self):

- model = Sequential()

- embedding_layer = Embedding(self.VOCAB_SIZE + 1,

- self.EMBEDDING_DIM,

- weights=[self.embedding_matrix],

- input_length=self.TIME_STAMPS,

- trainable=False,

- mask_zero=True)

- model.add(embedding_layer)

- model.add(Bidirectional(LSTM(128, return_sequences=True)))

- model.add(Dropout(0.5))

- model.add(Bidirectional(LSTM(64, return_sequences=True)))

- model.add(Dropout(0.5))

- model.add(TimeDistributed(Dense(self.NUM_CLASSES)))

- crf_layer = CRF(self.NUM_CLASSES, sparse_target=True)

- model.add(crf_layer)

- model.compile('adam', loss=crf_layer.loss_function, metrics=[crf_layer.accuracy])

- model.summary()

- return model

-

- if __name__ == '__main__':

- ner = LSTMNER()

- while 1:

- s = input('enter an sent:').strip()

- ner.predict(s)

声明:本文内容由网友自发贡献,不代表【wpsshop博客】立场,版权归原作者所有,本站不承担相应法律责任。如您发现有侵权的内容,请联系我们。转载请注明出处:https://www.wpsshop.cn/w/繁依Fanyi0/article/detail/648496

推荐阅读

相关标签