CXL paper summary: "Direct Access, High-Performance Memory Disaggregation with DirectCXL"

Preface

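In my view, this paper is a standard application of CXL. Its key contribution is building a real, working system and analyzing and summarizing its latency; the data are credible.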

Abstract

We propose directly accessible memory disaggregation, DIRECTCXL, which directly connects a host processor complex and remote memory resources over CXL's memory protocol (CXL.mem). To this end, we explore a practical design for CXL-based memory disaggregation and make it real. As there is no operating system that supports CXL, we also offer a CXL software runtime that allows users to utilize the underlying disaggregated memory resources via plain load/store instructions. Since DIRECTCXL does not require any data copies between the host memory and remote memory, it can expose the true performance of remote-side disaggregated memory resources to the users.

1 Introduction

We can broadly classify the existing memory disaggregation runtimes into two different approaches based on how they manage data between a host and memory server(s): i) page-based and ii) object-based.

The page-based approach utilizes virtual memory techniques to use disaggregated memory without code changes. On a page fault, it swaps page cache data residing in the host's local DRAM from/to the remote memory systems over a network. On the other hand, the object-based approach handles remote disaggregated memory through its own database, such as a key-value store, instead of leveraging the virtual memory system. This approach can address the challenges imposed by address translation (e.g., page faults, context switching, and write amplification), but it requires significant source-level modifications and interface changes.

While there are many variants, all the existing approaches need to move data from the remote memory to the host memory over remote direct memory access (RDMA) (or similar fine-grained network interfaces). In addition, they even require managing locally cached data on either the host or the memory nodes. Unfortunately, the data movement and its accompanying operations (e.g., page cache management) introduce redundant memory copies and software fabric intervention, which make the latency of disaggregated memory longer than that of local DRAM accesses by multiple orders of magnitude.

In this work, we advocate Compute Express Link (CXL), a new open industry-standard interconnect offering high-performance connectivity among multiple host processors, hardware accelerators, and I/O devices. CXL was originally designed to manage heterogeneity across different processor complexes, but both industry and academia anticipate that its cache coherence ability can help improve memory utilization and alleviate memory over-provisioning with low latency. Even though CXL exhibits great potential to realize memory disaggregation with low monetary cost and high performance, it has not yet been made for production, and there is no platform to integrate memory into a memory pooling network.

We demonstrate DIRECTCXL, directly accessible disaggregated memory that connects a host processor complex and remote memory resources over CXL's memory protocol (CXL.mem). To this end, we explore a practical design for CXL-based memory disaggregation and make it real. Specifically, we first show how to disaggregate memory over CXL and integrate the disaggregated memory into processor-side system memory. This includes implementing a CXL controller that employs multiple DRAM modules on the remote side. We then prototype a set of network infrastructure components, such as a CXL switch, to connect the disaggregated memory to the host in a scalable manner.

As there is no operating system that supports CXL, we also offer a CXL software runtime that allows users to utilize the underlying disaggregated memory resources through plain load/store instructions. DIRECTCXL does not require any data copies between the host memory and remote memory; therefore, it can expose the true performance of remote-side disaggregated memory resources to the users.

In this work, we prototype DIRECTCXL using many customized memory add-in cards, 16nm FPGA-based processor nodes, a switch, and a PCIe backplane. The DIRECTCXL software runtime, in turn, is implemented on Linux 5.13. To the best of our knowledge, this is the first work that brings CXL 2.0 into a real system and analyzes the performance characteristics of a CXL-enabled disaggregated memory design.

The results of our real system evaluation show that the disaggregated memory resources of DIRECTCXL can exhibit DRAM-like performance when the workload can enjoy the host processor’s cache. When the load/store instructions go through the CXL network and are served from the disaggregated memory, DIRECTCXL’s latency is shorter than the best latency of RDMA by 6.2×, on average. For real-world applications, DIRECTCXL exhibits 3× better performance than RDMA-based memory disaggregation, on average.

2 Memory Disaggregation and Related Work

2.1 Remote Direct Memory Access

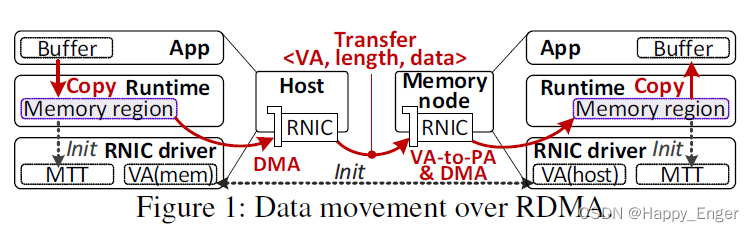

The basic idea of memory disaggregation is to connect a host with one or more memory nodes so that limited local memory space does not restrict a given job's execution. For backend network control, most disaggregation work employs remote direct memory access (RDMA) or similar customized DMA protocols. Figure 1 shows how RDMA-style data transfers (one-sided RDMA) work. On both the host and memory node sides, RDMA needs hardware support such as an RDMA NIC (RNIC), which is designed to remove the intervention of the network software stack as much as possible. To move data between them, processes on each side first need to define one or more memory regions (MRs) and register the MR(s) with the underlying RNIC.

During this process, the RNIC driver checks all physical addresses associated with the MR's pages and registers them in the RNIC's memory translation table (MTT). Since the two RNICs also exchange their MRs' virtual addresses at initialization, the host can simply send the memory node's destination virtual address along with the data for a write. The remote node then translates the address by referring to its MTT and copies the incoming data to the target location in the MR. Reads over RDMA are performed in a similar manner. Note that, in addition to the memory copy operations (for DMA), each side's application needs to prepare or retrieve the data into/from the MRs for the data transfers, introducing additional data copies within its local DRAM.
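
The MR/MTT mechanics above can be sketched as a toy Python model of a one-sided RDMA write. It assumes 4 KiB pages and uses a dict as the MTT; `ToyRNIC`, `register_mr`, `translate` and `handle_write` are hypothetical names, not the libibverbs API, and the addresses are arbitrary. The point is only to show where the translation and the extra local copy happen.

```python
PAGE = 4096  # assumed page size


class ToyRNIC:
    """Hypothetical model of an RNIC's memory translation table (MTT); not the verbs API."""

    def __init__(self, dram_size):
        self.dram = bytearray(dram_size)  # the node's local DRAM
        self.mtt = {}                     # virtual page number -> physical page number

    def register_mr(self, va_base, pa_base, length):
        """Register an MR: record the VA->PA mapping of every page it spans."""
        for off in range(0, length, PAGE):
            self.mtt[(va_base + off) // PAGE] = (pa_base + off) // PAGE

    def translate(self, va):
        return self.mtt[va // PAGE] * PAGE + va % PAGE

    def handle_write(self, remote_va, data):
        """Target side of a one-sided write; assumes the write stays within one page."""
        pa = self.translate(remote_va)
        self.dram[pa:pa + len(data)] = data


REMOTE_MR_VA = 0x7000_0000                 # exchanged between the RNICs at initialization
memory_node = ToyRNIC(dram_size=4 * PAGE)
memory_node.register_mr(REMOTE_MR_VA, pa_base=PAGE, length=2 * PAGE)

app_data = b"hello"                        # lives in ordinary application memory
host_mr = bytearray(64)                    # host-side MR the RNIC can DMA from
host_mr[:len(app_data)] = app_data         # extra local copy: stage the data into the MR
memory_node.handle_write(REMOTE_MR_VA + 8, bytes(host_mr[:len(app_data)]))
```

A read would run the same translation in the opposite direction. Note that the payload is copied into `host_mr` before any DMA happens; that staging copy is the extra local copy the paragraph above refers to.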

2.2 Swap: Page-based Memory Pool

Page-based memory disaggregation achieves memory elasticity by relying on virtual memory systems. Specifically, this approach intercepts paging requests when there is a page fault, and then it swaps the data to a remote memory node instead of the underlying storage. To this end, a disaggregation driver underneath the host’s kernel swap daemon (kswapd) converts the incoming block address to the memory node’s virtual address.

It then copies the target page to the RNIC's MR and issues the corresponding RDMA request to the memory node. Since all operations for memory disaggregation are managed under kswapd, this approach is easy to adopt and transparent to all user applications. However, page-based systems suffer from performance degradation due to the overhead of page fault handling, I/O amplification, and context switching when there are excessive requests for the remote memory.
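
A rough Python sketch of this swap path follows, assuming a linear mapping from swap block numbers to remote virtual addresses (the paper does not specify the mapping). `ToyRemoteNode` and `ToySwapBackend` are hypothetical, and dict/bytearray copies stand in for RDMA transfers.

```python
PAGE = 4096  # assumed page size


class ToyRemoteNode:
    """Remote memory node; a dict stands in for its registered MR (hypothetical)."""

    def __init__(self):
        self.mem = {}

    def rdma_write(self, remote_va, data):
        self.mem[remote_va] = bytes(data)

    def rdma_read(self, remote_va):
        return self.mem[remote_va]


class ToySwapBackend:
    """Sketch of a disaggregation driver sitting below kswapd (hypothetical names)."""

    def __init__(self, node, remote_base):
        self.node = node
        self.remote_base = remote_base
        self.mr = bytearray(PAGE)  # staging MR registered with the local RNIC

    def block_to_remote_va(self, block):
        # Assumption: swap blocks map linearly onto the remote region.
        return self.remote_base + block * PAGE

    def swap_out(self, block, page):
        self.mr[:] = page  # copy the victim page into the MR
        self.node.rdma_write(self.block_to_remote_va(block), self.mr)

    def swap_in(self, block):
        # Page-fault path: fetch into the MR, then copy into the faulting page.
        self.mr[:] = self.node.rdma_read(self.block_to_remote_va(block))
        return bytes(self.mr)


swap = ToySwapBackend(ToyRemoteNode(), remote_base=0x1000_0000)
victim = bytes([7]) * PAGE
swap.swap_out(3, victim)    # kswapd evicts the page
restored = swap.swap_in(3)  # a later page fault brings it back
```

Every eviction and every fault passes through the staging MR, which is where the extra copies come from; the page-fault and context-switch costs of a real kernel are not modelled here.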

Note that several studies migrate locally cached data at a finer granularity or reduce the page fault overhead by offloading memory management (including page cache coherence) to the network or memory nodes. However, all these approaches still use RDMA (or a similar network protocol), so they must still cache the data and pay the cost of memory operations for network handling.

2.3 KVS: Object-based Memory Pool

In contrast, object-based memory disaggregation systems directly intervene in RDMA data transfers using their own database, such as a key-value store (KVS). Object-based systems create two MRs on each of the host and memory node sides, one dealing with buffer data and the other with submission/completion queues (SQ/CQ). Generally, they employ a KV hash table whose entries point to the corresponding (remote) memory objects. Whenever an application issues a Put (or Get) request, the system places the corresponding value into the host's buffer MR and submits the request by writing to the remote side of the SQ MR over RDMA. Since the memory node keeps polling the SQ MR, it can recognize the request. The memory node then reads the host's buffer MR, copies the value to its own buffer MR over RDMA, and completes the request by writing to the host's CQ MR.
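
The Put flow can be modelled as a single-threaded Python sketch. Deques stand in for the SQ and CQ MRs, and slicing stands in for RDMA reads and writes; the class names are hypothetical, only Put is shown, and bounds checks are omitted for brevity.

```python
from collections import deque


class ToyHost:
    """Host side: a buffer MR for values and a CQ MR the memory node writes into."""

    def __init__(self, size):
        self.buffer_mr = bytearray(size)
        self.cq_mr = deque()
        self.tail = 0

    def put(self, node, key, value):
        off = self.tail
        self.buffer_mr[off:off + len(value)] = value  # place the value in the buffer MR
        self.tail += len(value)
        node.sq_mr.append((key, off, len(value)))     # "RDMA write" into the remote SQ MR


class ToyMemoryNode:
    """Memory-node side: buffer MR, SQ MR and a KV hash table (key -> offset, length)."""

    def __init__(self, size):
        self.buffer_mr = bytearray(size)
        self.sq_mr = deque()
        self.index = {}
        self.tail = 0

    def poll_once(self, host):
        """One pass of the polling loop over the SQ MR."""
        while self.sq_mr:
            key, off, length = self.sq_mr.popleft()
            value = host.buffer_mr[off:off + length]              # "RDMA read" of the host buffer MR
            self.buffer_mr[self.tail:self.tail + length] = value  # copy into the node's buffer MR
            self.index[key] = (self.tail, length)
            self.tail += length
            host.cq_mr.append(key)                                # "RDMA write" to the host CQ MR

    def lookup(self, key):
        off, length = self.index[key]
        return bytes(self.buffer_mr[off:off + length])


host, node = ToyHost(1024), ToyMemoryNode(1024)
host.put(node, "user:42", b"alice")
node.poll_once(host)  # a real node spins on this continuously
```

In the real system the memory node spins on the SQ MR rather than being called explicitly, and each commented "RDMA" step is a network transfer.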

As they do not rely on virtual memory systems, object-based systems can avoid the overhead imposed by page swapping. However, compared with page-based systems, the performance of object-based systems varies with the semantics of the application: kswapd fully utilizes local page caches, but a KVS does not for remote accesses. In addition, this approach is limited because it requires significant source-level modifications to legacy applications.

3 Direct Accessible Memory Aggregation

While caching pages and network-based data exchange are essential in the current technologies, they can unfortunately significantly deteriorate the performance of memory disaggregation. DIRECTCXL instead directly connects remote memory resources to the host’s computing complex and allows users to access them through sheer load/store instructions.

3.1 Connecting Host and Memory over CXL

CXL devices and controllers. In practice, existing memory disaggregation techniques still require computing resources on the remote memory node side. This is because all DRAM modules and their interfaces are designed as passive peripherals, which require computing resources to control them. CXL.mem, in contrast, allows the host's computing resources to directly access the underlying memory through PCIe buses (FlexBus); it works similarly to local DRAM attached to the system bus. Thus, we design and implement CXL devices as purely passive modules, each able to host many DRAM DIMMs with its own hardware controllers. Our CXL device employs multiple DRAM controllers, connecting DRAM DIMMs over conventional DDR interfaces. Its CXL controller then exposes the internal DRAM modules to FlexBus through many PCIe lanes. In the current architecture, the device's CXL controller parses incoming PCIe-based CXL packets, called CXL flits, converts their information (address and length) into DRAM requests, and serves them from the underlying DRAMs using the DRAM controllers.

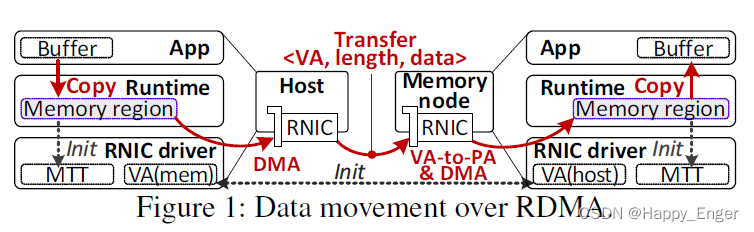

Integrating devices into system memory. Figure 2 shows how CXL devices' internal DRAMs are mapped (exposed) to a host's memory space over CXL. The host CPU's system bus contains one or more CXL root ports (RPs), which connect one or more CXL devices as endpoint (EP) devices. Our host-side kernel driver first enumerates CXL devices by querying, through PCIe transactions, the size of their base address register (BAR) and of their internal memory, called host-managed device memory (HDM). Based on the retrieved sizes, the kernel driver maps the BAR and HDM into the host's reserved system memory space and lets the underlying CXL devices know where their BAR and HDM (base addresses) are mapped in the host's system memory. When the host CPU accesses HDM-backed system memory through a load/store instruction, the request is delivered to the corresponding RP, and the RP converts the request into a CXL flit. Since the HDM is mapped to a different location of the system memory, the HDM's memory address space differs from that of the EP's internal DRAMs. Thus, the CXL controller translates each incoming address by simply deducting the HDM's base address from it and issues the translated request to the underlying DRAM controllers. The results are returned to the host via a CXL switch and FlexBus. Note that, since HDM accesses involve no software intervention or memory data copies, DIRECTCXL can expose the CXL device's memory resources to the host with low access latency.
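
The mapping and address translation above can be sketched in Python as follows. The back-to-back placement of HDM windows, the class and function names, and the sizes are assumptions for illustration; BAR mapping and CXL flit encoding are not modelled.

```python
class ToyCXLDevice:
    """CXL endpoint whose internal DRAM is exposed to the host as HDM (hypothetical)."""

    def __init__(self, hdm_size):
        self.hdm_size = hdm_size
        self.hdm_base = None             # written by the host driver at enumeration
        self.dram = bytearray(hdm_size)  # internal DRAM, addressed from 0

    def _to_local(self, host_pa, length):
        local = host_pa - self.hdm_base  # translation: deduct the HDM base address
        if local < 0 or local + length > self.hdm_size:
            raise ValueError(f"{host_pa:#x} is outside this device's HDM")
        return local

    def load(self, host_pa, length):
        local = self._to_local(host_pa, length)
        return bytes(self.dram[local:local + length])

    def store(self, host_pa, data):
        local = self._to_local(host_pa, len(data))
        self.dram[local:local + len(data)] = data


def map_hdms(devices, reserved_base):
    """Driver sketch: place each HDM back to back in the reserved system memory window."""
    windows, cursor = [], reserved_base
    for dev in devices:
        dev.hdm_base = cursor
        windows.append((cursor, cursor + dev.hdm_size, dev))
        cursor += dev.hdm_size
    return windows


def route(windows, host_pa):
    """Root-port sketch: find the device whose HDM window covers the address."""
    for lo, hi, dev in windows:
        if lo <= host_pa < hi:
            return dev
    raise ValueError(f"{host_pa:#x} is not backed by any HDM")


devices = [ToyCXLDevice(1 << 20), ToyCXLDevice(1 << 20)]
windows = map_hdms(devices, reserved_base=0x8_0000_0000)
addr = 0x8_0000_0000 + (1 << 20) + 0x40    # offset 0x40 inside the second HDM
route(windows, addr).store(addr, b"\x2a")  # a plain store, no software copy
```

The only per-access work on the device side is one subtraction and a bounds check, which is why neither software nor a data copy appears on this path.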

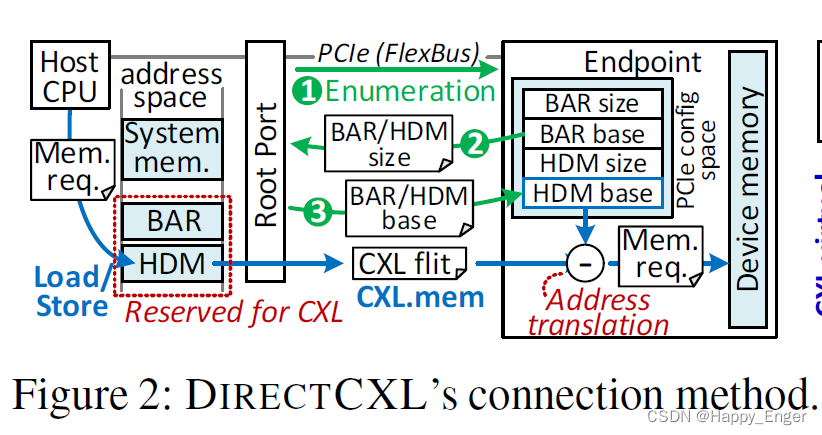

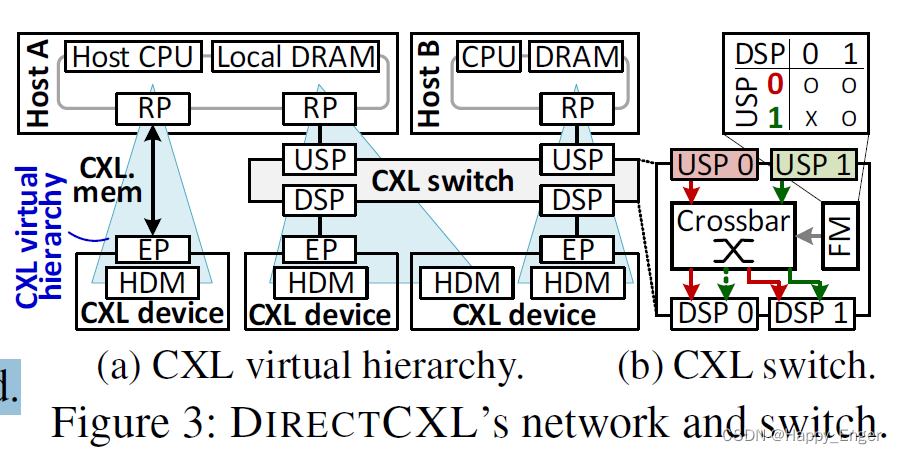

Designing the CXL network switch. Figure 3a illustrates how DIRECTCXL can disaggregate memory resources from a host using one or more CXL devices, and Figure 3b shows our CXL switch organization therein. The host's CXL RP is connected to the upstream port (USP) of either a CXL switch or a CXL device directly. The CXL switch's downstream port (DSP) in turn connects to either another CXL switch's USP or a CXL device. Note that our CXL switch employs multiple USPs and DSPs. By setting an internal routing table, our CXL switch's fabric manager (FM) reconfigures the switch's crossbar to connect each USP to a different DSP, which creates a virtual hierarchy from a root (host) to a terminal (CXL device). Since a CXL device can employ one or more controllers and many DRAMs, it can also define multiple logical devices, each exposing its own HDM to a host. Thus, different hosts can be connected to the same CXL switch and CXL device. Note that each CXL virtual hierarchy offers only a single path from one host to one (logical) device, ensuring that no two hosts share an HDM.
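
A minimal sketch of the FM's routing table, assuming one entry per USP that points to a (DSP, logical device) pair. `ToyFabricManager` and its methods are hypothetical; the check in `bind` enforces the rule that no two hosts share an HDM.

```python
class ToyFabricManager:
    """Sketch of an FM routing table: each USP is bound to one (DSP, logical device)."""

    def __init__(self, num_usps, num_dsps):
        self.num_usps = num_usps
        self.num_dsps = num_dsps
        self.routing = {}  # usp -> (dsp, logical_device)

    def bind(self, usp, dsp, ld=0):
        if not (0 <= usp < self.num_usps and 0 <= dsp < self.num_dsps):
            raise ValueError("no such port")
        if usp in self.routing:
            raise ValueError(f"USP{usp} already has a virtual hierarchy")
        if (dsp, ld) in self.routing.values():
            raise ValueError(f"DSP{dsp}/LD{ld} is already bound to another host")
        self.routing[usp] = (dsp, ld)  # reconfigure the crossbar for this path

    def target(self, usp):
        return self.routing[usp]


fm = ToyFabricManager(num_usps=4, num_dsps=4)
fm.bind(0, 0, ld=0)  # host 0 -> device 0, logical device 0
fm.bind(1, 0, ld=1)  # host 1 -> same device, different logical device
```

Two hosts may reach the same physical device, but only through different logical devices, each with its own HDM.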

3.2 Software Runtime for DirectCXL

In contrast to RDMA, once a virtual hierarchy is established between a host and CXL device(s), applications running on the host can directly access the CXL device by referring to the HDM's memory space. However, this requires a software runtime/driver to manage the underlying CXL devices and expose their HDM in the application's memory space. We thus provide the DIRECTCXL runtime, which simply splits the HDM's address space into multiple segments, called cxl-namespaces. The DIRECTCXL runtime then allows applications to access each cxl-namespace as a memory-mapped file (mmap).
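
From the application's side, a cxl-namespace should then be usable like any other memory-mapped device file. The sketch below assumes the namespace node (the paper's example is /dev/cxl-ns0) accepts a standard shared read/write mmap; because no CXL hardware is available here, a temporary file stands in for it. `map_cxl_namespace` is a hypothetical helper, and the code is POSIX-only.

```python
import mmap
import os
import struct
import tempfile


def map_cxl_namespace(path, length):
    """Map a cxl-namespace device node into this process (POSIX-only sketch)."""
    fd = os.open(path, os.O_RDWR)
    try:
        return mmap.mmap(fd, length, mmap.MAP_SHARED, mmap.PROT_READ | mmap.PROT_WRITE)
    finally:
        os.close(fd)  # the mapping stays valid after the descriptor is closed


# No CXL hardware here, so a temporary file stands in for a node such as /dev/cxl-ns0.
with tempfile.NamedTemporaryFile() as backing:
    backing.truncate(4096)
    ns = map_cxl_namespace(backing.name, 4096)
    struct.pack_into("<Q", ns, 0, 0xC0FFEE)     # an ordinary store into the mapping
    (value,) = struct.unpack_from("<Q", ns, 0)  # an ordinary load from it
    ns.close()
```

After mapping, loads and stores are ordinary memory accesses on the mapped buffer; no read or write system call is issued per access.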

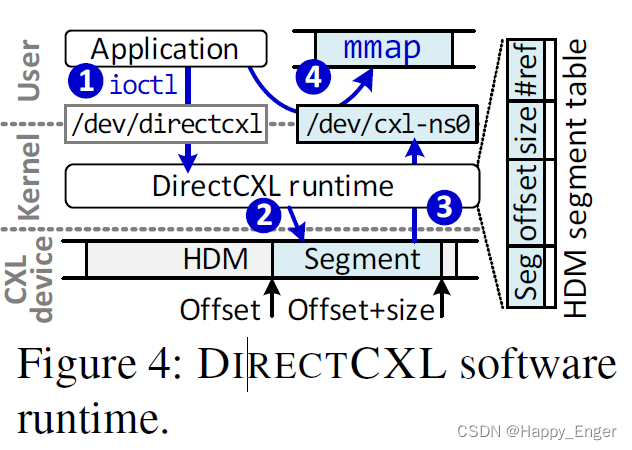

Figure 4 shows the software stack of our runtime and how an application can use the disaggregated memory through cxl-namespaces. When a CXL device is detected (at PCIe enumeration time), the DIRECTCXL driver creates an entry device (e.g., /dev/directcxl) that lets users manage cxl-namespaces via ioctl. When a user requests a cxl-namespace from /dev/directcxl, the driver looks for a (physically) contiguous address range on an HDM by consulting its HDM segment table, whose entries record a segment's offset, size, and reference count (how many cxl-namespaces refer to this segment). Since multiple processes can access this table, its header also keeps the necessary bookkeeping, such as a spinlock, read/write locks, and a summary of the table entries (e.g., the number of valid entries). Once the DIRECTCXL driver allocates a segment for the user request, it creates a device for mmap (e.g., /dev/cxl-ns0) and updates the segment table.
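
The segment table can be sketched in Python as below. The paper gives the entry fields (offset, size, reference count) and mentions header locks, but not the search policy, so first-fit allocation and the class names here are assumptions; a single `threading.Lock` stands in for the spinlock and read/write locks.

```python
import threading
from dataclasses import dataclass


@dataclass(eq=False)
class Segment:
    offset: int    # start offset inside the HDM
    size: int
    refcount: int  # how many cxl-namespaces refer to this segment


class ToySegmentTable:
    """Sketch of the HDM segment table; first-fit allocation is our assumption."""

    def __init__(self, hdm_size):
        self.hdm_size = hdm_size
        self.entries = []             # kept sorted by offset
        self.lock = threading.Lock()  # stands in for the header's spinlock / RW locks

    @property
    def valid_entries(self):
        return len(self.entries)

    def allocate(self, size):
        """Find a physically contiguous hole of `size` bytes and record a new segment."""
        with self.lock:
            cursor, index = 0, len(self.entries)
            for i, seg in enumerate(self.entries):
                if seg.offset - cursor >= size:
                    index = i
                    break
                cursor = seg.offset + seg.size
            if index == len(self.entries) and self.hdm_size - cursor < size:
                raise MemoryError("no contiguous HDM range is large enough")
            seg = Segment(cursor, size, refcount=1)
            self.entries.insert(index, seg)
            return seg

    def release(self, seg):
        with self.lock:
            seg.refcount -= 1
            if seg.refcount == 0:
                self.entries.remove(seg)


table = ToySegmentTable(hdm_size=1 << 20)
ns0 = table.allocate(4096)
ns1 = table.allocate(8192)
table.release(ns0)
ns2 = table.allocate(2048)  # reuses the hole left by ns0
```

Creating the /dev/cxl-ns* node after a successful allocation is not modelled.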

Note that the DIRECTCXL software runtime is designed for direct access to CXL devices, a concept similar to the memory-mapped file management of the Persistent Memory Development Kit (PMDK). However, its namespace management is much simpler and more flexible than PMDK's. For example, a PMDK namespace follows much the same idea as an NVMe namespace, managed by a file system or DAX with a fixed size. In contrast, our cxl-namespace is closer to a conventional memory segment, which is directly exposed to the application without involving a file system.

3.3 Prototype Implementation

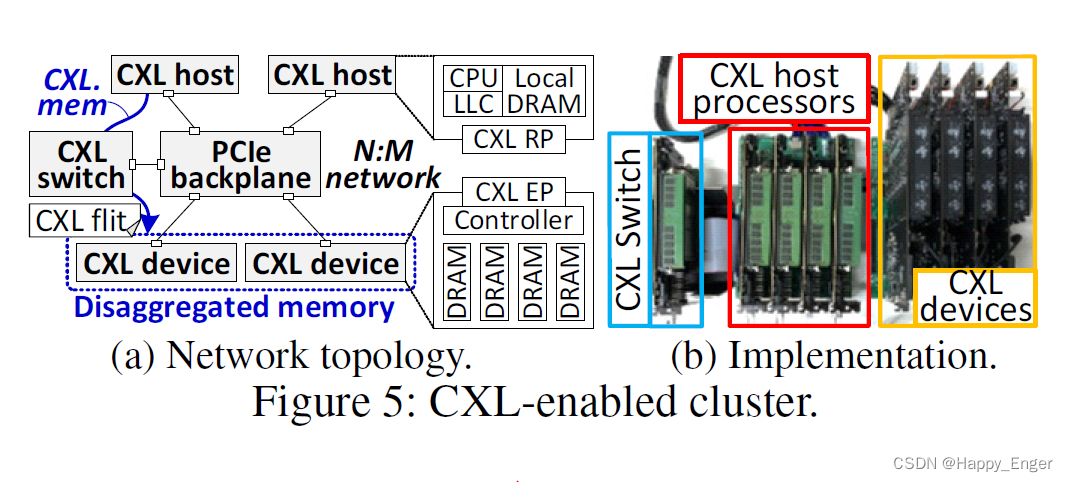

Figure 5a illustrates our design of a CXL network topology to disaggregate memory resources, and the corresponding implementation in a real system is shown in Figure 5b. There are n compute hosts connected to m CXL devices through a CXL switch; in our prototype, n and m are both four, but these numbers can scale by adding more CXL switches. Specifically, each CXL device prototype is built on our customized add-in-card (AIC) CXL memory blade, which employs a 16nm FPGA and 8 different DDR4 DRAM modules (64GB). In the FPGA, we fabricate a CXL controller and eight DRAM controllers, which manage the CXL endpoint and the internal DRAM channels, respectively. As there is as yet no processor architecture supporting CXL, we also build our own in-house host processor using the RISC-V ISA, which employs four out-of-order cores whose last-level cache (LLC) implements the CXL RP. Each CXL-enabled host processor is implemented on a high-performance datacenter accelerator card and takes the role of a host, individually running Linux 5.13 and the DIRECTCXL software runtime. We expose four CXL devices (32 DRAM modules) to the four hosts through our PCIe backplane. We extended the backplane with one more accelerator card that implements DIRECTCXL's CXL switch. This switch implements an FM that can create multiple virtual hierarchies, each flexibly connecting a host and a CXL device.

To the best of our knowledge, there are no commercial CXL 2.0 IPs for the processor-side CXL engines or the CXL switch. Thus, we built all DIRECTCXL IPs from the ground up. The host-side processors require the Advanced Configuration and Power Interface (ACPI) for CXL 2.0 enumeration (e.g., the RP location and the RP's reserved address space). Since RISC-V does not support ACPI yet, we enable CXL enumeration by adding this information to the device tree. Specifically, we update an MMIO register designated as a property of the tree's node to let the processor know where the CXL RP exists. In addition, we add a new field (cxl-reserved-area) to the node to indicate where an HDM can be mapped. Our in-house softcore processors run at 100MHz, while the CXL and PCIe IPs (RP, EP, and Switch) operate at 250MHz.

4 Evaluation

Testbed prototypes for memory disaggregation. In addition to the CXL environment implemented in Section 3.3 (DirectCXL), we set up an identical configuration for our RDMA-enabled hardware system (RDMA). For RDMA, we use a Mellanox ConnectX-3 VPI InfiniBand RNIC (56Gbps) as the RDMA network interface card in place of our CXL switch. In addition, we port Mellanox OpenFabrics Enterprise Distribution (OFED) v4.9 as the RDMA driver to enable the RNIC in our evaluation testbed. Lastly, we port FastSwap and HERD to the RISC-V Linux 5.13.19 computing environment atop RDMA, realizing page-based disaggregation (Swap) and object-based disaggregation (KVS), respectively.

For a fairer comparison, we also configure the host processors to use only their local DRAM (Local) by disabling all the CXL memory nodes. Note that we used the same testbed hardware described above for both CXL and non-CXL experiments, configuring it differently for each reference system. For example, the FPGA chips used for the in-house host processors and for the CXL devices all share the same architecture/technology and product line-up.

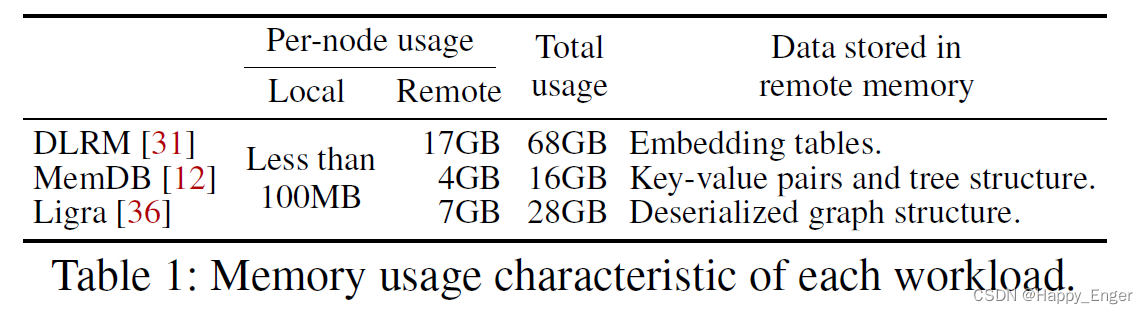

Benchmark and workloads. Since there is no microbenchmark with which we can compare different memory pooling technologies (RDMA vs. DirectCXL), we also build an in-house memory benchmark for an in-depth analysis of the two technologies (Section 4.1). For RDMA, this benchmark allocates a large memory pool on the remote side in advance and lets a host processor send random memory requests of varying lengths to the remote node; the remote node serves the requests from the pre-allocated memory pool. For DirectCXL and Local, the benchmark maps a cxl-namespace or an anonymous mmap region, respectively, into user space. The benchmark then generates a group of RISC-V memory instructions that cover a given address length in a random pattern and issues them directly without software intervention. For the real workloads, we use Facebook's deep learning recommendation model (DLRM), an in-memory database used in the HERD evaluation (MemDB), and four graph analysis workloads (MIS, BFS, CC, and BC) from Ligra. All their tables and data structures are stored in the remote node, while each host's local memory holds the execution code and static data. Table 1 summarizes the per-node memory usage and total data size of each workload we tested.
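
The access pattern of such a benchmark can be illustrated in Python, assuming one 64-byte access per cache line. The paper's benchmark emits RISC-V load/store instructions directly; Python's interpreter overhead makes it useless for timing, so this sketch (with hypothetical helper names) only shows how a random permutation covers a region exactly once.

```python
import mmap
import random

LINE = 64  # assumed bytes per access (one cache line)


def random_access_pattern(length, seed=0):
    """Offsets of 64-B accesses covering [0, length) exactly once, in random order."""
    offsets = list(range(0, length - length % LINE, LINE))
    random.Random(seed).shuffle(offsets)
    return offsets


def run_loads(region, offsets):
    """Touch one byte per line; the paper issues raw RISC-V loads instead."""
    checksum = 0
    for off in offsets:
        checksum ^= region[off]
    return checksum


local = mmap.mmap(-1, 1 << 16)  # "Local": an anonymous mapping
pattern = random_access_pattern(len(local))
checksum = run_loads(local, pattern)
```

For DirectCXL the same pattern would be applied to a mapped cxl-namespace instead of the anonymous region; only the mapping call changes.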

4.1 In-depth Analysis of RDMA and CXL

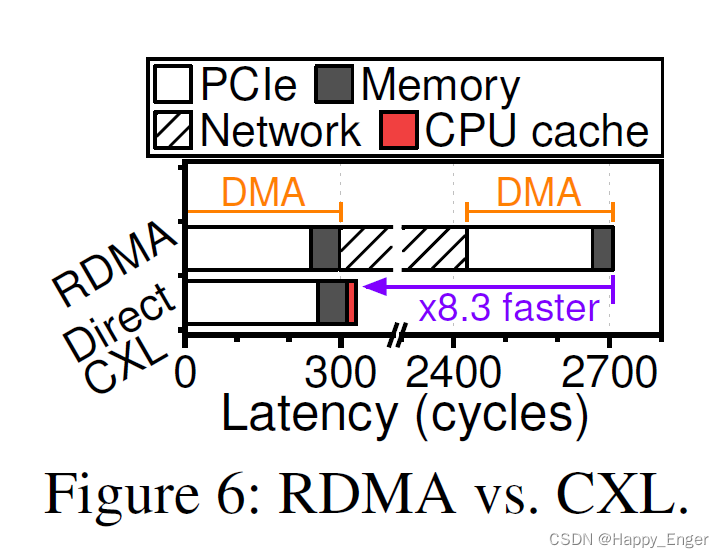

In this subsection, we compare the performance of RDMA and CXL technologies when the host and memory nodes are configured through a 1:1 connection. Figure 6 shows the latency breakdown of RDMA and DirectCXL when reading 64 bytes of data. One can observe from the figure that RDMA requires two DMA operations, which doubles the PCIe transfer and memory access latency. In addition, the communication overhead of InfiniBand (Network) takes 78.7% (2129 cycles) of the total latency (2705 cycles). In contrast, DirectCXL takes only 328 cycles for a memory load request, which is 8.3× faster than RDMA. There are two reasons behind this performance difference. First, DirectCXL directly connects the compute nodes and memory nodes using PCIe, while RDMA requires protocol/interface conversion between InfiniBand and PCIe. Second, DirectCXL can translate memory load/store requests from the LLC into CXL flits, whereas RDMA must use DMA to read/write data from/to memory.
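
The percentages and the speedup above follow directly from the cycle counts in Figure 6; a quick Python check:

```python
RDMA_TOTAL = 2705    # cycles, RDMA 64-B read (Figure 6)
RDMA_NETWORK = 2129  # cycles attributed to InfiniBand (Network)
CXL_TOTAL = 328      # cycles, DirectCXL 64-B load

network_share = RDMA_NETWORK / RDMA_TOTAL  # fraction of RDMA latency spent in the network
speedup = RDMA_TOTAL / CXL_TOTAL           # RDMA latency relative to DirectCXL
non_network = RDMA_TOTAL - RDMA_NETWORK    # cycles left for the rest of the breakdown

print(f"network share {network_share:.1%}, speedup {speedup:.2f}x, rest {non_network} cycles")
```

The ratio evaluates to about 8.25, which the paper reports as 8.3×.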

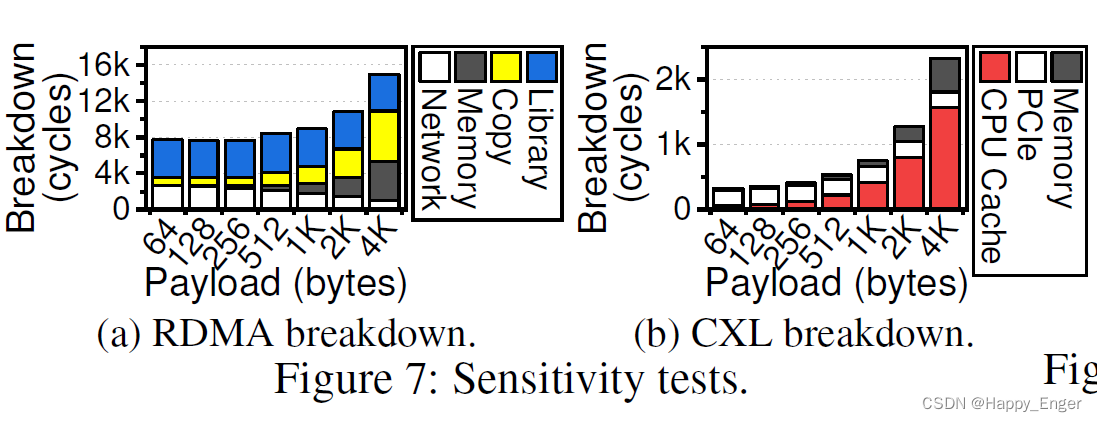

Sensitivity tests. Figure 7a decomposes RDMA latency into essential hardware (Memory and Network), software (Library), and data transfer (Copy) latencies. In this evaluation, we instrument two user-level InfiniBand libraries, libibverbs and libmlx4, to measure the software-side latency. Library is the primary performance bottleneck in RDMA when payloads are smaller than 1KB (4158 cycles, on average). As the payloads increase, Copy grows and reaches 28.9% of the total execution time, because users must copy all their data into the RNIC's MR, an extra overhead in RDMA. On the other hand, Memory and Network show a trend similar to the RDMA analysis in Figure 6. Note that the actual Network times (Figure 7a) do not decrease as the payload increases; while Memory grows to handle large data, the RNIC can simultaneously transmit the data to the underlying network, and these overlapped cycles are counted under Memory in our analysis.

As shown in Figure 7b, the breakdown analysis for DirectCXL tells a completely different story: there is neither software nor data copy overhead. As the payloads increase, the dominant component of DirectCXL's latency is the LLC (CPU Cache). This is because the LLC in our custom CPU can handle only 16 concurrent misses through its miss status holding registers (MSHRs). Thus, many of the 64B memory requests composing a large payload can stall at the CPU, which accounts for 67% of the total latency for 4KB payloads. PCIe, shown in Figure 7b, does not decrease as the payloads increase, for a reason similar to RDMA's Network. However, the overlap is smaller than for Network, as only 16 concurrent misses can be overlapped. Note that PCIe in Figures 6 and 7b includes the latency of the CXL IPs (RP, EP, and Switch), which differs from the pure cycles of the PCIe physical bus. The pure cycles of the PCIe physical bus (FlexBus) account for 28% of DirectCXL latency. The detailed latency decomposition is analyzed in Section 4.2.
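
A back-of-the-envelope Python model of the MSHR limit, assuming requests are issued in batches of at most 16 outstanding 64-byte misses and ignoring any pipelining between batches; `miss_rounds` is a hypothetical helper, not a measured quantity.

```python
import math

LINE = 64   # bytes per memory request
MSHRS = 16  # concurrent LLC misses the custom CPU can track


def miss_rounds(payload_bytes, line=LINE, mshrs=MSHRS):
    """Minimum number of back-to-back miss rounds if at most `mshrs` can be outstanding."""
    lines = math.ceil(payload_bytes / line)
    return math.ceil(lines / mshrs)


for size in (64, 256, 1024, 4096):
    print(f"{size:>5} B: {math.ceil(size / LINE):>3} lines, {miss_rounds(size)} round(s)")
```

Up to 1KB (16 lines) everything fits in a single round; a 4KB payload needs at least four, consistent with the LLC share of the latency growing with payload size.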

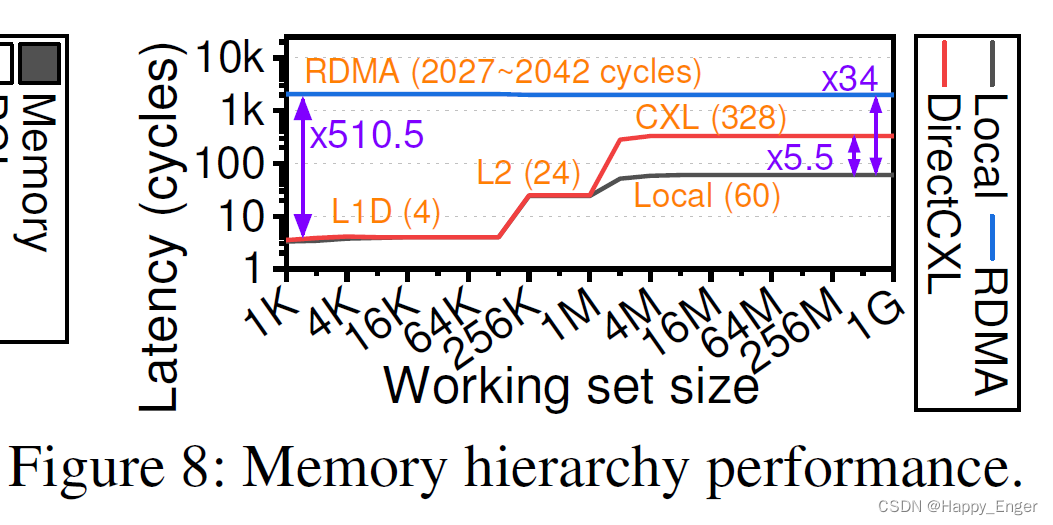

Memory hierarchy performance. Figure 8 shows the latency cycles of different components in the system's memory hierarchy. Local and DirectCXL both benefit from the CPU cache, which lowers the memory access latency to 4 cycles. In contrast, RDMA gains almost nothing from the CPU cache, because its network overhead is much higher than the access latency of Local. The best-case performance of RDMA was 2027 cycles, which is 6.2× and 510.5× slower than that of DirectCXL and the L1 cache, respectively. In the case of L2 misses, DirectCXL requires 328 cycles, whereas Local requires only 60 cycles. Note that the performance bottleneck of DirectCXL is PCIe including the CXL IPs (77.8% of the total latency). This can be accelerated by increasing the working frequency, which is discussed shortly.

内存层次结构性能。图 8 显示了系统内存层次结构中不同组件的延迟周期。Local 和 DirectCXL 都能受益于 CPU 缓存,将内存访问延迟降低到 4 个周期;而 RDMA 几乎无法从 CPU 缓存中获益,因为其网络开销远高于 Local 的访问延迟。RDMA 的最佳性能为 2027 个周期,分别比 DirectCXL 和 L1 缓存慢 6.2 倍和 510.5 倍。在 L2 缓存未命中的情况下,DirectCXL 需要 328 个周期,而 Local 仅需要 60 个周期。请注意,DirectCXL 的性能瓶颈是包括 CXL IP 在内的 PCIe(占总延迟的 77.8%)。这可以通过提高工作频率来加速,稍后将对此进行讨论。
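The reported ratios can be cross-checked with simple arithmetic, using only numbers from the text above. Dividing RDMA's best case by the 510.5× ratio also recovers the roughly 4-cycle L1 hit latency mentioned above.

```python
RDMA_BEST = 2027          # cycles, best-case RDMA
DIRECTCXL_L2_MISS = 328   # cycles, DirectCXL on an L2 miss
LOCAL_L2_MISS = 60        # cycles, Local on an L2 miss
PCIE_SHARE = 0.778        # PCIe incl. CXL IPs, fraction of DirectCXL latency

rdma_vs_directcxl = RDMA_BEST / DIRECTCXL_L2_MISS      # ~6.18, reported as 6.2x
implied_l1 = RDMA_BEST / 510.5                         # ~3.97, the ~4-cycle L1 hit
pcie_cycles = PCIE_SHARE * DIRECTCXL_L2_MISS           # ~255 cycles in PCIe + CXL IPs
directcxl_vs_local = DIRECTCXL_L2_MISS / LOCAL_L2_MISS # ~5.5x on an L2 miss

print(f"RDMA / DirectCXL      : {rdma_vs_directcxl:.2f}x")
print(f"implied L1 hit        : {implied_l1:.2f} cycles")
print(f"PCIe + CXL IP cycles  : {pcie_cycles:.0f}")
print(f"DirectCXL / Local (L2): {directcxl_vs_local:.2f}x")
```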

4.2 Latency Distribution and Scaling Study

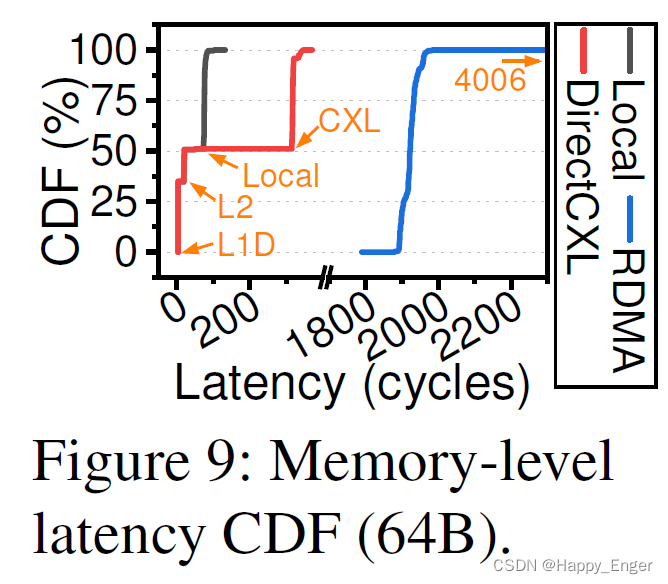

Latency distribution. In addition to the latency trend (average) reported above, we also analyze the complete latency behaviors of Local, RDMA, and DirectCXL. Figure 9 shows the latency CDF of 64B memory accesses for the different pooling methods. RDMA's latency ranges from 1790 cycles to 4006 cycles. The gap between RDMA's minimum and maximum latency stems from the RNIC's MTT memory buffer and the CPU caches used for data transfers. While RDMA cannot benefit from CPU caches through direct load/store instructions, its data transfers themselves use the CPU caches. Nevertheless, RDMA cannot avoid network accesses for remote memory accesses, which makes its latency 36.8× worse than Local's, on average. In contrast, the latency behavior of DirectCXL is similar to that of Local. Even though the latency of DirectCXL reported in Figures 6 and 7b is an average value, its best performance is the same as Local's (4∼24 cycles). This is because, as shown in the previous section, DirectCXL can take advantage of the CPU caches directly. Its tail latency is 2.8× worse than Local's, but its latency curve is similar to that of Local. This is because both DirectCXL and Local use the same DRAM (and there is no network access overhead).

延迟分布。除了上面报告的延迟趋势(平均值),我们还分析了 Local、RDMA 和 DirectCXL 的完整延迟行为。图 9 显示了不同池化方法下 64B 内存访问的延迟 CDF。RDMA 的延迟范围为 1790 到 4006 个周期。RDMA 的最小与最大延迟之间存在差异,原因在于 RNIC 的 MTT 内存缓冲区以及数据传输所用的 CPU 缓存。虽然 RDMA 无法通过直接的加载/存储指令受益于 CPU 缓存,但其数据传输本身会利用 CPU 缓存。尽管如此,RDMA 在访问远程内存时无法避免网络访问,因此其延迟平均比 Local 差 36.8 倍。相比之下,DirectCXL 的延迟行为与 Local 类似。尽管图 6 和图 7b 中报告的 DirectCXL 延迟是平均值,但其最佳性能与 Local 相同(4∼24 个周期)。这是因为,正如上一节所示,DirectCXL 可以直接利用 CPU 缓存。其尾部延迟比 Local 差 2.8 倍,但延迟曲线与 Local 相似,因为 DirectCXL 和 Local 使用相同的 DRAM(且没有网络访问开销)。
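Figure 9 is an empirical CDF over per-access latency samples. Below is a minimal sketch of how such a curve and its tail points could be computed from instrumented cycle counts.

The sample values in the usage lines are synthetic placeholders, not measurements. Two choices are assumptions, because the text does not define its tail metric: nearest-rank percentiles, and p99 as "tail".

```python
import math


def empirical_cdf(samples: list[int]) -> list[tuple[int, float]]:
    """Return the step points (i-th smallest latency, i / n) of the empirical CDF."""
    xs = sorted(samples)
    n = len(xs)
    return [(x, (i + 1) / n) for i, x in enumerate(xs)]


def percentile(samples: list[int], p: float) -> int:
    """Nearest-rank percentile for 0 < p <= 100."""
    xs = sorted(samples)
    rank = math.ceil(p * len(xs) / 100)
    return xs[max(rank, 1) - 1]


# Synthetic cycle counts, for illustration only.
local = [4, 4, 5, 6, 24, 60, 61, 64]
cxl = [4, 5, 6, 24, 300, 328, 340, 350]
print("best case:", min(local), min(cxl))
print("p99      :", percentile(local, 99), percentile(cxl, 99))
```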

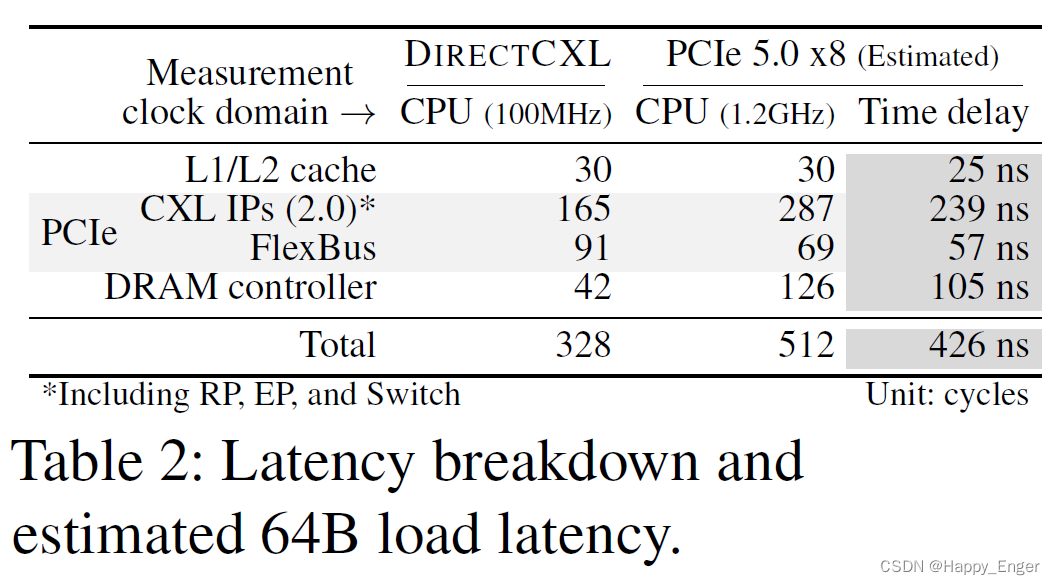

Speed scaling estimation. The cycle numbers reported here are measured at each host's CPU using register-level instrumentation. We believe this is sufficient for decomposing the system latency, and better than a cross-time-domain analysis. Nevertheless, we estimate the time delays for a target system that raises the frequency of its processor complex and its CXL IPs (RP, EP, and Switch) to 1.2GHz and 1GHz, respectively. Table 2 decomposes DirectCXL's latency for a 64B memory load and compares it with the estimated time delay. The cycle counts of L1/L2 cache misses do not change, as these components work entirely in the CPU's clock domain. The other components (FlexBus, CXL IPs, and DRAM controller) speed up by 4× (250MHz → 1GHz), yet their cycle counts increase because the CPU gets 12× faster. Note that FlexBus's cycles decrease, as the PCIe version changes and the number of PCIe lanes doubles. The table also lists the time delays of the estimated system from the CPU's viewpoint. The time delay of FlexBus is already low (∼60ns), but the CXL IPs have room for further improvement with a higher working frequency.

速度缩放估算。我们在此报告的周期数是在每台主机的 CPU 上通过寄存器级插桩测得的。我们认为这足以分解系统延迟,并且优于跨时域分析。不过,我们还估算了目标系统将处理器 complex 和 CXL IP(RP、EP 和 Switch)的频率分别提高到 1.2GHz 和 1GHz 时的时间延迟。表 2 分解了 DirectCXL 一次 64B 内存加载的延迟,并将其与估算的时间延迟进行了比较。L1/L2 缓存未命中的周期数没有变化,因为它们完全工作在 CPU 的同一时钟域中。虽然其他组件(FlexBus、CXL IP 和 DRAM 控制器)的速度提高了 4 倍(250MHz → 1GHz),但由于 CPU 的速度提高了 12 倍,以 CPU 周期计的周期数反而增加了。需要注意的是,随着 PCIe 版本的升级和 PCIe 通道数翻倍,FlexBus 的周期数会减少。表中还给出了从 CPU 视角估算的系统时间延迟。虽然 FlexBus 的时间延迟已经相当低(∼60ns),但 CXL IP 仍有通过更高工作频率进一步改进的空间。
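The "more cycles despite faster hardware" effect follows from re-expressing a component's time in CPU cycles. Suppose a component's latency scales inversely with its own clock. Then its cost in CPU cycles changes by the CPU speed-up divided by the component speed-up. That gives 12/4 = 3 for the CXL IPs, and 1 for L1/L2, which share the CPU's clock.

Some inputs are assumptions:
- The 100MHz starting CPU clock is inferred from "12× to 1.2GHz".
- The model does not apply to FlexBus, whose PCIe version and lane count also change.
- `rescale_cpu_cycles` and `cycles_to_ns` are hypothetical helpers.
- The 10-cycle input is a placeholder, not a value from Table 2.

```python
from fractions import Fraction


def rescale_cpu_cycles(cycles: int, cpu_old_mhz: int, cpu_new_mhz: int,
                       comp_old_mhz: int, comp_new_mhz: int) -> Fraction:
    """Re-express a component's latency (in CPU cycles) after both the CPU and
    the component are sped up. Assumes latency ~ 1 / component clock."""
    cpu_speedup = Fraction(cpu_new_mhz, cpu_old_mhz)
    comp_speedup = Fraction(comp_new_mhz, comp_old_mhz)
    return cycles * cpu_speedup / comp_speedup


def cycles_to_ns(cycles: Fraction, cpu_mhz: int) -> Fraction:
    """Convert CPU cycles at `cpu_mhz` into nanoseconds."""
    return cycles * 1000 / cpu_mhz


CPU_OLD, CPU_NEW = 100, 1200   # MHz; 100 MHz inferred from "12x -> 1.2 GHz"
CXL_OLD, CXL_NEW = 250, 1000   # MHz; CXL IPs, from the text

cxl_ip = rescale_cpu_cycles(10, CPU_OLD, CPU_NEW, CXL_OLD, CXL_NEW)   # placeholder stage
l1_miss = rescale_cpu_cycles(10, CPU_OLD, CPU_NEW, CPU_OLD, CPU_NEW)  # CPU clock domain
print(cxl_ip, l1_miss, cycles_to_ns(cxl_ip, CPU_NEW))
```

A 10-cycle CXL IP stage becomes 30 cycles at the faster CPU clock, even though its wall-clock time drops from 100ns to 25ns. A stage in the CPU's own clock domain keeps its cycle count.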

4.3 Performance of Real Workloads

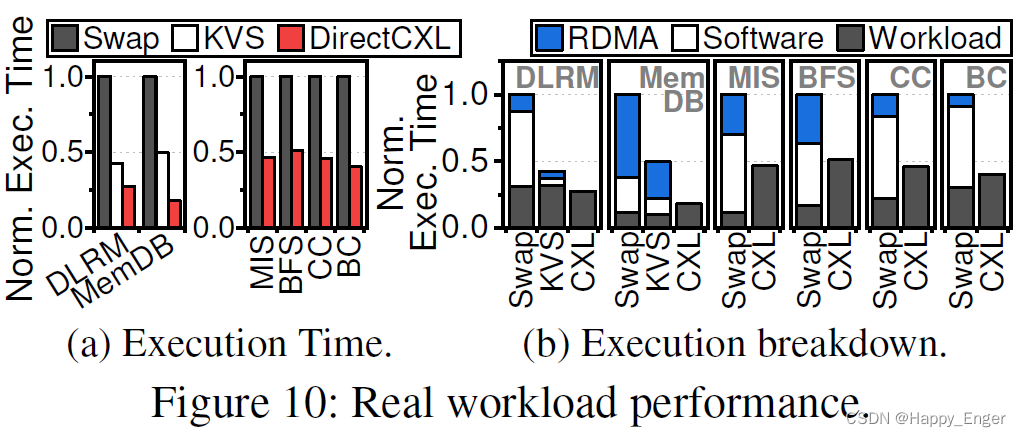

Figure 10a shows the execution latency of Swap, KVS, and DirectCXL when running DLRM, MemDB, and four workloads from Ligra. For clarity, all results in this subsection are normalized to those of Swap. For Ligra, we only compare DirectCXL with Swap, because Ligra's graph processing engines (handling in-/out-edges and vertices) are not compatible with a key-value structure. KVS can reduce the latency of Swap, as it removes the overhead imposed by page-based I/O granularity when accessing remote memory. However, KVS has two major issues. First, it requires significant modification of the application's source code, which is often infeasible (e.g., MIS, BFS, CC, BC). Second, KVS requires heavy computation such as hashing at the memory node, which increases monetary costs. In contrast, DirectCXL, which requires neither source modification nor remote-side compute resources, performs 3× and 2.2× better than Swap and even KVS, respectively.

图 10a 显示了 Swap、KVS 和 DirectCXL 在运行 DLRM、MemDB 以及 Ligra 中四个工作负载时的执行延迟。为便于理解,本小节中的所有结果都以 Swap 为基准进行了归一化。对于 Ligra,我们只将 DirectCXL 与 Swap 进行比较,因为 Ligra 的图处理引擎(处理入边/出边和顶点)与键值结构不兼容。KVS 可以降低 Swap 的延迟,因为它消除了以页面为 I/O 粒度访问远程内存所带来的开销。不过,KVS 存在两个主要问题。首先,它需要对应用程序源代码进行大量修改,而这通常无法做到(如 MIS、BFS、CC、BC)。其次,KVS 需要在内存节点上进行哈希等繁重计算,从而增加了经济成本。相比之下,DirectCXL 既无需修改源代码,也无需远端计算资源,其性能分别比 Swap 和 KVS 高出 3 倍和 2.2 倍。

To better understand this performance improvement of DirectCXL, we also decompose the execution times into RDMA, network library intervention (Software), and application execution itself (Workload) latencies; the results are shown in Figure 10b. This figure shows where Swap loses performance during its execution: on average, 51.8% of the execution time is consumed by the kernel swap daemon (kswapd) and the FastSwap driver. This is because Swap simply expands memory across local and remote DRAM based on LRU, which makes its page exchanges frequent. KVS outperforms Swap on DLRM and MemDB mainly because of the workload characteristics and its service optimization. For DLRM, KVS loads embeddings at their exact size rather than as whole pages, which reduces the data transfer overhead of Swap by up to 6.9×. While KVS shows low overhead in our evaluation, RDMA and Software can increase linearly with the number of inferences; in our case, we used only 13.5MB (0.0008%) of the embeddings for a single inference. For MemDB, as KVS stores all key-value pairs in local DRAM, it accesses remote-side DRAM only to query values. However, it spends 55.3% and 24.9% of the execution time on RDMA and Software, respectively, to handle the remote DRAMs.

为了更好地理解 DirectCXL 的性能提升,我们还将执行时间分解为 RDMA、网络库干预(软件)和应用执行本身(工作负载)三部分的延迟,结果如图 10b 所示。该图揭示了 Swap 在执行过程中的性能损失来源:平均有 51.8% 的执行时间消耗在内核交换守护进程(kswapd)和 FastSwap 驱动程序上。这是因为 Swap 只是基于 LRU 在本地与远程内存之间扩展内存,导致页面交换频繁。在 DLRM 和 MemDB 上,KVS 的性能之所以优于 Swap,主要与工作负载特性及其服务优化有关。在 DLRM 中,KVS 按嵌入向量的实际大小而非整页加载数据,相比 Swap 最多可将数据传输开销降低 6.9 倍。虽然 KVS 在我们的评估中开销较低,但 RDMA 和软件部分会随推理次数的增加而线性增长;在我们的实验中,单次推理仅使用了 13.5MB(0.0008%)的嵌入数据。对于 MemDB,由于 KVS 将所有键值对存储在本地 DRAM 中,它只在查询值时访问远程 DRAM。不过,它仍分别有 55.3% 和 24.9% 的执行时间花在 RDMA 和软件上,用于处理远程 DRAM。
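The 6.9× saving for DLRM comes from transfer granularity. Swap moves whole 4KB pages, while KVS fetches exactly the bytes of each embedding. Below is a minimal sketch that counts the bytes moved under both policies for a list of (offset, size) reads.

Some inputs are assumptions:
- The 128-byte rows and the row indices in the example are hypothetical.
- The page count assumes no page is evicted between reads, which is the most favorable case for Swap.

```python
PAGE = 4096  # Swap's transfer unit


def bytes_moved_page_granularity(accesses: list[tuple[int, int]]) -> int:
    """Each distinct 4KB page touched by any (offset, size) read is moved once."""
    pages: set[int] = set()
    for offset, size in accesses:
        first, last = offset // PAGE, (offset + size - 1) // PAGE
        pages.update(range(first, last + 1))
    return len(pages) * PAGE


def bytes_moved_exact(accesses: list[tuple[int, int]]) -> int:
    """Object-granularity transfer: only the requested bytes move."""
    return sum(size for _, size in accesses)


ROW_BYTES = 128                 # hypothetical embedding row size
rows = [3, 1000, 52000, 7]      # hypothetical rows looked up by one inference
accesses = [(r * ROW_BYTES, ROW_BYTES) for r in rows]
print(bytes_moved_page_granularity(accesses), bytes_moved_exact(accesses))
```

Rows 3 and 7 share a page, so Swap moves three pages (12288 bytes) where an exact-size fetch moves 512 bytes.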

In contrast, DirectCXL removes such hardware and software overhead and exhibits much better performance than Swap and KVS. Note that MemDB contains 2M key-value pairs with 2KB values, and its host issues 8M Get requests with randomly generated keys. Because of this workload characteristic, DirectCXL's memory accesses incur roughly one cache miss every four queries. Note also that DirectCXL's Workload is longer than that of KVS, because DirectCXL places the entire hash table and tree for the key-value pairs in the device-side (disaggregated) DRAM, whereas KVS keeps them in local DRAM. Lastly, all four graph workloads show similar trends: Swap is always slower than DirectCXL. These workloads require multiple graph traversals, which frequently generate random memory access patterns. As Swap must exchange 4KB pages to read 8B pointers during graph traversal, it performs 2.2× worse than DirectCXL.

相比之下,DirectCXL 消除了这些硬件和软件开销,性能远优于 Swap 和 KVS。请注意,MemDB 包含 200 万个键值对,每个值的大小为 2KB,主机使用随机生成的键发出 800 万个 Get 请求。这一工作负载特征使 DirectCXL 的内存访问大约每四次查询就会遇到一次缓存未命中。还需注意,DirectCXL 的工作负载部分比 KVS 长,因为 DirectCXL 将键值对的整个哈希表和树都放在设备侧 DRAM 中,而 KVS 则将其放在本地 DRAM 中。最后,四种图工作负载都呈现出类似的趋势:Swap 总是比 DirectCXL 慢。它们需要多次图遍历,频繁产生随机内存访问模式。由于 Swap 需要交换 4KB 页面才能读取 8B 指针进行图遍历,其性能比 DirectCXL 差 2.2 倍。
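The pointer-chasing penalty can be illustrated with a small LRU simulation. Random 8B reads are served either in 4KB pages (Swap-like) or in 64B cache lines (DirectCXL-like load/store), and the simulation counts the bytes fetched from remote memory.

This is a sketch that isolates transfer granularity only:
- Both policies get the same hypothetical cache capacity, although in reality Swap's local DRAM is far larger than the CPU's LLC.
- Per-miss latency is ignored.
- `bytes_transferred`, the 1 GiB region, and the read count are illustrative assumptions.

```python
import random
from collections import OrderedDict


def bytes_transferred(addresses: list[int], granularity: int, cache_bytes: int) -> int:
    """LRU cache of `cache_bytes` holding blocks of `granularity` bytes;
    every miss fetches one whole block from remote memory."""
    capacity = max(1, cache_bytes // granularity)
    cache: OrderedDict[int, None] = OrderedDict()
    moved = 0
    for addr in addresses:
        block = addr // granularity
        if block in cache:
            cache.move_to_end(block)          # hit: refresh LRU position
        else:
            moved += granularity              # miss: fetch the whole block
            cache[block] = None
            if len(cache) > capacity:
                cache.popitem(last=False)     # evict least recently used
    return moved


rng = random.Random(0)
REMOTE_BYTES = 1 << 30                        # hypothetical 1 GiB remote region
addrs = [rng.randrange(REMOTE_BYTES // 8) * 8 for _ in range(10_000)]  # 8B pointer reads
CACHE = 1 << 20                               # hypothetical 1 MiB cache for both policies
print("4KB pages :", bytes_transferred(addrs, 4096, CACHE))
print("64B lines :", bytes_transferred(addrs, 64, CACHE))
```

With random pointers almost every read misses under both policies. Each 4KB page fetch then carries 512× the useful 8 bytes, versus 8× for a 64B line.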

5 Conclusion

In this paper, we propose DIRECTCXL, which connects a host processor complex and remote memory resources over CXL's memory protocol (CXL.mem). The results of our real-system evaluation show that the disaggregated memory resources of DIRECTCXL can exhibit DRAM-like performance when the workload can benefit from the host processor's cache. For real-world applications, it exhibits 3× better performance than RDMA-based memory disaggregation, on average.

本文提出的 DIRECTCXL 通过 CXL 的内存协议(CXL.mem)连接主机处理器 complex 与远程内存资源。真实系统评估结果表明,当工作负载能够利用主机处理器的高速缓存时,DIRECTCXL 的分解内存资源可以表现出接近 DRAM 的性能。在实际应用中,其性能平均比基于 RDMA 的内存分解高 3 倍。