Hadoop Hands-On Project: Music Chart Ranking

Author: 羊村懒王 | 2024-04-20 16:53:11

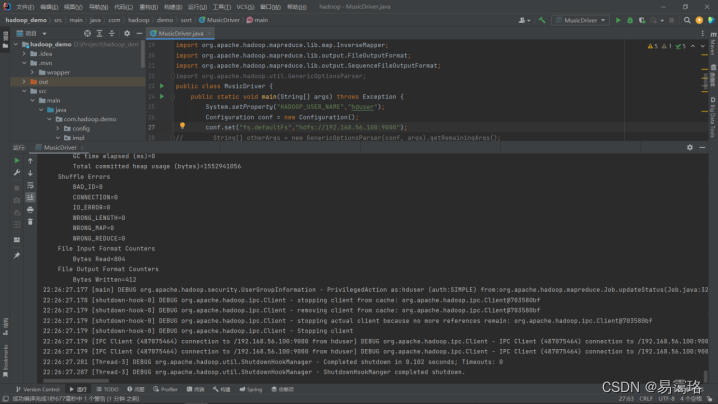

This experiment was carried out on Windows, with the code compiled and run in IDEA.

I. Environment Setup

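II. Approach

- Upload n files

- Read the contents of the n files

- Count the number of occurrences of each song

- Compare the counts of all songs to produce the ranking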

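Algorithm description: in essence, this is still the MapReduce programming model applied to the input data. One job counts the occurrences of each song, and a second job sorts the songs by that count.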
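To make the two steps concrete before the Hadoop code, here is a minimal in-memory sketch in plain Java, without any Hadoop dependency. It assumes each record carries the song name as its first field, separated by `//` as in the `MusicLine` class of this article. The class name `MusicRankSketch`, its method names, and the sample records are hypothetical and only serve as illustration.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class MusicRankSketch {

    // Step 1 (what the counting job does): tally how often each song name appears.
    public static Map<String, Integer> countPlays(List<String> lines) {
        Map<String, Integer> counts = new HashMap<>();
        for (String line : lines) {
            if (line == null || line.isEmpty()) {
                continue; // skip empty records
            }
            String song = line.split("//")[0]; // assumed format: song name first
            counts.merge(song, 1, Integer::sum);
        }
        return counts;
    }

    // Step 2 (what the sorting job does): order songs by count, highest first.
    public static List<Map.Entry<String, Integer>> rank(Map<String, Integer> counts) {
        List<Map.Entry<String, Integer>> ranked = new ArrayList<>(counts.entrySet());
        ranked.sort((a, b) -> {
            int byCount = Integer.compare(b.getValue(), a.getValue()); // descending count
            return byCount != 0 ? byCount : a.getKey().compareTo(b.getKey()); // ties by name
        });
        return ranked;
    }

    public static void main(String[] args) {
        // Hypothetical sample records, not taken from the article's dataset.
        List<String> lines = List.of(
                "SongA//x", "SongB//y", "SongA//z", "", "SongC//w", "SongA//v", "SongB//u");
        for (Map.Entry<String, Integer> e : rank(countPlays(lines))) {
            System.out.println(e.getValue() + "\t" + e.getKey());
        }
    }
}
```

On a cluster the same two steps run as separate jobs because the sort needs the final counts, which only exist after the first job's reduce phase has finished.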

III. Complete Code

Main class:

```java
package com.hadoop.demo.sort;

import java.util.Random;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.SequenceFileInputFormat;
import org.apache.hadoop.mapreduce.lib.map.InverseMapper;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.SequenceFileOutputFormat;

/**
 * @author: 易霭珞
 * @description Driver: job 1 counts each song, job 2 sorts the songs by count.
 * @date: 2022/11/7 19:15
 */
public class MusicDriver {
    public static void main(String[] args) throws Exception {
        System.setProperty("HADOOP_USER_NAME", "hduser");
        Configuration conf = new Configuration();
        // The property name is case-sensitive: "fs.defaultFS", not "fs.defaultFs".
        conf.set("fs.defaultFS", "hdfs://192.168.56.100:9000");
        // Temporary directory that hands the counts from job 1 to job 2.
        Path tempDir = new Path("wordcount-temp-" + new Random().nextInt(Integer.MAX_VALUE));

        int exitCode = 1;
        try {
            // Job 1: count how many times each song appears.
            Job job = Job.getInstance(conf, "word count");
            job.setJarByClass(MusicDriver.class);
            job.setMapperClass(MusicMapper.class);
            job.setCombinerClass(MusicReducer.class);
            job.setReducerClass(MusicReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);
            FileInputFormat.addInputPath(job, new Path("hdfs://192.168.56.100:9000/user/hduser/music"));
            FileOutputFormat.setOutputPath(job, tempDir);
            job.setOutputFormatClass(SequenceFileOutputFormat.class);

            if (job.waitForCompletion(true)) {
                // Job 2: swap (song, count) to (count, song) and sort by count, descending.
                Job sortJob = Job.getInstance(conf, "sort");
                sortJob.setJarByClass(MusicDriver.class);
                FileInputFormat.addInputPath(sortJob, tempDir);
                sortJob.setInputFormatClass(SequenceFileInputFormat.class);
                sortJob.setMapperClass(InverseMapper.class);
                // The output directory must not exist when the job starts.
                FileOutputFormat.setOutputPath(sortJob, new Path("hdfs://192.168.56.100:9000/user/hduser/music/out"));
                sortJob.setOutputKeyClass(IntWritable.class);
                sortJob.setOutputValueClass(Text.class);
                sortJob.setSortComparatorClass(MusicSort.class);
                exitCode = sortJob.waitForCompletion(true) ? 0 : 1;
            }
        } finally {
            // Register the temporary directory for deletion when the file system closes.
            FileSystem.get(conf).deleteOnExit(tempDir);
        }
        // Exit only after the finally block has run; System.exit inside try would skip it.
        System.exit(exitCode);
    }
}
```

Data preprocessing class:

```java
package com.hadoop.demo.sort;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;

/**
 * @author: 易霭珞
 * @description Parses one input line into the song name used for counting.
 * @date: 2022/11/7 22:00
 */
public class MusicLine {
    private String music;
    private IntWritable one = new IntWritable(1);
    private boolean right = true;

    public MusicLine(String musicLine) {
        // Empty lines are marked invalid and skipped by the mapper.
        if (musicLine == null || "".equals(musicLine)) {
            this.right = false;
            return;
        }
        // Fields are separated by "//"; the first field is the song name.
        String[] strs = musicLine.split("//");
        this.music = strs[0];
    }

    public boolean isRight() {
        return right;
    }

    public Text getMusicCountMapOutKey() {
        return new Text(this.music);
    }

    public IntWritable getMusicCountMapOutValue() {
        return this.one;
    }
}
```

Mapper implementation:

```java
package com.hadoop.demo.sort;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

/**
 * @author: 易霭珞
 * @description Extends Mapper. The input value is one line of Text; the song
 *              name parsed from it becomes the output key, with a count of 1
 *              as the output value.
 * @date: 2022/11/7 22:30
 */
public class MusicMapper extends Mapper<Object, Text, Text, IntWritable> {
    @Override
    public void map(Object key, Text value, Context context)
            throws IOException, InterruptedException {
        MusicLine musicLine = new MusicLine(value.toString());
        if (musicLine.isRight()) {
            context.write(musicLine.getMusicCountMapOutKey(), musicLine.getMusicCountMapOutValue());
        }
    }
}
```

Reducer implementation:

```java
package com.hadoop.demo.sort;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

/**
 * @author: 易霭珞
 * @description Extends Reducer. Sums the counts received for each song and
 *              writes (song, total). Also used as the combiner.
 * @date: 2022/11/7 22:50
 */
public class MusicReducer extends Reducer<Text, IntWritable, Text, IntWritable> {
    private IntWritable result = new IntWritable();

    @Override
    public void reduce(Text key, Iterable<IntWritable> values, Context context)
            throws IOException, InterruptedException {
        int sum = 0;
        for (IntWritable val : values) {
            sum += val.get();
        }
        result.set(sum);
        context.write(key, result);
    }
}
```

Sorting class:

```java
package com.hadoop.demo.sort;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.WritableComparable;

/**
 * @author: 易霭珞
 * @description Sorts songs by count in descending order by negating the
 *              default ascending IntWritable comparison.
 * @date: 2022/11/7 23:30
 */
public class MusicSort extends IntWritable.Comparator {
    // The parameters must be WritableComparable (not WritableComparator)
    // for this method to override the superclass method.
    @Override
    @SuppressWarnings("rawtypes")
    public int compare(WritableComparable a, WritableComparable b) {
        return -super.compare(a, b);
    }

    // Raw-bytes comparison, which is the one used during the shuffle sort.
    @Override
    public int compare(byte[] b1, int s1, int l1, byte[] b2, int s2, int l2) {
        return -super.compare(b1, s1, l1, b2, s2, l2);
    }
}
```
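The second job relies on two ideas: `InverseMapper` swaps each (song, count) pair into (count, song), and the sort comparator negates the normal integer comparison so that larger counts come first. The following plain-Java sketch imitates both without Hadoop. The class name `DescendingSortSketch`, its members, and the sample counts are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;

public class DescendingSortSketch {

    // Same idea as MusicSort: flip the sign of the ascending comparison.
    // Integer.compare yields -1, 0 or 1 in the standard JDK, so negating it is safe.
    public static final Comparator<Integer> DESCENDING =
            (a, b) -> -Integer.compare(a, b);

    // Swap (song, count) into (count, song), then sort by count, largest first.
    public static List<Map.Entry<Integer, String>> invertAndSort(Map<String, Integer> counts) {
        List<Map.Entry<Integer, String>> inverted = new ArrayList<>();
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            inverted.add(Map.entry(e.getValue(), e.getKey()));
        }
        inverted.sort(Map.Entry.comparingByKey(DESCENDING));
        return inverted;
    }

    public static void main(String[] args) {
        // Hypothetical counts, not taken from the article's dataset.
        Map<String, Integer> counts = Map.of("SongA", 3, "SongB", 5, "SongC", 1);
        for (Map.Entry<Integer, String> e : invertAndSort(counts)) {
            System.out.println(e.getKey() + "\t" + e.getValue());
        }
    }
}
```

In the real job the count has to be the key because MapReduce sorts records by key only, which is why the pairs are inverted before sorting.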

IV. Experimental Results and Dataset

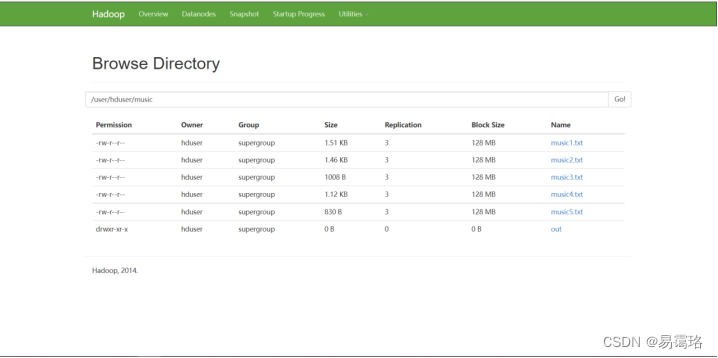

The uploaded dataset

The music dataset

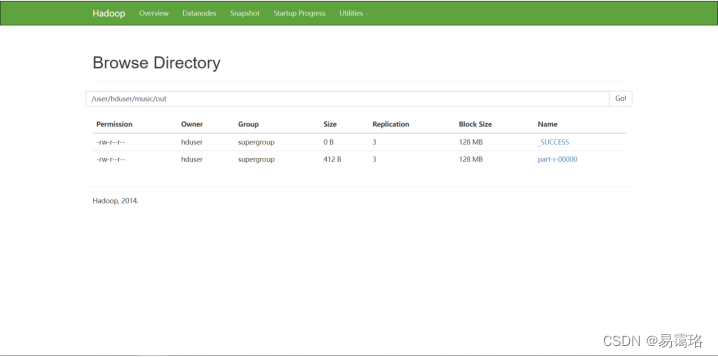

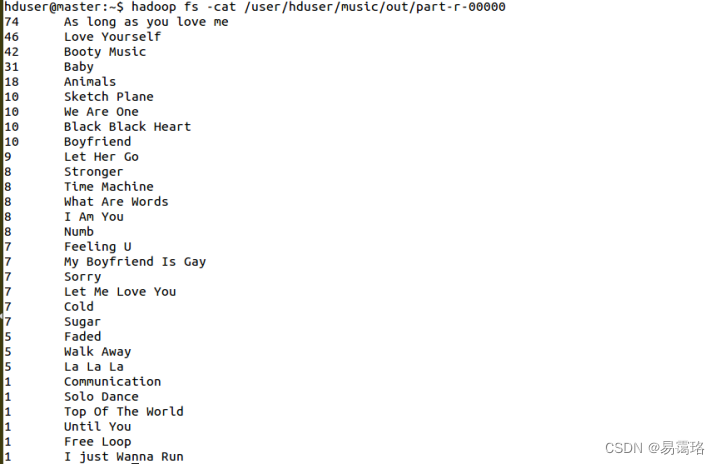

Result after running:
