1. Scraping the information of every article published by a WeChat official account

Tools: Charles + WeChat for desktop + PyCharm + Python

2. Analysis

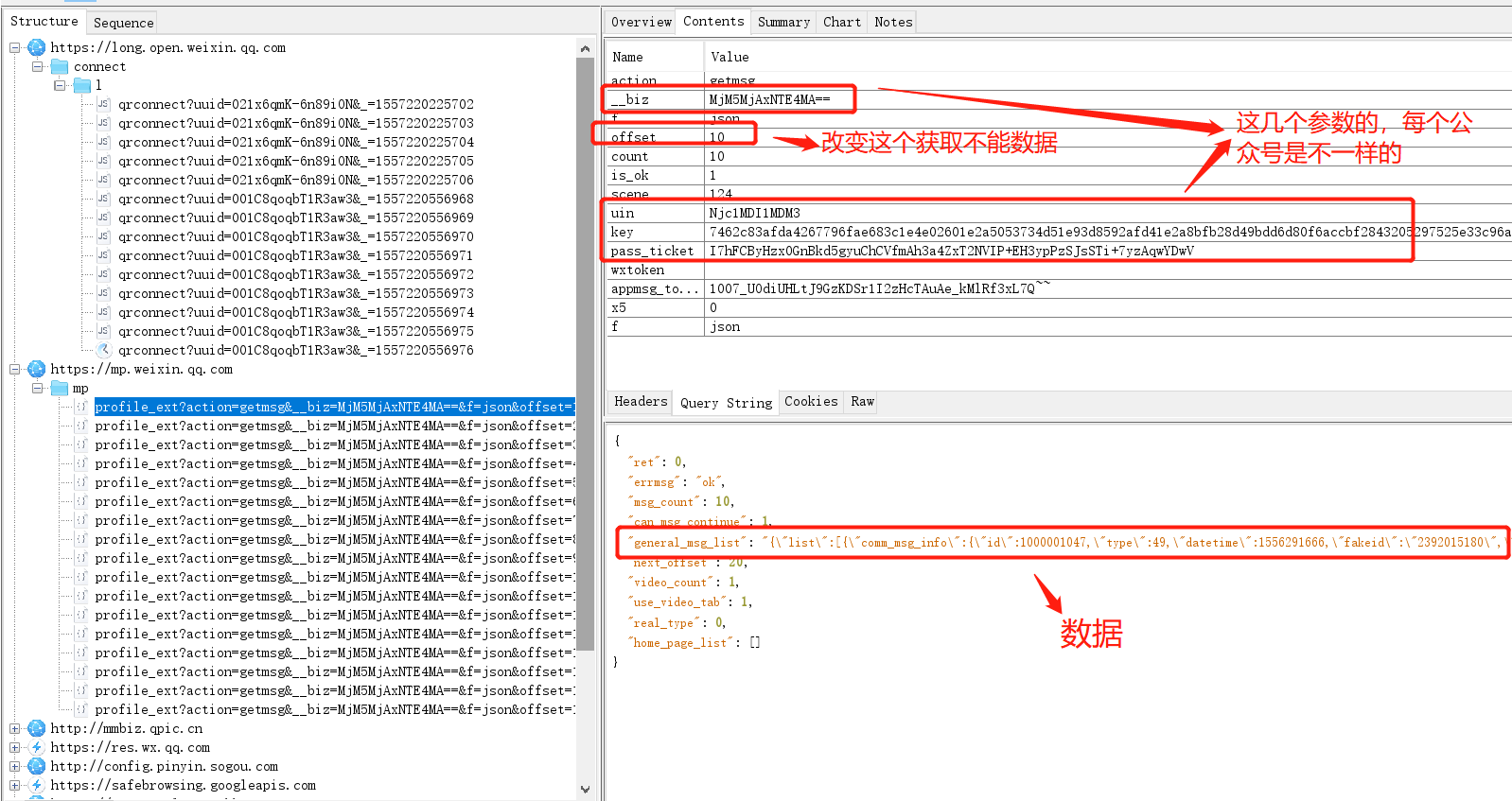

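Analysis of the captured traffic shows that the article list page URL of every official account starts with

https://mp.weixin.qq.com/mp/profile_ext

In the captured requests, only a few query parameters differ from one official account to another.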
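To make that URL structure concrete, here is a minimal sketch that assembles a list page URL from the fixed base and a dictionary of query parameters. The parameter names are the ones used by the script in section 3; the helper name `build_list_url` and all values are placeholders invented for illustration, not real credentials.

```python
from urllib.parse import urlencode

BASE_URL = "https://mp.weixin.qq.com/mp/profile_ext"


def build_list_url(biz, uin, key, pass_ticket, offset=0):
    """Build an article list URL; only these few values change per account."""
    params = {
        "action": "getmsg",
        "__biz": biz,          # identifies the official account
        "f": "json",
        "offset": str(offset),  # paging position
        "count": "10",
        "uin": uin,
        "key": key,
        "pass_ticket": pass_ticket,
    }
    return BASE_URL + "?" + urlencode(params)


# Placeholder values only; real ones come from the packet capture.
print(build_list_url("PLACEHOLDER_BIZ", "PLACEHOLDER_UIN",
                     "PLACEHOLDER_KEY", "PLACEHOLDER_TICKET"))
```

Everything except the account-specific values stays the same between accounts, which is why one function can serve any official account.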

Capture:

3. Code

    import csv
    import json
    import time

    import requests


    def parse(__biz, uin, key, pass_ticket, appmsg_token="", offset="0"):
        """Fetch the article list of one official account, page by page."""
        url = 'https://mp.weixin.qq.com/mp/profile_ext'
        headers = {
            "User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36 MicroMessenger/6.5.2.501 NetType/WIFI WindowsWechat QBCore/3.43.901.400 QQBrowser/9.0.2524.400",
        }
        while True:
            params = {
                "action": "getmsg",
                "__biz": __biz,
                "f": "json",
                "offset": str(offset),
                "count": "10",
                "is_ok": "1",
                "scene": "124",
                "uin": uin,
                "key": key,
                "pass_ticket": pass_ticket,
                "wxtoken": "",
                "appmsg_token": appmsg_token,
                "x5": "0",
            }
            res = requests.get(url, headers=headers, params=params, timeout=3)
            data = json.loads(res.text)
            print(data)
            # general_msg_list is itself a JSON string, so decode it with
            # json.loads instead of eval
            msg_list = json.loads(data.get("general_msg_list", "{}")).get("list", [])
            # csv.writer quotes fields, so commas in titles do not break columns
            with open('article.csv', 'a', encoding='utf-8', newline='') as f:
                writer = csv.writer(f)
                for msg in msg_list:
                    try:
                        info = msg["app_msg_ext_info"]
                        title = info["title"]        # article title
                        digest = info["digest"]      # article summary
                        # article link: drop escape backslashes, upgrade to https
                        link = info["content_url"].replace("\\", "")
                        if link.startswith("http://"):
                            link = "https://" + link[len("http://"):]
                        date = msg["comm_msg_info"]["datetime"]  # publish time
                    except KeyError:
                        # text-only messages carry no article info; skip them
                        continue
                    print(title, digest, link, date)
                    writer.writerow([title, digest, link, date])
            # can_msg_continue: 1 = another page exists, 0 = end of the list
            if data.get("can_msg_continue", 0) != 1:
                print("Scraping finished")
                break
            time.sleep(3)
            offset = data["next_offset"]


    if __name__ == '__main__':
        # request parameters taken from the packet capture
        __biz = input('biz: ')
        uin = input('uin: ')
        key = input('key: ')
        pass_ticket = input('pass_ticket: ')
        # start scraping from the first page
        parse(__biz, uin, key, pass_ticket, appmsg_token="", offset="0")
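The response is decoded in two steps, because the `general_msg_list` field is a JSON string nested inside the outer JSON object. The sketch below shows that double decoding in isolation, using `json.loads` for both levels. The payload is hand-written for illustration and only mimics the field names the script reads; it is not a captured response, and `extract_articles` is a hypothetical helper name.

```python
import json

# Hypothetical payload: field names follow the script, values are made up.
inner = {
    "list": [
        {
            "comm_msg_info": {"datetime": 1600000000},
            "app_msg_ext_info": {
                "title": "Example title",
                "digest": "Example digest",
                "content_url": "http://mp.weixin.qq.com/s?__biz=FAKE",
            },
        },
        # A text-only message: no app_msg_ext_info, so it should be skipped.
        {"comm_msg_info": {"datetime": 1600000100}},
    ]
}
response_text = json.dumps({
    "can_msg_continue": 0,
    "general_msg_list": json.dumps(inner),  # a string, not a nested object
})


def extract_articles(text):
    """Return (title, digest, url, datetime) tuples from one response body."""
    data = json.loads(text)
    msg_list = json.loads(data.get("general_msg_list", "{}")).get("list", [])
    articles = []
    for msg in msg_list:
        info = msg.get("app_msg_ext_info")
        if info is None:
            continue  # not an article
        articles.append((info["title"], info["digest"],
                         info["content_url"], msg["comm_msg_info"]["datetime"]))
    return articles


print(extract_articles(response_text))
```

Parsing with `json.loads` avoids executing server-supplied text, which is the risk of calling `eval` on it.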

Once you have captured the __biz, uin, key, and pass_ticket parameters for an official account, the script can scrape that account's articles.
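Copying four values by hand from the capture tool is error-prone, so one option is to paste the whole captured URL and let Python pull the parameters out. This is a minimal sketch, assuming the captured request carries all four parameters in its query string; `extract_params` is a hypothetical helper and the sample URL contains fake values.

```python
from urllib.parse import parse_qs, urlsplit

NEEDED = ("__biz", "uin", "key", "pass_ticket")


def extract_params(captured_url):
    """Return the four account-specific parameters from a captured URL."""
    query = parse_qs(urlsplit(captured_url).query)
    missing = [name for name in NEEDED if name not in query]
    if missing:
        raise ValueError(f"missing parameters: {missing}")
    # parse_qs returns a list per key; take the first value of each
    return {name: query[name][0] for name in NEEDED}


# Fake values for illustration; %3D%3D decodes to "==".
sample = ("https://mp.weixin.qq.com/mp/profile_ext?action=getmsg"
          "&__biz=FAKEBIZ%3D%3D&uin=FAKEUIN&key=FAKEKEY&pass_ticket=FAKETICKET")
print(extract_params(sample))
```

The returned dictionary can be unpacked into the call, for example `parse(**extract_params(url))`, since its keys match the function's parameter names. Note that `parse_qs` percent-decodes the values, and `requests` encodes them again when it sends the request.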

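Note: after capturing the required parameters, close the packet capture tool (Charles or Fiddler) before running the program; otherwise the program raises an error. The program also depends on a logged-in WeChat desktop client. If you capture from an emulator instead, the uin and key values differ from those of the desktop client, and the requests usually fail.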
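One plausible cause of that error, stated here as an assumption rather than a confirmed diagnosis, is that `requests` picks up the system proxy configured by the capture tool and then fails TLS verification against the proxy's certificate. If that is the case, a session that ignores environment and system proxy settings may let the script run while the tool is still open. The helper name `make_direct_session` is hypothetical.

```python
import requests


def make_direct_session():
    """Create a session that does not read proxy settings from the environment."""
    session = requests.Session()
    # trust_env=False makes requests skip environment/system proxy settings
    # (it also skips .netrc credentials and CA bundle environment variables).
    session.trust_env = False
    return session


session = make_direct_session()
# Use session.get(...) wherever the script calls requests.get(...), e.g.:
# res = session.get(url, headers=headers, params=params, timeout=3)
```

Closing the capture tool, as the note describes, remains the simplest fix; this sketch is only an alternative to try if you need to keep it running.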